Abstract

Purpose

The aim of this study is to investigate the efficacy of a mobile platform that combines smartphone-based retinal imaging with automated grading for determining the presence of referral-warranted diabetic retinopathy (RWDR).

Methods

A smartphone-based camera (RetinaScope) was used by non-ophthalmic personnel to image the retina of patients with diabetes. Images were analyzed with the Eyenuk EyeArt® system, which generated referral recommendations based on presence of diabetic retinopathy (DR) and/or markers for clinically significant macular oedema. Images were independently evaluated by two masked readers and categorized as refer/no refer. The accuracies of the graders and automated interpretation were determined by comparing results to gold standard clinical diagnoses.

Results

A total of 119 eyes from 69 patients were included. RWDR was present in 88 eyes (73.9%) and in 54 patients (78.3%). At the patient-level, automated interpretation had a sensitivity of 87.0% and specificity of 78.6%; grader 1 had a sensitivity of 96.3% and specificity of 42.9%; grader 2 had a sensitivity of 92.5% and specificity of 50.0%. At the eye-level, automated interpretation had a sensitivity of 77.8% and specificity of 71.5%; grader 1 had a sensitivity of 94.0% and specificity of 52.2%; grader 2 had a sensitivity of 89.5% and specificity of 66.9%.

Discussion

Retinal photography with RetinaScope combined with automated interpretation by EyeArt achieved a lower sensitivity but higher specificity than trained expert graders. Feasibility testing was performed using non-ophthalmic personnel in a retina clinic with high disease burden. Additional studies are needed to assess the efficacy of screening diabetic patients from the general population.

Introduction

In 2017, the International Diabetes Federation estimated that 13% of the United States population (30.2 million people) had diabetes. Moreover, it was estimated that 1 in 3 of these individuals had some degree of diabetic retinopathy (DR), a common visual complication of diabetes [1]. There are numerous vision-saving interventions for DR including intensive glycemic control [2,3,4], intraocular anti-vascular endothelial growth factor (anti-VEGF) injections [5,6,7,8], steroid injections [9], laser photocoagulation, and/or surgery [10,11,12]. Using these techniques, it is estimated that early interventions can prevent over 90% of significant vision loss at five years [13]; yet, DR remains the leading cause of blindness in working-age adults (20–65 years old) [1].

Many individuals, especially those from a lower socioeconomic status, do not seek medical evaluation and intervention [14,15,16]. Four years after the American Diabetes Association began recommending annual eye exams for individuals with diabetes [17], less than one-half of adults in the United States with diabetes received DR screening either at recommended intervals or at all [18]. Despite significant improvements in treatments and awareness, two decades later, this statistic has not significantly improved. A 2016 study following 339,646 individuals with diabetes found that less than half received the recommended annual eye exam [19]. Major risk factors for not receiving eye screening include young age [14], low socioeconomic status [14,15,16, 20, 21], black or Latino ethnicity [21,22,23], low health literacy [21], and lack of access to screening services [21, 24, 25]. In addition, low screening rates may be influenced by referral practices, and one study found that as few as 35% of diabetic patients were referred for eye exams by other specialists [26].

A possible solution to reducing DR-related vision loss is improving access to high-quality DR screening programs, which offer early and accurate referral for vision-threatening DR. Telemedicine and remote, digital retinal imaging have emerged as potential resource-effective, technological solutions to the growing need for accurate and widely available DR screening. Recently, attention has been drawn to smartphones as a screening solution that combines telecommunication and imaging capabilities [27]. Remote imaging programs have already succeeded in improving DR screening rates and lowering the incidence of DR-related vision loss in select populations [28,29,30,31,32]. To date, the majority of these remote screening programs are designed around a two-step approach, wherein patients are first imaged, and then the images are sent for delayed diagnostic grading by an ophthalmologist or a trained grader [33,34,35,36,37,38]. Yet, this approach may introduce unnecessary cost and delay, posing barriers to patients receiving needed treatment.

Attention has recently been drawn to artificial intelligence (AI) as a potential solution to this logistical dilemma. By training on large, pre-scored data sets, these mathematical models and algorithms are capable of learning complex behaviors, such as image classification, which have traditionally required a human. Thus far, researchers have utilized AI to diagnose a range of diseases, including pediatric pneumonia [39], malaria [40], and numerous ocular conditions [39, 41,42,43,44,45,46,47]. Recent programs have been shown to identify DR with diagnostic accuracy similar to trained graders and ophthalmologists [41, 48,49,50,51,52,53,54]. AI systems displaying both high sensitivity and specificity could allow for preliminary screening of large patient populations and could reduce screening costs. However, AI alone will not allow for greater access to remote patient populations, and increased screening for DR remains a critical need across the globe. About 79% of individuals with diabetes live either in low or middle-income countries [1], and estimates suggest that 84% of these cases remain undiagnosed [55]. Thus, if paired with portable imaging devices, AI could allow for rapid, autonomous identification of RWDR in near real-time; thereby drastically simplifying the screening process and improving accessibility.

Herein, we present a mobile platform that combines portable, smartphone-based wide-field retinal imaging with automated grading to detect RWDR. The sensitivity and specificity of the system were determined through a comparison to current gold-standard slit-lamp biomicroscopy.

Materials and methods

RetinaScope hardware

For a detailed description of the device hardware and software design, please reference previous publications from our group [56, 57]. Briefly, the RetinaScope weighs roughly 310 g. Its 3D-printed plastic housing encloses optics for illuminating and imaging the retina onto the smartphone camera. Deep red (655-nm peak wavelength) light emitting diodes are used for focusing and to minimize photopic response. During imaging, polarized bright white illumination is used in conjunction with two polarizing filters to minimize unwanted glare (Fig. 1a). A display may be magnetically attached to either side of the device to display a fixation target (Fig. 1b). The electronic hardware in RetinaScope communicates with an iPhone (Apple Inc., Cupertino, CA) application via Bluetooth (Fig. 1c). Prior to image acquisition, operators can employ intuitive touch and swipe motions to adjust focus, zoom, and exposure. This approach reduces the time necessary to capture the image and minimizes patient discomfort. After pharmacological mydriasis, each fundus image has an ~50° field of view. Using a custom algorithm running directly on the smartphone, sequential images may be computationally merged to create an ~100°, wide-field montage of the retina (Fig. 2). Images can then either be stored on the iPhone or directly uploaded to a secure server using Wi-Fi or cellular service for remote reviewing.

a A series of optical lenses are used to focus light from the LED array on the retina (orange light). Reflecting off the retina, light passes through the wire grid beamsplitter and is focused on the smartphone’s camera sensor (blue arrow). b During image acquisition, patients focus a fixation dot on the magnetic display (grey arrow) to guide their gaze while a front element (blue arrow) acquires the image. c Technicians are capable of controlling the device via intuitive touch controls and an application running on the paired iPhone.

Study participants

Study participants were recruited at the University of Michigan Kellogg Eye Center Retina Clinic and the ophthalmology consultation service at the University of Michigan Hospital, Ann Arbor, MI, in accordance with the University of Michigan Institutional Review Board Committee approval (HUM00097907 and HUM00091492). The study adhered to the tenets of the Declaration of Helsinki and was registered at ClinicalTrials.gov (Identifier NCT03076697). Inclusion criteria required patients be at least 18 years of age and show no significant bilateral media opacity (e.g. vitreous haemorrhage or advanced cataract). Participants' demographic data, including age and sex, and clinical findings were recorded.

Photography and remote interpretation

Images were acquired by a medical student and a medical intern rather than ophthalmologists or ophthalmic photographers. Patients underwent dilated fundus imaging in a dimmed room at the Kellogg Eye Center Retina Clinic. Smartphone imaging was used in conjunction with a custom software application to capture five sequential images (central, inferior, superior, nasal, and temporal). Both eyes were imaged except when one eye was not dilated, had severe media opacity, or the patient was monocular. Patients subsequently underwent a gold-standard dilated eye examination as part of routine care. The images were subsequently evaluated by a retina specialist and a comprehensive ophthalmologist who specialized in telemedicine. The investigators were masked to the clinical DR severity grading. Images were graded in a controlled environment on a high-resolution (1600 × 1200 pixels) 19-inch display with standard luminance and contrast on a black background. A grading template was used to assess the severity of the DR as mild, moderate, or severe non-proliferative DR (NPDR); proliferative DR (PDR); or no DR. The presence of clinically significant macular oedema (CSMO) was also evaluated. All grading was assessed in accordance with the modified Airlie House classification system used in the Early Treatment Diabetic Retinopathy Study (ETDRS) severity classification criteria [58]. Eyes displaying moderate or severe NPDR, PDR, or CSMO were classified as RWDR.
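The referral rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's software: the names `DRGrade`, `eye_is_rwdr`, and `patient_is_rwdr` are hypothetical, and the patient-level aggregation follows the "either eye" rule stated in the Statistical analysis section.

```python
from enum import IntEnum


class DRGrade(IntEnum):
    """DR severity levels used on the grading template (hypothetical encoding)."""
    NO_DR = 0
    MILD_NPDR = 1
    MODERATE_NPDR = 2
    SEVERE_NPDR = 3
    PDR = 4


def eye_is_rwdr(grade: DRGrade, csmo: bool) -> bool:
    """An eye is RWDR if it shows moderate or severe NPDR, PDR, or CSMO."""
    return csmo or grade >= DRGrade.MODERATE_NPDR


def patient_is_rwdr(eyes: list[tuple[DRGrade, bool]]) -> bool:
    """A patient is referral warranted if either imaged eye is RWDR."""
    return any(eye_is_rwdr(grade, csmo) for grade, csmo in eyes)


# Illustrative (made-up) patient: mild NPDR in one eye, moderate NPDR in the other.
example = [(DRGrade.MILD_NPDR, False), (DRGrade.MODERATE_NPDR, False)]
print(patient_is_rwdr(example))
```

Encoding the grades as ordered integers keeps the threshold comparison explicit; CSMO is handled separately because it triggers referral regardless of the DR grade.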

EyeArt® AI eye screening system for autonomous grading

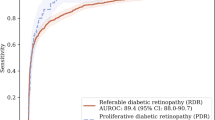

After the patients’ identities were masked, the smartphone images were uploaded to the EyeArt® (v2.0) system. The EyeArt AI eye screening system is an autonomous, cloud-based, deep neural network software designed to detect the presence of RWDR. Image quality was evaluated, and images with quality insufficient for DR screening were excluded from further analyses. Gradable images were enhanced and normalized to improve lesion visibility, and then analyzed to localize and identify lesions. The location, size, and degree of confidence of lesion detection were used to assess DR severity on the International Classification of DR (ICDR) scale. Finally, hard exudates within one disc-diameter of the macula were used as biomarkers indicating the presence of CSMO. Using these data from all the images belonging to the eye/patient, the system assigned a referral score to the eye/patient, which, if above a preset threshold, would result in a binary decision to refer the patient (Fig. 3). A ROC curve was generated by varying this threshold and plotting the true positive rate against the false positive rate (see Supplemental Fig.).

EyeArt system flow diagram indicating the sequence of operations performed on the input retinal images for binary classification of images as RWDR or non-RWDR. After the initial image analysis operations, the DR severity and presence/absence of surrogate markers for CSMO in all images of the patient is used to assign a referral score for the patient, which if above a preset threshold results in a binary decision to refer the patient.
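The thresholding step and the ROC construction described above can be illustrated with a short sketch. It does not reproduce the EyeArt system: the function names are hypothetical, the scores and labels are made up, and the only behaviour taken from the text is that a score above the threshold yields a referral and that the ROC curve comes from sweeping that threshold.

```python
def refer(score: float, threshold: float) -> bool:
    """Binary decision: refer when the referral score is above the threshold."""
    return score > threshold


def roc_points(scores: list[float], labels: list[int]) -> list[tuple[float, float]]:
    """Sweep the threshold and return (false positive rate, true positive rate) pairs.

    labels: 1 = RWDR by the reference standard, 0 = no RWDR.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative case")

    # From the strictest threshold (refer nobody) to the loosest (refer everybody).
    thresholds = sorted(set(scores), reverse=True) + [float("-inf")]
    points = []
    for t in thresholds:
        tp = sum(1 for s, y in zip(scores, labels) if refer(s, t) and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if refer(s, t) and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


# Made-up referral scores and reference labels, for illustration only.
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 0, 0]
for fpr, tpr in roc_points(scores, labels):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Each preset threshold corresponds to one point on this curve, so choosing the operating threshold amounts to choosing a trade-off between sensitivity (TPR) and specificity (1 − FPR).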

Statistical analysis

At the eye level, a generalized estimating equation (GEE) logistic regression with an exchangeable working correlation matrix was used in RStudio to estimate the sensitivity and the specificity for each grader and to account for the inter-eye correlations. The clinical diagnoses were used as the true references for each grader. The GEE logistic regression was then used to determine whether there was a significant difference in sensitivity between graders. Robust standard errors were employed when deriving the 95% confidence intervals. At the patient level, if a patient had both the right and the left eyes imaged, the corresponding eyes were paired. Patients were classified as referral warranted if either eye was clinically diagnosed as having RWDR. Independence was assumed between patients, and thus, a standard 2 by 2 matrix was used. Due to the high sensitivity and specificity values, Wilson 95% confidence intervals were calculated for each grader. A McNemar’s (chi-square) test was used to determine whether there was a significant difference in sensitivity between the human graders and the AI. Finally, a weighted kappa was calculated to assess inter-grader reliability between the human graders.
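As a worked illustration of the patient-level analysis, the sketch below computes sensitivity and specificity from a 2 by 2 matrix and a Wilson 95% confidence interval in plain Python. The study itself used RStudio; the function names here are hypothetical, and the cell counts (47 true positives, 7 false negatives, 11 true negatives, 3 false positives) are an assumption back-calculated from the reported patient-level percentages for the automated analysis, not figures taken from the paper's tables.

```python
import math


def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    return tp / (tp + fn), tn / (tn + fp)


def wilson_interval(successes: int, n: int, z: float = 1.959963984540054) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (default z gives 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# ASSUMED counts, back-calculated from the reported 87.0% and 78.6%.
tp, fn, tn, fp = 47, 7, 11, 3
sens, spec = sensitivity_specificity(tp, fn, tn, fp)
sens_lo, sens_hi = wilson_interval(tp, tp + fn)
spec_lo, spec_hi = wilson_interval(tn, tn + fp)
print(f"sensitivity {sens:.1%} (95% CI {sens_lo:.1%} to {sens_hi:.1%})")
print(f"specificity {spec:.1%} (95% CI {spec_lo:.1%} to {spec_hi:.1%})")
```

Unlike the simple normal-approximation interval, the Wilson interval stays within 0 to 1 and behaves sensibly for proportions near the boundaries and for small denominators, which is relevant here given the small number of patients without RWDR.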

Ethics

All patients consented to enrol in this study and were informed of the involved risks. It was emphasized that each patient had the ability to leave the study at any point. In addition, imaging personnel were instructed to cease patient evaluations when potential harm to the patient became a possibility.

Results

Demographic data and gold-standard evaluation

A total of 72 patients with diabetes undergoing routine dilated clinical exams were recruited from the University of Michigan Kellogg Eye Center Retina Clinic. Twenty-six eyes were excluded from smartphone imaging due to either dense media opacity (e.g. vitreous haemorrhage), lack of mydriasis, or imaging deferral by patient. Three patients were removed due to lack of imaging of either eye. A total of 119 eyes from 69 patients were included for analysis. The mean age of the cohort was 57.0 years (standard deviation = 15.7 years); 26 patients (37.7%) were female. One patient was excluded from patient level analysis due to the absence of a smartphone image necessary to make an accurate referral recommendation. By gold-standard clinical diagnosis, RWDR was present in 88 eyes (73.9%) and 54 individuals (78.3%).

Sensitivity and specificity

At the patient level, automated analysis achieved a sensitivity and specificity of 87.0% and 78.6%, respectively (Table 1). Automated analysis maintained a greater specificity than both grader 1 (42.9%) and grader 2 (50.0%). Grader 1 achieved a significantly greater sensitivity than the automated analysis (96.3%; p = 0.02); however, grader 2 did not (92.5%; p = 0.3).

At the eye-level, automated analysis achieved a sensitivity and specificity of 77.8% and 71.5%, respectively (Table 2). Both graders 1 and 2 demonstrated greater sensitivities (94.0% and 89.5%, respectively) but lower specificities (52.2% and 66.9%, respectively) than the automated analysis. Yet, when accounting for the inter-eye correlation using the GEE logistic regression, neither grader 1 nor grader 2 was significantly more sensitive than the automated analysis (p = 0.2 and p = 0.7, respectively).

Inter-grader agreement

The trained graders demonstrated moderate inter-grader agreement, achieving a kappa value of 0.452 ± 0.334. Of note, the kappa value was impacted by the high disease prevalence within the cohort.
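The effect of prevalence on kappa can be seen in a small numerical sketch. The counts below are entirely hypothetical and are not the study's data; they are chosen so that both scenarios have the same 90% raw agreement. For a binary refer/no refer decision, a weighted kappa reduces to the unweighted Cohen's kappa computed here.

```python
def cohen_kappa(both_refer: int, only_g1: int, only_g2: int, both_no_refer: int) -> float:
    """Cohen's kappa for two graders making a binary refer / no refer call."""
    n = both_refer + only_g1 + only_g2 + both_no_refer
    observed = (both_refer + both_no_refer) / n
    g1_refer = (both_refer + only_g1) / n   # grader 1 referral rate
    g2_refer = (both_refer + only_g2) / n   # grader 2 referral rate
    # Agreement expected by chance from the two graders' marginal rates.
    expected = g1_refer * g2_refer + (1 - g1_refer) * (1 - g2_refer)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 100%")
    return (observed - expected) / (1 - expected)


# Hypothetical balanced cohort: half of the calls are "refer".
balanced = cohen_kappa(both_refer=45, only_g1=5, only_g2=5, both_no_refer=45)

# Hypothetical high-prevalence cohort: most calls are "refer".
skewed = cohen_kappa(both_refer=85, only_g1=5, only_g2=5, both_no_refer=5)

print(f"balanced: kappa = {balanced:.2f}")
print(f"skewed:   kappa = {skewed:.2f}")
```

With identical raw agreement, kappa is markedly lower in the skewed scenario because chance agreement is already high when nearly every case is referred.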

Discussion

We previously presented the RetinaScope, a portable and easy-to-use smartphone-based imaging device capable of capturing high-quality images of the retina [56, 57, 59]. In this study, we evaluated the efficacy of handheld imaging with RetinaScope by non-ophthalmic personnel with automated interpretation by the EyeArt system as a complete mobile platform for generating referral recommendations for DR. Our results suggest that this approach can achieve the necessary sensitivity, as defined by the British Diabetic Association, to be used as a screening tool for DR [60, 61]. At the patient-level, only one grader was significantly more sensitive than the automated analysis, while the automated analysis maintained a higher specificity than both graders. At the eye-level, automated analysis maintained a higher specificity but a lower sensitivity than human graders. Yet, the differences in sensitivity did not achieve statistical significance once the inter-eye correlations were accounted for using the GEE logistic regression. It should be noted that in practice, referrals will be based upon patient-level evaluations.

Recently, several studies have demonstrated the promise of smartphone-based devices in screening and monitoring diseases such as DR. To date, only one study has combined smartphone imaging and AI-based image classification [62]. Rajalakshmi et al. reported on the Remidio ‘Fundus on phone’ (FOP), a smartphone-based device, which was used in conjunction with the EyeArt system for automated grading. The system produced excellent results in screening for RWDR with a 95.8% sensitivity and 80.2% specificity; however, the findings lacked validation by concurrent gold-standard dilated fundus examination. Instead, the ophthalmologists were asked to grade the images captured using the FOP device, and these scores were used as the true clinical references. Studies employing this structure rest upon the assumption that a clinician reviewing an image from a mobile imaging device is equivalent to a dilated fundus exam. Yet, recent research has shown that there is a notable difference between trained human graders' scoring of smartphone images and of traditional images. Within the past five years, studies evaluating trained graders' ability to detect RWDR within smartphone images reported sensitivities ranging from 50 to 91% [33, 63, 64]. Thus, it is imperative that researchers reference gold-standard clinical diagnoses when validating the sensitivity and specificity of new modalities, especially when combining two new modalities simultaneously. Without such validation, results are more representative of inter-grader agreement, and the reported sensitivities may be erroneously high.

In addition, Rajalakshmi et al. did not specify whether images were acquired by expert or non-ophthalmic operators. The FOP system is reported to be capable of handheld operation or of being mounted on a slit-lamp frame; however, their study did not disclose whether the device was mounted to the slit lamp when acquiring their data. Rigid stabilization of the camera and patients’ heads improves imaging quality substantially, but it also introduces barriers for implementation in the community including higher cost, lack of portability, and necessity of higher operator skill. Importantly, our study tested the feasibility of imaging by non-ophthalmic operators (a medical intern and a medical student), with handheld operation and without rigid head stabilization. Our study validates the efficacy of combining smartphone-based retinal imaging and automated grading for RWDR, and further demonstrates the feasibility of RetinaScope with the EyeArt system in detecting RWDR under non-ideal imaging conditions.

This study presents several notable strengths. First, images used for DR grading were acquired by non-ophthalmic operators and, as a result, images of lesser quality were included in analysis, more closely simulating conditions that may be encountered when screening in the community where such imaging is most needed. Second, our study utilized gold-standard dilated examination by a retina specialist for validating the combination of smartphone imaging and automated image interpretation. Third, our study adheres to wide-field imaging guidelines for photographic screening of DR. Complex imaging tasks, such as imaging multiple regions of the retina for wide-field analysis, were simplified and standardized by leveraging computational capabilities of the smartphone to guide imaging and achieved up to ~100-degree fields-of-view, as previously reported [57]. It should be noted that ETDRS guidelines for photographic screening of DR utilized 7-field retinal images comprising a 90-degree field-of-view [65]. Numerous studies have emphasized the need for wide-field imaging when screening for DR [66,67,68]. For example, a single 45° field of view retinal image had relatively good detection of disease but was inadequate to determine severity of DR as necessary for referral [65, 69, 70].

There are several limitations to this study. First, participants in this study were recruited from the retina clinic in a tertiary care eye hospital, where the prevalence of DR and other retinal diseases is much higher than in the general population. While our feasibility study shows promising results, additional work is required to validate the accuracy and utility of the RetinaScope in the general population. Second, RetinaScope is currently designed as a mydriatic device that requires patients' eyes to be pharmacologically dilated. This can be time-consuming, uncomfortable for patients, and unfamiliar to non-ophthalmic providers in the community. Third, the EyeArt system was trained using traditional retinal photographs rather than smartphone-based imaging. It is possible that automated grading was unable to identify pathology in the smartphone images that it would have recognized in traditional fundus images. The incorporation of smartphone images into the algorithm’s training will help address this limitation.

Smartphone-based retinal imaging combined with automated interpretation is a promising method for increasing accessibility of DR screening. However, it is important to recognize that image quality from handheld or smartphone-based technologies can be highly variable [64]. A key benefit of a smartphone-based approach is the familiarity of this technology, which can assist in usability and rapid learning among inexperienced and non-ophthalmic operators [59]. In addition, we found that device improvement guided by user feedback could dramatically reduce the learning time associated with smartphone-based retinal imaging among inexperienced operators [59]. Minimizing the variability in data quality that arises from nonideal conditions and inexperienced operators will become increasingly important for effective and widespread deployment of automated screening technologies in the community. RetinaScope implements hardware and software automation to simplify acquisition of high-quality retinal images [57]. The field will benefit greatly from continued innovation that improves the reliability and quality of smartphone-based retinal imaging.

Conclusion

At the patient level, RetinaScope combined with the EyeArt system achieved a sensitivity similar to that of trained human graders while maintaining a higher specificity. Future refinements to both the algorithm and the hardware should continue to improve the device accuracy and help to eliminate current burdens on the healthcare system.

Summary

What was known before

- Artificial intelligence based programs now allow for rapid and accurate analysis of retinal images. Researchers are beginning to integrate autonomous grading with mobile imaging platforms to increase access to medical screening programs.

What this study adds

- This study is the first to reference the gold standard diagnosis when evaluating the efficacy of combining smartphone-based retinal imaging and autonomous grading. The imaging platform allows for wide-field imaging, which has not been previously explored in conjunction with autonomous grading.

References

IDF diabetes atlas - 2017 Atlas. 2018. http://diabetesatlas.org/resources/2017-atlas.html.

UK Prospective Diabetes Study (UKPDS) Group. Intensive blood-glucose control with sulphonylureas or insulin compared with conventional treatment and risk of complications in patients with type 2 diabetes (UKPDS 33). Lancet Lond Engl. 1998;352:837–53.

Nathan DM, Genuth S, Lachin J, Cleary P, Crofford O, Davis M, et al. Diabetes Control and Complications Trial Research Group. The effect of intensive treatment of diabetes on the development and progression of long-term complications in insulin-dependent diabetes mellitus. N Engl J Med. 1993;329:977–86.

Lachin JM, White NH, Hainsworth DP, Sun W, Cleary PA, Nathan DM. Diabetes Control and Complications Trial (DCCT)/Epidemiology of Diabetes Interventions and Complications (EDIC) Research Group Effect of intensive diabetes therapy on the progression of diabetic retinopathy in patients with type 1 diabetes: 18 years of follow-up in the DCCT/EDIC. Diabetes. 2015;64:631–42.

Antonetti DA, Klein R, Gardner TW. Diabetic retinopathy. N Engl J Med. 2012;366:1227–39.

Ajlan RS, Silva PS, Sun JK. Vascular endothelial growth factor and diabetic retinal disease. Semin Ophthalmol. 2016;31:40–8.

Osaadon P, Fagan XJ, Lifshitz T, Levy J. A review of anti-VEGF agents for proliferative diabetic retinopathy. Eye. 2014;28:510–20.

Gündüz K, Bakri SJ. Management of proliferative diabetic retinopathy. Compr Ophthalmol Update. 2007;8:245–56.

Boyer DS, Yoon YH, Belfort R, Bandello F, Maturi RK, Augustin AJ, et al. Three-year, randomized, sham-controlled trial of dexamethasone intravitreal implant in patients with diabetic macular edema. Ophthalmology. 2014;121:1904–14.

Early Treatment Diabetic Retinopathy Study Research Group. Early photocoagulation for diabetic retinopathy. ETDRS report number 9. Ophthalmology. 1991;98:766–85.

Early Treatment Diabetic Retinopathy Study research group. Photocoagulation for diabetic macular edema. Early Treatment Diabetic Retinopathy Study report number 1. Arch Ophthalmol Chic Ill 1960. 1985;103:1796–806.

The Diabetic Retinopathy Study Research Group. Photocoagulation treatment of proliferative diabetic retinopathy. Clinical application of Diabetic Retinopathy Study (DRS) findings, DRS Report Number 8. Ophthalmology. 1981;88:583–600.

Ferris FL. How effective are treatments for diabetic retinopathy? JAMA. 1993;269:1290–1.

Murchison AP, Hark L, Pizzi LT, Dai Y, Mayro EL, Storey PP, et al. Non-adherence to eye care in people with diabetes. BMJ Open Diabetes Res Care. 2017;5. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5574424/.

Tao X, Li J, Zhu X, Zhao B, Sun J, Ji L, et al. Association between socioeconomic status and metabolic control and diabetes complications: a cross-sectional nationwide study in Chinese adults with type 2 diabetes mellitus. Cardiovasc Diabetol. 2016;15:61.

Wang SY, Andrews CA, Herman WH, Gardner TW, Stein JD. Incidence and risk factors for developing diabetic retinopathy among youths with type 1 or type 2 diabetes throughout the United States. Ophthalmology. 2017;124:424–30.

American Diabetes Association. Standards of medical care for patients with diabetes mellitus. Diabetes Care. 1989;12:365–8.

Brechner RJ, Cowie CC, Howie LJ, Herman WH, Will JC, Harris MI. Ophthalmic examination among adults with diagnosed diabetes mellitus. JAMA. 1993;270:1714–8.

Fisher MD, Rajput Y, Gu T, Singer JR, Marshall AR, Ryu S, et al. Evaluating adherence to dilated eye examination recommendations among patients with diabetes, combined with patient and provider perspectives. Am Health Drug Benefits. 2016;9:385–93.

Zhang X, Beckles GL, Chou C-F, Saaddine JB, Wilson MR, Lee PP, et al. Socioeconomic disparity in use of eye care services among US adults with age-related eye diseases: National Health Interview Survey, 2002 and 2008. JAMA Ophthalmol. 2013;131:1198–206.

Fathy C, Patel S, Sternberg P, Kohanim S. Disparities in adherence to screening guidelines for diabetic retinopathy in the United States: a comprehensive review and guide for future directions. Semin Ophthalmol. 2016;31:364–77.

Paz SH, Varma R, Klein R, Wu J, Azen SP. Los Angeles Latino Eye Study Group Noncompliance with vision care guidelines in Latinos with type 2 diabetes mellitus: the Los Angeles Latino Eye Study. Ophthalmology. 2006;113:1372–7.

Ellish NJ, Royak-Schaler R, Passmore SR, Higginbotham EJ. Knowledge, attitudes, and beliefs about dilated eye examinations among African-Americans. Invest Ophthalmol Vis Sci. 2007;48:1989–94.

Gibson DM. Eye care availability and access among individuals with diabetes, diabetic retinopathy, or age-related macular degeneration. JAMA Ophthalmol. 2014;132:471–7.

Brown M, Kuhlman D, Larson L, Sloan K, Ablah E, Konda K, et al. Does availability of expanded point-of-care services improve outcomes for rural diabetic patients? Prim Care Diabetes. 2013;7:129–34.

Rosenberg JB, Friedman IB, Gurland JE. Compliance with screening guidelines for diabetic retinopathy in a large academic children’s hospital in the Bronx. J Diabetes Complications. 2011;25:222–6.

DeBuc DC. The role of retinal imaging and portable screening devices in tele-ophthalmology applications for diabetic retinopathy management. Curr Diab Rep. 2016;16:132.

Mansberger SL, Sheppler C, Barker G, Gardiner SK, Demirel S, Wooten K, et al. Long-term comparative effectiveness of telemedicine in providing diabetic retinopathy screening examinations: a randomized clinical trial. JAMA Ophthalmol. 2015;133:518–25.

Jani PD, Forbes L, Choudhury A, Preisser JS, Viera AJ, Garg S. Evaluation of diabetic retinal screening and factors for ophthalmology referral in a telemedicine network. JAMA Ophthalmol. 2017;135:706–14.

Kirkizlar E, Serban N, Sisson JA, Swann JL, Barnes CS, Williams MD. Evaluation of telemedicine for screening of diabetic retinopathy in the Veterans Health Administration. Ophthalmology. 2013;120:2604–10.

Zimmer-Galler IE, Kimura AE, Gupta S. Diabetic retinopathy screening and the use of telemedicine. Curr Opin Ophthalmol. 2015;26:167–72.

Acknowledgements

We would like to thank the Knights Templar Eye Foundation, Research to Prevent Blindness, the Rogers Family Foundation, and the University of Michigan for their support. Additionally, we would like to thank the Eyenuk team for their willingness to partner with us and their continued commitment to ocular research.

Funding

This work was supported by the Knights Templar Eye Foundation Career-Starter Research Grant, the University of Michigan Translational Research and Commercialization for Life Sciences, the University of Michigan Center for Entrepreneurship Dean’s Engineering Translational Prototype Research Fund, the QB3 Bridging the Gap Award from the Rogers Family Foundation, the Bakar Fellows Award, the Chan-Zuckerberg Biohub Investigator Award, the National Eye Institute grant 1K08EY027458, and the University of Michigan Department of Ophthalmology and Visual Sciences.

Ethics declarations

Conflict of interest

DAF is a co-founder of CellScope, Inc., a company commercializing a cellphone-based otoscope, and holds shares in CellScope, Inc. DAF, TPM, CR, FM, and TNK are all inventors on the US patents and related applications pertaining to a “Retinal CellScope Apparatus”. Furthermore, MB, SB, CR, and KS are employees of Eyenuk, Inc. and are listed as inventors on the US patents and related applications pertaining to disease screening and monitoring using retinal images.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Kim, T.N., Aaberg, M.T., Li, P. et al. Comparison of automated and expert human grading of diabetic retinopathy using smartphone-based retinal photography. Eye 35, 334–342 (2021). https://doi.org/10.1038/s41433-020-0849-5

DOI: https://doi.org/10.1038/s41433-020-0849-5
