Abstract

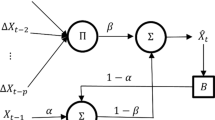

Multi-step prediction is a difficult task that has attracted increasing interest in recent years. It aims to predict several steps ahead into the future starting from current information. This work focuses on developing nonlinear neural models for building multi-step time series prediction schemes. In that context, the most popular neural models are based on traditional feedforward neural networks. However, such models may present some disadvantages when a long-term prediction problem is formulated, because they are trained to predict only the next sampling time. In this paper, a neural model based on a partially recurrent neural network is proposed as a better alternative. The recurrent model is trained with a learning phase aimed at long-term prediction, which yields better predictions of the time series further into the future. To validate the ability of the recurrent neural model to predict the future dynamic behaviour of the series, three time series have been used as case studies: an artificial series, the logistic map, and two real series, sunspots and laser data. Models based on feedforward neural networks have also been applied and compared against the proposed model. The results suggest that the recurrent model can help improve prediction accuracy.
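The contrast drawn above, between models trained only for the next sampling time and a model trained for long-term prediction, can be illustrated with a small sketch. It assumes the logistic map with r = 4 as the artificial series, uses a hypothetical, slightly mis-fitted one-step model in place of a trained network, and takes a generic averaged squared error over iterated (fed-back) predictions as the long-horizon objective. It does not implement the partially recurrent network or the authors' learning rule.

```python
from typing import Callable, List

# A one-step-ahead predictor: maps the current value x[t] to an estimate of x[t+1].
Model = Callable[[float], float]


def logistic_map(x0: float, n: int, r: float = 4.0) -> List[float]:
    """Return n samples of x[t+1] = r * x[t] * (1 - x[t]).

    r = 4.0 (the fully chaotic regime) is an assumption; the abstract does
    not state which parameter value the study used.
    """
    xs = [x0]
    for _ in range(n - 1):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def iterated_forecast(model: Model, x_t: float, horizon: int) -> List[float]:
    """Predict `horizon` steps ahead by feeding each prediction back as the next input."""
    preds: List[float] = []
    x = x_t
    for _ in range(horizon):
        x = model(x)
        preds.append(x)
    return preds


def one_step_mse(model: Model, series: List[float]) -> float:
    """Mean squared error of next-step predictions (the usual feedforward training target)."""
    errs = [(model(series[t]) - series[t + 1]) ** 2 for t in range(len(series) - 1)]
    return sum(errs) / len(errs)


def multi_step_mse(model: Model, series: List[float], horizon: int) -> float:
    """Mean squared error over all 1..horizon-step-ahead iterated predictions.

    A generic long-horizon objective used only for illustration; it is not
    claimed to be the learning rule proposed in the paper.
    """
    total, count = 0.0, 0
    for t in range(len(series) - horizon):
        preds = iterated_forecast(model, series[t], horizon)
        for k, p in enumerate(preds, start=1):
            total += (p - series[t + k]) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    series = logistic_map(0.3, 200)
    exact = lambda x: 4.0 * x * (1.0 - x)    # reproduces the generator exactly
    approx = lambda x: 3.99 * x * (1.0 - x)  # hypothetical, slightly mis-fitted model
    print("one-step MSE:", one_step_mse(approx, series))
    print("10-step MSE:", multi_step_mse(approx, series, 10))
    print("exact model, 10-step MSE:", multi_step_mse(exact, series, 10))
```

For the mis-fitted model the one-step error is tiny, while the 10-step error is much larger, because errors fed back through a chaotic map grow with the horizon. Minimising an error measured over several fed-back steps, rather than only the next step, is roughly the kind of target the abstract's long-term learning phase refers to.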




Cite this article

Galván, I.M., Isasi, P. Multi-step Learning Rule for Recurrent Neural Models: An Application to Time Series Forecasting. Neural Processing Letters 13, 115–133 (2001). https://doi.org/10.1023/A:1011324221407
