Abstract

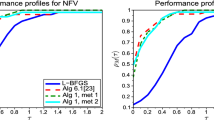

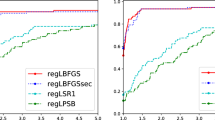

In this paper, we present variants of Shor and Zhurbenko's r-algorithm, motivated by the memoryless and limited memory updates for differentiable quasi-Newton methods. This well-known r-algorithm, which employs a space dilation strategy in the direction of the difference between two successive subgradients, is recognized as one of the most effective procedures for solving nondifferentiable optimization problems. However, the method needs to store the space dilation matrix and update it at every iteration, which imposes a substantial computational burden on large problems. To circumvent this difficulty, we first propose a memoryless update scheme which, under a suitable choice of parameters, yields a direction of motion that turns out to be a convex combination of two successive anti-subgradients. Moreover, in terms of space transformations, the new update scheme can be viewed as a combination of space dilation and reduction operations. We prove convergence of this new method and demonstrate how it can be used in conjunction with a variable target value method that allows a practical, convergent implementation. We also examine a memoryless variant that uses a fixed dilation parameter instead of the varying degrees of dilation and/or reduction used in the former algorithm, as well as another variant that employs a two-step limited memory update. These variants are tested along with Shor's r-algorithm and a modified version of a related algorithm due to Polyak that employs a projection onto a pair of Kelley's cutting planes. Our computational experiments use a set of standard test problems from the literature as well as randomly generated dual transportation and assignment problems.
The results show that the proposed space dilation and reduction method and the modified version of Polyak's method are competitive, and that both offer a substantial advantage over the r-algorithm and over the other tested limited memory variants in terms of both accuracy and computational effort.
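
To make the space dilation step concrete, the Python sketch below implements the classical Shor and Zhurbenko r-algorithm that serves as the baseline here, not the memoryless or limited memory variants proposed in this paper. It keeps the full n-by-n transformation matrix B and, after each move, dilates the space along the transformed difference of two successive subgradients, using B_{k+1} = B_k R_beta(xi_k) with R_beta(xi) = I + (beta - 1) xi xi^T, xi_k proportional to B_k^T (g_{k+1} - g_k), and beta = 1/alpha. The function names `dilation_operator` and `r_algorithm`, the default alpha = 3, and the step-length rule (advance in steps of length h until the new subgradient no longer points downhill, then shrink or enlarge h) are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np


def dilation_operator(xi, beta):
    """Return R_beta(xi) = I + (beta - 1) * xi xi^T for a unit vector xi.

    R_beta scales the component of a vector along xi by beta and leaves
    the orthogonal complement unchanged.
    """
    return np.eye(xi.size) + (beta - 1.0) * np.outer(xi, xi)


def r_algorithm(f, subgrad, x0, alpha=3.0, h=1.0, max_iter=200, max_steps=50):
    """Sketch of Shor's r-algorithm; returns the best point found and its value.

    The step-length rule is a hypothetical heuristic, not taken from the paper.
    """
    x = np.asarray(x0, dtype=float)
    B = np.eye(x.size)        # space transformation matrix, B_0 = I
    beta = 1.0 / alpha        # dilation coefficient alpha > 1 gives beta in (0, 1)
    g = subgrad(x)
    x_best, f_best = x.copy(), f(x)
    for _ in range(max_iter):
        g_t = B.T @ g                       # subgradient in the transformed space
        norm = np.linalg.norm(g_t)
        if norm < 1e-12:
            break                           # zero transformed subgradient: stop
        d = -(B @ g_t) / norm               # search direction in the original space
        # Advance in steps of length h until the subgradient at the trial point
        # has a nonnegative slope along d (the minimum along the ray was passed).
        steps = 0
        while True:
            x = x + h * d
            steps += 1
            g_new = subgrad(x)
            fx = f(x)
            if fx < f_best:
                x_best, f_best = x.copy(), fx
            if g_new @ d >= 0 or steps >= max_steps:
                break
        # Hypothetical step adaptation: shrink h after a one-step search,
        # enlarge it after a long one.
        if steps == 1:
            h *= 0.8
        elif steps > 3:
            h *= 1.2
        # Dilate the space along the transformed difference of successive subgradients.
        r = B.T @ (g_new - g)
        r_norm = np.linalg.norm(r)
        if r_norm > 1e-12:
            B = B @ dilation_operator(r / r_norm, beta)
        g = g_new
    return x_best, f_best


if __name__ == "__main__":
    # Toy nonsmooth problem: f(x) = |x_0| + 2|x_1|, minimized at the origin.
    def f(x):
        return abs(x[0]) + 2.0 * abs(x[1])

    def subgrad(x):
        return np.sign(x) * np.array([1.0, 2.0])

    x_best, f_best = r_algorithm(f, subgrad, [3.0, -2.0])
    print(x_best, f_best)
```

Storing and multiplying by the dense matrix `B` costs O(n^2) memory and work per iteration, which is precisely the burden on large problems that the memoryless and limited memory updates described in the abstract are designed to avoid.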

References

E. Allen, R. Helgason, J. Kennington, and B. Shetty, “A generalization of Polyak's convergence result for subgradient optimization,” Mathematical Programming, vol. 37, pp. 309–317, 1987.

M.S. Bazaraa, H.D. Sherali, and C.M. Shetty, Nonlinear Programming: Theory and Algorithms, 2nd edn., John Wiley & Sons: New York, 1993.

P.M. Camerini, L. Fratta, and F. Maffioli, “On improving relaxation methods by modified gradient techniques,” Mathematical Programming Study, vol. 3, pp. 26–34, 1975.

V.F. Demyanov and L.V. Vasilev, Nondifferentiable Optimization, Springer-Verlag, 1985.

R. Fletcher and C.M. Reeves, “Function minimization by conjugate gradients,” Computer Journal, vol. 7, pp. 149–154, 1964.

J.L. Goffin, “On the convergence rate of the subgradient methods,” Mathematical Programming, vol. 13, pp. 329–347, 1977.

J.L. Goffin, “Convergence results in a class of variable metric subgradient methods,” in Nonlinear Programming, vol. 4, O.L. Mangasarian, R.R. Meyer, and S.M. Robinson (Eds.), 1980, pp. 283–326.

M.R. Hestenes and E. Stiefel, “Methods of conjugate gradients for solving linear systems,” Journal of Research of the National Bureau of Standards, vol. 49, pp. 409–436, 1952.

S. Kim and H. Ahn, “Convergence of a generalized subgradient method for nondifferentiable convex optimization,” Mathematical Programming, vol. 50, pp. 75–80, 1991.

S. Kim, S. Koh, and H. Ahn, “Two-direction subgradient method for nondifferentiable optimization problems,” Operations Research Letters, vol. 6, pp. 43–46, 1987.

K.C. Kiwiel, Methods of Descent for Nondifferentiable Optimization, Lecture Notes in Mathematics, no. 1133, Springer-Verlag, 1985.

K.C. Kiwiel, “A survey of bundle methods for nondifferentiable optimization,” in Mathematical Programming, M. Iri and K. Tanabe (Eds.), KTK Scientific Publishers: Tokyo, Japan, 1989, pp. 263–282.

K.C. Kiwiel, “Proximality control in bundle methods for convex nondifferentiable minimization,” Mathematical Programming, vol. 46, pp. 105–122, 1990.

K.C. Kiwiel, “A tilted cutting plane proximal bundle method for convex nondifferentiable optimization,” Operations Research Letters, vol. 10, pp. 75–81, 1991.

C. Lemarechal, “An extension of Davidon methods to nondifferentiable problems,” Mathematical Programming Study, vol. 3, pp. 95–109, 1975.

C. Lemarechal, “Bundle method in nonsmooth optimization,” in Nonsmooth Optimization: Proceedings of IIASA Workshop, C. Lemarechal and R. Mifflin (Eds.), 1978, pp. 79–109.

C. Lemarechal, “A view of line-search,” in Optimization and Optimal Control: Proceedings of a Conference Held at Oberwolfach, March 16–22, 1980, A. Auslender, W. Oettli, and J. Stoer (Eds.), Lecture Notes in Control and Information Science, pp. 59–78.

C. Lemarechal, “Numerical experiments in nonsmooth optimization,” in Progress in Nondifferentiable Optimization, E.A. Nurminski (Ed.), IIASA, 1982, pp. 61–84.

C. Lemarechal, “Constructing bundle methods for convex optimization,” in Fermat Days 85: Mathematics for Optimization, Hiriart-Urruty (Ed.), Elsevier Science Publishers B.V.: North-Holland, 1986, pp. 201–241.

D.G. Luenberger, Introduction to Linear and Nonlinear Programming, 2nd edn., Addison-Wesley: Reading, Mass, 1984.

R. Mifflin, “An algorithm for constrained optimization with semismooth functions,” Mathematics of Operations Research, vol. 2, pp. 191–207, 1977.

J.L. Nazareth, “A relationship between the BFGS and conjugate-gradient algorithms and its implications for new algorithms,” SIAM Journal on Numerical Analysis, vol. 16, pp. 794–800, 1979.

E.A. Nurminski and A.A. Zhelikhovskii, “ε-Quasigradient method for solving nonsmooth extremal problems,” Kibernetika, no. 1, pp. 109–113, 1977.

B.T. Polyak, “Minimization of unsmooth functionals,” Zh. Vychisl. Mat. i Mat. Fiz., vol. 9, pp. 509–521, 1969 (in Russian). English translation in U.S.S.R. Comput. Math. and Math. Phys., vol. 9, pp. 14–29, 1969.

J. Rosen and S. Suzuki, “Construction of nonlinear programming test problems,” Communications of the ACM, vol. 8, p. 113, 1965.

H.D. Sherali, G. Choi, and C.H. Tuncbilek, “A variable target value method,” Operations Research Letters, vol. 26, no. 1, pp. 1–8, 2000.

H.D. Sherali and D.C. Myers, “Dual formulations and subgradient optimization strategies for linear programming relaxations of mixed-integer programs,” Discrete Applied Mathematics, vol. 20, pp. 51–68, 1988.

H.D. Sherali and O. Ulular, “A primal-dual conjugate subgradient algorithm for specially structured linear and convex programming problems,” Applied Mathematics and Optimization, vol. 20, pp. 193–221, 1989.

H.D. Sherali and O. Ulular, “Conjugate gradient methods using quasi-Newton updates with inexact line searches,” Journal of Mathematical Analysis and Applications, vol. 150, no. 2, pp. 359–377, 1990.

N.Z. Shor, “Utilization of the operation of space dilatation in the minimization of convex functions,” Kibernetika, no. 1, pp. 6–12, 1970.

N.Z. Shor, “Convergence rate of the gradient descent method with dilatation of the space,” Kibernetika, no. 2, pp. 80–85, 1970.

N.Z. Shor, Minimization Methods for Nondifferentiable Functions, Springer-Verlag, 1985. Translated from the Russian by K.C. Kiwiel and A. Ruszczynski.

N.Z. Shor, Nondifferentiable Optimization and Polynomial Problems, Kluwer Academic Publishers: Dordrecht/Boston, London, 1998.

N.Z. Shor and L.P. Shabashova, “Solution of minimax problems by the method of generalized gradient descent with dilatation of the space,” Kibernetika, no. 1, pp. 82–88, 1972.

N.Z. Shor and N.G. Zhurbenko, “A minimization method using the operation of space dilatation in the direction of the difference of two successive gradients,” Kibernetika, no. 3, pp. 51–59, 1971.

V.A. Skokov, “Note on minimization methods employing space stretching,” Kibernetika, no. 4, pp. 115–117, 1974.

P. Wolfe, “A method of conjugate subgradients for minimizing nondifferentiable functions,” Mathematical Programming Study, vol. 3, pp. 145–173, 1975.

About this article

Cite this article

Sherali, H.D., Choi, G. & Ansari, Z. Limited Memory Space Dilation and Reduction Algorithms. Computational Optimization and Applications 19, 55–77 (2001). https://doi.org/10.1023/A:1011272319638

DOI: https://doi.org/10.1023/A:1011272319638