Abstract

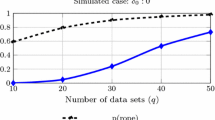

A common approach to evaluating competing models in a classification context is to measure their accuracy on a test set or on cross-validation sets. However, this can be computationally costly when genetic algorithms are used with large datasets, and the benefits of performing a wide search are compromised because estimates of the generalization ability of competing models are subject to noise. This paper shows that clear advantages can be gained by evaluating competing models on samples of the test set. It further shows that applying statistical tests in combination with Occam's razor produces parsimonious models, matches the level of evaluation to the state of the search, and retains the speed advantages of test set sampling.
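The abstract does not spell out the exact procedure. The block below is a minimal sketch, under assumptions of our own, of the general idea: compare two candidate models on a random sample of the test set using a paired statistical test, and keep the simpler model (Occam's razor) unless the more complex one is significantly better. The choice of McNemar's exact test, the function names `mcnemar_exact_p` and `prefer_model`, the "model is a callable returning a class label" interface, and the default significance level of 0.05 are all hypothetical illustrations, not the authors' method.

```python
import math
import random
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical interface: a model is any callable mapping a feature vector to a class label.
Model = Callable[[Sequence[float]], int]


def mcnemar_exact_p(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value (our assumed choice of paired test).

    b: sampled cases the first model classified correctly and the second did not.
    c: sampled cases the second model classified correctly and the first did not.
    Under the null hypothesis of equal error rates, the b + c discordant cases
    split as Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant cases: no evidence of any difference
    k = min(b, c)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def prefer_model(simple: Model, complex_: Model,
                 test_set: Sequence[Tuple[Sequence[float], int]],
                 sample_size: int, alpha: float = 0.05,
                 rng: Optional[random.Random] = None) -> Model:
    """Hypothetical helper: choose between two models on a test-set sample."""
    rng = rng or random.Random()
    # Evaluate on a random sample of the test set rather than all of it.
    sample = rng.sample(list(test_set), min(sample_size, len(test_set)))
    b = c = 0  # b: only the simple model correct; c: only the complex model correct
    for x, y in sample:
        simple_ok = simple(x) == y
        complex_ok = complex_(x) == y
        if simple_ok and not complex_ok:
            b += 1
        elif complex_ok and not simple_ok:
            c += 1
    # Occam's razor: keep the simpler model unless the complex one wins
    # significantly on the sampled cases.
    if c > b and mcnemar_exact_p(b, c) < alpha:
        return complex_
    return simple


if __name__ == "__main__":
    data = [([i], i % 2) for i in range(200)]
    always_zero = lambda x: 0          # simple baseline
    parity = lambda x: x[0] % 2        # more "complex" model that is always right
    chosen = prefer_model(always_zero, parity, data, sample_size=100, rng=random.Random(0))
    print("complex model kept" if chosen is parity else "simple model kept")
```

Because the comparison is paired (both models are scored on the same sampled cases), only the discordant cases carry information. When two candidates differ markedly, a small sample is enough to separate them; when the evidence is weak, the sketch defaults to the simpler model instead of spending more evaluations.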

Cite this article

Sharpe, P.K., Glover, R.P. Efficient GA Based Techniques for Classification. Applied Intelligence 11, 277–284 (1999). https://doi.org/10.1023/A:1008386925927
