Abstract

Conventional reconstruction techniques, such as filtered back projection (FBP) and iterative reconstruction (IR), which have been utilised widely in computed tomography (CT) image reconstruction, are not well suited to low-dose CT applications because of the unsatisfactory quality of the reconstructed images and long reconstruction times. Therefore, as the demand for CT radiation dose reduction continues to increase, the use of artificial intelligence (AI) in image reconstruction has become a trend that attracts increasing attention. This systematic review examined various deep learning methods to determine their characteristics, availability, intended use and expected outputs concerning low-dose CT image reconstruction. Following the methodology of Kitchenham and Charters, we performed a systematic search of the literature from 2016 to 2021 in Springer, Science Direct, arXiv, PubMed, ACM, IEEE, and Scopus. This review showed that, in terms of the image quality of low-dose reconstructions, algorithms using deep learning are superior to traditional IR methods in noise suppression, artifact reduction and structure preservation. In conclusion, we provide an overview of the use of deep learning approaches in low-dose CT image reconstruction together with their benefits, limitations, and opportunities for improvement.


Introduction

The main purpose of computed tomography (CT) imaging in clinical practice is to provide detailed information about the inside of the body, and it plays an increasingly important role in screening, diagnosis, staging, and management decision-making. However, excessive use of CT exposes patients to excessive radiation, which is of particular concern for women, the elderly, and children. Therefore, CT scans must follow the ALARA (as low as reasonably achievable) principle. There are two commonly used methods to reduce CT radiation dose. The first is to reduce X-ray exposure by lowering the tube current or shortening the exposure time; the second is to reduce the number of projection views acquired during the scan. However, both options reduce resolution and increase noise and artifacts, thereby degrading image quality and potentially leading to unreliable diagnoses. To improve the quality of the reconstructed image, several reconstruction algorithms have been proposed. The two most frequently used since the advent of computed tomography are filtered back projection (FBP), which was the main method until the early 2010s, and iterative reconstruction (IR), which was adopted later.

FBP is the most widely used method for reconstructing CT images from measured projection data. It applies a high-pass (ramp) filter to the sinogram data acquired at multiple angles before back-projecting them. This high-pass filter is essential for suppressing the blur that plain back-projection introduces and for enhancing image sharpness. The FBP algorithm is mathematically simple and computationally efficient, and can reconstruct images of acceptable quality in a short time. However, FBP images are sensitive to the projection dose: reducing the dose readily leads to higher image noise and streak artifacts, especially in obese patients because of photon starvation. As industrial computing and graphics processing power improved, traditional FBP methods were increasingly replaced by iterative reconstruction (IR).
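The following minimal NumPy sketch makes the filter-then-back-project structure concrete. It makes several assumptions:

- an idealised parallel-beam geometry (clinical scanners use fan- or cone-beam geometries with extra weighting);
- the standard spatial-domain Ram-Lak discretisation of the ramp filter;
- a synthetic sinogram of a centred uniform disk, whose line integrals are known analytically.

The function names are illustrative and do not come from any CT package.

```python
import numpy as np


def ramlak_kernel(n_det, ds):
    """Spatial-domain Ram-Lak (ramp) filter sampled at detector spacing ds."""
    n = np.arange(-(n_det - 1), n_det)
    h = np.zeros(n.shape)
    h[n == 0] = 1.0 / (4.0 * ds**2)
    odd = (n % 2) == 1                      # even non-zero taps stay 0
    h[odd] = -1.0 / (np.pi**2 * n[odd].astype(float) ** 2 * ds**2)
    return h


def fbp(sinogram, thetas, s, grid):
    """Parallel-beam FBP: ramp-filter every projection, then back-project it."""
    n_det = len(s)
    ds = s[1] - s[0]
    h = ramlak_kernel(n_det, ds)
    X, Y = np.meshgrid(grid, grid)
    image = np.zeros_like(X)
    for p, theta in zip(sinogram, thetas):
        # High-pass filtering along the detector axis (centre part of the full convolution)
        q = ds * np.convolve(p, h, mode="full")[n_det - 1 : 2 * n_det - 1]
        # Back-projection: each pixel picks up the filtered value at its detector coordinate
        t = X * np.cos(theta) + Y * np.sin(theta)
        image += np.interp(t, s, q, left=0.0, right=0.0)
    return image * np.pi / len(thetas)


# Analytic sinogram of a uniform disk (value 1, radius 0.5): p(s) = 2*sqrt(R^2 - s^2)
s = np.linspace(-1.0, 1.0, 129)
thetas = np.linspace(0.0, np.pi, 180, endpoint=False)
R = 0.5
projection = 2.0 * np.sqrt(np.clip(R**2 - s**2, 0.0, None))
sinogram = np.tile(projection, (len(thetas), 1))
grid = np.linspace(-1.0, 1.0, 65)
recon = fbp(sinogram, thetas, s, grid)
```

Adding noise to `sinogram` before calling `fbp` shows the weakness described above: the ramp filter amplifies high-frequency noise along with edges.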

IR methods outperform FBP and have become the standard methods of CT image reconstruction. An initial image estimate obtained from the measured data is forward-projected to generate synthetic raw data, which are compared with the measured data; the image is then corrected iteratively based on this comparison. The iterative process stops when a predefined stopping criterion is met. There are two main categories of iterative reconstruction algorithms: hybrid IR methods and model-based IR (MBIR) methods. Hybrid IR, also called statistical IR, applies statistical adjustments in both the sinogram domain and the image domain. Model-based IR methods model the acquisition process to achieve iterative filtering in the sinogram domain and the image domain. MBIR requires more computing power and reconstruction time than hybrid IR, but it is better at denoising and artifact reduction. However, the slow reconstruction speed and low computational efficiency limit the clinical application of IR.
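The Landweber iteration, one of the simplest iterative schemes, illustrates the forward-project, compare and correct loop described above. This is a minimal sketch with three assumptions. A small random matrix `A` stands in for the CT system (projection) matrix. The data are noise-free. Neither the statistical weighting of hybrid IR nor the physics modelling of MBIR is included.

```python
import numpy as np


def landweber(A, b, max_iter=5000, tol=1e-8):
    """Iterative reconstruction by Landweber updates x <- x + step * A^T (b - A x)."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # step < 2 / sigma_max^2 guarantees convergence
    x = np.zeros(A.shape[1])                 # initial image estimate
    for k in range(max_iter):
        residual = b - A @ x                 # measured data minus forward-projected estimate
        if np.linalg.norm(residual) <= tol * np.linalg.norm(b):
            return x, k                      # predefined stopping criterion met
        x = x + step * (A.T @ residual)      # back-project the discrepancy as a correction
    return x, max_iter


rng = np.random.default_rng(0)
A = rng.standard_normal((60, 20))            # hypothetical system matrix: 60 rays, 20 pixels
x_true = rng.random(20)
b = A @ x_true                               # simulated, noise-free measurements
x_rec, n_iter = landweber(A, b)
```

Each iteration costs one forward projection and one back projection. For full-size CT system matrices, this repeated cost is why IR is slow.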

Compressed sensing (CS) is a widely used framework for recovering compressible signals from measurements sampled below the Nyquist rate. It has been used in various radar and CT applications. However, because forward projection and back-projection must be repeated during each iterative update, this method is computationally expensive. In addition, these optimisation algorithms do not generalise and must be solved on a problem-by-problem basis. The advantages of the CS framework are that the image is reconstructed by enforcing data consistency in each iteration, and that the regulariser is hand-tuned using known image features. The shortcomings of this framework are the long reconstruction time and the complicated evaluation of reconstructed image quality, owing to location-dependent spatial resolution, contrast resolution and noise texture. With regularisers such as total variation (TV), CS-reconstructed images can appear over-smoothed and patchy.
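The sketch below shows roughly how a CS-style reconstruction trades data consistency against a hand-crafted regulariser. It minimises 0.5·||Ax − b||² + λ·TV(x) by gradient descent on a smoothed 1D total variation. Several choices are assumptions made for brevity: the 1D signal, the smoothing constant `eps`, the weight `lam`, and the identity measurement operator in the demo. In CS-based CT, `A` would be the undersampled projection operator, so every iteration would require a forward and a back projection.

```python
import numpy as np


def smoothed_tv(x, eps):
    """Differentiable total variation: sum_i sqrt((x[i+1] - x[i])^2 + eps)."""
    return np.sum(np.sqrt(np.diff(x) ** 2 + eps))


def tv_grad(x, eps):
    d = np.diff(x)
    w = d / np.sqrt(d**2 + eps)      # derivative of each sqrt term w.r.t. its difference
    g = np.zeros_like(x)
    g[:-1] -= w                      # d/dx[i]   of (x[i+1] - x[i]) is -1
    g[1:] += w                       # d/dx[i+1] of (x[i+1] - x[i]) is +1
    return g


def tv_reconstruct(A, b, lam=0.5, eps=1e-4, n_iter=3000):
    """Gradient descent on 0.5*||A x - b||^2 + lam * TV_eps(x)."""
    lipschitz = np.linalg.norm(A, 2) ** 2 + 4.0 * lam / np.sqrt(eps)
    step = 1.0 / lipschitz           # step <= 1/L keeps the objective non-increasing
    x = A.T @ b
    for _ in range(n_iter):
        grad = A.T @ (A @ x - b) + lam * tv_grad(x, eps)
        x = x - step * grad
    return x


def objective(A, b, x, lam=0.5, eps=1e-4):
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * smoothed_tv(x, eps)


rng = np.random.default_rng(0)
signal = np.repeat([0.0, 1.0, 0.3, 0.8], 16)       # piecewise-constant "image"
noisy = signal + 0.1 * rng.standard_normal(signal.size)
A = np.eye(signal.size)                             # identity operator for the demo
x_tv = tv_reconstruct(A, noisy)
```

Increasing `lam` makes the output increasingly piecewise constant, which corresponds to the patchy appearance noted above.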

Recently, researchers have been using AI technology, especially deep learning, to improve the quality of reconstructed CT images. Owing to the promising results of these technologies, AI-based image reconstruction has attracted increasing attention. This review provides an overview of the use of deep learning approaches in low-dose CT image reconstruction together with their benefits, limitations, and opportunities for improvement.

Methods

The study of deep learning methods for low-dose CT image reconstruction was conducted according to the methodology of Kitchenham and Charters [1] and was divided into three stages: (i) planning the review: finding related works, determining the need for the review, and formulating the research questions; (ii) conducting the review: choosing data sources, extracting data and synthesis; and (iii) results: finding out which deep learning methods are being used, how they are being used, what their advantages and disadvantages are, and what effects their use has on low-dose CT image reconstruction.

Planning the review

Related works and needs for the review

To the best of our knowledge, the literature that surveys and compares available deep learning approaches for low-dose CT image reconstruction is limited. To gain a general understanding of the topic, we first selected six reviews in this field [2,3,4,5,6,7] using the systematic search described in “Data sources”. The goal of this review was to evaluate and characterise deep learning approaches in a broad context. Deep learning approaches have the potential to improve both the efficiency and accuracy of low-dose CT image reconstruction. To fill this gap in the literature, we conducted a thorough search of electronic bibliographic databases from January 2016 to February 2021 for low-dose/sparse-view CT image reconstruction using deep learning algorithms.

Review questions

The research questions are as follows: (i) to identify and critically appraise which deep learning methods are being used in low-dose CT reconstruction and their targeted outcomes; (ii) to evaluate, based on the literature, the advantages and disadvantages of using deep learning methods in CT reconstruction; and (iii) to evaluate the effects of deep learning on CT images and diagnosis.

Conducting the review

Data sources

Springer, Science Direct, arXiv, PubMed, ACM, IEEE, and Scopus were used to conduct a systematic search of the literature. Only English-language publications were searched. Only deep learning for CT reconstruction was selected; however, some papers on other medical imaging modalities such as PET or MRI were added to provide general context. The following key terms were searched in the title, abstract or keywords of the published papers: “low-dose CT”, “CT reconstruction”, “deep learning”, “neural network”, “sparse-view CT” and “few-view CT”. Other key terms were used to narrow and focus the search, and unrelated papers were eliminated. We looked for studies published between January 1, 2016, and February 1, 2022. A total of 255 papers were identified at this stage, before irrelevant papers were eliminated.

Based on the purpose of our systematic review, the following five exclusion criteria were applied: (i) studies that do not use deep learning methods in low-dose CT reconstruction; (ii) studies that use inferior methods; (iii) studies that provide insufficient information on their methods; (iv) studies of deep learning methods that focus on diagnosis or segmentation of CT images, since our focus was on image reconstruction; and (v) papers that are only available in the form of abstracts or PowerPoint presentations due to insufficient funding. A total of 191 papers were finally selected at this stage.

Extracting data and synthesis

To ensure the quality of the selected research, one reviewer abstracted each article that satisfied the inclusion criteria and completed a questionnaire for each manuscript. Each question aimed to identify potential flaws in study quality. The evaluation questions were as follows: (i) Was the deep learning method well described (network structure, parameters, training process)? (ii) Was the dataset well described (e.g., source of data, number of images)? (iii) Did the authors provide open-source code for replication? (iv) Were the results well described? Answers that suggested quality problems were assessed to determine whether they were significant enough to diminish confidence in the results.

Results

The included studies were analysed to answer our three research questions. The first research question, “to identify and critically appraise what deep learning methods are being used in low-dose CT reconstruction and their targeted outcomes”, is covered in “Deep learning methods for image reconstruction in low-dose CT”. There, we analyse the available deep learning methods for low-dose CT image reconstruction in terms of their target outcomes, action domains, network structures, results, computational costs and datasets, among other aspects. Table 1 analyses medical cases using FDA-approved CT reconstruction systems. Table 2 summarises the deep learning models and supporting results of the studies and helps answer our research questions. Table 3 introduces different unrolling dynamics methods. Table 4 summarises the reviewed studies. Figure 1 illustrates how deep learning methods are applied in different domains. The second research question, “to evaluate, based on the literature, the advantages and disadvantages of using deep learning methods in CT reconstruction”, is covered in “Advantages and disadvantages of using deep learning methods”. The third research question, “to evaluate the effects on CT image and diagnosis as a consequence of deep learning use”, is covered in “Effects of using deep learning methods”.

Deep learning methods for image reconstruction in low-dose CT

Mainstream approaches in deep learning-based methods

Among the reviewed studies, the most common deep learning models were CNNs (especially ResNet, 36 studies), U-Net (18 studies) and generative adversarial networks (GANs, 18 studies).

The dominant neural network framework applied to imaging problems is the convolutional neural network (CNN). A CNN consists of various kinds of layers and activation functions, with three main groups of layers: convolutional layers, pooling layers, and fully connected layers. In CNNs, convolutional layers, batch normalisation, residual connections and the ReLU activation function are the most prevalent components. The residual neural network (ResNet) gained popularity because of its skip connections, which bypass one or more layers. These connections make the network easier to train without adding any parameters that need to be tuned. With ResNet, information from earlier layers can be passed directly to later layers without extra computation.
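A residual block can be written as y = ReLU(x + F(x)), where F is a small stack of layers. The NumPy sketch below uses single-channel 3×3 convolutions and omits batch normalisation for brevity. In practice, the kernels `k1` and `k2` would be learned. The sketch shows that the identity shortcut adds no weights: with all-zero kernels, the block simply passes its non-negative input through.

```python
import numpy as np


def conv3x3_same(x, k):
    """Single-channel 3x3 convolution (cross-correlation, as in CNN layers) with zero padding."""
    padded = np.pad(x, 1)
    out = np.zeros_like(x, dtype=float)
    for i in range(3):
        for j in range(3):
            out += k[i, j] * padded[i : i + x.shape[0], j : j + x.shape[1]]
    return out


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, k1, k2):
    """y = ReLU(x + F(x)) with F = conv -> ReLU -> conv; the shortcut itself has no parameters."""
    residual = conv3x3_same(relu(conv3x3_same(x, k1)), k2)
    return relu(x + residual)


image = np.random.default_rng(0).random((8, 8))   # non-negative toy input
zero_kernel = np.zeros((3, 3))
identity_kernel = np.zeros((3, 3))
identity_kernel[1, 1] = 1.0

y_zero = residual_block(image, zero_kernel, zero_kernel)           # equals the input
y_ident = residual_block(image, identity_kernel, identity_kernel)  # equals 2 * input
```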

The architecture of U-Net [8] comprises two components: an encoder and a decoder. The encoding path is usually a standard convolutional network topology, including convolutional layers, batch normalisation, pooling layers and the ReLU activation function, which downsamples input images into feature maps. The symmetrical decoding path has a similar architecture, except that the pooling layers are replaced by deconvolutional (transposed convolution) layers that upsample the feature maps back to reconstructed images. Skip connections between encoder and decoder layers at the same resolution link the two components, so that features from the encoding layers can be passed directly to the decoding layers without extra computational complexity.
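A one-level toy version traces the data flow of an encoder-decoder with a skip connection. To keep the example short, this sketch substitutes simpler operations for the learned ones:

- learned convolutions are omitted;
- 2×2 max pooling provides the encoder downsampling;
- nearest-neighbour upsampling stands in for the learned deconvolution;
- a channel average stands in for the final 1×1 convolution.

Arrays are laid out as (channels, height, width).

```python
import numpy as np


def max_pool2(x):
    """2x2 max pooling on a (C, H, W) array with even H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2(x):
    """Nearest-neighbour 2x upsampling (stand-in for a learned transposed convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def tiny_unet_forward(x):
    enc = x                                        # encoder features at full resolution
    bottleneck = max_pool2(enc)                    # downsampled feature maps
    dec = upsample2(bottleneck)                    # decoder restores the resolution
    merged = np.concatenate([dec, enc], axis=0)    # skip connection: concatenate encoder features
    out = merged.mean(axis=0, keepdims=True)       # stand-in for a final 1x1 convolution
    return out, bottleneck.shape, merged.shape


x = np.random.default_rng(1).random((4, 16, 16))
out, bottleneck_shape, merged_shape = tiny_unet_forward(x)
```

The skip path carries the full-resolution encoder features, so fine detail discarded by pooling can still reach the output.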

A generative adversarial network (GAN) comprises two networks: a generator network (G) and a discriminator network (D). The goal of the G network is to produce fake images that are as realistic as possible to fool the D network, while the goal of the D network is to distinguish whether an input image is real or fake without being fooled. A GAN trains the G and D networks simultaneously until the two networks reach a Nash equilibrium. However, because of this adversarial training dynamic, GANs are very sensitive and hard to train.
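The two competing objectives can be written as binary cross-entropy losses on the discriminator's outputs, which are probabilities that an image is real. The sketch below uses the common non-saturating form of the generator loss, one standard choice among several. The small `eps` guarding the logarithms is an implementation assumption. At the Nash equilibrium of the original GAN formulation, the discriminator outputs 0.5 for every image, giving a discriminator loss of 2·log 2.

```python
import numpy as np


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """D is trained to output 1 for real images and 0 for generated (fake) ones."""
    return -np.mean(np.log(d_real + eps)) - np.mean(np.log(1.0 - d_fake + eps))


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: G is rewarded when D labels its outputs as real."""
    return -np.mean(np.log(d_fake + eps))


half = np.full(8, 0.5)
equilibrium_d_loss = discriminator_loss(half, half)             # approx. 2 * log(2) = 1.386
perfect_d_loss = discriminator_loss(np.ones(8), np.zeros(8))    # approx. 0: D is never fooled
fooled_g_loss = generator_loss(half)                            # approx. log(2)
```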

In terms of practical application, the US Food and Drug Administration (FDA) has approved two deep learning-based CT image reconstruction systems. The first is Canon Medical Systems’ Advanced intelligent ClearIQ Engine (AiCE), which uses deep convolutional neural networks (DCNNs) trained with MBIR to distinguish signal from noise. The second is GE Healthcare’s TrueFidelity (DLIR), which uses deep neural networks (DNNs) to process high-noise sinogram data and compares the resulting image with a high-quality version of the same image. Table 1 contains an analysis of studies using AiCE and TrueFidelity (DLIR). In these studies, AiCE and DLIR consistently outperformed conventional methods such as hybrid IR and MBIR.

Applications in different domains

To address low image quality, many algorithms have been proposed to improve the low-dose CT image reconstruction process. They can generally be divided into four categories: (1) image processing, (2) sinogram domain interpolation, (3) mixed domain and (4) direct data-to-image transformation. Figure 1 illustrates the process of DL methods in the various domains. Table 2 analyses some studies in the different domains and compares their results.

In the first pathway, an FBP image is first reconstructed from the measured data, and a neural network is then used to suppress the artifacts produced by FBP. Most deep learning methods focus on the image domain [2, 13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57] because this approach is the most straightforward and mature. For example, [14] proposed the residual encoder-decoder CNN (RED-CNN), which combined a deconvolutional network, an autoencoder and shortcut connections to realise low-dose CT (LDCT) imaging. [20] proposed unrolling the proximal forward–backward splitting (PFBS) framework with a data-driven image regulariser based on a deep neural network. An improved GoogLeNet was proposed to reduce sparse-view CT reconstruction artifacts in [51, 52]. [36] built a deep learning framework comprising convolutional neural network (CNN) blocks, residual learning, and exponential linear units (ELUs). In particular, image quality was improved by incorporating a structural similarity index (SSIM) loss into the final objective function.

- Advantages: Direct image processing is the most straightforward solution to CT image reconstruction problems because a large number of applicable models and techniques already exist in this area. Deep learning-based methods learn and detect patterns automatically, and do so better than conventional methods, even without prior information. Conventional methods such as IR, by contrast, still suffer from high computational cost and residual artifacts.

- Disadvantages: Image-domain DL methods depend heavily on the outputs of traditional methods such as FBP and IR, because these output images are the initial images fed into the DL methods. Thus, information lost in the raw data or in the first reconstruction step is difficult to recover.
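In the image-domain pathway, the training objective matters as much as the architecture; [36], for instance, added an SSIM term to its objective. The sketch below combines mean squared error with (1 − SSIM). It is not the exact loss of any reviewed study, and it rests on three assumptions:

- a single global SSIM window, whereas standard SSIM averages over local, usually Gaussian, windows;
- intensities scaled to [0, 1] (`data_range=1.0`);
- a hypothetical weight `alpha`.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """SSIM computed over the whole image as a single window (simplification)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx = np.mean((x - mx) ** 2)
    vy = np.mean((y - my) ** 2)
    cov = np.mean((x - mx) * (y - my))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))


def mse_ssim_loss(pred, target, alpha=0.5):
    """MSE for pixel fidelity plus alpha * (1 - SSIM) for structural similarity."""
    return np.mean((pred - target) ** 2) + alpha * (1.0 - global_ssim(pred, target))


rng = np.random.default_rng(0)
reference = rng.random((32, 32))
noisy = np.clip(reference + 0.1 * rng.standard_normal((32, 32)), 0.0, 1.0)
```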

The second approach is sinogram domain data inpainting, which uses a neural network to process a few-view sinogram and synthesise a complete-view sinogram [58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76]. Applying analytical reconstruction algorithms such as FBP directly to sparse-view data results in poor image quality and severe streak artifacts. Researchers therefore try to fill in the missing data so that complete data can be passed to the image reconstruction process. Interpolation in the raw data domain is a simple example of such data synthesis. Sinogram-domain learning methods instead use a neural network to learn sensor-domain interpolation and denoising. For example, [72] proposed a method based on a deep residual convolutional neural network (CNN) to restore angular resolution in the raw data domain, which can accurately estimate the projections of unmeasured views. [76] proposed a projection-domain denoising method based on a CNN together with a filter loss function. Compared with image-domain denoising, the noise level in the projection domain can be estimated from the measured value of every detector bin. [73] used Pix2Pix, a conditional GAN, to complete the sparsely sampled sinogram.

- Advantages: Signal loss can be reduced and errors in the sinogram corrected at the outset, so the reconstruction procedure starts from relatively low-noise data. As a result, sinogram-domain methods are more robust when dealing with errors.

- Disadvantages: However, if deep learning is restricted to the sinogram domain, the shortcomings of the conventional reconstruction that follows can still significantly affect the results.
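The simplest form of raw-data interpolation fills each unmeasured view by linear interpolation along the angle axis, one detector bin at a time. Learned approaches such as [72] replace this fixed rule with a network. The sketch assumes a (views × detector bins) sinogram layout and ignores the angular periodicity of the sinogram. It relies on `np.interp`, which clamps to the nearest measured view outside the measured range.

```python
import numpy as np


def fill_missing_views(sparse_sino, measured_idx, n_views):
    """Estimate a full-view sinogram from measured views by linear interpolation in angle."""
    all_idx = np.arange(n_views)
    full = np.empty((n_views, sparse_sino.shape[1]))
    for d in range(sparse_sino.shape[1]):          # one detector bin at a time
        full[:, d] = np.interp(all_idx, measured_idx, sparse_sino[:, d])
    return full


n_views, n_det = 12, 5
true_sino = np.add.outer(np.arange(n_views, dtype=float), np.arange(n_det, dtype=float))
measured = np.array([0, 3, 6, 9, 11])               # sparse-view acquisition
estimate = fill_missing_views(true_sino[measured], measured, n_views)
```

This toy sinogram is linear in angle, so the interpolation recovers it exactly. Real sinograms are not linear in angle, and this rule blurs their fine structure, which is the gap that sinogram-domain networks target.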

The third approach combines the first and second approaches [77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98]. In this type of method, image quality is improved by introducing data-consistency terms when training the neural network. [83] proposed an improved dual-domain U-Net (MDD-U-net), which combined sinogram-domain and image-domain losses. [87] proposed an algorithm that combined a deep CNN with the wavelet transform coefficients of low-dose CT images. In this method, the directional components of artifacts were extracted by a directional wavelet transform, based on intra-band and inter-band correlations. [86] proposed a frame-based denoising algorithm using a wavelet residual network, which combined the expressive power of deep learning with the performance guarantees of frame-based denoising. Apart from wavelet methods, [97] proposed a functional optimisation method with deep learning for this low-dose reconstruction problem, which combined the Radon inversion operator with the disentanglement of each component.

- Advantages: Hybrid-domain methods apply DL to both the raw projections and the reconstructed images, so the reconstructed images can achieve higher quality than with single-domain methods. By using DL in two distinct domains, they can recover images that are closer to the ground truth.

- Disadvantages: Dual-domain methods require much larger datasets because they involve two training procedures, which increases computational complexity and training time.
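A dual-domain objective of the kind used in [83] can be sketched as an image-domain term plus a weighted sinogram-domain (data-consistency) term. In this minimal sketch, the matrix `A` stands in for the forward projector and the weight `lam` is a hypothetical hyperparameter. The exact losses and weights of the reviewed methods differ.

```python
import numpy as np


def dual_domain_loss(x_pred, x_ref, A, sino_ref, lam=0.5):
    """Image-domain MSE plus lam times the sinogram-domain MSE of the re-projected prediction."""
    image_term = np.mean((x_pred - x_ref) ** 2)
    sinogram_term = np.mean((A @ x_pred - sino_ref) ** 2)   # data consistency with the projections
    return image_term + lam * sinogram_term


rng = np.random.default_rng(0)
A = rng.standard_normal((40, 16))        # hypothetical projector: 40 ray sums of a 16-pixel image
x_ref = rng.random(16)
sino_ref = A @ x_ref
x_pred = x_ref + 0.05 * rng.standard_normal(16)
```

Two domains mean two sets of targets and two error terms per training example, which is part of why these methods need more data and compute.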

The fourth, recently developed approach uses intelligent CT networks [99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116] to transform measured data directly into images. For example, the data-to-image transformation method AUTOMAP converts measured data directly into reconstructed images [115]. However, the fully connected layers used in AUTOMAP make it difficult to implement in practice because of their high dimensionality. [111] proposed a deep efficient end-to-end reconstruction (DEER) network for few-view breast CT reconstruction, which requires only O(N) parameters, where N is the side length of the image to be reconstructed. [102] was inspired by unrolling the proximal gradient-based optimisation algorithm to a limited number of iterations, replacing the proximal term with a trainable deep neural network. It proposed an end-to-end solution that reconstructs full-dose tomographic images directly from low-dose measurements. [101] proposed a direct reconstruction framework built on a deep learning architecture with three parts: a denoising part, a reconstruction part and a super-resolution (SR) part. In the reconstruction part, a new dual U-Net generator (DUG) was used to learn the conversion from sinograms to images.

- Advantages: Direct transformation is regarded as the most advanced kind of method because it can automatically learn features and complex patterns through the many layers of deep neural networks.

- Disadvantages: Using the entire sinogram as network input demands massive memory and imposes a tremendous computational burden. Processing high-dimensional measurements can therefore be very challenging because of the high processing cost.
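Simple arithmetic quantifies the memory problem. A single fully connected layer that maps every sinogram sample to every image pixel, as in AUTOMAP-style direct mapping, needs (views × detector bins) × (pixels) weights. The sizes below are illustrative assumptions, not the configuration of any reviewed network: equal numbers of views, detector bins and image side length, float32 weights, and no biases.

```python
def fc_layer_weights(n_views: int, n_det: int, image_side: int) -> int:
    """Weights in one dense layer from a (n_views x n_det) sinogram to an image_side^2 image."""
    return (n_views * n_det) * (image_side * image_side)


for side in (256, 512):
    weights = fc_layer_weights(side, side, side)
    gib = weights * 4 / 2**30          # float32 = 4 bytes per weight
    print(f"{side}: {weights:,} weights, {gib:.0f} GiB")
# 256: 4,294,967,296 weights, 16 GiB
# 512: 68,719,476,736 weights, 256 GiB
```

When views, detector bins and image side all scale with N, the count grows as N^4, so doubling the resolution multiplies the layer size by 16.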

Applications in different dimensions

In practice, radiologists can obtain pathological information more accurately and reliably by reviewing adjacent slices. Three-dimensional reconstruction of a tumor from magnetic resonance (MR) or computed tomography (CT) scans reveals key information that cannot be obtained from 2D images. Computed tomography works by acquiring hundreds or thousands of 2D digital projections during a 360-degree rotation around an object. Reconstruction algorithms then turn these 2D projections into a 3D CT volume, which can be viewed and sliced at any angle. However, the resolution of CT and MR images in the z-direction is rather low compared with that in the x- and y-directions, so the quality of three-dimensional reconstructed images is lower. Therefore, optimising networks and extending them from 2D to 3D or even 4D is a good opportunity for improvement, so that denoising models can recover more structural details. 4DCT records multiple images over time, allowing scans to be played back as a video so that internal movement can be tracked. Accordingly, 3D [32, 34, 49, 89, 97, 98, 117,118,119,120,121,122,123] and even 4D [59, 120, 124,125,126] applications have been proposed. For example, in [120], the end-to-end DeepOrganNet framework was based on a trivariate tensor-product deformation technique. Smooth deformation fields were obtained from multiple templates, so that 3D or 4D lung models of various geometric shapes could be reconstructed efficiently and effectively. [117] proposed using convolutional auto-encoders (CAEs) to address the low through-plane resolution. It developed a CAE-based interslice interpolation framework (CARISI) that uses information-rich latent descriptors extracted from the input 2D images.
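Learned interslice interpolation such as CARISI is measured against a conventional baseline: linear interpolation along z between adjacent slices. The sketch below assumes a (slices, height, width) volume and an integer upsampling factor. This kind of fixed interpolation cannot recover structure that is absent from both neighbouring slices, and learned methods aim to improve on it.

```python
import numpy as np


def interpolate_slices(volume, factor):
    """Linearly interpolate (factor - 1) new slices between each pair of adjacent slices."""
    n_slices = volume.shape[0]
    z_new = np.linspace(0, n_slices - 1, (n_slices - 1) * factor + 1)
    lower = np.floor(z_new).astype(int)
    upper = np.minimum(lower + 1, n_slices - 1)
    w = (z_new - lower)[:, None, None]          # distance from the lower slice
    return (1.0 - w) * volume[lower] + w * volume[upper]


volume = np.stack([np.full((4, 4), float(z)) for z in range(5)])   # intensity equals slice index
dense = interpolate_slices(volume, factor=3)
```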

Applications in semi-supervised/unsupervised manner

Most deep learning methods used in image reconstruction rely on supervised learning, in which the neural network learns the mapping between noisy low-dose images and noise-free (or high-dose) labelled images. However, it is very difficult or even impossible to obtain noise-free labelled images. In low-dose CT, experiments that require two exposures, at low and high doses, are rarely approved by an Institutional Review Board (IRB) because they greatly increase the radiation risk to the patient. For this reason, in the AAPM challenge, low-dose data were produced by inserting simulated noise into the full-dose projection raw data. Training neural networks with unpaired data, or with only a few paired data, is therefore vital in image reconstruction. Semi-supervised learning combines a small fraction of paired data with a large fraction of unpaired data during training. It falls between unsupervised learning (with no labelled training data) and supervised learning (with only labelled training data). Semi-supervised and unsupervised studies reviewed include [39, 66, 74, 127,128,129,130,131,132,133,134,135,136,137,138,139,140]. For example, [129] proposed unsupervised model-based deep learning (MBDL) for LDCT reconstruction. Its network was trained with only the LDCT dataset using the in-group maximum a posteriori (G-MAP) loss function. In [59], a DNN was first trained with the Poisson unbiased risk estimator (PURE) to denoise CT measurement data, and CT images were then reconstructed with filtered back projection (FBP). The authors then proposed a weighted Stein's unbiased risk estimator (WSURE) based on the CT forward model, which trained a DNN to denoise CT signals before FBP reconstruction.
Beyond unsupervised learning, [128] proposed a deep neural network formulated in terms of penalised weighted least squares (PWLS) for low-dose CT reconstruction, which can be trained in a self-supervised manner without ground-truth information. [139] proposed Noise2Inverse, a deep CNN-based denoising method for linear image reconstruction algorithms that requires no additional clean or noisy data.
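Paired low- and high-dose scans are rarely available, so low-dose data are often simulated from full-dose projections, as in the AAPM challenge. The sketch below uses a deliberately simplified model: monoenergetic Beer-Lambert attenuation with Poisson photon counts only. It is not the noise-insertion procedure used to create the AAPM data; realistic tools typically also model effects such as electronic noise. The incident photon counts `i0` are hypothetical.

```python
import numpy as np


def simulate_low_dose(line_integrals, i0, rng):
    """Re-sample projections with Poisson photon noise for incident count i0 per ray."""
    expected_counts = i0 * np.exp(-line_integrals)      # Beer-Lambert law
    counts = rng.poisson(expected_counts)
    counts = np.maximum(counts, 1)                      # avoid log(0) under photon starvation
    return -np.log(counts / i0)                         # back to line integrals


rng = np.random.default_rng(0)
full_dose = np.full(10_000, 2.0)                        # toy projection values
err_high = simulate_low_dose(full_dose, 1e5, rng) - full_dose
err_low = simulate_low_dose(full_dose, 1e3, rng) - full_dose   # 100x fewer photons
```

The variance of −log(counts/i0) is approximately 1/(expected counts). With 100 times fewer photons, the standard deviation of the projection noise therefore grows by roughly a factor of 10.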

Applications in unrolling dynamics

The unrolling dynamics approach unrolls traditional iterative reconstruction methods into deep learning frameworks, so that the benefits of conventional iterative approaches and of deep learning can be combined.

[80] used a CNN in an unrolled iterative scheme in which a learned alternating minimisation method acted as a forward operator in a deep neural network. [50] unrolled the proximal gradient descent algorithm for iterative image reconstruction into a finite number of iterations. It used a CNN in place of the cost function, which significantly reduced the memory required and the training time. AirNet [77] combined analytic reconstruction (AR) and iterative reconstruction (IR) using a modified proximal forward–backward splitting (PFBS) scheme. By unrolling PFBS into IR, AirNet combined the benefits of AR, IR and DNNs. MetaInv-Net [96] proposed a novel unrolling dynamics model that needs far fewer trainable parameters, because it only learns an initialiser for the conjugate gradient (CG) algorithm, without image priors or hyperparameter settings. The performance, efficiency and generalisability of MetaInv-Net were reported to be superior.

Combining DL models with conventional models provides better interpretability than DL models alone. Training with a small dataset can also be feasible because unrolling dynamics methods need fewer parameters. Following [96], unrolling dynamics models differ in how they structure the image reconstruction subproblem and the image denoising subproblem, with or without learnable parameters. Table 3 categorises several unrolling dynamics methods according to the design of these subproblems.
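The core idea of unrolling can be shown with a fixed number of proximal gradient iterations whose step sizes and thresholds are explicit per-iteration parameters. In this minimal sketch, the proximal operator is soft-thresholding, as in ISTA. It stands in for the learned CNN used by the reviewed methods. In a trained network, `steps`, `thresholds` and the proximal operator itself would be learned end to end. The names are illustrative.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||v||_1 (placeholder for a learned denoiser)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def unrolled_pgd(A, b, steps, thresholds, prox=soft_threshold):
    """K unrolled iterations: gradient step on 0.5*||A x - b||^2, then the proximal step."""
    x = np.zeros(A.shape[1])
    for eta, t in zip(steps, thresholds):      # one "layer" per unrolled iteration
        x = prox(x - eta * (A.T @ (A @ x - b)), t)
    return x


b = np.array([-2.0, 0.5, 3.0])
one_layer = unrolled_pgd(np.eye(3), b, steps=[1.0], thresholds=[1.0])
```

Each unrolled iteration contributes only a step size and a threshold here, which illustrates why unrolled models can have few trainable parameters.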

Other applications

In recent years, generative adversarial networks (GANs) have been extensively developed for low-dose CT reconstruction [71, 73, 109, 118, 123, 127, 132, 134, 146,147,148,149,150,151,152,153,154,155]. In contrast to patch-based convolutional neural networks (CNNs), [147] proposed fully convolutional network (FCN)-based denoising networks trained on full-size images. Because these networks reuse the underlying feature maps, their computational efficiency is very high. During training, the denoising network was combined with a CNN-based classifier to ensure that the generated high-quality image remained similar to the input image. The classifier incorporated the CT noise model to evaluate the consistency between the FBP-reconstructed image and the denoised image. With this complementary structure, the entire network becomes a type of GAN. Another current trend is to apply more complex loss functions to overcome the observed smoothing artifacts [18, 54, 71, 95, 100, 109, 123, 129, 130, 134, 146, 150, 151, 154, 156,157,158,159]. In particular, [159] compared the following loss functions:

- two pixel-level losses (mean squared error and mean absolute error);
- a perceptual loss based on the Visual Geometry Group network (VGG loss);
- the adversarial loss of a Wasserstein generative adversarial network with gradient penalty (WGAN-GP);
- their weighted sum.

Evaluations based on tSNR, the noise power spectrum (NPS) and the modulation transfer function (MTF) showed that a CNN trained with VGG loss denoised low-dose images more naturally than one trained without it. Adding WGAN-GP loss further improved the noise reduction of the VGG-loss CNN.
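The weighted-sum objective compared in [159] can be sketched as follows. Everything specific here is an assumption. The weights are arbitrary. Finite-difference image gradients stand in for VGG feature maps; a real perceptual loss would use a pretrained network. The adversarial term is the WGAN generator loss, computed from critic scores supplied by the caller.

```python
import numpy as np


def gradient_features(img):
    """Hypothetical stand-in for VGG feature maps: vertical and horizontal finite differences."""
    return np.concatenate([np.diff(img, axis=0).ravel(), np.diff(img, axis=1).ravel()])


def composite_loss(pred, target, critic_scores, weights=(1.0, 1.0, 0.1, 1e-3),
                   features=gradient_features):
    """Weighted sum of MSE, MAE, a feature-space (perceptual-style) loss and a WGAN term."""
    w_mse, w_mae, w_perc, w_adv = weights
    mse = np.mean((pred - target) ** 2)
    mae = np.mean(np.abs(pred - target))
    perceptual = np.mean((features(pred) - features(target)) ** 2)
    adversarial = -np.mean(critic_scores)      # generator wants high critic scores
    return w_mse * mse + w_mae * mae + w_perc * perceptual + w_adv * adversarial


target = np.arange(16.0).reshape(4, 4) / 16.0
shifted = target + 0.1                          # uniform intensity offset
loss_shift = composite_loss(shifted, target, critic_scores=np.zeros(4))
```

Here the pixel losses penalise a uniform offset but the gradient-based feature term does not. This hints at why feature-space losses respond to structure and texture rather than to exact intensities.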

In addition, [160] proposed a sparse reconstruction framework (aNETT) for solving inverse problems. [161] proposed quadratic neurons, in which the inner product in conventional artificial neurons is replaced with a quadratic operation on the inputs, thereby enhancing the capability of an individual neuron. It then used quadratic neurons to construct an encoder-decoder structure, referred to as the quadratic autoencoder, and applied it to low-dose CT denoising. [162] employed deep reinforcement learning to train a system that automatically adjusts parameters in a human-like manner during optimisation. Beyond medical applications, [163] explored the use of deep learning techniques for reconstructing baggage CT data and compared them with constrained reconstruction methods (Table 4).
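To illustrate what replacing the inner product with a quadratic operation can look like, the sketch below assumes the form ReLU((w_r·x + b_r)(w_g·x + b_g) + w_b·(x⊙x) + c). Treat this parametrisation as an assumption rather than the definitive definition from [161]. Setting `w_g = 0`, `b_g = 1`, `w_b = 0` and `c = 0` recovers an ordinary linear neuron, so this quadratic neuron generalises it.

```python
import numpy as np


def quadratic_neuron(x, w_r, b_r, w_g, b_g, w_b, c):
    """Assumed quadratic neuron: ReLU((w_r.x + b_r) * (w_g.x + b_g) + w_b.(x*x) + c)."""
    z = (w_r @ x + b_r) * (w_g @ x + b_g) + w_b @ (x * x) + c
    return max(float(z), 0.0)


def linear_neuron(x, w, b):
    """Conventional neuron: ReLU(w.x + b)."""
    return max(float(w @ x + b), 0.0)


x = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -1.0, 2.0])
zeros = np.zeros(3)
as_linear = quadratic_neuron(x, w, 0.1, zeros, 1.0, zeros, 0.0)   # reduces to linear_neuron(x, w, 0.1)
with_square_term = quadratic_neuron(x, w, 0.1, zeros, 1.0, np.ones(3), 0.0)
```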

Advantages and disadvantages of using deep learning methods

According to previous reviews [2,3,4,5,6,7], several advantages of deep learning-based image reconstruction are closely related to the shortcomings of conventional reconstruction methods.

(a) The reconstruction results of conventional methods are restricted by the lack of prior information, whereas DL-based techniques do not require prior knowledge and are more robust and generalisable. However, additional prior information can help deep learning-based methods achieve better results.

(b) Compared with conventional methods, deep learning-based methods are capable of handling massive amounts of data and learning complex patterns.

(c) Deep learning-based approaches are capable of real-time reconstruction, and their higher efficiency can reduce diagnosis time.

Although deep learning reconstruction (DLR) algorithms appear to be quite effective at enhancing image quality, some limitations and concerns remain to be addressed [2,3,4,5,6,7].

(a) Unlike traditional techniques, which are theoretically well understood, deep learning algorithms have a black-box decision-making mechanism. Neural networks are enormously complex, particularly in CT image reconstruction. Even if a DL reconstruction method produces the correct image, its rationale could be flawed.

(b) Traditional approaches may be simpler to implement, whereas DL methods require a complex network design and are challenging to train. The choice of parameters (and hyperparameters) is crucial in both cases and demands a significant amount of computation.

(c) The results of deep learning-based methods can be significantly affected by a small change in parameters, and their robustness and convergence remain open questions.

(d) Training deep learning-based methods can take much longer than traditional approaches, and a small modification may require training to be restarted.

The problem of unreliability is not easy to solve. To be adopted with confidence, deep learning methods need to be lawful, ethical and robust [164]. Data used for training must be correctly priced, and transactions with AI firms must be consolidated. Companies must clearly specify their policies on anonymization and consent, and patients must fully understand how their data will be used. To make data usable by AI, it must first be cleansed, purified, digitized, and centralized before being fed into algorithms. Finally, the data should be representative of the relevant demographics. Deep learning algorithms must be approved by a health authority before clinical use so that they are adequately supervised. Protocols should be established for error responsibility, for supervised and unsupervised AI roles, and for an equitable distribution of work between AI and radiologists, with agreements updated at predetermined intervals. If any errors occur, a thorough error analysis should be performed, and the results should be communicated to the companies involved. Such ethical training and integration can lead to dependable and trustworthy deep learning technology for medical imaging.

Effects of using deep learning methods

According to several comparison and phantom studies [107, 165,166,167,168,169,170,171,172,173,174,175,176,177,178,179], DL-based image reconstruction is superior to conventional reconstruction techniques in image quality and lesion detection.

[180] proposed a discriminative feature representation (DFR) approach that adapts well to various CT systems because it can be applied directly to DICOM images without the need for raw measurement data. DFR outperformed iterative total variation (TV) reconstruction in both visual and quantitative results, demonstrating good robustness and performance. A 3D feature constrained reconstruction method (3D-FCR) based on a 3D feature dictionary was proposed in [181]. When compared with the 3D-TV method in simulated and clinical experiments, 3D-FCR achieved a PSNR of 40.82 dB, versus 36.59 dB for 3D-TV. The DIRE network [34] was trained to learn the mapping from low-dose analytically reconstructed images to normal-dose IR reconstructed images. Compared with FBP, RED-CNN and ResNet, DIRE achieved the best PSNR and SSIM values, while FBP had the worst. [182] demonstrated the feasibility of the proposed material-decomposition-based deep learning model using two independent data groups, both of which showed significant improvement over standard dual-energy CT imaging. DP-ResNet [183] combined the traditional FBP reconstruction method with network processing in both the sinogram domain (SD-net) and the image domain (ID-net). Compared with FBP, TV, S-DFR and RED-CNN, DP-ResNet provided better image quality than the other four approaches while remaining efficient enough for practical applications.
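PSNR is logarithmic, so a gap in dB corresponds to a multiplicative ratio of mean squared errors. The sketch below computes PSNR as `10 * log10(data_range**2 / MSE)` and converts the 3D-FCR versus 3D-TV gap reported in [181] into an MSE ratio. The conversion assumes both methods were scored on the same reference images with the same data range, which is not stated above. The function names are our own.

```python
import numpy as np


def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


def mse_ratio_from_psnr_gap(psnr_a, psnr_b):
    """How many times larger method b's MSE is than method a's.

    Assumes the same reference images and data range for both methods:
    MSE_b / MSE_a = 10 ** ((PSNR_a - PSNR_b) / 10).
    """
    return 10.0 ** ((psnr_a - psnr_b) / 10.0)


ratio = mse_ratio_from_psnr_gap(40.82, 36.59)  # 3D-FCR vs 3D-TV in [181]
```

Under that assumption, the 4.23 dB gap means the 3D-TV reconstructions had roughly 2.6 times the mean squared error of 3D-FCR.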

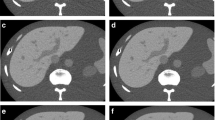

[179] compared the image and diagnostic quality of half-dose contrast-enhanced liver computed tomography (CT) reconstructed with a DEep Learning Trained Algorithm (DELTA) with that of standard-dose CT (SDCT) reconstructed with a commercial hybrid iterative reconstruction (HIR) method. Image noise in low-dose CT with DELTA (LDCT-DL) was significantly lower than in SDCT with HIR (SDCT-HIR) and low-dose CT with HIR (LDCT-HIR). The SNR and CNR of LDCT-DL were also significantly higher than those of the other two groups. Overall, LDCT with DELTA achieved approximately 49% dose reduction compared with SDCT with HIR while maintaining image quality on contrast-enhanced liver CT. [169] compared image noise characteristics, spatial resolution, and task-based detectability on deep learning reconstruction (DLR) images with those on images reconstructed with other state-of-the-art techniques. DLR images had lower noise than images from the other reconstructions, especially at low radiation dose settings, and their high-contrast spatial resolution and task-based detectability were better.
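Noise, SNR and CNR in studies such as [179] are measured in regions of interest (ROIs). The sketch below uses common textbook definitions, which are assumptions on our part since individual studies vary (for example, in how the noise term is pooled):

- Noise is the standard deviation of CT numbers in a homogeneous ROI.
- SNR is the ROI mean divided by that standard deviation.
- CNR is the absolute difference between lesion and background means divided by the background standard deviation.

It also shows the idealised quantum-noise scaling (noise proportional to 1/sqrt(dose)). This scaling explains why halving the dose would raise noise by about sqrt(2) if the reconstruction were left unchanged.

```python
import numpy as np


def roi_stats(image, mask):
    """Mean and sample standard deviation of the pixels selected by a boolean mask."""
    vals = image[mask]
    return float(vals.mean()), float(vals.std(ddof=1))


def snr(image, roi):
    """Signal-to-noise ratio of one ROI: mean / standard deviation."""
    mean, sd = roi_stats(image, roi)
    return mean / sd


def cnr(image, lesion_roi, background_roi):
    """Contrast-to-noise ratio: |mean_lesion - mean_background| / sd_background."""
    m_les, _ = roi_stats(image, lesion_roi)
    m_bg, sd_bg = roi_stats(image, background_roi)
    return abs(m_les - m_bg) / sd_bg


def dose_reduction_percent(dose_low, dose_standard):
    """Relative dose saving of a low-dose protocol, in percent."""
    return 100.0 * (1.0 - dose_low / dose_standard)


def quantum_noise_scale(dose_fraction):
    """Idealised noise multiplier for quantum-limited noise, sigma ~ 1/sqrt(dose)."""
    return 1.0 / np.sqrt(dose_fraction)
```

At roughly half dose the idealised noise multiplier is about 1.41. To match standard-dose noise, a reconstruction must therefore remove roughly 29% of the noise it would otherwise show.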

Conclusions and future work

As deep learning is applied more extensively in low-dose CT image reconstruction, it will undoubtedly confront more and more problems.

As mentioned earlier, DL methods are capable of processing huge amounts of data and extracting complex patterns from them. Conversely, a small sample size can be a severe issue in low-dose CT reconstruction. In such cases, network training and the results of DL methods become unreliable and can be even worse than those of conventional analytical methods. The following machine learning approaches (among others) have the potential to solve this problem: (1) unsupervised learning, which can address the fine-grained labelling problem; (2) transfer learning, which applies existing machine learning models or knowledge from related modalities and diseases to new tasks; (3) one-shot learning; (4) self-supervised learning; and (5) gradual learning.

In supervised learning-based technologies, normal-dose projection data are treated as the ground truth, even though they still contain unavoidable missing raw measurements and erroneous signals; this lowers the quality of the reconstructed images. Moreover, labelling CT data is a challenge that greatly limits the wide and in-depth application of deep learning. Acquiring labelled data pairs in clinical practice is very difficult because of the radiation risk to patients. In the reviewed supervised studies, clinical training data usually came from public datasets such as the NBIA/NCIA dataset [184], the AAPM-Mayo dataset and the piglet dataset [185]. To obtain paired low-dose measurements, earlier studies tended to add artificial noise, such as Gaussian noise, to normal-dose projection data to generate simulated paired datasets. Because the quality of the training dataset directly affects the performance and efficiency of DL reconstruction methods, developing new and reliable unsupervised learning-based DL methods is imperative for the future of low-dose CT reconstruction.
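The simulation of paired low-dose data can be sketched as follows. This is a minimal illustration, not the procedure of any specific reviewed study, and its details are assumptions:

- Normal-dose line integrals `p` are converted to expected photon counts `N0 * f * exp(-p)`, where `N0` is the incident photon count and `f` the dose fraction.
- Poisson noise is drawn, and optional Gaussian electronic noise is added.
- Counts are converted back with `-log`.

The parameter values are hypothetical. For comparison, the simpler additive-Gaussian approach mentioned above is included. Unlike additive Gaussian noise, the Poisson model produces signal-dependent noise, which is larger along highly attenuating rays.

```python
import numpy as np


def simulate_low_dose_sinogram(sinogram, incident_photons=1e5, dose_fraction=0.25,
                               electronic_sigma=0.0, rng=None):
    """Simulate a low-dose line-integral sinogram from a normal-dose one.

    Assumed model: counts ~ Poisson(N0 * f * exp(-p)) + optional Gaussian
    electronic noise, then p_low = -log(counts / (N0 * f)).
    """
    rng = np.random.default_rng() if rng is None else rng
    n0 = incident_photons * dose_fraction           # photons per ray at reduced dose
    expected = n0 * np.exp(-sinogram)               # Beer-Lambert expected counts
    counts = rng.poisson(expected).astype(float)    # quantum (photon-counting) noise
    if electronic_sigma > 0:
        counts += rng.normal(0.0, electronic_sigma, size=counts.shape)
    counts = np.clip(counts, 1.0, None)             # avoid log(0) for photon-starved rays
    return -np.log(counts / n0)


def add_gaussian_noise(sinogram, sigma, rng=None):
    """Simpler alternative: additive Gaussian noise on the projection data."""
    rng = np.random.default_rng() if rng is None else rng
    return sinogram + rng.normal(0.0, sigma, size=sinogram.shape)
```

Each simulated sinogram forms a training pair with its normal-dose counterpart. The realism of the pairs is limited by the fact, noted above, that the normal-dose data are themselves imperfect.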

Current DL frameworks are usually too generic and not finely tuned for specific situations. The topology and structure of deep learning networks can be improved by addressing the key problems of each application. From another point of view, the generalizability of DL methods has a crucial impact on their adaptability and usefulness in practice. Thus, it will be vital to propose novel deep learning-based methods that can be applied to different datasets, noise levels, scanners and vendors, and organs and body parts while remaining reliable in all cases. Furthermore, generalizability can be improved by exploiting feature similarity in data obtained across different modalities, which can also reduce the radiation dose needed for patients. Hence, inter-modality image reconstruction technologies, such as MR/CT and CT/PET, have become an emerging topic, and further study in this field is needed.

In addition, interpretability is critical in CT image analysis, and improving the interpretability of deep neural networks across its diverse tasks has always been a difficult problem. It is also vital to understand how to build human–machine collaboration in medical treatment. Lightweight deep neural networks are simple to deploy on portable medical equipment, allowing such equipment to perform more powerful functions; this is also an area worth investigating.

The ultimate goal of reconstructing noise-free images is to obtain the most accurate diagnosis and prediction. Beyond reconstructing high-quality images from limited raw measurements, LDCT technology can also be applied to other clinical tasks. Because of its efficiency and convenience, LDCT-based cancer screening is now widely used. Since nodule detection plays an important role in early-stage lung cancer screening, [186] proposed a multi-dimensional nodule detection network (MD-NDNet) to increase pulmonary nodule detection accuracy. Automatic nodule detection involves two steps: the detection of candidate nodules and the reduction of false-positive identifications. Low-dose measurements may increase the number of false-positive candidates and thus lead to less accurate diagnoses. In experiments on the LUNA16 data, MD-NDNet obtained a competition performance metric (CPM) score of 0.9008, indicating accurate and reliable results. Another task is the segmentation of body structures such as bones and the spine. Labels derived from segmented structures, for example bone composition, may offer a reasonably accurate reference for locating other organs, enabling further analysis. [187] presented a fully automatic framework for segmenting and identifying specific bones in LDCT chest images and achieved highly accurate results. Deep learning-based denoising algorithms developed for LDCT can also be used in other imaging applications. For example, a deep CNN based on residual learning (DeSpecNet) [188] was proposed to reduce speckle in retinal optical coherence tomography (OCT) images. When applied to OCT images, the technique significantly improved both visual quality and quantitative indicators. Among further clinical applications, [189] presented an input-image evaluation model that combines a fuzzy system with a neural network and reached a prediction accuracy of 92.56%.
The Adaptive Independent Subspace Analysis (AISA) method [190] was able to discover meaningful electroencephalogram activity in brain MRI scans, while a novel correlation learning mechanism (CLM) was proposed in [191] for the evaluation of CT brain scans. Another option under consideration is to skip the image reconstruction step entirely and obtain diagnostic or treatment classifications and predictions directly from raw measurements. Concentrating on task-specific performance ensures that all computation is dedicated to the task itself rather than to the unneeded intermediate step of image reconstruction [4].
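The CPM score reported for MD-NDNet [186] on LUNA16 is, as commonly defined for that challenge, the mean sensitivity at seven operating points of the FROC curve: 1/8, 1/4, 1/2, 1, 2, 4 and 8 false positives per scan. The sketch below computes it from an FROC curve sampled at increasing false-positive rates. It uses linear interpolation between sampled points, which is an assumption here; the official evaluation script may differ in detail.

```python
import numpy as np

# False-positive-per-scan operating points used by the LUNA16 CPM
FP_RATES = np.array([0.125, 0.25, 0.5, 1.0, 2.0, 4.0, 8.0])


def cpm(fps_per_scan, sensitivity):
    """Competition performance metric: mean sensitivity at the seven FP rates.

    `fps_per_scan` must be increasing; sensitivities between sampled FROC
    points are linearly interpolated (an assumption of this sketch).
    """
    fps = np.asarray(fps_per_scan, dtype=float)
    sens = np.asarray(sensitivity, dtype=float)
    return float(np.mean(np.interp(FP_RATES, fps, sens)))
```

Because the metric averages over low false-positive rates as well as high ones, reducing false-positive candidates (the second detection step described above) directly raises the score.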

In conclusion, deep learning has produced outstanding results in a variety of CT imaging tasks. However, further in-depth study of areas such as unsupervised methods and 3D/4D reconstruction applications is required to enable the widespread application of intelligent diagnosis and treatment solutions based on CT imaging.

References

Kitchenham B, Charters S (2007) Guidelines for performing systematic literature reviews in software. Engineering 45:1051

Alla Takam C et al (2020) Spark Architecture for deep learning-based dose optimization in medical imaging. Inf Med Unlocked 19:100335

Arndt C et al (2020) Deep learning CT image reconstruction in clinical practice. Rofo. https://doi.org/10.1055/a-1248-2556

Shi J et al (2020) Applications of deep learning in medical imaging: a survey. J Image Graph 25(10):1953–1981

Singh R et al (2020) Artificial intelligence in image reconstruction: the change is here. Phys Med 79:113–125

Zhang Z, Seeram E (2020) The use of artificial intelligence in computed tomography image reconstruction: a literature review. J Med Imaging Radiat Sci 51(4):671–677

Wang T et al (2021) A review on medical imaging synthesis using deep learning and its clinical applications. J Appl Clin Med Phys 22(1):11–36

Ronneberger O, Fischer P, Brox T (2015) U-Net: convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention–MICCAI 2015. Springer International Publishing, Cham

Benz DC et al (2020) Validation of deep-learning image reconstruction for coronary computed tomography angiography: impact on noise, image quality and diagnostic accuracy. J Cardiovasc Comput Tomogr 14(5):444–451

Akagi M et al (2019) Deep learning reconstruction improves image quality of abdominal ultra-high-resolution CT. Eur Radiol 29(11):6163–6171

Nakamura Y et al (2019) Deep learning–based CT image reconstruction: initial evaluation targeting hypovascular hepatic metastases. Radiol Artif Intell 1(6):e180011

Narita K et al (2020) Deep learning reconstruction of drip-infusion cholangiography acquired with ultra-high-resolution computed tomography. Abdom Radiol 45(9):2698–2704

Bazrafkan S et al (2021) To recurse or not to recurse: a low-dose CT study. Progr Artif Intell. https://doi.org/10.1007/s13748-020-00224-0

Chen H et al (2017) Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans Med Imaging 36(12):2524–2535

Chen H et al (2017) Low-dose CT via convolutional neural network. Biomed Opt Express 8(2):679–694

Chen Q et al (2020) Low-dose dental CT image enhancement using a multiscale feature sensing network. Nucl Instrum Methods Phys Res Sect A 981:164530

Chen Z, Yong Z (2019) Low-dose CT image denoising and pulmonary nodule identification. In: Proceedings of the 2019 2nd International Conference on Sensors, Signal and Image Processing. Association for Computing Machinery, Prague, Czech Republic, pp 40–44

Chi J et al (2019) Computed tomography (CT) image quality enhancement via a uniform framework integrating noise estimation and super-resolution networks. Sensors (Basel) 19(15):3348

Choi D et al (2019) Noise reduction method in low-dose CT data combining neural networks and an iterative reconstruction technique. In: Proceedings of SPIE. The International Society for Optical Engineering

Ding Q et al (2020) Low-dose CT with deep learning regularization via proximal forward-backward splitting. Phys Med Biol 65(12):125009

Du W et al (2017) Stacked competitive networks for noise reduction in low-dose CT. PLoS ONE 12(12):e0190069

Fu Z et al (2020) A residual dense network assisted sparse view reconstruction for breast computed tomography. Sci Rep 10(1):21111

Gantt C, Jin Y, Lu E (2019) Deep neural networks for sparse-view filtered backprojection imaging. In: Proceedings of SPIE. The International Society for Optical Engineering

Gao Y et al (2017) A deep convolutional network for medical image super-resolution. In: 2017 Chinese Automation Congress (CAC)

Gong K et al (2019) Low-dose dual energy CT image reconstruction using non-local deep image prior. In: 2019 IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC)

Han Y, Ye JC (2018) Framing U-net via deep convolutional framelets: application to sparse-view CT. IEEE Trans Med Imaging 37(6):1418–1429

Hizukuri A et al (2020) Construction of virtual normal dose CT images from ultra-low dose CT images using dilated residual networks. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Hu Z et al (2016) Image reconstruction from few-view CT data by gradient-domain dictionary learning. J Xray Sci Technol 24(4):627–638

Jiang Z et al (2019) Augmentation of CBCT Reconstructed From Under-Sampled Projections Using Deep Learning. IEEE Trans Med Imaging 38(11):2705–2715

Kang E, Ye JC (2018) Framelet denoising for low-dose CT using deep learning. In: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018)

Kofler A et al (2018) A u-nets cascade for sparse view computed tomography. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). Springer, Cham, pp 91–99

Li S et al (2020) Non-local texture learning approach for CT imaging problems using convolutional neural network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Li Z et al (2020) UNet-ESPC-Cascaded Super-Resolution Reconstruction In Spectral CT. In: 2020 15th IEEE International Conference on Signal Processing (ICSP)

Liu J et al (2019) Deep iterative reconstruction estimation (DIRE): approximate iterative reconstruction estimation for low dose CT imaging. Phys Med Biol 64(13):135007

Liu Y, Zhang Y (2018) Low-dose CT restoration via stacked sparse denoising autoencoders. Neurocomputing 284:80–89

Ma Y et al (2019) Low-dose CT with a deep convolutional neural network blocks model using mean squared error loss and structural similar loss. In: Proceedings of SPIE. The International Society for Optical Engineering

Ma Z et al (2016) Noise reduction in low-dose CT with stacked sparse denoising autoencoders. In: 2016 IEEE Nuclear Science Symposium, Medical Imaging Conference and Room-Temperature Semiconductor Detector Workshop (NSS/MIC/RTSD)

Matsuura M et al (2021) Feature-aware deep-learning reconstruction for context-sensitive X-ray computed tomography. IEEE Trans Radiat Plasma Med Sci 5(1):99–107

Meng M et al (2020) Progressive transfer learning strategy for low-dose CT image reconstruction with limited annotated data. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Mustafa W et al (2020) Sparse-view spectral CT reconstruction using deep learning. arXiv preprint arXiv:2011.14842

Park J et al (2018) Computed tomography super-resolution using deep convolutional neural network. Phys Med Biol 63(14):145011

Qiu D et al (2021) Multi-window back-projection residual networks for reconstructing COVID-19 CT super-resolution images. Comput Methods Progr Biomed 200:105934

Shan H, Kruger U, Wang G (2019) A novel transfer learning framework for low-dose CT. In: Proceedings of SPIE. The International Society for Optical Engineering

Shan H et al (2019) Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell 1(6):269–276

Wang J et al (2020) Deep learning based image reconstruction algorithm for limited-angle translational computed tomography. PLoS ONE 15(1):e0226963

Wang T et al (2020) Deep learning-based low dose CT Imaging. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Wang T et al (2019) Deep learning-based image quality improvement for low-dose computed tomography simulation in radiation therapy. J Med Imaging (Bellingham) 6(4):043504

Wang Y et al (2018) Iterative quality enhancement via residual-artifact learning networks for low-dose CT. Phys Med Biol 63(21):215004

Wu D et al (2019) Computational-efficient cascaded neural network for CT image reconstruction. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Wu D, Kim K, Li Q (2019) Computationally efficient deep neural network for computed tomography image reconstruction. Med Phys 46(11):4763–4776

Xie S et al (2018) Sparse-view CT reconstruction with improved GoogLeNet. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Xie S et al (2018) Artifact removal using improved GoogLeNet for sparse-view CT reconstruction. Sci Rep. https://doi.org/10.1038/s41598-018-25153-w

Yim D, Kim B, Lee S (2020) A deep convolutional neural network for simultaneous denoising and deblurring in computed tomography. J Instrum 15(12):12001

Zhang Y et al (2019) Deep residual network based medical image reconstruction. In: Chinese Control Conference, CCC

Zhang Z et al (2018) A sparse-view CT reconstruction method based on combination of DenseNet and deconvolution. IEEE Trans Med Imaging 37(6):1407–1417

Zhao J et al (2016) Few-view CT reconstruction method based on deep learning. In: 2016 IEEE Nuclear Science Symposium, Medical Imaging Conference and Room-Temperature Semiconductor Detector Workshop, NSS/MIC/RTSD 2016

Zhong A et al (2020) Image restoration for low-dose CT via transfer learning and residual network. IEEE Access 8:112078–112091

Ahn CK et al (2018) A deep learning-enabled iterative reconstruction of ultra-low-dose CT: use of synthetic sinogram-based noise simulation technique. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Beaudry J, Esquinas PL, Shieh CC (2019) Learning from our neighbours: a novel approach on sinogram completion using bin-sharing and deep learning to reconstruct high quality 4DCBCT. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Bellos D et al (2019) A convolutional neural network for fast upsampling of undersampled tomograms in X-ray CT time-series using a representative highly sampled tomogram. J Synchrotron Radiat 26(Pt 3):839–853

Dong J, Fu J, He Z (2019) A deep learning reconstruction framework for X-ray computed tomography with incomplete data. PLoS ONE 14(11):e024426

Dong X, Vekhande S, Cao G (2019) Sinogram interpolation for sparse-view micro-CT with deep learning neural network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Fu J, Dong J, Zhao F (2020) A deep learning reconstruction framework for differential phase-contrast computed tomography with incomplete data. IEEE Trans Image Process 29:2190–2202

Ghani MU, Karl WC (2018) Deep learning-based sinogram completion for low-dose CT. In: 2018 IEEE 13th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP)

Ghani MU, Karl WC (2020) Fast enhanced CT metal artifact reduction using data domain deep learning. IEEE Trans Comput Imaging 6:181–193

Kim K, Soltanayev S, Chun SY (2020) Unsupervised training of denoisers for low-dose CT reconstruction without full-dose ground truth. IEEE J Sel Top Signal Process 14(6):1112–1125

Lee H et al (2019) Machine friendly machine learning: interpretation of computed tomography without image reconstruction. Sci Rep 9(1):15540

Lee H, Lee J, Cho S (2017) View-interpolation of sparsely sampled sinogram using convolutional neural network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Lee H et al (2019) Deep-neural-network-based sinogram synthesis for sparse-view CT Image reconstruction. IEEE Trans Radiat Plasma Med Sci 3(2):109–119

Lee J, Lee H, Cho S (2018) Sinogram synthesis using convolutional-neural-network for sparsely view-sampled CT. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Li Z et al (2019) Promising generative adversarial network based sinogram inpainting method for ultra-limited-angle computed tomography imaging. Sensors (Basel) 19(18):3941

Liang K et al (2018) Improve angular resolution for sparse-view CT with residual convolutional neural network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Liu J, Li J (2020) Sparse-sampling CT sinogram completion using generative adversarial networks. In: 2020 13th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI)

Meng M et al (2020) Semi-supervised learned sinogram restoration network for low-dose CT image reconstruction. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Vekhande SS (2019) Deep Learning Neural Network-Based Sinogram Interpolation For Sparse-View CT reconstruction. Virginia Tech, Blacksburg

Yuan N et al (2019) Low-dose CT count-domain denoising via convolutional neural network with filter loss. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Chen G et al (2020) AirNet: fused analytical and iterative reconstruction with deep neural network regularization for sparse-data CT. Med Phys 47(7):2916–2930

Chen H et al (2018) LEARN: learned experts’ assessment-based reconstruction network for sparse-data CT. IEEE Trans Med Imaging 37(6):1333–1347

Cheng W et al (2019) Learned full-sampling reconstruction. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). Springer, Cham, pp 375–384

Cheng W et al (2020) Learned full-sampling reconstruction from incomplete Data. IEEE Trans Comput Imaging 6:945–957

Chun IY et al (2019) BCD-net for low-dose CT reconstruction: acceleration, convergence, and generalization. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). Springer, Cham, pp 31–40

Fang W, Li L (2019) Comparison of ring artifacts removal by using neural network in different domains. In: 2019 IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC)

Feng Z et al (2020) A preliminary study on projection denoising for low-dose CT Imaging Using Modified Dual-Domain U-net. In: 2020 3rd International Conference on Artificial Intelligence and Big Data, ICAIBD 2020

He J et al (2018) LdCT-Net: Low-dose CT image reconstruction strategy driven by a deep dual network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

He J et al (2019) Optimizing a parameterized plug-and-play ADMM for iterative low-dose CT reconstruction. IEEE Trans Med Imaging 38(2):371–382

Kang E et al (2018) Deep convolutional framelet denosing for low-dose CT via wavelet residual network. IEEE Trans Med Imaging 37(6):1358–1369

Kang E, Min J, Ye JC (2017) A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med Phys 44(10):e360–e375

Kim J, Han Y, Ye JC (2020) Cone-angle artifact removal using differentiated backprojection domain deep learning. In: 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI)

Lee D, Choi S, Kim HJ (2019) High quality imaging from sparsely sampled computed tomography data with deep learning and wavelet transform in various domains. Med Phys 46(1):104–115

Lee M, Kim H, Kim HJ (2020) Sparse-view CT reconstruction based on multi-level wavelet convolution neural network. Phys Med 80:352–362

Su T et al (2020) Generalized iterative sparse-view CT reconstruction with deep neural network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Wang J et al (2019) ADMM-based deep reconstruction for limited-angle CT. Phys Med Biol 64(11):115011

Ye DH et al (2018) Deep residual learning for model-based iterative ct reconstruction using plug-and-play framework. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)

Yuan H, Jia J, Zhu Z (2018) SIPID: a deep learning framework for sinogram interpolation and image denoising in low-dose CT reconstruction. In: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018)

Zhang H, Dong B, Liu B (2019) JSR-Net: a deep network for joint spatial-radon domain CT reconstruction from incomplete data. In: ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)

Zhang H et al (2020) MetaInv-Net: meta inversion network for sparse view CT image reconstruction. IEEE Trans Med Imaging. https://doi.org/10.1109/TMI.2020.3033541

Zheng A et al (2020) A dual-domain deep learning-based reconstruction method for fully 3D sparse data helical CT. Phys Med Biol. https://doi.org/10.1088/1361-6560/ab8fc1

Ziabari A et al (2018) 2.5D deep learning For CT image reconstruction using a multi-GPU implementation. In: 2018 52nd Asilomar Conference on Signals, Systems, and Computers

Ding Q et al (2020) AHP-Net: adaptive-hyper-parameter deep learning based image reconstruction method for multilevel low-dose CT. arXiv preprint arXiv:2008.04656

Ge Y et al (2020) ADAPTIVE-NET: deep computed tomography reconstruction network with analytical domain transformation knowledge. Quant Imaging Med Surg 10(2):415–427

Kandarpa VSS et al (2021) DUG-RECON: a framework for direct image reconstruction using convolutional generative networks. IEEE Trans Radiat Plasma Med Sci 5(1):44–53

Kida S et al (2018) Cone beam computed tomography image quality improvement using a deep convolutional neural network. Cureus 10(4):e2548

Kim H et al (2019) Extreme few-view CT reconstruction using deep inference. arXiv preprint arXiv:1910.05375

Li Y et al (2019) Learning to reconstruct computed tomography images directly from sinogram data under a variety of data acquisition conditions. IEEE Trans Med Imaging 38(10):2469–2481

Ma G, Zhu Y, Zhao X (2020) Learning image from projection: a full-automatic reconstruction (FAR) net for computed tomography. IEEE Access 8:219400–219414

Shi Y et al (2019) Combination strategy of deep learning and direct back projection for high-efficiency computed tomography reconstruction. In: Proceedings of the Third International Symposium on Image Computing and Digital Medicine. Association for Computing Machinery, Xi'an, China, pp 293–297

Steuwe A et al (2021) Influence of a novel deep-learning based reconstruction software on the objective and subjective image quality in low-dose abdominal computed tomography. Br J Radiol 94(1117):20200677

Syben C et al (2019) Technical note: PYRO-NN: python reconstruction operators in neural networks. Med Phys 46(11):5110–5115

Vizitiu A et al (2019) Data-driven adversarial learning for sinogram-based iterative low-dose CT image reconstruction. In: 2019 23rd International Conference on System Theory, Control and Computing (ICSTCC)

Wang W et al (2020) An end-to-end deep network for reconstructing CT images directly from sparse sinograms. IEEE Trans Comput Imaging 6:1548–1560

Xie H et al (2020) Deep efficient end-to-end reconstruction (DEER) network for few-view breast CT image reconstruction. IEEE Access 8:196633–196646

Xie H et al (2019) Dual network architecture for few-view CT trained on ImageNet data and transferred for medical imaging. In: Proceedings of SPIE-The International Society for Optical Engineering

Xie H, Shan H, Wang G (2020) 3D few-view CT image reconstruction with deep learning. In: 2020 IEEE 17th International Symposium on Biomedical Imaging Workshops (ISBI Workshops)

Ye DH et al (2019) Deep back projection for sparse-view CT reconstruction. In: 2018 IEEE Global Conference on Signal and Information Processing, GlobalSIP 2018-Proceedings

Zhu B et al (2018) Image reconstruction by domain-transform manifold learning. Nature 555(7697):487–492

Zhu J et al (2020) Low-dose CT reconstruction with simultaneous sinogram and image domain denoising by deep neural network. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Afshar P et al (2018) CARISI: convolutional autoencoder-based inter-slice interpolation of brain tumor volumetric images. In: 2018 25th IEEE International Conference on Image Processing (ICIP)

Shan H et al (2018) 3-D convolutional encoder-decoder network for low-dose CT via transfer learning from a 2-D trained network. IEEE Trans Med Imaging 37(6):1522–1534

Tong F et al (2020) X-ray2Shape: reconstruction of 3D liver shape from a single 2D projection image. In: 2020 42nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC)

Wang Y, Zhong Z, Hua J (2020) DeepOrganNet: on-the-fly reconstruction and visualization of 3D / 4D lung models from single-view projections by deep deformation network. IEEE Trans Vis Comput Graph 26(1):960–970

Xie H, Shan H, Wang G (2019) Deep encoder-decoder adversarial reconstruction (DEAR) network for 3D CT from few-view data. Bioengineering (Basel) 6(4):111

Yang H et al (2018) Improve 3D cone-beam CT reconstruction by slice-wise deep learning. In: 2018 IEEE Nuclear Science Symposium and Medical Imaging Conference Proceedings (NSS/MIC)

Zhang J et al (2020) 3D reconstruction for super-resolution CT images in the internet of health things using deep learning. IEEE Access 8:121513–121525

Madesta F et al (2019) Self-consistent deep learning-based boosting of 4D cone-beam computed tomography reconstruction. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Madesta F et al (2020) Self-contained deep learning-based boosting of 4D cone-beam CT reconstruction. Med Phys 47(11):5619–5631

Majee S et al (2019) 4D X-ray CT reconstruction using multi-slice fusion. In: 2019 IEEE International Conference on Computational Photography (ICCP)

Kuanar S et al (2019) Low dose abdominal CT image reconstruction: an unsupervised learning based approach. In: 2019 IEEE International Conference on Image Processing (ICIP)

Li Z et al (2019) SUPER learning: a supervised-unsupervised framework for low-dose CT image reconstruction. In: 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW)

Liang K et al (2020) A model-based unsupervised deep learning method for low-dose CT reconstruction. IEEE Access 8:159260–159273

Ozan UM, Ertas M, Yildirim I (2020) Low-dose CT reconstruction using deep generative regularization prior. arXiv e-prints. arXiv:2012.06448

Zhang M et al (2018) Sparse-view CT reconstruction via robust and multi-channels autoencoding priors. In: ACM International Conference Proceeding Series

Choi K, Vania M, Kim S (2019) Semi-supervised learning for low-dose CT image restoration with hierarchical deep generative adversarial network (HD-GAN). Annu Int Conf IEEE Eng Med Biol Soc 2019:2683–2686

Li D et al (2020) Unsupervised data fidelity enhancement network for spectral CT reconstruction. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Tang C et al (2019) Unpaired low-dose CT denoising network based on cycle-consistent generative adversarial network with prior image information. Comput Math Methods Med 2019:8639825

Wang L et al (2020) Semi-supervised noise distribution learning for low-dose CT restoration. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Yuan N, Zhou J, Qi J (2019) Low-dose CT image denoising without high-dose reference images. In: Proceedings of SPIE-The International Society for Optical Engineering

Zhu M et al (2019) Teacher-student network for CT image reconstruction via meta-learning strategy. In: 2019 IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC)

Zhu M et al (2020) Deep neural networks for low-dose CT image reconstruction via cooperative meta-learning strategy. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Hendriksen AA, Pelt DM, Batenburg KJ (2020) Noise2Inverse: self-supervised deep convolutional denoising for tomography. IEEE Trans Comput Imaging 6:1320–1335

Liang K et al (2018) A self-supervised deep learning network for low-dose CT reconstruction. In: 2018 IEEE nuclear science symposium and medical imaging conference proceedings (NSS/MIC)

Li Y et al (2020) Efficient and interpretable deep blind image deblurring via algorithm unrolling. IEEE Trans Comput Imaging 6:666–681

Yang Y et al (2016) Deep ADMM-Net for compressive sensing MRI. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. Curran Associates Inc., Barcelona, Spain, pp 10–18

Zhang K et al (2017) Learning deep CNN denoiser prior for image restoration. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

Yang Y et al (2020) ADMM-CSNet: a deep learning approach for image compressive sensing. IEEE Trans Pattern Anal Mach Intell 42(3):521–538

Adler J, Öktem O (2018) Learned primal-dual reconstruction. IEEE Trans Med Imaging 37(6):1322–1332

Bing X et al (2019) Medical image super resolution using improved generative adversarial networks. IEEE Access 7:145030–145038

Choi K, Kim SW, Lim JS (2018) Real-time image reconstruction for low-dose CT using deep convolutional generative adversarial networks (GANs). In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Ge R et al (2019) Stereo-correlation and noise-distribution aware ResVoxGAN for dense slices reconstruction and noise reduction in thick low-dose CT. Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics). Springer, Cham, pp 328–338

Guha I et al (2020) Deep learning based high-resolution reconstruction of trabecular bone microstructures from low-resolution CT scans using GAN-CIRCLE. Proc SPIE Int Soc Opt Eng. https://doi.org/10.1117/12.2549318

Hu Z et al (2019) Artifact correction in low-dose dental CT imaging using Wasserstein generative adversarial networks. Med Phys 46(4):1686–1696

Kim J et al (2020) Low-dose CT image restoration using generative adversarial networks. Inf Med Unlocked 21:1004

Podgorsak AR, Shiraz Bhurwani MM, Ionita CN (2020) CT artifact correction for sparse and truncated projection data using generative adversarial networks. Med Phys 48:615–626

Yang Q et al (2018) Low-dose CT image denoising using a generative adversarial network with Wasserstein distance and perceptual loss. IEEE Trans Med Imaging 37(6):1348–1357

Yang Q et al (2019) Generative low-dose CT image denoising. Advances in computer vision and pattern recognition. Springer, Cham, pp 277–297

Zhao Z, Sun Y, Cong P (2018) Sparse-view CT reconstruction via generative adversarial networks. In: 2018 IEEE Nuclear Science Symposium and Medical Imaging Conference Proceedings (NSS/MIC)

Ataei S, Alirezaie J, Babyn P (2020) Cascaded convolutional neural networks with perceptual loss for low dose CT denoising. In: Proceedings of the International Joint Conference on Neural Networks

Choi K, Lim JS, Kim SK (2020) StatNet: statistical image restoration for low-dose CT using deep learning. IEEE J Sel Top Signal Process 14(6):1137–1150

Gou S et al (2019) Gradient regularized convolutional neural networks for low-dose CT image enhancement. Phys Med Biol 64(16):165017

Kim B et al (2019) A performance comparison of convolutional neural network-based image denoising methods: the effect of loss functions on low-dose CT images. Med Phys 46(9):3906–3923

Obmann D et al (2020) Sparse anett for solving inverse problems with deep learning. In: ISBI Workshops 2020 International Symposium on Biomedical Imaging Workshops, Proceedings

Fan F, Shan H, Wang G (2019) Quadratic autoencoder for low-dose CT denoising. In: Proceedings of SPIE-The International Society for Optical Engineering

Shen C et al (2018) Intelligent parameter tuning in optimization-based iterative CT reconstruction via deep reinforcement learning. IEEE Trans Med Imaging 37(6):1430–1439

Mandava S, Ashok A, Bilgin A (2018) Deep learning based sparse view X-ray CT reconstruction for checked baggage screening. In: Proceedings of SPIE-The International Society for Optical Engineering

Mudgal KS, Das N (2020) The ethical adoption of artificial intelligence in radiology. BJR Open 2(1):20190020

Brusokas J, Petkevicius L (2020) Analysis of deep neural network architectures and similarity metrics for low-dose CT Reconstruction. In: 2020 IEEE Open Conference of Electrical, Electronic and Information Sciences, eStream 2020-Proceedings

Cao L et al (2020) A study of using a deep learning image reconstruction to improve the image quality of extremely low-dose contrast-enhanced abdominal CT for patients with hepatic lesions. Br J Radiol 93:20201086

Franck C et al (2021) Preserving image texture while reducing radiation dose with a deep learning image reconstruction algorithm in chest CT: a phantom study. Physica Med 81:86–93

Hata A et al (2020) Combination of deep learning-based denoising and iterative reconstruction for ultra-low-dose CT of the chest: image quality and lung-RADS evaluation. Am J Roentgenol 215(6):1321–1328

Higaki T et al (2020) Deep learning reconstruction at CT: phantom study of the image characteristics. Acad Radiol 27(1):82–87

Humphries T et al (2019) Comparison of deep learning approaches to low dose CT using low intensity and sparse view data. In: Progress in Biomedical Optics and Imaging-Proceedings of SPIE

Kim JH et al (2021) Validation of deep-learning image reconstruction for low-dose chest computed tomography scan: emphasis on image quality and noise. Korean J Radiol 22(1):131–138

Thammakhoune S, Yavuz E (2020) Deep learning methods for image reconstruction from angularly sparse data for CT and SAR imaging. In: Algorithms for synthetic aperture radar imagery XXVII. International Society for Optics and Photonics, vol 11393, p 1139306

Liu P et al (2020) Impact of deep learning-based optimization algorithm on image quality of low-dose coronary CT angiography with noise reduction: a prospective study. Acad Radiol 27(9):1241–1248

Nakai H et al (2020) Quantitative and qualitative evaluation of convolutional neural networks with a deeper U-net for sparse-view computed tomography reconstruction. Acad Radiol 27(4):563–574

Shin YJ et al (2020) Low-dose abdominal CT using a deep learning-based denoising algorithm: a comparison with CT reconstructed with filtered back projection or iterative reconstruction algorithm. Korean J Radiol 21(3):356–364

Singh R et al (2020) Image quality and lesion detection on deep learning reconstruction and iterative reconstruction of submillisievert chest and abdominal CT. Am J Roentgenol 214(3):566–573

Thammakhoune S, Yavuz E (2020) Deep learning methods for image reconstruction from angularly sparse data for CT and SAR imaging. In: Proceedings of SPIE The International Society for Optical Engineering

Urase Y et al (2020) Simulation study of low-dose sparse-sampling CT with deep learning-based reconstruction: usefulness for evaluation of ovarian cancer metastasis. Appl Sci (Switzerland) 10(13):4446

Zeng L et al (2021) Deep learning trained algorithm maintains the quality of half-dose contrast-enhanced liver computed tomography images: Comparison with hybrid iterative reconstruction: study for the application of deep learning noise reduction technology in low dose. Eur J Radiol 135:10987

Chen Y et al (2017) Discriminative feature representation: an effective postprocessing solution to low dose CT imaging. Phys Med Biol 62(6):2103–2131

Liu J et al (2018) 3D feature constrained reconstruction for low-dose CT imaging. IEEE Trans Circuits Syst Video Technol 28(5):1232–1247

Lyu T et al (2021) Estimating dual-energy CT imaging from single-energy CT data with material decomposition convolutional neural network. Med Image Anal 70:102001

Yin X et al (2019) Domain progressive 3D residual convolution network to improve low-dose CT imaging. IEEE Trans Med Imaging 38(12):2903–2913

Prior F et al (2017) The public cancer radiology imaging collections of the cancer imaging archive. Sci Data 4(1):170124

Yi X, Babyn P (2018) Sharpness-aware low-dose CT denoising using conditional generative adversarial network. J Digit Imaging 31(5):655–669

Wu Z et al (2020) MD-NDNet: a multi-dimensional convolutional neural network for false-positive reduction in pulmonary nodule detection. Phys Med Biol 65(23):235053

Liu S, Xie Y, Reeves AP (2017) Individual bone structure segmentation and labeling from low-dose chest CT. Medical imaging 2017: computer-aided diagnosis. International Society for Optics and Photonics, Washington

Shi F et al (2019) DeSpecNet: a CNN-based method for speckle reduction in retinal optical coherence tomography images. Phys Med Biol 64(17):175010

Capizzi G et al (2020) Small lung nodules detection based on fuzzy-logic and probabilistic neural network with bioinspired reinforcement learning. IEEE Trans Fuzzy Syst 28(6):1178–1189

Ke Q et al (2019) Adaptive independent subspace analysis of brain magnetic resonance imaging data. IEEE Access 7:12252–12261

Woźniak M, Siłka J, Wieczorek M (2021) Deep neural network correlation learning mechanism for CT brain tumor detection. Neural Comput Appl. https://doi.org/10.1007/s00521-021-05841-x

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, M., Gu, S. & Shi, Y. The use of deep learning methods in low-dose computed tomography image reconstruction: a systematic review. Complex Intell. Syst. 8, 5545–5561 (2022). https://doi.org/10.1007/s40747-022-00724-7

DOI: https://doi.org/10.1007/s40747-022-00724-7