Abstract

The first-order temporal structure functions (SFs), i.e., the first-order statistical moment of absolute increments of scaled multichannel resting state EEG signals in healthy children and teenagers over a wide range of temporal separation (time lags) are computed. Our research shows that the sill level (asymptote) of the SF is mainly defined by a determinant of EEG correlation matrix reflecting the EEG spatial structure. The temporal structure of EEG is found to be characterized by power-law scaling or statistical-scale invariance over time scales less than 0.028 s and at least by two dominant frequencies differing by less than 0.3 Hz. These frequencies define the oscillation behavior of the SF and are mainly distributed within the range of 7.5–12.0 Hz. In this paper, we propose the combined Bessel and exponential model that fits well the empirical SF. It provides a good fit with the mean relative error fitting of 2.8 % over the time lag range of 1 s, using a sampling interval of 4 ms, for all cases under analysis. We also show that the hyper gamma distribution (HGD) fits to the empirical probability density functions (PDFs) of absolute increments of scaled multichannel resting state EEG signals at any given time lag. It means that only two parameters (sample mean of absolute increments and relevant coefficient of variation) may approximately define the empirical PDFs for a given number of channels. A three-dimensional feature vector constructed from the shape and scale parameters of the HGD and the sill level may be used to estimate the closeness of the real EEG to the “random” EEG characterized by the absence of temporal and spatial correlation.

Similar content being viewed by others

1 Introduction

Electroencephalogram (EEG) signals or EEG time series are the ones of the basic methods for analysis of brain activity in health and disease [1]. However, it is not yet fully known now how the EEG activity recorded at any location on the human scalp is formed. With a certain EEG signal, one can only guess something about the behavior of the underlying neuronal elements, but nobody can precisely reconstruct it since the relevant inverse problem does not have a unique solution. The absence of adequate physiological or mathematical ideas of EEG generation stimulates researchers to analyze EEG time series using various algorithms based on the concepts of different theories where the progress is evident. Since the foundations of these theories are fundamentally different, one can get a variety of descriptive measures concerning the same EEG signal. The most popular measures used in EEG time series analysis [2] came from the information theory [3], non-linear dynamics, and deterministic chaos theory [4].

Fulcher et al. [5] found that there are now over 9000 methods for time series analysis, which quantify a wide range of time series properties. Actually, a reduced set of 200 methods, including autocorrelation, auto-mutual information, stationarity, entropy, long-range scaling, correlation dimension, wavelet transforms, linear and non-linear model fits, and measures from the power spectrum, is enough to form a concise summary of the different behaviors of time series analysis methods applied to scientific time series [5].

It should be noted that the various entropy methods that are very popular in EEG time series analysis [1, 6, 7] like Sample Entropy (SampEn) [8], Lempel–Ziv complexity [9], auto-mutual information, Shannon’s entropy, and other approximate entropies are most similar according to Fulcher et al. [5] to the approximate entropy algorithm (ApEn) proposed by Pincus [10]. Unfortunately, current entropy measures are mostly unable to quantify the complexity of any underlying structure in the series, as well as determine if the variation arises from a random process [11]. Since a high entropy score indicates a random or chaotic series, and a low score indicates a high degree of regularity [11], it would be better to use them, at least just, for now, only for comparisons between different conditions (e.g., resting vs. task) or systems (e.g., young vs. old) as was suggested in [12].

The presence of chaos in time series is investigated through the correlation dimension D 2 [13] and Lyapunov exponent (LE) [14] methods. The D 2 reflects the self-similarity, and the maximal LE reflects the predictability. Zang et al. [15] found that both are associated with harmonic content in the time series. The fractional or scaling property is studied in terms of the Hurst exponent [16] and Rényi dimension [17].

Recent detailed examination of commonly used complexity measures from information theory, chaos theory, and random fractal theory for characterizing EEG time series shows that their variations with time are either similar or reciprocal, but the behaviors of some of them are counter-intuitive and puzzling [2]. It is not surprising, and one has to be careful with using various complexity measures adopted from theories mentioned above for EEG analysis. There are at least two reasons for that.

The first one is that the brain is not completely deterministic, and the stochasticity may influence its function in some cases [18]. Furthermore, time series arising from chaotic systems share with those generated by stochastic processes several properties like a wide-band power spectrum, a delta-like autocorrelation function, and an irregular behavior of the measured signals that make them very similar and, as a result, the distinction between them is not trivial [19]. Indeed, the D 2 values and ‘maximum likelihood estimate of the correlation dimension’ (DML value) found in [20] for the white (gamma, uniform, Gaussian, and k-distributed) and correlated stochastic time series consisted of 50,000 data points which suggest that these data have a low fractal dimension which might be interpreted as the presence of chaos. The LE estimated for the same time series also suggests this interpretation [20]. It would be better to use the D 2, the DML, and the LE, at least just, for now, for comparisons between different conditions (e.g., resting vs. task) or systems (e.g., young vs. old) as was suggested in [12] for using entropy measures.

The second reason is that a basic requirement for using measures adopted from non-linear dynamics and chaos theory implies the stationarity in the EEG time series, but it is not the case. Even detrended fluctuation analysis (DFA) specifically introduced by Peng et al. [21] to address non-stationarity estimates can be affected by very simple sinusoidal periodicities [22] or large-amplitude transient artifacts [23].

To avoid any speculations about what types of deterministic and/or stochastic processes govern the EEG signals, we will use here some properties of these signals that depend on processes underlying them but do not require knowing their exact nature. The one-dimensional probability density functions (PDFs) of the absolute increments of scaled multichannel resting state EEG signals calculated over a wide range of temporal separation (time lags) may be used as one of the properties [24]. It is not sufficient to infer the EEG dynamics, but it is enough to capture some of its features. It should be noted that the moment of order p of the relevant distribution represents the structure functions (SFs) of order p at a given time lag. The term “structure function” as such was proposed by Obukhov [25] but Kolmogorov [26, 27] was the first to introduce the formal definition of the second- and third-order SFs under a theoretical analysis of velocity difference of a turbulent fluid. Kolmogorov’s second-order SF is also known today as the variogram. The latter has been widely used for many years to quantify the spatial variability of spatial phenomena for many years in geostatistical studies (e.g., Gringarten and Deutsch [28]) as well as to describe a pseudo-periodic signal [29]. It is important that the SF approach is applicable to non-stationary time series. It does not require the mean estimation and is one of the several techniques available for calculating the generalized Hurst exponent.

Since the EEG absolute increment distributions are non-Gaussian [24], it is reasonable to use the SFs of various orders to analyze EEG data. It is more applicable than restricting the analysis using the autocorrelation function only. There are just a few examples of using variograms and SFs in neurosciences. The first attempt to investigate the brain’s electrical activity by deriving the second-order temporal SF for every separate electrode that appears was performed by Sergeev et al. in 1968 [30]. The application of the variogram technique for analysis of fundamental brain waves, as recorded by the EEG, was done by Conte et al. [31]. In their research, records from only four electrodes (CZ, FZ, OZ, and T4) were used and the multivariate variogram was calculated for the time series formed as the Euclidean norm of these four records. Timashev et al. [32] used the scale of fluctuations in the difference moment of the second-order SFs as an objective diagnosis of psychiatric disorders, such as schizophrenia, by analyzing the EEG signals recorded from scalp-mounted frontal electrodes at locations F3 and F4. The example of the first-order temporal SF calculated for the scaled EEG time series formed as the Euclidean norm of sixteen EEG signals was presented in [24]. Recently, Sleimen-Malkoun et al. [12] used a battery of multiscale metrics, including variogram to investigate the changes of cortical dynamics with aging. Chernyavskiy et al. [33] reported a successful attempt to classify EEG data of subjects with traumatic brain injury symptoms on the base of the spatiotemporal variogram computed from their EEG. In the latter case, the variogram is formally considered as isotropic but the authors said nothing about the number of electrodes (spatial sampling points) they used. This is important since for reliable estimation of two-dimensional isotropic variogram, Webster and Oliver [34] recommend ideally having 150 sampling points. For comparison, a minimum of some 30–50 increments is needed to estimate the one-dimensional variogram reliably as suggested in [35]. In this case, the variogram is only calculated for values of 15–25.

All results of the application of variograms and SFs for analysis of EEG time series mentioned above are very promising, and the studies in this area need to be continued. The present paper provides the detailed analysis of multivariate temporal SF of multichannel EEG. Generally, the SF may exhibit complex behavior over time lag and very short EEG time series may be not enough for its analysis. It imposes the limit on the applicability of the method.

This paper is organized as follows: the EEG data and the proposed method of their analysis are introduced in Sect. 2. Section 3 presents the results of the EEG analysis. The conclusions are drawn in Sect. 4. In the same section, the suggestions for further studies are proposed.

2 The methods

2.1 EEG data collection

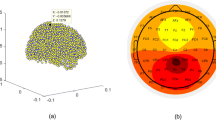

90 cases of the eye closed resting state EEG signals were obtained for several years from healthy children and teenagers aged 5–19.8 using computer-aided electroencephalography analyzer Entsefalan-131-03 (Medikom, Russia) in 16 channels with sampling frequency F d of 250 Hz. 16 Ag/AgCl electrodes were placed at Fp1, Fp2, F3, F4, F7, F8, C3, C4, P3, P4, T3, T4, T5, T6, O1, and O2 on the scalp according to the international 10–20 system. The option of linked earlobes was used as a reference for a montage. The written permission from parents for all subjects to participate in the study was obtained. Only artifact-free epochs with a length longer than 36 s have been selected for further analysis. An example of real 16-channel EEG fragment recorded from a subject s1 is shown in Fig. 1 where 100 μV amplitude scale (vertical line) and 1 s duration (horizontal line) are shown in the top right corner.

2.2 EEG data analysis

In this research, we used the method of the EEG signals analysis briefly presented in our previous paper [4]. Some relevant parts of it are reproduced below to help the understanding of the results given in Sect. 3.

Let {X(t) = [X 1(t), X 2(t), …, X m (t)]T, t = 1, 2,…, N} represent the observed EEG m-dimensional time series, where m is a number of channels (electrodes), here equal to 16, X(t) is an m-dimensional vector, X j (t) is the signal amplitude on the channel j expressed in microvolts at the discrete integer valued time (sampling point) t, N is the length of series, and the superscript T denotes the matrix transpose operation. The sampling interval (in seconds) equates to 1/F d.

Since signals reveal significant spread of amplitude values from subject to subject the original EEG time series is centered by subtracting their mean in every channel first and then scaled by the [det(Σ X )](1/2m), where det denotes the determinant, Σ X = E[δ X δ X T] is the sampling covariance matrix, δ X = X − E[X], and E[·] denotes the statistical expectation or average. As a result, any new vector Y(t) = δ X(t)/[det(Σ X )](1/2m) is dimensionless and has the same generalized variance independently on the subject since the determinant of the covariance matrix Σ Y = E[δ Y δ Y T] is equal to 1. Geometrically, the quantity [det(Σ Y )]1/2 determines the volume of the confidence ellipsoid for any particular confidence level, and the scaling proposed here makes the distributions of any vector Y to be equivalent in the sense that they occupy the same volume in m-dimensional space. It means that the ellipsoids with different orientations and different semi-axes but having the same generalized variance will be considered equivalent.

Define the absolute increment (relative displacement) ΔY τ as the Euclidean distance between two vectors Y(t) and Y(t + τ) which are separated by a dimensionless and relative lag parameter τ = 1, 2…, N − τ

The actual time difference (in seconds) between samples Y(t + τ) and Y(t) is calculated as the time lag τ divided by the sampling frequency F d, i.e., equal to τ/F d.

Recently, Trifonov and Rozhkov [24] have analyzed the PDFs of ΔY τ and found them closely matched to hyper gamma distribution (HGD) proposed by Suzuki [36]

where γ = αβ ν/α/Γ(ν/α), Γ(·) is the gamma function, α and ν are shape parameters, and β is the scale parameter. Generally, all these parameters may depend on τ, but for simplicity of notation the index τ is omitted from them.

The choice of given type of fitting function was based on the fact that the “random” EEG absolute increment ΔY τ (obtained from m-dimensional vector X(t) whose components are independent normal random variables having zero mean and variance σ 2) is distributed according to the scaled chi-distribution that is a special case of (2) when ν = m, α = 2, and β = 0.25/σ 2. It should be noted that both the HGD and the scaled chi-distribution are special cases of the Amoroso distribution [37]. The HGD is closed under scaling by a positive factor, and the random variable (E[ΔY 1]/E[ΔY τ ])·ΔY τ has the same distribution as ΔY 1.

Letting ν be m, and solving a single non-linear equation

where σ τ is the sample standard deviation one can estimate the value of parameter α. The scale parameter β is estimated then as

Since the EEG absolute increment distributions are non-Gaussian, a basic tool for ΔY τ analysis may be given by the pth-order SF S p (τ) which is defined as the expectation of the pth moment of ΔY τ

where τ max is maximal time lag value. At least for p = 2 (the SF S 2(τ) represents well-known multivariate variogram originally developed for spatial data analysis by Bourgault and Marcotte [38]) there is an upper limit for τ max. According to Petersen and Esbensen [39], τ max should not be higher than N/2 (rounded down).

In practical, data analysis order value p may range from 1 to at most 10 or so [40]. However, according to Fisher et al. [41], the first-order SF is more robust than higher-order SFs with respect to outliers in the absolute increment. This conclusion may be partly illustrated by comparing the coefficients of variation (CV) of the first- and the second-order SF in the limiting case of “random EEG.” Since the random variable ΔY τ is distributed according to the scaled chi-distribution, while ΔY 2 τ is distributed according to the scaled χ 2 distribution, then one can easily derive analytical expressions for CV1 and CV2 as follows:

Using these expressions, one can get that the ratio CV1/CV2 is slightly above 0.5 even for small m value. Preliminary analysis of real EEG data shows that using S 1 is preferable as having the smallest coefficient of variation CV1 as well. For this reason, in this study, we concentrate our attention on and discuss the first-order SF only.

3 The results

An example of the individual empirical PDF f(ΔY 1) and its HGD fit f(ΔŶ 1) is shown in Fig. 2. In this case, the value of N is equal to 9000 and corresponds to 36-s EEG record with the sampling frequency F d = 250 Hz. Time lag τ = 1 corresponds to the sampling interval of 0.004 s and the histogram bin size W 1 = 0.2. For comparison, the PDFs for the “random” EEG with σ n = 1 and for the original EEG with randomly permuted temporal sequence of time series X(t) are shown in the same Fig. 2. Random permutation of real EEG time series and generation of the “random” EEG time series was done in Matlab using randperm and randn functions, respectively. Visually, all three theoretical HGD f(ΔŶ 1) provide a reasonably good fit for all three empirical PDF f(ΔY 1).

The closeness of the empirical normalized histogram Δy 1 = W 1·ΔY 1 (Σ i Δy 1i = 1) to the fitting normalized histogram Δŷ 1 was checked by using Bhattacharyya coefficient BC(Δy 1, Δŷ 1) = Σ i sqrt(Δy 1i ·Δŷ 1i ) as a measure of similarity between them [42]. It was found that this coefficient equates to 0.999 for the HGD fitting to the empirical distribution in the case of real and “random” EEG time series and 0.997 in the case of real, but randomly permuted EEG time series. For comparison, the closeness of real and “random” EEG normalized histograms is about 0.147.

The same approach was used to check the closeness between the empirical normalized histograms Δy 1 and Δŷ 1 = (E[ΔY 1]/E[ΔY τ ])·W τ ·ΔY τ at different time lags τ under scaling mentioned above. We found that for randomly selected real EEG time series, and for time lags τ up to 100, this coefficient oscillates between 0.994 and 1 about a mean value of 0.997. It means that empirical distribution of ΔY τ is really closed under the scaling and has approximately the same parameters α and ν as the distribution of ΔY 1 has. This result allows us to use empirical PDFs estimated at unity time lag only in our further analysis.

Estimating α and β parameters at τ = 1 according to the approach mentioned above, we have found that their values are in the range [0.30, 0.71] and [12.28, 45.67], respectively. The analysis of the behavior of these parameters allows us to make a suggestion that they are not completely independent and that α decreases approximately exponentially with β. We could not test this suggestion carefully because we have not found yet empirical PDFs with extremely high values of β which may imply that real EEG becomes strongly deterministic. It seems we need to estimate α and β in this case theoretically as it was done in another extreme case of “random” EEG (in the sense defined above) when we get α = 2 and β = 0.25. The latter two parameters may serve as a reference point and the distance to it from any point on the αβ diagram can be used as a quantitative measure of the degree of temporal randomness in real EEG.

The values of the BC values for the whole set of 90 real EEG time series range between 0.994 and 0.999 with the average value of 0.998 and standard deviation of less than 0.001. Such result indicates that the HGD provides a good fit to the empirical PDFs. The closeness of any real EEG normalized histograms from our dataset to the “random” EEG normalized histogram expressed in terms of the BC value is in the range [0.013, 0.621].

The empirical first-order SFs over the τ max = 1000 (N = 9000) were calculated using Eq. (3). The three typical examples of S 1(τ) with lag τ up to 250 corresponding to the 16-channel real EEG time series, are shown in Fig. 3a. Figure 3b represents S 1(τ) for subject s1 (in the case of real EEG and its randomly permuted version), and for “random” EEG. The randomly permuted EEG means disregarding the temporal order of the original EEG time series in all channels simultaneously keeping the mean vector E[X] and the sampling covariance matrix Σ X unchanged. It is obvious that such permutation keeps the original inter-channel (spatial) correlation structure of any EEG time series, but destroys original temporal correlation within each channel.

Actually, Fig. 3a shows that the individual first-order SF S 1(τ) increases approximately exponentially with increasing time lag τ for the first few τ values, but starting with some τ* value the S 1(τ) begins to oscillate around its sill level V rather than approaches it monotonically. In geostatistical studies, the variogram (the second-order SF) exhibiting cyclicity (under-dumped behavior) has been termed a “hole-effect variogram” (e.g., Ma and Jones [43]). Such behavior means that there is a high temporal correlation between a given EEG sample and neighboring EEG samples as well as some relatively distant EEG samples. The curve s1 in Fig. 3a exhibits strong cyclicity while curves s2 and s3 exhibit low cyclicity which corresponds to a higher damping ratio in terms of under-damped motion.

At the same time, both randomly permuted real EEG, and “random” EEG should not reveal significant temporal correlation by definition, and one can see that the relevant S 1(τ) does not really depend on τ. They differ only in the sill level V. The first one is around the sill value corresponding the original EEG while the second one is close to the theoretical value V 0 of 5.569 corresponding to the “random” EEG time series for σ n = 1 and m = 16. These levels appear to be defined by the type of spatial correlation characterized by the sampling correlation matrix R X . According to Peña and Rodríguez [44], the determinant of R X summarizes the linear relationships between the variables and may be used as the scalar measure of multivariate linear dependence. Proposed in [44] measure named as effective dependence is defined by

It was found here that there is an empirical dependence between D e and the sill V which can be fitted by the non-linear expression

The value of V 0/V may serve as a measure of randomness of the spatial structure of real EEG.

The empirical dependence between D e and V derived from 90 cases of the eyes closed resting state EEG time series is shown in Fig. 4. The fitting parameter q is equal here to 0.535. (The coefficient of determination R 2 = 0.936 in the relevant log–log regression model). These findings approve the suggestion that the spatial EEG structure define the sill level of the first-order SFs. On the other hand, the temporal EEG structure is reflected in the under-damped oscillatory behavior of S 1(τ). It was found that this behavior depends on the subject’s individuality and appears most conspicuous in the resting state. As usual, the dominant oscillation frequency lies in the alpha range. The amplitudes of peaks and troughs attenuate with increasing lag distance and the sill level V here is only meaningful at the larger time lags, where vectors X (and Y) no longer exhibit any significant temporal correlation. The time lag τ 0 at which the S 1(τ 0) reaches the sill value is usually called as range, but in practice, it is not rigorously defined. Theoretically, the expected range value τ 0 should be equal to 0 for both first-order SFs corresponding to the randomly permuted real EEG and “random” EEG. And it is actually true as one can see from Fig. 3b.

It is reasonable to suggest that the cyclical pattern is typical for the spatial first-order SFs as well. This suggestion is not discussed because the number of channels is too small here, but it is indirectly supported by fMRI data analysis [45], where variograms waving behavior was found as the spatial lag distance increases.

It was found that all SFs S 1(τ) derived from EEG time series appear to exhibit scaling, i.e., S 1(τ) ∝ τ ζ at time lags τ ranging from 3 to 7 in dependence on the subject. To estimate the scaling exponent ζ, we used linear least squares fit to the SFs in log–log coordinates and R-squared method proposed by Pressel and Collins [46]. We let a lower bound on the coefficient of determination R 2 of 0.998 for our analysis. Such choice is explained here by a relatively low number of S 1(τ) samples within the range between 1 and τ 0, where S 1(τ 0) reaches (intersects) first the sill value V. Most likely by increasing the sampling frequency F d one can identify more distinct scaling regions with the breaks in S 1(τ) slope. It should be noted that according to [41, 46], the first-order SF scaling exponent ζ is simply the Hurst exponent H that has a clear physical meaning. We have found that the average value of ζ in our analysis is 0.88 ± 0.03 for time scales less than 0.028 s. It means that one-dimensional random process \(|{X(t)}|\) (and \(|{Y(t)}|\)) is characterized at these scales by persistent increments and long-range correlations. Since the Hurst exponent of such a process is related to the fractal dimension D by D = 2 − H, then D = 1.12 and this process has a distinctly less space filling. Within the EEG dataset considered here, the scaling behavior slightly depends on subject and condition during the eyes closed resting state EEG is recorded.

We adopt the idea of using the Bessel model for describing the pronounced hole-effect structure in the variogram function proposed in [45]. We propose here to fit the combined Bessel and exponential model S 1 *(τ) to the empirical first-order SF S 1(τ). The model S 1 *(τ) is defined as

where K is the order of the model, J 0(·) is the Bessel function of the first kind and zero order, p k is weight (p k = w k /J 0(θ k ), w k ≥ 0, Σ k w k = 1), ω k and θ k are frequency and phase parameters, respectively, and α 0 and β 0 are positive constants (α 0 > β 0). It should be noted that the model (4) is valid only if the derivative [S 1 *(τ)]′ ≤ 0 at τ → 0. This condition imposes a limitation on the choice of phase parameters θ k .

The beat pattern clearly pronounced in empirical S 1(τ) for large time lags (see the curve s1 in Fig. 3b) can arise from interference between at least two cosine signals of slightly different frequencies. Since J 0(z) for large argument z is just cosine (J 0(z) ~ cos(z − π/4)), the model (4) should have at least order 2 for the case of strong cyclicity. The mean values of frequency and phase parameter ω 0 and θ 0 for such a model can be estimated from the matching of root locations for function J 0(·) and C(τ) = (1 − S 1(τ)/V) within the time lag τ range [0, 260]. If R J0 is a set of actual roots of J 0(·) and r S1 is a set of roots of C(τ) estimated by linear interpolation, then ω0 and θ 0 are evaluated as regression coefficients in the simple linear regression model

where \(R_{J0}^{*}\) is the estimate of the known R J0.

We tried to fit the model (4) with K = 3 to the empirical S 1(τ). The examples of such a fitting are shown in Fig. 5. To find the estimates for model parameters, we used a simplified algorithm and estimate of ω 0. At first, the frequencies ω 1, ω 2, and ω 3 were estimated by finding the first three maximum values of the discrete form of integral \(\int_{0}^{{\tau_{ \hbox{max} } }} {C(\tau )\cos \left( {\omega \tau } \right){\text{d}}\tau }\) calculated with frequency step Δω = 0.001. This approach is based on the Neumann’s addition theorem and the fact that \(\int_{0}^{\infty } {J_{v} \left( {\omega \tau } \right)\cos \left( {\omega \tau } \right){\text{d}}\tau }\) = ∞ for any order v. The phases θ 1, θ 2, and θ 3 were estimated by finding the maximum ∫C(τ)J 0(ω k τ + θ k )dτ by varying θ k separately for k = 1, 2, and 3. After that the weights w 1, and w 2 from the range [0, 1] were chosen (w 3 = 1 − w 1 − w 2) by exhaustive search with step Δw 1,2 = 0.1 using the Nash and Sutcliffe criterion or F value [47, 48]

as the criterion of the goodness of fit. The same searching approach was used at the final step for finding parameters of α 0 and β 0 with step Δα 0 = 0.01 and Δβ 0 = 0.001, respectively, under condition α 0 > β 0.

Two examples of the model (4) fitting to the empirical S 1(τ): a–c Subject sK, 16 years old, (high cyclicity), ω 1 = 0.239 (f 1 = 9.51 Hz), θ 1 = 0.6, ω 2 = 0.232 (f 2 = 9.23 Hz), θ 2 = 0.95, ω 3 = 0.245 (f 3 = 9.75 Hz), θ 3 = 0.05, α 0 = 0.17, β 0 = 0.054, w 1 = 0.7, w 2 = 0.2, w 3 = 0.1; mean relative error fitting is 1.4 %; d Subject sR, 12 years old, (low cyclicity), ω 1 = 0.239 (f 1 = 9.51 Hz), θ 1 = −0.05, ω 2 = 0.157 (f 2 = 6.25 Hz), θ 2 = −0.05, ω 3 = 0.129 (f 3 = 5.13 Hz), θ 3 = 0.15, α 0 = 0.17, β 0 = 0.001, w 1 = 0.6, w 2 = 0.2, w 3 = 0.2, mean error fitting is 2 %; Error fitting = 100 % \(|{S_{1}} (\tau)- {S_{1}^{*}}(\tau)|/{S_{1}} (\tau)\), mean relative error fitting is calculated over the time lag τ range of 1 s (τ \(\in\) [0, 250]); [S 1 *(0)]′ are everywhere ≥ 0

The approach mentioned above does not yield the optimal set of estimated parameters to the model (4). However, the mean error fitting defined as 100 %·E \(\left[\left|{S_{1}} (\tau)- {S_{1}^{*}}(\tau)\right|/{S_{1}} (\tau) \right]\) is 2.8 % for over the time lag τ within the range [0, 250] for all cases under analysis. It was found that frequencies f 1, f 2, and f 3 are mainly distributed within the range of 7.5–12.0 Hz (84.4 % of all cases) with two clearly pronounced modes located at 9.75 and 10.75 Hz. There is also a less pronounced mode located at 6.25 Hz corresponding to the frequency distribution within the range of 4.5–7.5 Hz (15.6 % of all cases). These frequency estimates may be considered as trivial but for the high correlation of 0.94 between two nearest frequencies and the difference about 0.3 Hz between them that is observed for roughly 70 % of all cases.

4 Conclusions and future work

The analysis of the first-order SFs derived from 90 eye closed resting state EEG records in healthy children and teenagers shows that this function contains integral information about spatial and temporal EEG organization. It was found that the sill level (asymptote) V of the S 1(τ) indicates how strong the correlations between channels take place and, therefore, reflects the EEG spatial organization. The shape α and scale β parameters of the HGD, successfully representing the empirical distribution of the EEG absolute increment, characterize the degree of temporal randomness in real EEG. It is reasonable to construct a three-dimensional feature vector FV = (α, β, V)T which may be used to estimate the closeness of the real EEG to the “random” EEG (in the sense defined above). In the case of 16-channel EEG, the reference FVR has components 2, 0.25 and 5.569, respectively.

The EEG temporal organization is also characterized by the scaling exponent ζ (or Hurst exponent H in our case) and at least by two frequencies defining the type of S 1(τ) cyclicity around the sill level. The time scale where EEG records appear to exhibit scaling depends on subject varying between 0.012 s and 0.028 s. The average value of ζ (or H) in our analysis is 0.88 ± 0.03. It means that one-dimensional random process \(|{X(t)}|\) at low time scales is characterized by persistent increments and long-range correlations. The mere fact that ζ has a value close to unity and relatively small coefficient of variation can be considered only preliminary. It calls for further investigations.

The next characteristic defining the temporal structure of EEG is the cyclicity of S 1(τ). In 84.4 % of cases, the derived S 1(τ) exhibited relatively high oscillation (under-damped behavior) around the sill level with frequencies mainly distributed within the range of 7.5–12.0 Hz. At least two dominant frequencies differing by less than 0.3 Hz were found within this range. This fact is rather interesting and needs to be understood.

It is shown that the combined Bessel and exponential low-order model can capture the behavior of the first-order SFs exhibiting high cyclicity. The third-order model provides the mean relative error fitting of 2.8 % over the time lag range of 1 s, using a sampling interval of 4 ms, for all cases under analysis.

Our future studies will focus on the analysis of EEG recorded at a higher sampling frequency to identify more distinct scaling regions with the breaks in the slope of the first-order SF. Using such data can allow us to understand more carefully underlying stochastic processes and to suggest appropriate improvements in the model (4). Assuming that S 1(τ) might be a solution of some forced second-order ordinary differential equation it would be interesting to think about the possible form of such equation.

References

Lopes da Silva F (2010) EEG: origin and measurement. In: Mulert C, Lemieux L (eds) EEG–fMRI: physiological basis, technique, and applications. Springer, Berlin, pp 19–38. doi:10.1007/978-3-540-87919-0_2

Gao JB, Hu J, Tung WW (2011) Complexity measures of brain wave dynamics. Cogn Neurodyn 5(2):171–182. doi:10.1007/s11571-011-9151-3

Ince RAA, Mazzoni A, Petersen RS, Panzeri S (2010) Open source tools for the information theoretic analysis of neural data. Front Neurosci 4(1):62–70. doi:10.3389/neuro.01.011.2010

Rodríguez-Bermúdez G, García-Laencina PJ (2015) Analysis of EEG signals using nonlinear dynamics and chaos: a review. Appl Math Inf Sci 9(5):2309–2321

Fulcher BD, Little MA, Jones NS (2013) Highly comparative time-series analysis: the empirical structure of time series and their methods. J R Soc Interface 10:20130048. doi:10.1098/rsif.2013.0048

Gao JB, Hu J, Tung WW (2012) Entropy measures for biological signal analyses. Nonlinear Dyn 68(3):431–444. doi:10.1007/s11071-011-0281-2

Gao JB, Hu J, Liu F, Cao YH (2015) Multiscale entropy analysis of biological signals: a fundamental bi-scaling law. Front Comput Neurosci 9(64):1–9. doi:10.3389/fncom.2015.00064

Richman JS, Moorman JR (2000) Physiological time-series analysis using approximate entropy and sample entropy. Am J Physiol Heart Circ Physiol 278(6):H2039–H2049

Lempel A, Ziv J (1976) On the complexity of finite sequences. IEEE Trans Inf Theory 22(1):75–81

Pincus SM (1991) Approximate entropy as a measure of system complexity. Proc Natl Acad Sci USA 88:2297–2301

Gan CC, Learmonth G (2015) Comparing entropy with tests for randomness as a measure of complexity in time series. http://arxiv.org/abs/1512.00725

Sleimen-Malkoun R, Perdikis D, Müller V, Blanc J-L, Huys R, Temprado J-J, Jirsa VK (2015) Brain dynamics of aging: multiscale variability of EEG signals at rest and during an auditory oddball task1,2,3. eNeuro 2(3) e0067-14.2015 1–e0067-14.2015 21. doi:10.1523/ENEURO.0067-14.2015

Grassberger P, Procaccia I (1983) Characterization of strange attractors. Phys Rev Lett 50:346–349

Wolf A, Swift J, Swinney H, Vastano J (1985) Determining Lyapunov exponents from a time series. Physica D 16:285–317

Zang X, Howell J (2005) Correlation dimension and Lyapunov exponent based isolation of plant-wide oscillations. In: Dynamics and control of process system 2004 (IPV—IFAC Proceeding Volume). Elsevier, Philadelphia, pp 347–352

Hurst HE (1951) Long-term storage capacity of reservoirs. Trans Am Soc Civ Eng 116:770–808

Grassberger P (1983) Generalized dimensions of strange attractors. Phys Lett A 97(6):227–230. doi:10.1016/0375-9601(83)90753-3

Masquelier T (2013) Neural variability, or lack thereof. Front Comput Neurosci 7(7):1–7. doi:10.3389/fncom.2013.00007

Ravetti MG, Carpi LC, Gonçalves BA, Frery AC, Rosso OA (2014) Distinguishing noise from chaos: objective versus subjective criteria using horizontal visibility graph. PLoS One 9(9):e108004. doi:10.1371/journal.pone.0108004

Unsworth CP, Cowper MR, McLaughlin S, Mulgrew B (2000) False detection of chaotic behavior in the stochastic compound k-distribution model of radar sea clutter. In: Proceedings of the 10th IEEE workshop on statistical signal and array processing, pp 296–300

Peng C-K, Buldyrev SV, Havlin S, Simons M, Stanley HE, Goldberger AL (1994) Mosaic organization of DNA nucleotides. Phys Rev E 49(2):1685–1689

Bryce RM, Sprague KB (2012) Revisiting detrended fluctuation analysis. Sci Rep 2(315):1–6. doi:10.1038/srep00315

Hardstone R, Poil S-S, Schiavone G, Jansen R, Nikulin VV, Mansvelder HD, Linkenkaer-Hansen K (2012) Detrended fluctuation analysis: a scale-free view on neuronal oscillations. Front Physiol 3(450):1–13. doi:10.3389/fphys.2012.00450

Trifonov M, Rozhkov V (2014) Age-related changes in probability density function of pairwise Euclidean distances between multichannel human EEG signals. J Biosci Med 2(4):19–23. doi:10.4236/jbm.2014.24004

Obukhov AM (1949) Structure of the temperature field in the turbulent flow (in Russian). Izvestiia Akad Nauk SSSR Ser Geogr i Geofiz 13(1):58–69; Translated in Eng.—http://www.dtic.mil/dtic/tr/fulltext/u2/683016.pdf

Kolmogorov AN (1941) The local structure of turbulence in incompressible viscous fluid for very large Reynolds number (in Russian). Dokl Akad Nauk SSSR 30(4):299–303; Translated in Proc R Soc Lond A (1991) 434(1890):9–13. doi:10.1098/rspa.1991.0075

Kolmogorov AN (1941) Dissipation of energy in the locally isotropic turbulence (in Russian). Dokl Akad. Nauk SSSR 32(1):9–21; Translated in Proc R Soc Lond A (1991) 434(1890):15–17. doi:10.1098/rspa.1991.0076

Gringarten E, Deutsch CV (2001) Teacher’s aide variogram interpretation and modeling. Math Geol 33(4):507–534

Eyer L, Genton MG (1999) Characterization of variable stars by robust wave variograms: an application to Hipparcos mission. Astron Astrophys Suppl Ser 136:421–428

Sergeev GA, Pavlova LP, Romanenko AF (1968) Statistical methods for human electroencephalogram analysis (in Russian). Science Publishing, Leningrad

Conte E, Khrennikov A, Federici A, Zbilut JP (2009) Fractal fluctuations and quantum-like chaos in the brain by analysis of variability of brain waves: a new method based on a fractal variance function and random matrix theory: a link with El Naschie fractal Cantorian space–time and V. Weiss and H. Weiss golden ratio in brain. Chaos Solitons Fractals 41(5):2790–2800

Timashev SF, Panischev OY, Polyakov YS, Demin SA, Kaplan AYa AY (2012) Analysis of cross-correlations in electroencephalogram signals as an approach to proactive diagnosis of schizophrenia. Phys A 391(4):1179–1194

Chernyavskiy P, Hudac CM, Molfese DL, Marx DB (2014) Using the spatio-temporal variogram for the classification of electroencephalographic (EEG) assessment. METMA VII and GRASPA14 Conference, Torino (IT). http://meetings.sis-statistica.org/index.php/graspametma14/metma7graspa14/paper/viewFile/3166/529

Webster R, Oliver MA (2007) Geostatistics for environmental scientists, 2nd edn. Wiley, Chichester

Journel AG, Huijbregts CJ (1978) Mining geostatistics. Academic Press, London

Suzuki E (1964) Hyper gamma distribution and its fitting to rainfall data. Pap Meteorol Geophys 15(1):31-51. http://www.mri-jma.go.jp/Publish/Papers/DATA/VOL_15/15_031.pdf

Crooks GE (2010) The Amoroso distribution. http://arxiv.org/pdf/1005.3274v2.pdf. Accessed 18 May 2010

Bourgault G, Marcotte D (1991) Multivariate variogram and its application to the linear model of coregionalization. Math Geol 23(7):899–928

Petersen L, Esbensen KH (2005) Representative process sampling for reliable data analysis—a tutorial. J Chemometr 19:625–647. doi:10.1002/cem.968

Marsch E, Tu C-Y (1997) Intermittency, non-Gaussian statistics and fractal scaling of MHD fluctuations in the solar wind. Nonlinear Proc Geophys 4(2):101–124

Fischer L, Craig GC, Kiemle C (2013) Horizontal structure function and vertical correlation analysis of mesoscale water vapor variability observed by airborne lidar. J Geophys Res Atmos 118(14):7579–7590. doi:10.1002/jgrd.50588

Thacker NA, Aherne FJ, Rockett PI (1997) The Bhattacharyya metric as an absolute similarity measure for frequency coded data. Kybernetika 34(4):363–368

Ma YZ, Jones TA (2001) Teacher’s aide modeling hole-effect variograms of lithology-indicator variables. Math Geol 33(5):631–648

Peña D, Rodríguez J (2003) Descriptive measures of multivariate scatter and linear dependence. J Multivar Anal 85(2):361–374. doi:10.1016/S0047-259X(02)00061-1

Ye J, Lazar NA, Li Y (2015) Nonparametric variogram modeling with hole effect structure in analyzing the spatial characteristics of fMRI data. J Neurosci Methods 240:101–115. doi:10.1016/j.jneumeth.2014.11.008

Pressel KG, Collins WD (2012) First-order structure function analysis of statistical scale invariance in the AIRS-observed water vapor field. J Clim 25(16):5538–5555. doi:10.1175/JCLI-D-11-00374

Nash JE, Sutcliffe JV (1970) River flow forecasting through conceptual models part I—a discussion of principles. J Hydrol 10(3):282–290

Duchesne J, Bouvier V, Guillemé J, Coubard OA (2012) Maxwellian eye fixation during natural scene perception. Sci World J 2012:956340. doi:10.1100/2012/956340

Acknowledgments

The author would like to thank Prof. Mikhail Tsitseroshin, Dr. Vladimir Rozhkov, Dr. Sergey Bekshaev, Dr. Ekaterina Panasevich for sharing their EEG data and helpful discussions. The author is also grateful to Dr. Anthony Smith and Dr. Yuri Ovchinnikov for their careful correction of the English. Two anonymous reviewers are also appreciated for critical reading the manuscript that yielded its significant improvements.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Trifonov, M. The structure function as new integral measure of spatial and temporal properties of multichannel EEG. Brain Inf. 3, 211–220 (2016). https://doi.org/10.1007/s40708-016-0040-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40708-016-0040-8