Abstract

Convolutional neural networks (CNNs) are used to infer the attitude status, in terms of rotation rate, of resident space objects (RSOs) from simulated light curve measurements. Research into the performance of CNNs on synthetic light curve data sets has shown significant promise, but this has not yet translated into success with empirically collected light curves. This limitation appears to be due to several factors, including the mixing of bidirectional reflectance distribution function (BRDF) signatures, sensor noise, and blurring due to atmospheric turbulence. A synthetic data set of approximately 7500 light curves that accounts for realistic BRDF signatures and environmental parameters was generated. The RSO used in this study was texture mapped with three distinct material BRDF signatures: silicon solar panel, glossy paint, and aluminum. A two-step inversion of the Beard–Maxwell BRDF model was performed on empirically collected data sets for these materials to derive physically based model parameters. The CNN was trained on light curves of the RSO performing four different maneuvers: tumbling, accelerating in rotation rate, stabilizing, and inactive (stable in rotation rate). The CNN achieved an overall classification accuracy of 86.2% across the four maneuver classes. A confusion matrix analysis of the maneuver classes showed that the model performed best when classifying tumbling and accelerating RSOs (94% accuracy) and worst when classifying inactive RSOs (60% accuracy). This limitation for inactive RSOs is attributed to (1) back-scatter signatures and specular glints within the synthetic light curves of inactive satellites being mistaken for attitude maneuvers, and (2) low signal-to-noise ratio due to factors such as atmospheric blurring. These results suggest that CNNs have strong potential for classifying satellite attitude status from light curves, but that machine learning research must focus on developing training sets and pre-processing techniques that account for these complications.


References

[1] Alcala, C.M., Brown, J.H.: Space object characterization using time-frequency analysis of multi-spectral measurements from the Magdalena Ridge Observatory. Tech. rep., Air Force Research Lab Space Vehicles Directorate (2009)

[2] Badura, G., Valenta, C.R., Gunter, B., Renegar, L., Wu, D.: Spectral performance optimization of small telescopes for space object detection. In: Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS) (2019)

[3] Bédard, D., Lévesque, M., Wallace, B.: Measurement of the photometric and spectral BRDF of small Canadian satellites in a controlled environment. In: Proc. of the Advanced Maui Optical and Space Surveillance Technologies Conf., pp. 1–10 (2011)

[4] Bédard, D., Wade, G.A., Abercromby, K.: Laboratory characterization of homogeneous spacecraft materials. J. Spacecr. Rocket. 52(4), 1038–1056 (2015)

[5] Bradley, B.K., Axelrad, P.: Lightcurve inversion for shape estimation of GEO objects from space-based sensors. In: Univ. of Colorado, International Space Symposium for Flight Dynamics (2014)

[6] Budding, E., Demircan, O.: Introduction to astronomical photometry. Cambridge University Press (2007)

[7] Caruana, R., Lawrence, S., Giles, C.L.: Overfitting in neural nets: Backpropagation, conjugate gradient, and early stopping. In: Advances in Neural Information Processing Systems, pp. 402–408 (2001)

[8] Chollet, F., et al.: Keras. https://keras.io (2015)

[9] Coder, R., Holzinger, M.: Sizing of a Raven-class telescope using performance sensitivities. In: Advanced Maui Optical and Space Surveillance Technologies Conference (2013)

[10] Coder, R.D., Holzinger, M.J.: Multi-objective design of optical systems for space situational awareness. Acta Astronaut. 128, 669–684 (2016)

[11] Cornell: Reflectance data, Cornell University Program of Computer Graphics. https://www.graphics.cornell.edu/online/measurements/reflectance/index.html (2002). Accessed 12 Jan 2020

[12] Dai, J.S.: Euler–Rodrigues formula variations, quaternion conjugation and intrinsic connections. Mech. Mach. Theory 92, 144–152 (2015)

[13] Dao, P., Haynes, K., Gregory, S., Hollon, J., Payne, T., Kinateder, K.: Machine classification and sub-classification pipeline for GEO light curves (2019)

[14] Dianetti, A.D., Crassidis, J.L.: Light curve analysis using wavelets. In: 2018 AIAA Guidance, Navigation, and Control Conference, p. 1605 (2018)

[15] DiBona, P., Foster, J., Falcone, A., Czajkowski, M.: Machine learning for RSO maneuver classification and orbital pattern prediction. In: Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS) (2019)

[16] Eismann, M.T.: Hyperspectral remote sensing. SPIE Press, Bellingham (2012)

[17] Fan, S., Friedman, A., Frueh, C.: Satellite shape recovery from light curves with noise. In: Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS), p. 23 (2019)

[18] Fried, D.L.: Optical resolution through a randomly inhomogeneous medium for very long and very short exposures. JOSA 56(10), 1372–1379 (1966)

[19] Früh, C., Kelecy, T.M., Jah, M.K.: Coupled orbit-attitude dynamics of high area-to-mass ratio (HAMR) objects: influence of solar radiation pressure, Earth’s shadow and the visibility in light curves. Celest. Mech. Dyn. Astron. 117(4), 385–404 (2013)

[20] Fulcoly, D.O., Kalamaroff, K.I., Chun, F.: Determining basic satellite shape from photometric light curves. J. Spacecr. Rocket. 49(1), 76–82 (2012)

[21] Furfaro, R., Linares, R., Reddy, V.: Space objects classification via light-curve measurements: deep convolutional neural networks and model-based transfer learning. In: AMOS Technologies Conference, Maui Economic Development Board (2018)

[22] Furfaro, R., Linares, R., Reddy, V.: Shape identification of space objects via light curve inversion using deep learning models. In: AMOS Technologies Conference, Maui Economic Development Board, Kihei, Maui (2019)

[23] Goodfellow, I., Bengio, Y., Courville, A.: Deep learning. MIT Press (2016)

[24] Green, M.A.: Self-consistent optical parameters of intrinsic silicon at 300 K including temperature coefficients. Sol. Energy Mat. Sol. Cells 92(11), 1305–1310 (2008)

[25] Gunter, B.C., Davis, B., Lightsey, G., Braun, R.D.: The ranging and nanosatellite guidance experiment (RANGE). In: Proceedings of the AIAA/USU Conference on Small Satellites, Session V: Guidance and Control (2016). http://digitalcommons.usu.edu/smallsat/2016/S5GuidCont/3/

[26] Hall, D., Calef, B., Knox, K., Bolden, M., Kervin, P.: Separating attitude and shape effects for non-resolved objects. In: The 2007 AMOS Technical Conference Proceedings, pp. 464–475. Maui Economic Development Board, Inc., Kihei, Maui, HI (2007)

[27] Haselsteiner, E., Pfurtscheller, G.: Using time-dependent neural networks for EEG classification. IEEE Trans. Rehab. Eng. 8(4), 457–463 (2000)

[28] Holzinger, M., Jah, M.: Challenges and potential in space domain awareness. J. Guid. Control Dyn. 41(1), 15–18 (2018)

[29] Hou, Q., Wang, Z., Su, J., Tan, F.: Measurement of equivalent BRDF on the surface of solar panel with periodic structure. Coatings 9(3), 193 (2019)

[30] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv:1412.6980 (2014)

[31] Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

[32] Lawrence, R.S., Strohbehn, J.W.: A survey of clear-air propagation effects relevant to optical communications. Proc. IEEE 58(10), 1523–1545 (1970)

[33] LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

[34] Li, X., Chen, S., Hu, X., Yang, J.: Understanding the disharmony between dropout and batch normalization by variance shift. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2682–2690 (2019)

[35] Li, X., Strahler, A.H.: Geometric-optical bidirectional reflectance modeling of the discrete crown vegetation canopy: Effect of crown shape and mutual shadowing. IEEE Trans. Geosci. Remote Sens. 30(2), 276–292 (1992)

[36] Linares, R., Furfaro, R.: Space object classification using deep convolutional neural networks. In: 2016 19th International Conference on Information Fusion (FUSION), pp. 1140–1146. IEEE (2016)

[37] Marana, A.N., Velastin, S., Costa, L., Lotufo, R.: Estimation of crowd density using image processing. In: IEE Colloquium on Image Processing for Security Applications (1997)

[38] Marschner, S.R., Westin, S.H., Lafortune, E.P., Torrance, K.E.: Image-based bidirectional reflectance distribution function measurement. Appl. Opt. 39(16), 2592–2600 (2000)

[39] Maxwell, J., Beard, J., Weiner, S., Ladd, D., Ladd, S.: Bidirectional reflectance model validation and utilization. Tech. rep., Environmental Research Institute of Michigan, Ann Arbor, Infrared and Optics Division (1973)

[40] McQuaid, I., Merkle, L.D., Borghetti, B., Cobb, R., Fletcher, J.: Space object identification using deep neural networks. In: The Advanced Maui Optical and Space Surveillance Technologies Conference (2018)

[41] Miranda, L.J.V., et al.: PySwarms: a research toolkit for particle swarm optimization in Python. J. Open Source Softw. 3(21), 433 (2018)

[42] Montanaro, M.: NEFDS Beard–Maxwell BRDF model implementation in Matlab. Rochester Institute of Technology, DIRS Technical Report 2007–83, 174 (2007)

[43] Peng, H., Bai, X.: Machine learning approach to improve satellite orbit prediction accuracy using publicly available data. J. Astronaut. Sci. 1–32 (2019)

[44] Powell, M.J.: An efficient method for finding the minimum of a function of several variables without calculating derivatives. Comput. J. 7(2), 155–162 (1964)

[45] Price-Whelan, A.M., Sipőcz, B., Günther, H., Lim, P., Crawford, S., Conseil, S., Shupe, D., Craig, M., Dencheva, N., Ginsburg, A., et al.: The Astropy Project: Building an open-science project and status of the v2.0 core package. Astron. J. 156(3), 123 (2018)

[46] Reyes, J., Cone, D.: Characterization of spacecraft materials using reflectance spectroscopy. In: The Advanced Maui Optical and Space Surveillance Technologies Conference (2018)

[47] Santurkar, S., Tsipras, D., Ilyas, A., Madry, A.: How does batch normalization help optimization? In: Advances in Neural Information Processing Systems, pp. 2483–2493 (2018)

[48] Schildknecht, T.: Optical astrometry of fast moving objects using CCD detectors. Geod. Geophys. Arb. Schweiz. 49(49) (1994)

[49] Shell, J.R.: Optimizing orbital debris monitoring with optical telescopes. Tech. rep., Air Force Space Innovation and Development Center, Schriever AFB, CO (2010)

[50] Shi, Y., Eberhart, R.: A modified particle swarm optimizer. In: 1998 IEEE International Conference on Evolutionary Computation Proceedings. IEEE World Congress on Computational Intelligence (Cat. No. 98TH8360), pp. 69–73. IEEE (1998)

[51] Shuster, M.D.: A survey of attitude representations. Navigation 8(9), 439–517 (1993)

[52] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556 (2014)

[53] Spurbeck, J., Jah, M., Kucharski, D., Bennett, J.C., Webb, J.G.: Satellite characterization, classification, and operational assessment via the exploitation of remote photoacoustic signatures. In: Advanced Maui Optical and Space Surveillance Technologies Conference (AMOS) (2018)

[54] Swietojanski, P., Ghoshal, A., Renals, S.: Convolutional neural networks for distant speech recognition. IEEE Signal Proc. Lett. 21(9), 1120–1124 (2014)

[55] Wang, Z., Yan, W., Oates, T.: Time series classification from scratch with deep neural networks: A strong baseline. In: 2017 International Joint Conference on Neural Networks (IJCNN), pp. 1578–1585. IEEE (2017)

[56] Westlund, H.B., Meyer, G.W.: A BRDF database employing the Beard-Maxwell reflection model. In: Proceedings of Graphics Interface 2002, pp. 189–201 (2002)

[57] Wetterer, C.J., Jah, M.K.: Attitude determination from light curves. J. Guid. Control Dyn. 32(5), 1648–1651 (2009)

[58] Willison, A., Bédard, D.: A novel approach to modeling spacecraft spectral reflectance. Adv. Space Res. 58(7), 1318–1330 (2016)

[59] Zamek, S., Yitzhaky, Y.: Turbulence strength estimation from an arbitrary set of atmospherically degraded images. J. Opt. Soc. Am. A 23(12), 3106–3113 (2006)

[60] Zhang, T., Xie, L., Li, Y., Mallick, T., Wei, Q., Hao, X., He, B.: Experimental and theoretical research on bending behavior of photovoltaic panels with a special boundary condition. Energies 11(12), 3435 (2018)

[61] Zhao, B., Lu, H., Chen, S., Liu, J., Wu, D.: Convolutional neural networks for time series classification. J. Syst. Eng. Electron. 28(1), 162–169 (2017)

Acknowledgements

This work has been funded by Georgia Tech Research Institute Internal Research and Development (IRAD) funding.


Additional information

This article belongs to the Topical Collection: Advanced Maui Optical and Space Surveillance Technologies (AMOS 2020)

Guest Editors: James M. Frith, Lauchie Scott, Islam Hussein

Appendices

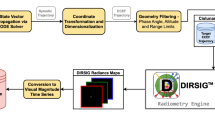

Appendix A: Light Curve Simulation

A.1 Propagation of Signal from RSO in Orbit to Telescope Aperture

The total irradiance, \(M_\mathrm{orbit}\), in units of \(\mathrm{Watts/m^2}\) onto a facet of an RSO in orbit can be obtained by modeling the sun as an idealized blackbody and assuming that the sun is an isotropic point source [6]:

where \(T_\mathrm{sun}\) is the temperature of the sun, \(K_B\) is Boltzmann’s constant, c is the speed of light, \(\lambda\) is the wavelength of interest, h is Planck’s constant, \(1 \, AU\) is the mean Earth-Sun distance, and \(r_{sun}\) is the radius of the sun. The wavelength-dependent irradiance was integrated between the lower (\(\lambda _L\)) and upper (\(\lambda _U\)) transmission bounds of the spectral filter of interest to give the band-integrated irradiance in this equation.
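The band-integrated solar irradiance described above can be sketched numerically. This is a minimal example, not the authors' code: it assumes a blackbody effective temperature of 5778 K, the IAU nominal solar radius, and trapezoidal integration on a logarithmic wavelength grid; the function name `solar_band_irradiance` is hypothetical.

```python
import numpy as np

# Physical constants (SI units)
H = 6.62607015e-34      # Planck's constant [J s]
C = 2.99792458e8        # speed of light [m/s]
K_B = 1.380649e-23      # Boltzmann's constant [J/K]
AU = 1.495978707e11     # mean Earth-Sun distance [m]
R_SUN = 6.957e8         # nominal solar radius [m]


def solar_band_irradiance(lam_lo, lam_hi, t_sun=5778.0, n=20000):
    """Band-integrated solar irradiance at 1 AU [W/m^2] for a blackbody sun.

    lam_lo, lam_hi: filter transmission bounds [m]; t_sun: assumed effective temperature [K].
    """
    lam = np.geomspace(lam_lo, lam_hi, n)
    # Spectral exitance of a blackbody surface, pi * B_lambda [W/m^2 per m of wavelength]
    exitance = (2.0 * np.pi * H * C**2 / lam**5) / np.expm1(H * C / (lam * K_B * t_sun))
    # Trapezoidal integration over wavelength
    band_exitance = np.sum(0.5 * (exitance[1:] + exitance[:-1]) * np.diff(lam))
    # Dilute from the solar surface to the mean Earth-Sun distance
    return band_exitance * (R_SUN / AU) ** 2
```

Integrating over a very wide band (for example 10 nm to 100 µm) recovers roughly the solar constant, about 1.37 kW/m², which is a convenient sanity check; in practice the filter passband \([\lambda_L, \lambda_U]\) would be used.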

We assume that the separation between facets on the RSO is sufficiently small relative to the RSO-to-sun distance, \(R_\mathrm{RSO/sun}(t)\), that \(M_\mathrm{orbit}\) can be considered constant over all facets. The radiance, \(L_{\mathrm{orbit}_j}\), leaving the \(j^{th}\) facet of the RSO toward a given observer direction (\(\theta _r(t) \, , \phi _r(t)\)) can be written as:

where (\(\theta _i(t) \, , \phi _i(t)\)) is the time-dependent direction of the sun relative to the facet in the body frame, \(\hat{n}^B_j\) is the unit normal vector of the \(j^{th}\) facet in the body frame, \(\hat{s}^B\) is the unit vector pointing toward the sun in the body frame, and \(\rho _j\) is the BRDF of the \(j^{th}\) facet’s material, which depends on both the illumination and observation directions. Note that the BRDF of spacecraft materials has been shown to be highly wavelength dependent [4, 46], but in this study it is averaged over the passband of the spectral filter [\(\lambda _L \, , \lambda _U\)]. The observer-illuminator geometry parameters \(\Big ( \theta _i(t) \, , \phi _i(t) \, ; \theta _r(t) \, , \phi _r(t) \Big )\) are all time dependent because of the attitude and orbit of the RSO; for simplicity, the time dependence is dropped from this point forward.

The average irradiance onto an observing telescope aperture due to the \(j^{th}\) facet, \(E_{ap_j}\), in units of Watts/m\(^2\) can then be written by taking into account the solid angle subtended by the RSO:

where \(\hat{o}^B\) is the unit vector pointing toward the observer in the body frame, \(A_j\) is the surface area of the \(j^{th}\) facet, \(\tau _\mathrm{atm}(\theta _r)\) is the zenith-angle-dependent atmospheric transmittance, and \(R_\mathrm{RSO/obs}(t)\) is the time-dependent distance from the RSO to the observer's telescope aperture.

In order to obtain the total expected irradiance, \(E_{ap}\), onto the aperture, the signal due to all facets is summed:
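The facet-level radiometry above can be sketched under stated assumptions. Because the equations are not reproduced here, this example assumes the common forms \(L_j = \rho_j M_\mathrm{orbit} \max(0, \hat{n}_j \cdot \hat{s})\) and \(E_{ap_j} = \tau_\mathrm{atm} L_j A_j \max(0, \hat{n}_j \cdot \hat{o}) / R_\mathrm{RSO/obs}^2\), with \(\rho_j\) in 1/sr. It ignores facet-to-facet shadowing, and the function name `aperture_irradiance` is hypothetical.

```python
import numpy as np


def aperture_irradiance(normals, areas, brdf, s_hat, o_hat, m_orbit, tau_atm, r_obs):
    """Sum of facet contributions to the aperture irradiance [W/m^2] (assumed forms).

    normals: (N, 3) body-frame unit normals; areas: (N,) facet areas [m^2];
    brdf: (N,) BRDF values [1/sr] at the current geometry;
    s_hat, o_hat: body-frame unit vectors toward the sun and the observer.
    """
    normals = np.asarray(normals, dtype=float)
    # Cosines of incidence and exitance; clipping zeroes unlit facets and facets facing away
    cos_i = np.clip(normals @ np.asarray(s_hat, dtype=float), 0.0, None)
    cos_r = np.clip(normals @ np.asarray(o_hat, dtype=float), 0.0, None)
    # Facet radiance toward the observer: BRDF times incident irradiance M * cos(theta_i)
    radiance = np.asarray(brdf, dtype=float) * m_orbit * cos_i
    # Projected-area-weighted, range-attenuated contribution of each facet
    e_facets = tau_atm * radiance * np.asarray(areas, dtype=float) * cos_r / r_obs**2
    return float(np.sum(e_facets))
```

Summing over facets in this way corresponds to the total \(E_{ap}\) described in the text.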

The flux due to a point source that reaches the focal plane, \(\varPhi _\mathrm{FPA}\), in units of [Watts] can be written according to:

where \(\tau _\mathrm{opt}(\bar{\lambda })\) is the average optical transmittance of the filter over the bandpass of interest, \(D_{ap}\) is the aperture diameter, and \(\bar{\lambda } = (\lambda _L + \lambda _U )/2\) is the average wavelength under consideration.

The signal can then be converted to units of \(e^-\)/second by accounting for the photon energy at the mean wavelength of the bandpass of interest and for the quantum efficiency of the focal plane array [49]:
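A minimal sketch of the focal-plane conversion, assuming \(\varPhi_\mathrm{FPA} = \tau_\mathrm{opt} (\pi D_{ap}^2/4) E_{ap}\) and an electron rate of \(\varPhi_\mathrm{FPA} \bar{\lambda} \eta / (h c)\), where \(\eta\) is a quantum-efficiency symbol introduced here for illustration. The function name `focal_plane_electron_rate` is hypothetical.

```python
import math

H = 6.62607015e-34   # Planck's constant [J s]
C = 2.99792458e8     # speed of light [m/s]


def focal_plane_electron_rate(e_ap, d_ap, tau_opt, lam_mean, qe):
    """Electron rate [e-/s] produced by aperture irradiance e_ap [W/m^2] (assumed forms)."""
    phi_fpa = tau_opt * math.pi * d_ap**2 / 4.0 * e_ap   # power reaching the focal plane [W]
    photon_energy = H * C / lam_mean                      # energy per photon at the mean wavelength [J]
    return phi_fpa / photon_energy * qe                   # photon rate times quantum efficiency
```

For example, 1e-12 W/m² onto a 0.5 m aperture with 80% optical transmittance, a 550 nm mean wavelength, and 60% quantum efficiency gives roughly 2.6e5 e⁻/s.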

A.2 Propagation of Energy from Sky Background onto Focal Plane Array

By treating the sky background as an extended source, the spectral irradiance onto a pixel of the focal plane array can be derived from the following equation for a telescope with focal ratio \(f_\# = f/D_{ap}\), where f is the focal length of the telescope [10]:

where \(L_\mathrm{sky}\) is the photon radiance at the telescope aperture due to background sky pollution in units of photons/s/m\(^2\)/sr [10]:

In the above equation, \(m_{v_{0}}\) is the magnitude of Vega, which serves as the zero-magnitude reference source [6], and \(I_\mathrm{sky}\) is the local sky background surface brightness due to light pollution, moonlight, and starlight, in visual magnitudes per arcsec\(^2\).

A final expression for the photoelectron rate per pixel due to the sky background, \(q_{p, \mathrm{sky}}\), in units of \(e^-\)/pixel/second can be written as [49]:

where p is the pixel pitch.
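The sky-background chain can be sketched with two assumed relations, since the equations are not reproduced here: a magnitude-to-radiance conversion \(L_\mathrm{sky} = \Phi_0 10^{-0.4(I_\mathrm{sky} - m_{v_0})} / \Omega_{1''}\), where \(\Phi_0\) (photon irradiance of the zero-magnitude source in the band) and \(\Omega_{1''}\) (one square arcsecond in steradians) are symbols introduced for illustration, and the paraxial camera equation \(\pi L \tau / (4 f_\#^2)\) for focal-plane irradiance from an extended source, multiplied by the pixel area \(p^2\). Function names are hypothetical, and the zero-point must be supplied for the filter in use.

```python
import math

ARCSEC2_SR = (math.pi / (180.0 * 3600.0)) ** 2   # one square arcsecond in steradians


def sky_photon_radiance(i_sky, phi_zero, m_v0=0.0):
    """Sky photon radiance [photons/s/m^2/sr] from surface brightness i_sky [mag/arcsec^2].

    phi_zero: photon irradiance of the zero-magnitude reference in the band [photons/s/m^2],
    a filter-specific value that must be supplied by the user.
    """
    return phi_zero * 10.0 ** (-0.4 * (i_sky - m_v0)) / ARCSEC2_SR


def sky_electrons_per_pixel(l_sky, f_number, pitch, tau_opt, qe):
    """Photoelectrons per pixel per second from an extended source (paraxial camera equation)."""
    focal_plane_photon_irradiance = math.pi * l_sky * tau_opt / (4.0 * f_number**2)
    return focal_plane_photon_irradiance * pitch**2 * qe
```

The camera-equation form is equivalent to multiplying the radiance by the aperture area \(\pi D_{ap}^2/4\) and the pixel solid angle \((p/f)^2\); it neglects the \(1/(1+4f_\#^2)\) correction, which matters mainly for fast optics.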

A.3 Energy Loss Due to Atmospheric Turbulence Blur and Sampling

Temperature inhomogeneities in the atmosphere cause refractive index variations along the path from the surface of the Earth to space. These refractive index gradients cause light to arrive at the optical aperture over a wide range of angles of incidence [59]. Over short integration times (on the order of a few milliseconds), propagation through a turbulent atmosphere produces phenomena such as image dancing, while over long integration times (on the order of hundreds of milliseconds) many displaced instantaneous images are integrated, which blurs the image [18]. Long-term blurring in imagery collected by optical telescopes arises primarily from the effect of atmospheric turbulence on the phase, rather than the amplitude, of the propagating wavefront [32]. Consequently, in an imaging context, energy can be assumed to be conserved: turbulence redistributes the energy collected by the aperture over a larger blur spot on the focal plane rather than removing it.

The plane-wave approximation holds when the difference in path length from an RSO in orbit to different points across the observer aperture is negligible compared with the wavelength [59]:

For the observer-to-RSO distances and optical wavelengths considered in this paper, Eq. 20 is satisfied, so a plane-wave treatment is used.
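One way to check the plane-wave condition numerically is to compute the extra path length from the RSO to the aperture edge relative to the aperture centre, which for \(R \gg D_{ap}\) is approximately \(D_{ap}^2/(8R)\), and compare it with the wavelength. This is a sketch under that geometric assumption, not a reproduction of Eq. 20; the function name and example numbers are hypothetical.

```python
import math


def edge_path_difference(r_obs, d_ap):
    """Extra path length [m] from a distant point source to the aperture edge vs. its centre.

    r_obs: RSO-to-observer distance [m]; d_ap: aperture diameter [m].
    """
    half = 0.5 * d_ap
    # sqrt(R^2 + a^2) - R rewritten as a^2 / (sqrt(R^2 + a^2) + R) to avoid cancellation for R >> a
    return half**2 / (math.sqrt(r_obs**2 + half**2) + r_obs)


# Hypothetical example: 0.5 m aperture, 500 km range, 500 nm wavelength
ratio = edge_path_difference(5e5, 0.5) / 500e-9
```

In this example the path difference is about 62.5 nm, roughly an eighth of the wavelength; at geostationary ranges it is smaller by orders of magnitude.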

For a plane wave, the full width at half maximum (FWHM) of the point spread function (PSF) due to atmospheric seeing is approximately parameterized by the Fried parameter of the atmosphere, \(r_0(\theta _r)\), which is wavelength dependent and is referenced here to a wavelength of 500 nm [18]. The value of \(r_0\) depends non-linearly on the observation zenith angle, \(\theta _r\). The dependence of the Fried parameter on zenith angle for a plane wave, under the assumption of a constant atmospheric turbulence profile, is given by [18]:

where \(C_n^2\) is the altitude-independent refractive index structure constant. Equation 21 has been empirically shown to be valid for observing zenith angles up to 45 degrees [32].
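For intuition about how \(r_0\) sets the blur, the standard long-exposure Kolmogorov approximations can be sketched: FWHM ≈ 0.98 λ/\(r_0(\lambda)\), with \(r_0\) scaling as \(\lambda^{6/5}\) from its 500 nm reference value. These are textbook relations assumed here, not the paper's Eq. 21; the function names are hypothetical.

```python
import math

RAD_TO_ARCSEC = 180.0 * 3600.0 / math.pi


def fried_at_wavelength(r0_500, lam):
    """Scale a Fried parameter referenced at 500 nm to wavelength lam [m], r0 ∝ λ^(6/5)."""
    return r0_500 * (lam / 500e-9) ** 1.2


def seeing_fwhm(lam, r0_500):
    """Approximate long-exposure seeing FWHM [rad]: 0.98 λ / r0(λ)."""
    return 0.98 * lam / fried_at_wavelength(r0_500, lam)
```

With \(r_0\) = 10 cm at 500 nm this gives about 1.01 arcsec, and because FWHM scales as \(\lambda^{-1/5}\), seeing improves only slowly toward longer wavelengths.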

For a moving RSO, the number of pixels occupied by the RSO along the sensor focal plane, m(t), grows according to:

where IFOV is the instantaneous field of view of a pixel on the sensor focal plane in units of radians/pixel, \(m_0(\lambda , t)\) is the number of pixels initially subtended by the RSO, \(t_\mathrm{int}\) is the integration time of the telescope system, and \(\omega\) is the apparent angular rate of the RSO across the field of view in units of rad/s, assuming a telescope operating in stare mode [10]. The IFOV of the telescope is defined by \(\mathrm{IFOV} = 2 \arctan (p/2f)\), where p is the pixel pitch and f is the focal length.

The wavelength-dependent number of pixels occupied by an RSO of projected area \(A_{SO}\) at a distance \(R_\mathrm{RSO/obs}(t)\) from the telescope can then be approximated as [10]:

Equation 23 gives the number of pixels across which the signal due to the RSO will be smeared. However, light curve extraction algorithms normally integrate only over a small rectangular region aligned with the RSO streak in each collected image. In this paper, we assume that this rectangle has a transverse side length of \(\Delta l\). The total number of pixels over which a light curve extraction algorithm integrates is then given by:

The fraction of RSO energy retained by the sampling algorithm of a streak detector can then be approximated by \(m_{\Delta l} / m(\lambda , t)\), so that the fraction lost is \(1 - m_{\Delta l} / m(\lambda , t)\).
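The pixel bookkeeping in this subsection can be sketched as follows. The IFOV expression is taken from the text; the linear streak-growth form \(m = m_0 + \omega t_\mathrm{int} / \mathrm{IFOV}\) is an assumption (the paper's equation is not reproduced), and the retained fraction is capped at one. All function names and example values are hypothetical.

```python
import math


def ifov(pitch, focal_length):
    """Instantaneous field of view of one pixel [rad/pixel], IFOV = 2 arctan(p / 2f)."""
    return 2.0 * math.atan(pitch / (2.0 * focal_length))


def smeared_pixels(m0, omega, t_int, ifov_rad):
    """Assumed linear streak growth in stare mode: initial footprint plus pixels crossed in t_int."""
    return m0 + omega * t_int / ifov_rad


def retained_fraction(m_dl, m):
    """Fraction of the smeared RSO energy captured by the extraction window, m_dl / m (at most 1)."""
    return min(1.0, m_dl / m)


# Hypothetical example: 9 um pixels, 1 m focal length, 1 mrad/s apparent rate, 0.1 s exposure
m = smeared_pixels(4.0, 1e-3, 0.1, ifov(9e-6, 1.0))
```

Here the streak covers about 15.1 pixels, so a 10-pixel extraction window would retain roughly two thirds of the RSO energy.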

In this study, \(r_0\) is specified for the site of interest at zenith (\(\theta _r = 0\) degrees) using measurements of atmospheric seeing from previous studies [9, 32]. Equation 21 is then inverted to determine \(C_n^2\), and the resulting value is used to compute \(r_0(\theta _r)\) for the RSO geometries over the light curve time series.
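The zenith-to-slant-path procedure can be sketched with the standard plane-wave Fried formula, \(r_0 = [0.423 k^2 \sec\theta_r \int C_n^2\,dh]^{-3/5}\) with \(k = 2\pi/\lambda\). This sketch inverts for the path-integrated turbulence \(\int C_n^2\,dh\) rather than an altitude-independent \(C_n^2\), which would additionally require an assumed effective turbulence height; function names and the example site value are hypothetical.

```python
import math


def integrated_turbulence(r0_zenith, lam=500e-9):
    """Invert the plane-wave Fried formula at zenith for the path-integrated C_n^2 [m^(1/3)]."""
    k = 2.0 * math.pi / lam
    return r0_zenith ** (-5.0 / 3.0) / (0.423 * k**2)


def fried_at_zenith_angle(cn2_integral, zenith_rad, lam=500e-9):
    """Plane-wave Fried parameter [m] along a slant path at zenith angle zenith_rad."""
    k = 2.0 * math.pi / lam
    return (0.423 * k**2 * cn2_integral / math.cos(zenith_rad)) ** (-3.0 / 5.0)


# Hypothetical site: r0 = 10 cm at zenith (500 nm)
j = integrated_turbulence(0.10)
r0_60 = fried_at_zenith_angle(j, math.radians(60.0))
```

Because the integrated turbulence cancels, the result reduces to \(r_0(\theta_r) = r_0(0) \cos^{3/5}\theta_r\); at a 60 degree zenith angle, \(r_0\) shrinks to about 66% of its zenith value.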

A.4 Noise Terms

The instantaneous signal-to-noise ratio (SNR) of an RSO observed by a ground-based telescope can be derived by modeling several noise terms, including (1) the photon arrival process on the telescope’s CCD as a Poisson process, (2) the dark current of the sensor electronics, and (3) read noise incurred during readout and analog-to-digital conversion. Assuming a constant background noise spectral signature, the instantaneous SNR can be written as [48]:

where \(q_{p,dark}\) is the dark current of the CCD [\(e^-/\mathrm{pixel/s}\)], \(q_\mathrm{sky}(\lambda )\) is the wavelength-dependent sky signal reaching a pixel of the focal plane [\(e^-/s\)], \(q_\mathrm{read}\) is the read noise of the sensor electronics [\(e^-/\mathrm{pixel/s}\)], \(t_\mathrm{int}\) is the sensor integration time, m(t) is the wavelength- and time-dependent number of pixels occupied by the RSO on the focal plane, and n is a pixel binning factor.
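A sketch of the standard CCD equation can make this noise budget concrete. It is an assumed textbook form rather than the paper's Eq. 25: read noise is treated as electrons rms per pixel per read (not per second), no binning factor is applied, and the function name `ccd_snr` is hypothetical.

```python
import math


def ccd_snr(q_rso, q_sky, q_dark, read_noise, t_int, n_pix):
    """Standard CCD-equation SNR for a target spread over n_pix pixels.

    q_rso: target electron rate [e-/s]; q_sky, q_dark: per-pixel rates [e-/pixel/s];
    read_noise: rms read noise [e-/pixel per read]; t_int: integration time [s].
    """
    signal = q_rso * t_int
    # Shot noise of target, sky, and dark electrons, plus read noise from every pixel read
    variance = signal + n_pix * (q_sky + q_dark) * t_int + n_pix * read_noise**2
    return signal / math.sqrt(variance)
```

In the photon-limited case (no sky, dark, or read noise) this reduces to the square root of the collected signal, which is a quick check of the implementation.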

The mean and variance of a Poisson distribution representing photon arrivals are both equal to the rate parameter of the distribution [10]. Combining this property with the assumption that the noise terms in Eq. 25 are independent, the received light curve signal, \(s_r(t)\), in units of electrons can be approximated as a Gaussian random variable with mean and variance given by [48]:

where the factor \(m_{\varDelta l} / m(t)\) scales the RSO signal to account for the fraction lost to the sampling of the light-streak extraction algorithm. Using Eq. 26, the instantaneous signal received from the RSO for a given light track is drawn as a random variable that simulates the combined effects of motion blur, optical blur, and noise from the sensor and its electronics.
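Drawing noisy light curve samples from this Gaussian approximation can be sketched as below. The split of terms is an assumption, since Eq. 26 is not reproduced: the RSO signal is scaled by \(m_{\Delta l}/m\), sky and dark electrons accumulate over the \(m_{\Delta l}\) extracted pixels, and read noise adds only to the variance. The function name is hypothetical; it uses NumPy's `Generator.normal`.

```python
import numpy as np


def sample_received_signal(q_rso, q_sky, q_dark, read_noise, t_int, m, m_dl, rng, size=None):
    """Draw the received signal [e-] from a Gaussian approximation (assumed term split)."""
    captured = q_rso * t_int * min(1.0, m_dl / m)   # RSO electrons inside the extraction window
    background = m_dl * (q_sky + q_dark) * t_int     # sky and dark electrons over the window
    mean = captured + background
    variance = mean + m_dl * read_noise**2           # Poisson variance plus read noise
    return rng.normal(mean, np.sqrt(variance), size=size)
```

Passing a seeded generator, for example `np.random.default_rng(0)`, makes simulated light curves reproducible.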

This signal can be converted into digital counts (DC) using the full-well depth of the sensor in electrons, \(N_\mathrm{well}\), and the number of quantization levels of a b-bit system, \(N_{DR} = 2^b\). Under this assumption, the received signal in units of electrons can be converted into digital counts according to:

where the integer operator truncates the signal, introducing quantization error that further contributes to the noise of the received signal.
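A minimal sketch of the electron-to-count conversion, assuming \(\mathrm{DC} = \mathrm{int}(s_r N_{DR} / N_\mathrm{well})\) and, as an added assumption, clipping to the valid count range \([0, N_{DR} - 1]\) to mimic saturation; the function name `electrons_to_counts` is hypothetical.

```python
import numpy as np


def electrons_to_counts(s_e, n_well, bits):
    """Convert electrons to digital counts: trunc(s * 2^b / N_well), clipped to [0, 2^b - 1]."""
    n_levels = 2 ** bits
    dc = np.trunc(np.asarray(s_e, dtype=float) * n_levels / n_well)
    # Clipping is an added assumption that mimics full-well saturation and a zero floor
    return np.clip(dc, 0, n_levels - 1).astype(np.int64)
```

For a 16-bit system with a 100,000 e⁻ full well, one count corresponds to about 1.5 electrons, so the truncation error is at most about 1.5 e⁻ per sample.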

Appendix B: Beard–Maxwell BRDF Model Parameters

In Eq. 7, the incident and exitant polar angles are specified by the time series of solar, observer, and macro-facet orientations within the body frame:

The angle between the facet normal and the bisector of the incident and exitant directions (\(\theta _n\)) and the angle \(\beta\), which is half of the solar-observer phase angle, are written respectively as:
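The geometry above can be sketched from body-frame unit vectors. This example follows the usual Beard–Maxwell convention (as in Westlund and Meyer [56]), in which \(\theta_n\) is the angle between the facet normal and the bisector of the sun and observer directions and \(\beta\) is half of the sun-observer phase angle, i.e. the angle of incidence on a specularly reflecting micro-facet. The function name is hypothetical.

```python
import numpy as np


def beard_maxwell_angles(n_hat, s_hat, o_hat):
    """Return (theta_i, theta_r, theta_n, beta) in radians from body-frame unit vectors.

    n_hat: facet normal; s_hat: direction to the sun; o_hat: direction to the observer.
    """
    n_hat, s_hat, o_hat = (np.asarray(v, dtype=float) for v in (n_hat, s_hat, o_hat))
    theta_i = np.arccos(np.clip(n_hat @ s_hat, -1.0, 1.0))   # incident polar angle
    theta_r = np.arccos(np.clip(n_hat @ o_hat, -1.0, 1.0))   # exitant polar angle
    # Bisector of sun and observer directions (undefined if they point in opposite directions)
    bisector = s_hat + o_hat
    bisector = bisector / np.linalg.norm(bisector)
    theta_n = np.arccos(np.clip(n_hat @ bisector, -1.0, 1.0))
    beta = 0.5 * np.arccos(np.clip(s_hat @ o_hat, -1.0, 1.0))  # half the phase angle
    return theta_i, theta_r, theta_n, beta
```

In a mirror-like geometry (sun and observer symmetric about the normal), \(\theta_n = 0\) and \(\beta = \theta_i = \theta_r\), which is where the specular glints mentioned in the abstract arise.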

The term \(R_f(\beta ; \, n, \, k)\) denotes the effective Fresnel reflectance of a material with complex index of refraction \(n^* = n \, + \, i k\), perpendicular reflection coefficient \(r_s\), and parallel reflection coefficient \(r_p\). The refractive index n and extinction coefficient k capture the wavelength dependence of first-surface reflection from the material [39]. In this study, the Beard–Maxwell model parameters are fit over a broad bandpass, so n and k are treated as wavelength-averaged values:
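The effective Fresnel reflectance can be sketched as the unpolarized average \((|r_s|^2 + |r_p|^2)/2\) for light incident from vacuum at angle \(\beta\) onto a medium of complex index \(n + ik\). This averaging choice is an assumption consistent with the description above; the function name `fresnel_reflectance` is hypothetical.

```python
import numpy as np


def fresnel_reflectance(beta, n, k):
    """Unpolarized Fresnel reflectance (|r_s|^2 + |r_p|^2) / 2 at incidence angle beta [rad].

    n, k: real and imaginary parts of the complex refractive index n* = n + i k.
    """
    n_c = complex(n, k)
    cos_b = np.cos(beta)
    # Principal complex square root; its positive real part selects the decaying transmitted wave
    root = np.sqrt(n_c**2 - np.sin(beta) ** 2 + 0j)
    r_s = (cos_b - root) / (cos_b + root)                  # perpendicular (s) amplitude coefficient
    r_p = (n_c**2 * cos_b - root) / (n_c**2 * cos_b + root)  # parallel (p) amplitude coefficient
    return 0.5 * (np.abs(r_s) ** 2 + np.abs(r_p) ** 2)
```

At normal incidence this reduces to \(((n-1)^2 + k^2)/((n+1)^2 + k^2)\), for example 0.04 for n = 1.5 and k = 0, and it approaches 1 at grazing incidence.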

The term \(\rho _{fs}(\theta _n)\) denotes the first-surface reflectance. It represents light reflected in the specular direction from the collection of micro-facets that make up the texture of the \(j^{th}\) macro-facet pointing in direction \(\hat{n}^B_j (t)\), with micro-facet orientations following a Cauchy distribution [42, 56]:

where \(\sigma\) is the mean-square slope of the micro-facet normals in the distribution, and B is a scaling parameter that fits the overall magnitude of the specular reflectance.

The shadowing and obscuration term, \(S(\theta _n, \, \beta )\), accounts for the height distribution of the micro-facets. This function was derived by fitting free parameters to empirical data [39] and can be written as [42]:

where the free parameters \(\varOmega\) and \(\psi\) modify the falloff of the shadowing function in the forward-scattered and back-scattered directions, respectively.

Finally, the terms \(\rho _D\) and \(\rho _V\) represent the diffuse and directional volumetric reflectance of the material, respectively. Note that in the original Beard–Maxwell model, only one volumetric component was utilized [39]. In the enhanced version of the model used in this study both the Lambertian reflectance, \(\rho _D\), and the directional diffuse reflectance, \(\rho _V\), are used as fitting parameters [56].


Cite this article

Badura, G.P., Valenta, C.R. & Gunter, B. Convolutional Neural Networks for Inference of Space Object Attitude Status. J Astronaut Sci 69, 593–626 (2022). https://doi.org/10.1007/s40295-022-00309-z


DOI: https://doi.org/10.1007/s40295-022-00309-z