Abstract

Purpose of review

Computing advances over the decades have catalyzed the pervasive integration of digital technology in the medical industry, now followed by similar applications for clinical nutrition. This review discusses the implementation of such technologies for nutrition, ranging from the use of mobile apps and wearable technologies to the development of decision support tools for parenteral nutrition and use of telehealth for remote assessment of nutrition.

Recent findings

Mobile applications and wearable technologies have provided opportunities for real-time collection of granular nutrition-related data. Machine learning has allowed for more complex analyses of the increasing volume of data collected. The combination of these tools has also translated into practical clinical applications, such as decision support tools, risk prediction, and diet optimization.

Summary

The state of digital technology for clinical nutrition is still young, although there is much promise for growth and disruption in the future.

Introduction

Accelerating advances in the power and utilitarian benefit of digital technology have led to its pervasive integration into society. At each step of the day, from the moment we awaken to the sound of an alarm to the moment calming music is played before bedtime, digital devices play a central role. Virtually all industries, including those unrelated to technology, now rely on digital technology in some form to capture data, perform calculations, and automate processes. Beyond the sheer scale of availability and assimilation of such technologies in society is the remarkable computing power within reach. For instance, over 80% of Americans today wield more computing power in their palms than the Apollo 11 guidance computer that first landed Neil Armstrong on the moon [1]. Such advances in processing power and data storage sizes, and the corresponding reductions in cost per gigaflop or gigabyte, respectively, have similarly led to development of increasingly sophisticated applications. Storage and analysis of “big data” no longer require supercomputers that occupy large climate-controlled rooms. In the medical industry, immense amounts of clinical and administrative data are routinely collected by health networks to evaluate trends in care delivery, identify areas for cost reduction, and perform outcomes-based research. Artificial intelligence (AI) has been harnessed to help understand complex biological phenomena, diagnose diseases, predict clinical outcomes, and design novel therapeutics. The ubiquity of mobile and smart devices even provides opportunities for more personalized real-time data collection, data synthesis, analysis, and feedback at the consumer to enterprise levels.

Following the rise of the medical technology (“medtech”) industry, there has been a burgeoning interest in the application of similar core technologies for nutrition. These technologies offer the opportunity to optimize nutrition, as information about diet intake, interpretation of the diet in the context of the person and their health, and the ability to generate practical feedback are important features of clinical nutrition. Implementation into clinical practice may range from the use of mobile apps to track diet intake and the use of wearable technologies to collect supportive data to the development of decision support tools for parenteral nutrition and the use of telehealth for remote assessment of nutrition.

Mobile Applications

Mobile health applications represent an opportunity for increased patient engagement, data gathering, and remote monitoring of outcomes outside of the healthcare facility. There now exist an estimated 165,000 publicly available mobile health apps, with wellness management and disease management as the leading areas [2]. In 2020, the mobile health market was valued at 40 billion dollars and is expected to grow 17.7% from 2021 to 2028 [3]. Use of mobile apps for monitoring health data is thus a growing area, and surveys in the United States indicate that 58% of mobile phone users have downloaded a health-related app to their device [4]. Among registered dietitians, nearly 83% report use of mobile apps in their practice [5]. Despite their widespread use among individuals, the role of apps in healthcare management remains an ongoing area of investigation.

Applications focused on diet and weight loss are widely utilized, and it is estimated that over 10,000 apps are currently available for diet and weight loss [6]. A 2015 systematic review and meta-analysis of studies evaluating mobile apps for weight loss showed a mean reduction of body mass index (BMI) by 0.43 kg/m² among mobile app users, and users may benefit from more continuous feedback on their health interventions [7]. For a healthcare provider, mobile apps may provide further opportunities to assess dietary patterns of patients instead of relying on dietary recalls alone. This feature may provide added value to the nutrition care process, as patients often under-report their intake or exhibit recall bias due to factors such as body dissatisfaction or desire for social approval [8].

Diet-focused apps vary widely in terms of their functions and ease of use. Self-monitoring of diet and physical activity are commonly integrated features and individuals may record their dietary intake and physical activity, while establishing goals to meet in these areas, thereby receiving continuous data feedback on their behavior [•9]. Apps may also be purveyors of health information, including tips on weight management or diet, whether or not this has been vetted or verified by healthcare professionals and experts. Other apps may have a component of social engagement with the ability to interact on group forums or connect with other users. Features among nutrition apps vary widely, yet all share some commonality in terms of tracking the user’s day-to-day dietary intake and physical activity. Examples of applications in the weight loss space employing such features are shown in Table 1.

Another area of interest is nutrition apps tailored to specific disease states. Apps for diabetes, for example, may provide users with a better understanding of how their blood sugar management relates to their diet and behavior. While a number of applications exist, a 2018 technical brief by the Agency for Healthcare Research and Quality evaluated currently available mobile apps for diabetes self-management and found that among hundreds of apps for diabetes, studies showed that only 5 were associated with clinically meaningful improvements in biomarkers such as hemoglobin A1c (HgbA1c) [10]. The report concluded that more longitudinal, high-quality studies are needed. Diabetes is but one of many disease states that may benefit from mobile app use, and rigorous studies evaluating outcomes of app use for disease management are often outpaced by the development and widespread use of the apps. For patients with diabetes, apps such as Day Two, Glucose Buddy, and Dario Health may be utilized (Table 2). In addition to diabetes, gastrointestinal disease is another area where mobile apps may play a role in monitoring and management. Some of the applications in this area are shown in Table 3.

Given the multitude of mobile health apps available and widespread use among individuals, clinicians may benefit from employing these tools in their practices. While many barriers currently exist related to collection and use of personal data (e.g., assurance of accuracy, privacy concerns, regulatory oversight, or lack of integration into the healthcare system) [2], these challenges may be addressed through future collaboration with research and healthcare institutions. While mobile apps may be imperfect tools at this time, they still provide value to patients looking to utilize more data and engagement for improving health outcomes.

Wearable Technologies

Another emerging technology in health care is wearable devices. While a variety of consumer health monitoring devices relevant to clinical nutrition assessment exist, this section will focus on noninvasive wearable technologies, which are defined as compact devices that present information to users, enable user interactions, and are meant to be worn on the body [11]. Wearable devices relevant to clinical nutrition care discussed here focus on the widely used smartwatches, the more experimental wearable devices for dietary assessment, and emerging wearable device technologies.

Smartwatches for Nutrition Assessment

Smartwatches are by far the most popular wearable health monitoring devices [12]. Smartwatch technologies have rapidly advanced in the past ten years, initially starting with simple pedometers to current devices with increasing health monitoring capabilities [13]. Smartwatches for health-care monitoring tend to utilize one or often a combination of the following technologies: accelerometer, pedometer, gyroscope, heart rate, electrocardiography (ECG), pulse oximetry, altimeter, barometer, proximity, microphone, camera, compass, global positioning system (GPS), and/or long-term evolution (LTE) communication [14]. Smartwatches may have started out as standalone devices, but given the greater capabilities (e.g., higher computing power and connectivity) of smartphones, the current smartwatches are meant to be paired with mobile applications to enhance the user experience. Table 4 provides a list of the features of the current popular smartwatches that are relevant to clinical nutrition care.

Smartwatches allow patients to passively gather data about their activities of daily living. These patient-generated health data can then be shared with healthcare providers. A recent qualitative study found that healthcare providers valued patient smartwatch data, because these data can be used to initiate productive discussions and inform patient care decisions [15]. Smartwatches can be used to gather baseline data and track patient progress and efficacy of interventions. For example, a decline in functional status is one of the recommended criteria for the identification and documentation of malnutrition [16]. Activity trackers can help clinicians monitor and evaluate any trends in functional decline or improvement through tracking activities of daily living. Of note, smartwatches have been shown to more effectively recognize hand-based activities (e.g., eating, typing, playing catch, etc.) when compared to smartphones, but specific activity tracking recognition technology still requires much fine tuning [17]. Using machine learning approaches, future development work can expand to recognition of specific activities with more accuracy.

The most recent technology advance in smartwatches is the addition of cardiovascular health measures. As shown in Table 4, popular smartwatches include features to monitor heart rate, monitor blood oxygen levels, record ECGs, and/or track heart rate variability. In 2017, the Apple Watch accessory wristband, the Kardia Band, was the first to receive Food and Drug Administration (FDA) clearance for detection of atrial fibrillation, the most commonly encountered arrhythmia in clinical practice [18, 19]. Since then, Apple Watches have evolved to include inherent ECG monitoring capabilities, with the newer series watches (Series 4 and higher) not requiring an accessory wristband to monitor and detect atrial fibrillation [18,19,20]. Primary research indicates direct-to-consumer smartwatches that passively detect atrial fibrillation can be useful in clinical settings. However, the algorithms used for specific and sensitive detection of arrhythmias will need to be further validated [21, 22]. Overall, cardiovascular health data provided by smartwatches can be integrated by nutrition care providers into their nutritional assessments to monitor and evaluate the efficacy of nutrition interventions for patients.

Wearable Devices for Dietary Assessment

Currently, much of dietary self-assessment occurs via mobile applications (discussed in section “Mobile Applications”) that allow users to actively maintain digital dietary records. Patients often show their digital food diaries to clinicians during nutrition visits. Despite still being in the prototype stages and with limited utility in the clinical setting, wearable dietary monitors are being developed as a new method to passively capture dietary intake [23]. The most promising wearable dietary intake sensors currently being researched are sound-, image-, and/or motion-based [23].

Acoustic-based food intake wearable devices utilize microphones to detect chewing and/or swallowing patterns that could theoretically give insight into the type and/or relative quantity of food being eaten. For example, in a study using a tiny microphone embedded in an ear device, researchers created a sound-based recognition system that was able to distinguish between three test foods (potato chips, lettuce, and apple) with 94% accuracy [24]. The system analyzed acoustic variables (structural and timing) associated with chewing and used this data to predict bite weight, defined as “a quantity of food amount that is ingested into the mouth with each bite taken.” Bite weight prediction models were selected based on recognized food types. While this study was limited in the types of foods detected, one can imagine that the employment of machine learning can be used to advance this field of sound-based dietary intake sensors in food intake tracking.

Image-based food intake wearable devices use cameras to classify foods and/or estimate portion sizes. One notable wearable image-based device is the eButton, which is a miniature computer with a camera embedded in a 6 cm (or 2.4 in) diameter button, meant to be worn on the chest [23, 25,26,27,28]. The eButton automatically takes images of a meal being eaten at a preset rate and, theoretically, the images can be analyzed by an algorithm to detect the food item and portion size based on environmental cues such as plate and eating utensils [28]. With food item and portion size information, the calorie and nutrient data can subsequently be obtained from a linked dietary database. Other wearable devices that capture digital images in dietary assessment typically require subsequent coding by nutritionists to identify the type and amount of foods eaten [26]. With smartphones being more commonplace, patients may opt to use mobile applications that utilize the camera on their smartphones to take food images for dietary assessment [25], although using smartphones for dietary assessment would require active rather than the passive data collection offered by wearable devices.

Motion-based dietary assessment wearable devices are often worn on the wrist to track wrist movements during eating. These devices integrate an accelerometer and/or a gyroscope to record lifting, turning, and/or rotation movements of the wrist to count bites as a proxy marker for caloric intake [23, 27]. As these devices are meant to be worn all day, the sensor needs to be able to distinguish between eating events and non-eating wrist movements. Researchers have tested devices that are able to pick out periods of eating during all-day tracking with good accuracy [29]. Algorithms to estimate number of bites using inertial data and predictive equations to estimate caloric intake associated with a single bite have been developed [30, 31]. It should be noted that these devices need to be worn on the dominant hand used for eating.
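
As a rough illustration of the bite-counting idea described above, the sketch below counts one bite each time a wrist roll-velocity trace exceeds a positive threshold and then a negative one. This is not the algorithm from the cited studies; the function name `count_bites`, the threshold, the minimum gap between bites, and the sample trace are all hypothetical values chosen for illustration.

```python
from typing import Sequence


def count_bites(roll_velocity: Sequence[float],
                threshold: float = 10.0,
                min_gap: int = 2) -> int:
    """Count roll-then-counter-roll events in a wrist roll-velocity trace.

    roll_velocity: gyroscope samples (assumed units: degrees per second).
    threshold:     hypothetical magnitude a roll must exceed to be considered.
    min_gap:       hypothetical minimum number of samples between two bites.
    """
    bites = 0
    armed = False                 # True once a positive roll has been seen
    last_bite_index = -min_gap    # allows a bite at the very start of the trace
    for i, v in enumerate(roll_velocity):
        if not armed and v > threshold and i - last_bite_index >= min_gap:
            armed = True          # wrist rolled one way (e.g., lifting food)
        elif armed and v < -threshold:
            bites += 1            # wrist rolled back: count one bite
            armed = False
            last_bite_index = i
    return bites


# Hypothetical trace containing two roll/counter-roll pairs.
trace = [0, 12, 3, -15, 0, 0, 14, -11, 1, 5, -20]
print(count_bites(trace))
```

A real device would also need the eating-versus-non-eating detection discussed above before any such count could serve as a proxy for caloric intake.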

Importantly, these wearable dietary intake sensors are still in development. Future work will need to focus on algorithms that can distinguish between different foods, especially solid versus liquid foods, more accurately estimate food portions and volumes, and remove background sound, image, and motion data in real-world environments that are not related to food ingestion. It has been noted that fusion devices that track sound, image, and motion could be developed and coupled with smartphone applications via Bluetooth technology to allow higher computing power and a more sophisticated user interface [28, 32]. In fact, one can imagine adding the detection of eating events and estimation of caloric intake from activity data to current smartwatch features.

Future Direction of Wearable Devices

Recently released wearable devices reveal the trajectory of the industry. One challenge unique to wearable devices is that device size can limit computing power and battery life. Ideally, wearable devices should be lightweight, comfortable to wear, and power efficient. The trend in pairing wearable devices with smartphone applications is a solution to creating a small, easy-to-wear device with increasing monitoring capabilities. Given that smartphones are ubiquitous nowadays, new and future wearable devices will likely rely on mobile applications for user interface.

As an example, the Amazon Halo wristband health monitor was released in Fall 2020 and there is no screen on the device [33]. The Amazon Halo wristband not only tracks activity (intensity and duration and sedentary time) but also monitors sleep, tracks heart rate, estimates body composition, and detects mood (via tone of voice analysis). The use of the Amazon Halo and its features does require a smartphone and a subscription membership ($3.99/month) with Amazon. The most novel and controversial aspects of the Amazon Halo are its ability to estimate percent body fat from a three-dimensional model of the user’s body based on photos and its tone analysis aimed to help the user communicate more effectively with others [34]. The body composition estimation feature is of interest in nutrition assessment. The mobile application associated with the Amazon Halo creates a three-dimensional model of the user and allows the user to simulate what they may look like with more or less body fat. This wearable device has not yet been studied in clinical trials, so the accuracy of the body composition analyses when compared to other clinical methods (such as bioelectrical impedance analysis and whole-body dual-energy X-ray absorptiometry) remains to be seen. Healthcare concerns have also been raised, especially related to potential body dysmorphia and particularly in adolescent populations, as a result of this body composition feature. Another concern is the clinically appropriate interpretation of the body composition results [35]. While many reference ranges for body fat percentages have been published for various populations, there is no consensus on what is considered normal body fat percentage. There is no one-size-fits-all algorithm to appropriately interpret body composition results. Future algorithm development will need to take into account as many factors as possible (e.g., genetics, ethnicity, fitness, dietary intake, etc.) to present results in the context of overall personalized health.

Another emerging goal in wearable devices is the creation of a noninvasive blood glucose monitor. At the January 2021 Consumer Electronics Show (CES) virtual conference, a Japanese startup company, Quantum Operation Inc., showcased a prototype noninvasive glucose monitor [36]. The Quantum Operation Inc. Glucose Monitor looks like a smartwatch and houses a small spectrometer used to scan the blood through the skin for glucose concentrations [37]. The company supplied a sampling of data comparing their monitor’s blood glucose measurements with those taken using a commercial glucometer, the FreeStyle Libre [36]. The sampling data show variation between the data collected by the Quantum Operation glucose monitor and continuous blood glucose measurements.

As wearable devices move from the realm of wellness monitoring to become more medical devices (where information obtained from devices will be used to make medical decisions), they will be subject to regulation by the FDA [•13]. In anticipation, the FDA has been working with wearable device manufacturers, such as Apple, and introduced the Digital Health Software Precertification (Pre-Cert) Program for low-risk device approval [38]. The purpose of the FDA Pre-Cert Program is to “provide more streamlined and efficient regulatory oversight of software-based medical devices developed by manufacturers who have demonstrated a robust culture of quality and organizational excellence, and who are committed to monitoring real-world performance of their products once they reach the U.S. market.”

A recent review of scientific literature in the field of wearable health monitoring technologies revealed that the top concerns regarding wearable devices are security and safety, especially if the data from wearable devices are used for medical decisions [39]. A major advantage of using wearable devices for assessing health is the fact that this technology does not rely on subjective information and removes the burden of self-reporting. Wearables can be helpful in clinical interventions as they provide real-time information to users and have the potential to change immediate behaviors. Ideally, a wearable device should transmit real-time data to a patient’s healthcare team, and clinical interventions would be determined based on that data. Moving forward, in order for consumer-based wearable devices to be more widely used in clinical practice, there need to be ways for user data to be privately and securely shared with clinicians. Ultimately, clinicians will play an integral role in interpretation of wearable device monitoring data, as “data must be interpreted before they can be considered real information” [40]. It is important for clinicians to be familiar with current and emerging wearable devices and their limitations as they help patients transform collected data into useful information for making healthcare decisions.

Artificial Intelligence

AI is broadly defined as the application of computers to independently or semi-independently perform functions that mimic human intellect. Machine learning, a subset of AI, involves computer algorithms that process and learn from data without requiring explicit programming to define each step. Deep learning is a further subset of machine learning that involves the use of multi-layered (“deep”) neural networks to permit far more complex analyses. As suggested in the term, neural networks were designed to mimic the complex neuronal network architecture of the human brain. Similar to how a person learns principles through repeated observations, large datasets are typically required to train the AI system to interpret data. The AI system can then continue to improve (“learn”) over time as it gets exposed to more data. Training can be performed in a supervised, semi-supervised, or unsupervised manner. Supervised learning involves the use of labeled data that “teach” the system about the meaning and relationships within the data, such as labeling fruit images with their respective identifiers (e.g., apple, orange, lemon) to allow the system to identify shared and unique features inherent in each fruit type. Unsupervised learning involves the processing of data without human intervention, such as pattern recognition of unlabeled data for clustering, anomaly detection, or reduction of complex data. Basic machine learning functions can include pattern detection, prediction, classification, language processing, and image recognition. These functions can be seen in consumer-oriented services, such as spam filters, real estate price estimators, video recommender systems, chatbots, and facial recognition. These functions can similarly be ported to applications in nutrition. Some domains include diet optimization, food image recognition, risk prediction, and diet pattern analysis.
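
To make the notion of supervised learning concrete, the following minimal sketch trains a nearest-centroid classifier on labeled fruit examples and then classifies unlabeled ones. It is a deliberately simple stand-in for the neural networks discussed here; the two features (a redness score and a diameter in centimeters), the sample values, and the function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence, Tuple

Vector = Tuple[float, ...]


def fit_centroids(features: Sequence[Vector],
                  labels: Sequence[str]) -> Dict[str, Vector]:
    """'Training': average the feature vectors that share each label."""
    grouped = defaultdict(list)
    for vec, label in zip(features, labels):
        grouped[label].append(vec)
    return {
        label: tuple(sum(col) / len(vecs) for col in zip(*vecs))
        for label, vecs in grouped.items()
    }


def predict(centroids: Dict[str, Vector], vec: Vector) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(vec, centroids[label]))


# Hypothetical labeled data: (redness score 0-1, diameter in cm).
features = [(0.9, 7.5), (0.8, 8.0), (0.1, 5.0), (0.2, 5.5)]
labels = ["apple", "apple", "lemon", "lemon"]

centroids = fit_centroids(features, labels)
print(predict(centroids, (0.85, 7.8)))
print(predict(centroids, (0.15, 5.2)))
```

The labels are what make this supervised: an unsupervised method would receive only the feature vectors and would have to discover the two groups on its own.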

Diet Optimization

In an early demonstration of the utility of machine learning for personalized nutrition, Israeli investigators collected one-week data on the diet, anthropometrics, blood parameters (e.g., blood glucose, hemoglobin A1c, cholesterol), lifestyle (e.g., physical activity, sleep), and the gut microbiota in a cohort of 900 healthy individuals [41]. When applying the “carbohydrate counting” approach to estimating post-prandial glycemic response (PPGR), the correlation between a meal’s carbohydrate content and PPGR was statistically significant but modest (R = 0.38). The machine learning model that considered the participant-specific data was, however, more effective at predicting PPGR in both the training (n = 800; R = 0.68) and validation (n = 100; R = 0.70) cohorts. To demonstrate the use of PPGR prediction for diet interventions, the investigators conducted a trial on 26 healthy participants who first received instructions on a dietitian-designed diet based on their individual dietary preferences and constraints. Participants underwent another week of diet, blood, and activity profiling while on their custom diets, as with the original 900 participants. The 26 participants were then randomized to either the “prediction arm” or the “expert arm.” In the former arm, the machine learning algorithm was used to design a “good” diet composed of low-predicted PPGR and a “bad” diet composed of high-predicted PPGR specific to the individual. In the latter arm, clinical experts designed the “good” and “bad” diets based on prior knowledge of foods with high glycemic burden. Participants consumed both “good” and “bad” diets in independent weeks. For the prediction arm, 83% (10/12) of participants had significantly higher PPGR when consuming the “bad” diet than the “good” diet. For the expert arm, similar trends were observed in 57% (8/14) of participants. This technology has since been commercialized, with the Day Two mobile application as its front end (Table 2).
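
The R values quoted above are correlation coefficients between predicted (or carbohydrate-based) and measured PPGR. As a minimal sketch, assuming R denotes the Pearson correlation, the snippet below shows how such a coefficient is computed. The meal data are invented for illustration and are not taken from the study.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of products of deviations (unnormalized covariance).
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Root sums of squared deviations (unnormalized standard deviations).
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


# Hypothetical meals: carbohydrate content (g) and a measured PPGR value.
carbs_g = [20.0, 35.0, 50.0, 65.0, 80.0]
ppgr = [18.0, 40.0, 25.0, 60.0, 45.0]

print(round(pearson_r(carbs_g, ppgr), 2))
```

A value near 1 means the predictor tracks the measured response closely, so a rise from 0.38 to roughly 0.7 reflects a substantially tighter relationship between prediction and observation.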

Food Image Recognition

Image recognition is a popular function of deep learning. In health care, deep learning has been used for analyzing radiographic images for diagnosis of pneumonia, dermal images for identification of melanoma, and endoscopic images for detection of colonic polyps [42,43,44]. For nutrition, a natural use of deep learning would be food image recognition. Early models that trained with 50,000 food images could reach a reasonable accuracy of 78 to 92% for identifying the pre-categorized food image [45]. The top-5 accuracy (where the computer provides its top 5 guesses) was even higher at 91 to 98%. While these models prove the feasibility for AI to identify food images with reasonable accuracy, a primary limitation is the artificial method to train and test the system. That is, the training dataset would include a finite number of labeled food items and the testing or validation datasets would include the same catalog of labeled food items. However, in a real-world scenario, there are innumerable types of food items. With an early neural network model developed by our laboratory, we were able to achieve similar training and validation performance as the other models, while using 222,285 curated images representing 131 pre-defined food categories [46]. However, in a prospective analysis of real-world food items consumed in the general population, the accuracy plummeted to 0.26 and 0.49, respectively. Future refinement of AI for food image recognition would, therefore, benefit from training models with a significantly broader diversity of food items that may have to be adapted to specific cultures.
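
The difference between ordinary (top-1) accuracy and top-5 accuracy can be illustrated with a short sketch. The function below counts a prediction as correct when the true label is among the k highest-scoring categories; the scores and labels are hypothetical, and k = 2 is used only because the toy example has four categories.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]],
                   labels: Sequence[int],
                   k: int) -> float:
    """Fraction of samples whose true label is among the k top-scoring classes."""
    hits = 0
    for row, true_label in zip(scores, labels):
        # Rank category indices from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if true_label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Hypothetical classifier scores for 4 images over 4 food categories.
scores = [
    [0.60, 0.30, 0.05, 0.05],  # true category 0: top guess is correct
    [0.20, 0.50, 0.25, 0.05],  # true category 2: only the second guess is correct
    [0.10, 0.15, 0.05, 0.70],  # true category 0: third guess, a miss at k = 2
    [0.05, 0.10, 0.80, 0.05],  # true category 2: top guess is correct
]
labels = [0, 2, 0, 2]

print(top_k_accuracy(scores, labels, k=1))  # 0.5
print(top_k_accuracy(scores, labels, k=2))  # 0.75
```

Because top-k accuracy can only credit additional guesses, it is always at least as high as top-1 accuracy on the same data.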

Risk Prediction

Conventional approaches for analyzing data, such as visualization for trends or use of multivariable regression models, often suffice. On the other hand, the advantage of machine learning is its ability to parse large high-dimensional data to identify complex patterns that would otherwise have been hidden. In an analysis of six waves of the National Health and Nutrition Examination Survey (NHANES) and the National Death Index, investigators compared the ability of Cox proportional hazards and machine learning to predict 10-year cardiovascular disease-related mortality in 29,390 individuals [•47]. The Cox proportional hazards model that included age, sex, Black race, Hispanic ethnicity, total cholesterol, high-density lipoprotein cholesterol, systolic blood pressure, antihypertensive medication, diabetes, and tobacco use appeared to significantly overestimate risk. The addition of dietary indices did not change model performance, while the addition of 24-h diet recall worsened performance. By contrast, the machine learning algorithms performed better than all Cox models.

In a prospective study conducted by the University of Athens (Greece), 2583 participants completed a baseline questionnaire, dietary evaluation, and 10-year follow-up [48]. The dietary instrument was the European Prospective Investigation into Cancer and Nutrition (EPIC)-Greek questionnaire. Multiple linear regression and machine learning were compared in their ability to predict the 10-year Cardiometabolic Health Score (a composite of cardiovascular disease, diabetes mellitus, hypertension, and hyperlipidemia). The linear regression models had a predictive accuracy of 16 to 22% when classifying the dietary pattern within the same tertile as the Cardiometabolic Health Score; the machine learning models had a higher accuracy around 40%.

Diet Pattern Analysis

In a prospective study of 7572 pregnant women in the Nulliparous Pregnancy Outcomes Study: monitoring mothers to be (nuMoM2b), participants completed the Block 2005 Food Frequency Questionnaire to reflect their typical dietary intake around 3 months prior to conception [49]. The investigators compared multivariable logistic regression with machine learning to estimate the risk of adverse pregnancy outcomes based on fruit and vegetable consumption. Among women in the ≥ 80th percentile of total fruit or vegetable density consumption, there was a modestly lower incidence of preterm birth, small-for-gestational-age births, gestational diabetes, or pre-eclampsia. The logistic regression model did not identify an association between fruit and vegetable consumption and adverse pregnancy outcomes, while the machine learning model found that the highest fruit or vegetable consumers had lower risk of preterm birth, small-for-gestational-age birth, and pre-eclampsia.

Digital Technology and Nutrition Support

The nutrition support team (NST) is a specialized team that provides expertise and guidance to medical teams on the nutritional needs of patients [50]. NST members vary across institutions, but the team may be composed of physicians, advanced practice providers, dietitians, nurses, and pharmacists. Digital innovations, improvements, and integrations into the electronic health record (EHR) have impacted nutrition care provided by the NST over a spectrum of activities, such as diagnosis and coding, treatment interventions, and follow-up care [51].

Since the NST is typically a consultative service, an important first step is the recognition of a nutritional concern, such as malnutrition, by the primary team [52]. Malnutrition is a clinical condition where the patient is undernourished and not meeting their nutritional needs [52, 53]. Recognition of malnutrition is important because it is associated with increased morbidity and mortality [52, 54]. Numerous validated malnutrition screening tools (e.g., NRS-2002, MUST, SGA) have been created and can be utilized for screening [52]. Importantly, the widespread adoption of EHRs has enabled the integration of these screening tools into clinical workflows, such as admission orders or office visit intakes. In addition, embedding screening tools in the EHR allows the results to be captured as discrete data, which can drive clinical decision support processes configured to notify the NST to perform a formal malnutrition assessment [51, 55]. If malnutrition is not initially present, patients can be rescreened at regular intervals during the course of longitudinal care. One of the strengths of the EHR is the ability to capture data for trending and to activate alerts when clinical decision support logic is met.
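
The screening workflow described above can be sketched as a simple decision rule. The example below is a hypothetical illustration of clinical decision support logic, not the configuration of any specific EHR or screening tool; the class `ScreeningResult`, the function `next_action`, the alert threshold, and the rescreening interval are all assumptions made for the sketch.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ScreeningResult:
    patient_id: str
    score: int          # discrete score captured from an embedded screening tool
    screened_on: date


def next_action(result: ScreeningResult,
                today: date,
                alert_threshold: int = 2,                      # hypothetical cutoff
                rescreen_after: timedelta = timedelta(days=7)  # hypothetical interval
                ) -> str:
    """Return the workflow step implied by the latest screening result."""
    if result.score >= alert_threshold:
        return "notify_nst"   # positive screen: request a formal assessment
    if today - result.screened_on >= rescreen_after:
        return "rescreen"     # negative screen that is now out of date
    return "no_action"


print(next_action(ScreeningResult("p1", 3, date(2021, 1, 1)), date(2021, 1, 2)))
print(next_action(ScreeningResult("p2", 0, date(2021, 1, 1)), date(2021, 1, 9)))
```

Capturing the score as a discrete field, rather than as free text, is what allows a rule like this to be evaluated automatically.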

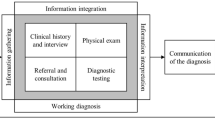

After a positive malnutrition screen, the NST performs a nutrition assessment to determine whether the patient meets criteria for malnutrition. The nutrition assessment includes food intake and nutrition history, anthropometrics, a nutrition-focused physical exam, and a review of clinical and medical history as well as tests and procedures [51]. A study by McCamley et al. demonstrated that after implementation of an EHR, admissions requiring nutrition intervention increased by 72% and the time spent per nutrition event decreased by 22%. If the patient meets clinical criteria for malnutrition after the nutrition assessment, the NST member or clinician should then accurately document and code the malnutrition diagnosis. Accurate documentation can affect patient care and is also important for appropriate coding, billing, and reimbursement [51, 56, 57].

For patients unable to meet their nutritional needs through enteral means alone, parenteral nutrition (PN) is a modality that the NST can use to meet those needs through the intravenous rather than the enteral route. PN is a complex admixture that contains both macro- and micronutrients along with electrolytes and trace elements. Although PN can be lifesaving and life-sustaining, it is a high-risk treatment that has the potential to harm patients if ordered or administered incorrectly [58]. To reduce the chance of error, it is recommended that PN be ordered through a computerized provider order entry (CPOE) system [59]. With CPOE, alerts can be developed to notify the prescriber if doses exceed the recommended or safe clinical limits or exceed the limits of compatibility. After entry of the PN order, another digital advance is the integration of the EHR with the automated compounding device (ACD) that prepares the PN. The digital link from the order to the ACD eliminates manual transcription, including handwritten, verbal, or fax transmissions, from the PN workflow, thereby reducing the chance for error and harm to patients [58]. Supporting evidence for this workflow comes from a 2016 study at a large academic pediatric hospital, where the frequency of PN errors was 0.27% (230 errors/84,503 PN prescriptions), with no errors due to transcription [60].
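As a rough illustration of the kind of dose-limit alert that CPOE makes possible, the Python sketch below checks each component of a PN order against a configured range. Everything in it is an assumption made for illustration: the component names, the `check_pn_order` function, and especially the numeric limits, which are arbitrary placeholders and not clinical guidance. The second helper simply reproduces the error-frequency arithmetic reported above.

```python
from typing import Dict, List, Tuple

# Hypothetical (min, max) ranges per order component. These numbers are
# placeholders for illustration only and are NOT clinical guidance; a real
# system would load limits maintained by pharmacy and the NST.
ILLUSTRATIVE_LIMITS: Dict[str, Tuple[float, float]] = {
    "dextrose_g_per_kg_day": (0.0, 5.0),
    "amino_acids_g_per_kg_day": (0.0, 2.0),
    "potassium_mEq_per_kg_day": (0.0, 2.0),
}


def check_pn_order(order: Dict[str, float],
                   limits: Dict[str, Tuple[float, float]] = ILLUSTRATIVE_LIMITS
                   ) -> List[str]:
    """Return one alert message per component that falls outside its range."""
    alerts: List[str] = []
    for component, dose in order.items():
        if component not in limits:
            # Fail safe: an unconfigured component is flagged, not ignored.
            alerts.append(f"{component}: no limit configured, review needed")
            continue
        low, high = limits[component]
        if dose < low or dose > high:
            alerts.append(
                f"{component}: {dose} is outside the configured range {low}-{high}"
            )
    return alerts


def error_rate_percent(errors: int, prescriptions: int) -> float:
    """Error frequency as a percentage of prescriptions."""
    return 100.0 * errors / prescriptions
```

With the figures from the pediatric hospital study, `error_rate_percent(230, 84503)` rounds to 0.27, matching the reported frequency.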

Like PN, enteral nutrition (EN) has also benefited from the digital advances in health care. The number of EN products available on the market today is large, and hospitals often also have a number of these products available on their formulary to order for patients. In addition, capturing the EN product used as well as documenting the amount used is important for the overall care of patients. To address these issues, Kamel et al. demonstrated the steps for development of an electronic nutrition administration record (ENAR) with a linked nutrition tab in their EHR [61]. Working with the EHR vendor, they were able to create order panels that standardized the EN ordering process. After EN administration, the amount of EN was documented and captured for inclusion in the patient’s ins and outs, which is important for ongoing clinical volume assessment.

Lastly, the coronavirus disease 2019 (COVID-19) pandemic brought about significant changes in healthcare delivery [62]. NSTs were not spared from the system-wide disruption and were required to adapt how they practiced nutrition care [63]. One significant advancement was the use of a virtual care model [62,63,64,65]. In this type of model, the patients and the care team do not need to be physically co-located and the patient is not seen in a traditional face-to-face visit. The hospital or clinic visit is instead conducted through a virtual format such as video, telephone, or electronic consultation. The significance of the virtual visit is that it allows specialized teams, such as an NST, to remain involved in patient care with a lower risk of infection transmission for providers and patients. Meyer et al. published their experience creating a virtual NST model in a multisite healthcare system [•66]. With implementation of the virtual NST, they demonstrated improved appropriateness of PN use (97.2% vs 58.9%) and improved glycemic control (83.5% vs 62.2%).

Keating et al. assessed the agreement and reliability between clinician-measured and patient self-measured clinical and functional assessments for use in remote monitoring via telehealth in a home-based setting [67]. They noted that patient self-assessed clinical and functional outcome measures for metabolic health and fitness had, on average, good agreement and reliability with face-to-face clinician-assessed outcome measures, but that aside from body weight, no clinical or functional outcome was deemed acceptable when compared with the minimal clinically important difference. As health systems increasingly develop hybrid care pathways incorporating both in-person and remote nutritional assessments, there is a growing need for standardized measures for remote nutritional assessments [•68]. Additionally, the development of virtual care pathways will need to consider patient training to improve the uptake and reliability of home-based health assessments and anthropometric measurements.
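One common way to judge whether home measurements can stand in for clinic measurements is to compare the spread of the paired differences against the minimal clinically important difference (MCID). The Python sketch below uses Bland-Altman 95% limits of agreement for that comparison. This is an assumed approach chosen for illustration and is not necessarily the exact method used by Keating et al.; the function names and the acceptance rule (limits of agreement falling entirely within plus or minus the MCID) are likewise assumptions.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def limits_of_agreement(clinician: Sequence[float],
                        patient: Sequence[float]) -> Tuple[float, float, float]:
    """Return (bias, lower limit, upper limit) for paired measurements.

    Bias is the mean of (patient - clinician); the limits are
    bias +/- 1.96 sample standard deviations of the differences.
    """
    if len(clinician) != len(patient) or len(clinician) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    diffs = [p - c for c, p in zip(clinician, patient)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


def acceptable_for_remote_use(clinician: Sequence[float],
                              patient: Sequence[float],
                              mcid: float) -> bool:
    """Assumed rule: accept only if both limits fall within +/- MCID."""
    _, lower, upper = limits_of_agreement(clinician, patient)
    return -mcid <= lower and upper <= mcid
```

Under this rule, a measure can show a small average bias and still fail, because wide limits of agreement mean an individual home reading may differ from the clinic value by more than a clinically meaningful amount.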

Conclusion

The adoption of digital devices and AI has opened exciting avenues for personalized nutrition and optimization of nutrition care. Mobile applications and wearable technologies have facilitated longitudinal, real-time, and multi-type data collection, while advances in computing power and refinements in machine learning algorithms have permitted high-dimensional analyses of large datasets to generate meaningful observations. The multi-modal integration of technology has thus allowed for the development of sophisticated applications in medicine and nutrition. Similarly, digital health has improved the quality and safety of nutrition support care, while telehealth helped preserve this quality of care during the COVID-19 pandemic. Because the application of cutting-edge digital technologies in nutrition lags behind that in medicine and other consumer-oriented industries, disruptive technologies in nutrition are still forthcoming but near. Continued research and development in these areas will likely produce technological innovations for nutrition that, just a few years ago, would have been relegated to science fiction.

References

Recently published papers of particular interest have been highlighted as: • Of importance

Pew Research Center. Mobile Fact Sheet [Internet]. Available from: https://www.pewresearch.org/internet/fact-sheet/mobile/. Accessed 12 Feb 2021

Kao C-K, Liebovitz DM. Consumer mobile health apps: current state, barriers, and future directions. PM&R. 2017;9(5S):S106–15.

Grand View Research. mHealth Apps Market Size, Share & Trends Analysis Report By Type (Fitness, Medical), By Region (North America, APAC, Europe, MEA, Latin America), And Segment Forecasts, 2021 - 2028. 2021. Available from: https://www.grandviewresearch.com/industry-analysis/mhealth-app-market. Accessed 2 March 2021

Krebs P, Duncan DT. Health app use among US mobile phone owners: a national survey. JMIR MHealth UHealth. 2015;3(4):e101.

Sauceda A, Frederico C, Pellechia K, Starin D. Results of the academy of nutrition and dietetics’ consumer health informatics work Group’s 2015 member app technology survey. J Acad Nutr Diet. 2016;116(8):1336–8.

Azar KMJ, Lesser LI, Laing BY, Stephens J, Aurora MS, Burke LE, et al. Mobile applications for weight management: theory-based content analysis. Am J Prev Med. 2013;45(5):583–9.

Flores MG, Granado-Font E, Ferré-Grau C, Montaña-Carreras X. Mobile phone apps to promote weight loss and increase physical activity: a systematic review and meta-analysis. J Med Internet Res. 2015;17(11):253.

Ventura AK, Loken E, Mitchell DC, Smiciklas-Wright H, Birch LL. Understanding reporting bias in the dietary recall data of 11-year-old girls. Obes Silver Spring Md. 2006;14(6):1073–84.

•Ghelani DP, Moran LJ, Johnson C, Mousa A, Naderpoor N. Mobile Apps for weight management: a review of the latest evidence to inform practice. Front Endocrinol. 2020;11:412. Review of mobile applications for weight management.

U.S. Department of Health and Human Services. Mobile Applications for Self-Management of Diabetes. Rockville, MD: Agency for Healthcare Research and Quality; 2018. (AHRQ Publication).

Iqbal MH, Aydin A, Brunckhorst O, Dasgupta P, Ahmed K. A review of wearable technology in medicine. J R Soc Med. 2016;109(10):372–80.

Wurmser Y. Wearables 2019 [Internet]. eMarketer Insider Intelligence. 2019. Available from: https://www.emarketer.com/content/wearables-2019. Accessed 28 Jan 2021

•Foster KR, Torous J. The opportunity and obstacles for smartwatches and wearable sensors. IEEE Pulse. 2019;10(1):22–5. Concise summary of the implications of using smartwatches and wearable sensors in medical care

King CE, Sarrafzadeh M. A survey of smartwatches in remote health monitoring. J Healthc Inform Res. 2018;2(1):1–24.

Alpert JM, Manini T, Roberts M, Kota NSP, Mendoza TV, Solberg LM, et al. Secondary care provider attitudes towards patient generated health data from smartwatches. NPJ Digit Med. 2020;3(1):1–7.

White JV, Guenter P, Jensen G, Malone A, Schofield M, Academy Malnutrition Work Group et al. Consensus statement: Academy of Nutrition and Dietetics and American Society for Parenteral and Enteral Nutrition: characteristics recommended for the identification and documentation of adult malnutrition (undernutrition). JPEN J Parenter Enteral Nutr. 2012;36(3):275–83.

Weiss GM, Timko JL, Gallagher CM, Yoneda K, Schreiber AJ. Smartwatch-based activity recognition: A machine learning approach. In: 2016 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI). 2016. pp. 426–9.

Isakadze N, Martin SS. How useful is the smartwatch ECG? Trends Cardiovasc Med. 2020;30(7):442–8.

Muoio D. AliveCor ends sales of KardiaBand, its ECG accessory for Apple Watches [Internet]. MobiHealthNews. 2019. Available from: https://www.mobihealthnews.com/news/north-america/alivecor-ends-sales-kardiaband-its-ecg-accessory-apple-watches. Accessed 1 Feb 2021.

Apple Watch Series 6 [Internet]. Apple. 2021. Available from: https://www.apple.com/apple-watch-series-6/. Accessed 1 Feb 2021

Bumgarner JM, Lambert CT, Hussein AA, Cantillon DJ, Baranowski B, Wolski K, et al. Smartwatch algorithm for automated detection of atrial fibrillation. J Am Coll Cardiol. 2018;71(21):2381–8.

Tison GH, Sanchez JM, Ballinger B, Singh A, Olgin JE, Pletcher MJ, et al. Passive detection of atrial fibrillation using a commercially available smartwatch. JAMA Cardiol. 2018;3(5):409–16.

Vu T, Lin F, Alshurafa N, Xu W. Wearable food intake monitoring technologies: a comprehensive review. Computers. 2017;6(1):4.

Amft O, Kusserow M, Tröster G. Bite weight prediction from acoustic recognition of chewing. IEEE Trans Biomed Eng. 2009;56(6):1663–72.

Boushey CJ, Spoden M, Zhu FM, Delp EJ, Kerr DA. New mobile methods for dietary assessment: review of image-assisted and image-based dietary assessment methods. Proc Nutr Soc. 2017;76(3):283–94.

Eldridge AL, Piernas C, Illner A-K, Gibney MJ, Gurinović MA, De Vries JH, et al. Evaluation of new technology-based tools for dietary intake assessment: an ilsi europe dietary intake and exposure task force evaluation. Nutrients. 2019;11(1):55.

Magrini ML, Minto C, Lazzarini F, Martinato M, Gregori D. Wearable devices for caloric intake assessment: state of art and future developments. Open Nurs J. 2017;11(1):232–40.

Sun M, Burke LE, Mao Z-H, Chen Y, Chen H-C, Bai Y, et al. eButton: a wearable computer for health monitoring and personal assistance. In: Proceedings of the 51st annual design automation conference. 2014. pp. 1–6.

Dong Y, Scisco J, Wilson M, Muth E, Hoover A. Detecting periods of eating during free-living by tracking wrist motion. IEEE J Biomed Health Inform. 2014;18(4):1253–60.

Dong Y, Hoover A, Scisco J, Muth E. A new method for measuring meal intake in humans via automated wrist motion tracking. Appl Psychophysiol Biofeedback. 2012;37(3):205–15.

Salley JN, Hoover AW, Wilson ML, Muth ER. Comparison between human and bite-based methods of estimating caloric intake. J Acad Nutr Diet. 2016;116(10):1568–77.

Boland M, Bronlund J. eNutrition-The next dimension for eHealth? Trends Food Sci Technol. 2019;91:634–9.

Amazon. Amazon Halo- Health & wellness band [Internet]. 2021. Available from: https://www.amazon.com/Amazon-Halo-Fitness-And-Health-Band/. Accessed 29 Jan 2021

Bohn D. Amazon announces Halo, a fitness band and app that scans your body and voice [Internet]. The Verge. 2020. Available from: https://www.theverge.com/2020/8/27/21402493/amazon-halo-band-health-fitness-body-scan-tone-emotion-activity-sleep. Accessed 1 Feb 2021

Chen BX. Amazon Halo Review: The Fitness Gadget We Don’t Deserve or Need. The New York Times [Internet]. 2020; Available from: https://www.nytimes.com/2020/12/09/technology/personaltech/amazon-halo-review.html. Accessed 1 Feb 2021

Cooper D. Startup claims its new wearable can monitor blood sugar without needles [Internet]. Engadget. 2021. Available from: https://www.engadget.com/quantum-operation-inc-wearable-glucose-121015450.html. Accessed 1 Feb 2021

Quantum Operation Co. Quantum Operation Co., Ltd.-To become a leading company in an era when you have to protect your own health-[Internet]. 2021. Available from: https://quantum-op.co.jp/. Accessed 1 Feb 2021

FDA Center for Devices and Radiological. Digital Health Software Precertification (Pre-Cert) Program [Internet]. FDA. Available from: https://www.fda.gov/medical-devices/digital-health-center-excellence/digital-health-software-precertification-pre-cert-program. Accessed 27 Jan 2021

Loncar-Turukalo T, Zdravevski E, Machado da Silva J, Chouvarda I, Trajkovik V. Literature on wearable technology for connected health: scoping review of research trends, advances, and barriers. J Med Internet Res. 2019;21(9):14017.

Buus-Frank M. Nurse versus machine: slaves or masters of technology? J Obstet Gynecol Neonatal Nurs JOGNN. 1999;28(4):433–41.

Zeevi D, Korem T, Zmora N, Israeli D, Rothschild D, Weinberger A, et al. Personalized nutrition by prediction of glycemic responses. Cell. 2015;163(5):1079–94.

Li L, Qin L, Xu Z, Yin Y, Wang X, Kong B, et al. Using artificial intelligence to detect COVID-19 and community-acquired pneumonia based on pulmonary CT: evaluation of the diagnostic accuracy. Radiology. 2020;296(2):E65-71.

Tschandl P, Rinner C, Apalla Z, Argenziano G, Codella N, Halpern A, et al. Human-computer collaboration for skin cancer recognition. Nat Med. 2020;26(8):1229–34.

Byrne MF, Chapados N, Soudan F, Oertel C, Linares Pérez M, Kelly R, et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut. 2019;68(1):94–100.

Shen Z, Shehzad A, Chen S, Sun H, Liu J. Machine learning based approach on food recognition and nutrient estimation. Procedia Comput Sci. 2020;174:448–53.

Limketkai BN, Ebriani J, Amundson A, Raj FP, Grover C, Canlian N, et al. Convolutional neural network for assessment of dietary intake. Gastroenterology. 2021;

•Rigdon J, Basu S. Machine learning with sparse nutrition data to improve cardiovascular mortality risk prediction in the USA using nationally randomly sampled data. BMJ Open. 2019;9(11):032703. Use of machine learning to analyze nutrition data for risk prediction of cardiovascular mortality

Panaretos D, Koloverou E, Dimopoulos AC, Kouli G-M, Vamvakari M, Tzavelas G, et al. A comparison of statistical and machine-learning techniques in evaluating the association between dietary patterns and 10-year cardiometabolic risk (2002–2012): the ATTICA study. Br J Nutr. 2018;120(3):326–34.

Bodnar LM, Cartus AR, Kirkpatrick SI, Himes KP, Kennedy EH, Simhan HN, et al. Machine learning as a strategy to account for dietary synergy: an illustration based on dietary intake and adverse pregnancy outcomes. Am J Clin Nutr. 2020;111(6):1235–43.

Ukleja A, Gilbert K, Mogensen KM, Walker R, Ward CT, Ybarra J, et al. Standards for nutrition support: adult hospitalized patients. Nutr Clin Pract Off Publ Am Soc Parenter Enter Nutr. 2018;33(6):906–20.

Kight CE, Bouche JM, Curry A, Frankenfield D, Good K, Guenter P, et al. Consensus recommendations for optimizing electronic health records for nutrition care. J Acad Nutr Diet. 2020;120(7):1227–37.

Cederholm T, Jensen GL, Correia MITD, Gonzalez MC, Fukushima R, Higashiguchi T, et al. GLIM criteria for the diagnosis of malnutrition - A consensus report from the global clinical nutrition community. Clin Nutr Edinb Scotl. 2019;38(1):1–9.

Teigen LM, Kuchnia AJ, Nagel EM, Price KL, Hurt RT, Earthman CP. Diagnosing clinical malnutrition: perspectives from the past and implications for the future. Clin Nutr ESPEN. 2018;26:13–20.

Hudson L, Chittams J, Griffith C, Compher C. Malnutrition Identified by Academy of Nutrition and Dietetics/American Society for Parenteral and Enteral Nutrition is Associated With More 30-day readmissions, greater hospital mortality, and longer hospital stays: a retrospective analysis of nutrition assessment data in a major medical center. JPEN J Parenter Enteral Nutr. 2018;42(5):892–7.

Mogensen KM, Bouma S, Haney A, Vanek VW, Malone A, Quraishi SA, et al. Hospital nutrition assessment practice 2016 survey. Nutr Clin Pract Off Publ Am Soc Parenter Enter Nutr. 2018;33(5):711–7.

Giannopoulos GA, Merriman LR, Rumsey A, Zwiebel DS. Malnutrition coding 101: financial impact and more. Nutr Clin Pract Off Publ Am Soc Parenter Enter Nutr. 2013;28(6):698–709.

Doley J, Phillips W. Coding for malnutrition in the hospital: does it change reimbursement? Nutr Clin Pract Off Publ Am Soc Parenter Enter Nutr. 2019 Dec;34(6):823–31.

Vanek VW, Ayers P, Kraft M, Bouche JM, Do VT, Durham CW, et al. A call to action for optimizing the electronic health record in the parenteral nutrition workflow. Nutr Clin Pract Off Publ Am Soc Parenter Enter Nutr. 2018;33(5):e1-21.

Ayers P, Adams S, Boullata J, Gervasio J, Holcombe B, Kraft MD, et al. A.S.P.E.N parenteral nutrition safety consensus recommendations. JPEN J Parenter Enteral Nutr. 2014;38(3):296–333.

MacKay M, Anderson C, Boehme S, Cash J, Zobell J. Frequency and severity of parenteral nutrition medication errors at a large children’s hospital after implementation of electronic ordering and compounding. Nutr Clin Pract Off Publ Am Soc Parenter Enter Nutr. 2016;31(2):195–206.

Kamel AY, Rosenthal MD, Citty SW, Marlowe BL, Garvan CS, Westhoff L, et al. Enteral nutrition administration record (ENAR) prescribing process using computerized order entry: a new paradigm and opportunities to improve outcomes in critically ill patients. JPEN J Parenter Enteral Nutr. 2020.

Wosik J, Fudim M, Cameron B, Gellad ZF, Cho A, Phinney D, et al. Telehealth transformation: COVID-19 and the rise of virtual care. J Am Med Inform Assoc JAMIA. 2020;27(6):957–62.

Allan PJ, Pironi L, Joly F, Lal S, Van Gossum A. Home Artificial Nutrition & Chronic Intestinal Failure special interest group of ESPEN: an international survey of clinicians’ experience caring for patients receiving home parenteral nutrition for chronic intestinal failure during the COVID-19 Pandemic. JPEN J Parenter Enteral Nutr. 2021;45(1):43–9.

Ohannessian R, Duong TA, Odone A. Global Telemedicine Implementation and Integration Within Health Systems to Fight the COVID-19 Pandemic: A Call to Action. JMIR Public Health Surveill. 2020;6(2):18810.

Farid D. COVID-19 and telenutrition: remote consultation in clinical nutrition practice. Curr Dev Nutr. 2020;4(12):124.

•Meyer M, Hartwell J, Beatty A, Cattell T. Creation of a virtual nutrition support team to improve quality of care for patients receiving parenteral nutrition in a multisite healthcare system. Nutr Clin Pr. 2019. Implementation of a virtual nutrition support team

Keating SE, Barnett A, Croci I, Hannigan A, Elvin-Walsh L, Coombes JS, et al. Agreement and reliability of clinician-in-clinic versus patient-at-home clinical and functional assessments: implications for telehealth services. Arch Rehabil Res Clin Transl. 2020;2(3):100066.

•Bagni UV, da Silva Ribeiro KD, Bezerra DS, de Barros DC, de Magalhães Fittipaldi AL, da Silva Araújo RGP. Anthropometric assessment in ambulatory nutrition amid the COVID-19 pandemic: possibilities for the remote and in-person care. Clin Nutr ESPEN. 2021;41:186–92. Review of remote nutrition assessment during the COVID-19 pandemic.

Funding

None.

Ethics declarations

Conflict of interest

All authors have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical collection on Nutrition, Metabolism, and Surgery.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Limketkai, B.N., Mauldin, K., Manitius, N. et al. The Age of Artificial Intelligence: Use of Digital Technology in Clinical Nutrition. Curr Surg Rep 9, 20 (2021). https://doi.org/10.1007/s40137-021-00297-3

DOI: https://doi.org/10.1007/s40137-021-00297-3