Abstract

Background

The efficacy of just-in-time teaching (JiTT) screencasts for graduate medical education in the setting of an inpatient adult hematology-oncology service (HOS) is not known. Our preceding pilot data identified six high-yield topics for this setting. The study objective was to evaluate the educational efficacy of screencasts covering these topics.

Methods

Internal medicine residents scheduled to start a rotation on the primary HOS of an academic medical center were eligible for this parallel, unblinded, randomized controlled trial with concealed allocation. Participants underwent block randomization to the usual educational curriculum with or without access to a series of novel screencasts; all participants received an anonymous online end-of-rotation survey and a $20 gift certificate upon its completion. The primary outcome was the change in attitude among learners, measured as their self-reported confidence in managing the clinical topics.

Results

From 12/9/2019 through 6/15/2020, accrual was completed with 67 of 78 eligible residents (86%) enrolled and randomized. Analysis was by intention-to-treat, and the participant response rate was 91%. Using a prespecified cumulative cutoff score, 64% of residents in the treatment arm rated their clinical management comfort level as “comfortable” or “very comfortable” versus 21% of residents in the usual education arm (p = 0.001; estimated difference, 43%; 95% CI: 21–66%). Treatment arm participants reported that the screencasts improved their medical oncology knowledge base (100%), would improve their care for cancer patients (92%), and had an enjoyable format (96%).

Conclusion

Residents on a busy inpatient HOS found that a series of JiTT screencasts increased their clinical comfort level in managing HOS-specific patient problems.


Introduction

Among the internal medicine (IM) subspecialties, hematology and oncology pose a particular challenge for graduate medical education (GME) programs. The typical inpatient caseload consists of patients who are acutely ill because of their cancer, an exacerbation of a related comorbidity, or a complication of cancer treatment. Cases are often complex and challenging for learners; as a result, IM residents have identified medical oncology as a key area in need of educational improvement [1, 2]. A Canadian national survey of trainees found oncology to be the least adequately taught subspecialty, with 63% of residents reporting their oncology education as inadequate [3]. Similarly, a needs assessment at a large IM residency program in the USA found greater dissatisfaction with oncology training than with training in the other IM subspecialties [4]. Cancer care also carries a high level of emotional intensity, which contributes to trainee burnout [5]. New strategies are needed to teach this subspecialty to IM residents.

Historically, GME has been fully synchronous, with trainees learning all key concepts together in a physical or virtual classroom at the same time. For learners on an inpatient adult hematology/oncology service (HOS), however, attending synchronous daytime sessions is often infeasible because of frequent schedule interruptions and intermittent night float cross-coverage shifts. At the other end of the teaching spectrum, fully asynchronous self-study courses have trainees learning independently at their own pace, for example by taking a certification course in basic research practices or listening to a podcast series of clinician expert interviews [6]. Although convenient, asynchronous learning is isolating and requires a commitment from trainees that is unrealistic in the face of a heavy clinical workload. The “flipped classroom” is a middle-of-the-road hybrid that combines these two approaches: trainees learn key concepts independently (asynchronously) and then meet (synchronously) to discuss their findings and draw conclusions as a collaborative group. Although effective in undergraduate medical education [7], the flipped classroom may be less feasible in GME because it relies on trainees to learn key concepts on schedule; otherwise, the trainee-led classroom discussions stall.

Just-in-Time Teaching (JiTT) is another hybrid strategy, closer on the spectrum to traditional learning, in which most key concepts are taught in an instructor-led session but trainees access preparatory self-study materials beforehand. Ideally, these materials include content questions that serve as a needs assessment and reach the teacher “just in time” to tailor the content he or she will cover in the classroom, thus creating an active feedback loop [8]. To promote adherence to pre-classroom preparation, self-study materials are intended to have an engaging and accessible format, for example screencast audio/video recordings of a slide deck presentation with accompanying narration [9,10,11]. First described in 1999 for undergraduates in physics, JiTT has since been incorporated into a variety of educational settings [8, 12]. Data supporting the implementation of JiTT in GME are limited but promising. Mangum et al. developed a JiTT online learning module for an inpatient pediatric oncology resident ward service and found that most residents reported it as helpful [13]. Similarly, Iverson et al. developed an oncology video curriculum for IM residents rotating through an outpatient clinic, which received excellent learner feedback and was followed by improvements in medical knowledge [4]. These single-arm studies suggested, but could not establish, that oncology screencasts may be more effective than traditional learning alone. Although these results are promising, more research is needed to justify widespread implementation of JiTT, since most IM residency program directors (79%) do not currently have a budget to integrate online learning into GME [14].

Our earlier pilot study confirmed both the need for improved educational interventions and the feasibility of developing screencasts that were easily accessible and relevant to IM residents on a HOS [15]. Based on those data, we conducted this randomized controlled trial to test our hypothesis that a JiTT screencast series would improve learner knowledge of critical concepts for a HOS as compared to a standard educational curriculum alone.

Methods

Setting

The HOS for this study had a busy census that remained near its cap of 20 patients and was staffed by a team that typically included 5–6 residents (2 of whom were on night float), 2 medical students, 1 fellow, and 1 attending. The traditional educational curriculum included lectures and small group teaching sessions (1–2 h/day, Mon–Fri). On 3/12/20, the initiation of social distancing for the COVID-19 pandemic changed the format (from synchronous in-person classroom to synchronous online) but disrupted neither the content nor the schedule of the traditional educational curriculum.

Eligibility, Recruitment, and Randomization

This two-arm, randomized controlled trial enrolled participants during a single academic year (2019–2020). All IM residents (postgraduate years [PGY] 1–3) who were scheduled to provide inpatient care on the primary teaching HOS were eligible. Recruitment took place during brief (5-min) informational sessions at scheduled conferences, which included a study overview, printed materials, and light snacks. Recruitment materials were also emailed to all IM residents who were absent. To compensate them for their time, all randomized participants who completed the end-of-rotation survey were provided a $20 USD Amazon gift card. No faculty participated in the recruitment process, and participation was emphasized as voluntary. Following enrollment and 1–2 weeks prior to the scheduled HOS rotation, each IM resident participant underwent block randomization to either screencast access (intervention) or usual education (control). Allocation was concealed by computer-based central randomization. Non-randomized study participants included medical students, fellows in hematology and oncology (PGY4–6), and faculty who covered the inpatient HOS during the study period, all of whom were given access to the intervention to evaluate secondary and exploratory outcomes. The study protocol received approval from the Institutional Review Board. The study was considered minimal risk, and written short form consent was obtained from all participants.
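The block size, software, and seed used for the central randomization are not reported. As a hedged illustration only, the Python sketch below generates a permuted-block allocation list under an assumed block size of 4; the function name `permuted_block_schedule`, the arm labels, and the seed are hypothetical. In a concealed central scheme, a list like this would be held by the central system, and each assignment would be revealed only after a resident had been enrolled.

```python
import random
from collections import Counter


def permuted_block_schedule(n_participants, block_size=4,
                            arms=("screencast", "usual"), seed=None):
    """Return a permuted-block allocation list (hypothetical helper).

    Every block holds block_size // len(arms) slots per arm in random order,
    so at any point during enrollment the arm counts differ by at most
    block_size // len(arms).
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # seeded so the central list is reproducible
    schedule = []
    while len(schedule) < n_participants:
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)  # permute assignments within this block only
        schedule.extend(block)
    return schedule[:n_participants]


if __name__ == "__main__":
    allocations = permuted_block_schedule(67, seed=2019)  # 67 randomized residents
    print(Counter(allocations))
```

With two arms, the running imbalance can never exceed half the block size, which keeps arm sizes close during rolling enrollment; the trade-off is that a small, fixed block makes the last assignment in each block predictable unless the list stays concealed.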

Screencast Intervention

Content Development

A mixed-measures approach was used to produce an exhaustive list of relevant, rotation-specific topics. This list was then reviewed with a focus group of 3 faculty and 10 residents and narrowed down to six topics considered to be both crucial and unique to the HOS: venous thromboembolism, acute complications of sickle cell disease, infectious and metabolic emergencies, thrombocytopenia, brain metastases, and spinal metastases. Screencast content was primarily composed by one fellow (PK) and edited by faculty. Another focus group, including a representative sample of IM residents, provided quantitative and qualitative assessments of the preliminary screencasts as a pilot study to confirm feasibility and inform final edits [15]. To reduce study bias, the screencasts did not generate a needs assessment for the teacher (i.e., the supervising faculty on the HOS), because such input would have come only from the treatment arm and could have acted as an unbalanced incentive.

Software

Screencast presentations were created in PowerPoint (Microsoft Corporation, Redmond, WA), with Camtasia Studio software (TechSmith Corporation, Okemos, MI) used to superimpose audio narrations and visual notations. Audio narrations were recorded with a microphone (Blue Snowflake). Visual notations were recorded with a digital handwriting pad (Wacom Bamboo Tablet; Kazo, Saitama, Japan) to allow freehand sketching for a “chalk talk” feel. Final editing and video production were done by a third-party instructional design team (iCortex Instructional Design) at $50 USD/hour for 2.5–3.5 h of work per screencast (total cost $850 USD). Videos were distributed on a secure cloud-based website (Microsoft Stream, Redmond, WA) that was readily accessible to all participants and provided a comments section for reviewers to give feedback on the content. Study data were collected and managed using the secure, web-based Research Electronic Data Capture (REDCap) tool hosted at Wake Forest School of Medicine [16]. Statistical analyses were performed with SAS version 9.4 (SAS Institute, Cary, NC).

Production

Screencast style, length, and delivery were based on data from the pilot study. Optimal screencast or podcast lengths have been reported to fall within a 5–30-min range [6, 17, 18]; accordingly, the run times of the six screencasts ranged from 13 to 25 min. The option to change playback speed has been identified as a critical feature [17] and was included with our screencasts (range: 0.5× to 2×). Other best practices included a consistent production style to match content stability, development of a repeatable pattern, and investment in good visuals [18].

Access

All participants had access via computer and smartphone to the usual educational materials provided by the traditional educational curriculum on the cloud-based website. Within that server a private group was created to allow selective access to the screencasts as per protocol. Once starting the rotation, residents randomized to screencast access were provided with weekly email and text page notifications that contained instructions on how to access the screencasts.

Outcomes

Educational outcomes were classified using a modified Kirkpatrick framework in the context of technology-enhanced learning in GME [19, 20]. The primary endpoint was improved knowledge in the management of critical and commonly encountered clinical problems on a HOS (Kirkpatrick level 2b), measured as self-reported comfort among IM residents on the standard curriculum alone (control arm) compared with IM residents who were also given web access to the screencast series (treatment arm). Comfort was rated for each clinical topic on a 5-point Likert scale (1, very uncomfortable; 2, uncomfortable; 3, neutral; 4, comfortable; 5, very comfortable), and participants whose sum score across all 6 topics (possible range, 6–30) met a prespecified cutoff of 21 or higher were classified as comfortable in HOS management. Secondary endpoints were burnout (Kirkpatrick level 4a), knowledge base as tested by clinical vignettes (Kirkpatrick level 2b), promotion of professional development (Kirkpatrick level 2a), and learner satisfaction with the screencast format (Kirkpatrick level 1). Burnout was measured with the Maslach Burnout Inventory for medical personnel [21] using the overall score and the personal accomplishment subscale, which was considered more relevant to the intervention than the depersonalization or emotional exhaustion subscales. Clinical vignettes were tested as 6 multiple-choice questions (MCQs), which included representative content from each of the 6 study topics. Exploratory endpoints were the above outcomes among a non-randomized group of medical students and fellows and the feasibility of a trainee producing clinically relevant JiTT screencasts. All endpoints were measured by an end-of-rotation online survey sent to all randomized participants. Reminder emails and pages were then sent weekly until completion of the survey, withdrawal from the study, or 4 weeks after the end of the rotation.
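The primary-outcome scoring rule can be made concrete: six items scored 1–5 give a sum between 6 and 30, and a sum of 21 or higher (an average of at least 3.5 per topic) counts as comfortable. The Python sketch below encodes that rule; the topic identifiers and function names are illustrative assumptions, and rejecting incomplete responses is also an assumption, since the handling of missing items is not described.

```python
# Likert coding used for each of the six clinical topics (1-5).
LIKERT = {
    "very uncomfortable": 1,
    "uncomfortable": 2,
    "neutral": 3,
    "comfortable": 4,
    "very comfortable": 5,
}

# Hypothetical identifiers for the six screencast topics.
TOPICS = (
    "venous_thromboembolism",
    "sickle_cell_complications",
    "infectious_metabolic_emergencies",
    "thrombocytopenia",
    "brain_metastases",
    "spinal_metastases",
)

CUTOFF = 21  # prespecified cumulative cutoff; possible sums range from 6 to 30


def comfort_sum(responses):
    """Sum the Likert codes across all six topics; missing topics raise an error."""
    missing = [t for t in TOPICS if t not in responses]
    if missing:
        raise ValueError(f"missing responses for: {missing}")
    return sum(LIKERT[responses[t]] for t in TOPICS)


def is_comfortable(responses, cutoff=CUTOFF):
    """Classify a participant as comfortable overall if the sum meets the cutoff."""
    return comfort_sum(responses) >= cutoff


if __name__ == "__main__":
    # Three "comfortable" (3 x 4) plus three "neutral" (3 x 3) answers sum to 21.
    example = {t: ("comfortable" if i < 3 else "neutral") for i, t in enumerate(TOPICS)}
    print(comfort_sum(example), is_comfortable(example))  # 21 True
```

A participant who is neutral on every topic scores 18 and falls below the cutoff, so the rule requires net comfort across topics rather than merely the absence of discomfort.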

Statistical Analyses

Based on the pilot study data, with a two-sided alpha of 0.05, 80% power, and a sample size of 30 per group, the study would be able to detect a difference between an expected 20% rate of being comfortable in the usual education (control) group and a 55% rate in the intervention group. Descriptive statistics, including means and standard deviations for continuous measures and frequencies and proportions for categorical measures, were calculated for all study measures. Fisher’s exact tests were used to compare categorical measures between study arms. Independent t-tests were used to compare continuous measures between study arms, except in the unplanned social distancing analysis, where the Wilcoxon two-sample test was used because of the small size of this subgroup. The 95% confidence interval (CI) around the primary outcome was calculated using a Wald interval. p values < 0.05 were considered statistically significant.
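The stated power calculation can be checked against the standard normal-approximation formula for comparing two independent proportions. The article does not report which formula or software was used, so the Python sketch below is an assumption-based check rather than the authors' calculation, and `n_per_group` is a hypothetical name. Without a continuity correction, it gives 29 residents per group for 20% versus 55%, consistent with the planned 30.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80):
    """Per-group sample size for a two-sided test of two independent proportions.

    Uses the uncorrected normal approximation
        n = (z_a * sqrt(2 * pbar * (1 - pbar))
             + z_b * sqrt(p1 * (1 - p1) + p2 * (1 - p2)))**2 / (p1 - p2)**2
    with pbar = (p1 + p2) / 2. A continuity correction would increase n.
    """
    z_a = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_b = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    pbar = (p1 + p2) / 2
    numerator = (z_a * sqrt(2 * pbar * (1 - pbar))
                 + z_b * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p1 - p2) ** 2)


if __name__ == "__main__":
    # Expected comfort rates: 20% (usual education) vs 55% (screencasts).
    print(n_per_group(0.20, 0.55))  # 29
```

The uncorrected formula is the smallest of the common variants; applying the Fleiss continuity correction to the same inputs raises the requirement to roughly 35 per group.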

Results

Of 106 total IM residents at our institution, 78 were eligible for participation, and 67 were enrolled and randomized. The overall response rate to the end-of-rotation survey was high at 91%. Of the 33 residents allocated to the intervention arm, 5 were lost to follow-up; of the 34 residents allocated to the control arm, 1 was lost to follow-up. Enrollment took place from 12/9/2019 to 6/15/2020, and the study reached full accrual, with all follow-up completed on 6/30/20. Demographic and baseline characteristics were well matched between the two study arms (Table 1), with no significant differences apart from a higher prevalence of male gender in the intervention arm (p = 0.02, Fisher’s exact test). Among all randomized participants who responded to the end-of-rotation survey, the majority (40, 65%) were interns (PGY1) rather than supervisory residents (PGY2–3).
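The enrollment and response figures above can be reproduced from the arm-level counts. The sketch below assumes that the residents lost to follow-up are exactly the survey non-responders, so that 28 intervention and 33 control residents contribute to the primary outcome; the article does not state these denominators explicitly, and the variable names are illustrative.

```python
# Counts reported in the Results; variable names are illustrative.
ELIGIBLE = 78
RANDOMIZED = {"intervention": 33, "control": 34}
LOST_TO_FOLLOW_UP = {"intervention": 5, "control": 1}

enrolled = sum(RANDOMIZED.values())
# Assumption: survey respondents = randomized minus lost to follow-up.
responders = {arm: RANDOMIZED[arm] - LOST_TO_FOLLOW_UP[arm] for arm in RANDOMIZED}

enrollment_rate = enrolled / ELIGIBLE
response_rate = sum(responders.values()) / enrolled

if __name__ == "__main__":
    print(enrolled, responders)  # 67 {'intervention': 28, 'control': 33}
    print(f"{enrollment_rate:.0%} enrolled, {response_rate:.0%} responded")  # 86% enrolled, 91% responded
```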

Educational Efficacy

When measured cumulatively across all 6 clinical topics, the majority (64%) of participants in the intervention arm were either “comfortable” or “very comfortable” overall, significantly more than in the control arm (21%, Table 1), for an estimated difference of 43% (95% CI: 21–66%). When the 7 residents (control = 3, intervention = 4) who had previously participated in the pilot study were excluded, the results were similar, with the primary outcome occurring in 63% of the treatment arm compared with 20% of the usual education arm. For each clinical topic, more participants were comfortable in the treatment arm than in the control arm. Burnout as measured by the Maslach Burnout Inventory did not differ between the screencast and control arms in total score or on the personal accomplishment subscale. Medical knowledge as tested by 6 MCQs did not differ between the screencast and control arms.
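The primary-outcome statistics can be approximately reproduced. The article reports percentages rather than counts; applying 64% and 21% to the inferred denominators of 28 and 33 respondents gives 18 of 28 versus 7 of 33 comfortable residents, and these are reconstructed counts, not figures stated by the authors. The Python sketch below computes a Wald interval for the risk difference and a two-sided Fisher's exact test from first principles; the function names are hypothetical, and this is not the authors' SAS analysis.

```python
from math import comb, sqrt
from statistics import NormalDist


def wald_risk_difference(x1, n1, x2, n2, level=0.95):
    """Risk difference p1 - p2 with a Wald (normal-approximation) confidence interval."""
    p1, p2 = x1 / n1, x2 / n2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    diff = p1 - p2
    return diff, diff - z * se, diff + z * se


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Conditioning on the margins, the top-left cell follows a hypergeometric
    distribution; the p-value sums the probabilities of all tables that are
    no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def prob(k):
        return comb(col1, k) * comb(n - col1, row1 - k) / comb(n, row1)

    p_obs = prob(a)
    k_min, k_max = max(0, row1 - (n - col1)), min(row1, col1)
    # Small relative tolerance so ties with the observed table survive rounding.
    return sum(prob(k) for k in range(k_min, k_max + 1) if prob(k) <= p_obs * (1 + 1e-7))


if __name__ == "__main__":
    # Reconstructed counts (assumption): 18/28 screencast vs 7/33 usual education.
    diff, lo, hi = wald_risk_difference(18, 28, 7, 33)
    print(f"difference {diff:.0%} (95% CI {lo:.0%} to {hi:.0%})")
    print(f"Fisher two-sided p = {fisher_exact_two_sided(18, 10, 7, 26):.4f}")
```

With these counts the Wald interval is about 21% to 66% around a 43% difference, matching the reported estimate, and the Fisher p value falls below 0.01, in line with the reported p = 0.001; the exact p value depends on the true counts.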

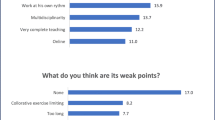

Learner Satisfaction with the Screencast Format

Feedback on the screencasts from the randomized participants was positive (Table 2). Nearly all participants in the treatment arm felt that a similar database of screencasts would be helpful for all of their clinical rotations. Nearly all participants in the treatment arm agreed or strongly agreed that the screencasts improved their knowledge base in medical oncology, would improve their care for cancer patients, and had an enjoyable format (Table 3). Most participants in the treatment arm felt that the intervention was optimal in terms of content, length, and accessibility. The preferred viewing speed for the screencasts was 1.5×. Although they were not the target audience for the screencasts, exploratory data were also collected from non-randomized participants, with responses obtained from 6 medical students, 8 fellows, and 6 faculty who covered the HOS during the study period. Half of the students (3, 50%) would recommend the screencasts for their IM clerkship. Most fellows and faculty were neutral about contributing to (7/13, 54%) or producing (10/13, 77%) educational screencasts; one participant did not respond to either question. Creating the screencast series was feasible for a second-year fellow (PK) within a 12-week research block and promoted professional development as a physician educator.

COVID19 Social Distancing

As an unplanned analysis, we examined outcomes among the subgroup of participants who completed their study assessment while social distancing for the COVID-19 pandemic (n = 28). Regardless of social distancing, comfort levels appeared higher in the screencast arm than in the control arm, although within the social distancing subgroup the difference was no longer statistically significant (supplementary graph 1). Total burnout scores did not differ between arms either before (72 vs. 70, p = 0.50) or during social distancing (70 vs. 69, p = 0.67). Personal accomplishment subscale scores did not differ between arms either before (46 vs. 43, p = 0.23) or during social distancing (46 vs. 49, p = 0.71). MCQ-based medical knowledge scores did not differ between arms either before (78% vs. 81%, p = 0.77) or during social distancing (76% vs. 79%, p = 0.56).

Discussion

JiTT screencasts covering critical and commonly encountered problems on an inpatient HOS were effective in increasing resident comfort with clinical management. The intervention had a high level of learner satisfaction, with nearly all participants advocating for its expanded use in their GME. We also gained insight into the optimal format in terms of topic selection, length (20 min), and playback speed (1.5×). The study arms were well balanced, although the intervention arm had a higher proportion of male participants. Any gender difference in educational outcomes seems unlikely to be large enough to account for the intervention effect found in our study. We also found variability in medical knowledge outcomes between topics, which may reflect differences either in the quality of the screencasts or in how well each topic lends itself to teaching via screencast; further research is needed to explore this. To our knowledge, this is the first randomized study to test the efficacy of screencasts in the GME setting.

These data inform modifications to the traditional GME curriculum on a HOS to better accommodate the modern scheduling structure of IM residency programs. First, the night float system reduces access to synchronous learning because of the need for protected sleeping hours during the daytime; this intervention reached all residents covering the HOS, including those on night float coverage. Second, a rapidly increasing number of IM residency programs in the USA have adopted the “X+Y” format to better balance the training environment between the inpatient and outpatient settings. Residents have dedicated ambulatory weeks (“Y weeks”), during which didactic sessions and administrative time occur before the start of the next inpatient block (“X weeks”) [22]. The screencasts were accessible to trainees as preparatory learning during this more flexible administrative time before their HOS rotation began, making efficient use of that time. Finally, an unplanned and underpowered exploratory analysis suggested similar results among the subgroup of participants who were socially distanced for the COVID-19 pandemic. This finding and the completed accrual of the study suggest that screencasts readily accommodate social distancing, whether for programs with multiple training sites or during infectious outbreaks [23]. Many of the challenges addressed by this intervention are not unique to a HOS, and nearly all participants (96%) reported that a similar database of screencasts would be a helpful educational tool for their other clinical rotations as well.

The intervention also demonstrated the feasibility of creating effective educational screencasts for a HOS, an aesthetically appealing type of technology favored by the young adult population that makes up the vast majority of IM residents [24]. Creating educational screencasts for a HOS can also serve as professional development for a fellow; most fellows (79%) anticipate teaching as part of their future careers [25]. This study confirmed that a fellow could design and complete an effective screencast series over 12 weeks. We also found that one third of fellows and attendings would be interested in creating their own educational screencasts using a similar format.

Our study has limitations. Accrual was limited to a single institution, and the study was not powered for the secondary or exploratory outcomes. Additionally, the short quiz of only six MCQs and the measurement of burnout at a single timepoint may have lacked sensitivity for these outcomes. The treatment arm received weekly instructions to facilitate online access because the intervention was housed in a new area of the residency website; this difference between arms may have introduced some bias. Survey completion in both arms was compensated with a $20 USD gift card, which may also have increased utilization of the screencasts. However, adult learners are most interested in topics that have immediate relevance and a strong impact on their work; we are therefore optimistic that screencasts may be increasingly used in real-world settings.

Future steps to confirm the real-world efficacy of JiTT screencasts would be to measure utilization outside the research setting and to test the impact of such an intervention on shelf or certifying examination scores and, ideally, on patient outcomes. Larger studies should also confirm that this intervention is equally effective for the minority (10–20%) of IM residents who are on a career path toward cancer care, a subgroup comparison that was outside the scope of our study. Working with institutional leaders to secure resources such as medical educator training, instructional design services, and adequate time for creating JiTT screencasts would be optimal.

Conclusions

This randomized medical education study found that a novel, asynchronous series of screencasts increased resident comfort in managing clinical problems commonly seen on an inpatient HOS. Participants viewed the screencast content and style overwhelmingly positively and advocated for their use on other clinical rotations. On the basis of these findings, learning health systems should examine the utility of JiTT screencasts to optimize GME in hematology and oncology.

References

Younis T, Colwell B (2018) Oncology education for internal medicine residents: a call for action! Curr Oncol 25:189–190

Mohyuddin GR, Dominick A, Black T et al (2020) A critical appraisal and targeted intervention of the oncology experience in an internal medicine residency. J Cancer Educ. https://doi.org/10.1007/s13187-020-01766-6

Nixon NA, Lim H, Elser C, Ko YJ, Lee-Ying R, Tam VC (2018) Oncology education for Canadian internal medicine residents: the value of participating in a medical oncology elective rotation. Curr Oncol 25:213–218

Iverson N, Subbaraj L, Babik JM, Brondfield S (2021) Evaluating an oncology video curriculum designed to promote asynchronous subspecialty learning for internal medicine residents. J Cancer Educ 36:422–429. https://doi.org/10.1007/s13187-021-01968-6

McFarland DC, Holland J, Holcombe RF (2015) Inpatient hematology-oncology rotation is associated with a decreased interest in pursuing an oncology career among internal medicine residents. J Oncol Pract 11:289–295

Ahn J, Inboriboon PC, Bond MC (2016) Podcasts: accessing, choosing, creating, and disseminating content. J Grad Med Educ 8:435–436

Chen F, Lui AM, Martinelli SM (2017) A systematic review of the effectiveness of flipped classrooms in medical education. Med Educ 51:585–597

Brame C (2018) Just-in-time teaching (JiTT). Center for Teaching, Vanderbilt University. Retrieved from https://cft.vanderbilt.edu/guides-sub-pages/just-in-time-teaching-jitt/. Accessed 11/2/20

Greene SJ (2018) The use and effectiveness of interactive progressive drawing in anatomy education. Anat Sci Educ 11:445–460

Pickering JD (2017) Measuring learning gain: comparing anatomy drawing screencasts and paper-based resources. Anat Sci Educ 10:307–316

Ramar K, Hale CW, Dankbar EC (2015) Innovative model of delivering quality improvement education for trainees--a pilot project. Med Educ Online 20:28764

Novak GM (1999) Just-in-time teaching: blending active learning with Web Technology. San Francisco: Benjamin Cummings publishing company

Mangum R, Lazar J, Rose MJ, Mahan JD, Reed S (2017) Exploring the value of just-in-time teaching as a supplemental tool to traditional resident education on a busy inpatient pediatrics rotation. Acad Pediatr 17:589–592

Wittich CM, Agrawal A, Cook DA, Halvorsen AJ, Mandrekar JN, Chaudhry S, Dupras DM, Oxentenko AS, Beckman TJ (2017) E-learning in graduate medical education: survey of residency program directors. BMC Med Educ 17:114

Kuhlman P, Williams D, Amornmarn A et al (2020) Just-in-time teaching (JiTT) screencasts for the resident inpatient oncology service: a pilot study evaluating feasibility and effectiveness. J Clin Oncol 38:11025

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG (2009) A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 42:377–381

Pettit RK, Kinney M, McCoy L (2017) A descriptive, cross-sectional study of medical student preferences for vodcast design, format and pedagogical approach. BMC Med Educ 17:89

Norman MK (2017) Twelve tips for reducing production time and increasing long-term usability of instructional video. Med Teach 39:808–812

Boet S, Sharma S, Goldman J, Reeves S (2012) Review article: Medical education research: an overview of methods. Can J Anesth/Journal canadien d’anesthésie 59:159–170

Cook DA, Ellaway RH (2015) Evaluating technology-enhanced learning: a comprehensive framework. Med Teach 37:961–970

Maslach C, Jackson SE, Leiter MP (1996) MBI: Maslach burnout inventory. CPP, Incorporated Sunnyvale

Noronha C, Chaudhry S, Chacko K, McGarry K, Agrawal A, Yadavalli G, Shalaby M (2018) X + Y scheduling models in internal medicine residency programs: a national survey of Program Directors’ Perspectives. Am J Med 131:107–114

Dedeilia A, Sotiropoulos MG, Hanrahan JG et al (2020) Medical and surgical education challenges and innovations in the COVID-19 Era: a systematic review. In Vivo 34:1603–1611

Roberts DH, Newman LR, Schwartzstein RM (2012) Twelve tips for facilitating Millennials’ learning. Med Teach 34:274–278

McSparron JI, Huang GC, Miloslavsky EM (2018) Developing internal medicine subspecialty fellows’ teaching skills: a needs assessment. BMC Med Educ 18:221

Acknowledgements

Additional feedback on educational content provided by Christina Cramer, MD, and Carol Geer, MD.

Availability of Data and Material

All data and materials are available for readers upon request.

Code Availability

Not applicable.

Funding

Research reported in this publication was supported by the National Cancer Institute’s Cancer Center Support Grant award number P30CA012197 issued to the Wake Forest Baptist Comprehensive Cancer Center.

Author information

Contributions

- PK: Conceptualization, methodology, funding acquisition, software, validation, investigation, formal analysis, data curation, writing, editing, visualization.

- DW: Conceptualization, methodology, validation, editing, supervision.

- GR: Conceptualization, methodology, formal analysis, editing.

- AA: Validation, editing.

- JH: Validation, editing.

- RW: Validation, editing.

- TL: Conceptualization, methodology, funding acquisition, formal analysis, writing, editing, visualization, supervision, project administration.

Ethics declarations

Ethics Approval and Consent to Participate

This study was approved by the Institutional Review Board at Wake Forest School of Medicine and was performed in accordance with the ethical standards as laid down in the 1964 Declaration of Helsinki and its later amendments. Written short form consent was obtained from all participants.

Consent for Publication

Not applicable.

Conflict of Interest

Co-owner of Certus Critical Care, Inc., medical device company without devices on the market (DW). Advisory board for Sanofi (RW).

Disclaimer

The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Cancer Institute.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

ESM 1

(PNG 188 kb)

About this article

Cite this article

Kuhlman, P.D., Williams, D., Russell, G. et al. Just-in-Time Teaching (JiTT) Screencasts: a Randomized Controlled Trial of Asynchronous Learning on an Inpatient Hematology-Oncology Teaching Service. J Canc Educ 37, 1711–1718 (2022). https://doi.org/10.1007/s13187-021-02016-z

DOI: https://doi.org/10.1007/s13187-021-02016-z