Abstract

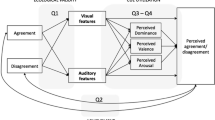

In this paper we present a multimodal analysis of emergent leadership in small groups using audio-visual features, and we discuss our experience in designing and collecting a data corpus for this purpose. The ELEA Audio-Visual Synchronized corpus (ELEA AVS) was collected using a light, portable setup and contains recordings of small group meetings. The participants in each group performed the winter survival task and filled in questionnaires on personality and on several social concepts such as leadership and dominance. In addition, the corpus includes annotations of participants’ performance in the survival task and annotations of social concepts from external viewers. Based on this corpus, we assess the feasibility of predicting the emergent leader in small groups from automatically extracted audio and visual features based on speaking turns and visual attention. We focus specifically on multimodal features that combine the two: measures of looking at participants while speaking and of looking at participants while not speaking. Our findings indicate that emergent leadership is related, but not equivalent, to dominance. While multimodal features are moderately effective in inferring the leader, much simpler features extracted from the audio channel perform better.
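As a rough illustration of what a "looking while speaking" and a "looking while not speaking" measure could look like, the sketch below computes, for one participant, the fraction of speaking frames and of non-speaking frames in which that participant looks at another person. It assumes that per-frame speaking status and visual focus of attention labels are already available. The function name `looking_measures`, the input layout, and the toy data are hypothetical and are not taken from the paper, whose exact feature definitions may differ.

```python
def looking_measures(speaking, gaze_target):
    """Fractions of frames in which one participant looks at another person,
    computed separately for speaking and non-speaking frames.

    speaking:    list of bools, True where the participant is speaking
    gaze_target: list of the same length; index of the person being looked
                 at in each frame, or None when looking elsewhere
    (Both inputs are assumed formats, not the corpus format.)
    """
    if len(speaking) != len(gaze_target):
        raise ValueError("speaking and gaze_target must have the same length")

    look_speak = look_silent = n_speak = n_silent = 0
    for is_speaking, target in zip(speaking, gaze_target):
        looking = target is not None  # looking at some participant
        if is_speaking:
            n_speak += 1
            look_speak += looking
        else:
            n_silent += 1
            look_silent += looking

    # Guard against participants who never speak or never stay silent.
    lws = look_speak / n_speak if n_speak else 0.0
    lwns = look_silent / n_silent if n_silent else 0.0
    return lws, lwns


# Toy example: four frames for one participant (made-up data).
speaking = [True, True, False, False]
gaze_target = [1, None, 2, 2]
lws, lwns = looking_measures(speaking, gaze_target)
print(lws, lwns)
```

Normalizing by the number of speaking and non-speaking frames keeps the two measures comparable across participants who talk for very different amounts of time; other normalizations are equally plausible.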

Acknowledgments

We thank Iain McCowan (dev-audio) for technical support; Denise Frauendorfer and Pilar Lorente (University of Neuchatel) and Radu-Andrei Negoescu (Idiap) for help with data collection and data processing; Jean-Marc Odobez (Idiap) for sharing code for VFOA extraction; and all the participants in the recordings. D. Sanchez-Cortes was supported by CONACYT (Mexico) through a doctoral scholarship and by the Swiss NSF SONVB project. O. Aran was supported by the projects NOVICOM (EU FP7-IEF) and SOBE (Swiss NSF Ambizione grant no. PZ00P2-136811). D. Jayagopi was supported by the HUMAVIPS project (EU FP7).

Cite this article

Sanchez-Cortes, D., Aran, O., Jayagopi, D.B. et al. Emergent leaders through looking and speaking: from audio-visual data to multimodal recognition. J Multimodal User Interfaces 7, 39–53 (2013). https://doi.org/10.1007/s12193-012-0101-0

DOI: https://doi.org/10.1007/s12193-012-0101-0