Abstract

This paper studies the problem of simultaneously testing that each of k samples, coming from k count variables, were all generated by Poisson laws. The means of those populations may differ. The proposed procedure is designed for large k, which can be bigger than the sample sizes. First, a test is proposed for the case of independent samples, and then the obtained results are extended to dependent data. In each case, the asymptotic distribution of the test statistic is stated under the null hypothesis as well as under alternatives, which allows to study the consistency of the test. Specifically, it is shown that the test statistic is asymptotically free distributed under the null hypothesis. The finite sample performance of the test is studied via simulation. A real data set application is included.

Similar content being viewed by others

1 Introduction

Univariate count data appear in many real life situations and the Poisson distribution is frequently used to model this kind of data. Testing the goodness-of-fit of given observations with a probabilistic model is a crucial aspect of data analysis. Because of these reasons, a number of authors have proposed tests for the Poisson law. Most papers on this issue deal with testing Poissonity for a sample, and the properties of the proposed procedures are studied as the sample size increases. This paper studies the problem of simultaneously testing Poissonity of k samples, where k can be larger than the sample sizes.

Let \(\textbf{X}_1=\{X_{1,1}, \ldots , X_{1, n_1}\}\), \(\ldots ,\) \(\textbf{X}_k=\{X_{k,1}, \ldots , X_{k, n_k}\}\) be k independent samples with sizes \(n_1, \ldots , n_k\), which may be different, where \(X_{i,1}, \ldots , X_{i, n_i}\) are independent and identically distributed (i.i.d.) coming from \(X_i \in \mathbb {N}_0=\{0,1,2, \ldots \}\), \(1 \le i \le k\). In this setting, we deal with the problem of testing

were \(X\sim Pois(\lambda )\) means that the random variable X has a Poisson law with mean \(\lambda \), against general alternatives,

where k is allowed to be large (the precise meaning of “large” will be stated in the following sections). Notice that, under \(H_{0,k}\), the parameter of each Poisson law may vary across the k populations.

Testing \(H_{0,k}\) is relevant in many settings. Just to mention an important example, sequencing experiments performed in biological sciences usually report long sequences of read counts, corresponding to a large number of genes or DNA bases; see for instance Jiménez-Otero et al. (2019) and references theirein. Such counts are typically analyzed under the umbrella of the Poisson model, which has been found particularly well-suited for certain sequencing experiments (see Lander and Waterman 1988). The validity of these analyses critically depends on the goodness-of-fit of the Poisson distribution to the count data at hand, and here is where our testing problem comes into play.

The problem of simultaneously testing goodness-of-fit for k populations have been studied in Gaigall (2021) by using test statistics based on comparing the empirical distribution function of each sample with a parametric estimator derived under the null hypothesis. The asymptotic properties studied in Gaigall (2021) are for continuous distribution functions, fixed k and increasing sample sizes. The tests in Gaigall (2021) are sums of goodness-of-fit test statistics for each of the k populations. For the problem of simultaneously testing normality of a large number of populations, Jiménez-Gamero (2023) considered several test statistics, obtaining best overall results for one based on a sum of statistics for testing normality in each population. Here we will also study a test based on a statistic of this type.

The paper is organized as follows. Section 2 briefly reviews Poissonity tests for one sample. The review is restricted to consistent tests, with special emphasis on the test in Baringhaus and Henze (1992). Section 3 studies a test statistic that is based on sum of statistics for one-sample Poissonity testing. Specifically, the one-sample statistic considered is a modification of the one proposed in Baringhaus and Henze (1992). We start by assuming that the samples are independent. It is shown that the test statistic is asymptotically free distributed, not relying on resampling or Monte-Carlo methods to obtain critical values. Here by asymptotic we mean as \(k\rightarrow \infty \), no assumption is made on the sample sizes, which may remain bounded or increase with k. Section 4 derives the asymptotic power. As the asymptotic approximation to the null distribution is rather conservative for small to moderate values of k, Sect. 5 studies a bootstrap estimator of the null distribution that gives good results in such cases. The finite sample performance of the test was numerically assessed by means of an extensive simulation experiment, whose results are reported in Sect. 6. Section 7 shows that, by assuming suitable mixing conditions, the results in sections 3 and 4 can be extended to the case of dependent data. A real data illustration is provided in Sect. 8. Section 9 summarizes the paper and comments on extensions and further research. All proofs and some simulation results can be found in the Supplementary Material (SM). The computations in this paper have been carried out using programs written in the R language [39]. The R code for the calculation of the modification of the test statistic in Baringhaus and Henze (1992) is also available in the SM.

Throughout the paper we will make use of the following standard notation: all random variables and random elements will be defined on a sufficiently rich probability space \((\Omega ,{{\mathcal {A}}},\mathbb {P})\); the symbols \(\mathbb {E}\) and \(\mathbb {V}\) denote expectation and variance, respectively; \(\mathbb {P}_0\), \(\mathbb {E}_0\) and \(\mathbb {V}_0\) denote probability, expectation and variance under the null hypothesis, respectively; \( \overset{\mathcal {D}}{=}\) and \(\overset{\mathcal {D}}{\rightarrow }\) mean equality in distribution and convergence in distribution, respectively; \({\mathop {\rightarrow }\limits ^{\mathbb {P}}}\) denotes convergence in probability; unless otherwise specified, all limits are taken as \(k\rightarrow \infty .\)

2 Poissonity tests for one sample

2.1 A brief review

This subsection gives a brief, non-exhaustive review of Poissonity tests for one sample, i.e. tests of the null hypothesis \(H_{0,1}\) v.s. \(H_{1,1}\). The review will be centered on tests that are consistent against fixed alternatives. Most of them are discussed in Mijburgh and Visagie (2020), that gives an overview of Poissonity tests, including some non-universally consistent ones.

Henze (1996) studied tests based on the empirical distribution function for discrete models, that can be applied to test \(H_{0,1}\). The test statistics are functions of \(F_n(x)-F(x; \hat{\lambda })\), where \(F_n\) denotes the empirical distribution function, \(F( \cdot ; \lambda )\) stands for the cumulative distribution function of the law \( Pois(\lambda )\) and \(\hat{\lambda }\) is an estimator of \(\lambda \), which is taken equal to the sample mean in Sect. 4 of that paper. Specifically, the Kolmogorov-Smirnov and Cramér-von Mises statistics are studied. A Kolmogorov-Smirnov type statistic was also proposed by Klar (1999), based on the difference between the integrated distribution function and its empirical counterpart. Székely and Rizzo (2004) proposed a Cramér-von Mises type test statistic where the empirical distribution function is replaced by an estimator which is obtained by using a characterization of the distribution of count variables in terms of mean distances.

When dealing with count data, Nakamura and Pérez-Abreu (1993a) argue in favor of using inferential methods based on the empirical probability generating function (EPGF). A number of tests use this tool. Rueda and O’Reilly (1999) (see also Jiménez-Gamero and Batsidis 2017) proposed a test of \(H_{0,1}\) based on an \(L^2\) norm of the difference between the probability generating function (PGF) of the Poisson law, with \(\lambda \) replaced with the sample mean, and the EPGF. That test was latter extended for the bivariate (and d-variate) Poisson law in Novoa-Muñoz and Jiménez-Gamero (2014). The PFG of the Poisson law is the unique PGF g of a law with finite mean, satisfying the differential equation,

where \(g'(t)=\frac{\textrm{d}}{\textrm{d} t}g(t)\) and \(\mu \) is a constant, which is necessarily the mean of the distribution. Baringhaus and Henze (1992) consider an \(L^2\) norm of an empirical version of that equation as a test statistic for \(H_{0,1}\). A related test was proposed by Nakamura and Pérez-Abreu (1993b), that was later generalized in Jiménez-Gamero and Alba-Fernández (2019) to testing for a family of distributions that contains the Poisson law as a special case, and in Novoa-Muñoz and Jiménez-Gamero (2016) to the bivariate Poisson. Puig and Weiss (2020) give a characterization of the Poisson distribution, based on an identity involving the binomial thinning operator, that is used to propose some tests of \(H_{0,1}\), whose test statistics replace the PGF in the characterization by the EPGF.

The last decade has witnessed an increasing interest in the developing of inferential methods based on Stein’s method (see Anastasiou et al. (2023) for a recent overview). In this line, Betsch et al. (2022) give a characterization of count variables that is used to propose a test of \(H_{0,1}\).

As expected from Janssen (2000), in the simulations carried out in Gürtler and Henze (2000), Székely and Rizzo (2004), Puig and Weiss (2020) and Betsch et al. (2022), there is no test having the largest power against all considered alternatives. Nevertheless, the test in Baringhaus and Henze (1992), that will be called the BH test, is one of those having best overall performance. Next subsection deals in some detail with its test statistic.

2.2 The BH test statistic

In order to simplify notation, for the one-sample case we will write \(Y, Y_1, \ldots , Y_n\) and \(\mu \) instead of \(X_1, X_{1,1}, \ldots , X_{1, n_1}\) and \(\lambda _1\), respectively. Along this subsection, it will be assumed that \(\mu =\mathbb {E}(Y)<\infty \). Let \(g_n\) denote the EPGF associated to the sample,

Since the PFG of the Poisson law is the unique satisfying Eq. (1) it follows that, calling

where g(t) is the PGF of Y, we have that \(\theta =0\) if and only if Y has a Poisson law. Baringhaus and Henze (1992), estimate \(\theta \) by means of \(\tilde{\theta }\), obtained by replacing g, \(g'\) and \(\mu \) with \(g_n\), \(g'_n\) and \(\overline{Y}=(1/n)\sum _{i=1}^n Y_i \), respectively, in the expression of \(\theta \), where

and \(\textbf{1}(\cdot )\) stands for the indicator function. The proposed test rejects the null for large values of \(n\tilde{\theta }\).

\(\tilde{\theta }\) is a degree-4 V-statistic with symmetric kernel \(H_{sym}\)

where

\(\mathcal {P}_4\) is the set of all permutations of \((i_1,i_2,i_3,i_4)\) (the four elements are treated as different, even if there are ties between them), and

Clearly, \(\tilde{\theta }\) is not an unbiased estimator of \(\theta \). In our developments, we will work with the associated U-statistic, denoted as \(\hat{\theta }\),

where \(n(4) = n(n - 1)(n - 2)(n - 3)\), which is unbiased for \(\theta \), and thus \(\mathbb {E}_0(\hat{\theta })=0\). In the expression (4), the notation \(1\le i_1 \ne i_2 \ne i_3 \ne i_4 \le n\) means that the four indices \(i_1, i_2, i_3, i_4\) are all of them different and that their values range from 1 to n. Notice that the calculation of \(\hat{\theta }\) requires \(n \ge 4\).

For the practical calculation of \(n\hat{\theta }\), it is convenient to use the following expression

The R code we used in the numerical results for the calculation of \(n\hat{\theta }\) is provided in the SM.

3 Sum of BH test statistics

This section studies a test of \(H_{0,k}\) vs \(H_{1,k}\) whose associate statistic is a sum of one-sample modified BH test statistics. As seen in Subsection 2.2, for \(n\hat{\theta }\) to be well-defined we must assume that the population generating the data has finite expectation and that the sample size is larger than or equal to 4. Accordingly, the setting is as follows:

Let

where \(\hat{\theta }_i=\hat{\theta }(X_{i,1}, \ldots , X_{i, n_i})\), \(1 \le i \le k\), and \(\hat{\theta }\) is as defined in (4). Let \({\theta }_i=\theta (X_i)\), where \(\theta \) is as defined in (2), \(1 \le i \le k\). By construction, \(\mathbb {E}(\mathcal {T}_k)=\sum _{i=1}^kn_i {\theta }_i \ge 0\) with \(\mathbb {E}(\mathcal {T}_k)=0\) if and only if \(H_{0,k}\) is true. Thus, it seems reasonable to reject \(H_{0,k}\) for large values of \(\mathcal {T}_k\).

Remark 1

The reason for multiplying \({\hat{\theta }}_i\) by \(n_i\) in the definition of \(\mathcal {T}_k\) is that, under \(H_{0,k}\) and for large \(n_i\), we have that \(n_i{\hat{\theta }}_i=O_\mathbb {P}(1)\). Specifically, it converges in law to a linear combination of \(\chi ^2\) variables (see, for example, Chapter 5 of Serfling 2009). For small to moderate \(n_i\) we still have that \(n_i{\hat{\theta }}_i=O_\mathbb {P}(1)\). Therefore, in all cases (small to large sample sizes), under \(H_{0.k}\), \({{\mathcal {T}}}_k\) is a sum of zero-mean, non-degenerate random variables.

To test \(H_{0,k}\) we must calculate upper percentiles of the null distribution of \(\mathcal {T}_k\), that is unknown because it depends on \(\lambda _1, \ldots , \lambda _k\), which are unknown quantities. Moreover, even if they were known, it is rather difficult to determine the exact null distribution of \(\mathcal {T}_k\). Because of these reasons, we try to approximate it. The next result shows that, under \(H_{0,k}\) and conveniently normalized, \(\mathcal {T}_k\) converges in law to a standard normal law, no matter how large (or small) are the sample sizes \(n_1, \ldots , n_k\). To derive it we only assume that the means of the populations take values in a compact interval bounded away from 0. This is mainly done to avoid the case in which the variance goes to infinity or to 0. Let \(\tau _{0,i}^2=\mathbb {V}_0(n_i\hat{\theta }_i)= \mathbb {E}_0(n_i^2\hat{\theta }_i^2)\), \(1 \le i \le k\), and \(\sigma _{0k}^2=(1/k) \sum _{i=1}^k \tau _{0,i}^2 \). Let \(\Phi \) denote the cumulative distribution function of a standard normal distribution.

Theorem 1

Suppose that (5) holds and that \(H_{0,k}\) is true with \(\lambda _i\in [L_1, L_2]\), \(\forall i\), for some fixed \(0<L_1<L_2<\infty \), then

moreover

for each k, where \(C=C(L_1,L_2)>0\) is a finite constant.

Recall that the test is one-sided, rejecting the null hypothesis for large values of \(\mathcal {T}_k\). If \(\sigma _{0k}\) were a known quantity, in view of Theorem 1, the test that rejects \(H_{0,k}\) when \(\mathcal {T}_k/(\sqrt{k}\sigma _{0k})\ge z_{1-\alpha },\) for some \(\alpha \in (0,1)\), where \(\Phi (z_{1-\alpha })=1-\alpha \), would have (asymptotic) level \(\alpha \). The assertion remains true if \(\sigma _{0k}\) is replaced by a consistent estimator. We will consider as estimator of \(\sigma _{0k}^2\) the sample variance of \(n_1\hat{\theta }_1, \ldots , n_k\hat{\theta }_k\),

Since \(\mathbb {E}_0(n_i\hat{\theta }_i)=0\), \(\forall i\), we can also consider the following estimator of \(\sigma _{0k}^2\),

The next proposition shows that, under \(H_{0,k}\), \( S^2_{1k}\) and \( S^2_{2k}\) are both of them ratio consistent estimators of \(\sigma _{0k}^2\).

Proposition 1

Suppose that the assumptions in Theorem 1 hold, then \(S^2_{ik}/\sigma _{0k}^2 {\mathop {\rightarrow }\limits ^{a.s.}} 1\), \(i=1,2\).

Corollary 1

Suppose that the assumptions in Theorem 1 hold, then \(\mathcal {T}_k/({\sqrt{k}}S_{ik}) \overset{\mathcal {D}}{\rightarrow } Z,\) \(i=1,2\).

Let \(\alpha \in (0,1)\). For testing \(H_{0,k}\) vs \(H_{1,k}\), we consider the test that rejects the null when

From Corollary 1, it has asymptotic level \(\alpha \), \(i=1,2\).

Remark 2

Notice that to derive the above results no assumption has been done on the sample sizes (apart from \(n_i \ge 4\)); hence they are valid whether the sample sizes remain bounded or increase with k at any rate.

Remark 3

To estimate the variance of \(\mathcal {T}_k\) we have considered nonparametric estimators. Parametric estimators, that is, estimators obtained by calculating the exact expression of \(\tau _{0,i}^2\) and then replacing \(\lambda _i\) with the sample mean of the sample from \(X_i\), or any other estimator (see, e.g. Jiménez-Gamero and Batsidis 2017, Jiménez-Gamero et al. 2016), are more involved. In addition, the study of the behavior of such estimators under alternatives (necessary to study the power of the proposed test) may become rather complicated.

Remark 4

In simulations we observed that the tests in (7), based on the normal approximation,

are rather conservative, for small to moderate k (the results will be shown in Sect. 6). In order to improve the normal approximation one could try a one-term Edgeworth expansion which, if it exists, should be (see, e.g., display (2.6) in Liu 1988),

where \(\phi \) denotes the probability density function of a standard normal distribution. For practical application, the term \(\varsigma \) must be replaced by some consistent estimator, such as \(\hat{\varsigma }_1= \frac{1}{k}\sum _{i=1}^k (n_i\hat{\theta }_i-\overline{n\hat{\theta }})^3/S_{1,k}^3\) or \(\hat{\varsigma }_2= \frac{1}{k}\sum _{i=1}^k n_i^3\hat{\theta }_i^3/S_{2,k}^3\) (the proof of the consistency of these two estimators of \(\varsigma \) can be easily derived using the results in Subsection 1.1 of the SM). Notice that since \(\phi (x)(2x^2+1)\) is a bounded function and that, under assumptions in Theorem 1, \(\varsigma \) is a bounded quantity (this fact is consequence of Lemma 2 in Subsection 1.1 of the SM), it follows that,

where M is a positive constant. Thus, one can try both approximations to the null distribution of the test statistic \(T_i\), \(i=1,2\). Typically, one-term Edgeworth expansions give approximations with error of order \(o(k^{-1/2})\), whenever the involved populations satisfy certain assumptions related to the existence of certain moments and their characteristic functions (see, e.g. Bhattacharya and Ranga Rao 1976 and Hall 1992). According to the results in Subsection 1.1 of the SM, such moment assumptions are satisfied in our setting. The situation is not so clear for the conditions on the characteristic function. Perhaps, the most popular sufficient condition on the characteristic function is Cramér condition. See display (20.55) in Bhattacharya and Ranga Rao (1976) for the case of independent random vectors. We did not succeed to prove it in our setting. However, if all \(n_i\hat{\theta }_i\) are replaced by their limit in law, as \(n_i\rightarrow \infty \) (which are linear combinations of centered independent \(\chi ^2\) variables, as observed in Remark 1), in the expression of \(\mathcal {T}_k\), then it can be checked that under the assumptions in Theorem 1, the approximation error of \(\hat{\mathbb {P}}_2(x)\) is of order \(o(k^{-1/2})\). This suggests that the order \(o(k^{-1/2})\) could be valid for large sample sizes.

Nevertheless, in simulations we observed that when using the approximation (8), the tests become a bit liberal, specially for small k. Section 5 investigates a bootstrap approximation, that gave really good practical results.

4 Power

This section studies the asymptotic power of the tests in (7). With this aim, it will be assumed that the sample sizes are comparable in the following sense:

To be precise, m should be denoted as \(m_k\), since it can vary with k. To simplify notation, the subindex k will be omitted. No restriction is assumed on m (except that \(m \ge 1\)), so if (9) holds, the sample sizes can either remain bounded or increase arbitrarily. It will be also assumed w.l.o.g. that \( X_1, \ldots , { X}_r\) have Poisson laws, while the remaining populations have alternative distributions, for some \(0\le r < k\). Here r is also allowed to vary with k, \(r=r_k\), but such dependence on k will be skipped. If \(r=0\), then \(1 \le i \le r\) is understood to be the empty set.

The next result, which is similar to Theorem 1, shows that under \(H_{1,k}\) and conveniently normalized, \(\mathcal {T}_k\) also converges in law to a standard normal law.

Theorem 2

Suppose that (5) holds, that \( X_1, \ldots , { X}_r\) have Poisson laws with \(\lambda _i\in [L_1, L_2]\), \(1 \le i \le r\), for some \(0\le r<k\), and for some fixed \(0<L_1<L_2<\infty \), that \( X_{r+1}, \ldots , { X}_k\) have alternative distributions satisfying \(0<a \le \mathbb {V}(\sqrt{n_i}\hat{\theta }_i)\) and \(\mathbb {E}\{|\sqrt{n_i}(\hat{\theta }_i-\theta _i)|^{2+\delta }\} \le A\), \(r+1 \le i \le k\), for some positive constants a, \(\delta \) and A, and that \(n_{1}, \ldots , n_k\) satisfy (9). Let \(p_k=(k-r)/k\). Assume that either \(p_k \rightarrow p \in (0,1]\), or \(p_k \rightarrow 0\) and \(k-r \rightarrow \infty \), or \(p_k \rightarrow 0\), \(k-r\) remain bounded and \(m/k\rightarrow 0\). Then

Remark 5

If \(X_i\) has an alternative distribution, then \(\sqrt{n_i}(\hat{\theta }_i-\theta _i)\) converges in law, as \(n_i \rightarrow \infty \), to a normal law; so, it makes sense to impose restrictions on \(\sqrt{n_i}(\hat{\theta }_i-\theta _i)\) rather than on \(n_i(\hat{\theta }_i-\theta _ i)\), \(r+1 \le i \le k\).

Remark 6

Notice that to derive the above result it has been assumed that the sample sizes satisfy \(n_i \ge 4\), and (9) with \(m/k\rightarrow 0\) if \(p_k\rightarrow 0\) and \(k-r\) remain bounded. In any case, the result is valid whether the sample sizes remain bounded or increase with k.

Remark 7

Theorem 2 assumes that \(0<a \le \mathbb {V}(\sqrt{n_i}\hat{\theta }_i)\), \(r+1 \le i \le k\), for some fixed constant a. From the proof, we see that it suffices that the variance of \(\sqrt{n_i}\hat{\theta }_i\) is bounded away from 0 for a positive proportion of alternatives.

Under alternatives,

and so \(S_{1k}^2\) and \(S_{2k}^2\) are both of them biased estimators of \( (1/k)\sum _{i=1}^k \mathbb {V}(n_i\hat{\theta }_i)\). The next result shows that \(S_{ik}^2\) is a ratio (strongly) consistent estimator of its expectation, \(i=1,2\).

Proposition 2

Suppose that the assumptions in Theorem 2 hold. Then \(S^2_{ik}/\mathbb {E}(S^2_{ik}) {\mathop {\longrightarrow }\limits ^{a.s.}} 1\), \(i=1,2\).

Let \(\textrm{pwd}_{ik}=\mathbb {P}(\mathcal {T}_k/\sqrt{k}{S_{ik}} \ge z_{1-\alpha })\), \(i=1,2\). From Theorem 2 and Proposition 2,

\(i=1,2\), where

\(i=1,2\). The next result gives the asymptotic power of the tests (7), that is, the limit of \(\Phi \left( \textrm{coc}_{i1}^{1/2}z_\alpha +\textrm{coc}_2\right) \) in some special cases.

Theorem 3

Suppose that the assumptions in Theorem 2 hold, that \(kp_k\rightarrow \infty \), that \(\theta _i \le \Theta \), \(\forall i\), for some positive constant \(\Theta \) and that \(\bar{\gamma }=\frac{1}{k-r}\sum _{i=r+1}^k c_i\theta _i>\epsilon \), for some positive constant \(\epsilon \) (at least for large k).

-

1.

If \(p_k\rightarrow p\in (0,1]\), then \(\textrm{pwd}_{ik} \rightarrow 1\), \(i=1,2\).

-

2.

If \(p_k\rightarrow p=0\) and \(mp_k \rightarrow a>0\), then \(\textrm{pwd}_{ik} \rightarrow 1\), \(i=1,2\).

-

3.

If \(p_k\rightarrow p= 0\), \(mp_k \rightarrow 0\) and \(\sqrt{k}m p_k \rightarrow \infty \), then \(\textrm{pwd}_{ik} \rightarrow 1\), \(i=1,2\).

-

4.

If \(p_k\rightarrow p= 0\), \(mp_k \rightarrow 0\) and \(\sqrt{k}m p_k \rightarrow \kappa \in [0, \infty )\), then

$$\begin{aligned} \textrm{pwd}_{ik} =\Phi \left( z_\alpha + \frac{\kappa \bar{\gamma }}{\sqrt{v_1 }}\right) +r_{ik}, \end{aligned}$$(12)with \(r_{ik}\rightarrow 0\), \(i=1,2\), where \(v_1= (1/r) \sum _{i=1}^r \mathbb {E}(n_i^2\hat{\theta }_i^2)\).

Theorem 3 assumes that all \(\theta _i\) are bounded. If \({\mathbb {E}}(X_i) \le M\), \(\forall i\), for some positive constant M, then, from the definition of \(\theta \) in (2), it follows that \(\theta _i \le \Theta \), \(\forall i\), for some positive \( \Theta = \Theta (M) \). Recall that for the populations obeying the null we are assuming that \(\lambda _i={\mathbb {E}}(X_i) \le L_2\), so it is not very restrictive to assume that a similar bound is true for the expectations of the alternative populations.

Theorem 3 reveals that the tests in (7) are consistent in a variety of scenarios. To be specific: if the proportion of alternative distributions is positive, then the proposed tests are consistent (this is case 1 in Theorem 3); if the proportion of alternative distributions goes to 0, then the proposed tests are still consistent provided that the sampling effort m compensates for the vanishing \(p_k\) (cases 2 and 3 in Theorem 3); otherwise, the tests may be still able to detect alternatives (power greater than \(\alpha \)), but the power may not reach 1 (case 4 in Theorem 3). Finally, we point out that there are more cases not considered in that theorem, which could be studied in a similar way.

5 A bootstrap approximation to the null distribution

We observed in simulations that neither the normal nor the one-term Edgeworth approximations to the null distribution of the test statistics \(T_1\) and \(T_2\) are satisfactory. Because of this reason, this section studies another approximation based on bootstrap. Two bootstrap approximations are conceivable. First, since \(H_{0,k}\) is a parametric hypothesis, a parametric bootstrap could be applied: estimate \(\lambda _i\) through \(\hat{\lambda }_i\) (which can be taken the sample mean of the sample \({{\textbf{X}}}_i\)), generate a sample of size \(n_i\) from \(X^\star _i \sim Pois(\hat{\lambda }_i)\), say \(X_{i,1}^\star , \ldots , X_{i,n_i}^\star \), \(1\le i \le k\), evaluate the test statistic at the bootstrap data \(X_{1,1}^\star , \ldots , X_{1,n_1}^\star , \ldots , X_{k,1}^\star , \ldots , X_{k,n_k}^\star \), obtaining say \(T^\star _i\), and finally, approximate the null distribution of \(T_i\) through its bootstrap distribution, which is the conditional distribution of \(T^\star _i\), given the original data, \(X_{1,1}, \ldots , X_{1,n_1}, \ldots , X_{k,1}, \ldots , X_{k,n_k}\). In practise, the approximation is carried out by simulation, that is, one repeats B times the above procedure, that way obtaining B bootstrap replicates of \(T_i\), say \(T_i^{\star 1}, \ldots , T_i^{\star B}\), and then the bootstrap distribution of \(T_i\) is estimated through the empirical distribution of the B bootstrap replicates. Although in simulations we got excellent results (in terms of obtaining a really good approximation to the null distribution), the main issue with this bootstrap approximation is that it is very time-consuming, due to the calculation of \(\hat{\theta }^\star _i\), since it involves a sum with \(O(n_i^2)\) terms, \(1\le i \le k\).

Alternatively, a non-parametric bootstrap estimator can be obtained as follows. First, define

By construction, \(\bar{Y}=(1/k)\sum _{i=1}^k Y_i=0\). Let \(Y^*_1, \ldots , Y^*_k\) be a random sample from the empirical distribution of \(Y_1, \ldots , Y_k\). Approximate the null distribution of \(T_i\), \(i=1,2\), through the conditional distribution of \(T^*_i=\sum _{i=1}^k Y^*_i/(\sqrt{k}{S_{ik}^*})\), \(i=1,2\), given the original data, denoted as \({\mathbb {P}}_*(T^*_i \le x)\), where \(S^{*2}_{1k}=(1/k)\sum _{i=1}^k (Y^*_i-\overline{Y^*})^2\), \(\overline{Y^*}=(1/k)\sum _{i=1}^k Y^*_i\) and \(S^{*2}_{2k}=(1/k)\sum _{i=1}^k Y^{* 2}_i\). As before, in practise the approximation is carried out by simulation, that is, one repeats B times the above procedure, obtaining \(T_i^{* 1}, \ldots , T_i^{* B}\), and then the bootstrap distribution of \(T_i\) is estimated through the empirical distribution of the B bootstrap replicates, \(i=1,2\). As this bootstrap avoids the calculation (at the bootstrap level) of the most time-consuming part (the \(\hat{\theta }^*_i\), \(1\le i \le k\)), its implementation is really fast, even for large k. Because of this reason, we restrict ourselves to study the consistency of this non-parametric bootstrap approximation.

Theorem 4

Suppose that either \(H_{0,k}\) is true or it is not true and the assumptions in Theorem 2 hold with \(m^2/k\rightarrow 0\), and that \(\theta _i \le \Theta \), \(\forall i\), for some positive constant \(\Theta \). Then \( \displaystyle \sup _x \left| {\mathbb {P}}_*(T^*_i \le x)-\Phi (x) \right| {\mathop {\rightarrow }\limits ^{\mathbb {P}}} 0\), \(i=1,2\).

To get the consistency of the bootstrap null distribution estimator when the data do not obey \(H_{0,k}\), Theorem 4 requires \(m^2/k\rightarrow 0\), which is used to cope with the bias of \(S^2_{ik}\) as an estimator of the variance of \(\mathbb (1/\sqrt{k}) {{\mathcal {T}}}_k\).

The result in Theorem 4 holds whether \(H_{0,k}\) is true or not. In particular, the test that rejects \(H_{0,k}\) when \(p_*={\mathbb {P}}_*\{T^*_i > T_i\}\le \alpha ,\) is asymptotically correct in the sense that when \(H_{0,k}\) is true, \(p_*\rightarrow \alpha \), in probability. Under alternatives, the results in Theorem 3 also apply to that bootstrap test.

6 Finite sample results

Two tests of \(H_{0,k}\) have been studied and three approximations to the null distribution of their test statistics have been proposed. We conducted two simulation experiments, in order to assess the finite sample performance of the proposed tests, using each of the three null distribution approximations, under the null hypothesis (level) and under alternatives (power). Next we describe those experiments and summarize their outputs. As slightly better results (in the sense of closeness of the empirical levels to the nominal values under the null, and greater power under alternatives) were obtained for the test based on the variance estimator \(S^2_{1k}\), we will only display the results obtained for such test.

Experiment to study the level To study the level of the tests for a finite value of k, we carried out the following simulation experiment. We generated a sample from each of k populations, for \(k=10, 20, 100, 200, 1000, 2000\). Population i has a Poisson law with mean \(\lambda _i\), \(1\le i \le k\), where the values of \(\lambda _1, \ldots , \lambda _k\) were randomly generated from a continuous uniform distribution in the interval (a, b), U(a, b), for \((a,b)=(1,5)\), (5, 10), (10, 15), (15, 20). The size of each sample has been randomly generated from a discrete uniform random law \(UD\{N, N+1, \ldots , M\}\), with \((N,M)=(6,10)\), (11, 15), (16, 20), (21, 25), (26, 30). Each case was run \(R=10,000\) times and to calculate the bootstrap approximation, \(B=1000\) bootstrap samples were generated. Table 1 displays the percentages of p-values less than or equal to 0.05 and 0.10, which are the estimated type I error probabilities for nominal significance level \(\alpha \)=0.05 and 0.10, respectively. Looking at this table we observe that: for small to moderate k, in general, the normal approximation gives conservative tests and the one-term Edgeworth approximation gives liberal tests, better results are observed for the bootstrap approximation; the sample sizes seems to have no effect on the closeness of these approximations to the null distribution (for the sample sizes tried); as expected from the theory, as k increases all approximations yield levels closer to the nominal values.

Experiment to study the power To study the power, we repeated the above experiment with 100p% of the samples coming from an alternative distribution with mean \(\mu \) and the remaining 100\((1-p)\)% of the samples were generated from a Poisson law with the same mean \(\mu \), for \(p=0.1, 0.2\). The considered alternative distributions are

-

ALT1: a discrete uniform distribution, \(UD\{0, \ldots , 5\}\), \(\mu =2.5\).

-

ALT2: a mixture of two Poisson distributions, \(0.5\times Pois(2)+0.5\times Pois(5)\), \(\mu =3.5\).

-

ALT3: a zero inflated Poisson distribution, \(0.2\times 0+0.8\times Pois(2)\), \(\mu =1.6\).

-

ALT4: a binomial distribution, Bin(10, 0.5), \(\mu =5\).

-

ALT5: a negative binomial distribution, BN(2, 2/3), \(\mu =1\).

-

ALT6: a negative binomial distribution, BN(10, 2/3), \(\mu =5\).

Table 2 displays the power results for \(k=100, 200\). Looking at this table we see that power increases with the sample sizes, with k and with p.

7 Dependent data

This section aims to relax the assumption that \(X_1, \ldots , X_k\) are independent. For example, \(X_1, \ldots , X_k\) can be seen as a segment of a process (observed at discrete times) whose random variables take values in \(\mathbb {N}_0\). Along this section, instead of (5), the setting will be the following:

Notice that, if the independence assumption is dropped, the expression of the variance of \(\mathcal {T}_k\) given in Sect. 3 is not valid; moreover, to derive a result similar to that in Theorem 1, some mixing conditions have to be added; and finally, adequate variance estimators must be considered.

As before, let \(\hat{\theta }_i=\hat{\theta }(X_{i1}, \ldots , X_{in})\), \(1\le i \le k\). Under the setting (13), we again have that \(\mathbb {E}(\mathcal {T}_k)=\sum _{i=1}^kn {\theta }_i \ge 0\) with \(\mathbb {E}(\mathcal {T}_k)=0\) if and only if \(H_{0,k}\) is true. Therefore, it seems reasonable to reject \(H_{0,k}\) for large values of \(\mathcal {T}_k\). Because of the same reasons argued in Sect. 3 in the independent setting (5), we will build a test of \(H_{0,k}\) in the current dependent setting (13), based on the asymptotic null distribution of \(\mathcal {T}_k\). As observed before, some mixing conditions must be assumed with this aim. The definition of the mixing coefficients in Theorem 5 below can be found in Definition 3.42 of White (2001). Let

Theorem 5

Suppose that (13) holds, that \(\{X_i\}_{i\in \mathbb {Z}}\) has \(\phi \)-mixing coefficients of size \(-v/2(v-1)\) or \(\alpha \)-mixing coefficients of size \(-v/(v-2)\), for some \(v>2\), that \(\bar{\sigma }_{0k}>0\) for all k sufficiently large, and that \(H_{0,k}\) is true with \(\lambda _i\in [L_1, L_2]\), \(\forall i\), for some fixed \(0<L_1<L_2<\infty \). Then \(\mathcal {T}_k/(\sqrt{k}{\bar{\sigma }_{0k}})\overset{\mathcal {D}}{\rightarrow } Z.\)

For the result in Theorem 5 to be useful to construct a test of \(H_{0,k}\), a ratio consistent estimator of \(\bar{\sigma }_{0k}^2\) is needed. With this aim we consider the following estimator, called in the literature the HAC estimator,

Several papers have dealt with the consistency of the HAC estimator for non-stationary sequences (see e.g. Andrews 1988, Andrews 1991, Newey and West 1987, White 2001). The next proposition shows that, for adequate choices of the weights \( \omega (i, \ell _k)\) and of \(\ell _k\), and under some mixing conditions, \(\hat{\bar{\sigma }}_{0k}^2\) is ratio consistent when \(\bar{\sigma }_{0k}>0\).

Proposition 3

Suppose that (13) holds, that \(\{X_i\}_{i\in \mathbb {Z}}\) has \(\phi \)-mixing coefficients of size \(-2v/(2v-1)\) or \(\alpha \)-mixing coefficients of size \(-2v/(v-1)\), for some \(v>1\), that \(\bar{\sigma }_{0k}>0\) for all k sufficiently large, that \(H_{0,k}\) is true with \(\lambda _i\in [L_1, L_2]\), \(\forall i\), for some fixed \(0<L_1<L_2<\infty \), that the weights are bounded, \(|\omega (i, \ell _k)| \le \Delta \), for some \(\Delta >0\), that \(\ell _k\rightarrow \infty \), that \(\omega (i, \ell _k) \rightarrow 1\) for each i, and that \(\ell _k/k^{1/4}\rightarrow 0\). Then, \(\hat{\bar{\sigma }}_{0k}^2/\bar{\sigma }_{0k}^2 {\mathop {\rightarrow }\limits ^{\mathbb {P}}} 1\).

Corollary 2

Suppose that the assumptions in Proposition 3 hold. Then \(\mathcal {T}_k/(\sqrt{k}\hat{\bar{\sigma }}_{0k})\overset{\mathcal {D}}{\rightarrow } Z.\)

Remark 8

Notice that to derive the above results no assumption has been done on the sample size n (apart from \(n \ge 4\)); hence they are valid whether n remains bounded or increases with k at any rate.

Let \(\alpha \in (0,1)\). For testing \(H_{0,k}\) vs \(H_{1,k}\), in the setting (13), we consider the test that rejects the null when

From Corollary 2, it has asymptotic level \(\alpha \).

Remark 9

The condition \(\ell _k/k^{1/4}\rightarrow 0\) can be relaxed in the sense that \(\ell _k\) can be chosen larger (see Andrews (1988), Andrews (1991)). Nevertheless, in our simulations we found no practical advantage when taking larger values of \(\ell _k\).

Remark 10

Theorem 1 gives a Berry-Esseen type bound for the normal approximation in the independent setting (5) and Remark 4 gives the expression of the one-term Edgeworth expansion. We are only aware of Berry-Essen type bounds for stationary sequences of weakly dependent data (see, e.g. Rio 1996, Bentkus et al. 1997, Jirak 2016), and the same apply to Edgeworth expansions (see, e.g. Lahiri 2010), which are more involved than in the independent setting. Recall that in our setting, the means of the components of the sequence \(X_1, \ldots , X_k\) may differ, therefore the sequences we are dealing with are not stationary, and hence the aforementioned bounds and expansions do not apply.

To study the power, as in the independence setting (5), we will assume that \(X_1, \ldots , X_r\) have Poisson laws, while the rest of the data have alternative distributions. Let

Theorem 6

Suppose that (13) holds, that \(\{X_i\}_{i\in \mathbb {Z}}\) has \(\phi \)-mixing coefficients of size \(-v/2(v-1)\) or \(\alpha \)-mixing coefficients of size \(-v/(v-2)\), for some \(v>2\), that \( X_1, \ldots , { X}_r\) have Poisson laws with \(\lambda _i\in [L_1, L_2]\), \(1 \le i \le r\), for some \(r<k\), that \( X_{r+1}, \ldots , { X}_k\) have alternative distributions satisfying \(\mathbb {E}(|\sqrt{n}(\hat{\theta }_i-\theta _i)|^{2+\delta }) \le A < \infty \), \(r+1 \le i \le k\), for some constants \(A, \, \delta >0\), and that \(\bar{\sigma }_{k}^2>0\) for all k sufficiently large. Then,

Remark 11

The observation in Remark 5, made for the setting (5), also applies here in the setting (13).

The next proposition gives the expectation and the limit in probability of \(\hat{\bar{\sigma }}_{0k}^2\) under alternatives.

Proposition 4

Suppose that (13) holds, that \(\{X_i\}_{i\in \mathbb {Z}}\) has \(\phi \)-mixing coefficients of size \(-v/(v-1)\) or \(\alpha \)-missing coefficients of size \(-2v/(v-2)\), for some \(v>2\), that \( X_1, \ldots , { X}_r\) have Poisson laws with \(\lambda _i\in [L_1, L_2]\), \(1 \le i \le r\), for some \(r<k\), that \( X_{r+1}, \ldots , { X}_k\) have alternative distributions satisfying \(\mathbb {E}(|\sqrt{n}(\hat{\theta }_i-\theta _i)|^{2+\delta }) \le A< \infty \), \(r+1 \le i \le k\), for some positive constants \(A, \, \delta \), that that \(\theta _i \le \Theta \), \(\forall i\), for some positive constant \(\Theta \), that \(|\omega (i, \ell _k)| \le \Delta \), for some \(\Delta >0\), that \(\ell _k\rightarrow \infty \), that \(\omega (i, \ell _k) \rightarrow 1\) for each i, that \(\ell _k/k^{1/4}\rightarrow 0\), that \(n/\sqrt{k}\) is a bounded quantity, at least for large k, and that \(\bar{\sigma }_{k}>0\) for all k sufficiently large. Then,

where \(\bar{\theta }=(1/k)\sum _{i=1}^k\theta _i\) and \(r_k\rightarrow 0\) as \(k\rightarrow \infty \). Assume also that \((1/n)\mathbb {E}\left( \hat{\bar{\sigma }}_{0k}^2\right) \ge a> 0\), at least for large k. Then,

Finally, from Theorem 6 and Proposition 4, the power of the test (14) can be approximated by means of

where

In particular, the test is consistent against alternatives for which \(\mathrm{coc.d}_{1}\) remains bounded and \(\mathrm{coc.d}_2\rightarrow \infty \).

7.1 Finite sample results for simulated data

We conducted two simulations experiments, in order to assess the finite sample performance of the test in (14) under the null hypothesis (level) and under alternatives (power). Next we describe those experiments and summarize their outputs.

Experiment to study the level To generate a sequence of k dependent Poisson data, we first generated \(k+2m\) independent Poisson data, and then calculated the moving sums of each \(2m+1\) consecutive elements. To generate the independent Poisson data, we considered the following three cases:

-

Case inc: the means of the data form an increasing sequence from 0.25 to 1

-

Case ran: the means of the data are a random sample from a uniform law in the interval (0.25, 1).

-

Case alt: the means of the data form a sequence with the first and third quarters equal to 0.25, and the remaining equal to 1.

We tried \(m=2,3\), the case \(m=3\) inducing a stronger dependence compared to \(m=2\). As for \(\ell _k\), we took \(\xi \lceil \log (k) \rceil \), with \(\xi =1,2,3\), where \(\lceil x \rceil \) is the smaller integer greater than or equal to x. This ensures in particular \(\ell _k=o(k^{1/4})\), as requested in Proposition 3. We considered several weights \(\omega (i, \ell _k)\). Specifically we considered the weights generated by the following kernels (see, for example, Andrews 1991)

-

Truncated kernel, which gives \(\omega _T(i, \ell _k)=1\), \(1\le i \le \ell _k\),

-

Bartlett kernel, which gives \(\omega _B(i, \ell _k)=1-i/(\ell _k+1)\), \(1\le i \le \ell _k\),

-

Tukey-Hanning kernel, which gives \(\omega _{TH}(i, \ell _k)=0.5\{1+\cos (\pi \,i/\ell _k)\}\), \(1\le i \le \ell _k\),

-

Parzen kernel, which gives \(\omega _P(i, \ell _k)=1-6(i/\ell _k)^2+6(i/\ell _k)^3\), for \(1\le i \le \ell _k/2\), and \(\omega (i, \ell _k)=2(1-i/\ell _k)^3\), for \( \ell _k/2< i \le \ell _k\).

The weights \(\omega _T\) and \(\omega _P\) do not always generate positive variance estimators in finite samples. In our simulations we got negative variance estimators in many instances when using \(\omega _T\), and in less cases with \(\omega _P\) (only for \(k=50\)). As for the other weights, with the use of \(\omega _B\) we got, in general, level results closer to the nominal values than those given by \(\omega _{TH}\). Because of these reasons, we will only show up the results for \(\omega _B\). Table 1 in the SM displays the percentages of p-values less than or equal to 0.05 and 0.10, which are the estimated type I error probabilities for nominal significance level \(\alpha \)=0.05 and 0.10, respectively. Each of those percentages were calculated over \(R=10,000\) runs. Looking at this table we see that, in contrast to the independent case, here the sample size has an effect on the closeness of the actual level to the nominal value; in general, better results (in the sense of getting closer values) are obtained for \(n=10,15\), than for \(n=5\). As expected from the theory, as k increases, the observed levels stick to the nominal values.

Experiment to study the power To generate a sequence of dependent data, we proceed as before: first generate \(k+2m\) independent data, and then calculate the moving sums of each \(2m+1\) consecutive elements; the difference lies in the way of generating the independent data: the first 100p% come from an alternative law, and the remaining 100\((1-p)\)% come form a Poisson law with the same mean \(\mu \) as the alternative, for \(p=0.2, \, 0.4\). The considered alternatives were

-

ALTD1: a zero inflated Poisson distribution, \(0.2\times 0+0.8\times Pois(2)\), \(\mu =1.6\),

-

ALTD2: a binomial distribution, Bin(5, 0.3), \(\mu =1.5\),

-

ALTD3: a negative binomial distribution, BN(2, 5/7), \(\mu =0.8\).

Because of the same reasons given for the level, we will only show up the results for \(\omega _B\). Table 2 in the SM displays them. Looking at this table, the same conclusions given for the independent case apply here.

8 Real data illustration

In order to illustrate the proposed test, we consider the results of the MAQC-2 mRNA-Seq experiment in Bullard et al. (2010). In this experiment, two types of biological samples (Brain and UHR) were analyzed, each using \(n=7\) lanes. As a result, seven sequences of read counts were obtained for each group along \(k=52,580\) genes. In sequencing experiments, the Poisson has been suggested as a suitable model for the read counts; see for instance Lander and Waterman (1988). In this setting, the Poisson distribution is often used to calculate p-values from the read counts, so genetic disorders or other features can be detected (Jiménez-Otero et al. 2019). Therefore, verification of this assumption is important in practice in order to validate the Poisson-based inference.

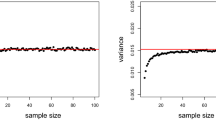

The null hypothesis of Poissonity was tested separately for the two biological samples by applying criterion (14). We used the weigths \(\omega _B(i,\ell _k)\) with \(\ell _k=11\), 22 and 33, corresponding to the choice \(\ell _k=\lceil \xi \log (k) \rceil \), with \(\xi =1,2,3\) respectively. The p-values were very small for the Brain group (\(2.36\times 10^{-9}\), \(3.63\times 10^{-9}\) and \(5.29\times 10^{-9}\)), leading to the rejection of the Poisson model. The p-value obtained for the UHR type was 0.076 for the three choices of \(\ell _k\). The very small p-values obtained for the Brain group can be explained from the overdispersion of the read counts. In order to see this, in Fig. 1, left, the modified BH test statistics \(n{\hat{\theta }}_i\), \(1\le i\le k\), are plotted against the log-ratio of sample variance and mean. Note that this log-ratio is zero for the Poisson model. From Fig. 1, left, it is seen that the overdispersion is clearly present for many of the genes, which report a relatively large value of the modified BH test statistic too. This figure also reveals that the modified BH statistic may be large even when mean and variance are in well agreement; this is not surprising, since the absence of overdispersion does not guarantee Poissonity. The situation is less critical for the UHR group, see Fig. 1, right; this is why the p-value is several orders of magnitude greater compared to the Brain group. In this case, the log-overdispersion rate roughly ranges between \(-2\) and 2, with only few genes reporting values larger than 2. The modified BH statistics are smaller for the UHR group too.

For comparison purposes, the p-values were re-calculated by using the tests based on the independence assumption; the results were very similar to those reported by the test for dependent data. Specifically, the p-values reported by \(T_1\) were \(1.17\times 10^{-9}\) and 0, obtained with the normal and the non-parametric bootstrap approximations, respectively, for the Brain group; and similarly 0.078 and 0.015 for the UHR group. The similarity among the results obtained with the normal approximation in each group suggests that the dependence among the read counts is not strong.

9 Concluding remarks and further research

This paper proposes and studies procedures for simultaneously testing that k samples from a count variable come from Poisson laws, which can have different means. The samples can be independent or dependent. The proposed tests are based on the BH test and allow k to increase. The normal approximation to the null distribution of the test statistics works reasonably well for large k. The tests have been shown to be consistent against a wide range of possible configurations for the alternatives, including cases where the proportion of alternative distributions goes to 0.

The methods in this paper are based on the test in Baringhaus and Henze (1992) for one sample. As seen in Subsection 2.1, there are other Poissonity tests designed for the one-sample setting. Each of them could be used to build similar procedures to those developed here. Moreover, parallel strategies could be tried for simultaneously testing that k samples come from other parametric families.

References

Anastasiou A, Barp A, Briol FX, Ebner B, Gaunt RE, Ghaderinezhad F, Gorham J, Gretton A, Ley C, Liu Q, Mackey L, Oates CJ, Reinert G, Swan Y (2023) Stein’s Method Meets Computational Statistics: A Review of Some Recent Developments. Stat Sci 38(1):120–139. https://doi.org/10.1214/22-STS863

Andrews DWK (1988) Heteroskedasticity and autocorrelation consistent covariance matrix estimation. Cowles Foundation Discussion Paper No. 877, Yale University

Andrews DWK (1991) Heteroskedasticity and autocorrelation consistent covariance matrix estimation. Econometrica 59(3):817–858. https://doi.org/10.2307/2938229

Baringhaus L, Henze N (1992) A goodness of fit test for the Poisson distribution based on the empirical generating function. Statist Probab Lett 13(4):269–274. https://doi.org/10.1016/0167-7152(92)90033-2

Bentkus V, Götze F, Tikhomirov A (1997) Berry-Esseen bounds for statistics of weakly dependent samples. Bernoulli 3(3):329–349. https://doi.org/10.2307/3318596

Betsch S, Ebner B, Nestmann F (2022) Characterizations of non-normalized discrete probability distributions and their application in statistics. Electron J Stat 16(1):1303–1329. https://doi.org/10.1214/22-ejs1983

Bhattacharya RN, Ranga Rao R (1976) Normal approximation and asymptotic expansions. John Wiley & Sons

Bullard JH, Purdom E, Hansen KD, Dudoit S (2010) Evaluation of statistical methods for normalization and differential expression in MRNA-seq experiments. BMC Bioinformatics 11(94):1–13

Gaigall D (2021) On a new approach to the multi-sample goodness-of-fit problem. Commun Stat Simul Comput 50(10):2971–2989. https://doi.org/10.1080/03610918.2019.1618472

Gürtler N, Henze N (2000) Recent and classical goodness-of-fit tests for the Poisson distribution. J Statist Plan Inference 90(2):207–225. https://doi.org/10.1016/S0378-3758(00)00114-2

Hall P (1992) The bootstrap and Edgeworth expansion. Springer-Verlag. https://doi.org/10.1007/978-1-4612-4384-7

Henze N (1996) Empirical-distribution-function goodness-of-fit tests for discrete models. Canad J Statist 24(1):81–93. https://doi.org/10.2307/3315691

Janssen A (2000) Global power functions of goodness of fit tests. Ann Statist 28(1):239–253. https://doi.org/10.1214/aos/1016120371

Jiménez-Gamero MD (2023) Testing normality of a large number of populations. Statist Pap. https://doi.org/10.1007/s00362-022-01384-y

Jiménez-Gamero MD, Alba-Fernández MV (2019) Testing for the Poisson-Tweedie distribution. Math Comput Simul 164:146–162. https://doi.org/10.1016/j.matcom.2018.08.001

Jiménez-Gamero MD, Batsidis A (2017) Minimum distance estimators for count data based on the probability generating function with applications. Metrika 80(5):503–545. https://doi.org/10.1007/s00184-017-0614-3

Jiménez-Gamero MD, Batsidis A, Alba-Fernández MV (2016) Fourier methods for model selection. Ann Inst Statist Math 68(1):105–133. https://doi.org/10.1007/s10463-014-0491-8

Jiménez-Otero N, de Uña-Álvarez J, Pardo-Fernández JC (2019) Goodness-of-fit tests for disorder detection in NGS experiments. Biom J 61(2):424–441. https://doi.org/10.1002/bimj.201700284

Jirak M (2016) Berry-Esseen theorems under weak dependence. Ann Probab 44(3):2024–2063. https://doi.org/10.1214/15-AOP1017

Klar B (1999) Goodness-of-fit tests for discrete models based on the integrated distribution function. Metrika 49(1):53–69. https://doi.org/10.1007/s001840050025

Lahiri SN (2010) Edgeworth expansions for Studentized statistics under weak dependence. Ann Statist 38(1):388–434. https://doi.org/10.1214/09-AOS722

Lander ES, Waterman MS (1988) Genomic mapping by fingerprinting random clones: a mathematical analysis. Genomics 2(3):231–239. https://doi.org/10.1016/0888-7543(88)90007-9

Liu RY (1988) Bootstrap procedures under some Non-I.I.D. models. Ann Statist 16(4):1696–1708. https://doi.org/10.1214/aos/1176351062

Mijburgh PA, Visagie IJH (2020) An overview of goodness-of-fit tests for the poisson distribution. South Afr Statist J 54(2):207–230

Nakamura M, Pérez-Abreu V (1993) Empirical probability generating function: an overview. Insur Math Econom 12(3):287–295. https://doi.org/10.1016/0167-6687(93)90239-L, https://www.sciencedirect.com/science/article/pii/016766879390239L

Nakamura M, Pérez-Abreu V (1993) Use of an empirical probability generating function for testing a Poisson model. Canad J Statist 21(2):149–156. https://doi.org/10.2307/3315808

Newey WK, West KD (1987) A simple, positive semi-definite, heteroskedasticity and autocorrelation consistent covariance matrix. Econometrica 55(3):703–708. https://doi.org/10.2307/1913610

Novoa-Muñoz F, Jiménez-Gamero MD (2016) A goodness-of-fit test for the multivariate Poisson distribution. SORT 40(1):113–138, https://raco.cat/index.php/SORT/article/view/310071,

Novoa-Muñoz F, Jiménez-Gamero MD (2014) Testing for the bivariate Poisson distribution. Metrika 77(6):771–793. https://doi.org/10.1007/s00184-013-0464-6

Puig P, Weiss CH (2020) Some goodness-of-fit tests for the Poisson distribution with applications in biodosimetry. Comput Statist Data Anal 144(106878):12. https://doi.org/10.1016/j.csda.2019.106878

R Core Team (2020) R: A language and environment for statistical computing. R Foundation for statistical computing, Vienna, Austria, https://www.R-project.org/

Rio E (1996) Sur le théorème de Berry-Esseen pour les suites faiblement dépendantes. Probab Theory Related Fields 104(2):255–282. https://doi.org/10.1007/BF01247840

Rueda R, O’Reilly F (1999) Tests of fit for discrete distributions based on the probability generating function. Comm Statist Simul Comput 28(1):259–274. https://doi.org/10.1080/03610919908813547

Serfling RJ (2009) Approximation theorems of mathematical statistics, vol 162. John Wiley & Sons, New York

Székely GJ, Rizzo ML (2004) Mean distance test of Poisson distribution. Statist Probab Lett 67(3):241–247. https://doi.org/10.1016/j.spl.2004.01.005

White H (2001) Asymptotic theory for econometricians. Academic press

Acknowledgements

The authors thank two anonymous referees for their constructive comments and suggestions which helped to improve the presentation. The authors are supported by grant PID2020-118101GB-I00, Ministerio de Ciencia e Innovación (MCIN/ AEI /10.13039/501100011033).

Funding

Funding for open access publishing: Universidad de Sevilla/CBUA.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jiménez-Gamero, M.D., de Uña-Álvarez, J. Testing Poissonity of a large number of populations. TEST 33, 81–105 (2024). https://doi.org/10.1007/s11749-023-00883-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11749-023-00883-w