Abstract

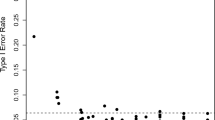

In this paper we consider testing the equality of the probability vectors of two independent multinomial distributions in high dimension. The classical chi-square test may perform poorly in this setting, since many of the cell counts may be zero or too small. We propose a new test and establish its asymptotic normality and its asymptotic power function. Based on the asymptotic power function, we present an application of our result to a neighborhood-type test which has been previously studied, especially for the case of fairly small p values. To compare the proposed test with existing tests, we provide numerical studies including simulations and real data examples.

Appendix: Proof of Lemma 1

We show the ratio consistency of \(\hat{\sigma }_k^2\). Since \(n_1 \asymp n_2\) and \(\sigma _k^2 \asymp n^{-2} ||\mathbf{P}_1 + \mathbf{P}_2||^2_2\), it is sufficient to show

We first show the ratio consistency of \(\sum _{i=1}^{k}\left( {\hat{p}}_{1i}^2 -\frac{{\hat{p}}_{1i}}{n_1}\right) \) for \(\sum _{i=1}^{k}p_{1i}^2\). The case of the second group (\(\sum _{i=1}^{k}\left( {\hat{p}}_{2i}^2 -\frac{{\hat{p}}_{2i}}{n_2}\right) \) for \(\sum _{i=1}^{k}p_{2i}^2\)) can be proved similarly. Since \(E({\hat{p}}_{1i} ^2) = p_{1i}^2 + \frac{p_{1i}(1-p_{1i})}{n_1} = (1-\frac{1}{n_1})p_{1i}^2 + \frac{p_{1i}}{n_1}\) where \({\hat{p}}_{1i} = \frac{N_{1i}}{n_1}\), we have \(E \left( \frac{n_1}{n_1-1}({\hat{p}}_{1i}^2 - \frac{{\hat{p}}_{1i}}{n_1}) \right) = p_{1i}^2\). Thus we consider the following unbiased estimator of \(\sum _{i=1}^k p_{1i}^2\): \(\frac{n_1}{n_1-1}\sum _{i=1}^k({\hat{p}}_{1i}^2 - \frac{{\hat{p}}_{1i}}{n_1})\). To show \(\frac{\frac{n_1}{n_1-1}\sum _{i=1}^k({\hat{p}}_{1i}^2 - \frac{{\hat{p}}_{1i}}{n_1}) - \sum _{i=1}^{k}p^2_{1i} }{||\mathbf{P}_1 + \mathbf{P}_2||^2_2} \overset{p}{\rightarrow }0\), we show that the following quantity converges to 0:

where the last inequality in (26) is from \(Var(X+Y) \le 2(Var(X)+Var(Y))\) and \(n_1/(n_1-1) \le 2\). We decompose (I) into two parts:

Using the results of Lemma S2 in the supplementary material, for some constants \(C_1\) and \(C_2\), we have

For the terms above, we can show \(\frac{(A)}{||\mathbf{P}_1 + \mathbf{P}_2||_2^{4}} \rightarrow 0\) and \(\frac{(B)}{||\mathbf{P}_1 + \mathbf{P}_2||_2^{4}} \rightarrow 0\) as follows. First, note that \( \frac{\max _i p_{ci}^2}{||\mathbf{P}_1 + \mathbf{P}_2||^2_2} \rightarrow 0\) since \( \frac{\max _i p_{ci}^2}{||\mathbf{P}_1 + \mathbf{P}_2||^2_2} \le \frac{\max _i p_{ci}}{||\mathbf{P}_c ||_2^2} \rightarrow 0\) from Condition 2 in Theorem 1. For (A), using \(\max _i p_{1i}^2 \le \max _i p_{1i} \rightarrow 0\) in result 2 of Lemma S2 in the supplementary material, we have

where Condition 3 (\( n||\mathbf{P}_1 + \mathbf{P}_2||^2_2\ge \epsilon >0 \)) and \(n_1 \asymp n_2 \) are used in the last steps as \(n_1 \rightarrow \infty \). For (B), using \(\sum _{i\ne j} p_{1i}^2 p_{1j}^2 \le ||\mathbf{P}_1 + \mathbf{P}_2||^2_2\) and \(\sum _{i\ne j} p_{1i} p_{1j} \le \sum _{i=1}^k p_{1i} =1\), we have from Conditions 1–3 in Theorem 1

Similarly, for (II), we have

Therefore, we have \(\frac{(II)}{n_1^3||\mathbf{P}_1 + \mathbf{P}_2||_2^{4}} \le \frac{1}{n_1^3||\mathbf{P}_1 + \mathbf{P}_2||_2^{4}} \rightarrow 0\), which leads to

The ratio consistency of \(\sum _{i=1}^{k}\left( {\hat{p}}_{2i}^2 - \frac{{\hat{p}}_{2i}}{n_2}\right) \) for \(\sum _{i=1}^{k}p_{2i}^2\) can also be proved in the same way.
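The unbiasedness identity behind this estimator can be checked numerically. The following is a minimal sketch, not the authors' code: the function name `sum_sq_estimator`, the Dirichlet-generated probability vector, and the values of `k`, `n1` and the number of replications are all illustrative assumptions. It compares the bias-corrected estimator \(\frac{n_1}{n_1-1}\sum _{i=1}^k({\hat{p}}_{1i}^2 - \frac{{\hat{p}}_{1i}}{n_1})\) with the naive plug-in \(\sum _{i=1}^k {\hat{p}}_{1i}^2\) in a sparse setting where most cell counts are zero.

```python
import numpy as np


def sum_sq_estimator(counts):
    """Bias-corrected estimator of sum_i p_i^2 from multinomial counts.

    `counts` holds one count vector (1-D) or one count vector per row (2-D).
    Implements n/(n-1) * sum_i (p_hat_i^2 - p_hat_i / n) with p_hat_i = N_i / n.
    """
    counts = np.asarray(counts, dtype=float)
    n = counts.sum(axis=-1, keepdims=True)      # sample size of each vector
    p_hat = counts / n                          # cell proportions
    correction = n[..., 0] / (n[..., 0] - 1.0)  # the factor n / (n - 1)
    return correction * np.sum(p_hat**2 - p_hat / n, axis=-1)


# Hypothetical sparse setting: many cells, few observations per sample.
rng = np.random.default_rng(0)
k, n1, reps = 500, 50, 5000
p1 = rng.dirichlet(np.ones(k))      # an arbitrary probability vector
target = np.sum(p1**2)              # the quantity being estimated

draws = rng.multinomial(n1, p1, size=reps)           # shape (reps, k)
estimates = sum_sq_estimator(draws)                  # one estimate per sample
naive = np.mean(np.sum((draws / n1) ** 2, axis=1))   # plug-in sum of p_hat^2

print("target:", target)
print("mean of bias-corrected estimates:", estimates.mean())
print("mean of naive plug-in estimates:", naive)
```

In this setting the average of the bias-corrected estimates should sit close to the target, while the plug-in average is inflated by roughly \(1/n_1\), which is the term the correction \(-{\hat{p}}_{1i}/n_1\) removes.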

For \(\sum _{i=1}^{k}{\hat{p}}_{1i}{\hat{p}}_{2i}\), we show

where the last term converges to 0 since \(\frac{ (\mathbf{P}_1\cdot \mathbf{P}_2)^2 }{n||\mathbf{P}_1 + \mathbf{P}_2||_2^{4}} \le \frac{||\mathbf{P}_1 + \mathbf{P}_2||^2_2}{2n||\mathbf{P}_1 + \mathbf{P}_2||_2^{4}} = \frac{1}{2n||\mathbf{P}_1 + \mathbf{P}_2||^2_2} \rightarrow 0\) from Condition 3 in Theorem 1. Therefore

Combining (27) and (28), we obtain (25), which leads to the ratio consistency of \(\hat{\sigma }_k^2\).
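The bound \((\mathbf{P}_1\cdot \mathbf{P}_2)^2 \le \frac{1}{2}||\mathbf{P}_1 + \mathbf{P}_2||^2_2\) used for the cross term is stated without justification. One way to see it, sketched here under the sole assumption that \(\mathbf{P}_1\) and \(\mathbf{P}_2\) are probability vectors (nonnegative entries summing to 1), is the following:

\[
\begin{aligned}
\mathbf{P}_1\cdot \mathbf{P}_2 &= \sum _{i=1}^{k} p_{1i}p_{2i} \le \max _i p_{2i} \sum _{i=1}^{k} p_{1i} \le 1
\quad \Rightarrow \quad (\mathbf{P}_1\cdot \mathbf{P}_2)^2 \le \mathbf{P}_1\cdot \mathbf{P}_2, \\
||\mathbf{P}_1 + \mathbf{P}_2||^2_2 &= ||\mathbf{P}_1||^2_2 + ||\mathbf{P}_2||^2_2 + 2\,\mathbf{P}_1\cdot \mathbf{P}_2
\ge 2\,||\mathbf{P}_1||_2\,||\mathbf{P}_2||_2 + 2\,\mathbf{P}_1\cdot \mathbf{P}_2 \ge 4\,\mathbf{P}_1\cdot \mathbf{P}_2 .
\end{aligned}
\]

Hence \((\mathbf{P}_1\cdot \mathbf{P}_2)^2 \le \mathbf{P}_1\cdot \mathbf{P}_2 \le \frac{1}{4}||\mathbf{P}_1 + \mathbf{P}_2||^2_2 \le \frac{1}{2}||\mathbf{P}_1 + \mathbf{P}_2||^2_2\), where the second display uses the arithmetic-geometric mean inequality followed by the Cauchy-Schwarz inequality.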

Cite this article

Plunkett, A., Park, J. Two-sample test for sparse high-dimensional multinomial distributions. TEST 28, 804–826 (2019). https://doi.org/10.1007/s11749-018-0600-8

DOI: https://doi.org/10.1007/s11749-018-0600-8