Abstract

Purpose

Surgical workflow recognition has emerged as an important, and very challenging, part of computer-assisted intervention systems for the modern operating room. Although CNN-based approaches achieve excellent performance, they do not learn global and long-range semantic interactions well because of the inductive bias inherent in convolution.

Methods

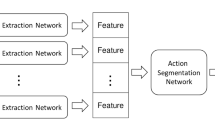

In this paper, we propose a temporal-based Swin Transformer network (TSTNet) for surgical video workflow recognition. TSTNet has two main parts: a Swin Transformer and an LSTM. The Swin Transformer uses the attention mechanism to encode long-range dependencies and learn highly expressive representations. The LSTM, which can learn long-range dependencies across a sequence, extracts temporal information. TSTNet combines the two components to extract spatiotemporal features that carry more contextual information. In addition, drawing on the natural characteristics of surgical video, we propose a priori revision algorithm (PRA) that uses prior information about the order of the surgical phases. This strategy refines the output of TSTNet and further improves recognition performance.
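The abstract does not specify how PRA works, so the following is only a minimal sketch of the general idea of revising per-frame phase predictions with a phase-order prior. The function name `revise_with_prior`, the `allowed_next` transition table, the `min_run` parameter, and the four-phase example are all assumptions introduced for illustration; they are not taken from the paper and should not be read as the published algorithm.

```python
from typing import Dict, List, Optional, Set


def revise_with_prior(
    predictions: List[int],
    allowed_next: Dict[int, Set[int]],
    min_run: int = 2,
) -> List[int]:
    """Revise per-frame phase predictions using a phase-order prior.

    Hypothetical rule (an assumption, not the published PRA): a switch from
    the current phase to a new phase is accepted only if the new phase is
    listed in allowed_next[current] and has been predicted for min_run
    consecutive frames. Any other prediction is overwritten by the current
    phase.
    """
    if not predictions:
        return []

    current = predictions[0]
    revised = [current]
    candidate: Optional[int] = None  # phase we are considering switching to
    run = 0                          # consecutive frames voting for candidate

    for pred in predictions[1:]:
        if pred == current:
            # Prediction agrees with the current phase: drop any candidate.
            candidate, run = None, 0
        elif pred in allowed_next.get(current, set()):
            # Plausible transition: count consecutive supporting frames.
            if pred == candidate:
                run += 1
            else:
                candidate, run = pred, 1
            if run >= min_run:
                current = pred
                candidate, run = None, 0
        else:
            # Transition contradicts the prior: ignore it.
            candidate, run = None, 0
        revised.append(current)

    return revised


# Hypothetical strictly linear order over four phases (0 -> 1 -> 2 -> 3).
linear_order = {0: {1}, 1: {2}, 2: {3}, 3: set()}
raw = [0, 0, 3, 0, 1, 1, 1, 0, 2, 2, 2]
print(revise_with_prior(raw, linear_order))
```

In this sketch the isolated jump to phase 3 and the backward jump to phase 0 are suppressed, at the cost of delaying each accepted transition by `min_run - 1` frames. The rule only looks at past frames, so it could run online, but whether the authors' PRA is online or offline is not stated in the abstract.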

Results

We conduct extensive experiments on the Cholec80 dataset to validate the effectiveness of the TSTNet-PRA method. Our method achieves an accuracy of up to 92.8% on Cholec80, exceeding state-of-the-art methods.

Conclusion

We propose the TSTNet-PRA method, which models long-range temporal information and multi-scale visual information. Evaluated on a large public dataset, it shows a recognition capability superior to that of other spatiotemporal networks.

Acknowledgements

Funding from the Key Industry Innovation Chain of Shaanxi (Grant No. 2022ZDLSF04-05) is gratefully acknowledged.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethics approval

For this type of research, formal consent is not required.

Informed consent

This article contains patient data from publicly available datasets.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Pan, X., Gao, X., Wang, H. et al. Temporal-based Swin Transformer network for workflow recognition of surgical video. Int J CARS 18, 139–147 (2023). https://doi.org/10.1007/s11548-022-02785-y

DOI: https://doi.org/10.1007/s11548-022-02785-y