Abstract

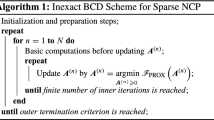

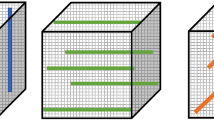

Nonnegative tensor decomposition has become increasingly important for multiway data analysis in recent years. The alternating proximal gradient (APG) is a popular optimization method for nonnegative tensor decomposition in the block coordinate descent framework. In this study, we propose an inexact version of the APG algorithm for nonnegative CANDECOMP/PARAFAC decomposition, wherein each factor matrix is updated by only finite inner iterations. We also propose a parameter warm-start method that can avoid the frequent parameter resetting of conventional APG methods and improve convergence performance. By experimental tests, we find that when the number of inner iterations is limited to around 10 to 20, the convergence speed is accelerated significantly without losing its low relative error. We evaluate our method on both synthetic and real-world tensors. The results demonstrate that the proposed inexact APG algorithm exhibits outstanding performance on both convergence speed and computational precision compared with existing popular algorithms.

Similar content being viewed by others

References

Cichocki A, Mandic D, de Lathauwer L, et al. Tensor decompositions for signal processing applications: From two-way to multiway component analysis. IEEE Signal Process Mag, 2015, 32: 145–163

Sidiropoulos N D, de Lathauwer L, Fu X, et al. Tensor decomposition for signal processing and machine learning. IEEE Trans Signal Process, 2017, 65: 3551–3582

Xu Y, Yin W. A block coordinate descent method for regularized multi-convex optimization with applications to nonnegative tensor factorization and completion. SIAM J Imag Sci, 2013, 6: 1758–1789

Kim J, He Y, Park H. Algorithms for nonnegative matrix and tensor factorizations: a unified view based on block coordinate descent framework. J Glob Optim, 2014, 58: 285–319

Zhang Y, Zhou G, Zhao Q, et al. Fast nonnegative tensor factorization based on accelerated proximal gradient and low-rank approximation. Neurocomputing, 2016, 198: 148–154

Cichocki A, Zdunek R, Phan A H, et al. Nonnegative Matrix and Tensor Factorizations: Applications to Exploratory Multi-way DATA ANAlysis and Blind Source Separation. Hoboken: John Wiley & Sons, 2009

Cichocki A, Phan A H. Fast local algorithms for large scale nonnegative matrix and tensor factorizations. IEICE Trans Fundamentals, 2009, 92: 708–721

Huang K, Sidiropoulos N D, Liavas A P. A flexible and efficient algorithmic framework for constrained matrix and tensor factorization. IEEE Trans Signal Process, 2016, 64: 5052–5065

Nesterov Y E. A method of solving a convex programming problem with convergence rate \(o\left( {{1 \over {{k^2}}}} \right)\). Soviet Math Dokl, 1983, 27: 372–376

Toh K C, Yun S. An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pacific J Optim, 2010, 6: 15

Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J Imag Sci, 2009, 2: 183–202

Li H, Lin Z. Accelerated proximal gradient methods for nonconvex programming. In: Proceedings of Advances in Neural Information Processing Systems 28. Montreal, 2015. 379–387

Guan N, Tao D, Luo Z, et al. NeNMF: An optimal gradient method for nonnegative matrix factorization. IEEE Trans Signal Process, 2012, 60: 2882–2898

Liavas A P, Sidiropoulos N D. Parallel algorithms for constrained tensor factorization via alternating direction method of multipliers. IEEE Trans Signal Process, 2015, 63: 5450–5463

Le H, Gillis N, Patrinos P. Inertial block proximal methods for non-convex non-smooth optimization. In: Proceedings of the 37th International Conference on Machine Learning. Vienna, 2020. 5671–5681

Nazih M, Minaoui K, Comon P. Using the proximal gradient and the accelerated proximal gradient as a canonical polyadic tensor decomposition algorithms in difficult situations. Signal Process, 2020, 171: 107472

Mitchell D, Ye N, de Sterck H. Nesterov acceleration of alternating least squares for canonical tensor decomposition: Momentum step size selection and restart mechanisms. Numer Linear Algebra Appl, 2020, 27: e2297

Liavas A P, Kostoulas G, Lourakis G, et al. Nesterov-based alternating optimization for nonnegative tensor factorization: Algorithm and parallel implementation. IEEE Trans Signal Process, 2018, 66: 944–953

Vervliet N, Lathauwer L D. Numerical optimization-based algorithms for data fusion. Data Handling Sci Tech, 2019, 31: 81–128

Shi Q, Sun H, Lu S, et al. Inexact block coordinate descent methods for symmetric nonnegative matrix factorization. IEEE Trans Signal Process, 2017, 65: 5995–6008

Yang Y, Pesavento M, Luo Z Q, et al. Inexact block coordinate descent algorithms for nonsmooth nonconvex optimization. IEEE Trans Signal Process, 2020, 68: 947–961

Udell M, Horn C, Zadeh R, et al. Generalized low rank models. Found Trends Mach Learn, 2016, 9: 1–118

Bottou L, Curtis F E, Nocedal J. Optimization methods for large-scale machine learning. SIAM Rev, 2018, 60: 223–311

Gillis N, Glineur F. Accelerated multiplicative updates and hierarchical ALS algorithms for nonnegative matrix factorization. Neural Comput, 2012, 24: 1085–1105

Jiang K, Sun D, Toh K C. An inexact accelerated proximal gradient method for large scale linearly constrained convex SDP. SIAM J Optim, 2012, 22: 1042–1064

Wang C, Xu A. An inexact accelerated proximal gradient method and a dual Newton-CG method for the maximal entropy problem. J Optim Theor Appl, 2013, 157: 436–450

Liu H, Song Z. An inexact continuation accelerated proximal gradient algorithm for lown-rank tensor recovery. Int J Comput Math, 2014, 91: 1574–1592

Acar E, Dunlavy D M, Kolda T G. A scalable optimization approach for fitting canonical tensor decompositions. J Chemometr, 2011, 25: 67–86

Xu Y. Alternating proximal gradient method for sparse nonnegative Tucker decomposition. Math Prog Comp, 2015, 7: 39–70

Lin C J. Projected gradient methods for nonnegative matrix factorization. Neural Comput, 2007, 19: 2756–2779

Monga V, Li Y, Eldar Y C. Algorithm unrolling: Interpretable, efficient deep learning for signal and image processing. IEEE Signal Process Mag, 2021, 38: 18–44

Wang D, Zhu Y, Ristaniemi T, et al. Extracting multi-mode ERP features using fifth-order nonnegative tensor decomposition. J Neurosci Methods, 2018, 308: 240–247

Bertsekas D P. Nonlinear Programming. 3rd ed. Belmont: Athena Scientific, 2016

Xu Y, Yin W. A globally convergent algorithm for nonconvex optimization based on block coordinate update. J Sci Comput, 2017, 72: 700–734

Bader B W, Kolda T G, et al. Matlab tensor toolbox version 2.6. 2015. https://old-www.sandia.gov/tgkolda/TensorToolbox/index-2.6.html

Lawaetz A J, Bro R, Kamstrup-Nielsen M, et al. Fluorescence spectroscopy as a potential metabonomic tool for early detection of colorectal cancer. Metabolomics, 2012, 8: 111–121

Mørup M, Hansen L K, Arnfred S M. ERPWAVELAB: A toolbox for multi-channel analysis of time-frequency transformed event related potentials. J Neurosci Methods, 2007, 161: 361–368

Nascimento SMC, Amano K, Foster D H. Spatial distributions of local illumination color in natural scenes. Vision Res, 2016, 120: 39–44

Shi Q, Hong M. Penalty dual decomposition method for nonsmooth nonconvex optimization-Part I: Algorithms and convergence analysis. IEEE Trans Signal Process, 2020, 68: 4108–4122

Author information

Authors and Affiliations

Corresponding authors

Additional information

This work was supported by the National Natural Science Foundation of China (Grant No. 91748105), the National Foundation in China (Grant Nos. JCKY2019110B009 and 2020-JCJQ-JJ-252), the Fundamental Research Funds for the Central Universities (Grant Nos. DUT20LAB303 and DUT20LAB308) in Dalian University of Technology in China, and the scholarship from China Scholarship Council (Grant No. 201600090043). The authors would like to thank Dr. WANG Lei and Dr. LIU YongChao for the discussion on mathematical convergence properties. This study is to memorize Prof. Tapani Ristaniemi for his great help to CONG FengYu and WANG DeQing. Prof. Tapani Ristaniemi supervised this work.

Rights and permissions

About this article

Cite this article

Wang, D., Cong, F. An inexact alternating proximal gradient algorithm for nonnegative CP tensor decomposition. Sci. China Technol. Sci. 64, 1893–1906 (2021). https://doi.org/10.1007/s11431-020-1840-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11431-020-1840-4