Abstract

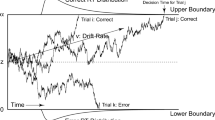

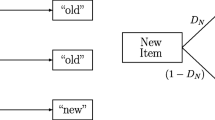

This article presents a joint modeling framework for ordinal responses and response times (RTs) in the measurement of latent traits. We integrate cognitive theories of decision-making and confidence judgments with psychometric theories to model individual-level measurement processes. Model development starts from the sequential sampling framework, which assumes that when an item is presented, a respondent accumulates noisy evidence over time in order to respond to the item. Several cognitive and psychometric theories are reviewed and integrated, yielding three psychometric process models that represent the cognitive processes underlying measurement in different ways. We present simulation studies that examine parameter recovery and show the relationships between latent variables and data distributions. We further test the proposed models with empirical data measuring three motivation-related traits. All three models provide reasonably good descriptions of the observed response proportions and RT distributions. In addition, different traits favor different process models, which implies that psychological measurement processes may have heterogeneous structures across traits. Our process of model building and examination illustrates how cognitive theories can be incorporated into psychometric model development to shed light on the measurement process, which has received little attention in traditional psychometric models.
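To make the sequential sampling idea concrete, the sketch below simulates its simplest two-boundary case. Evidence starts midway between 0 and a boundary separation `a` and drifts at rate `v` with Gaussian noise. The first boundary crossed determines the response, and the crossing time plus a nondecision time `ter` gives the RT. This is a generic Euler-discretized diffusion process with assumed, illustrative parameter values. It is not one of the ordinal models proposed in the article, and the function name `simulate_trial` is hypothetical.

```python
import numpy as np

def simulate_trial(v, a, ter, rng, dt=0.001, s=1.0, max_t=10.0):
    """Simulate one trial of a two-boundary diffusion (sequential sampling) process.

    Evidence starts at a/2 and moves by v*dt plus Gaussian noise with SD
    s*sqrt(dt) per step. Returns (response, rt): response is 1 if the upper
    boundary a is crossed first, 0 if the lower boundary 0 is crossed first,
    and None if neither is reached by max_t; rt = ter + decision time.
    """
    x = a / 2.0          # unbiased starting point
    t = 0.0              # elapsed decision time
    noise_sd = s * np.sqrt(dt)
    while 0.0 < x < a and t < max_t:
        # Euler step: deterministic drift plus Gaussian noise
        x += v * dt + noise_sd * rng.standard_normal()
        t += dt
    if x >= a:
        response = 1
    elif x <= 0.0:
        response = 0
    else:
        response = None  # no boundary reached within max_t
    return response, ter + t


rng = np.random.default_rng(2023)
trials = [simulate_trial(v=1.0, a=1.5, ter=0.3, rng=rng) for _ in range(500)]
p_upper = np.mean([r == 1 for r, _ in trials])
mean_rt = np.mean([rt for _, rt in trials])
print(f"P(upper) = {p_upper:.2f}, mean RT = {mean_rt:.2f} s")
```

With an unbiased start and unit noise, the exact probability of an upper-boundary response is \(1/(1 + \exp(-v a)) \approx 0.82\) for these assumed values. The simulated proportion should approximate that figure, up to sampling and discretization error.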

Change history

20 June 2023

An Erratum to this paper has been published: https://doi.org/10.1007/s11336-023-09925-6

Notes

We use the term ‘item strength’ in a sense similar to item difficulty in IRT analyses of test data: a respondent whose latent trait value is higher than the item strength has a higher probability of endorsing the personality or attitude item. It can also be called ‘item attractiveness’ because it represents how attractive the item is.
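The analogy with item difficulty can be illustrated with a standard Wiener-process result that has been used to link diffusion models and IRT (cf. Tuerlinckx & De Boeck, 2005). Suppose the drift rate is simply the latent trait minus the item strength, \(v = \theta - b\), the diffusion coefficient is 1, and evidence starts midway between boundaries separated by \(a\). Under those assumptions, the probability of reaching the endorsement boundary is logistic in \(a(\theta - b)\). That parameterization is assumed here for illustration only and is not necessarily the one used in the proposed models. The function name is hypothetical.

```python
import math

def p_endorse(theta, b, a, s=1.0):
    """Probability that a Wiener process with drift (theta - b), starting at
    a/2 between absorbing boundaries 0 and a, is absorbed at the upper
    (endorsement) boundary. For an unbiased start this reduces to a
    logistic function of a * (theta - b) / s**2.
    """
    v = theta - b  # assumed drift: latent trait minus item strength
    return 1.0 / (1.0 + math.exp(-a * v / s**2))


# theta above b -> endorsement more likely than not; theta below b -> less likely
print(p_endorse(theta=1.0, b=0.0, a=1.5), p_endorse(theta=0.0, b=1.0, a=1.5))
```

As with item difficulty in a logistic IRT model, the endorsement probability equals 0.5 exactly when \(\theta = b\). The boundary separation \(a\) plays the role of a discrimination parameter.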

An alternative to this choice would be item-wise nondecision time. However, modeling both person-wise and item-wise nondecision times is not feasible because their effects on the outcome variables are confounded: person-wise (item-wise) nondecision time parameters can also absorb inter-item (inter-person) differences. We use person-wise nondecision time in this article because measurement data typically include more respondents than items, so models with person-wise nondecision time parameters can better decompose RTs into decision and nondecision times.
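One concrete form of this confounding appears in the simplest case. Assume, for illustration only, that person-wise and item-wise nondecision times add. Shifting every person parameter up by a constant and every item parameter down by the same constant then leaves every predicted nondecision time unchanged. The data therefore cannot separate the two sources without further constraints. The names and values below are hypothetical.

```python
import numpy as np

def nondecision_times(tau_person, tau_item):
    """Predicted nondecision time for every person-item pair under an
    assumed additive model: ter[p, i] = tau_person[p] + tau_item[i]."""
    return np.add.outer(tau_person, tau_item)


tau_person = np.array([0.30, 0.25, 0.40])  # hypothetical person-wise values (s)
tau_item = np.array([0.05, 0.10])          # hypothetical item-wise values (s)
c = 0.02                                   # arbitrary shift moved from items to persons

original = nondecision_times(tau_person, tau_item)
shifted = nondecision_times(tau_person + c, tau_item - c)

# Identical predictions: the split between person and item effects is not identified
print(np.allclose(original, shifted))
```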

The simulated respondent from the Model 3 results mentioned in the text has \(\log (\gamma _p) = 1.520\), the largest value in the simulation study. For Model 3, the data-generating \(\log (\gamma _p)\) values had a mean of \(-0.002\) and an SD of 0.498, and the second largest value was 1.162. Having more items can better constrain nondecision time parameters (Kang et al., 2022b). In particular, including an item with a very strong inclination (i.e., one whose \(b_i\) is large in absolute value, whether positive or negative) could help, because the RT for such an item is likely to be close to the respondent's minimum RT, which consists mostly of nondecision time.
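The argument about strongly inclined items can be illustrated with the standard mean decision time of a Wiener process with unit diffusion coefficient started midway between boundaries 0 and \(a\): \(E[T] = \frac{a}{2v}\tanh\!\left(\frac{a v}{2}\right)\). This quantity approaches \(a^2/4\) as \(v \to 0\) and shrinks toward 0 as \(|v|\) grows. As a simplifying assumption not taken from the proposed models, suppose the drift for a respondent on item \(i\) is \(\theta - b_i\). Then an item with a large \(|b_i|\) yields a large \(|v|\), and its expected RT sits close to the nondecision time. All names and parameter values are illustrative.

```python
import math

def mean_decision_time(v, a):
    """Mean first-passage time of a Wiener process (unit diffusion
    coefficient) started at a/2 between absorbing boundaries 0 and a."""
    if abs(v) < 1e-12:
        return a * a / 4.0  # limit as the drift goes to zero
    return (a / (2.0 * v)) * math.tanh(a * v / 2.0)


def mean_rt(theta, b, a, ter):
    """Mean RT = nondecision time + mean decision time, with the drift
    assumed (for illustration only) to be theta - b."""
    return ter + mean_decision_time(theta - b, a)


ter = 0.4
for b in [0.0, 1.0, 3.0, -3.0, 6.0]:
    # Larger |b| -> faster decisions -> mean RT closer to ter
    print(b, round(mean_rt(theta=0.0, b=b, a=1.5, ter=ter), 3))
```

The mean decision time is symmetric in the sign of the drift, so very easy and very hard items both pull the RT toward the nondecision time. For the respondent described in this note, \((1.520 - (-0.002))/0.498 \approx 3.06\), so that value lies about three SDs above the mean of the generating values.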

This was also the case in our simulation study. For example, when Model 2 was the data-generating model, Model 3 outperformed Model 2 in 3 of 25 repetitions according to DIC. In addition, there are at least two ways to compute the effective number of parameters and hence DIC (Gelman et al., 2013), and they can produce different results. For example, when Model 3 was the data-generating model, one way (Equation 7.10 in Gelman et al.) identified Model 3 as the best-fitting model in all repetitions, whereas the other (Equation 7.8 in Gelman et al.) identified Model 1 as the best-fitting model in all repetitions. Thus, DIC did not yield consistent conclusions for the proposed models.
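The two DIC variants mentioned in this note differ only in how the effective number of parameters \(p_D\) is computed. This minimal sketch reflects our reading of Gelman et al. (2013). It assumes a mean-based \(p_D = 2\left(\log p(y \mid \hat\theta) - E_{\text{post}}[\log p(y \mid \theta)]\right)\) and a variance-based \(p_D = 2\,\mathrm{var}_{\text{post}}[\log p(y \mid \theta)]\). Both are plugged into \(\mathrm{DIC} = -2 \log p(y \mid \hat\theta) + 2 p_D\), with \(\hat\theta\) the posterior mean. The function name and input values are hypothetical. In practice, the inputs would come from the total log-likelihood evaluated at each posterior draw and at the posterior mean.

```python
import numpy as np

def dic_two_ways(loglik_draws, loglik_at_post_mean):
    """Return {variant: (DIC, p_D)} for two effective-parameter definitions.

    loglik_draws: total log-likelihood log p(y | theta^(s)), one value per
        posterior draw s.
    loglik_at_post_mean: log p(y | theta_hat), theta_hat = posterior mean.
    """
    ll = np.asarray(loglik_draws, dtype=float)
    # Mean-based: p_D = 2 * (log p(y | theta_hat) - E_post[log p(y | theta)])
    p_d_mean = 2.0 * (loglik_at_post_mean - ll.mean())
    # Variance-based: p_D = 2 * Var_post[log p(y | theta)] (sample variance over draws)
    p_d_var = 2.0 * ll.var(ddof=1)
    deviance_at_mean = -2.0 * loglik_at_post_mean
    return {
        "mean": (deviance_at_mean + 2.0 * p_d_mean, p_d_mean),
        "var": (deviance_at_mean + 2.0 * p_d_var, p_d_var),
    }


res = dic_two_ways([-100.0, -102.0, -101.0, -103.0], loglik_at_post_mean=-99.0)
print(res)
```

The variance-based \(p_D\) depends on the spread of the log-likelihood draws, whereas the mean-based \(p_D\) depends on the log-likelihood at a single point estimate. This is one plausible reason the two versions can rank models with many person-level parameters differently.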

For example, the adapt_delta and max_treedepth sampler settings in Stan.

Unlike our models, Ranger and Kuhn’s (2018) model does not include a nondecision time.

References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transactions on Automatic Control, 19(6), 716–723. https://doi.org/10.1109/TAC.1974.1100705

Andrich, D. (1978). Application of a psychometric rating model to ordered categories which are scored with successive integers. Applied Psychological Measurement, 2(4), 581–594. https://doi.org/10.1177/014662167800200413

Andrich, D. (1978). A rating formulation for ordered response categories. Psychometrika, 43, 561–573. https://doi.org/10.1007/BF02293814

Baranski, J., & Petrusic, W. (1998). Probing the locus of confidence judgments: Experiments on the time to determine confidence. Journal of Experimental Psychology: Human Perception and Performance, 24(3), 929–945.

Basso, M. A., & Wurtz, R. H. (1998). Modulation of neuronal activity in superior colliculus by changes in target probability. Journal of Neuroscience, 18(18), 7519–7534. https://doi.org/10.1523/JNEUROSCI.18-18-07519.1998

Beck, J. M., Ma, W. J., Kiani, R., Hanks, T., Churchland, A. K., Roitman, J., Shadlen, M. N., Latham, P. E., & Pouget, A. (2008). Probabilistic population codes for Bayesian decision making. Neuron, 60(6), 1142–1152. https://doi.org/10.1016/j.neuron.2008.09.021

Bock, R. D., & Jones, L. V. (1968). The measurement and prediction of judgment and choice. Holden-Day.

Bollen, K., & Barb, K. H. (1981). Pearson’s r and coarsely categorized measures. American Sociological Review, 46(2), 232–239.

Bolsinova, M., De Boeck, P., & Tijmstra, J. (2017). Modelling conditional dependence between response time and accuracy. Psychometrika, 82(4), 1126–1148. https://doi.org/10.1007/s11336-016-9537-6

Bolsinova, M., & Molenaar, D. (2018). Modeling nonlinear conditional dependence between response time and accuracy. Frontiers in Psychology, 9(1525), 1–12. https://doi.org/10.3389/fpsyg.2018.01525

Bolsinova, M., & Molenaar, D. (2019). Nonlinear indicator-level moderation in latent variable models. Multivariate Behavioral Research, 54(1), 62–84. https://doi.org/10.1080/00273171.2018.1486174

Bolsinova, M., & Tijmstra, J. (2018). Improving precision of ability estimation: Getting more from response times. British Journal of Mathematical and Statistical Psychology, 71(1), 13–38. https://doi.org/10.1111/bmsp.12104

Bolsinova, M., Tijmstra, J., & Molenaar, D. (2017). Response moderation models for conditional dependence between response time and response accuracy. British Journal of Mathematical and Statistical Psychology, 70, 257–279. https://doi.org/10.1111/bmsp.12076

Bolsinova, M., Tijmstra, J., Molenaar, D., & De Boeck, P. (2017). Conditional dependence between response time and accuracy: An overview of its possible sources and directions for distinguishing between them. Frontiers in Psychology, 8, 202. https://doi.org/10.3389/fpsyg.2017.00202

Borsboom, D., Mellenbergh, G. J., & van Heerden, J. (2003). The theoretical status of latent variables. Psychological Review, 110(2), 203–219. https://doi.org/10.1037/0033-295X.110.2.203

Borsboom, D., Mellenbergh, G. J., & van Heerden, J. (2004). The concept of validity. Psychological Review, 111(4), 1061–1071. https://doi.org/10.1037/0033-295X.111.4.1061

Brown, S. D., & Heathcote, A. (2008). The simplest complete model of choice response time: Linear ballistic accumulation. Cognitive Psychology, 57(3), 153–178. https://doi.org/10.1016/j.cogpsych.2007.12.002

Cowell, R. A., Bussey, T. J., & Saksida, L. M. (2006). Why does brain damage impair memory? A connectionist model of object recognition memory in perirhinal cortex. Journal of Neuroscience, 26(47), 12186–12197. https://doi.org/10.1523/JNEUROSCI.2818-06.2006

Cox, D., & Miller, H. D. (1965). The theory of stochastic processes. Methuen.

De Boeck, P., & Jeon, M. (2019). An overview of models for response times and processes in cognitive tests. Frontiers in Psychology, 10, 102. https://doi.org/10.3389/fpsyg.2019.00102

DiTrapani, J., Jeon, M., De Boeck, P., & Partchev, I. (2016). Attempting to differentiate fast and slow intelligence: Using generalized item response trees to examine the role of speed on intelligence tests. Intelligence, 56, 82–92. https://doi.org/10.1016/j.intell.2016.02.012

Embretson, S., & Reise, S. (2000). Item response theory for psychologists. L. Erlbaum Associates.

Fengler, A., Govindarajan, L. N., Chen, T., & Frank, M. J. (2021). Likelihood approximation networks (LANs) for fast inference of simulation models in cognitive neuroscience. eLife, 10, e65074. https://doi.org/10.7554/eLife.65074

Ferrando, P. J., & Lorenzo-Seva, U. (2007). An item response theory model for incorporating response time data in binary personality items. Applied Psychological Measurement, 31(6), 525–543. https://doi.org/10.1177/0146621606295197

Ferrando, P. J., & Lorenzo-Seva, U. (2007). A measurement model for Likert responses that incorporates response time. Multivariate Behavioral Research, 42(4), 675–706. https://doi.org/10.1080/00273170701710247

Festinger, L. (1943). Studies in decision: I. Decision-time, relative frequency of judgment and subjective confidence as related to physical stimulus difference. Journal of Experimental Psychology, 32(4), 291–306.

Festinger, L. (1943). Studies in decision: II. An empirical test of a quantitative theory of decision. Journal of Experimental Psychology, 32(5), 411–423.

Forstmann, B., Ratcliff, R., & Wagenmakers, E.-J. (2016). Sequential sampling models in cognitive neuroscience: Advantages, applications, and extensions. Annual Review of Psychology, 67(1), 641–666. https://doi.org/10.1146/annurev-psych-122414-033645

Gelman, A. (1996). Inference and monitoring convergence. In W. R. Gilks, S. Richardson, & D. J. Spiegelhalter (Eds.), Markov chain Monte Carlo in practice (pp. 131–143). CRC Press.

Gelman, A., Carlin, J. B., Stern, H. S., & Rubin, D. B. (2013). Bayesian data analysis (3rd ed.). CRC Press.

Green, D. M., & Swets, J. A. (1966). Signal detection theory and psychophysics. Wiley.

Hermans, H. J. M. (1968). Handleiding bij de prestatie motivatie test [manual of the performance motivation test]. Harcourt Assessment B.V.

Hermans, H. J. M., Ter Laak, J. J. F., & Maes, P. C. J. M. (1972). Achievement motivation and fear of failure in family and school. Developmental Psychology, 6, 520–528.

Jazayeri, M., & Movshon, J. (2006). Optimal representation of sensory information by neural populations. Nature Neuroscience, 9, 690–696. https://doi.org/10.1038/nn1691

Kang, I., De Boeck, P., & Partchev, I. (2022a). A randomness perspective on intelligence processes. Intelligence, 91, 101632. https://doi.org/10.1016/j.intell.2022.101632

Kang, I., De Boeck, P., & Ratcliff, R. (2022b). Modeling conditional dependence of response accuracy and response time with the diffusion item response theory model. Psychometrika. Advance online publication. https://doi.org/10.1007/s11336-021-09819-5

Kang, I., & Ratcliff, R. (2020). Modeling the interaction of numerosity and perceptual variables with the diffusion model. Cognitive Psychology, 120, 1–42. https://doi.org/10.1016/j.cogpsych.2020.101288

Kang, I., Ratcliff, R., & Voskuilen, C. (2020). A note on decomposition of sources of variability in perceptual decision-making. Journal of Mathematical Psychology, 98, 102431. https://doi.org/10.1016/j.jmp.2020.102431

Kuiper, N. A. (1981). Convergent evidence for the self as a prototype: The “inverted-U RT effect” for self and other judgments. Personality and Social Psychology Bulletin, 7(3), 438–443. https://doi.org/10.1177/014616728173012

Kuncel, R. B. (1973). Response processes and relative location of subject and item. Educational and Psychological Measurement, 33(3), 545–563. https://doi.org/10.1177/001316447303300302

Lu, J., Wang, C., & Shi, N. (2021). A mixture response time process model for aberrant behaviors and item nonresponses. Multivariate Behavioral Research. Advance online publication. https://doi.org/10.1080/00273171.2021.1948815

Luce, R. D. (1986). Response times: Their role in inferring elementary mental organization. Oxford University Press.

Macmillan, N. A., & Creelman, C. D. (1966). Detection theory: A user’s guide. Taylor & Francis.

McKoon, G., & Ratcliff, R. (2016). Adults with poor reading skills: How lexical knowledge interacts with scores on standardized reading comprehension tests. Cognition, 146, 453–469. https://doi.org/10.1016/j.cognition.2015.10.009

McKoon, G., & Ratcliff, R. (2017). Adults with poor reading skills and the inferences they make during reading. Scientific Studies of Reading, 21(4), 292–309. https://doi.org/10.1080/10888438.2017.1287188

McKoon, G., & Ratcliff, R. (2018). Adults with poor reading skills, older adults, and college students: The meanings they understand during reading using a diffusion model analysis. Journal of Memory and Language, 102, 115–129. https://doi.org/10.1016/j.jml.2018.05.005

Merkle, E., & Van Zandt, T. (2006). An application of the Poisson race model to confidence calibration. Journal of Experimental Psychology: General, 135, 391–408.

Modick, H. E. (1977). A 3-scale measure of achievement motivation: Report on a German extension of the prestatie motivatie test. Diagnostica, 23(4), 298–321.

Molenaar, D., & De Boeck, P. (2018). Response mixture modeling: Accounting for heterogeneity in item characteristics across response times. Psychometrika, 83(2), 279–297.

Molenaar, D., Oberski, D., Vermunt, J., & De Boeck, P. (2016). Hidden Markov item response theory models for responses and response times. Multivariate Behavioral Research, 51(5), 606–626. https://doi.org/10.1080/00273171.2016.1192983

Molenaar, D., Tuerlinckx, F., & van der Maas, H. L. J. (2015). A bivariate generalized linear item response theory modeling framework to the analysis of responses and response times. Multivariate Behavioral Research, 50(1), 56–74. https://doi.org/10.1080/00273171.2014.962684

Molenaar, D., Tuerlinckx, F., & van der Maas, H. L. J. (2015). Fitting diffusion item response theory models for responses and response times using the R package diffIRT. Journal of Statistical Software, 66(4), 1–34. https://doi.org/10.18637/jss.v066.i04

Muraki, E. (1990). Fitting a polytomous item response model to Likert-type data. Applied Psychological Measurement, 14(1), 59–71. https://doi.org/10.1177/014662169001400106

Muthén, B. (1983). Latent variable structural equation modeling with categorical data. Journal of Econometrics, 22(1), 43–65. https://doi.org/10.1016/0304-4076(83)90093-3

Muthén, B. (1984). A general structural equation model with dichotomous, ordered categorical, and continuous latent variable indicators. Psychometrika, 49, 115–132. https://doi.org/10.1007/BF02294210

Olsson, U. (1979). Maximum likelihood estimation of the polychoric correlation coefficient. Psychometrika, 44(4), 443–460.

Partchev, I., & De Boeck, P. (2012). Can fast and slow intelligence be differentiated? Intelligence, 40(1), 23–32. https://doi.org/10.1016/j.intell.2011.11.002

Pearson, K. (1901). Mathematical contributions to the theory of evolution. VIII. On the inheritance of characters not capable of exact quantitative measurement. Philosophical Transactions of the Royal Society of London A, 195, 79–150. https://doi.org/10.1098/rsta.1900.0024

Pleskac, T. J., & Busemeyer, J. (2010). Two-stage dynamic signal detection: A theory of choice, decision time, and confidence. Psychological Review, 117(3), 864–901.

Ranger, J., & Kuhn, J.-T. (2018). Modeling responses and response times in rating scales with the linear ballistic accumulator. Methodology, 14(3), 119–132. https://doi.org/10.1027/1614-2241/a000152

Ranger, J., Kuhn, J.-T., & Szardenings, C. (2017). Analysing model fit of psychometric process models: An overview, a new test and an application to the diffusion model. British Journal of Mathematical and Statistical Psychology, 70(2), 209–224. https://doi.org/10.1111/bmsp.12082

Ratcliff, R. (1978). A theory of memory retrieval. Psychological Review, 85(2), 59–108.

Ratcliff, R. (2002). A diffusion model account of response time and accuracy in a brightness discrimination task: Fitting real data and failing to fit fake but plausible data. Psychonomic Bulletin & Review, 9(2), 278–291.

Ratcliff, R. (2018). Decision making on spatially continuous scales. Psychological Review, 125, 888–935. https://doi.org/10.1037/rev0000117

Ratcliff, R., Gomez, P., & McKoon, G. (2003). A diffusion model account of the lexical decision task. Psychological Review, 111(1), 159–182. https://doi.org/10.1037/0033-295X.111.1.159

Ratcliff, R., Hasegawa, Y. T., Hasegawa, R. P., Smith, P. L., & Segraves, M. A. (2007). Dual diffusion model for single-cell recording data from the superior colliculus in a brightness-discrimination task. Journal of Neurophysiology, 97(2), 1756–1774. https://doi.org/10.1152/jn.00393.2006

Ratcliff, R., & Kang, I. (2021). Qualitative speed-accuracy tradeoff effects can be explained by a diffusion/fast-guess mixture model. Scientific Reports, 11, 15169. https://doi.org/10.1038/s41598-021-94451-7

Ratcliff, R., & McKoon, G. (2008). The diffusion decision model: Theory and data for two-choice decision tasks. Neural Computation, 20(4), 873–922.

Ratcliff, R., & Smith, P. (2004). A comparison of sequential sampling models for two-choice reaction time. Psychological Review, 111, 333–367. https://doi.org/10.1037/0033-295X.111.2.333

Ratcliff, R., & Starns, J. J. (2009). Modeling confidence and response time in recognition memory. Psychological Review, 116(1), 59–83.

Ratcliff, R., & Starns, J. J. (2013). Modeling confidence judgments, response times, and multiple choices in decision making: Recognition memory and motion discrimination. Psychological Review, 120(3), 697–719. https://doi.org/10.1037/a0033152

Ratcliff, R., Voskuilen, C., & McKoon, G. (2018). Internal and external sources of variability in perceptual decision-making. Psychological Review, 125(1), 33–46. https://doi.org/10.1037/rev0000080

Rouder, J., Province, J., Morey, R., Gómez, P., & Heathcote, A. (2015). The lognormal race: A cognitive-process model of choice and latency with desirable psychometric properties. Psychometrika, 80(2), 491–513. https://doi.org/10.1007/s11336-013-9396-3

Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika Monograph Supplement, 34(4, Pt. 2), 100.

Samejima, F. (1997). Graded response model. In W. J. van der Linden & R. Hambleton (Eds.), Handbook of modern item response theory (pp. 85–100). Springer. https://doi.org/10.1007/978-1-4757-2691-6_5

Schnipke, D. L., & Scrams, D. J. (1997). Modeling item response times with a two-state mixture model: A new method of measuring speededness. Journal of Educational Measurement, 34(3), 213–232. https://doi.org/10.1111/j.1745-3984.1997.tb00516.x

Schwarz, G. (1978). Estimating the dimension of a model. The Annals of Statistics, 6(2), 461–464. https://doi.org/10.2307/2958889

Skrondal, A., & Rabe-Hesketh, S. (2004). Generalized latent variable modeling: Multilevel, longitudinal, and structural equation models. Chapman & Hall/CRC.

Smith, P. L. (2000). Stochastic dynamic models of response time and accuracy: A foundational primer. Journal of Mathematical Psychology, 44(3), 408–463. https://doi.org/10.1006/jmps.1999.1260

Smith, P. L., & Vickers, D. (1988). The accumulator model of two-choice discrimination. Journal of Mathematical Psychology, 32(2), 135–168. https://doi.org/10.1016/0022-2496(88)90043-0

Spiegelhalter, D. J., Best, N. G., Carlin, B. P., & Van Der Linde, A. (2002). Bayesian measures of model complexity and fit. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 64(4), 583–639. https://doi.org/10.1111/1467-9868.00353

Stan Development Team. (2021). Stan modeling language user’s guide and reference manual. Retrieved from http://mc-stan.org/

Takane, Y., & De Leeuw, J. (1987). On the relationship between item response theory and factor analysis of discretized variables. Psychometrika, 52, 393–408. https://doi.org/10.1007/BF02294363

Thurstone, L. L. (1927). A law of comparative judgment. Psychological Review, 34(4), 273–286. https://doi.org/10.1037/h0070288

Thurstone, L. L. (1927). Psychophysical analysis. The American Journal of Psychology, 38(3), 368–389.

Thurstone, L. L. (1928). Attitudes can be measured. American Journal of Sociology, 33, 529–554. https://doi.org/10.1086/214483

Torgerson, W. S. (1958). Theory and methods of scaling. Wiley.

Tuerlinckx, F., & De Boeck, P. (2005). Two interpretations of the discrimination parameter. Psychometrika, 70(4), 629–650. https://doi.org/10.1007/s11336-000-0810-3

Tuerlinckx, F., Molenaar, D., & van der Maas, H. L. J. (2016). Diffusion-based response-time models. In W. J. van der Linden (Ed.), Handbook of item response theory (pp. 283–300). Chapman and Hall/CRC.

Turner, B. M., & Sederberg, P. B. (2012). Approximate Bayesian computation with differential evolution. Journal of Mathematical Psychology, 56(5), 375–385. https://doi.org/10.1016/j.jmp.2012.06.004

Turner, B. M., & Sederberg, P. B. (2014). A generalized, likelihood-free method for posterior estimation. Psychonomic Bulletin and Review, 21, 227–250. https://doi.org/10.3758/s13423-013-0530-0

Turner, B. M., & Van Zandt, T. (2012). A tutorial on approximate Bayesian computation. Journal of Mathematical Psychology, 56(2), 69–85. https://doi.org/10.1016/j.jmp.2012.02.005

Turner, B. M., & Van Zandt, T. (2014). Hierarchical approximate Bayesian computation. Psychometrika, 79, 185–209. https://doi.org/10.1007/s11336-013-9381-x

Usher, M., & McClelland, J. (2001). The time course of perceptual choice: The leaky, competing accumulator model. Psychological Review, 108, 550–592. https://doi.org/10.1037//0033-295X.108.3.550

van der Linden, W. J. (2007). A hierarchical framework for modeling speed and accuracy on test items. Psychometrika, 72(3), 287–308. https://doi.org/10.1007/s11336-006-1478-z

van der Maas, H. L. J., Molenaar, D., Maris, G., Kievit, R. A., & Borsboom, D. (2011). Cognitive psychology meets psychometric theory: On the relation between process models for decision making and latent variable models for individual differences. Psychological Review, 118(2), 339–356. https://doi.org/10.1080/20445911.2011.454498

van der Maas, H. L. J., & Wagenmakers, E.-J. (2005). A psychometric analysis of chess expertise. The American Journal of Psychology, 118, 29–60.

Van Zandt, T. (2000). ROC curves and confidence judgments in recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26(3), 582–600. https://doi.org/10.1037/0278-7393.26.3.582

Van Zandt, T., & Maldonado-Molina, M. (2004). Response reversals in recognition memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30, 1147–1166. https://doi.org/10.1037/0278-7393.30.6.1147

Vickers, D. (1979). Decision processes in visual perception. Academic Press.

Volkmann, J. (1934). The relation of time of judgment to certainty of judgment. Psychological Bulletin, 31, 672–673.

Wald, A. (1947). Sequential analysis. Wiley.

Wang, C., & Xu, G. (2015). A mixture hierarchical model for response times and response accuracy. British Journal of Mathematical and Statistical Psychology, 68(3), 456–477. https://doi.org/10.1111/bmsp.12054

Wang, C., Xu, G., & Shang, Z. (2018). A two-stage approach to differentiating normal and aberrant behavior in computer based testing. Psychometrika, 83(1), 223–254. https://doi.org/10.1007/s11336-016-9525-x

Wickelgren, W. A. (1977). Speed-accuracy tradeoff and information processing dynamics. Acta Psychologica, 41(1), 67–85. https://doi.org/10.1016/0001-6918(77)90012-9

Ethics declarations

Code Availability

The Stan codes to fit the proposed models can be found online at https://osf.io/76jb4/.

About this article

Cite this article

Kang, I., Molenaar, D. & Ratcliff, R. A Modeling Framework to Examine Psychological Processes Underlying Ordinal Responses and Response Times of Psychometric Data. Psychometrika 88, 940–974 (2023). https://doi.org/10.1007/s11336-023-09902-z

DOI: https://doi.org/10.1007/s11336-023-09902-z