Abstract

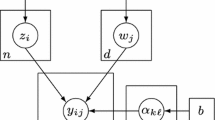

Diagnostic classification models (DCMs) are widely used to provide fine-grained classification on a multidimensional collection of discrete attributes. Applying a DCM requires specifying the latent structure in what is known as the \({\varvec{Q}}\) matrix. Expert-specified \({\varvec{Q}}\) matrices might be biased and result in incorrect diagnostic classifications, so a critical issue is developing methods to estimate \({\varvec{Q}}\) in order to infer the relationship between latent attributes and items. Existing exploratory methods for estimating \({\varvec{Q}}\) require the number of attributes, K, to be specified in advance. We present a Bayesian framework to jointly infer the number of attributes K and the elements of \({\varvec{Q}}\). We propose the crimp sampling algorithm to move between models with different values of K and to estimate the underlying \({\varvec{Q}}\) and model parameters while enforcing model identifiability constraints. We also adapt the Indian buffet process and reversible-jump Markov chain Monte Carlo methods to estimate \({\varvec{Q}}\). We report evidence that the crimp sampler performs best among the three methods. We apply the developed methodology to two data sets and discuss the implications of the findings for future research.

References

Brooks, S. P., & Gelman, A. (1998). General methods for monitoring convergence of iterative simulations. Journal of Computational and Graphical Statistics, 7(4), 434–455.

Chen, Y., Culpepper, S., & Liang, F. (2020). A sparse latent class model for cognitive diagnosis. Psychometrika, 85, 121–153.

Chen, Y., Culpepper, S., & Liang, F. (2020b). A sparse latent class model for cognitive diagnosis. Psychometrika, 1–33.

Chen, Y., Culpepper, S. A., Chen, Y., & Douglas, J. (2018). Bayesian estimation of the DINA Q. Psychometrika, 83(1), 89–108.

Chen, Y., Culpepper, S. A., Wang, S., & Douglas, J. A. (2018). A hidden Markov model for learning trajectories in cognitive diagnosis with application to spatial rotation skills. Applied Psychological Measurement, 42, 5–23.

Chen, Y., Liu, J., Xu, G., & Ying, Z. (2015). Statistical analysis of Q-matrix based diagnostic classification models. Journal of the American Statistical Association, 110(510), 850–866.

Culpepper, S. A. (2015). Bayesian estimation of the DINA model with Gibbs sampling. Journal of Educational and Behavioral Statistics, 40(5), 454–476.

Culpepper, S. A. (2019a). Estimating the cognitive diagnosis Q matrix with expert knowledge: Application to the fraction-subtraction dataset. Psychometrika, 84(2), 333–357.

Culpepper, S. A. (2019b). An exploratory diagnostic model for ordinal responses with binary attributes: Identifiability and estimation. Psychometrika, 84(4), 921–940.

Culpepper, S. A., & Chen, Y. (2018). Development and application of an exploratory reduced reparameterized unified model. Journal of Educational and Behavioral Statistics, 44, 3–24.

de la Torre, J. (2011). The generalized DINA model framework. Psychometrika, 76(2), 179–199.

de la Torre, J., & Douglas, J. A. (2004). Higher-order latent trait models for cognitive diagnosis. Psychometrika, 69(3), 333–353.

de la Torre, J., & Douglas, J. A. (2008). Model evaluation and multiple strategies in cognitive diagnosis: An analysis of fraction subtraction data. Psychometrika, 73(4), 595–624.

Doshi-Velez, F., & Williamson, S. A. (2017). Restricted Indian buffet processes. Statistics and Computing, 27(5), 1205–1223.

Fang, G., Liu, J., & Ying, Z. (2019). On the identifiability of diagnostic classification models. Psychometrika, 84(1), 19–40.

Gershman, S. J., & Blei, D. M. (2012). A tutorial on Bayesian nonparametric models. Journal of Mathematical Psychology, 56(1), 1–12.

Green, P. J. (1995). Reversible jump Markov chain Monte Carlo computation and Bayesian model determination. Biometrika, 82(4), 711–732.

Griffiths, T. L., & Ghahramani, Z. (2005). Infinite latent feature models and the Indian buffet process (Technical Report 2005-001). London: Gatsby Computational Neuroscience Unit.

Griffiths, T. L., & Ghahramani, Z. (2011). The Indian buffet process: An introduction and review. Journal of Machine Learning Research, 12(Apr), 1185–1224.

Gu, Y., & Xu, G. (2019). The sufficient and necessary condition for the identifiability and estimability of the DINA model. Psychometrika, 84(2), 468–483.

Gu, Y., & Xu, G. (in press). Sufficient and necessary conditions for the identifiability of the Q-matrix. Statistica Sinica. https://doi.org/10.5705/ss.202018.0410

Heller, J., & Wickelmaier, F. (2013). Minimum discrepancy estimation in probabilistic knowledge structures. Electronic Notes in Discrete Mathematics, 42, 49–56.

Henson, R. A., Templin, J. L., & Willse, J. T. (2009). Defining a family of cognitive diagnosis models using log-linear models with latent variables. Psychometrika, 74(2), 191–210.

Kaya, Y., & Leite, W. L. (2017). Assessing change in latent skills across time with longitudinal cognitive diagnosis modeling: An evaluation of model performance. Educational and Psychological Measurement, 77(3), 369–388.

Li, F., Cohen, A., Bottge, B., & Templin, J. (2016). A latent transition analysis model for assessing change in cognitive skills. Educational and Psychological Measurement, 76(2), 181–204.

Liu, C.-W., Andersson, B., & Skrondal, A. (2020). A constrained Metropolis-Hastings Robbins-Monro algorithm for Q matrix estimation in DINA models. Psychometrika, 85(2), 322–357.

Liu, J., Xu, G., & Ying, Z. (2013). Theory of the self-learning Q-matrix. Bernoulli, 19(5A), 1790–1817.

Liu, J. S. (1994). The collapsed Gibbs sampler in Bayesian computations with applications to a gene regulation problem. Journal of the American Statistical Association, 89(427), 958–966.

Madison, M. J., & Bradshaw, L. P. (2018). Assessing growth in a diagnostic classification model framework. Psychometrika, 83, 963–990.

Sen, S., & Bradshaw, L. (2017). Comparison of relative fit indices for diagnostic model selection. Applied Psychological Measurement, 41(6), 422–438.

Sethuraman, J. (1994). A constructive definition of Dirichlet priors. Statistica Sinica, 4, 639–650.

Sorrel, M. A., Olea, J., Abad, F. J., de la Torre, J., Aguado, D., & Lievens, F. (2016). Validity and reliability of situational judgement test scores: A new approach based on cognitive diagnosis models. Organizational Research Methods, 19(3), 506–532.

Tatsuoka, C. (2002). Data analytic methods for latent partially ordered classification models. Journal of the Royal Statistical Society: Series C (Applied Statistics), 51(3), 337–350.

Tatsuoka, K. K. (1984). Analysis of errors in fraction addition and subtraction problems. Computer-Based Education Research Laboratory: University of Illinois at Urbana-Champaign.

Teh, Y. W., Görür, D., & Ghahramani, Z. (2007). Stick-breaking construction for the Indian buffet process. In Artificial intelligence and statistics (pp. 556–563). San Juan, Puerto Rico.

Thibaux, R., & Jordan, M. I. (2007). Hierarchical beta processes and the Indian buffet process. In Artificial intelligence and statistics (pp. 564–571). San Juan, Puerto Rico.

von Davier, M. (2008). A general diagnostic model applied to language testing data. British Journal of Mathematical and Statistical Psychology, 61(2), 287–307.

Wang, S., Yang, Y., Culpepper, S. A., & Douglas, J. (2017). Tracking skill acquisition with cognitive diagnosis models: A higher-order hidden Markov model with covariates. Journal of Educational and Behavioral Statistics, 43(1), 57–87.

Wang, S., Zhang, S., Douglas, J., & Culpepper, S. A. (2018). Using response times to assess learning progress: A joint model for responses and response times. Measurement: Interdisciplinary Research and Perspectives, 16(1), 45–58.

Xu, G. (2017). Identifiability of restricted latent class models with binary responses. Annals of Statistics, 45(2), 675–707.

Xu, G., & Shang, Z. (2018). Identifying latent structures in restricted latent class models. Journal of the American Statistical Association, 113(523), 1284–1295.

Xu, G., & Zhang, S. (2016). Identifiability of diagnostic classification models. Psychometrika, 81(3), 625–649.

Ye, S., Fellouris, G., Culpepper, S. A., & Douglas, J. (2016). Sequential detection of learning in cognitive diagnosis. British Journal of Mathematical and Statistical Psychology, 69(2), 139–158.

Zhang, S., Douglas, J., Wang, S., & Culpepper, S. A. (in press). Reduced reparameterized unified model applied to learning spatial reasoning skills. In M. von Davier & Y. Lee (Eds.), Handbook of diagnostic classification models. New York: Springer.

Acknowledgements

This research was partially supported by National Science Foundation Methodology, Measurement, and Statistics program Grant #1758631 and #1758688.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Reversible Jump MCMC Algorithm

We use reversible jump MCMC to move between \({\varvec{Q}}\) matrices with different numbers of columns. Let \(b_K\) and \(d_K\) denote the probabilities of proposing a “birth” step and a “death” step, respectively, with \(b_K+d_K=1\). There are two possible moves:

1. Birth move: We propose \(K\rightarrow K+1\) with probability \(b_K\).

To make the algorithm more efficient, we apply collapsed Gibbs sampling (Liu 1994) and integrate \({\varvec{\alpha }}_i\) out:

$$\begin{aligned} P({\varvec{Y}} \vert {\varvec{g}},{\varvec{s}},{\varvec{Q}},{\varvec{\pi }},K)=\prod _{i=1}^{N} \sum _{{\varvec{\alpha }}_c \in \lbrace 0,1 \rbrace ^K} \pi _c \prod _{j=1}^{J} P(Y_{ij}\vert s_j,g_j,{\varvec{\alpha }}_c, {\varvec{q}}_j), \end{aligned}$$

so that we can skip the step of sampling \({\varvec{\alpha }}_i\). Following the steps described in Algorithm 4 and writing \({\varvec{Q}}_K=({\varvec{q}}_1,\ldots ,{\varvec{q}}_K)\), we propose the move \(\lbrace {\varvec{Q}}_K , {\varvec{\pi }},K \rbrace \rightarrow \lbrace {\varvec{Q}}_{K+1} , {\varvec{\pi }}^{*}, K+1\rbrace \), which is

$$\begin{aligned} \left[ \begin{matrix} {\varvec{q}}_1 \\ \vdots \\ {\varvec{q}}_K \\ {\varvec{q}}_{K+1}\\ \pi _1\\ \vdots \\ \pi _C\\ u_1\\ \vdots \\ u_C\\ \end{matrix} \right] \rightarrow \left[ \begin{matrix} {\varvec{q}}_1 \\ \vdots \\ {\varvec{q}}_K \\ {\varvec{q}}_{K+1}\\ \pi _1^{*}\\ \vdots \\ \pi _C^{*}\\ \pi _{C+1}^{*}\\ \vdots \\ \pi _{2C}^{*}\\ \end{matrix} \right] . \end{aligned}\tag{31}$$

The RJ-MCMC acceptance ratio is

$$\begin{aligned} A&=\dfrac{P({\varvec{Y}} \vert {\varvec{g}},{\varvec{s}},{\varvec{Q}}_{K+1},{\varvec{\pi }}^{*},K+1)}{P({\varvec{Y}} \vert {\varvec{g}},{\varvec{s}},{\varvec{Q}}_{K},{\varvec{\pi }},K)}\times \dfrac{P(K+1)P({\varvec{\pi }}^{*}\vert {\varvec{\delta }}_0^{*},K+1)P({\varvec{Q}}_{K+1}\vert K+1)}{P(K)P({\varvec{\pi }}\vert {\varvec{\delta }}_0,K)P({\varvec{Q}}_{K}\vert K)} \\&\quad \times \dfrac{d_{K+1}\dfrac{1}{K+1}}{b_K \prod _{j=1}^{J}p(q_{j,K+1}|q_{1,K+1},\ldots ,q_{j-1,K+1})\prod _{i=1}^{C}p (u_i^{*})}\times \vert J\vert , \end{aligned}\tag{32}$$

where

$$\begin{aligned} \dfrac{P({\varvec{\pi }}^{*}\vert {\varvec{\delta }}_0^{*},K+1)}{P({\varvec{\pi }}\vert {\varvec{\delta }}_0 ,K)}=\dfrac{\Gamma (2^{K+1})}{\Gamma (2^{K})}, \qquad ({\varvec{\delta }}_0={\varvec{1}}_{2^K}, {\varvec{\delta }}_0^{*}={\varvec{1}}_{2^{K+1}}), \end{aligned}$$

and the determinant of the Jacobian matrix, \(\vert J\vert \), is

$$\begin{aligned} \vert J \vert&= \left| \dfrac{\partial {\varvec{\pi }}^{*}}{\partial ({\varvec{\pi }},{\varvec{u}}^{*})} \right| \\&= \begin{vmatrix} \begin{pmatrix} u_1 & & & \\ & u_2 & & \\ & & \ddots & \\ & & & u_C \end{pmatrix} & \begin{pmatrix} \pi _1 & & & \\ & \pi _2 & & \\ & & \ddots & \\ & & & \pi _C \end{pmatrix} \\ \begin{pmatrix} 1-u_1 & & & \\ & 1-u_2 & & \\ & & \ddots & \\ & & & 1-u_C \end{pmatrix} & \begin{pmatrix} -\pi _1 & & & \\ & -\pi _2 & & \\ & & \ddots & \\ & & & -\pi _C \end{pmatrix} \end{vmatrix} \\&= \prod _{i=1}^{C}\pi _i. \end{aligned}\tag{33}$$

The acceptance probability for the proposed birth move is min(1, A).

2. Death move: We propose \(K\rightarrow K-1\) with probability \(d_K\).

We randomly select a column of \({\varvec{Q}}_K\) to delete. The acceptance probability is min\((1,A^{-1})\), where \(A^{-1}\) is computed analogously to Eq. 32.
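To make the two ingredients of the birth move concrete, the following minimal Python sketch evaluates the collapsed likelihood and the dimension-matching split of \({\varvec{\pi }}\). It is an illustration under stated assumptions, not the implementation used for the reported results. The function names (`attribute_patterns`, `collapsed_loglik`, `birth_split`) are hypothetical, item responses are assumed to follow the DINA model with slipping \(s_j\) and guessing \(g_j\), \({\varvec{Q}}\) is stored as a J × K array with one row per item, and \(u_c\) is taken to be the share of class c that possesses the new attribute. The split is returned interleaved as \((\pi _c(1-u_c), \pi _c u_c)\) so that it lines up with the pattern enumeration; this is only a reordering of the stacked vector in Eq. 31 and leaves \(\vert J\vert \) unchanged.

```python
import numpy as np
from itertools import product


def attribute_patterns(K):
    """All 2^K binary attribute profiles, one per row (last attribute varies fastest)."""
    return np.array(list(product([0, 1], repeat=K)), dtype=int)


def collapsed_loglik(Y, Q, s, g, pi):
    """log P(Y | g, s, Q, pi, K) with the attribute profiles summed out.

    Assumes DINA item responses: P(Y_ij = 1) is 1 - s_j when profile c has
    every attribute required by item j, and g_j otherwise.
    """
    K = Q.shape[1]
    alpha = attribute_patterns(K)                        # (C, K)
    eta = alpha @ Q.T == Q.sum(axis=1)                   # (C, J) mastery indicator
    p1 = np.where(eta, 1.0 - s, g)                       # (C, J) success probability
    ll = Y @ np.log(p1).T + (1 - Y) @ np.log(1 - p1).T   # (N, C) per-class log-lik
    m = ll.max(axis=1, keepdims=True)                    # log-sum-exp stabiliser
    return float(np.sum(m[:, 0] + np.log(np.exp(ll - m) @ pi)))


def birth_split(pi, u):
    """Map (pi, u) to class probabilities of length 2C and return log|J|.

    Entry 2c is pi_c * (1 - u_c) (new attribute absent) and entry 2c + 1 is
    pi_c * u_c (new attribute present); log|J| = sum_c log(pi_c) as in Eq. 33.
    """
    pi_star = np.stack([pi * (1.0 - u), pi * u], axis=1).ravel()
    log_jac = float(np.sum(np.log(pi)))
    return pi_star, log_jac
```

One sanity check that follows from the collapsed form: appending an all-zero column to \({\varvec{Q}}\), paired with any split of \({\varvec{\pi }}\), leaves the collapsed likelihood unchanged, because the new attribute is then unrelated to every item.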

Appendix B

Acceptance Ratio of Crimp Sampler

The crimp sampler moves between identifiable spaces with deterministic transformations of the model parameters. The posterior distribution of \({\varvec{\xi }}^{K}=({\varvec{Q}}_K,{\varvec{\pi }}_K,{\varvec{A}}_K,K)\) and \({\varvec{\Omega }}\) is

where

Let \({\varvec{u}}=(u_0,\ldots ,u_{2^K-1})\) be a vector of independent random variables. The conditional posterior of \({\varvec{\xi }}^K\) and \({\varvec{u}}\) is

Note that for \({\varvec{\pi }}_K\sim \text {Dirichlet}({\varvec{d}}_K)\) where \({\varvec{d}}_K=(d_0,\ldots ,d_{2^K-1})\),

where \(n_c\) is the number of respondents in class c and the normalizing constant is

Moves from \(K\rightarrow K+1\) involve transiting from \({\varvec{\xi }}^K=({\varvec{A}}_K,{\varvec{\pi }}_K,{\varvec{Q}}_K,K,{\varvec{u}})\rightarrow \) \({\varvec{\xi }}^{K+1}=({\varvec{A}}_{K+1},{\varvec{\pi }}_{K+1},{\varvec{Q}}_{K+1},K+1)\). That is, the move to a higher dimension involves a transformation of \({\varvec{\pi }}_K\) and \({\varvec{u}}\) into \({\varvec{\pi }}_{K+1}\). Specifically, let

where \(\pi _{c0}\) and \(\pi _{c1}\) are elements of \({\varvec{\pi }}_{K+1}\) for \(c=0,\ldots ,2^K-1\). Note the Jacobian of transformation equals \(|J|=\prod _{c=0}^{2^K-1} (\pi _{c0}+\pi _{c1})^{-1}\).
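The stated Jacobian can be checked numerically. The sketch below assumes the transformation \(\pi _{c0}=\pi _c(1-u_c)\) and \(\pi _{c1}=\pi _c u_c\); this form is an assumption, chosen because its inverse has exactly the Jacobian given above. All function names are hypothetical.

```python
import numpy as np


def forward(pi, u):
    """Assumed split: row c holds (pi_c0, pi_c1) = (pi_c * (1 - u_c), pi_c * u_c)."""
    return np.stack([pi * (1.0 - u), pi * u], axis=1)


def inverse(pi_next):
    """Recover (pi_c, u_c) from (pi_c0, pi_c1)."""
    pi = pi_next.sum(axis=1)
    u = pi_next[:, 1] / pi
    return pi, u


def log_abs_jacobian_inverse(pi_next):
    """log|J| = -sum_c log(pi_c0 + pi_c1) for the inverse transformation."""
    return float(-np.sum(np.log(pi_next.sum(axis=1))))


def numeric_jacobian_det(x, eps=1e-6):
    """Central-difference determinant of the inverse map for a single class."""
    def f(z):
        total = z[0] + z[1]
        return np.array([total, z[1] / total])

    jac = np.zeros((2, 2))
    for k in range(2):
        step = np.zeros(2)
        step[k] = eps
        jac[:, k] = (f(x + step) - f(x - step)) / (2.0 * eps)
    return float(np.linalg.det(jac))
```

For a single class the analytic determinant is \(1/(\pi _{c0}+\pi _{c1})\), and because the classes transform independently the full Jacobian is the product over c.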

Let \({\varvec{a}}_{K+1}=(\alpha _{1,K+1},\ldots ,\alpha _{N,K+1})^\top \) be an N vector of the proposed \(K+1\) binary attributes. The full conditional distribution for the proposed \(K+1\) state prior to transformation is

where \(p({\varvec{a}}_{K+1}|{\varvec{A}}_K,{\varvec{u}})=\prod _{i=1}^N\sum _{c=0}^{2^K-1}{\mathcal {I}}({\varvec{\alpha }}_i^\top {\varvec{v}}=c)\left( u_c^{\alpha _{i,K+1}}(1-u_c)^{1-\alpha _{i,K+1}}\right) \).
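As an illustration of this conditional distribution, the short sketch below evaluates \(\log p({\varvec{a}}_{K+1}|{\varvec{A}}_K,{\varvec{u}})\) for given attribute profiles. The definition of \({\varvec{v}}\) is not reproduced in this appendix, so the code assumes \({\varvec{v}}=(2^{K-1},\ldots ,2,1)^\top \), which maps each binary profile to an integer class label; the function names are hypothetical.

```python
import numpy as np


def class_index(A):
    """Integer class label alpha_i' v for each row of the N x K profile matrix A.

    Assumes v = (2^(K-1), ..., 2, 1), i.e. profiles are read as binary numbers.
    """
    K = A.shape[1]
    v = 2 ** np.arange(K - 1, -1, -1)
    return A @ v


def log_p_new_attribute(a_new, A, u):
    """log p(a_{K+1} | A_K, u): independent Bernoulli(u_c) draws within each class."""
    c = class_index(A)
    return float(np.sum(a_new * np.log(u[c]) + (1 - a_new) * np.log1p(-u[c])))
```

The indicator in the formula simply selects the \(u_c\) of the class that respondent i currently belongs to, which is what the indexing `u[c]` does.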

Let \(u_c\sim \text {Beta}(1,1)\) for \(c=0,\ldots ,2^K-1\) and consider \({\varvec{d}}={\varvec{1}}_{2^K}\). The conditional prior distribution of \({\varvec{a}}_{K+1}\), \({\varvec{A}}_K\), \({\varvec{\pi }}_K\), and \({\varvec{u}}\) given \({\varvec{Q}}_{K+1}\) is

where \(n_{c0}+n_{c1}=n_{c}\), \(n_{c0}\) is the number of individuals in class c (for attributes 1 to K) with attribute \(K+1\) equal to 0, and \(n_{c1}\) corresponds to the number of individuals in class c with attribute \(K+1\) equal to 1. Applying the transformation implies

The transformed conditional posterior distribution is therefore

where \({\varvec{A}}_{K+1}=({\varvec{A}}_{K},{\varvec{a}}_{K+1})\).

The transformed posterior for \({\varvec{\xi }}^{K+1}\) requires that we propose values for \({\varvec{a}}_{K+1}\) and for the \(2^K\) elements of \({\varvec{u}}\), which form the additional elements of \({\varvec{\pi }}_{K+1}\) (in fact, RJ-MCMC transitions between spaces this way). Proposals for these parameters must be carefully constructed to ensure proper mixing and acceptance rates. Rather than proposing values, we integrate out \({\varvec{a}}_{K+1}\) and \({\varvec{u}}\). That is, we implement the inverse transformations

sum over \({\varvec{a}}_{K+1}\), and integrate out \({\varvec{u}}\) to find,

Therefore, for \(K\rightarrow K+1\), with the uniform prior for \({\varvec{Q}}_K\) and K, all terms but the likelihood functions will cancel out in the MH acceptance ratio, i.e.,

Transitions to lower dimensions involve a move from \({\varvec{\xi }}^K\rightarrow \) \({\varvec{\xi }}^{K-1}=({\varvec{A}}_{K-1},{\varvec{\pi }}_{K-1},{\varvec{Q}}_{K-1},K-1)\). Without loss of generality, suppose the Kth attribute is proposed to be deleted. Therefore, \({\varvec{\alpha }}_i=(\tilde{{\varvec{\alpha }}}_i^\top ,\alpha _{iK})^\top \) and \({\varvec{A}}_{K-1}=(\tilde{{\varvec{\alpha }}}_1,\ldots ,\tilde{{\varvec{\alpha }}}_N)^\top \).

An important observation is that deleting a column of \({\varvec{Q}}\) implies an attribute is no longer related to observed responses, which implies

The conditional posterior after summing over \({\varvec{a}}_K\) is

where

and \(n_c\) is the number of individuals in the collapsed class c. The joint conditional prior for \({\varvec{A}}_{K-1}\) and \({\varvec{\pi }}_K\) is

We then transform \({\varvec{\pi }}_K\) by defining

for \(c=0,\ldots ,2^{K-1}-1\) where \(\pi _c\) is an element of \({\varvec{\pi }}_{K-1}\). The transformation implies

Therefore, integrating over \({\varvec{u}}\) yields

where

If the prior for \({\varvec{\pi }}_K\sim \text {Dirichlet}({\varvec{1}}_{2^K})\), then the integral is one. Since we use the uniform prior of \(p({\varvec{Q}},K)\), the acceptance ratio becomes

Appendix C

Gibbs Sampling Step for Updating \({\varvec{\alpha }}\) in Crimp Sampler

The conditional posterior distribution of \({\varvec{a}}_{K+1}\), \({\varvec{A}}_K\), \({\varvec{\pi }}_K\), and \({\varvec{u}}\) is

then, the marginal posterior of \({\varvec{a}}_{K+1}\) and \({\varvec{A}}_K\) is

where

Therefore,

Let \(n_c^{\prime }\) be the number of individuals other than i with K attributes equal to \({\varvec{\alpha }}_c\), and let \(n_{c0}^{\prime }\) and \(n_{c1}^{\prime }\) be the number of such individuals with \(\alpha _{c,K+1}=0\) and \(\alpha _{c,K+1}=1\), respectively. So \(n_c^{\prime }=n_{c0}^{\prime } + n_{c1}^{\prime }\). That is,

Recall the following properties for the beta and gamma functions:

Then, Eq. 57 can be written as:

Let \({\varvec{A}}_{(i)}=({\varvec{\alpha }}_1,\ldots , {\varvec{\alpha }}_{i-1},{\varvec{\alpha }}_{i+1},\ldots ,{\varvec{\alpha }}_N)\) and \({\varvec{\alpha }}_{(i),K+1}=(\alpha _{1,K+1},\ldots ,\alpha _{i-1,K+1},\alpha _{i+1,K+1}, \ldots ,\alpha _{N,K+1})\). By integrating out \({\varvec{\alpha }}_i\) and \(\alpha _{i,K+1}\), we have

Note that

which implies

and

Then, Eq. 59 should be

Therefore, the full conditional distribution for \({\varvec{\alpha }}_i^\top {\varvec{v}}=c\) and \(\alpha _{i,K+1}=1\) is

In order to update \(\alpha _{i,K+1}\) sequentially, we need to find

The probability in the numerator is calculated by summing Eq. 57 over \(\alpha _{i+1,K+1},\ldots ,\alpha _{N,K+1}\):

Given that

we have

Therefore, Eq. 63 can be written as:

For the probability in the denominator,

Combining Eqs. 64 and 65, Eq. 62 is

If \({\varvec{\alpha }}_i^\top {\varvec{v}}=c\) and \(\alpha _{i,K+1}=1\), then Eq. 66 can be simplified as

and if \({\varvec{\alpha }}_i^\top {\varvec{v}}=c\) and \(\alpha _{i,K+1}=0\), Eq. 66 is

Therefore, we can update \(({\varvec{\alpha }}_{i}, \alpha _{i,K+1})\) sequentially to \(({\varvec{\alpha }}_c,\alpha ^*)\) with probability proportional to \(p({\varvec{Y}}_i \vert {\varvec{\alpha }}_i^\top {\varvec{v}}=c,\alpha _{i,K+1}=\alpha ^*,{\varvec{\Omega }},{\varvec{Q}}_{K+1}) \cdot \left( \dfrac{n_{c\alpha ^*,i-1}+1 }{n_{c,i-1}+2} \cdot \dfrac{n_{c}^{\prime }+1}{N+2^K-1}\right) \), for \(c=0,\ldots ,2^K-1\) and \(\alpha ^*=0,1\).

Appendix D

Posterior Distribution of \(\lambda \) in Indian Buffet Process Algorithm

Assume \(\lambda \sim \text {Gamma}(a,b)\). Because \(\lambda \) enters the model only through \({\varvec{Q}}\), its posterior can be updated by

then \(\lambda ^{(t)}\) can be sampled from \(\text {Gamma}(K +a, H_N+b)\), where K is the number of features in the current \({\varvec{Q}}^{(t)}\) and \(H_N\) is the Nth harmonic number.
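The update for \(\lambda \) can be sketched in a few lines of Python. The sketch assumes that \(\text {Gamma}(a,b)\) is parameterized by shape a and rate b, which is the reading under which an Indian buffet process kernel proportional to \(\lambda ^{K}e^{-\lambda H_N}\) is conjugate with the prior; the function names are hypothetical. NumPy's gamma sampler takes a scale argument, so the rate is inverted.

```python
import numpy as np


def harmonic_number(n):
    """H_n = 1 + 1/2 + ... + 1/n."""
    return float(np.sum(1.0 / np.arange(1, n + 1)))


def sample_lambda(K, N, a, b, rng, size=None):
    """Draw lambda from Gamma(shape = K + a, rate = H_N + b).

    Assumes the shape-rate parameterization; NumPy expects scale = 1 / rate.
    """
    shape = K + a
    rate = harmonic_number(N) + b
    return rng.gamma(shape, 1.0 / rate, size=size)
```

Under this parameterization the posterior mean is \((K+a)/(H_N+b)\), so a larger number of active features K pulls \(\lambda \) upward.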

Appendix E

Problem set of Elementary Probability Theory

The twelve questions from the R package pks (Heller and Wickelmaier 2013) are:

1. A box contains 30 marbles in the following colors: 8 red, 10 black, 12 yellow. What is the probability that a randomly drawn marble is yellow?

2. A bag contains 5-cent, 10-cent, and 20-cent coins. The probability of drawing a 5-cent coin is 0.35, that of drawing a 10-cent coin is 0.25, and that of drawing a 20-cent coin is 0.40. What is the probability that the coin randomly drawn is not a 5-cent coin?

3. A bag contains 5-cent, 10-cent, and 20-cent coins. The probability of drawing a 5-cent coin is 0.20, that of drawing a 10-cent coin is 0.45, and that of drawing a 20-cent coin is 0.35. What is the probability that the coin randomly drawn is a 5-cent coin or a 20-cent coin?

4. In a school, 40% of the pupils are boys and 80% of the pupils are right-handed. Suppose that gender and handedness are independent. What is the probability of randomly selecting a right-handed boy?

5. Given a standard deck containing 32 different cards, what is the probability of not drawing a heart?

6. A box contains 20 marbles in the following colors: 4 white, 14 green, 2 red. What is the probability that a randomly drawn marble is not white?

7. A box contains 10 marbles in the following colors: 2 yellow, 5 blue, 3 red. What is the probability that a randomly drawn marble is yellow or blue?

8. What is the probability of obtaining an even number by throwing a dice?

9. Given a standard deck containing 32 different cards, what is the probability of drawing a 4 in a black suit?

10. A box contains marbles that are red or yellow, small or large. The probability of drawing a red marble is 0.70, the probability of drawing a small marble is 0.40. Suppose that the color of the marbles is independent of their size. What is the probability of randomly drawing a small marble that is not red?

11. In a garage there are 50 cars. 20 are black and 10 are diesel powered. Suppose that the color of the cars is independent of the kind of fuel. What is the probability that a randomly selected car is not black and it is diesel powered?

12. A box contains 20 marbles. 10 marbles are red, 6 are yellow and 4 are black. 12 marbles are small and 8 are large. Suppose that the color of the marbles is independent of their size. What is the probability of randomly drawing a small marble that is yellow or red?

Appendix F

Supplementary Simulation Results

Tables 8, 9 and 10 compare the root-mean-squared error (RMSE) of the estimated item parameters for \(\rho =0\) in the cases where K was correctly estimated. The tables show that both the crimp sampler and RJ-MCMC give smaller RMSEs for item parameter estimation than IBP across the sample sizes and values of K considered, and that the crimp sampler is slightly better than RJ-MCMC in the \(K=5\) case. The results for \(\rho =0.25\) are omitted here because the performance was similar. Table 11 reports the performance of inferring K using DIC selection.

About this article

Cite this article

Chen, Y., Liu, Y., Culpepper, S.A. et al. Inferring the Number of Attributes for the Exploratory DINA Model. Psychometrika 86, 30–64 (2021). https://doi.org/10.1007/s11336-021-09750-9

DOI: https://doi.org/10.1007/s11336-021-09750-9