Abstract

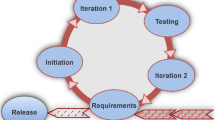

Agile software development methodologies target software projects that are behind schedule or highly likely to have a problematic development phase. In the last decade, Agile methods have evolved from cult techniques into mainstream methodologies. Scrum, an Agile software development method, has been widely adopted because of its adaptive nature. This paper presents a metric that measures the quality of the testing process in Scrum. Because product quality and process quality are correlated, improving test quality can help ensure high-quality products. Drawing on 8 years of successful Scrum implementation at SoftwarePeople, we also describe the Scrum process with an emphasis on testing. The proposed metric, Product Backlog Rating (PBR), takes into account the complexity of the features to be developed in a Scrum iteration, assesses test ratings, and produces a numerical score for the testing process. PBR provides a comprehensive overview of the testing process across a product's development cycle. We present a case study that shows how the metric is used at SoftwarePeople. The case study describes several features developed in a Sprint in terms of their complexity and potential test assessment difficulties, and shows how PBR is calculated during that Sprint. The metric offers insight into the Scrum testing process; however, it needs further evaluation that accounts for associated resources (e.g., quality assurance engineers and the length of the Scrum cycle).
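The abstract does not state the PBR formula. As a purely hypothetical illustration of the general idea of combining feature complexity with test ratings into a single number, the sketch below computes a complexity-weighted average of per-item test ratings for one Sprint. The names `BacklogItem`, `complexity`, `test_rating`, and `weighted_test_score`, the 0 to 1 rating scale, and the choice of a weighted mean are all assumptions made for illustration; this is not the paper's definition of PBR.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class BacklogItem:
    """A hypothetical Sprint backlog item (illustrative only, not from the paper)."""
    name: str
    complexity: float   # assumed positive weight, e.g. a story-point-like estimate
    test_rating: float  # assumed rating of how well the item was tested, in [0, 1]


def weighted_test_score(items: Iterable[BacklogItem]) -> float:
    """Return a complexity-weighted mean of test ratings.

    Illustrative aggregate only; it is NOT the PBR formula from the paper,
    which the abstract does not give.
    """
    items = list(items)
    if not items:
        raise ValueError("a Sprint needs at least one backlog item")
    total_weight = 0.0
    weighted_sum = 0.0
    for item in items:
        if item.complexity <= 0:
            raise ValueError(f"complexity must be positive: {item.name}")
        if not 0.0 <= item.test_rating <= 1.0:
            raise ValueError(f"test_rating must be in [0, 1]: {item.name}")
        # More complex items contribute more weight to the overall score.
        total_weight += item.complexity
        weighted_sum += item.complexity * item.test_rating
    return weighted_sum / total_weight


if __name__ == "__main__":
    # Hypothetical Sprint with one simple, well-tested item
    # and one complex, partially tested item.
    sprint = [
        BacklogItem("login form", complexity=2, test_rating=1.0),
        BacklogItem("data migration", complexity=8, test_rating=0.5),
    ]
    print(round(weighted_test_score(sprint), 2))  # 0.6
```

With this weighting, a poorly tested complex item lowers the score more than a poorly tested simple one, which is one plausible way to read the abstract's pairing of feature complexity with test ratings.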

Author information

Additional information

Imrul Kayes: Most of the work was done when the author was a software engineer at SoftwarePeople.

About this article

Cite this article

Kayes, I., Sarker, M. & Chakareski, J. Product backlog rating: a case study on measuring test quality in scrum. Innovations Syst Softw Eng 12, 303–317 (2016). https://doi.org/10.1007/s11334-016-0271-0

DOI: https://doi.org/10.1007/s11334-016-0271-0