Abstract

We discuss estimation problems where a polynomial \(s\rightarrow \sum _{i=0}^\ell \vartheta _i s^i\) with strictly positive leading coefficient is observed under Ornstein–Uhlenbeck noise over a long time interval. We prove local asymptotic normality (LAN) and specify asymptotically efficient estimators. We apply this to the following problem: feeding noise \(dY_t\) into the classical (deterministic) Hodgkin–Huxley model in neuroscience, with \(Y_t=\vartheta t + X_t\) and X an Ornstein–Uhlenbeck process with backdriving force \(\tau \), we obtain asymptotically efficient estimators for the pair \((\vartheta ,\tau )\); based on observation of the membrane potential up to time n, the estimator for \(\vartheta \) converges at rate \(\sqrt{n^3\,}\).


1 Introduction

Problems of parametric inference in which a process Y of type

with unknown parameters \((\vartheta _1,\ldots ,\vartheta _m)\) or \((\vartheta _1,\ldots ,\vartheta _m,\tau )\) and with \((f_1,\ldots ,f_m)\) a given set of functions is observed over a long time interval have been considered in a number of papers; alternatively, such models can be written as

with related functions \((g_1,\ldots ,g_m)\). Also, the driving Brownian motion W in the Ornstein–Uhlenbeck type equations above has been replaced by certain Lévy processes or by fractional Brownian motion. Many papers focus on orthonormal sets of periodic functions \([0,\infty )\rightarrow \mathbb {R}\) with known periodicity. To determine estimators and limit laws for rescaled estimation errors in this case, periodicity allows one to exploit ergodicity or stationarity with respect to the time grid of multiples of the period. We mention Dehling et al. (2010), Franke and Kott (2013) and Dehling et al. (2017), where limit distributions for least squares estimators and maximum likelihood estimators are obtained. Rather than asymptotic properties, Pchelintsev (2013) studies methods which reduce the squared risk (i.e. risk defined with respect to one particular loss function) uniformly over specified subsets of the parameter space, at fixed and finite sample size. Asymptotic efficiency of estimators is the topic of Höpfner and Kutoyants (2009), where sums \(\sum \vartheta _j f_j\) as above are replaced by periodic functions S of known periodicity whose shape depends on parameters \((\vartheta _1,\ldots ,\vartheta _m)\). When the parametrization is smooth enough, local asymptotic normality in the sense of Le Cam (see Le Cam 1969; Hajek 1970; Davies 1985; Pfanzagl 1994; Le Cam and Yang 2002; with a different notion of local neighbourhood see Ibragimov and Khasminskii (1981) and Kutoyants (2004)) allows one to identify a limit experiment with the following property: asymptotically as the time of observation tends to \(\infty \), and with some uniformity over small neighbourhoods of the true parameter, risk is bounded below by a corresponding minimax risk in the limit experiment. This assertion holds with respect to a broad class of loss functions.

With a view to an estimation problem which arises in stochastic Hodgkin–Huxley models and which we explain below, the present paper deals with parameter estimation when one observes a process Y

with leading coefficient \(\vartheta _p>0\) so that paths of Y almost surely tend to \(\infty \). Then good estimators for the parameters based on observation of Y up to time n show the following behaviour: whereas parameters \(\tau \) and \(\vartheta _0\) can be estimated at the 'usual' rate \(\sqrt{n\,}\), parameters \(\vartheta _j\) with \(1\le j\le p\) can be estimated at rate \(\sqrt{n^{2j+1}\,}\) as \(n\rightarrow \infty \). With rescaled time \((tn)_{t\ge 0}\), we prove local asymptotic normality as \(n\rightarrow \infty \) in the sense of Le Cam with local scale

and with limit information process \(J=(J_t)_{t\ge 0}\)

at every \(\theta \,{:}{=}\,(\vartheta _0,\ldots ,\vartheta _p,\tau )\). As a consequence of local asymptotic normality, there is a local asymptotic minimax theorem (Ibragimov and Khasminskii 1981; Davies 1985; Le Cam and Yang 2002; Kutoyants 2004; Höpfner 2014) which allows one to identify optimal limit distributions for rescaled estimation errors in the statistical model (1); the theorem also specifies a particular expansion of rescaled estimation errors (in terms of the central sequence in local experiments at \(\theta \)) which characterizes asymptotic efficiency. We construct asymptotically efficient estimators for the model (1), and these estimators have a simple and explicit form.
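For orientation, here is the local scale, the limit information and the limit martingale as we read them off from the rates just stated and from the block structure described in the proof of proposition 1, in the notation of Sect. 3 (where \(\sqrt{c}\) is the known noise level of the Ornstein–Uhlenbeck part and \(\widetilde{J}\) is the matrix used in that proof). This is our reconstruction, not a verbatim copy of displays (25), (28) and (29):

```latex
\psi_n = \operatorname{diag}\big( n^{-1/2},\, n^{-3/2},\, \ldots,\, n^{-(2p+1)/2},\; n^{-1/2} \big),
\qquad
\widetilde{J}(t) = \Big( \frac{t^{\,i+j+1}}{i+j+1} \Big)_{0 \le i,j \le p},

J(t) = \begin{pmatrix} \dfrac{\tau^2}{c}\, \widetilde{J}(t) & 0 \\[4pt] 0 & \dfrac{t}{2\tau} \end{pmatrix},
\qquad
S(t) = \Big( \frac{\tau}{\sqrt{c}} \int_0^t s^0 \, d\widetilde{W}^{(1)}_s,\; \ldots,\;
             \frac{\tau}{\sqrt{c}} \int_0^t s^p \, d\widetilde{W}^{(1)}_s,\;
             \frac{1}{\sqrt{2\tau}}\, \widetilde{W}^{(2)}_t \Big)^{\!\top}.
```

The diagonal entries of \(\psi_n\) encode the rates \(\sqrt{n^{2j+1}}\) for \(\vartheta_j\) and \(\sqrt{n}\) for \(\tau\); by Itô's isometry the angle bracket of S is J, and the block-diagonal form of J reflects that the \(\vartheta\)- and \(\tau\)-components are asymptotically independent.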

We turn to an application of the results obtained for model (1). Consider the problem of parameter estimation in a stochastic Hodgkin–Huxley model for the spiking behaviour of a single neuron belonging to an active network

where input \(dY_t\) received by the neuron is modelled by the increments of the stochastic process

The functions F(., ., ., .) and \(\alpha _j(.)\), \(\beta _j(.)\), \(j\in \{n,m,h\}\) are those of Izhikevich (2007) pp. 37–39. The stochastic model (2) extends the classical deterministic model with constant rate of input \(a>0\)

by taking into account 'noise' in the dendritic tree, where incoming excitatory or inhibitory spike trains emitted by a large number of other neurons in the network add up and decay. See Hodgkin and Huxley (1952), Izhikevich (2007), Ermentrout and Terman (2010) and the literature quoted there for the role of this model in neuroscience. Stochastic Hodgkin–Huxley models have been considered in Höpfner et al. (2016a, b), (2017) and Holbach (2020). For suitable data sets, membrane potential data hint at the existence of a nonvanishing quadratic variation, which indicates the need for a stochastic model.

In systems (2) or (4), the variable \(V=(V_t)_{t\ge 0}\) represents the membrane potential in the neuron; the variables \(j=(j_t)_{t\ge 0}\), \(j\in \{n,m,h\}\), are termed gating variables and represent—in the sense of averages over a large number of channels—opening and closing of ion channels of certain types. The membrane potential can be measured intracellularly in good time resolution whereas the gating variables in the Hodgkin–Huxley model are not accessible to direct measurement.

In the sense of equivalence of experiments as in Holbach (2019), the stochastic Hodgkin–Huxley model (2)+(3) corresponds to a submodel of (1). This is of biological importance: under the assumption that the stochastic model admits a fixed starting point which does not depend on \(\theta \,{:}{=}\,(\vartheta ,\tau )\), we can estimate the components \(\vartheta >0\) and \(\tau >0\) of the unknown parameter \(\theta =(\vartheta ,\tau )\) in equations (2)+(3) from the evolution of the membrane potential alone, and we have at our disposal simple and explicit estimators \(\breve{\theta }(n)=( \breve{\vartheta }(n) , \breve{\tau }(n) )\) with the following two properties (i) and (ii).

(i) With local parameter \(h=(h_1,h_2)\) parametrizing shrinking neighbourhoods of \(\theta =(\vartheta ,\tau )\), risks

converge as \(n\rightarrow \infty \) to

where \(\widetilde{W}=( \widetilde{W}^{(1)}, \widetilde{W}^{(2)} )\) is two-dimensional standard Brownian motion. Here C is an arbitrary constant, and \(L:\mathbb {R}^2\rightarrow [0,\infty )\) any loss function which is continuous, subconvex and bounded.

(ii) We can compare the sequence of estimators \(\breve{\theta }(n)=(\breve{\vartheta }(n),\breve{\tau }(n))\) for \(\theta =(\vartheta ,\tau )\) in (5) to arbitrary estimator sequences \(T(n)=( T^{(1)}(n) , T^{(2)}(n) )\) which can be defined from observation of the membrane potential up to time n, provided their rescaled estimation errors—using the same norming as in (5)—are tight. For all such estimator sequences,

is always greater than or equal to the limit in (6). This is the assertion of the local asymptotic minimax theorem. It ensures that, asymptotically as \(n\rightarrow \infty \), it is impossible to outperform the simple and explicit estimator sequence \(\breve{\theta }(n)=(\breve{\vartheta }(n),\breve{\tau }(n))\) which we have at hand.

The paper is organized as follows. Section 2 collects for later use convergence results for certain functionals of the Ornstein–Uhlenbeck process. Section 3 deals with local asymptotic normality (LAN) for the model (1): proposition 1 and theorem 1 in Sect. 3.1 prove LAN, and the local asymptotic minimax theorem is corollary 1 in Sect. 3.1; we introduce and investigate estimators for \(\theta =(\vartheta ,\tau )\) in Sects. 3.2 and 3.3, and theorem 2 in Sect. 3.4 states their asymptotic efficiency. The application to parameter estimation in the stochastic Hodgkin–Huxley model (2)+(3) based on observation of the membrane potential is the topic of the final Sect. 4: see theorem 3 and corollary 2 there.

2 Functionals of the Ornstein–Uhlenbeck process

We state for later use properties of some functionals of the Ornstein–Uhlenbeck process

\[ dX_t = -\tau X_t\, dt + \sigma \, dW_t , \qquad X_0 = x_0 \tag{7} \]

with fixed starting point \(x_0 \in \mathbb {R}\). The constants \(\tau >0\) and \(\sigma >0\) are fixed, \(\,\nu \,{:}{=}\,\mathcal {N}(0,\frac{\sigma ^2}{2\tau })\,\) is the invariant measure of the process in (7), and \(\,X\) is defined on some \((\Omega ,\mathcal {A},P)\).

Lemma 1

For X defined by (7), for every \(f\in L^1(\nu )\) and \(\ell \in \mathbb {N}\), we have almost sure convergence as \(r\rightarrow \infty \)

\[ \frac{\ell }{r^{\ell }} \int _0^r s^{\ell -1} f(X_s)\, ds \;\longrightarrow \; \nu (f) . \]

Proof

(Höpfner and Kutoyants 2011, lemma 2.2; Holbach 2019, lemma 2.5, compare to Bingham et al. 1987 theorem 1.6.4 p. 33)

(1) We consider functions \(f\in L^1(\nu )\) which satisfy \(\nu (f)\ne 0\). The case \(\ell =1\) is the well-known ratio limit theorem for additive functionals of the ergodic diffusion X (Kutoyants 2004; Höpfner 2014 p. 214). Assuming that the assertion holds for \(\ell =\ell _0\in \mathbb {N}\), define \(\,A_r =\int _0^r s^{\ell _0-1} f(X_s)\, ds\,\). The Stieltjes product formula for semimartingales with paths of locally bounded variation yields

\[ \int _0^r s^{\ell _0} f(X_s)\, ds = \int _0^r s\, dA_s = r\, A_r - \int _0^r A_s\, ds . \]

Under our assumption, both terms on the right hand side are of order \(O(r^{\ell _0+1})\) almost surely: since \(\,\frac{\ell _0}{s^{\ell _0}} A_s\,\) converges to \(\,\nu (f)\ne 0\,\) almost surely as \(s\rightarrow \infty \), the second term on the right hand side behaves as \(\,\nu (f)\int _0^r \frac{s^{\ell _0}}{\ell _0} ds = \nu (f)\frac{r^{\ell _0+1}}{\ell _0(\ell _0+1)}\,\) as \(r\rightarrow \infty \); the first term on the right hand side behaves as \(\nu (f) \frac{r^{\ell _0+1}}{\ell _0}\). Hence the left hand side behaves as \(\,\nu (f)\, r^{\ell _0+1}\big (\frac{1}{\ell _0}-\frac{1}{\ell _0(\ell _0+1)}\big ) = \nu (f)\frac{r^{\ell _0+1}}{\ell _0+1}\,\), which proves the assertion for \(\ell _0+1\).

(2) We consider functions \(f\in L^1(\nu )\) such that \(\nu (f)=0\), \(f\ne 0\). Writing \(f^+\ge 0\) for the positive part of f and \(f^-\ge 0\) for the negative part, we have \(f=f^+-f^-\) and \(\alpha \,{:}{=}\,\nu (f^+)=\nu (f^-)>0\). For N arbitrarily large but fixed, step (1) applied to functions \(\,h_N\,{:}{=}\, [f^+ - (1{-}\frac{1}{N}) f^-]\,\) and \(\,g_N\,{:}{=}\, [(1{-}\frac{1}{N}) f^+ - f^- ]\,\) yields almost sure convergence

as \(r\rightarrow \infty \). Thus, letting N tend to \(\infty \), comparison of trajectories

establishes the desired result for functions \(f\in L^1(\nu )\) with \(\nu (f)=0\). \(\square \)
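Lemma 1 can be illustrated numerically. The sketch below is an illustration only, not part of the argument: it simulates (7) with the exact Gaussian one-step recursion \(X_{t+h}=e^{-\tau h}X_t+\sigma\sqrt{(1-e^{-2\tau h})/(2\tau)}\,Z\), approximates \(\frac{\ell}{r^\ell}\int_0^r s^{\ell-1}f(X_s)\,ds\) by a left Riemann sum, and compares the result with \(\nu(f)=\sigma^2/(2\tau)\) for \(f(x)=x^2\). Step size, horizon, seed and the function names are our own choices.

```python
import math
import random


def simulate_ou(tau, sigma, x0, h, n_steps, rng):
    """Exact Gaussian recursion for dX = -tau X dt + sigma dW on a grid of mesh h."""
    phi = math.exp(-tau * h)                                   # e^{-tau h}
    sd = sigma * math.sqrt((1.0 - phi * phi) / (2.0 * tau))    # sd of the one-step innovation
    path = [x0]
    for _ in range(n_steps):
        path.append(phi * path[-1] + sd * rng.gauss(0.0, 1.0))
    return path


def weighted_average(path, h, ell, f):
    """Left Riemann sum for (ell / r**ell) * int_0^r s**(ell - 1) f(X_s) ds, r = h * n_steps."""
    r = h * (len(path) - 1)
    total = sum((k * h) ** (ell - 1) * f(path[k]) for k in range(len(path) - 1)) * h
    return ell / r ** ell * total


if __name__ == "__main__":
    tau, sigma, h = 1.0, 1.0, 0.05
    path = simulate_ou(tau, sigma, 0.0, h, 40_000, random.Random(1))   # horizon r = 2000
    nu_f = sigma ** 2 / (2 * tau)                                     # nu(f) for f(x) = x**2
    for ell in (1, 2, 3):
        print(ell, round(weighted_average(path, h, ell, lambda x: x * x), 3), "target", nu_f)
```

With \(f(x)=x\), where \(\nu(f)=0\), the same weighted averages drift towards 0, which is the situation treated in step (2) of the proof.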

Lemma 2

For X as above we have for every \(\ell \in \mathbb {N}\)

Proof

This is integration by parts

and lemma 1 (with \(f(x)=x\) and \(\nu =\mathcal {N}(0,\frac{\sigma ^2}{2\tau })\)) applied to the right hand side. \(\square \)

Lemma 3

For X defined by (7), for every \(\ell \in \mathbb {N}_0\), we have convergence in law

as \(n\rightarrow \infty \) to the limit

where B is standard Brownian motion.

Proof

Rearranging SDE (7) we write

and have for every \(\ell \in \mathbb {N}_0\)

In case \(\ell =0\), the right hand side is \(-(X_r-X_0)+\sigma W_r\), and the scaling property of Brownian motion combined with ergodicity of X yields weak convergence as asserted. In case \(\ell \ge 1\), lemma 2 transforms the first term on the right hand side of (9), and we have

The martingale convergence theorem (Jacod and Shiryaev 1987, VIII.3.24) shows that

converges weakly in the Skorohod path space \(D([0,\infty ),\mathbb {R})\) to a continuous limit martingale with angle bracket \(\,t \,\rightarrow \, \frac{1}{2\ell +1}t^{2\ell +1}\,\), i.e. to

Scaled in the same way, the first term on the right hand side of (10)

is negligible in comparison to (11), uniformly on compact t-intervals, by ergodicity of X. \(\square \)
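For \(\ell=0\), rearranging (7) gives \(\tau\int_0^r X_s\,ds=-(X_r-X_0)+\sigma W_r\), so \(n^{-1/2}\int_0^n X_s\,ds\) should be approximately \(\mathcal{N}(0,\sigma^2/\tau^2)\) for large n. The following Monte Carlo sketch (our own illustration; path count, grid, horizon and seed are arbitrary choices) estimates this variance.

```python
import math
import random
import statistics


def scaled_ou_integral(tau, sigma, n, h, rng):
    """One draw of n**-0.5 * int_0^n X_s ds for an OU path with X_0 = 0 (left Riemann sum)."""
    phi = math.exp(-tau * h)
    sd = sigma * math.sqrt((1.0 - phi * phi) / (2.0 * tau))    # exact one-step innovation sd
    x, integral = 0.0, 0.0
    for _ in range(int(round(n / h))):
        integral += x * h
        x = phi * x + sd * rng.gauss(0.0, 1.0)
    return integral / math.sqrt(n)


def mc_variance(tau, sigma, n=100.0, h=0.1, n_paths=800, seed=3):
    """Monte Carlo variance of the scaled integral; the identity above suggests sigma**2 / tau**2."""
    rng = random.Random(seed)
    draws = [scaled_ou_integral(tau, sigma, n, h, rng) for _ in range(n_paths)]
    return statistics.pvariance(draws)
```

For example, `mc_variance(2.0, 1.0)` should come out near \(\sigma^2/\tau^2=0.25\), up to Monte Carlo error of a few percent.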

Lemma 4

(a) For every \(\ell \in \mathbb {N}\) we have an expansion

where \(\lim \limits _{n\rightarrow \infty } \rho _\ell (n) = 0\,\) almost surely. In case \(\ell =0\) we have

(b) For every \(\ell \in \mathbb {N}\), we have joint weak convergence as \(n\rightarrow \infty \)

with limit law

Proof

Part (a) is (10) plus scaling as in the proof of lemma 3. For different \(\ell \in \mathbb {N}_0\), the expansions (12) hold with respect to the same driving Brownian motion W from SDE (7): this gives (b). \(\square \)
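Lemma 4 (b) rests on the fact that all expansions are driven by the same Brownian motion W, so the Gaussian limits for different \(\ell\) are correlated. By Itô's isometry the integrals \(I_\ell=\int_0^1 s^\ell\,dB_s\) have covariance \(\int_0^1 s^{i+j}\,ds=\frac{1}{i+j+1}\); the diagonal matches the angle bracket \(\frac{1}{2\ell+1}t^{2\ell+1}\) at \(t=1\) from the proof of lemma 3, and the whole matrix is \(\widetilde{J}(1)\) of Sect. 3.1. The sketch compares this exact covariance with a Monte Carlo estimate; it illustrates the covariance structure only and is not a reconstruction of the limit law displayed in (b). Function names and sizes are our own.

```python
import math
import random


def hilbert(p):
    """Exact covariance from the Itô isometry: E[I_i I_j] = int_0^1 s^(i+j) ds = 1/(i+j+1)."""
    return [[1.0 / (i + j + 1) for j in range(p + 1)] for i in range(p + 1)]


def mc_covariance(p, n_steps=100, n_paths=2000, seed=7):
    """Monte Carlo second moments of I_ell = int_0^1 s^ell dB_s, ell = 0..p (left-point Itô sums)."""
    rng = random.Random(seed)
    dt = 1.0 / n_steps
    acc = [[0.0] * (p + 1) for _ in range(p + 1)]
    for _ in range(n_paths):
        ints = [0.0] * (p + 1)
        for k in range(n_steps):
            db = rng.gauss(0.0, math.sqrt(dt))       # Brownian increment on [k dt, (k+1) dt]
            for ell in range(p + 1):
                ints[ell] += (k * dt) ** ell * db
        for i in range(p + 1):
            for j in range(p + 1):
                acc[i][j] += ints[i] * ints[j] / n_paths   # means are 0, so this estimates covariance
    return acc


if __name__ == "__main__":
    est, exact = mc_covariance(2), hilbert(2)
    for row_est, row_exact in zip(est, exact):
        print([round(x, 3) for x in row_est], [round(x, 3) for x in row_exact])
```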

3 The statistical model of interest

Consider now a more general problem of parameter estimation from continuous-time observation of

where \(R(\cdot )\) is a sufficiently smooth deterministic function which depends on some finite-dimensional parameter \(\vartheta \), and where the Ornstein Uhlenbeck process \(X=(X_t)_{t\ge 0}\), unique strong solution to

depends on a parameter \(\tau >0\). The starting point \(X_0\equiv x_0\) is deterministic. Then Y solves the SDE

where S depending on \(\vartheta \) and \(\tau \) is given by

Conversely, if a process Y is solution to an SDE of type (16), then solving \(\,{R'} =-\tau R + S\,\) with suitable initial point \(r_0\) we get a representation (14) for Y where

For examples of parametric models of this type, see e.g. Dehling et al. (2010), Franke and Kott (2013), Höpfner and Kutoyants (2009), Pchelintsev (2013), and example 2.3 in Höpfner and Kutoyants (2011). The constant \(c>0\) in (15) is fixed and known: observing the trajectory of Y continuously in time, \(\,\langle Y\rangle _t = c\, t\,\) equals the limit in probability \(\lim \limits _{n\rightarrow \infty }\sum _{j=1}^{2^n} ( Y_{t j /2^n }-Y_{ t (j-1) / 2^n } )^2\) of squared increments; thus c can be determined almost surely from the observed path and is not treated as a parameter.
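To illustrate why c is not a parameter, the sketch below simulates a polynomial plus an Ornstein–Uhlenbeck process with noise level \(\sqrt{c}\) on \([0,t]\) and evaluates the dyadic sum of squared increments from the text, which should be close to \(c\,t\). Parameter values, grid size and helper names are hypothetical choices for the illustration.

```python
import math
import random


def simulate_y(theta, tau, c, t_end, n_incr, rng):
    """Grid values of Y_t = sum_j theta[j] t**j + X_t, dX = -tau X dt + sqrt(c) dW, X_0 = 0."""
    h = t_end / n_incr
    phi = math.exp(-tau * h)
    sd = math.sqrt(c * (1.0 - phi * phi) / (2.0 * tau))   # exact one-step OU innovation sd
    x, ys = 0.0, []
    for k in range(n_incr + 1):
        t = k * h
        ys.append(sum(th * t ** j for j, th in enumerate(theta)) + x)
        x = phi * x + sd * rng.gauss(0.0, 1.0)
    return ys


def realized_qv(ys):
    """Sum of squared increments over the grid; approximates <Y>_t = c t for a fine grid."""
    return sum((b - a) ** 2 for a, b in zip(ys, ys[1:]))


if __name__ == "__main__":
    ys = simulate_y([1.0, 0.5, 0.2], tau=1.0, c=2.0, t_end=1.0, n_incr=2 ** 16, rng=random.Random(11))
    print(realized_qv(ys))   # close to c * t = 2.0
```

The polynomial part contributes only \(O(2^{-n})\) to the sum, so neither \(\vartheta\) nor \(\tau\) affects the limit; only c does.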

We wish to estimate the unknown parameter \(\theta \,{:}{=}\,(\vartheta ,\tau )\) based on time-continuous observation of Y in (14) over a long time interval, in the model

with real coefficients where we assume a leading coefficient \(\,\vartheta _p>0\,\) such that trajectories of Y tend to \(\infty \) almost surely as \(t\rightarrow \infty \). Thus our parametrization is

and in SDE (16) which governs the observation Y, \(\,S\) depending on \(\theta =(\vartheta ,\tau )\) has the form

3.1 Local asymptotic normality for the model (14)+(19)

Let \(C\,{:}{=}\,C([0,\infty ),\mathbb {R})\) denote the canonical path space for continuous processes; with \(\pi = (\pi _t)_{t\ge 0}\) the canonical process (i.e. \(\pi _t(f)=f(t)\) for \(f\in C\), \(t\ge 0\)) and \(\mathcal {C}=\sigma (\pi _t:t\ge 0)\),

is the canonical filtration. Let \(Q_\theta \) denote the law on \((C,\mathcal {C},\mathbb {G})\) of the process Y in (14) under \(\theta \in \Theta \), cf. (20). By (14), (15), (16), (19) and (21), the canonical process \(\pi =(\pi _t)_{t\ge 0}\) on \((C,\mathcal {C})\) under \(Q_\theta \) solves

For pairs \(\theta '\ne \theta \) in \(\Theta \), probability measures \(Q_{\theta '}\), \(Q_\theta \) are locally equivalent relative to \(\mathbb {G}\), and we write

With \(m^{\pi ,\theta }=\sqrt{c\,}\, W\) the martingale part of \(\pi \) under \(\theta \), the likelihood ratio process of \(Q_{\theta '}\) w.r.t. \(Q_\theta \) relative to \(\mathbb {G}\) (Liptser and Shiryaev 2001; Ibragimov and Khasminskii 1981; Jacod and Shiryaev 1987; Kutoyants 2004; Höpfner 2014 p. 162) is

In the integrand,

so we exploit (14) to write for short

where X under \(\theta =(\vartheta ,\tau )\) is the Ornstein Uhlenbeck process (15), and where

Localization at \(\theta \in \Theta \) will be as follows: with notation

we insert

in place of \(\theta '\) into (23); finally we rescale time. Define

With local parameter h and local scale (25) at \(\theta \), we obtain from (23)+(24)

where \(\rho _{n,\theta ,h}\) is some process of remainder terms, \(\,S_{n,\theta }\) a martingale with respect to \(Q_\theta \) and \((\mathcal {G}_{tn})_{t\ge 0}\)

(again by (14), \(X_s\) stands for \(\pi _s-R_\theta (s)\) under \(\theta \)), and \(\,J_{n,\theta }\) the angle bracket of \(\,S_{n,\theta }\) under \(\theta \).

Proposition 1

(a) For fixed \(0<t<\infty \), components of \(J_{n,\theta }(t) \) converge \(Q_\theta \)-almost surely as \(n\rightarrow \infty \) to those of the deterministic process

For every \(0<t<\infty \), the matrix J(t) is invertible.

(b) Let \(\widetilde{W}\) denote a two-dimensional standard Brownian motion with components \(\widetilde{W} ^{(1)}\) and \(\widetilde{W} ^{(2)}\). In the cadlag path space \(D=D([0,\infty ),\mathbb {R}^{p+2})\) (Jacod and Shiryaev 1987, chapters VI and VIII), martingales \(S_{n,\theta }\) under \(Q_\theta \) converge weakly as \(n\rightarrow \infty \) to the limit martingale

Proof

The proof is in several steps. (1) We specify the angle bracket (or predictable quadratic covariation) process \(J_{n,\theta }\) of \(S_{n,\theta }\) under \(Q_\theta \). Its state at time t

is a symmetric matrix of size \((p{+}2){\times }(p{+}2)\). Taking into account the norming factor in front of \(S_{n,\theta }\) in (27) we consider throughout \(cJ_{n,\theta }\). The entries are given as follows. We have

for all \(1\le i,j \le p\). In the first line of \(cJ_{n,\theta }(t)\) we have

in first and last position, and in-between for \(1\le j\le p\)

For the last column of \(cJ_{n,\theta }(t)\), the first entry \(cJ_{n,\theta }^{(0,p+1)}(t)\) has been given above, the last entry is

in-between we have for \(1\le j\le p\)

It remains to consider the three integrals which are not deterministic: here lemma 1 establishes almost sure convergence

as \(n\rightarrow \infty \) under \(Q_\theta \). This proves almost sure convergence of the components of \(J_{n,\theta }(t)\) to the corresponding components of J(t) defined in (28).

(2) We prove that for every \(0<t<\infty \), the matrix J(t) defined in (28) is invertible. For this it is sufficient to check invertibility of \((p{+}1){\times }(p{+}1)\) matrices

which up to the factor \(\frac{\tau ^2}{c}\) represent the upper left block in J(t). We have to show that \(\,\min \limits _{|u|=1} u^\top \widetilde{J}(t)\, u\,\) is strictly positive. In case \(t=1\), \(\widetilde{J}(1)=(\frac{1}{i+j+1})_{0\le i,j\le p}\) is the Gram matrix \(\big (\int _0^1 s^i s^j\, ds\big )_{i,j}\) of the linearly independent functions \(1,s,\ldots ,s^p\) in \(L^2([0,1])\); hence it is positive definite, and the assertion holds. For \(t\ne 1\), associate \(\,v(u) = \sqrt{t\,}\, (\,u_i\, t^i\,)_{0\le i\le p}\,\) to \(u\in \mathbb {R}^{p+1}\). Then we have \(\,u^\top \widetilde{J}(t)\, u = v(u)^\top \widetilde{J}(1)\, v(u)\,\) and \(\,|v(u)|^2\ge t\min (1,t^{2p})\,|u|^2\,\), so that \(\,\min \limits _{|u|=1} u^\top \widetilde{J}(t)\, u \ge t\min (1,t^{2p})\, \min \limits _{|v|=1} v^\top \widetilde{J}(1)\, v>0\,\). Part (a) of the proposition is proved.

(3) As an auxiliary step, we determine a martingale \(\widetilde{S}\) which admits \(\widetilde{J}\) defined in (30)

as its angle bracket. In integral representation we have

where \(\Psi _s\) is a square root

for the matrix \((s^{i+j})_{i,j=0,\ldots ,p}\) (note that for fixed s, this matrix is not invertible). From this representation for the angle brackets \(\widetilde{J}\) we obtain a representation of the martingale \(\widetilde{S}\) (Ikeda and Watanabe 1989, theorem 7.1’ on p. 90):

with some \((p{+}1)\)-dimensional standard Brownian motion

Given the simple structure of the \(\Psi _s\), we can define a new one-dimensional Brownian motion \(\widetilde{W}^{(1)}\) by

and end up with

(4) Now we can determine the martingale S which admits J defined in (28) as angle bracket. Since J(t) has a block-diagonal structure, where (up to multiplication with a constant in each block) the upper left block has been considered in step 3, whereas for the lower right block we have

\(Q_\theta \)-almost surely by lemma 1, the desired representation is

with \(\widetilde{W}^{(1)}\) from (31), and with another one-dimensional Brownian motion \(\widetilde{W}^{(2)}\) which is independent of \(\widetilde{W}^{(1)}\). This is the form appearing in (29) of the proposition.

(5) On the basis of part (a) of the proposition, the martingale convergence theorem (Jacod and Shiryaev 1987, VIII.3.24) establishes weak convergence (in the path space \(D([0,\infty ), \mathbb {R}^{p+2})\), under \(Q_\theta \), as \(n\rightarrow \infty \)) of the martingales \(S_{n,\theta }\) under \(Q_\theta \) to the limit martingale S which has been determined in step (4). This finishes the proof of proposition 1. \(\square \)
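Step (2) of the proof rests on the identity \(u^\top\widetilde{J}(t)\,u=v(u)^\top\widetilde{J}(1)\,v(u)\) with \(v(u)=\sqrt{t}\,(u_i t^i)_i\). The sketch below checks it in exact rational arithmetic (writing \(v(u)=\sqrt{t}\,w\) to avoid square roots, so that the identity reads \(u^\top\widetilde{J}(t)\,u=t\,w^\top\widetilde{J}(1)\,w\)), and checks positive definiteness of \(\widetilde{J}(1)\) through the pivots of symmetric Gaussian elimination, which are all positive exactly when the matrix is positive definite (Sylvester's criterion). Function names are our own.

```python
from fractions import Fraction


def jtilde(t, p):
    """J~(t) = (t**(i+j+1) / (i+j+1)) for i, j = 0..p, as exact rationals."""
    t = Fraction(t)
    return [[t ** (i + j + 1) / (i + j + 1) for j in range(p + 1)] for i in range(p + 1)]


def quad_form(m, u):
    """u^T m u for a square matrix m given as a list of rows."""
    k = len(u)
    return sum(u[i] * m[i][j] * u[j] for i in range(k) for j in range(k))


def elimination_pivots(m):
    """Pivots of symmetric Gaussian elimination; all are > 0 iff m is positive definite."""
    a = [list(row) for row in m]
    k = len(a)
    pivots = []
    for col in range(k):
        piv = a[col][col]
        pivots.append(piv)
        if piv <= 0:
            break                                  # not positive definite: stop early
        for r in range(col + 1, k):
            f = a[r][col] / piv
            for c in range(col, k):
                a[r][c] -= f * a[col][c]
    return pivots


if __name__ == "__main__":
    t, u = Fraction(7, 3), [Fraction(1), Fraction(-2), Fraction(5, 4)]
    w = [u[i] * t ** i for i in range(len(u))]     # v(u) = sqrt(t) * w
    print(quad_form(jtilde(t, 2), u) == t * quad_form(jtilde(1, 2), w))   # True
    print(elimination_pivots(jtilde(1, 4)))        # five positive rationals
```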

As a consequence of proposition 1, we obtain local asymptotic normality (Le Cam 1969; Hajek 1970; Ibragimov and Khasminskii 1981; Davies 1985; Le Cam and Yang 2002; Pfanzagl 1994; Kutoyants 2004; Höpfner 2014, section 7.1).

Theorem 1

(a) At \(\theta \in \Theta \), with local scale at \(\theta \) given by \((\psi _n)_n\) from (25), quadratic expansions

hold for arbitrary bounded sequences \((h_n)_n\) in \(\mathbb {R}^{p+2}\); since \(\Theta \) is open, \(\,\theta +\psi _n h_n\) belongs to \(\Theta \) for n large enough. Eventually as \(n\rightarrow \infty \), \(\,J_{n,\theta }(1)\) takes its values in the set of invertible \((p{+}2){\times }(p{+}2)\)-matrices, \(Q_\theta \)-almost surely.

(b) For every \(\theta \in \Theta \), we have weak convergence in \(D([0,\infty ), \mathbb {R}^{(p+2)}{\times }\mathbb {R}^{{(p+2)}\times {(p+2)}})\) as \(n\rightarrow \infty \)

with S the martingale in (29) and J its angle bracket in (28).

(c) There is a Gaussian shift limit experiment \(\mathcal {E}(S,J)\) with likelihood ratios

Proof

(1) As a first step, weak convergence of \(S_{n,\theta }\) to S under \(Q_\theta \) in proposition 1 implies (Jacod and Shiryaev 1987, theorem VI.6.1) joint weak convergence of the martingale together with its angle bracket. This is part (b) of the theorem. For \(0<t<\infty \) fixed, invertibility of \(J_{n,\theta }(t)\), \(Q_\theta \)-almost surely for sufficiently large n, follows from invertibility of J(t) and componentwise almost sure convergence \(J_{n,\theta }(t) \rightarrow J(t)\) by proposition 1.

(2) We can represent the limit experiment \(\mathcal {E}(S,J)\) in (c) as \(\{ \mathcal {N}( J(1)h , J(1) ) : h \in \mathbb {R}^{p+2} \}\).

(3) Fix a bounded sequence \((h_n)_n\) in \(\mathbb {R}^{p+2}\), take n large enough so that \(\theta +\psi _n h_n\) is in \(\Theta \), and define

Using notation \(\theta '(n,h)=\theta +\psi _n h\) as in (23)–(26), we split \(\,\theta '(n,h_n){=}{:}(\vartheta '(n,h_n),\tau '(n,h_n))\,\) into a block \(\,\vartheta '(n,h_n)=( \vartheta '_0(n,h_n) , \ldots , \vartheta '_p(n,h_n) )\,\) and the last component \(\,\tau '(n,h_n)\). We write \(h_{n,0}, h_{n,1}, \ldots , h_{n,p+1}\) for the components of the local parameter \(h_n\). Comparing (26) to (23), we see that out of

to be considered in (23)+(24) we did consider

under the integral signs, whereas we did neglect contributions

under the integral signs, both in the martingales and in the quadratic variations. With these notations, the remainder terms (32) have the form

Recall that \((h_n)_n\) is a bounded sequence. By choice of the localization and by (19) we have

Transforming the convergence arguments in the proof of proposition 1 into tightness arguments, the random objects

remain tight under \(Q_\theta \) as \(n\rightarrow \infty \), for every t fixed. The deterministic sequence

vanishing as \(n\rightarrow \infty \),

vanishes under \(Q_\theta \) as \(n\rightarrow \infty \) by the Cauchy–Schwarz inequality.

has angle brackets which vanish as \(n\rightarrow \infty \) for every t fixed, so the martingales themselves vanish in \(Q_\theta \)-probability, uniformly over compact t-intervals as \(n\rightarrow \infty \). With \(0<t_0<\infty \) arbitrary, this proves

for the remainder terms (32). Since we did consider arbitrary bounded sequences \((h_n)_n\), we can reformulate the last assertion in the form

for arbitrary \(0<C<\infty \). We have thus proved part (a) of the theorem, which finishes the proof. \(\square \)

The local asymptotic minimax theorem arises as a consequence of theorem 1, see Ibragimov and Khasminskii (1981), Davies (1985), Le Cam and Yang (2002), Kutoyants (2004), or Höpfner (2014) theorem 7.12. Note that quite arbitrary \(\,\mathcal {G}_n\)-measurable random variables \(T_n\) taking values in \(\mathbb {R}^{(p+2)}\) are admitted here as possible estimators for the unknown parameter \(\theta \in \Theta \).

Corollary 1

For \(\theta \in \Theta \), for arbitrary estimator sequences \((T_n)_n\) whose rescaled estimation errors

at \(\theta \) are tight as \(n\rightarrow \infty \), for arbitrary loss functions \(L : \mathbb {R}^{(p+2)} \rightarrow [0,\infty )\) which are continuous, bounded and subconvex, the following local asymptotic minimax bound holds:

Estimator sequences whose rescaled estimation errors at \(\theta \) admit as \(n\rightarrow \infty \) a representation

have the property

for every \(0<C<\infty \) fixed, and thus attain the local asymptotic minimax bound at \(\theta \).

Remark 1

In theorem 1, the limit experiment \(\mathcal {E}(S,J)\) at \(\theta =(\vartheta ,\tau )\in \Theta \) depends on the component \(\tau \) (the constant \(c\,\) is not a parameter), by (28), but not on \(\vartheta =(\vartheta _0,\vartheta _1,\ldots ,\vartheta _p)\). The \(\tau \)-component of \(\mathcal {E}(S,J)\) is the well-known limit experiment when an ergodic Ornstein Uhlenbeck process (15) with backdriving force \(\tau \) is observed over a long time interval (Kutoyants 2004; Höpfner 2014 section 8.1).

3.2 Estimating \((\vartheta _0,\ldots ,\vartheta _p)\) in the model (14)+(19)

By abuse of notation, we write in this subsection \(\,Y\) for \(\pi \) on \((C,\mathcal {C})\) under \(Q_\theta \), \(\,X\) for \(\pi -R_\theta \) under \(Q_\theta \); as before \(\sqrt{c\,} W\) denotes the martingale part of Y or X under \(Q_\theta \) relative to \(\mathbb {G}\). To estimate \(\vartheta =(\vartheta _0,\ldots ,\vartheta _p) \in \mathbb {R}^p{\times }(0,\infty )\) in the model (14)+(19), consider

Least squares estimators (34) are uniquely determined—see (37) below—and have an explicit and easy-to-calculate form; we discuss their asymptotics under \(\theta =(\vartheta ,\tau )\). Define martingales \(\widetilde{S}_{n,\theta }\) with respect to \(Q_\theta \) and \((\mathcal {G}_{tn})_{t\ge 0}\)

which coincide with \(S_{n,\theta }\) of (27) whose last component has been suppressed. Let \(\widetilde{J}_{n,\theta }\) denote the angle bracket of \(\widetilde{S}_{n,\theta }\) under \(Q_\theta \). We consider also

which coincides with local scale \(\psi _n\) of (25) whose last row and last column have been suppressed, and invertible deterministic \((p{+}1){\times }(p{+}1)\) matrices as defined in (30) in the proof of proposition 1:

Proposition 2

For every \(\theta =(\vartheta ,\tau )\in \Theta \), rescaled estimation errors of the least squares estimator (34) admit a representation

as \(n\rightarrow \infty \).

Proof

(1) Almost surely as \(n\rightarrow \infty \), angle brackets \(\widetilde{J}_{n,\theta }\) of \(\widetilde{S}_{n,\theta }\) under \(Q_\theta \) converge to

for fixed \(0<t<\infty \). This has been proved in proposition 1.

(2) Least squares estimators \(\widetilde{\vartheta }(t)\) in (34) are uniquely defined and have the explicit form

to check this, take derivatives under the integral sign in (34), use (19) for \(\,i = 0,1,\ldots ,p\,\)

put integrals equal to zero and use the definition (30) of \(\widetilde{J}(t)\). On the other hand, (19) shows

Thus (14) allows us to write

The scaling property

applied to (39) then yields the representation

(3) Representations (12) in lemma 4 combined with the definition of \(\widetilde{S}_{n,\theta }\) in (35) show that under \(Q_\theta \) as \(n\rightarrow \infty \), the vector on the right hand side of (41) can be written as

Taking into account step (1) this allows to write representation (41) of rescaled estimation errors as

which concludes the proof. \(\square \)
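Assuming that (34) is the continuous-time least squares criterion \(\widetilde{\vartheta}(t)=\arg\min_{\vartheta'}\int_0^t (Y_s-\sum_j\vartheta'_j s^j)^2\,ds\), as the derivation in step (2) suggests, the normal equations read \(\widetilde{J}(t)\,\widetilde{\vartheta}(t)=(\int_0^t s^i Y_s\,ds)_{0\le i\le p}\). The sketch below solves a discretized version on a grid of mesh h in rescaled time \(u=s/n\), where the Gram matrix approximates \(\widetilde{J}(1)\) rather than the badly conditioned \(\widetilde{J}(n)\); mapping back by \(n^{-j}\) mirrors the local scale. Simulation parameters and helper names are hypothetical.

```python
import math
import random


def simulate_y(theta, tau, c, t_end, n_incr, rng):
    """Grid values of Y_t = sum_j theta[j] t**j + X_t, dX = -tau X dt + sqrt(c) dW, X_0 = 0."""
    h = t_end / n_incr
    phi = math.exp(-tau * h)
    sd = math.sqrt(c * (1.0 - phi * phi) / (2.0 * tau))   # exact one-step OU innovation sd
    x, ys = 0.0, []
    for k in range(n_incr + 1):
        t = k * h
        ys.append(sum(th * t ** j for j, th in enumerate(theta)) + x)
        x = phi * x + sd * rng.gauss(0.0, 1.0)
    return ys


def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system a x = b."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for k in range(n):
        piv = max(range(k, n), key=lambda r: abs(m[r][k]))
        m[k], m[piv] = m[piv], m[k]
        for r in range(k + 1, n):
            f = m[r][k] / m[k][k]
            for col in range(k, n + 1):
                m[r][col] -= f * m[k][col]
    x = [0.0] * n
    for k in reversed(range(n)):
        x[k] = (m[k][n] - sum(m[k][j] * x[j] for j in range(k + 1, n))) / m[k][k]
    return x


def least_squares_poly(ys, h, p):
    """Discretized least squares fit of sum_j theta_j s**j to Y on [0, n], n = h * (len(ys) - 1).

    Works in rescaled time u = s / n, where the Gram matrix approximates J~(1) with entries
    1/(i+j+1); the rescaled coefficients are mapped back via n**-j.
    """
    n = h * (len(ys) - 1)
    du = h / n
    us = [k * du for k in range(len(ys) - 1)]             # left endpoints of the grid cells
    gram = [[sum(u ** (i + j) for u in us) * du for j in range(p + 1)] for i in range(p + 1)]
    rhs = [sum(u ** i * y for u, y in zip(us, ys)) * du for i in range(p + 1)]
    return [cj / n ** j for j, cj in enumerate(solve(gram, rhs))]


if __name__ == "__main__":
    h, n = 0.02, 500.0
    ys = simulate_y([1.0, 0.5], tau=1.0, c=1.0, t_end=n, n_incr=int(round(n / h)), rng=random.Random(5))
    print(least_squares_poly(ys, h, 1))   # close to [1.0, 0.5]
```

For a noise-free polynomial the fit is exact up to rounding, because the same grid enters the Gram matrix and the right-hand side. With noise, the error in the slope shrinks like \(n^{-3/2}\) and the error in the intercept like \(n^{-1/2}\), in line with proposition 2.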

3.3 Estimating \(\tau \) in the model (14)+(19)

Also in this subsection, \(\,Y\) stands for \(\pi \) on \((C,\mathcal {C})\) under \(Q_\theta \), \(\,X\) for \(\pi -R_\theta \) under \(Q_\theta \), and \(\sqrt{c\,} W\) for the martingale part of Y or X under \(Q_\theta \) relative to \(\mathbb {G}\). To estimate \(\tau >0\) in the model (14)+(19) based on observation of Y up to time n, define

where \(\widetilde{\vartheta }(n)\) is the least squares estimator (34), and \(\widetilde{J}(n)\) is given by (30).

A motivation is as follows. With notations of Sect. 3.1 write the log-likelihood surface \(\,\theta ' \,\rightarrow \, \log L_n^{ \theta ' / \theta }\,\) under \(Q_\theta \) in the form

neglect contributions which do not depend on \(\theta '=(\vartheta ',\tau ')\); maximize in \(\tau '>0\) on \(\vartheta '\)-sections \(\,\Theta _{\vartheta '}{:}{=}\{ (\vartheta ',\tau '):\tau '>0\} \subset \Theta \,\) on which \(\vartheta '\) remains fixed; finally, insert the estimate \(\widetilde{\vartheta }(n)\) in place of \(\,\vartheta '\). Making use of (37), the resulting estimator for the parameter \(\tau >0\) is \(\widetilde{\tau }(n)\) as specified in (42).
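Carrying out the maximization step by hand (our own computation, not a copy of (42)): by (16) and (19), on a section \(\Theta_{\vartheta'}\) the drift of Y is \(R_{\vartheta'}'(s)-\tau'(Y_s-R_{\vartheta'}(s))\), so with \(X'=Y-R_{\vartheta'}\) the \(\tau'\)-derivative of the log-likelihood vanishes at \(\tau'=-\int_0^n X'_s\,dX'_s\big/\int_0^n (X'_s)^2\,ds\). The sketch plugs in a discretized least squares fit for \(\vartheta'\) and approximates the Itô integral by a left-point sum on a grid of mesh h; it uses this unsimplified form of the maximizer rather than the expression (42). Grid, parameters and helper names are illustrative assumptions.

```python
import math
import random


def simulate_y(theta, tau, c, t_end, n_incr, rng):
    """Grid values of Y_t = sum_j theta[j] t**j + X_t, dX = -tau X dt + sqrt(c) dW, X_0 = 0."""
    h = t_end / n_incr
    phi = math.exp(-tau * h)
    sd = math.sqrt(c * (1.0 - phi * phi) / (2.0 * tau))   # exact one-step OU innovation sd
    x, ys = 0.0, []
    for k in range(n_incr + 1):
        t = k * h
        ys.append(sum(th * t ** j for j, th in enumerate(theta)) + x)
        x = phi * x + sd * rng.gauss(0.0, 1.0)
    return ys


def fit_poly(ys, h, p):
    """Discretized least squares polynomial fit, solved in rescaled time u = s / n."""
    n = h * (len(ys) - 1)
    du = h / n
    us = [k * du for k in range(len(ys) - 1)]
    a = [[sum(u ** (i + j) for u in us) * du for j in range(p + 1)] for i in range(p + 1)]
    b = [sum(u ** i * y for u, y in zip(us, ys)) * du for i in range(p + 1)]
    for k in range(p + 1):                  # Gauss-Jordan; the Gram matrix is positive definite
        for r in range(p + 1):
            if r != k:
                f = a[r][k] / a[k][k]
                a[r] = [x - f * y for x, y in zip(a[r], a[k])]
                b[r] -= f * b[k]
    return [b[j] / a[j][j] / n ** j for j in range(p + 1)]


def tau_estimate(ys, h, theta_hat):
    """-int X' dX' / int X'^2 ds with X' = Y - fitted polynomial (left-point Itô sums)."""
    xs = [y - sum(th * (k * h) ** j for j, th in enumerate(theta_hat)) for k, y in enumerate(ys)]
    num = sum(x0 * (x1 - x0) for x0, x1 in zip(xs, xs[1:]))
    den = sum(x0 * x0 for x0 in xs[:-1]) * h
    return -num / den


if __name__ == "__main__":
    h = 0.02
    ys = simulate_y([1.0, 0.5], tau=1.5, c=1.0, t_end=500.0, n_incr=25_000, rng=random.Random(9))
    theta_hat = fit_poly(ys, h, 1)
    print(theta_hat, tau_estimate(ys, h, theta_hat))   # roughly [1.0, 0.5] and 1.5
```

The fluctuations of the estimate should be of the order \(\sqrt{2\tau/n}\), the classical asymptotic standard deviation of the maximum likelihood estimator for the backdriving force of an ergodic Ornstein–Uhlenbeck process (compare Remark 1).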

Proposition 3

As \(n\rightarrow \infty \), rescaled estimation errors of the estimator (42) admit an expansion

under \(\theta =(\vartheta ,\tau )\in \Theta \), with X solution to the Ornstein Uhlenbeck SDE (15) driven by W.

Proof

Combining (42) with (37) we have

(1) Consider the numerator

on the right hand side of (43). (37) and (38) allow to write

Adding and subtracting this expression, (44) takes the form

Exploiting first (19) and then (37) and (30) we can write

Adding and subtracting this expression in (45), we can thus write (44) as

which in virtue of (21) and (16) equals

Using again (14), we have reduced the numerator (44) on the right hand side of (43) to

As in lemma 4, joint laws

do not depend on n, whereas by proposition 2 rescaled estimation errors

converge in law as \(n\rightarrow \infty \), and thus are tight as \(n\rightarrow \infty \). Terms \(\int _0^n X_s\, dW_s\) in (46) are of stochastic order \(O_{Q_\theta }(\sqrt{n\,})\) as \(n\rightarrow \infty \), by proposition 1. As a consequence, our final representation (46) of the numerator (44) on the right hand side of (43) allows to write the rescaled estimation error as

(2) We consider the denominator

on the right hand side of (47)—i.e. on the right hand side of (43)—which we write as

whereas (39) shows

Thus we have reduced the denominator (48) to

The first summand in this expression is \(O_{Q_\theta }(n)\), by lemma 1, whereas the second summand

converges in law as \(n\rightarrow \infty \) under \(Q_\theta \), by (40) and proposition 2, and thus is tight as \(n\rightarrow \infty \). Taking all this together, the denominator (48) on the right hand side of (43) under \(Q_\theta \) satisfies

(3) The proof is finished: taking together (43), (47) and (49), we have

and thus

By lemma 1, \(\,\frac{1}{n} \int _0^n X_s^2\, ds\) converges \(Q_\theta \)-almost surely to \(\frac{c}{2\tau }\): so proposition 3 is proved. \(\square \)

3.4 Efficiency in the model (14)+(19)

We can put together the results of subsections 3.2 and 3.3 to prove that for every \(\theta \in \Theta \) as \(n\rightarrow \infty \),

is an asymptotically efficient estimator sequence in the sense of the local asymptotic minimax theorem.

Theorem 2

Observing Y in (14)+(19) over the time interval [0, n] as \(n\rightarrow \infty \), the sequence

defined by (34) and (42) is such that representation (33) of corollary 1 in Sect. 3.1 holds as \(n\rightarrow \infty \):

The estimator sequence \((\widetilde{\theta }(n))_n\) is thus efficient at \(\theta \) in the sense of the local asymptotic minimax theorem. This holds for all \(\theta =(\vartheta ,\tau )\in \Theta \).

Proof

Comparing the definitions of \(S_{n,\theta }\) in (27), J in (28) and \(\psi _n\) in (25) with the definitions of \(\widetilde{S}_{n,\theta }\) in (35), \(\widetilde{J}\) in (30) and \(\widetilde{\psi }_n\) in (36), we can merge the assertions of propositions 2 and 3

under \(Q_\theta \) as \(n\rightarrow \infty \) into one assertion

Together with proposition 1 (a) in Sect. 3.1, this shows that condition (33) of corollary 1 in Sect. 3.1 is satisfied. By corollary 1, this condition implies asymptotic efficiency of the estimator sequence \((\widetilde{\theta }(n))_n\) for the unknown parameter in the model (14)+(19) at \(\theta =(\vartheta ,\tau )\in \Theta \). \(\square \)
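For the special case \(Y_t=\vartheta t+X_t\) (linear polynomial without intercept, the case used in Sect. 4), a two-step procedure of this kind can be illustrated numerically. The estimators (34) and (42) are defined earlier in the paper and are not reproduced here; the sketch instead uses two simple stand-ins chosen for illustration: the least-squares slope \(\widehat{\vartheta}_n=\int_0^n sY_s\,ds\big/\int_0^n s^2\,ds\), followed by the ratio \(\widehat{\tau}_n=-\int_0^n \widehat{X}_s\,d\widehat{X}_s\big/\int_0^n \widehat{X}_s^2\,ds\) applied to the residual \(\widehat{X}_t=Y_t-\widehat{\vartheta}_n t\). X is assumed to solve \(dX_t=-\tau X_t\,dt+\sqrt{c\,}\,dW_t\), and all names are hypothetical.

```python
import math
import random


def simulate_linear_trend_plus_ou(theta, tau, c, horizon, dt, seed=0):
    """Sample Y_t = theta*t + X_t on the grid k*dt, with X an OU process started at 0."""
    rng = random.Random(seed)
    decay = math.exp(-tau * dt)
    step_sd = math.sqrt(c * (1.0 - decay * decay) / (2.0 * tau))
    x, y = 0.0, [0.0]
    for k in range(1, int(round(horizon / dt)) + 1):
        x = decay * x + step_sd * rng.gauss(0.0, 1.0)  # exact OU transition
        y.append(theta * k * dt + x)
    return y


def two_step_estimate(y, dt):
    """Illustrative stand-in: least-squares slope, then an OU drift ratio on residuals."""
    m = len(y) - 1  # number of grid steps
    # theta_hat = int s*Y_s ds / int s^2 ds, both as left Riemann sums on the same grid
    num = sum(k * y[k] for k in range(m))
    den = sum(k * k for k in range(m))
    theta_hat = num / (den * dt)
    # residuals estimate the OU path X_t = Y_t - theta*t
    x_hat = [y[k] - theta_hat * k * dt for k in range(m + 1)]
    # tau_hat = - int X dX / int X^2 ds, with Ito (left-point) sums
    ito_sum = sum(x_hat[k] * (x_hat[k + 1] - x_hat[k]) for k in range(m))
    energy = sum(x_hat[k] ** 2 for k in range(m)) * dt
    tau_hat = -ito_sum / energy
    return theta_hat, tau_hat
```

With \((\vartheta,\tau)=(2,1)\), \(c=1\) and \(n=1000\), the first component typically comes out accurate to roughly \(10^{-4}\) and the second to a few percent, which reflects the difference between the rates \(\sqrt{n^3\,}\) and \(\sqrt{n\,}\).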

4 Application: inference in stochastic Hodgkin–Huxley models

Hodgkin–Huxley models play an important role in neuroscience and are considered realistic models for the spiking behaviour of neurons (see Hodgkin and Huxley 1952; Izhikevich 2007; Ermentrout and Terman 2010). The classical deterministic model with constant rate of input is a 4-dimensional dynamical system with variables (V, n, m, h)

\[
\begin{aligned}
dV_t &= a\,dt - F(V_t,n_t,m_t,h_t)\,dt,\\
dj_t &= \big[\alpha_j(V_t)(1-j_t)-\beta_j(V_t)\,j_t\big]\,dt,\qquad j\in\{n,m,h\},
\end{aligned}
\tag{50}
\]

where \(a>0\) is a constant. The functions \((V,n,m,h)\rightarrow F(V,n,m,h)\) and \(V\rightarrow \alpha _j(V)\), \(V\rightarrow \beta _j(V)\), \(j\in \{n,m,h\}\), are those of Izhikevich (2007) pp. 37–38 (i.e. the same as in Höpfner et al. (2016a), section 2.1). V takes values in \(\mathbb {R}\) and models the membrane potential in the single neuron. The variables n, m, h are termed gating variables and take values in [0, 1]. Write \(E_4\,{:}{=}\,\mathbb {R}\times [0,1]^3\) for the state space.

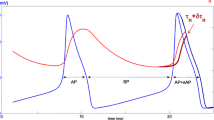

Depending on the value of the constant \(a>0\), the following behaviour of the deterministic dynamical system is known, see Ermentrout and Terman (2010) pp. 63–66. On some interval \((0,a_1)\) there is a stable equilibrium point for the system. There is a bistability interval \(\,\mathbb {I}_{\mathrm{bs}}=(a_1,a_2)\,\) on which a stable orbit coexists with a stable equilibrium point. There is an interval \((a_2,a_3)\) on which a stable orbit exists together with an unstable equilibrium point. At \(a=a_3\) orbits collapse into equilibrium; for \(a>a_3\) the equilibrium point is again stable. Here \(0<a_1<a_2<a_3<\infty \) are suitably determined endpoints of intervals (see the Notes at the end of the article). Equilibrium points and orbits depend on the value of a. Evolution of the system along an orbit yields a pronounced excursion of the membrane potential V, which we interpret as a spike.

In simulations, the equilibrium point appears to be globally attractive on \((0,a_1)\), and the orbit appears to be globally attractive on \((a_2,a_3)\); on the bistability interval \(\mathbb {I}_{\mathrm{bs}}=(a_1,a_2)\), the behaviour of the system depends on the choice of the starting value: simulated trajectories with randomly chosen starting points either spiral into the stable equilibrium or are attracted by the stable orbit.

We feed noise into the system. Prepare an Ornstein–Uhlenbeck process (15) with parameter \(\tau >0\)

\[
dX_t = -\tau X_t\,dt + \sqrt{c\,}\,dW_t
\tag{51}
\]

and replace input \(\,a\,dt\,\) in the deterministic system (50) above by increments (16)

\[
dY_t = \vartheta\,dt + dX_t
\tag{52}
\]

of the stochastic process Y in (14) which depends on the parameter \(\vartheta >0\):

\[
Y_t = \vartheta t + X_t
\tag{53}
\]

This yields a stochastic Hodgkin–Huxley model
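
\[
\begin{aligned}
dV_t &= dY_t - F(V_t,n_t,m_t,h_t)\,dt,\\
dj_t &= \big[\alpha_j(V_t)(1-j_t)-\beta_j(V_t)\,j_t\big]\,dt,\qquad j\in\{n,m,h\},
\end{aligned}
\tag{54}
\]
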
with parameters \(\vartheta >0\) and \(\tau >0\). By (54), the 5-dimensional stochastic system

is strongly Markov with state space \(E_5\,{:}{=}\,\mathbb {R}\times [0,1]^3\times \mathbb {R}\). Stochastic Hodgkin–Huxley models where stochastic input encodes a periodic signal have been considered in Höpfner et al. (2016a, b, 2017) and in Holbach (2019). A biological interpretation of the model (54) is as follows. The structure \(\,dY_t=\vartheta dt + dX_t\,\) of the input reflects the superposition of some global level \(\vartheta >0\) of excitation through the network with ‘noise’ in the single neuron. This noise arises from the accumulation and decay of a large number of small postsynaptic charges, due to the spikes (excitatory or inhibitory, and registered at synapses along the dendritic tree) in the spike trains which the neuron receives from a large number of other neurons in the network.

In simulations, the stochastic Hodgkin–Huxley model (54) which we consider in this section exhibits the following behaviour. For values of \(\vartheta \) in neighbourhoods of the bistability interval \(\mathbb {I}_{\mathrm{bs}}\) of (50), the system (54) alternates (possibly long) time periods of seemingly regular spiking with (possibly long) time periods of quiet behaviour. ‘Quiet’ means that the system performs small random oscillations in neighbourhoods of some typical point. For smaller values of \(\vartheta \), quiet behaviour prevails; for larger values of \(\vartheta \), we see almost regular spiking.

The aim of the present section is estimation of an unknown parameter

\[
\theta=(\vartheta,\tau)\in\Theta\,{:}{=}\,(0,\infty)^2
\]

in the system (54) with stochastic input (52), based on observation of the membrane potential V over a long time interval. For this, our standing assumption will be:

Assuming (55), we first recover, for the internal variables \(j\in \{n,m,h\}\), the state \(\,j_t\,\) at time t from the trajectory of V up to time t

and then, by virtue of the first equation in (54), the state \(\,Y_t\,\) at time t of the process (53) of accumulated dendritic input from the trajectory of V up to time t:

here and below we use the breve accent, as in \(\breve{n}\), to distinguish reconstructed variables. Thus \((V, \breve{n}, \breve{m}, \breve{h}, \breve{Y})\) reconstructs the trajectory of the 5-dimensional stochastic system \(\mathbb {X}\) from observation of the membrane potential V, given a starting point satisfying assumption (55). The motivation comes from biology. For single neurons in active networks, the membrane potential can be measured intracellularly with very good time resolution, whereas the gating variables \(j\in \{n,m,h\}\) in the stochastic system (54) represent averages over large numbers of certain ion channels and are not accessible to measurement.
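The reconstruction step can be sketched on a time grid. The code below assumes the classical constants and rate functions in the shifted-voltage convention (rest near 0 mV) as commonly quoted from Izhikevich (2007); they should be checked against pp. 37–38 before use. It further assumes an Euler discretization of (54) and a known starting point including \(Y_0\); all function names are hypothetical. It simulates the system, keeps only V and the starting point, and then recovers \(\breve{Y}\) by running the gating equations driven by the observed V and using \(dY_t=dV_t+F(V_t,n_t,m_t,h_t)\,dt\).

```python
import math
import random

# Assumed Hodgkin-Huxley constants, shifted-voltage convention (rest near 0 mV),
# as commonly quoted from Izhikevich (2007), pp. 37-38; verify against the source.
G_K, G_NA, G_L = 36.0, 120.0, 0.3
E_K, E_NA, E_L = -12.0, 120.0, 10.6


def _u_over_expm1(u):
    """u / (exp(u) - 1), extended continuously by 1 at u = 0."""
    return 1.0 if abs(u) < 1e-12 else u / math.expm1(u)


def rates(v):
    """Rates (alpha_j(v), beta_j(v)) for the gating variables j in n, m, h."""
    return {
        "n": (0.1 * _u_over_expm1((10.0 - v) / 10.0), 0.125 * math.exp(-v / 80.0)),
        "m": (_u_over_expm1((25.0 - v) / 10.0), 4.0 * math.exp(-v / 18.0)),
        "h": (0.07 * math.exp(-v / 20.0), 1.0 / (math.exp((30.0 - v) / 10.0) + 1.0)),
    }


def ionic_current(v, g):
    """F(V, n, m, h): potassium, sodium and leak currents."""
    return (G_K * g["n"] ** 4 * (v - E_K)
            + G_NA * g["m"] ** 3 * g["h"] * (v - E_NA)
            + G_L * (v - E_L))


def gating_step(v, g, dt):
    """One Euler step of dj = [alpha_j(V)(1 - j) - beta_j(V) j] dt; it needs only V."""
    r = rates(v)
    return {j: g[j] + (r[j][0] * (1.0 - g[j]) - r[j][1] * g[j]) * dt for j in g}


def simulate(theta, tau, c, horizon, dt, seed=0):
    """Euler scheme for (54): dV = dY - F dt, with dY = theta dt + dX and X an OU process."""
    rng = random.Random(seed)
    decay = math.exp(-tau * dt)
    step_sd = math.sqrt(c * (1.0 - decay * decay) / (2.0 * tau))
    v, x, y = 0.0, 0.0, 0.0
    g = {j: a / (a + b) for j, (a, b) in rates(v).items()}  # steady-state gates at V = 0
    start = (v, dict(g), y)
    vs, ys = [v], [y]
    for _ in range(int(round(horizon / dt))):
        x_new = decay * x + step_sd * rng.gauss(0.0, 1.0)
        dy = theta * dt + (x_new - x)
        # right-hand side uses the old (v, g) for both updates
        v, g = v + dy - ionic_current(v, g) * dt, gating_step(v, g, dt)
        x, y = x_new, y + dy
        vs.append(v)
        ys.append(y)
    return start, vs, ys


def reconstruct_input(start, vs, dt):
    """Recover the gates and the input Y from sampled V and the known starting point."""
    _, g, y = start
    g = dict(g)
    ys = [y]
    for k in range(len(vs) - 1):
        # first equation of (54), solved for the input increment
        y += (vs[k + 1] - vs[k]) + ionic_current(vs[k], g) * dt
        g = gating_step(vs[k], g, dt)
        ys.append(y)
    return ys
```

Because the reconstruction applies the same Euler recursion as the simulation, the recovered input agrees with the simulated one up to rounding; with real recordings, the discretization error of the gating equations would enter as well.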


Under assumption (55), the problem of estimating the unknown parameter \(\theta =(\vartheta ,\tau )\in \Theta \) in the stochastic Hodgkin–Huxley system (54) based on observation of the membrane potential can be formulated as follows. Consider the canonical path space \((C,\mathcal {C})\), \(C\,{:}{=}\,C([0,\infty ),\mathbb {R}^5)\), equipped with the canonical process \(\pi =(\pi _t)_{t\ge 0}\) and the canonical filtration \(\mathbb {G}=(\mathcal {G}_t)_{t\ge 0}\), and also the smaller filtration

generated by observation of the first component \(\pi ^{(1)}\) of the canonical process \(\pi \) knowing the starting point \(\pi _0\) of \(\pi \). For \(\theta \in \Theta \), let \(Q_\theta \) denote the law of the process \(\mathbb {X}\) under \(\theta =(\vartheta ,\tau )\) on \((C,\mathcal {C})\), with starting point (55) not depending on \(\theta \). On \((C,\mathcal {C})\) we write for short

for the reconstruction \(\breve{\pi }^{(5)}\) of the fifth component \(\pi ^{(5)}\) of \(\pi \) (which under \(Q_\theta \) represents accumulated dendritic input \(Y_t=\vartheta t + X_t\), \(t\ge 0\)) from the first component \(\pi ^{(1)}\) (which under \(Q_\theta \) represents the membrane potential V) and the starting point \(\pi _0\); along the lines of (57) we have

By definition of \(\mathbb {G}^{(1)}\), the observed process \(\,\pi ^{(1)}\) and the reconstructed processes \(\,\zeta \,,\, \breve{\pi }^{(j)}\), \(j\in \{2,3,4\}\), are \(\mathbb {G}^{(1)}\)-semimartingales. Write \(\sqrt{c\,}\, W\) for the \(\mathbb {G}^{(1)}\)-martingale part of \(\zeta \) or of \(\pi ^{(1)}\) under \(Q_\theta \). The likelihood ratio process of \(Q_{\theta '}\) with respect to \(Q_\theta \) relative to \(\mathbb {G}^{(1)}\) is obtained in analogy to (23)+(24), special case \(R_\vartheta (s)=\vartheta s\). Then the following is proposition 3.2 in Holbach (2020):

Proposition 4

(Holbach 2020) For pairs \(\,\theta '=(\vartheta ',\tau ')\), \(\theta =(\vartheta ,\tau )\) in \(\Theta =(0,\infty )^2\), writing

likelihood ratios in the statistical model

are given by

where \(\langle M^{ \theta ' / \theta }\rangle \) denotes the angle bracket of the martingale \(M^{ \theta ' / \theta }\) under \(Q_\theta \) relative to \(\mathbb {G}^{(1)}\).
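For intuition, a likelihood ratio of this type can be evaluated on a grid. The sketch assumes, from (51)+(53), that under \(Q_\theta\) the input satisfies \(d\zeta_t=b_\theta(t)\,dt+\sqrt{c\,}\,dW_t\) with \(b_\theta(t)=\vartheta-\tau(\zeta_t-\vartheta t)\), so that the Girsanov form \(M^{\theta'/\theta}_t-\frac12\langle M^{\theta'/\theta}\rangle_t\) with \(M^{\theta'/\theta}_t=\int_0^t \frac{1}{c}\big(b_{\theta'}-b_\theta\big)(s)\,\big(d\zeta_s-b_\theta(s)\,ds\big)\) gives the log-likelihood ratio. This is a reading of the structure described above, not a copy of the display (60). For simplicity, the path \(\zeta\) is simulated directly rather than reconstructed from V; all names are hypothetical.

```python
import math
import random


def simulate_zeta(theta, tau, c, horizon, dt, seed=0):
    """Sample zeta_t = theta*t + X_t on the grid k*dt, with X an OU process started at 0."""
    rng = random.Random(seed)
    decay = math.exp(-tau * dt)
    step_sd = math.sqrt(c * (1.0 - decay * decay) / (2.0 * tau))
    x, zeta = 0.0, [0.0]
    for k in range(1, int(round(horizon / dt)) + 1):
        x = decay * x + step_sd * rng.gauss(0.0, 1.0)
        zeta.append(theta * k * dt + x)
    return zeta


def drift(th, ta, z, t):
    """Assumed drift b_theta(t) = theta - tau * (zeta_t - theta * t)."""
    return th - ta * (z - th * t)


def log_likelihood_ratio(zeta, dt, c, new, ref):
    """Discretized log dQ_new/dQ_ref on the sampled window as M - <M>/2 (left-point sums)."""
    mart, bracket = 0.0, 0.0
    for k in range(len(zeta) - 1):
        t = k * dt
        diff = drift(*new, zeta[k], t) - drift(*ref, zeta[k], t)
        residual = zeta[k + 1] - zeta[k] - drift(*ref, zeta[k], t) * dt
        mart += diff / c * residual
        bracket += diff * diff / c * dt
    return mart - 0.5 * bracket
```

Data generated under \((\vartheta,\tau)=(2,1)\) should give a large negative value for `log_likelihood_ratio(zeta, dt, c, (2.5, 1.0), (2.0, 1.0))`; the angle bracket grows like \(n^3\) in the \(\vartheta\)-direction, which already hints at the rate \(\sqrt{n^3\,}\).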

Note that under \(Q_\theta \), the \(\mathbb {G}^{(1)}\)-adapted process \(\,(\zeta _t-\vartheta t)_{t\ge 0}\) in the integrand of \(M^{ \theta ' / \theta }\) represents the Ornstein–Uhlenbeck process X of equation (51); the constant \(\,c\,\) is known from the quadratic variation of \(\,\zeta \,\).
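That c is determined by the path can be seen from realized quadratic variation: on a fixed window \([0,t]\), the sum of squared increments of a finely sampled \(\zeta\), divided by t, approaches c as the mesh tends to zero. A minimal sketch, again assuming \(\zeta_t=\vartheta t+X_t\) with \(dX_t=-\tau X_t\,dt+\sqrt{c\,}\,dW_t\); names and parameter values are illustrative.

```python
import math
import random


def simulate_zeta(theta, tau, c, horizon, dt, seed=0):
    """Sample zeta_t = theta*t + X_t on the grid k*dt, with X an OU process started at 0."""
    rng = random.Random(seed)
    decay = math.exp(-tau * dt)
    step_sd = math.sqrt(c * (1.0 - decay * decay) / (2.0 * tau))
    x, zeta = 0.0, [0.0]
    for k in range(1, int(round(horizon / dt)) + 1):
        x = decay * x + step_sd * rng.gauss(0.0, 1.0)
        zeta.append(theta * k * dt + x)
    return zeta


def realized_c(path, dt):
    """Sum of squared increments divided by the length of the observation window."""
    window = (len(path) - 1) * dt
    return sum((b - a) ** 2 for a, b in zip(path, path[1:])) / window
```

Refining the mesh, not lengthening the window, is what drives the estimate to c; the drift contributes only a bias of order \(\vartheta^2\,dt\).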

The likelihoods (60) are fully understood: they are the likelihoods of the model considered in Sect. 3, case \(p\,{:}{=}\, 1\), restricted to the submodel where \(\vartheta _0\equiv 0\) is fixed. As a consequence, in the statistical model associated to the stochastic Hodgkin–Huxley model, we have LAN at \(\theta \), with local scale \(\frac{1}{\sqrt{n^3\,}}\) for the \(\vartheta \)-component and \(\frac{1}{\sqrt{n\,}}\) for the \(\tau \)-component, \(\theta =(\vartheta ,\tau )\in \Theta \). The local asymptotic minimax theorem characterizes efficient estimators, and asymptotically efficient estimators were constructed in Sect. 3. Since \(\,\zeta \,\) is a \(\mathbb {G}^{(1)}\)-semimartingale, common \(\mathbb {G}^{(1)}\)-adapted determinations for \(\theta \in \Theta \) of the stochastic integrals \(\,\int s\, d\zeta _s\,\) and \(\,\int \zeta _s\, d\zeta _s\,\) exist. According to (34), (37) and (42), we estimate the first component of the unknown parameter \(\theta =(\vartheta ,\tau )\) in \(\Theta =(0,\infty )^2\) in the system (54) by

and then the second component by

The estimators \(\breve{\vartheta }(t)\), \(\breve{\tau }(t)\) are \(\mathbb {G}^{(1)}_t\)-measurable, \(t\ge 0\). The structure of the likelihoods (60) is the structure of the likelihoods in Sect. 3 with \(p\,{:}{=}\,1\), submodel \(\vartheta _0\equiv 0\). The structure of the pair \((\breve{\vartheta }(t),\breve{\tau }(t))\) in (61)+(62) is the structure of the estimators \((\widetilde{\vartheta }(t),\widetilde{\tau }(t))\) in Sect. 3 with \(p\,{:}{=}\,1\), submodel \(\vartheta _0\equiv 0\). Under \(Q_\theta \), we have from (27) and (28) and theorem 2 in Sect. 3.4 a representation

of rescaled estimation errors as \(n\rightarrow \infty \), and proposition 1 in Sect. 3.1 shows convergence in law

under \(Q_\theta \) as \(n\rightarrow \infty \), with some two-dimensional standard Brownian motion \(\widetilde{W}\). Consider on \(\,( C, \mathcal {C}, (\mathcal {G}^{(1)}_{tn})_{t\ge 0}, \{Q_\theta :\theta \in \Theta \})\,\) martingales

under \(Q_\theta \), and let \(\breve{J}_{n,\theta }\) denote their angle brackets under \(Q_\theta \). Define local scale

and limit information

With these notations, theorem 1 in Sect. 3.1 and theorem 2 in Sect. 3.4 yield:

Theorem 3

In the sequence of statistical models

the following holds at every point \(\theta =(\vartheta ,\tau )\) in \(\Theta =(0,\infty )^2\):

(a) we have LAN at \(\theta \) with local scale \((\breve{\psi }_n)_n\) and local parameter \(h\in \mathbb {R}^2\):

(b) by (63), rescaled estimation errors of \(\,\breve{\theta }(n)\,{:}{=}\,(\breve{\vartheta }(n),\breve{\tau }(n))\,\) admit the expansion

When we observe, for some given starting point of the system, the membrane potential in a stochastic Hodgkin–Huxley model (54) up to time n, with accumulated stochastic input defined by (53) together with (51) which depends on an unknown parameter \(\theta =(\vartheta ,\tau )\) in \(\Theta =(0,\infty )^2\), the following summarizes, in somewhat loose language, the assertion of the local asymptotic minimax theorem, corollary 1 in Sect. 3.1:

Corollary 2

For loss functions \(L:\mathbb {R}^2\rightarrow [0,\infty )\) which are continuous, subconvex and bounded, for \(0<C<\infty \) arbitrary, maximal risk over shrinking neighbourhoods of \(\theta \)

converges as \(n\rightarrow \infty \) to

Within the class of \((\mathcal {G}^{(1)}_n)_n\)-adapted estimator sequences \((T_n)_n\) whose rescaled estimation errors at \(\theta =(\vartheta ,\tau )\) are tight—at rate \(\sqrt{n^3\,}\) for the \(\vartheta \)-component, and at rate \(\sqrt{n\,}\) for the \(\tau \)-component—it is impossible to outperform the sequence \((\breve{\vartheta }(n),\breve{\tau }(n))\) defined by (61)+(62), asymptotically as \(n\rightarrow \infty \).
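A heuristic computation indicates where the two rates come from. Assume, as above, that under \(Q_\theta\) the input has drift \(b_\theta(t)=\vartheta-\tau(\zeta_t-\vartheta t)\) and martingale part \(\sqrt{c\,}\,W\). Differentiating the drift along the observed path, the \(\vartheta\)-score carries the deterministic weight \(1+\tau t\), which grows linearly in time, whereas the \(\tau\)-score carries the stationary process X; the normalized observed informations then behave as follows.

\[
\partial_\vartheta b_\theta(t)=1+\tau t,\qquad
\partial_\tau b_\theta(t)=-(\zeta_t-\vartheta t)=-X_t,
\]
\[
\frac{1}{n^3}\,\frac{1}{c}\int_0^n(1+\tau t)^2\,dt\;\longrightarrow\;\frac{\tau^2}{3c},\qquad
\frac{1}{n}\,\frac{1}{c}\int_0^n X_t^2\,dt\;\longrightarrow\;\frac{1}{2\tau}\quad Q_\theta\text{-almost surely},
\]
\[
\frac{1}{n^2}\,\frac{1}{c}\int_0^n(1+\tau t)\,X_t\,dt\;\longrightarrow\;0\quad\text{in } Q_\theta\text{-probability}.
\]

The normalizations \(n^3\) and \(n\) correspond to the local scales \(\frac{1}{\sqrt{n^3\,}}\) and \(\frac{1}{\sqrt{n\,}}\), and the vanishing cross term suggests a diagonal limit information with entries \(\frac{\tau^2}{3c}\) and \(\frac{1}{2\tau}\). This heuristic is meant as orientation only and does not replace the limit information defined in this section.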

Note that we are free to measure risk through any loss function which is continuous, subconvex and bounded.

Notes

Note that the constants of Ermentrout and Terman (2010) are different from the constants of Izhikevich (2007) which we use for the Hodgkin–Huxley model (50). With constants from Izhikevich (2007), simulations localize \(a_1=\inf \mathbb {I}_{\mathrm{bs}}\) between 5.24 and 5.25, and \(a_2=\sup \mathbb {I}_{\mathrm{bs}}\) close to 8.4; the value of \(a_3\) is \(\approx 163.5\) and thus far beyond any ‘biologically relevant’ value for the parameter a. Numerical calculations and simulations related to the bistability interval have been carried out in Hummel (2019).

References

Bingham N, Goldie C, Teugels J (1987) Regular variation. Cambridge University Press, Cambridge

Davies R (1985) Asymptotic inference when the amount of information is random. In: Le Cam L, Olshen R (eds) Proceedings of the Berkeley symposium in honor of J. Neyman and J. Kiefer, vol 2. Wadsworth, Belmont

Dehling H, Franke B, Kott T (2010) Drift estimation for a periodic mean reversion process. Stat Inference Stoch Process 13:175–192

Dehling H, Franke B, Woerner J (2017) Estimating drift parameters in a fractional Ornstein Uhlenbeck process with periodic mean. Stat Inference Stoch Process 20:1–14

Ermentrout G, Terman D (2010) Mathematical foundations of neuroscience. Springer, Berlin

Franke B, Kott T (2013) Parameter estimation for the drift of a time-inhomogeneous jump diffusion process. Stat Neerl 76:145–168

Hájek J (1970) A characterization of limiting distributions for regular estimators. Z Wahrscheinlichkeitsth Verw Geb 14:323–330

Hodgkin A, Huxley A (1952) A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol 117:500–544

Holbach S (2020) Positive Harris recurrence for degenerate diffusions with internal variables and randomly perturbed time-periodic input. Stoch Process Appl, in press. https://doi.org/10.1016/j.spa.2020.07.005. arXiv:1907.13585

Holbach S (2019) Local asymptotic normality for shape and periodicity of a signal in the drift of a degenerate diffusion with internal variables. Electron J Stat 13:4884–4915

Höpfner R (2014) Asymptotic statistics. DeGruyter, Berlin

Höpfner R, Kutoyants Yu (2009) On LAN for parametrized continuous periodic signals in a time inhomogeneous diffusion. Stat Decis 27:309–326

Höpfner R, Kutoyants Yu (2011) Estimating a periodicity parameter in the drift of a time inhomogeneous diffusion. Math Methods Stat 20:58–74

Höpfner R, Löcherbach E, Thieullen M (2016a) Ergodicity for a stochastic Hodgkin–Huxley model driven by Ornstein–Uhlenbeck type input. Ann Inst Henri Poincaré Probab Stat 52(1):483–501

Höpfner R, Löcherbach E, Thieullen M (2016b) Ergodicity and limit theorems for degenerate diffusions with time periodic drift. Application to a stochastic Hodgkin–Huxley model. ESAIM PS 20:527–554

Höpfner R, Löcherbach E, Thieullen M (2017) Strongly degenerate time inhomogeneous SDEs: densities and support properties. Application to Hodgkin–Huxley type systems. Bernoulli 23(4A):2587–2616

Hummel C (2019) Netzwerke von Hodgkin-Huxley Neuronen. Institut für Mathematik, Universität Mainz, Masterarbeit

Ibragimov I, Khasminskii R (1981) Statistical estimation. Springer, New York

Ikeda N, Watanabe S (1989) Stochastic differential equations and diffusion processes, 2nd edn. North-Holland / Kodansha, Tokyo

Izhikevich E (2007) Dynamical systems in neuroscience: the geometry of excitation and bursting. MIT press, Cambridge

Jacod J, Shiryaev A (1987) Limit theorems for stochastic processes. Springer, Berlin

Kutoyants Yu (2004) Statistical inference for ergodic diffusion processes. Springer, London

Le Cam L (1969) Théorie asymptotique de la décision statistique. Les Presses de l’Université de Montréal, Montréal

Le Cam L, Yang G (2002) Asymptotics in statistics, 2nd edn. Springer, Berlin

Liptser R, Shiryaev A (2001) Statistics of random processes, vol I+II, 2nd edn. Springer, Berlin

Pchelintsev E (2013) Improved estimation in a non-Gaussian parametric regression. Stat Inference Stoch Process 16:15–28

Pfanzagl J (1994) Parametric statistical theory. deGruyter, Berlin

Funding

Open Access funding provided by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Höpfner, R. Polynomials under Ornstein–Uhlenbeck noise and an application to inference in stochastic Hodgkin–Huxley systems. Stat Inference Stoch Process 24, 35–59 (2021). https://doi.org/10.1007/s11203-020-09226-0

DOI: https://doi.org/10.1007/s11203-020-09226-0

Keywords

- Diffusion models

- Local asymptotic normality

- Asymptotically efficient estimators

- Degenerate diffusions

- Stochastic Hodgkin–Huxley model