Abstract

Over the last decades, CSCW research has undergone significant structural changes and has grown steadily, with manifest differences from other fields in terms of theory building, methodology, and socio-technicality. This paper provides a quantitative assessment of the scientific literature for mapping the intellectual structure of CSCW research and its scientific development over a 15-year period (2001–2015). A total of 1713 publications were examined in order to draw statistics and depict dynamic changes, shedding new light on the growth, spread, and collaboration of CSCW-devoted outlets. Overall, our study characterizes top (cited and downloaded) papers, citation patterns, prominent authors and institutions, demographics, collaboration patterns, most frequent topic clusters and keywords, and social mentions by country, discipline, and professional status. The results highlight some areas of improvement for the field and many well-established topics that are changing gradually, with an impact on citations and downloads. Statistical models reveal that the field is predominantly influenced by fundamental and highly recognized scientists and papers. A small number of papers without citations, the growth of the number of papers by year, and an average of more than 39 citations per paper in all venues indicate that the field is healthy and evolving. We discuss the implications of these findings in terms of the influence of CSCW on the larger field of HCI.

Introduction

CSCW research has grown steadily for much of the past quarter century, disseminated by devoted and non-specific publication venues from North America and Europe (Grudin and Poltrock 2012). As an established, practice-based field of research committed to understanding the socially organized nature of cooperation and its characteristics in order to inform the design of computer artifacts in cooperative settings, Computer Supported Cooperative Work (CSCW) has adopted a variety of theoretical constructs, descriptive methods, conceptual frameworks, and hybrid forms to characterize technology-driven waves that shape digitally mediated communication and collaboration in increasingly complex, networked settings (Schmidt and Bannon 2013). As a result of these advances, socially embedded systems and technologies have pervaded our everyday life settings as driving forces for contemporary research (Crabtree et al. 2005). With this in mind, we tried to contribute to Schmidt and Bannon’s (2013) intent of assessing the “complex physiognomy” of this field of research, acknowledging that measuring individual and collaboration outcomes within an interdisciplinary community like CSCW can be a complex task due to its polymorphic nature and the inherent difficulty to “judge the quality of its members’ contributions” (Bartneck and Hu 2010). There is a clear contrast between CSCW and other communities and disciplines that promote up-to-date introspections and history-aware research agendas. For instance, “CSCW researchers remain unreflective about the structure and impact of their own collaborations” (Horn et al. 2004). More recent evidence (Keegan et al. 2013) suggests that they “have been relatively quiet, with only a few attempts to understand the structure of the CSCW community”, which strongly affects the course of innovation and theory building in this field.

Some researchers have emphasized the ability of quantitative analysis to understand the nature of a field and its evolution over time. Kaye (2009) goes even further by claiming that “it seems reasonable that a certain amount of introspection is healthy for a field”. Scientometrics methods and tools “can be used to study sociological phenomena associated with scientific communities, to evaluate the impact of research, to map scientific networks and to monitor the evolution of scientific fields” (Jacovi et al. 2006). This means that this kind of analysis helps not only researchers to understand how research communities are evolving throughout the world by reflecting on the publication data of specific conferences and journals (Gupta 2015; Barbosa et al. 2016; Mubin et al. 2017) but also faculty members, students, the general public, administrators, conference organizers, journal editors, and publishers interested in their advances and trends as a basis for knowledge acquisition, consensus building, and decision making in a wide range of situations such as hiring staff or targeting venues and publications to examine (Holsapple and Luo 2003; Henry et al. 2007). Notwithstanding some efforts using statistical methods to measure data from the literature, none of the earlier known studies provided a cross-sectional, bibliometric analysis to portray the field of CSCW and its evolution in the twenty-first century through widely accessible data sources. There is a need to reflect continuously and systematically upon the contributions published annually by CSCW authors, shaping the future by discovering new gaps while quantifying the underlying intellectual structure of CSCW research. Prior quantitative examinations are taken as the starting point for this study, which focuses on CSCW-devoted venues in a formal data-driven approach.

The present paper aims to reflect on the authorship and impact of the published papers in the field of CSCW, studying its main trends with the ultimate goal of providing future insights and recommendations to a research community fully committed to understanding cooperative work practices and designing digital and other material artifacts in cooperative settings. We tried to examine more deeply the authorship profile and how it has changed over time, the prominent institutions, and the research topics over time (Pritchard 1969; Glänzel 2009). Conceived as integral units of the “science of measuring” (Price 1963) for analyzing scientific and technological literature, bibliometric indicators are used as formative instruments to characterize structural gaps and trends of CSCW research. In addition, we expanded our research to identify the geographic origin of research by detecting the country of each author’s institutional affiliation. In the course of these pursuits, the present study also sheds new light on the altmetrics literature by providing an overview of the coverage of alternative metrics in CSCW research. We are particularly interested in gaining insight into the following Research Questions (RQ):

1. Was there an increase in the amount of literature in CSCW research? Is there a pattern to how citations fluctuate over years?
2. What are the differences in the country, affiliation, and individual research output? What does that tell us about the field profile?
3. How has the scientific collaboration among CSCW researchers evolved? Do collaboration types influence the overall impact of publications?
4. What research topics were of concern to the authors in the field of CSCW? How are they related with respect to their history and future progress?
5. What is the impact of CSCW publications on social media? How much and what kind of altmetrics data exist for the papers?
6. How can our observations on the structural changes of CSCW research be related to the larger field of Human–Computer Interaction (HCI)?

All these questions are suited to taking a first step in portraying the CSCW literature. The remainder of the paper is organized as follows. The “Background” section introduces the field of Scientometrics, providing an overview of the research dedicated to the bibliometric study of CSCW and contiguous disciplines. A conceptual framework comprising core and peripheral concepts in the field of CSCW is also presented. The “Method” section summarizes our data selection, collection, and preparation processes. The “Findings” section describes the main findings obtained with the scientometric analysis. Thereafter, we discuss what they could mean looking ahead for the CSCW community by performing a scientometric comparison with HCI-related outlets. The paper concludes with some reflections on the issues raised and a list of possible shortcomings of our analysis.

Background

Scientometrics was introduced by Nalimov and Mulchenko (1969) to characterize the study of science in terms of structure, growth, inter-relationships, and productivity. Historians, philosophers of science, and social scientists such as Derek J. de Solla Price published a vast set of research materials in the 1960s and 1970s on the foundations of the field of quantitative science studies (Mingers and Leydesdorff 2015), resulting in a “full-fledged research program” (Price 1976). As an integral part of the sociology of scientific knowledge, Scientometrics has a notable impact on the development of scientific policies (Hess 1997). Some of its main interests are the development and application of techniques, methods, tools, and quantitative indicators intended to analyze the interaction between scientific and technological domains, the improvement processes related to social factors, the study of cognitive and socio-organizational structures in scientific disciplines, and the design of information systems for knowledge acquisition, analysis and dissemination (van Raan 1997). A recent review of the literature (Mingers and Leydesdorff 2015) covered the disciplinary foundations and historical milestones of this problem-oriented field both conceptually and empirically.

A somewhat similar body of work appeared under the banner of bibliometrics as a descriptive and evaluative science focused on the mathematical and statistical examination of patterns in documentation and information (Pritchard 1969). Hertzel (1987) was among the first to trace the development of bibliometrics from its origins in statistics and bibliography. The term often overlaps with concepts such as cybermetrics and webometrics, defined as the quantitative analysis of scholarly and scientific communication in the Internet ecosystem (Diodato 1994). At first, bibliometric techniques and tools were based on mathematical patterns intended to measure trends and citation impact from the literature (Tague-Sutcliffe 1992). Another set of recent contributions concerns the study of the altmetrics literature for measuring a different kind of research impact, “acting as a complement rather than a substitute to traditional metrics” (Erdt et al. 2016). The paradigm of ‘scientometrics 2.0’ (Priem and Hemminger 2010) emerged as a way of using non-traditional metrics for evaluating scholars, recommending publications, and studying science to monitor the growth in the size of scientific literature using Web 2.0 tools. Altmetric data sources include but are not limited to social bookmarking, social news and recommendations, blogs, digital libraries, social networks, and microblogs. The number of papers with available alternative metrics has been growing for most disciplines in the last 5 years (Erdt et al. 2016). Such Web-based metrics enable the assessment of public engagement and general impact of scholarly materials (Piwowar 2013) through different perspectives and levels of analysis (e.g., views, tweets, and shares).

CSCW research revisited: a socio-technical perspective

The origins of the term CSCW can be traced to a workshop organized by Irene Greif and Paul Cashman in 1984 intended to better understand how computer systems could be used to improve and enhance group outcomes, taking into account the highly nuanced, flexible, and contextualized nature of human activity in cooperative work settings (Greif 1988; Ackerman 2000; Schmidt and Bannon 2013). The enthusiasm for the topic continued with the first open conference under the CSCW label, convened in Austin, Texas in 1986 (Krasner and Greif 1986). The GROUP conference (formerly Conference on Office Information Systems) was held 2 years later in Palo Alto, California. The first European CSCW conference was held in London in 1989, while the CSCW Journal began to appear in 1992 (Schmidt and Bannon 2013).

Several authors attempted to define CSCW but there seems to be no general definition (Suchman 1989; Schmidt and Bannon 1992). In broad terms, CSCW can be defined as “an endeavor to understand the nature and characteristics of cooperative work with the objective of designing adequate computer-based technologies” (Bannon and Schmidt 1989). Kuutti (1991) went even further by claiming that CSCW involves “work by multiple active subjects sharing a common object and supported by information technology”. Such ways of viewing CSCW encompass an explicit concern for the socially organized practices related to the use of computer support systems (Bannon 1993). Interested readers can investigate other sources for accounts of the field (e.g., Hughes et al. 1991; Bannon 1992; Schmidt and Bannon 1992; Grudin 1994; Schmidt 2011).

CSCW researchers have developed distinct conceptualizations of key aspects of work and life practices, based upon empirical and design-oriented work in real-world settings (Schmidt and Bannon 2013). A large body of crucial discoveries brought through ethnographic studies of cooperative work has contributed to the development of concepts such as situated action, awareness, flexible workflows, invisible work, articulation work, expertise sharing, common information spaces, and material resources for action (Blomberg and Karasti 2013). CSCW research has over time created and refined classification systems and taxonomies covering mutually exclusive, exhaustive, and logically interrelated categories as crucial processes in theory development (Johnson 2008). Figure 1 summarizes a set of socio-technical requirements for collaboration. This conceptual model intends to categorize the conceptual structure of cooperative work and social computing.

Figure 1. Socio-technical classification model of CSCW. Adapted from Cruz et al. (2012)

The 3C Model, originally proposed by Ellis et al. (1991), can be systematized into an interactive cycle through the well-known modes of collaboration. Communication can be defined as an interaction process between people (McGrath 1984) with explicit or implicit information exchange, in a private or public channel. The users’ messages can be identified or anonymous, and conversation may occur with no support, structured or intellectual process support, with associated protocols. As a requirement, collaborative computing needs to be able to support the conversation between two or more individuals in one-to-one, one-to-many, or many-to-many settings. Coordination relies on managing interdependencies between activities performed by multiple actors (Malone and Crowston 1994), which are based on the mutual objects that are exchanged between activities (e.g., design elements, manufactured parts, and resources). Some categories related to coordination in the literature are: planning, control models, task/subtask relationship and information management, mutual adjustment, standardization, coordination protocol, and modes of operation. In order to effectively support coordination, collaborative computing needs to fulfill three important requirements: time management, resources, and shared artifacts produced along the activity chain. Cooperative work arrangements appear and dissolve again. In contrast to conflict (McGrath 1984), cooperation occurs when a group works toward a common goal (Malone and Crowston 1994) with high degrees of task interdependencies, sharing the available information through some kind of shared space (Grudin 1994). Cooperation categories can range from production (co-authoring), storage or manipulation of an artifact, to concurrency, access or floor control. Technically, cooperation is supported by systems with capabilities to send or receive messages, synchronously and/or asynchronously (Mentzas 1993), and also to develop or share documents (Grudin and Poltrock 1997).

Collaboration can occur in a specific time (synchronous, asynchronous) and place (co-located, remote) with some level of predictability. A set of temporal and spatial subdomains can be distilled, including: session persistence, delay between audio/video channels, reciprocity and homogeneity of channels, delay of the message sent, and spontaneity of collaboration. The application-level category identifies a set of typologies for collaborative systems and technologies (Ellis et al. 1991). Some examples include shared editors, video conferencing, shared file repositories, instant messaging, workflow systems, and social tagging systems. As a subcategory of social computing, regulation means the representation of mechanisms that enable participants to organize themselves into a collaborative working environment, where the regulation of collaboration activities concerns the definition and evolution of work rules to ensure conformity between the activity and group goals (Ferraris and Martel 2000). System properties can range from the architecture to the collaboration interface (portal, physical workspace, devices), content (text, links, graphic, data-stream), supported actions (receive, add, edit, move, delete), alert mechanisms, and access controls. In addition, social processes in work “strongly influence the ways in which CSCW applications are adopted, used, and influence subsequent work” (Kling 1991).

Groups are social aggregations of individuals with awareness of their presence, supported by task interdependencies, and guided by their own norms towards a common goal (Pumareja and Sikkel 2002). The collaboration cycle is bounded by awareness, which can be understood as the group’s perception of what each member develops, and the contextual knowledge that members have about what is happening within the group when executing activities in shared communication systems (Ackerman 2000; Mittleman et al. 2008). Cooperative work ensembles are characterized by size, composition, location, proximity, structure, formation, cohesiveness, autonomy, subject, behavior, and trust. The group members have a personal background (work experience, training, education), motivation, skills, attitude towards technology, satisfaction, knowledge, and personality. There is a specific complexity associated with each task. In addition, group tasks can be subdivided into creativity, planning, intellective, mixed-motive, cognitive-conflict, decision-making, contests/battles/competitive, and performances/psychomotor (McGrath 1984). They are supported by cultural impact, goals, interdependency and information exchange needs, bottlenecks, and process gain. The contextual or situational factors range from an organizational perspective (rewards, budget, training) to the cultural context (trust, equity), physical setting, and environment (competition, uncertainty, time pressure, etc.). Interaction variables are related to individual outcomes such as expectations and satisfaction on system use, group factors (e.g., quality of group performance), and system outcomes (enhancements and affordances). Some independent variables that characterize a socio-technical collaboration scenario include classes of criteria (functional, technical, usability, ergonomics), meta-criteria (scalability, orthogonality), and additional dimensions such as work coupling, information richness and type, division of labor, scripts, assertion, social connectivity, and process integration. More details are given in our previous paper (Cruz et al. 2012).

Exploring an interdisciplinary field constantly disrupted by technological and sociocultural changes

A cross-sectional analysis of CSCW and related research needs the consideration of previous studies with similar purposes to identify reasonable gaps and reduce duplicated efforts. Research advances in CSCW have spread across journals, conference proceedings, posters, tutorials, book series, technical reports, presentation videos, slides, and social media platforms developed for scientists and researchers. Prior approaches to evaluating CSCW bibliography emphasized a need for “building and maintaining a reference collection of relevant publications” (Greenberg 1991), describing its evolution in terms of systems and concepts deployed since the origins of the field. Jonathan Grudin also explored historical changes and demographics in 1994. According to the author, North American foci witnessed a paradigmatic shift from mainframe computing and large minicomputer systems (developed to support organizational tasks and goals in the 1960s) to applications conceived primarily for individual users in the 1990s. Similarities between the ACM Conference on Computer-Supported Cooperative Work and Social Computing (ACM CSCW) and the ACM CHI Conference on Human Factors in Computing Systems (CHI) characterized the North American CSCW community as a consequence of HCI research, “fueled by computer companies moving beyond single-user applications to products supporting small-group activity”. This contrasted with a considerable attention from European research labs on designing large-scale systems, often in user organizations. A less visible but also a critical source of contributions stemmed from Asian researchers affiliated with product development and telecommunications companies (Grudin 1994).

A review of prior groupware evaluations (Pinelle and Gutwin 2000) preceded the start of the twenty-first century with an examination of the ACM CSCW conference proceedings (1990–1998). Analyzing a sample of 45 publications that introduced and/or evaluated a groupware system, the findings pointed to a small number of deployments in real-world settings compared with academic or research implementations. Laboratory experiments and field studies were the primary types of evaluation, but “almost forty percent of the articles contained no formal evaluation at all”. The lack of formal evaluation was also corroborated by the fact that only a very small number of studies examined the impact on work practices in a user group when a piece of groupware was introduced.

CSCW has evolved in response to constant socio-technical advances, and the first known bibliometric study devoted to CSCW research in this century was published by Holsapple and Luo in 2003. The authors applied citation analysis to analyze variances in journals with great influence. Tracking contributions, a total of 19,271 citations from journal papers, conference series, technical reports, and books were analyzed across an 8-year period (1992–1999). An appreciation of the most significant CSCW outlets indicated its presence in journals from computer science, business computing, library science, and communications-related disciplines. The interdisciplinary nature of the CSCW subject was characterized by a research focus on four prominent journals, including an emphasis on design and use issues by the CSCW Journal, whose foundation coincided with the initial wave of critical mass interest in CSCW research. Technically, the CSCW Journal had a higher number of citations from conference proceedings, contrasting with the lowest number of journal citations and papers cited. Results also revealed that its number of citations remained constant in the last century.

Earlier studies of CSCW research demonstrated the value of examining its configuration by analyzing topics of interest, citation indicators, co-authorship networks, etc. Results achieved by Horn et al. (2004) denoted a “high volatility in the composition of the CSCW research community over decade-long time spans”. This view can corroborate a ‘punctuated equilibrium’ established on time frames of evolutionary stasis disrupted by short periods of sudden technological waves (Grudin 2012). Co-authorship network analysis revealed that CSCW researchers maintained a high proportion of collaborations with authors from outside fields of research between 1999 and 2003 (Horn et al. 2004). Collaboration robustness and co-authoring partnership were identified for HCI and CSCW cumulative networks (1982–2003), and a top 20 of HCI and CSCW prolific authors was identified using co-authorship networks. Physical proximity represented an important factor in establishing collaborations between HCI and CSCW researchers. This falls far short of other fields where researchers cooperate actively with distinct institutions, and it is also relevant to note that a considerable number of highly central authors in CSCW were also highly central in the HCI community. Oddly enough, there were quite a few cases in which co-authorship occurred between married couples. The authors went even further by performing a citation data analysis of the ACM CSCW conference series (2000–2002), which displayed a preference for citing journal papers, followed by CSCW-devoted conferences (i.e., ACM CSCW, GROUP, and ECSCW), books, CHI, other conferences, book chapters, URLs, and other reports.
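As an illustrative aside, the kind of co-authorship network analysis described above can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the procedure used by Horn et al. (2004): the author labels and the paper list are hypothetical, and the function names `coauthor_edges` and `degree` are introduced here purely for illustration. Each paper contributes one edge per pair of co-authors, and an author's degree is the number of distinct collaborators.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical author lists, one per paper (illustrative labels only).
papers = [
    ["A", "B", "C"],
    ["A", "B"],
    ["C", "D"],
    ["E"],  # single-authored paper: contributes no edges
]

def coauthor_edges(author_lists):
    """Count how many papers each pair of authors wrote together."""
    edges = defaultdict(int)
    for authors in author_lists:
        # Sort and deduplicate so each pair has one canonical key.
        for a, b in combinations(sorted(set(authors)), 2):
            edges[(a, b)] += 1
    return dict(edges)

def degree(edges):
    """Number of distinct co-authors per author (unweighted degree)."""
    neighbours = defaultdict(set)
    for a, b in edges:
        neighbours[a].add(b)
        neighbours[b].add(a)
    return {author: len(others) for author, others in neighbours.items()}

edges = coauthor_edges(papers)
degrees = degree(edges)
```

In this toy data, the weight stored for a pair in `edges` is the number of joint papers, and `degrees` ranks authors by breadth of collaboration. Authors who only published alone do not appear in `degrees`.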

A citation graph analysis of the ACM CSCW conference series (1986–2004) also mapped the field (Jacovi et al. 2006). Results pointed to a relatively low number of papers that were never cited. Eight prominent clusters emerged from the structural analysis, exhibiting “a stable presence of a computer science cluster throughout the years” with decreasing indicators in 2004 given the rise of multimedia tools and media spaces. This cluster was characterized by prototypes and toolkits for collaborative tools and architectures of computer systems, along with other subjects devoted to computer science. The evolution of the social science cluster in ACM CSCW revealed periods of decomposition into sub-topics and small clusters followed by a trend for convergence in the present century. Results from Convertino et al.’s (2006) study on the structural and topical characteristics of the ACM CSCW conference (1986–2002) showed a high number of contributions by academics from the USA (70–90%) compared with Europe (10–30%). A focus on group issues was predominant throughout the period of analysis, contrasting with organizational and individual levels. Theoretical approaches declined gradually, whilst design contributions focused on design aspects and/or system architectures remained consistently high. Ethnography and experimental approaches increased steadily, while communication and information sharing were considered the main functions of cooperative work presented in the ACM CSCW conference, followed by coordination and awareness. In addition, the main focus on design issues (system prototypes and architectures) was clearly visible. Curiously, the conference also showed an emphasis on topics fully oriented to CSCW and HCI instead of expanding its scope to topics from other disciplines.

Corroborating previous results, Wainer and Barsottini (2007) examined four ACM CSCW biennial series (1998–2004), indicating a remarkable decline of non-empirical papers, a stable portion of design and evaluation studies, a high number of descriptive studies, and a constant growth in the number of publications testing hypotheses about group dynamics and collaboration settings through experiments. Groupware design and evaluation papers usually describe system details instead of evaluation results, an indicator that collaboration systems have been created without major concerns about evaluating use contexts. Keegan et al. (2013) studied the dynamics of ACM CSCW proceedings (1986–2013) by applying social network analysis and bibliometrics. The authors suggested that the impact of CSCW authors and publications is strongly related to their position within inner collaboration and citation networks. From this point of view, they presented an analytical framework in which the “impact is significantly influenced by structural position, such that ideas introduced by those in the core of the CSCW community (e.g., elite researchers) are advantaged over those introduced by peripheral participants (e.g., newcomers)”. Recently, researchers have returned to exploring the ACM CSCW conference over a 25-year period (Wallace et al. 2017). The authors draw attention to how work practices have been impacted by changes in technology from 1990 to 2015, taking into account synchronicity and distribution, research and evaluation type, and the device hardware targeted by each publication.

CSCW studies are primarily concerned with revealing the nature of particular work practices within which collaboration occurs. However, a somewhat distinct but interrelated body of work has sought to understand collaboration dynamics in learning settings. Kienle and Wessner (2006) examined the Computer-Supported Collaborative Learning (CSCL) community and suggested some insights about the growth and maturity of its principal conference (1995–2005). The findings revealed a stable ratio of new and recurrent members and an increasing number of citations and co-authorship indicators. Recently, this study was updated by combining social network analysis, document co-citation analysis and exploratory factor analysis for gauging contemporary CSCL research (Tang et al. 2014). In the meantime, a bibliometric exercise was also made for the CRIWG International Conference on Collaboration and Technology (2000–2008), a publication venue “initially thought to be a meeting to exchange research approaches in the field of groupware for a few groups” (Antunes and Pino 2010). CSCW represents the main research area referenced on CRIWG conference proceedings. The primary focus of this community relies on building prototypes and designing collaborative systems and tools, followed by theory and model development. In addition, a large number of CRIWG papers do not present an emphasis on evaluation, addressing architectures, frameworks, prototypes and design issues without an articulated focus on building guidelines for developers.

Recent scientometric studies have also focused on CHI-related conference series occurring in specific regions or countries, including but not limited to the UK (Padilla et al. 2014), Korea (Lee et al. 2014), India (Gupta 2015), New Zealand (Nichols and Cunningham 2015), Brazil (Barbosa et al. 2016), and Australia (Mubin et al. 2017). Such studies applied techniques that range from topic modeling to trend analysis, author gender analysis, institutional-level analysis and co-authorship networks, citations, and authoring frequency (Mubin et al. 2017). In most instances, scientometric examinations exhibit a quantitative emphasis on citation analysis and authorship data (Table 1). It is also worth noting that such studies in the field of HCI exhibit low citation rates compared with other types of publications (e.g., system design).

Method

The dimension of a field is measured by the size of its literary core, rather than the number of researchers (Garfield 1972). Researchers and educators can benefit from the application of quantitative methods for estimating the impact and coverage of publications and information dissemination services while detecting prolific authors, affiliations, countries, and trending topics (Pritchard 1969). The quantitative approach to research is also concerned with objective assessment of data for clarifying inconsistencies and conflicts while contributing to formulate facts, uncover patterns, and generalize results by manipulating pre-existing statistical data (Mulrow 1994). Moreover, quantitative data collection methods and analytical processes are more structured, explicit, and strongly informed than would be the norm for qualitative approaches (Pope et al. 2000). Qualitative research is constructivist by nature and is driven by the understanding of a concept or phenomenon due to insufficient or novel research (Bird et al. 2009). On the other hand, quantitative science is grounded on postpositivist knowledge claims and can be applied to generate statistically reliable data from a typically larger sample (Sandelowski 1995).

Despite the usefulness of Systematic Literature Reviews (SLR), defined as “a form of secondary study that uses a well-defined methodology to identify, analyze and interpret all available evidence related to a specific question in a way that is unbiased and (to a degree) repeatable” (Keele 2007), they are arduous and require more time than other review methods. In addition, solid literature coverage of the observed phenomenon is essential for this method, which is more adequate when the data come from situations where the results can be broadly expected to be similar. It is very difficult to follow an updated research agenda using such methods due to the large number of papers published every year. Large volumes of information must be reduced for digestion, and it is also hard to document the whole process in an SLR, even for experienced researchers (Stapić et al. 2016). The systematic mapping study should be used when a topic is either very little or very broadly covered, and tertiary reviews are only suitable if several reviews in the domain of analysis already exist and should be summarized. A meta-analysis is a technique that statistically combines evidence from different studies to provide a more precise estimate of the effect (Glass 1976). Substantial differences between fields and years in the relationship between citations and many other variables (e.g., co-authorship and nationality of authors) can be expected, and so it is problematic to average results between fields or years (Bornmann 2015). As an objective and systematic means of describing phenomena, content analysis can be understood as “a research method for making replicable and valid inferences from data to their context, with the purpose of providing knowledge, new insights, a representation of facts and a practical guide to action” (Krippendorff 1980). The aim is to attain a condensed and broad description of the phenomenon, and the outcome of the analysis is a set of concepts or categories describing the phenomenon. Usually the purpose of those concepts or categories is to build up a model, conceptual system, conceptual map or category (Elo and Kyngäs 2008). Quantitative instruments are used for measuring publications, researchers, venues, institutions, and topics (Glänzel 2009). We are particularly interested in measuring the impact of publications in a first stage, so content analysis will be part of future work. We linked the various types of analyses carried out in this paper with the research questions addressed in the introductory section.

Sample

This work relies on the analysis of a 15-year time period (2001–2015) constituted by concomitant variances resulting from technological deployments to support or enhance collaborative practices. Our corpus of study comprises a total of 1713 publications, including 985 papers published in ACM CSCW, 298 papers from the International Conference on Supporting Group Work (GROUP), 165 papers from the European Conference on Computer-Supported Cooperative Work (ECSCW), and 265 articles published in Computer Supported Cooperative Work: The Journal of Collaborative Computing and Work Practices (JCSCW). When using scientometrics, it is necessary to consider several issues related to the selection of papers and data normalization (Holsapple and Luo 2003). Evaluating contributions in the field of CSCW is a challenging exercise since “any heuristic chosen to identify which venue or publication belongs to CSCW field is error prone and will be subject to criticism and arguments” (Jacovi et al. 2006). As regards the publication venues selected for this study, they were chosen mainly because their scientific committees and editorial boards include some of the most cited authors in CSCW. As such, one may expect that these venues provide a representative (although limited) sample of the work published in this century.

The specificity criterion relies primarily on Horn et al.’s (2004) categorization, which acknowledged ACM CSCW, GROUP, and ECSCW as devoted venues in the field of CSCW. The authors also recognized JCSCW as a CSCW-related outlet, while Holsapple and Luo (2003) considered JCSCW articles as extremely specialized and influential contributions to the body of CSCW knowledge for shaping research. This selection criterion was also discussed in other studies that considered the included sources as flagship venues of the field for regular publication by CSCW authors from North American and European communities (Jacovi et al. 2006; Grudin and Poltrock 2012). On the other hand, Horn et al. (2004) considered CHI and the ACM Symposium on User Interface Software and Technology (UIST) as non-CSCW publication outlets. We chose to include only peer-reviewed documents, so book chapters were excluded in order to bring consistency to the data analysis.

A vast number of studies have focused on CHI proceedings (as shown in Table 1), sketching a comprehensive portrait of this conference. The factors chosen to delimit the sample of this study also include the recurrent presence of CSCW topics in all selected venues, complementarity with previous bibliometric studies, and free (online) access to the proceedings of ECSCW (Grudin and Poltrock 2012). Moreover, we can access the entire sample electronically in the ACM Digital Library (ACM-DL) and SpringerLink database for extracting publicly available bibliometric indicators (Jacovi et al. 2006). This is not possible for venues such as the IEEE International Conference on Computer Supported Cooperative Work in Design (CSCWD) and International Journal of Cooperative Information Systems (IJCIS), which were initially examined but whose findings were not considered in this study due to their low representativeness when compared to the selected outlets. Moreover, we chose to limit the data collection and analysis by selecting only full and short papers, excluding plenary sessions, panels, posters, keynotes, introductions to special issues, workshops, extended abstracts, tutorials, and video presentations. Furthermore, editorials and tables of contents without a linear structure for analysis were also omitted.

Data collection and preparation

Seeking publications devoted to CSCW was a starting point for data retrieval and indexing. We used Google Scholar (GS) as the main source of our analysis, following previous authors such as Bartneck and Hu (2009) and Mubin et al. (2017), since it offers the widest coverage of scientific literature in comparison to Web of Science (WoS) and Scopus (Bar-Ilan et al. 2007; Meho and Yang 2007). The inclusion of ACM-DL and SpringerLink was primarily due to their freely accessible altmetrics and wide coverage of CSCW records. Although SCImago provides some indicators from Scopus at the journal and country level (Jacsó 2010), this service does not contain bibliometric data for some CSCW conferences. More details on the comparison of data sources for citation analysis will be given in the “Appendix”.

For the purpose of this study, we extracted the publication metadata for every work listed in the tables of contents provided by DBLP, ACM-DL, and SpringerLink for every CSCW venue from 2001 through 2015. Bibliometrics and altmetrics were gathered from a total of 1520 full papers and 193 short papers between May 28, 2016 and July 3, 2016. Self-citations were not removed from the dataset. For each record, the following data was manually inspected and recorded in a spreadsheet:

1. ID
2. Year of publication
3. Publication outlet
4. Paper title
5. Author(s)
6. Per-author affiliation
7. Country of authors’ affiliation
8. Author listed keywords
9. Citations of the paper (as available on GS, ACM-DL, and SpringerLink)
10. Altmetrics (downloads, readers, and social mentions)
11. Notes (e.g., errors identified in the study, and additional comments)
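The record layout listed above can be pictured as a small typed structure. The following is only a minimal sketch of one spreadsheet row: the class name, field names, and the `validate` helper are hypothetical and are not taken from the study, which recorded the data manually in a spreadsheet.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PaperRecord:
    """One row of the spreadsheet (hypothetical field names)."""
    paper_id: int
    year: int
    outlet: str                      # e.g. "ACM CSCW", "GROUP", "ECSCW", "JCSCW"
    title: str
    authors: List[str]
    affiliations: List[str]          # per-author affiliation, same order as authors
    countries: List[str]             # country of each affiliation
    keywords: List[str] = field(default_factory=list)
    # Citation counts keyed by source ("GS", "ACM-DL", "SpringerLink");
    # None marks a source that does not report a value for this paper.
    citations: Dict[str, Optional[int]] = field(default_factory=dict)
    # Altmetrics such as downloads, readers, and social mentions.
    altmetrics: Dict[str, int] = field(default_factory=dict)
    notes: str = ""

    def validate(self) -> None:
        """Basic sanity checks matching the sampling window of the study."""
        if not 2001 <= self.year <= 2015:
            raise ValueError(f"year {self.year} is outside 2001-2015")
        if not self.authors:
            raise ValueError("a record needs at least one author")
```

Keeping citations as a per-source mapping mirrors the fact that the three sources do not report values for every paper.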

Data processing

Once all the metadata were collected and stored in a spreadsheet, we used several methods for data cleaning and processing. These processes occurred in numerous stages and cycles. A visual exploration of the resulting datasets allowed us to become more familiar with the data while revealing faults in the data cleaning. In addition, such visualizations also unveiled new data to collect, as well as combinations and calculations that would be advantageous to explore. Error detection and de-duplication efforts were performed at different levels to avoid misunderstandings. Before we could examine author and affiliation information, we fixed misspellings, typos, and cases where the same entity appeared in different formats. Such problems are challenging for both humans and computer algorithms. Our sample contained numerous variations in the way one’s name is reported. For example, “David W. Randall”, “Dave Randall” and “David Randall” referred to the same author. As discussed by Horn et al. (2004), this array of analogous identities must be resolved to a single identifier (otherwise each identity will be treated as a separate entity). We manually standardized the authors, affiliations, countries, and keywords. Thus, 3509 keywords were selected for the period of 2001–2015 using name matching and the merging of synonyms, singular and plural forms of gerunds and nouns, abbreviations, and acronyms (Liu et al. 2014). This covered 1329 papers (around 85.85% of the 1548 documents published outside ECSCW in this period, since ECSCW publications do not contain author keywords).
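The resolution of name variants to a single identifier can be sketched as a lookup against a hand-built alias table. This is an illustrative sketch only: the standardization in the study was manual, and the `ALIASES` table and both function names below are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# Hypothetical alias table, standing in for the manual standardization step.
# Keys are lower-cased variants; values are the chosen canonical form.
ALIASES: Dict[str, str] = {
    "dave randall": "David Randall",
    "david w. randall": "David Randall",
}


def normalize_name(raw: str) -> str:
    """Collapse whitespace, then map known variants to one canonical name."""
    cleaned = re.sub(r"\s+", " ", raw).strip()
    return ALIASES.get(cleaned.lower(), cleaned)


def group_variants(names: Iterable[str]) -> Dict[str, List[str]]:
    """Group the raw spellings found in the data under each canonical name."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for raw in names:
        groups[normalize_name(raw)].append(raw)
    return dict(groups)


variants = group_variants(["David W. Randall", "Dave  Randall", "David Randall"])
```

Names missing from the table pass through unchanged, so unresolved variants remain visible for manual review rather than being merged by a guess.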

After performing manual standardization, a total of 5416 individuals and 703 institutions were identified and included for analysis. Many authors worked for distinct organizations. For instance, Liam Bannon worked (at least) for University of Limerick and Aarhus University, and Kjeld Schmidt worked for IT University of Copenhagen and Copenhagen Business School. Last but not least, 40 authors’ affiliation countries were crawled based on demographic data. We considered all the contributory institutions while assuming that the country of each organization does not necessarily match the nationality of the author. Once the input data were stored, a simple count of (and subsequent combination of calculations between) occurrences in the dataset was performed. We also compared publications by their citation patterns and re-read the papers that had the highest citation values to understand the factors influencing these values. Cluster analysis was applied for grouping terms based on their similarity. Nevertheless, different weights were given to each set of keywords according to their word frequency. Thus, we were able to identify core research concepts from the combined corpora of the four venues. In addition to the citation and authorship data also analyzed in earlier studies, we examined alternative metrics of impact with an “additional ecological validity” (Keegan et al. 2013). These altmetrics constitute a first step towards understanding the kind of readers CSCW attracts and how the literature and current databases help people accomplish their search needs. It should be noted that the techniques used here are not without problems, both in the data collection and interpretation phases. Some problems include but are not limited to treating short papers in the same way as long papers and giving self-citations the same level of importance as external ones.

Findings

In this section we report on the results obtained from our scientometric analysis of 15 years of CSCW research and discuss the main findings. We considered the total and average number of citations (and the quantity of publications without citations) according to GS, ACM-DL, and SpringerLink. Due to the limitations of databases in the citation retrieval process, we compared only the devoted venues and years for which the required indicators were available, omitting null values. Some conferences occur every 2 years, which affects citation counts and influences the overall results. In our initial exploration of the resulting data, the average number of citations in GS is higher than in the other citation indexes. GS gives a more comprehensive view of what is happening worldwide because it counts not only citations in scholarly papers but also references in other documents such as PhD theses, MSc dissertations, book chapters, and technical reports. Looking at the results, a total of 74,846 citations were retrieved from GS, while ACM-DL allowed counting 19,966 citations and SpringerLink showed only 425. Table 2 presents a summary of the quantitative indicators calculated and organized by publication venue and year.

Publication patterns and citation analysis

Citation practices are fundamental elements in scientometrics to assess research performance in the study of science (Garfield 1979; Zuckerman 1987). The citation of publications can be understood as an indicator more focused on intellectual than social connections, representing an important measure for characterizing the influence and quality of a paper in the scholarly literature (Wade 1975). Reasons for an increasing popularity of citation analysis range from its validity and reliability in assessing scientists’ research outputs to the wide access to bibliometric products, databases, and measures able to support citation-based research (Meho and Rogers 2008). The citation metrics from the three outlets of GS, ACM-DL, and SpringerLink were extracted to perform a citation analysis. The average number of citations was calculated by the formula:

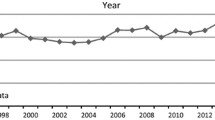
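Assuming the formula is the usual arithmetic mean (total citations divided by the number of papers in a venue), the calculation can be sketched as follows. The function name is hypothetical; the two input figures are the GS citation total and the article count reported for JCSCW in this paper.

```python
def mean_citations(total_citations: int, n_papers: int) -> float:
    """Average citations per paper: total citations / number of papers."""
    if n_papers <= 0:
        raise ValueError("n_papers must be positive")
    return total_citations / n_papers


# JCSCW figures reported in this paper: 14,324 GS citations over 265 articles.
jcscw_gs_mean = mean_citations(14324, 265)
print(round(jcscw_gs_mean, 2))  # 54.05
```

Under this reading, the result agrees with the 54.05 average reported for JCSCW.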

To explore RQ1, we performed a detailed bibliometric inspection of the publication rate and the number of citations across years. The number of accepted papers published per year and venue is presented in Table 2. By counting papers, we are able to identify a very similar pattern in the total number of full and short papers published annually between 2001 and 2011. Nonetheless, an exponential growth in the ensuing years is noticed, resulting from a change of policy in ACM CSCW as an effort to be inclusive while fostering the community development. The ACM CSCW conference changed to an annual edition in 2011, covering the widest range of research topics. In general, almost all of the remaining venues maintained a highly consistent policy in terms of accepted papers in every year, with a few exceptions reflected in the decreasing number of GROUP and ECSCW papers in the last years. An increasing trend (R² = 0.74) is visible when considering the variation in the total number of published documents by year (Fig. 2). This growth results from several technological and scientific advances achieved during the last decade along with the internal policies, submission frequency, and quality presented by authors. Nevertheless, a simple count of publications cannot always be considered a positive indicator of research impact and/or quality.
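A trend coefficient of this kind can be obtained by fitting a straight line to the yearly publication totals and computing the coefficient of determination. The sketch below assumes an ordinary least-squares linear fit, which the text does not state explicitly. The function name is hypothetical and the yearly counts are placeholders, not the values of Table 2.

```python
from typing import Sequence


def linear_r_squared(xs: Sequence[float], ys: Sequence[float]) -> float:
    """R^2 of an ordinary least-squares line y = intercept + slope * x."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual and total sums of squares.
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Placeholder yearly totals (hypothetical, for illustration only).
years = [2001, 2002, 2003, 2004, 2005]
papers = [60, 95, 70, 110, 90]
r2 = linear_r_squared(years, papers)
```

A value near 1 means the yearly totals lie close to a straight line; a value near 0 means a linear trend explains little of the year-to-year variation.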

A citation analysis revealed particularly high citation indicators for the CSCW Journal, most notably in 2002, 2005, and 2003, in that order. The average number of citations per paper in each venue was 54.05 (JCSCW), 42.52 (ACM CSCW), 40.86 (GROUP), and 39.2 (ECSCW). As noted in earlier studies (e.g., Glänzel and Schoepflin 1995), the age of a paper is a significant factor for citations. Nevertheless, only ACM CSCW and ECSCW have complied with this rule by showing a regular decrease in the average number of citations across years. In contrast, GROUP increased its average citations in 2005 and again in 2009, whilst JCSCW confirmed this oscillation pattern in 2002, 2005, 2007, 2010, 2012, and 2013. Concerning the variation in the total number of citations by year, increasing trends are seen in 2002, 2005, 2008–2010, and 2013. Accentuated decreases were noticeable for GROUP (2007, 2010), ACM CSCW (2004, 2008), ECSCW (2005), and JCSCW (2006, 2009) as a result of the temporal dispersion (yearly or biyearly) that influences this kind of data. ACM CSCW demonstrated the highest number of citations in 2002, showing a significant impact on CSCW research as a mature community. There was also a considerable gap between the average number of citations and the total number of authors per venue in ACM CSCW compared with other venues.

Extrapolating to a more granular perspective regarding the JCSCW citations, they remained constant between 2001 and 2015. A similar trend had already been recognized for the 1992–1999 period when Holsapple and Luo (2003) identified a total of 1246 publications and 239 journals cited, from which 28.21% symbolized journal citations, 38.85% represented book citations, 6.04% reflected technical report citations, and 24.9% were citations from conference proceedings (in which 21% represented CSCW devoted conferences) in a total of 4417 citations. In our study, JCSCW had 14,324 citations in GS, 3044 citations in ACM-DL (with an average of 11.89 citations and 29 papers without any citation), and 265 citations in SpringerLink (with an average number of 13.58 citations per paper and 31 papers without citations). The most cited papers in our sample were published in ACM CSCW, GROUP, and JCSCW (Table 3). Their main topics ranged from social networks and online communities to crowd work, Wikipedia, and instant messaging. It should be noted that the type of topics addressed by the community has a significant effect on the number of citations.

The dispersion of cited studies in the ACM CSCW proceedings presented a “strong bias toward citing recent papers within CSCW” (Horn et al. 2004), which is corroborated by the deviation from trend in the number of citations for this century. If we look at the number of citations received per year, it shows a typical birth–death process: a paper begins with few citations, the number then increases, and finally the citations die away as the content becomes obsolete. Mingers and Leydesdorff (2015) also discussed other variants of this pattern, including “shooting stars” that are highly cited but die quickly and “sleeping beauties” that are ahead of their time. As others have highlighted (e.g., Bartneck 2011; Gupta 2015), it usually takes 1 year to get the first citations and they tend to increase annually thereafter. The reasons leading other authors to cite a paper can derive from multiple factors, including the pertinence of the theme to the topic that they want to address, quality of work, proximity of members in a scientific community (Jacovi et al. 2006), amongst factors that can considerably neglect a research approach by its partial lack of knowledge and empathy. In addition, Hu and Wu (2014) have already noted an impact of paper length on citation likelihood. However, this tendency may require further scrutiny (Mubin et al. 2017).

Table 4 presents the number of publications that had 0–1, 2–10, 11–25, 26–50, and > 50 citations. As the table shows, JCSCW had the highest ratio of papers with more than 50 citations, followed by GROUP, ACM CSCW, and ECSCW. On the other hand, ECSCW presented the highest ratio of papers without citations, while ACM CSCW had the lowest number of papers that were never cited, a tendency corroborated by Jacovi et al. (2006). This kind of publication data can be compared with non-exclusive CSCW conferences such as CHI (Henry et al. 2007), whose large impact and prestige are related to a balance between low acceptance rates and a considerable number of attendees and papers cited in distinct venues over the years.
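The citation ranges used in Table 4 amount to a simple binning of per-paper citation counts. A minimal sketch follows; the function names and the sample counts are hypothetical, while the bin edges are those given in the text.

```python
from collections import Counter
from typing import Iterable

# Inclusive bin edges taken from the ranges of Table 4; anything above 50
# falls into the open-ended ">50" bin.
BINS = [(0, 1, "0-1"), (2, 10, "2-10"), (11, 25, "11-25"), (26, 50, "26-50")]


def citation_bin(citations: int) -> str:
    """Return the Table 4 range label for one paper's citation count."""
    if citations < 0:
        raise ValueError("citation counts cannot be negative")
    for low, high, label in BINS:
        if low <= citations <= high:
            return label
    return ">50"


def bin_counts(citation_counts: Iterable[int]) -> Counter:
    """Count how many papers fall into each citation range."""
    return Counter(citation_bin(c) for c in citation_counts)


# Placeholder citation counts for a handful of papers (hypothetical).
summary = bin_counts([0, 3, 3, 18, 60, 120])
```

Dividing each bin count by the number of papers in a venue gives the per-venue ratios that the comparison above relies on.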

Author analysis

Scientific communities can be conceived as clusters of researchers with essential roles in modern science (Mao et al. 2017a). A deeper insight into contribution patterns (RQ2) led us to study the distribution of papers per author. The total number of author occurrences in our dataset was 5416. A valuable metric of community forming and growth is repeat authorship. In total, there were 30 authors with 10 or more publications, 17 of whom were female. These results differ to some extent from those of Bartneck (2011), where there were 3 authors with more than 10 publications in the International Conference on Human–Robot Interaction. Regarding the average number of authors per paper, ACM CSCW shows the highest values, followed by ECSCW, GROUP, and JCSCW. We observe from Table 2 that the 2001–2015 period had a mean of more than 3.13 authors per paper. A total of 2485 authors (45.9%) published more than one paper. Table 5 shows the most prolific authors in the field in terms of published papers. As we can observe, these authors contributed to almost half of the publications (49.45%) and 52.8% of the total number of citations. The results showed high indicators for Carl Gutwin, Robert E. Kraut, Loren G. Terveen, and John M. Carroll. The authors with the highest average number of citations were Rebecca E. Grinter, Mary Beth Rosson, Quentin Jones, and Saul Greenberg. We also observed that these authors mostly appeared in authoring positions other than first author. In most instances, these authors have high h-index ranks according to GS and hold leadership positions in research groups or labs.

Concerning the total citation count per author, Carl Gutwin was the most cited. It is worth mentioning that these results only reflect the volume of publications in the twenty-first century. Researchers like Jonathan Grudin and Clarence A. Ellis (R.I.P.) entered the sample with fewer than 10 papers but were extremely influential for the CSCW community. Matching this set of results with Oulasvirta’s (2006) findings for the most cited CHI authors, we also assume that all authors presented in Table 5 have not only been investigating a topic but have established new areas of significant research. In addition, there are three cases of authors who were equally prolific in CHI (1990–2006): Michael J. Muller, Steve Whittaker, and Steve Benford. Extrapolating to a citation analysis of the top 22 authors in the entire field of HCI (Meho and Rogers 2008), Steve Benford and Tom Rodden also had some of the highest scores. The individual productivity indicators of these authors are strongly related to their recognition for contributing ideas and discoveries in highly ranked outlets.

Looking at the results provided by Table 6, GROUP and ACM CSCW have around 3–4 authors per paper, whilst ECSCW and JCSCW have a prevalence of 1–2 authors per article. Our study identified an average of approximately 3 authors per paper in the specialized venues of CSCW, while the total number of authors has been increasing considerably as a result of the policy changes in ACM CSCW. As expected, the number of authors per paper follows the curve of the total number of publications (Fig. 2). In order to characterize the evolution and impact of CSCW research through social network analysis, Horn et al. (2004) revealed a total of 22,887 papers in the HCI community with an average of 3.7 collaborators, which can indicate that CSCW researchers had a high proportion of co-authors outside the CSCW community upon the birth of the field in the 1980s while maintaining a large number of connections to co-authors from non-CSCW devoted outlets (e.g., CHI). An average number of 5.1 CSCW co-authors and 4.5 non-CSCW collaborators were identified in a total of 188 authors (1999–2003). In addition, we are also able to conclude that the CSCW devoted venues have similar values in terms of average number of authors per paper compared with HCI dedicated conferences (Gupta 2015).

Institutional and geographical distribution of the accumulated contribution to the CSCW literature

To further investigate RQ2, we analyzed the authors’ affiliations and the countries in which they are located. The publications come from a total of 40 countries. As expected, our findings demonstrated a prevalence of institutions from the USA in all venues (54.47%), as it is where the first CSCW conference took place. Researchers from institutions in the UK also produced a vast set of papers, with higher values in JCSCW, ECSCW, and ACM CSCW. Table 7 presents a detailed perspective of the distribution of countries by venue. These indicators can be compared with those of the CHI conference for the 1981–2008 period, where the majority of its authors were affiliated with institutions from the USA, Canada, and the UK (Bartneck and Hu 2009). In addition, Convertino et al.’s (2006) results on the structural and topical characteristics of the ACM CSCW conference (1986–2002) also presented a high number of contributions by academics from the USA (70–90%) compared to Europe (10–30%) and Asia (0–10%). More recent evidence (Bartneck and Hu 2010) reveals that the authors’ affiliation institution is an influential factor for citations.

At a narrower level of analysis, Carnegie Mellon University led the way as the most representative institution with 261 authors, followed by University of California at Irvine, as shown in Table 8. If we take a closer look at the top 30 institutions ranked by research productivity we find that they include big companies such as IBM, Microsoft, and Xerox. At this stage, we excluded the department level from our analysis. Some of the most influential institutions in CSCW devoted outlets were also significant in CHI for the 1990–2006 interval, as stated by Oulasvirta (2006). Most of these institutions have an outstanding overall reputation, a historical background in CSCW, and appropriate funding and personnel resources. This provides the basis for encouraging new generations of highly qualified scientists and makes it possible to employ several scientists working on particular topics. Research funds are critical to shape the quantity of science generated by universities and research labs. Nevertheless, such studies are also guided by the demand for science at the regional level. For instance, several ethnographic studies have been conducted taking into account the sociocultural aspects related to the location in which the cooperative work arrangement occurs.

Collaboration types and their influence on scientific productivity indicators

The advent of the twenty-first century expressed a paradigmatic shift in the relationships between North American and European CSCW communities, which seem to change every decade in terms of program committees, covered topics, research methods, experimental and analytic approaches (Grudin 2012). Collaboration is an intrinsically social process and often a critical component in scientific research (Lee and Bozeman 2005; Jirotka et al. 2013; Wang et al. 2017; Qi et al. 2017). In its basic form, scientific collaboration plays a significant role in the production of scientific knowledge (Ziman and Schmitt 1995) and occurs as a synergetic vehicle for resource mobilization due to the fact that a researcher may not possess all the expertise, time availability, information, and ideas to address a particular research question. More details on the predictors of scientific collaboration will be given in the next section. To investigate how collaboration has evolved over time in CSCW research (RQ3), we adopted Melin and Persson’s (1996) criteria to analyze the different types of collaboration and their influence on scientific production (Table 9). According to the authors, each paper can be internally (e.g., at a university), nationally, and internationally co-authored. It can be reasonably assumed that a paper is co-authored if it has more than one author, and institutionally co-authored if it has more than one author address, suggesting that the authors come from various institutions, departments, and other research units. Scientists have used co-authorship analysis to assess collaborative activity. Nonetheless, we should sound a note of caution with regard to such findings since this indicator can underestimate the presence of less visible forms of collaboration in disciplinary fields (Katz and Martin 1997).
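The collaboration criteria described above can be read as a small decision rule over the affiliations of a paper's authors. The sketch below is one possible reading, not the coding procedure used in the study: the function name is hypothetical, each author is assumed to have exactly one (institution, country) pair, and the labels follow the categories used in this paper (individual, local, domestic, international).

```python
from typing import List, Tuple

Affiliation = Tuple[str, str]  # (institution, country), one per author


def collaboration_type(affiliations: List[Affiliation]) -> str:
    """Classify a paper by the affiliations of its authors."""
    if not affiliations:
        raise ValueError("a paper needs at least one author")
    if len(affiliations) == 1:
        return "individual"            # single-authored paper
    institutions = {inst for inst, _ in affiliations}
    countries = {country for _, country in affiliations}
    if len(countries) > 1:
        return "international"         # institutions in distinct countries
    if len(institutions) > 1:
        return "domestic"              # distinct institutions, same country
    return "local"                     # co-authors from the same institution


# Placeholder affiliations (hypothetical institution names).
example = collaboration_type([("University A", "USA"), ("University B", "Canada")])
```

Checking countries before institutions ensures that a paper spanning two countries is counted once, as international, rather than also as domestic.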

The effects of collaboration in CSCW were primarily expressed at a local level between researchers from the same organization working together, followed by domestic collaborations among authors from distinct institutions within the same country, and international connections between authors from distinct institutions situated in distinct countries (Fig. 3). Bartneck and Hu’s (2010) findings appear to support a similar pattern regarding the CHI conference proceedings. Very close associations are visible in our entire sample, an indicator corroborated by Keegan et al. (2013). Such evidence is visible for collaborations occurring between institutions from the same country with a reduced number of outside connections. Generally, international collaborations involve only 1 author from an external university (commonly from neighboring countries such as the USA and Canada). This means that repeat authorships and familiar interactions between authors do occur, which can be particularly observed in a pattern of repeat authorship in the core of each CSCW devoted outlet (e.g., Saul Greenberg, Carl Gutwin). Nevertheless, some of the international authorships derive from funded projects or conference editions occurring in a particular country (except GROUP, which has been held on Sanibel Island, Florida, USA since 2003). Our study provides further evidence for the fact that distance is no longer a barrier as it was in the past (Wagner et al. 2016), despite the heterogeneity between some regions in their propensity to collaborate.

Unsurprisingly, most of the scientific collaboration efforts take place in the university sector. Consequently, scholars pay more attention to their underlying laws and patterns (Wang et al. 2017). Some of these collaborations also reflect a researcher’s past history at a particular university. For instance, both Cleidson de Souza and Pernille Bjorn spent time in the USA and returned to institutions in their country of origin. Following previous evidence on the decreasing role of practitioners when compared with academics in the field of HCI (Barkhuus and Rode 2007), our sample shows a main authorship component constituted by scholars/researchers from universities and research laboratories (N = 5212) in comparison with corporate professionals (N = 170). This finding suggests that the low number of interactions between academia and industry might mean that the industrial sector “is not broad enough to satisfy the collaborative needs of the universities” (Melin and Persson 1996). If we take a closer look at the industrial co-authorships we find that such professionals were mainly from software companies (e.g., Google Inc) and the aerospace industry (e.g., Boeing company). A more sporadic set of co-authorships was recognized from a total of 28 health care professionals working in research units and hospitals, 5 researchers from aerospace industry, 4 independent researchers, 3 bank collaborators, 2 climate researchers, 1 library worker, and 1 AI researcher. However, such results must be interpreted with caution. As presented in Fig. 4, only JCSCW showed more individual authorships than international collaborations, while a prevalence of local collaborations was noted in all venues. When considering the type of collaboration and its influence on the average number of citations (Fig. 5), a similar pattern is visible for all venues, denoting a strong impact of internal (local) collaborations on citation indicators, followed by domestic co-authorships, international collaborations, and individual authorships.

Keyword analysis

Keywords have been used to abstractly classify the content of a paper, providing the basis for examining the key topics and aspects in a particular field of research (Heilig and Voß 2014). By considering co-occurrences, it is possible to identify interconnections between topics through word-reference combinations within the keyword list of publications (Van den Besselaar and Heimeriks 2006). A closer inspection of the keywords the CSCW authors have chosen to characterize their papers (RQ4) revealed a total of 83 main clusters. The clusters originated from a manual keyword analysis (Heilig and Voß 2014). To obtain an overview, we grouped keywords under similar topics from a total of 1329 papers, due to the lack of access to or absence of keywords in the remaining publications. We identified core and major clusters and analyzed their content by adopting Jacovi et al.’s (2006) methodological guidelines. Instead of using clustering algorithms like hierarchical clustering (Jain and Dubes 1988), manual clustering was used for aggregating data from keywords, identifying the main topics according to their frequency. The data were pre-processed and the clusters were identified on the basis of transformed values, not on the original information. The ranking of the top clusters with a high frequency of keywords (f greater than or equal to 13) is provided in Table 10.
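Once each keyword has been assigned to a cluster by hand, ranking the clusters by frequency and applying the threshold is a simple tally. The following sketch assumes that a manual keyword-to-cluster mapping already exists. The function names, the mapping, and the sample keyword lists are hypothetical; only the default threshold of 13 comes from the text.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def cluster_frequencies(
    paper_keywords: Iterable[List[str]],
    keyword_to_cluster: Dict[str, str],
) -> Counter:
    """Tally keyword occurrences per manually assigned cluster."""
    counts: Counter = Counter()
    for keywords in paper_keywords:
        for keyword in keywords:
            cluster = keyword_to_cluster.get(keyword.strip().lower())
            if cluster is not None:   # unmapped keywords are left out
                counts[cluster] += 1
    return counts


def top_clusters(counts: Counter, min_frequency: int = 13) -> List[Tuple[str, int]]:
    """Clusters with frequency >= min_frequency, most frequent first."""
    return [(c, f) for c, f in counts.most_common() if f >= min_frequency]


# Hypothetical manual mapping and keyword lists, for illustration only.
mapping = {
    "activity theory": "Theories and models",
    "ethnography": "Theories and models",
    "instant messaging": "Computer-mediated communication",
}
papers = [["Activity Theory", "instant messaging"], ["ethnography"]]
counts = cluster_frequencies(papers, mapping)
ranked = top_clusters(counts, min_frequency=2)
```

Lower-casing keywords before the lookup is a small normalization step; the merging of synonyms and plural forms is assumed to be encoded in the mapping itself.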

Theories and models constitute the core cluster in CSCW research. For our initial exploration, we have identified terms such as activity theory, ethnography and ethnomethodology, social network analysis, distributed cognition, workplace studies, and survey. CSCW is the second most representative cluster, constituted by general terms introduced or already established in the field. Examples include but are not limited to common ground, articulation work, boundary work, socio-technical walkthrough, and collaboration patterns. A methodological emphasis on system deployment and evaluation seems to be gradually giving way to studies of existing social computing systems and technologies (Keegan et al. 2013). This suggests that deployment and evaluation publications may have less impact on the future directions of CSCW research. A considerable number of papers have explored the effect of Computer-Mediated Communication (CMC) technologies such as electronic mail and instant messaging on socially organized practices. The results indicate that recent research activities in CSCW have also focused on the domains of health care and medical informatics, including but not limited to online health communities, electronic medical and patient records, autism spectrum disorders and chronic illnesses, and elderly caregiving.

CSCW research has also explored online behavior on social networks and similar message exchange and file sharing technologies. The North American community has been emphasizing these behavioral studies on Facebook and microblogging, as indicated by Schmidt (2011). Such evidence, supported by a strong focus on design issues (e.g., usability, user-centered design, participatory design), reflects a possible trend for convergence in CSCW (Grudin 2012). These findings reinforce the view that CSCW has moved its focus away from work and towards the support of social practices in everyday life settings (Schmidt 2011), covering a diverse set of leisure pursuits and research domains related to archaeology, architecture, art, oceanography, and the aerospace industry. In addition, CSCW researchers have shown a strong interest in the examination of home practices and the integration of new technologies into family life.

Studies on time and space dimensions also constitute a relevant percentage of the total themes covered by CSCW research. In addition, another set of relevant clusters included teamwork and group dynamics (addressing issues such as group identity, size, and performance), awareness, security and privacy (e.g., adolescent online behavior), and community building. Some of these research topics coexist with the socio-technical classification model of CSCW (Fig. 1). A considerable number of papers have explored the situated role of information within cooperative work settings, including knowledge management and collaborative information behavior. As put forward by Ackerman et al. (2013), this is a key intellectual achievement of the CSCW research community. A further important set of contributions has been made in the domain of games and simulations, which span from virtual worlds to haptics and head-mounted displays, exergames, Massively Multiplayer Online Games (MMOG), and 3D telepresence. A growing trend for studies on crowdsourcing has been established since 2006 (when the term was introduced). Some topics of intensive research in recent years include citizen science, human computation, collective intelligence, the wisdom of crowds, and crowdfunding.

Research has also tended to focus on CSCL, including informal learning, peer learning, situated learning, and Massive Open Online Courses (MOOC). On the other hand, software engineering has been one of the most addressed areas in recent years. In addition to the established topics of global and open software development and code search on the Web (e.g., GitHub studies), a recent focus has been placed on crowdsourcing software development (Mao et al. 2017b). Publications on coordination (e.g., Rolland et al. 2014) and cyberinfrastructure (e.g., Lee et al. 2006) have covered research findings on coordinating centers, scientific collaboration, and scientific software development. This means that collaborative computing affects how scientific knowledge is constructed, verified, and validated (Jirotka et al. 2013) and thus it significantly accelerates the publication of scientific results and makes access to these scientific papers much easier (Qi et al. 2017). Enterprise/organizational issues such as business process management, inter-organizational crisis management, and organizational memory have also been addressed. As reported before, groupware publications appeared mainly in the first decade of this century, including toolkits, frameworks, and system prototypes. Another set of relevant contributions regards the study of websites that use a wiki model, allowing the collaborative modification of their content and structure directly from a Web browser. Wikipedia has represented an attractive ecosystem for performing behavioral studies (e.g., Panciera et al. 2009). Crisis management and emergency response also show an important trend, while the use of machine learning and natural language processing has been explored in combination with crowd-based human computation. An example is the Flock system (Cheng and Bernstein 2015), an interactive machine learning prototype based on hybrid crowd-machine classifiers. Additional themes that can be distilled from this broad literature include but are not restricted to tangible and surface computing, ethics and policy, sentiment analysis, visual analytics, office and workspace, interruptions, context-aware computing, decision making, smart cities and urban computing, ubiquitous computing, and gender studies. Table 11 summarizes the most addressed keywords in CSCW by frequency range.

Measuring research outputs through altmetrics

Social media platforms have become integrated into the scholarly communication ecosystem. Previous work on altmetrics has addressed their usefulness as sources of impact assessment (Sugimoto et al. 2017). Nonetheless, there is still “a lack of systematic evidence that altmetrics are valid proxies of either impact or utility although a few case studies have reported medium correlations between specific altmetrics and citation rates for individual journals or fields” (Thelwall et al. 2013). An altmetric exercise in the field of CSCW was undertaken to address RQ5, using different sources that have been proposed in the literature as alternatives for measuring the impact of scholarly publications (Priem and Hemminger 2010). Download data were extracted from both ACM-DL and SpringerLink, and the latter was also used for obtaining reader counts from Mendeley, CiteULike, and Connotea. In addition, SpringerLink was used for gathering mentions in blogs, Wikipedia, Twitter, Facebook, Q&A threads, Google+, and news outlets. Table 12 presents an overview of the alternative metrics retrieved for analysis.

It is possible to observe that GROUP proceedings provided the highest ratio of (cumulative) downloads, followed by ACM CSCW and ECSCW. Matching these indicators with our bibliometric analysis, a slight similarity between the highest cited and the most downloaded papers is reported in Table 13. In this context, 17 of the most downloaded papers were also among the highest cited papers in the current century of CSCW research. Furthermore, ACM CSCW had the highest number of papers with more than 5000 downloads (Table 14), while the GROUP proceedings accounted for the least downloaded ones.
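The comparison between the highest cited and the most downloaded papers amounts to intersecting two top-k rankings. A minimal sketch of that comparison is given below, under the assumption that each paper is available as a record with citation and download counts; the field names, the value of k, and the toy records are hypothetical and do not reproduce the data behind Table 13.

```python
def top_k_ids(papers, metric, k):
    """Return the ids of the k papers with the highest value for the given metric."""
    ranked = sorted(papers, key=lambda paper: paper[metric], reverse=True)
    return {paper["id"] for paper in ranked[:k]}


def cited_downloaded_overlap(papers, k):
    """Return the ids that appear in both the top-k cited and top-k downloaded sets."""
    return top_k_ids(papers, "citations", k) & top_k_ids(papers, "downloads", k)


# Hypothetical toy records (not taken from the study's dataset).
toy_records = [
    {"id": "A", "citations": 120, "downloads": 5000},
    {"id": "B", "citations": 90, "downloads": 800},
    {"id": "C", "citations": 60, "downloads": 4200},
    {"id": "D", "citations": 10, "downloads": 3000},
    {"id": "E", "citations": 5, "downloads": 100},
]

shared = cited_downloaded_overlap(toy_records, k=3)
```

In the toy data, papers A and C appear in both rankings, while B is highly cited but rarely downloaded and D shows the opposite pattern.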

Readers of ECSCW and JCSCW were mainly computer and information scientists, followed by social scientists. In addition, both venues presented a high number of readers from disciplines such as design, electrical and electronic engineering, business and management, education, and psychology. Table 15 shows a complete view of readership by discipline. PhD students are the most frequent readers of ECSCW and JCSCW, followed by M.Sc. students and researchers (Table 16). Such readers are mostly from the USA, Germany, and the UK, as shown in Table 17.

A smaller number of social mentions was gathered for scrutiny. As presented in Table 18, they are mostly from the USA and the UK. Concerning the professional status of the authors of social mentions, most are members of the public, followed by scientists, science communicators (journalists, bloggers, editors), and practitioners (doctors and other health care professionals). Finally, most of the readers use Mendeley to access publications, while Twitter is the most used platform for social mentions (Table 19).

Despite the growing interest in altmetrics research (Erdt et al. 2016), the field of CSCW still lacks solid sources for measuring the impact of its publications. For instance, only the publication venues covered by SpringerLink (i.e., ECSCW and JCSCW) provided metrics on the impact of CSCW research on social media. In addition, we can also identify a lack of conversation flow in social media about CSCW publications. The community appears to be somewhat “silent” in terms of expressiveness, acting mostly in “offline” mode through views and downloads.

Discussion

The investigation of CSCW research over a period of 15 years has reinforced the importance of gathering quantitative evidence to reflect on the publication data and thus understand the field and the ways it is evolving over time. As suggested by Wania et al. (2006), a bibliometric study can be suitable to determine how design and evaluation communities are related in the field of HCI and how it can be used to build a comprehensive theory. To answer RQ6, we discuss the aspects that emerged from the analysis while grounding our findings in previous related work. Concerning the publication and citation patterns in CSCW research (RQ1), remarkable indicators of scientific production at the beginning of the new century and expressed again during this decade are in line with previous evidence for a possible convergence between research communities in the field (Grudin 2012). Seminal contributions provided by ethnographic (e.g., Hughes et al. 1994) and conceptual work (e.g., Schmidt and Bannon 1992; Grudin 1994) remain some of the most cited publications, influencing a lot of studies that were preceded by the launch of collaborative technologies such as Wikipedia and Facebook. In our initial examination of the results, we also noted that the core of CSCW papers has an average of 11–25 citations. The relatively small number of papers without citations (51) suggests that the field has a healthy and evolving nature.

Meho and Rogers (2008) placed JCSCW (4th place in journals) and ACM CSCW (2nd place in conference proceedings) within the top sources of citations in HCI, taking into account the intersection between WoS and Scopus. Publication metadata covering CHI were also examined by Henry et al. (2007), indicating its large impact and prestige related to a balance between low acceptance rates and a considerable number of attendees and publications cited in distinct conferences over the years. The average number of citations for all CHI publications (1990–2006) was 3.2, and 61% of full papers received fewer than 6 citations (Oulasvirta 2006). Mubin et al. (2017) provided data on the Nordic Conference on Human–Computer Interaction (NordiCHI) to compare and contrast. According to the authors, just 3 NordiCHI papers had more than 50 citations in Scopus.

Extrapolating to the authorship indicators (RQ2), the field differs from others such as biology in terms of the number of authors per paper, which is particularly curious given the highly collaborative nature of CSCW (Keegan et al. 2013). Our bibliometric examination adds another set of correlated layers. First, highly close relationships (1–4 authors per paper) and repeated co-authorship data denote a great affinity between authors, including some collaborations among members of the same family. A closer inspection revealed a very small number of papers with more than 6 authors. Possible reasons include a low number of PhD students in the field, who typically work on long projects that publish on a yearly or biyearly basis, and a possible distancing of students from research after the completion of their program. CHI, for instance, has a great number of repeat authors who started publishing in the 1980s as graduate students and are still co-authoring papers with their students (Kaye 2009). This previous examination of CHI conference proceedings emphasized a decrease in single author publications, a consistent growth in 2-to-4 authors per paper, and a growing frequency of papers with more than 6 authors (1983–2007). To the best of our knowledge, the evidence from this study points towards the claim that the repeat authorship rates for ACM CSCW are lower than those for CHI.