Abstract

The goal of creating evidence-based programs is to scale them at sufficient breadth to support population-level improvements in critical outcomes. However, this promise is challenging to fulfill. One of the biggest issues for the field is the reduction in effect sizes seen when a program is taken to scale. This paper discusses an economic perspective that identifies the underlying incentives in the research process that lead to scale-up problems and offers potential solutions to strengthen outcomes at scale. The principles of open science are well aligned with this goal. One prevention program that has begun to scale across the USA is early childhood home visiting. While there is substantial impact research on home visiting, the overall average effect size is 0.10, and a recent national randomized trial found attenuated effect sizes in programs implemented under real-world conditions. The paper concludes with a case study of the relevance of the economic model and open science in developing and scaling evidence-based home visiting. The case study considers how the traditional approach for testing interventions has influenced home visiting’s evolution to date and how open science practices could have supported efforts to maintain impacts while scaling home visiting. It concludes by considering how open science can accelerate the refinement and scaling of home visiting interventions going forward, through accelerated translation of research into policy and practice.

The hope is that evidence-based prevention programs, if scaled up, will shift population-level outcomes in communities. However, the challenges in scaling evidence-based programs and maintaining the promised impacts are large, both in building the complex infrastructure necessary to support programs effectively (Braithwaite et al., 2018; Bold et al., 2018; Fagan et al., 2019; Greenhalgh & Papoutsi, 2019) and in ensuring that the programs scaled can deliver those impacts (Al-Ubaydli et al., 2020a; Bangser, 2014). The problems around scale-up can lead to a waste of resources, a missed opportunity to improve people’s lives, and a diminution in the public’s trust in the scientific method’s ability to contribute to policymaking (Al-Ubaydli et al., 2020a). The science of scaling is critical to ensuring that the promise of evidence-based policy to deliver community-level outcomes is fulfilled.

Implementation scientists have been exploring what features are necessary for programs to deliver at scale, and much progress has been made (e.g., Bauer et al., 2015; Bold et al., 2018; Durlak, 2015). Much of the work in implementation science begins after a program has demonstrated efficacy in rigorous research, when the goal is to examine adoption, implementation, and scale-up (Baker, 2010; Dearing & Cox, 2018). A key concern in implementation science is the “voltage effect”: treatment effect sizes observed in the original impact studies diminish substantially when the program is rolled out at larger scale (Gottfredson et al., 2015; Kilbourne et al., 2007; Weiss et al., 2014). The literature has used this cautionary tale to stress that scaling up is an intricate, complex process (Braithwaite et al., 2018), and it often implies that the optimism advertised in the original research may be unjustified (Milat et al., 2016). However, much of the literature considers the problem only after the research on evidence-based programs is completed and does not look at the scientific system that incentivizes the creation of evidence for scaling (Milat et al., 2013).

The paper will present an economic perspective on the incentives and disincentives in the current scientific enterprise to generate evidence that supports effective scaling of evidence-based programs. The paper briefly discusses these threats to effective scaling in the current system and provides recommendations on changes to the system, including how the use of open science practices can begin to address the identified problems. Finally, the paper concludes with a case study of early childhood home visiting that highlights the problems in the current science of scaling and how open science practices could address some of the issues.

Incentives and Disincentives in the Scientific System

To date, economics has largely been on the sidelines of the implementation science and scaling literature, rendering itself mute on how it can enhance our understanding of the science of using science. Recently, a series of papers by Al-Ubaydli et al. (2017, 2020a, b) applied the lens of economics to the challenges of scaling evidence-based programs. An economic lens is useful because it pinpoints the underlying incentives inherent in the scientific system that may be leading to voltage drops at scale, and points to areas where improvements can be made.

Consider false positives, a key target of the open science movement, and central to decisions on whether to scale a particular program. The standard discussion around false positives is that the analyst sets the statistical error (alpha) and that determines the false positive rate (usually 5%). Examining false positives from an economic perspective reveals that this nominal rate understates the false positive rate in practice, particularly when the goal is scaling programs. For example, while researchers typically assume the estimation error in their statistical equation is zero on average, economic intuition warns that this might not be true for the specific evidence-based program chosen to scale. To understand the issue, consider the phenomenon of the winner’s curse in auction theory. For concreteness, assume bidders are bidding in a government auction for the rights to drill for oil. Bidders first estimate how much oil is on the plot and how much it will cost to bring the oil to market, and they forecast a market price of oil. Bidders then place their bids, largely based on these estimates. Inevitably, some will guess too low and some too high. Yet, since the highest bidder wins the auction and has to pay their bid, the “winner” is likely to be the bidder who most overestimated the value of drilling on the plot and therefore tends to lose money.

Policymakers choosing which “winning policies” to scale face similar underlying mechanics because researchers who report the largest effect sizes are most likely to be noticed by policymakers. To make matters worse, we are usually comforted when more researchers work on a problem because we believe we are more likely to find out the truth. In this case, however, as the number of scientists studying related interventions increases, the “winning program” (i.e., the program chosen by policymakers) will be overvalued even more because there will be more chances for an extreme draw. That is, the winner’s curse grows with the number of researchers (at least in the short run), leading to an inferential error. Thus, the false positive rate will be larger than we believe when initially setting alpha to 0.05 (see Al-Ubaydli et al., 2020a).
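
To make the winner’s curse concrete, the following minimal Python sketch simulates a policymaker who always picks the study with the largest estimated effect. It is an illustration under stated assumptions, not the model in Al-Ubaydli et al.: every study estimates the same hypothetical true effect (0.10) with independent normal noise (standard deviation 0.10), and the function name and parameter values are invented for this example.

```python
import random
import statistics


def mean_winning_estimate(n_studies, true_effect=0.10, noise_sd=0.10,
                          n_draws=5000, seed=0):
    """Average of the largest estimate among n_studies noisy studies.

    Assumption (illustrative only): all studies measure the same true
    effect, and each estimate is true_effect plus independent normal noise.
    """
    rng = random.Random(seed)
    winners = []
    for _ in range(n_draws):
        estimates = [rng.gauss(true_effect, noise_sd) for _ in range(n_studies)]
        # The "winning program" is the one with the largest estimated effect.
        winners.append(max(estimates))
    return statistics.mean(winners)


# Compare the average winning estimate with the true effect of 0.10
# as the number of competing studies grows.
for n in (1, 5, 20):
    print(n, round(mean_winning_estimate(n), 3))
```

With a single study the winning estimate is unbiased on average. As the number of competing studies grows, the average winning estimate drifts further above the true effect, which is the sense in which more researchers can worsen the overvaluation of the chosen program in this stylized setting.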

A second channel of bias that leads to a higher false positive rate is the choice of sample population. Scientists desire to report both replicable findings and important treatment effects. When they place non-zero weight on each, it is important to recognize that researchers could choose their subject pool with knowledge of the unique attributes of the participants compared to other parties who were not involved in the experiment. For example, they can strategically choose a sample population that yields a large treatment effect. In addition, if participants with the largest expected benefits from the program are more likely to sign up, participate, and comply, a scientist who maximizes their sample size subject to the fixed budget constraint of their grant may be inadvertently maximizing the treatment effect size and subsequently presenting results that may not scale. Both selection effects (one nefarious, the other perhaps not) lead to higher false positive rates (similar insights emerge if one considers the representativeness of the contexts in which programs are tested, such as schools or agencies). While this continues to be a problem in the literature at large, there is a growing movement in the field towards more pragmatic trials and frameworks for transparently reporting the representativeness of study populations that may begin to address this problem (Curran et al., 2012; Loudon et al., 2015).
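
The self-selection mechanism can be sketched with a small simulation. The Python example below is a hypothetical illustration: individual benefits are drawn from a normal distribution with mean 0.10, willingness to enroll is assumed to equal the benefit plus unrelated noise, and the study enrolls the most willing people until its budget (here, 500 slots) is filled. None of these numbers come from the home visiting literature.

```python
import random
import statistics


def simulate_enrollment(pop_size=20000, sample_size=500, seed=1):
    """Compare the average benefit in the population, in a random sample,
    and among self-selected volunteers (all values are hypothetical)."""
    rng = random.Random(seed)
    # Assumed individual treatment benefits: mean 0.10, sd 0.20.
    benefits = [rng.gauss(0.10, 0.20) for _ in range(pop_size)]
    # Assumed willingness to enroll: benefit plus unrelated noise.
    willingness = [b + rng.gauss(0.0, 0.20) for b in benefits]
    # The most willing people sign up first until the budget is exhausted.
    ranked = sorted(range(pop_size), key=lambda i: willingness[i], reverse=True)
    volunteers = [benefits[i] for i in ranked[:sample_size]]
    # A representative sample of the same size, for comparison.
    random_sample = rng.sample(benefits, sample_size)
    return {
        "population": statistics.mean(benefits),
        "random_sample": statistics.mean(random_sample),
        "volunteers": statistics.mean(volunteers),
    }


for group, effect in simulate_enrollment().items():
    print(group, round(effect, 3))
```

In this sketch the random sample tracks the population average, while the volunteer sample shows a much larger average benefit even though no one manipulated the analysis. A program scaled to the full population would deliver the population average, not the volunteer average.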

Finally, because of publication bias (the practice in which journals overwhelmingly publish studies that have large, surprising results with low p-values) and the preference of funders and government officials for interventions with significant treatment effects, there is an incentive not to apply all appropriate data analysis techniques. Practices such as failing to correct for multiple testing (List et al., 2019) and re-analyzing data in multiple ways to generate a desired result (also known as p-hacking) all lead to a higher false positive rate than the commonly advertised 5% rate.
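
The effect of uncorrected multiple testing can be shown with simple arithmetic. The sketch below assumes independent tests in which every null hypothesis is true, which is a simplification: outcomes in a real trial are usually correlated. It uses the Bonferroni correction as one familiar adjustment, not necessarily the procedure recommended by List et al. (2019). Function names are illustrative.

```python
def familywise_false_positive_rate(alpha, n_tests):
    """Chance of at least one false positive across n_tests independent
    tests when every null hypothesis is true and each test uses alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(alpha, n_tests):
    """Per-test threshold that keeps the familywise rate at or below alpha."""
    return alpha / n_tests


for n_tests in (1, 5, 10, 20):
    uncorrected = familywise_false_positive_rate(0.05, n_tests)
    corrected = familywise_false_positive_rate(
        bonferroni_threshold(0.05, n_tests), n_tests
    )
    print(n_tests, round(uncorrected, 3), round(corrected, 3))
```

Under these assumptions, a study that tests ten outcomes at the 5% level has roughly a 40% chance of reporting at least one spurious "significant" effect, while the corrected threshold keeps that chance at or below 5%.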

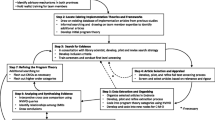

Economic Model on Scaling

Scaling up promising programs into effective policies is a complex, dynamic process that begins with the market for scientific knowledge (Al-Ubaydli et al., 2020b). The knowledge market has three major players: policymakers, researchers, and community members. Policymakers fund the initial research and implement policies to provide the greatest benefit to the population within time, money, and resource constraints. Researchers conduct experiments to evaluate programs and publish the findings in academic journals. Community members receive rewards for participating in research studies and benefit from the programs the government implements. The needs of all three stakeholders (researchers, community members, and policymakers) shape the economic model on scaling. This article will focus most closely on researchers and policymakers.

Looking at challenges related to scaling from an economic perspective, there are five aspects of an evidence-based program that policymakers want to understand before scaling it: (1) when does evidence become actionable (appropriate statistical inference), (2) properties of the population (how representative are the participants in the research of the ultimate beneficiaries), (3) properties of the situation (how representative are the research situations of the ultimate implementation contexts), (4) spillover (how much do effects of the program spill over into non-participating populations or contexts), and (5) marginal cost considerations (how much do costs change as we scale a program). Al-Ubaydli et al. (2020a) argue that until these five areas and their underpinnings are fully understood and recognized by researchers and policymakers, efforts to achieve population-level effects through scaling evidence-based programs will remain particularly vulnerable to threats to scalability (List, 2022, denotes these as the “Five Vital Signs”).

Studies generated through the research enterprise contribute to the five elements described above. Understanding the sources of these elements and the incentives and disincentives in the system related to these elements is necessary to identify potential solutions to attenuating voltage effects. None of these elements are new on their own, yet the field rarely discusses their underpinnings, how the research process itself incentivizes threats to scalability, and how open science practices can support addressing the threats to scalability.

Open Science Solutions to the Issues in the Economic Model on Scaling

Like the economic perspective on scaling, open science acknowledges the potential for bias in research and aims to incentivize high-quality science through environmental mechanisms (Wagenmakers et al., 2012). Funding and policy are necessary conditions for scaling evidence-based programs (Fagan et al., 2019); thus, policymakers must have trust in science. Open science supports decision-makers in assessing the credibility and rigor of the work (Standen, 2019). At the same time, the way studies are designed can offer opportunities to overcome threats to scaling. Open science acknowledges that study quality and reporting are within researchers’ control, but the results obtained are not (Frankenhuis & Nettle, 2018). While open science practices are well aligned with all five threats within the economic model on scaling, our discussion will focus on two open science practices, replication and pre-registration, and how they can reshape incentives and disincentives related to the first three threats in the economic model on scaling: actionable evidence, properties of the population, and properties of the situation.

Replication

One way to increase confidence in the findings is replication of research. Open science values not only the replication itself but the conditions that support quality replication (e.g. transparency in methods through preregistration or open methods). In line with open science, the economic model on scaling calls for replication of findings to ensure the programs chosen to scale maintain their desired impacts. That is, the model highlights the power of coordinated replications to enhance knowledge creation and production of scalable insights, which is distinct from many researchers working on a problem without such coordination. Another open science strategy that addresses threats to scalability is the use of open data and code to encourage external researchers to replicate the findings. Open data and code shine a light on problems with analysis and reporting of findings, including genuine mistakes (Wicherts et al., 2011). Unfortunately, the current knowledge creation marketplace does not incentivize replication.

First, changes are needed within the knowledge creation market itself. For example, to shift the incentives in the system to producing more replicable work, we must recognize that the incentives within the knowledge creation system are designed such that once a study has been published, the original investigators and others have little incentive to replicate the findings. The reason is that the returns from replicating published work are generally low. This is problematic, as new and surprising findings may be false positives simply due to the mechanics of statistical inference outlined above (Dreber et al., 2015; Maniadis et al., 2017).

Currently, the research system discourages replications (Al-Ubaydli et al., 2020b). Koole and Lakens (2012) note that the incentive structure in psychology rewards individual scientists for building their own body of research more than it rewards the field as a whole for building and replicating knowledge. A recommendation in open science for changing the incentives towards replication is co-citing (when two documents cite each other) or co-publishing (sharing publications) to increase the stature of the findings and shift the norm from individual to group responsibility (Butera et al., 2020; Koole & Lakens, 2012). Another approach is to design simple replication mechanisms that generate mutually beneficial gains from trade between the authors of a novel study and other researchers. Butera et al. (2020) propose one such approach, whereby upon completing an initial study, the original researchers write a working paper version of their research. While they can share their working paper online, they commit to never submitting the work to a journal for publication. They instead invite other researchers to coauthor and publish a second, yet-to-be-written paper, provided those researchers are willing to independently replicate the experimental protocol. Once the team is established, but before the replications begin, the replication protocol is preregistered and referenced back in the first working paper. This guarantees that all replications, whether successful or unsuccessful, are properly recognized. The team of researchers then writes the second paper, which includes all replications, and submits it to an academic journal.

Another strategy is to leverage multiple trials to learn about the variation of program impacts across both populational and situational dimensions. In other words, before recommending scaling a particular program, researchers should understand the program effects across subsets of the population and characteristics of the situation to understand who should receive the program and where/how it should be implemented (Orr et al., 2019; Stuart et al., 2015). An example of this strategy is the USA’s National Institutes of Health Early Intervention to Promote Cardiovascular Health of Mothers and Children (ENRICH) initiative, which includes low- and high-resourced, geographically diverse clinical or community sites within multi-center or cluster randomized trials of cardiovascular health interventions (RFA-HL-22-007: Early Intervention to Promote Cardiovascular Health of Mothers and Children (ENRICH) Multisite Clinical Centers (Collaborative UG3/UH3 Clinical Trial Required); https://grants.nih.gov/grants/guide/rfa-files/RFA-HL-22-007.html).

Finally, a way to revise the incentive structure in the knowledge creation system is through increasing the value of replications for tenure and promotion (Al-Ubaydli et al., 2020b). While the open science practice of replication is laudable, it needs to be accompanied by changes to the other incentives and disincentives in the scientific system. This means that we need to establish adequate rewards to scholars for designing research that can be independently replicated, tying tenure decisions, research funding, and the like to such research (i.e., increasing the demand for replicable work). Likewise, to increase the supply of replications, we should reward scholars’ replications in tenure and promotion decisions and provide research funding specifically for replication work.

Pre-registration

Pre-registration is another way to influence the knowledge production system. Pre-registration is the practice of publicly stating the research questions and study design, and sometimes analysis plans, prior to conducting the study. Pre-registration aims to increase credibility of the research and analysis overall through transparency (Yamada, 2018).

Pre-registration is thought to reduce publication bias toward significant findings, a bias that makes studies with null effects more difficult to identify and that is central to the first threat to scaling, inference (Nosek & Lindsay, 2018). Pre-registration compels scientists to document confirmatory and exploratory findings (Wagenmakers et al., 2012); through this, research consumers can see clearly which tests were planned and which were exploratory. Al-Ubaydli et al. (2020b) assert that in the economic model on scaling the publication of all findings, including null findings, is critical to inform policy decisions.

The knowledge creation system would need to change the incentive structure to support this goal. One strategy is registered reports, in which researchers submit the study design for peer review prior to study initiation (Chambers, 2019), making it possible to ascertain whether study execution deviated from the study plan in ways that might meaningfully affect results. Publication of study protocols provides incentives for researchers by increasing their number of publications and by increasing the likelihood that results will be published, regardless of the nature of those results. Because studies are accepted before results are available, study protocols address the “file drawer” problem (not all results are published) and reduce the incentive for p-hacking: researchers do not need to worry about being unable to publish if the trial reveals null findings.

Study protocols can also support the goals of the economic model on scaling by improving the representativeness of the populations and situations included in trials, drawing on factors highlighted in the Pragmatic-Explanatory Continuum Indicator Summary tool (PRECIS-2; https://www.precis-2.org/) and on the increasing use of hybrid effectiveness trials (Curran et al., 2012). If more researchers incorporate scientific practices to promote scaling, including taking into account the properties of the populations and situations (two threats to scaling), the peer review process for study protocols can push scientists to design more representative trials. Having pre-registration information allows other researchers to understand how representative (or not) the populations and situations are in the executed study. For example, following List (2020), in terms of sample selection, the author should report clearly how selection of subjects occurred in two stages. First, provide details on the representativeness of the studied group compared to the target population. Second, provide details of whether the study group is representative of the target population in terms of relevant observables that might impact preferences, beliefs, or individual constraints and how that might impact generalizability. Alongside that information, the researcher would report in their final analysis attrition and participant and implementer compliance rates. This includes documenting reasons for attrition and non-compliance such as motivational or incentive differences between groups. This will provide a sense of whether the subject pool is representative and whether the program will successfully scale.
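
One simple way to report representativeness on an observable is a standardized difference between the study sample and the target population. The Python sketch below is a hypothetical illustration rather than a procedure prescribed by List (2020): the function name is invented, the ages are made-up values, and scaling by the target population’s standard deviation is one convention among several.

```python
import statistics


def standardized_difference(sample_values, target_values):
    """Difference in means between the study sample and the target
    population, expressed in target-population standard deviations."""
    gap = statistics.mean(sample_values) - statistics.mean(target_values)
    return gap / statistics.stdev(target_values)


# Made-up maternal ages, for illustration only.
sample_ages = [18, 19, 20, 21, 22, 23, 24]
target_ages = [19, 22, 24, 27, 30, 33, 36]

print(round(standardized_difference(sample_ages, target_ages), 2))
```

A value near zero suggests the sample resembles the target population on that observable. A large positive or negative value flags a dimension on which results may not generalize and that readers should weigh when judging scalability.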

In sum, the economic model on scaling provides insights on knowledge generation and use, including pinpointing the major threats to scaling. In doing so, the incentives and disincentives embedded in the current research system that promote and discourage creation of scalable insights are revealed. This highlights the richness of the results delivered from the economic model on scaling in that entire research agendas are rooted within each of the five vital signs the model delivers.

Home Visiting as a Case Study of How Open Science Can Strengthen Scaling Evidence

We turn now to the relevance of the threats to the economic model on scaling and open science in developing and scaling evidence-based home visiting. This section describes how the traditional approach for testing interventions has influenced the evolution of home visiting to date, then considers how the economic model on scaling and open science can accelerate the refinement and scaling of home visiting interventions going forward.

How the Traditional Research Approach Has Influenced Home Visiting’s Evolution to Date

Early childhood home visiting is a preventive intervention for expectant families and families with children birth to 5 years. It aims to achieve equity in health and socio-economic outcomes through education and family support during visits and by linking families with needed community resources. Investment in the USA in scaling evidence-based home visiting began in earnest in 2010 through the Maternal, Infant and Early Childhood Home Visiting Program (MIECHV) (Federal Register, 2010; Bipartisan Budget Act of 2018). MIECHV legislation calls for the majority of funding to scale up evidence-based home visiting models. MIECHV and other funding streams now support thousands of local home visiting programs across the country.

The first three decades of home visiting impact research followed a traditional course. Experts in parenting, health, early childhood development, and child welfare began to develop home visiting models in the 1970s (Weiss, 1993) when the benefits of open science practices and the threats to scaling in the economic model of scaling were neither delineated nor appreciated. Often, developers relied on non-experimental program evaluation designs. Study results were often unpublished or presented outside of standard peer-reviewed venues. Findings were mixed and sometimes contradictory, generalizability was limited, identification of common intervention elements in different models was given a low priority, mechanisms of change went largely unexamined, and replication and reproducibility were severely constrained (Sama-Miller et al., 2018). Still, early evaluation results appealed to policymakers eager for programs to promote child health and prevent child maltreatment. This demand created a strong and growing incentive to scale home visiting models early on (US Advisory Board on Child Abuse and Neglect, 1991; US General Accounting Office, 1990).

In response, several models launched dissemination arms in the 1990s. In some cases, dissemination began after experimental impact studies by the model developer. In other cases, dissemination began while the evidence from experimental studies was still being developed. In 2010, the USA’s Administration for Children and Families launched the Home Visiting Evidence of Effectiveness review (HomVEE), a systematic review of rigorous peer-reviewed and grey literature on home visiting models (Sama-Miller et al., 2018). Its purpose is to identify models with sufficient evidence of impact to be designated as “evidence-based.”

While HomVEE valued scientific rigor, it was constrained by limited information on interventions and study methods in the literature. These limitations arose because journal requirements were less stringent than those recommended by open science. Practices like pre-registration were either not available or rarely used, so key information such as the identification of confirmatory outcomes was unavailable. Thus, important aspects of program design, program implementation, and study methods were not reported, which constrained the inferences that could be drawn from results. Still, HomVEE had to use the available research to identify evidence-based models. In 2010, it designated seven models as evidence-based. By 2018, it had identified 28,927 studies, including 363 randomized trials of 46 home visiting models (Sama-Miller et al., 2018). Today, 19 models are designated as evidence-based, have dissemination arms, and are eligible for MIECHV Program funding.

Early impact research tested average effects of full models across highly diverse families in varied communities (Sama-Miller et al., 2018). Reports tended to focus on outcomes measured after the defined duration of program enrollment. Thus, research provided little insight on the population and situational aspects of the economic model on scaling. This constrained what could be inferred from results and the implications for scaling. Most early research failed to specify core components (the specific interventions comprising models), the underlying theories of behavior change, and mechanisms of action whereby intervention modifies behavior and, through this, achieves intended outcomes (Supplee & Duggan, 2019). A priori hypotheses were not often specified, and failure to control for multiple comparisons increased risk of false positives. There was usually little or no information to judge whether null results might be attributed to faulty implementation rather than shortcomings of the model itself (Paulsell et al., 2014). It was assumed, but not tested, that intensive, long-term intervention was critical. Studies that did report “dosage” found that many families disenrolled far sooner than intended by model developers. There was no research to identify which interventions within a model were essential nor whether what was essential varied by population or situational context.

How did states decide which models to invest in and how did HomVEE’s results inform their decisions? The usefulness of HomVEE’s results was constrained by limitations of the body of published research on which it relied. The body of research showed few positive impacts, a plethora of null results, a smattering of adverse effects, and highly mixed results across multiple studies of the same model. Not all models were independently tested. The preponderance of null or negative results was more pronounced for studies conducted by independent investigators. There was some replication of studies, but results were often divergent and hard to interpret due to different measurement, populations, and contexts. Furthermore, the studies provided evidence only for full models, not for the interventions that comprised them. These limitations made it impossible for decision makers to draw conclusions about which model worked best, for which families, in which context, why, and how.

Replication is a core tenet of open science and the economic model on scaling. With three or more randomized trials for some models, home visiting might seem to have more replication than other fields. However, only nine evidence-based models have favorable effects in two or more studies; thus, most models meet the criteria for being evidence-based on the basis of only one sample (Mathematica Policy Research, 2019). Furthermore, results for models with replication studies are often inconsistent. It is hard to interpret such results since replication studies often used different methods, and even the models themselves or their implementation might differ across studies in ways that are not reported (Michalopoulos et al., 2013). As in the original studies, analytic strategies of replications often elevated the risk of false positive results.

How Open Science Principles Could Inform Home Visiting Evolution Going Forward

Traditional home visiting research often fails to define, measure, and test mechanisms of change. As a result, the building of knowledge is slowed, opportunities for shared learning are missed, and the field struggles to understand what truly drives impacts within and across evidence-based models. The field needs a clear statement of theory-driven hypotheses to promote specification of mechanisms of change, which will directly support the scaling of stronger programs (Duggan, 2021a; Supplee & Duggan, 2019). If the knowledge creation system for home visiting research incentivized pre-registration, study protocols, and open data, we believe the field could begin to address challenges in scaling home visiting programs.

First, the field should prioritize specifying the interventions within models (often referred to as core components) before the start of the study because alterations in them during the study affect statistical inference. This could be done through pre-registration or the publication of study protocols. Home visiting models vary greatly; many are comprehensive, aiming to improve multiple outcomes through multiple interventions implemented over long periods of time. As a result, it is hard to determine how specific home visiting interventions influence outcomes, and hard to achieve replication. Some home visiting interventions are loosely structured, with limited specificity and explicit direction. This, too, is important to document, as it has implications for replication and subsequent scale and spread.

Intervention descriptions have rarely been shared prior to formal research and testing. While premature release of untested interventions may lead to unwarranted uptake and use, sharing such information would encourage scientific and practice community input, leading to more effective approaches. In home visiting, proprietary ownership of manuals and materials complicates publication of intervention descriptions and discourages sharing and collective involvement in refinement and improvement. The field needs more replication of findings within and across home visiting models to strengthen impacts at scale. To support replications, the field needs to share full descriptions of interventions, as specified in reporting guidelines such as TIDieR (Hoffmann et al., 2014) and RoHVER (Till et al., 2015).

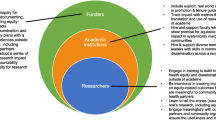

The Home Visiting Applied Research Collaborative (HARC) is addressing this barrier with core support from the MIECHV Program. HARC is a research and development platform in the USA that focuses on interventions within home visiting and uses innovative methods to learn what works best, for whom, in what contexts, why, and how (hvresearch.org). Its Precision Paradigm, a framework incorporating interventions, mediators, and moderators, is the touchstone for this work. HARC brings home visiting stakeholders together to define framework components using a common language, building on the ontology work of the Human Behaviour-Change Project (Michie et al., 2020). The Paradigm is a work in progress, with draft taxonomies constructed from the behavior change intervention literature and refined by applying consensus building techniques to the expert opinion of varied home visiting stakeholder groups. A recent study demonstrated the utility of this approach for defining five evidence-based models’ behavioral pathways and behavior change techniques to promote good birth outcomes (Duggan et al., 2021b). Current work builds on this initial study by assessing agreement between models and local programs on behavioral pathways and techniques, enrolled families’ views on specific behavior change techniques, and the adequacy of the home visiting literature in defining behavioral pathways and behavior change techniques in relation to reporting guidelines. The Paradigm’s shift from full models to interventions and its common framework, terminology, and definitions promote the field’s capacity for replication. In this way, the Precision Paradigm builds home visiting stakeholders’ capacity to embrace open science and the economic model on scaling.

Sharing of both study and routine program operations data is another beneficial feature of open science. It promotes accountability by providing a way to compare actual with planned implementation and creates opportunities for others to replicate and expand on original analyses. Barriers to data sharing within home visiting include a lack of resources to prepare datasets and codebooks and investigator concerns that data might be analyzed incorrectly, an issue that is likely to be greater for proprietary interventions. Such barriers thwart the independent reproducibility of findings. As HARC shifts the focus from full home visiting models to generically defined interventions within them, data sharing barriers should diminish. In a current example, ten home visiting models in a Community of Practice are building their capacity for collaborative research using their existing management information system data (Sturmfels et al., 2021).

Open science practices such as preregistration, published study protocols, and open data would dramatically improve the science available to inform the scaling of home visiting. One example of the use of open science practices in home visiting is the Mother and Infant Home Visiting Program Evaluation (MIHOPE) Study, a large-scale, federally mandated multi-site clinical trial of four home visiting models implemented at scale (Michalopoulos et al., 2013). The study is particularly high-profile and politically sensitive, which made the use of open science practices even more valuable for building transparency and trust in the findings. First, before any recruitment or data collection began, the study posted a design report online for public comment and subsequently posted a revised study protocol online (Michalopoulos et al., 2013). The final study design was pre-registered at clinicaltrials.gov (Mother and Infant Home Visiting Program Evaluation, NCT02069782, https://clinicaltrials.gov/ct2/show/NCT02069782). Second, all of the proposed measures and procedures were posted online to receive public comment (2013-00592.pdf (https://www.govinfo.gov/content/pkg/FR-2012-03-23/pdf/2012-6977.pdf)). Third, all the reports posted online include pre-specified confirmatory and exploratory outcomes and the results of all analyses, irrespective of whether they were null, negative, or positive (Michalopoulos et al., 2019). Finally, the study has made the data available for analysis (Warren, 2021). Together, these practices strengthened transparency and trust in the findings and provided critical information on the true effects of programs at scale. The field still has substantial progress to make, and open science can help.

Discussion

The economic model on scaling provides the field an important framework to consider the breadth of science necessary to move the prevention field forward. More research is needed on all four parts of the economic model of scaling to ensure the promise of scaling evidence-based programs across prevention science can be delivered to communities. Within three of the five threats of the model, open science practices are central to ensuring the research generated has the best chance of maintaining trust in science as well as producing higher quality research.

Home visiting, as an example of a prevention program that has been scaled, is well-poised to take advantage of the open science movement. It is a widely disseminated preventive service strategy with committed stakeholders in the practice, research, and policy fields. There is a strong desire to improve home visiting impacts. Embracing open science, however, will require new ways of conducting research and engagement with the larger science and practice communities. The MIHOPE evaluation and work underway within HARC provide examples for the field to advance open science principles. Transparency, accountability, and sharing of protocols and data will all need to become standard practices if we are to accelerate the pace of knowledge accrual and leverage the collective expertise of our colleagues.

We believe it is imperative to move away from almost exclusive research on stand-alone, multicomponent models and instead focus on (1) identifying specific intervention elements and mechanisms of change and (2) determining “what works best for whom in what contexts” through examination of intervention moderators (i.e., properties of the population and situation). Precision home visiting research encapsulates this approach and provides a roadmap for future research (Supplee & Duggan, 2019). With its emphasis on shared intervention design and testing, precision home visiting research is highly compatible with the goals of open science. Home visiting is fortunate that investment in the USA has made possible not only the expansion of evidence-based home visiting but also the building of critical research infrastructure, such as HARC, to advance the field. Transformative advances in home visiting are possible with research on commonly defined cross-model intervention elements and tailored approaches in a way that engages the field in all levels of the process. Open science can strengthen the rigor and utility of such research and advance effective scaling in the process. Early childhood home visiting provides a unique case study to better understand how open science could advance scaling programs, although lessons from the application of the economic model to this approach have broad applications to prevention science as a whole.

Change history

23 May 2022

A Correction to this paper has been published: https://doi.org/10.1007/s11121-022-01377-1

Notes

One notable exception is the special symposium published in the Journal of Economic Perspectives, which included papers from both the development community (Banerjee et al., 2017; Muralidharan & Niehaus, 2017) and general lessons gleaned from the medication adherence literature for scaling (Al-Ubaydli et al., 2017).

Following this line of work (see, e.g., Al-Ubaydli et al. (2020b)), List and Suskind view three areas of import in the science creation market: (i) funding basic research (see List, 2011); (ii) providing the knowledge creation market with the optimal incentives for researchers to design, implement, and report scientific results; and (iii) developing a system whereby policymakers have the appropriate incentives to adopt effective policies, and once adopted develop strategies to implement those policies with rigorous evaluation methods to ensure continual improvement (see, e.g., Komro et al., 2016; Chambers et al., 2013). We focus on the second area in this paper but the other two are equally influential.

References

Al-Ubaydli, O., List, J. A., & Suskind, D. L. (2017). What can we learn from experiments? Understanding the threats to the scalability of experimental results. American Economic Review, 107(5), 282–286.

Al-Ubaydli, O., List, J. A., & Suskind, D. (2020a). 2017 Klein lecture: The science of using science: Towards an understanding of the threats to scaled experiments. International Economic Review, 61, 1387–1409.

Al-Ubaydli, O., Lee, M., List, J., Mackevicius, C., & Suskind, D. (2020b). How can experiments play a greater role in public policy? Twelve proposals from an economic model of scaling. Behavioural Public Policy, 1–48. https://doi.org/10.1017/bpp.2020.17

Baker, E. (2010). Taking programs to scale. Journal of Public Health Management and Practice, 16, 264–269. https://doi.org/10.1097/PHH.0b013e3181e03160

Banerjee, A., Banerji, R., Berry, J., Duflo, E., Kannan, H., Mukerji, S., Shotland, M., & Walton, M. (2017). From proof of concept to scalable policies: Challenges and solutions, with an application. The Journal of Economic Perspectives, 31, 73–102.

Bangser, M. (2014). A Funder's Guide to Using Evidence of Program Effectiveness in Scale-Up Decisions. MDRC and the Social Impact Exchange. Downloaded from: https://ssrn.com/abstract=2477982

Bauer, M. S., Damschroder, L., Hagedorn, H., Smith, J., & Kilbourne, A. M. (2015). An introduction to implementation science for the non-specialist. BMC Psychology, 3, 32. https://doi.org/10.1186/s40359-015-0089-9

Bipartisan Budget Act of 2018. (2018). 1892 USC § 50601–50607.

Bold, T., Kimenyi, M., Mwabu, G., Ng’ang’a, A., & Sandefur, J. (2018). Experimental evidence on scaling up education reforms in Kenya. Journal of Public Economics, 168, 1–20. https://doi.org/10.1016/j.jpubeco.2018.08.007

Braithwaite, J., Churruca, K., Long, J. C., Ellis, L. A., & Herkes, J. (2018). When complexity science meets implementation science: A theoretical and empirical analysis of systems change. BMC Medicine, 16, 63. https://doi.org/10.1186/s12916-018-1057-z. PMID: 29706132; PMCID: PMC5925847.

Butera, L., Grossman, P., Houser, D., List, J., & Villeval, M. (2020). A new mechanism to alleviate the crises of confidence in science — With an application to the public goods game. National Bureau of Economic Research Working Paper 26801. https://www.nber.org/papers/w26801

Chambers, C. (2019). What’s next for registered reports? Reviewing and accepting study plans before results are known can counter perverse incentives. Nature, 573, 187–189. https://doi.org/10.1038/d41586-019-02674-6

Chambers, D. A., Glasgow, R. E., & Stange, K. C. (2013). The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science, 8, 117. https://doi.org/10.1186/1748-5908-8-117

Curran, G. M., Bauer, M., Mittman, B., Pyne, J. M., & Stetler, C. (2012). Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care, 50, 217–226. https://doi.org/10.1097/MLR.0b013e3182408812

Dearing, J. W., & Cox, J. G. (2018). Diffusion of innovations theory, principles, and practice. Health Affairs, 37, 183–190. https://doi.org/10.1377/hlthaff.2017.1104 PMID: 29401011.

Dreber, A., Pfeiffer, T., Almenberg, J., Isaksson, S., Wilson, B., Chen, Y., Nosek, B. A., & Johannesson, M. (2015). Using prediction markets to estimate the reproducibility of scientific research. Proceedings of the National Academy of Sciences, 112(50), 15343–15347.

Duggan, A. (2021a). How HARC members are building the new home visiting research paradigm [conference session]. HARC Annual Collaborative Science of Home Visiting Meeting, Baltimore, MD. 15–17. https://www.hvresearch.org/2021-collaborative-science-of-home-visiting-meeting/

Duggan, A. K., Bower, K. M., Zagaja, C., O'Neill, K., Daro, D., Harding, K., & Thorland, W. (2021b). Changing the Home Visiting Research Paradigm: Models’ Perspectives on Behavioral Pathways and Intervention Techniques to Promote Good Birth Outcomes. (Preprint available at: https://www.researchsquare.com/article/rs-154026/v1)

Durlak, J. A. (2015). Studying program implementation is not easy but it is essential. Prevention Science, 16, 1123–1127. https://doi.org/10.1007/s11121-015-0606-3

Fagan, A. A., Bumbarger, B. K., Barth, R. P., Bradshaw, C. P., Rhoades Cooper, B., Supplee, L. H., & Walker, D. K. (2019). Scaling up evidence-based interventions in US public systems to prevent behavioral health problems: Challenges and opportunities. Prevention Science, 20, 1147–1168. https://doi.org/10.1007/s11121-019-01048-8

Federal Register. (2010). https://www.federalregister.gov/documents/2010/07/23/2010-18013/maternal-infant-and-early-childhood-home-visiting-program

Frankenhuis, W. E., & Nettle, D. (2018). Open Science Is Liberating and Can Foster Creativity. Perspectives on Psychological Science, 13(4), 439–447. https://doi.org/10.1177/1745691618767878.

Greenhalgh, T., & Papoutsi, C. (2019). Spreading and scaling up innovation and improvement. British Medical Journal, 365, 1–8. https://doi.org/10.1136/bmj.l2068

Gottfredson, D. C., Cook, T. D., Gardner, F. E., Gorman-Smith, D., Howe, G. W., Sandler, I. N., & Zafft, K. M. (2015). Standards of evidence for efficacy, effectiveness, and scale-up research in prevention science: Next generation. Prevention Science, 16, 893–926.

Hoffmann, T. C., Glasziou, P. P., Boutron, I., Milne, R., Perera, R., Moher, D., et al. (2014). Better reporting of interventions: Template for intervention description and replication (TIDieR) checklist and guide. British Medical Journal, 348, g1687. https://doi.org/10.1136/bmj.g1687

Kilbourne, A. M., Neumann, M. S., Pincus, H. A., Bauer, M. S., & Stall, R. (2007). Implementing evidence-based interventions in health care: Application of the replicating effective programs framework. Implementation Science, 2, 42. https://doi.org/10.1186/1748-5908-2-42

Koole, S. L., & Lakens, D. (2012). Rewarding replications: A sure and simple way to improve psychological science. Perspectives on Psychological Science, 7, 608–614. https://doi.org/10.1177/1745691612462586

Komro, K. A., Livingston, M. D., Markowitz, S., & Wagenaar, A. C. (2016). The effect of an increased minimum wage on infant mortality and birth weight. American Journal of Public Health, 106, 1514–1516. https://doi.org/10.2105/AJPH.2016.303268

List, J. A. (2011). Why Economists Should Conduct Field Experiments and 14 Tips for Pulling One Off. Journal of Economic Perspectives, 25(3), 3–16. https://doi.org/10.1257/jep.25.3.3.

List, J. A. (2020). Non est disputandum de generalizability? A glimpse into the external validity trial. NBER Working Paper No 27535. https://doi.org/10.3386/w27535

List, J. A. (2022). The voltage effect: How to make good ideas great and great ideas scale. Penguin Random House.

List, J. A., Momeni, F., & Zenou, Y. (2019). Are measures of early education programs too pessimistic? Evidence from a large-scale field experiment. NBER Working Paper.

Loudon, K., Treweek, S., Sullivan, F., Donnan, P., Thorpe, K. E., Zwarenstein, M., et al. (2015). The PRECIS-2 tool: Designing trials that are fit for purpose. British Medical Journal, 350, h2147. https://doi.org/10.1136/bmj.h2147

Maniadis, Z., Tufano, F., & List, J. A. (2017). To Replicate or Not to Replicate? Exploring Reproducibility in Economics through the Lens of a Model and a Pilot Study. The Economic Journal, 127(605), F209–F235. https://doi.org/10.1111/ecoj.12527.

Mathematica Policy Research. (2019). Home visiting evidence of effectiveness review: Executive summary. OPRE Report 2019–93. https://homvee.acf.hhs.gov/sites/default/files/2020-02/homevee_effectiveness_executive_summary_dec_2019.pdf

Michalopoulos, C., Duggan, A., Knox, V., Filene, J. H., Lee, H., Snell, E. K., Crowne, S., Lundquist, E., Corso, P. S., & Ingels, J. B. (2013). Revised design for the mother and infant home visiting program evaluation. OPRE Report 2013–18. Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

Michalopoulos, C., Faucetta, K., Hill, C. J., Portilla, X. A., Burrell, L., Lee, H., Duggan, A., & Knox, V. (2019). Impacts on family outcomes of evidence-based early childhood home visiting: Results from the mother and infant home visiting program evaluation. OPRE Report 2019–07. Washington, DC: Office of Planning, Research, and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services.

Michie, S., West, R., Finnerty, A. N., Norris, E., Wright, A. J., Marques, M. M., Johnston, M., Kelly, M. P., Thomas, J., & Hastings, J. (2020). Representation of behaviour change interventions and their evaluation: Development of the upper level of the behaviour change intervention ontology [version1; peer review: Awaiting peer review]. Wellcome Open Research, 5, 123.

Milat, A. J., King, L., Bauman, A. E., & Redman, S. (2013). The concept of scalability: Increasing the scale and potential adoption of health promotion interventions into policy and practice. Health Promotion International, 28, 285–298. https://doi.org/10.1093/heapro/dar097

Milat, A. J., Newson, R., King, L., Rissel, C., Wolfenden, L., & Bauman, A. (2016). A guide to scaling up population health interventions. Public Health Research and Practice, 26(1). https://doi.org/10.17061/phrp2611604

Muralidharan, K., & Niehaus, P. (2017). Experimentation at scale. The Journal of Economic Perspectives, 31, 103–124.

Nosek, B. A., & Lindsay, D. S. (2018). Preregistration becoming the norm in psychological science. Association for Psychological Science. https://www.psychologicalscience.org/observer/preregistration-becoming-the-norm-in-psychological-science/comment-page-1

Orr, L. L., Olsen, R. B., Bell, S. H., Schmid, I., Shivji, A., & Stuart, E. A. (2019). Using the results from rigorous multisite evaluations to inform local policy decisions. Journal of Policy Analysis and Management, 38, 978–1003. https://doi.org/10.1002/pam.22154

Paulsell, D., Del Grosso, P., & Supplee, L. (2014). Supporting replication and scale-up of evidence-based home visiting programs: Assessing the implementation knowledge base. American Journal of Public Health, 104, 1624–1632. https://doi.org/10.2105/AJPH.2014.301962

Sama-Miller, E., Akers, L., Mraz-Esposito, A., Avellar, S., Paulsell, D., & Del Grosso, P. (2018). Home visiting evidence of effectiveness review: Executive summary. OPRE/ACF, US DHHS.

Standen, E. (2019). Open science, pre-registration and striving for better research practices. Psychological Sciences Agenda. https://www.apa.org/science/about/psa/2019/11/better-research-practices

Stuart, E. A., Bradshaw, C. P., & Leaf, P. J. (2015). Assessing the generalizability of randomized trial results to target populations. Prevention Science, 16, 475–485. https://doi.org/10.1007/s11121-014-0513-z. PMID: 25307417; PMCID: PMC4359056.

Sturmfels, N., Taylor, R. M., & Fauth, R. (2021). Mapping the paradigm to models' existing MIS data and high priority research questions [conference session]. 2021 HARC Annual Collaborative Science of Home Visiting Meeting, Baltimore, MD. https://www.hvresearch.org/2021-collaborative-science-of-home-visiting-meeting/

Supplee, L. H., & Duggan, A. (2019). Innovative research methods to advance precision in home visiting for more efficient and effective programs. Child Development Perspectives, 13, 173–179.

Till, L., Filene, J., & Joraanstad, A. (2015). Reporting of home visiting effectiveness/efficacy research (RoHVER) guidelines. Heising-Simons Foundation.

United States Advisory Board on Child Abuse and Neglect. (1991). Creating caring communities: Blueprint for an effective federal policy on child abuse and neglect. U.S. Government Printing Office.

United States General Accounting Office. (1990). Home visiting: A promising early intervention strategy for at-risk families. Report to the Chairman, Subcommittee on Labor, Health, and Human Services, Education, and Related Agencies Committee on Appropriations, U.S. Senate.

Wagenmakers, E.-J., Wetzels, R., Borsboom, D., van der Maas, H. L. J., & Kievit, R. A. (2012). An Agenda for Purely Confirmatory Research. Perspectives on Psychological Science, 7, 632–638. https://doi.org/10.1177/1745691612463078

Warren, A. (2021). Accessing data from MIHOPE and MIHOPE-strong start. Start Early National Home Visiting Summit.

Weiss, H. (1993). Home visits: Necessary but not sufficient. Future of Children, 3, 113–128.

Weiss, M. J., Bloom, H. S., & Brock, T. (2014). A conceptual framework for studying sources of variation in program effects. Journal of Policy Analysis and Management, 33, 778–808.

Wicherts, J. M., Bakker, M., & Molenaar, D. (2011). Willingness to share research data is related to the strength of the evidence and the quality of reporting of statistical results. PLoS One, 6, e26828. https://doi.org/10.1371/journal.pone.0026828

Yamada, Y. (2018). How to Crack Pre-registration: Toward Transparent and Open Science. Frontiers in Psychology, 9, 1831. https://doi.org/10.3389/fpsyg.2018.01831.

Funding

Dr. Duggan’s contribution to this paper is supported by the Health Resources and Services Administration (HRSA) of the US Department of Health and Human Services (HHS) under cooperative agreement UD5MC30792, Maternal, Infant and Early Childhood Home Visiting Research and Development Platform.

Ethics declarations

Disclaimer

This information or content and conclusions are those of the author and should not be construed as the official position or policy of, nor should any endorsements be inferred by HRSA, HHS, or the US Government.

Ethics Approval

This study did not involve data collection so no human subjects review was necessary.

Consent to Participate

None.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised due to a retrospective Open Access order.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Supplee, L.H., Ammerman, R.T., Duggan, A.K. et al. The Role of Open Science Practices in Scaling Evidence-Based Prevention Programs. Prev Sci 23, 799–808 (2022). https://doi.org/10.1007/s11121-021-01322-8

DOI: https://doi.org/10.1007/s11121-021-01322-8