Abstract

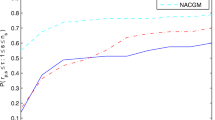

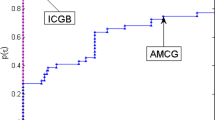

In this work, we present a new hybrid conjugate gradient method based on the approach of the convex hybridization of the conjugate gradient update parameters of DY and HS+, adapting a quasi-Newton philosophy. The computation of the hybrization parameter is obtained by minimizing the distance between the hybrid conjugate gradient direction and the self-scaling memoryless BFGS direction. Furthermore, a significant property of our proposed method is that it ensures sufficient descent independent of the accuracy of the line search. The global convergence of the proposed method is established provided that the line search satisfies the Wolfe conditions. Our numerical experiments on a set of unconstrained optimization test problems from the CUTEr collection indicate that our proposed method is preferable and in general superior to classic conjugate gradient methods in terms of efficiency and robustness.

Similar content being viewed by others

References

Al-Baali, M.: Descent property and global convergence of the Fletcher-Reeves method with inexact line search. IMA J. Numer. Anal. 5, 121–124 (1985)

Al-Baali, M.: Analysis of a family self-scaling quasi-Newton methods. Comput. Optim. Appl. 9, 191–203 (1998)

Al-Baali, M.: Numerical experience with a class of self-scaling quasi-Newton algorithms. J. Optim. Theory 96, 533–553 (1998)

Al-Baali, M.: Extra updates for the BFGS method. Optim. Method Softw. 13, 159–179 (2000)

Al-Baali, M., Spedicato, E., Maggioni, F.: Broyden’s quasi-Newton methods for a nonlinear system of equations and unconstrained optimization: a review and open problems. Optim. Method Softw. 29(5), 937–954 (2014)

Alekseev, A.K., Navon, I.M., Steward, J.L.: Comparison of advanced large-scale minimization algorithms for the solution of inverse ill-posed problems. Optim. Method Softw. 24(1), 63–87 (2009)

Andrei, N.: Scaled memoryless BFGS preconditioned conjugate gradient algorithm for unconstrained optimization. Optim. Method Softw. 22, 561–571 (2007)

Andrei, N.: Another hybrid conjugate gradient algorithm for unconstrained optimization. Numer. Algo. 47, 143–156 (2008)

Andrei, N.: Hybrid conjugate gradient algorithm for unconstrained optimization. J. Optim. Theory Appl. 141, 249–264 (2009)

Andrei, N.: Accelerated hybrid conjugate gradient algorithm with modified secant condition for unconstrained optimization. Numer. Algo. 54, 23–46 (2010)

Apostolopoulou, M.S., Sotiropoulos, D.G., Livieris, I.E., Pintelas, P.: A Memoryless BFGS neural network training algorithm. In: 7Th IEEE International Conference on Industrial Informatics (INDIN’09), pp 216–221 (2009)

Babaie-Kafaki, S., Fatemi, M., Mahdavi-Amiri, N.: Two effective hybrid conjugate gradient algorithms based on modified BFGS updates. Numer. Algo. 58, 315–331 (2011)

Babaie-Kafaki, S., Ghanbari, R.: Two hybrid nonlinear conjugate gradient methods based on a modified secant equation. Optimization: A journal of mathematical programming and operations research, 1–16 (2012)

Babaie-Kafaki, S., Ghanbari, R.: The Dai-Liao nonlinear conjugate gradient method with optimal parameter choices. Eur. J. Oper. Res. 234(3), 625–630 (2014)

Babaie-Kafaki, S., Ghanbari, R.: A hybridization of the Hestenes–Stiefel and Dai–Yuan conjugate gradient methods based on a least-squares approach. Optim. Method Softw. 30(4), 673–681 (2015)

Babaie-Kafaki, S., Ghanbari, R.: A hybridization of the Polak-Ribière-Polyak and Fletcher-Reeves conjugate gradient methods. Numer. Algo. 68(3), 481–495 (2015)

Babaie-Kafaki, S., Ghanbari, R.: Two optimal Dai-Laio conjugate gradient methods. Optimization 64, 2277–2287 (2015)

Babaie-Kafaki, S., Ghanbari, R.: A class of adaptive Dai-Laio conjugate gradient methods based on scaled memoryless BFGS update 4OR, 1–8 (2016)

Babaie-Kafaki, S., Mahdavi-Amiri, N.: Two modified hybrid conjugate gradient methods based on a hybrid secant equation. Math. Model. Anal. 18(1), 32–52 (2013)

Bongartz, I., Conn, A., Gould, N., Toint, P.: CUTE: Constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21(1), 123–160 (1995)

Burstedde, C., Kunoth, A.: The conjugate gradient method for linear ill-posed problems with operator perturbations. Numer. Algo. 48(1), 161–188 (2008)

Dai, Y.H., Kou, C.X.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23, 296–320 (2013)

Dai, Y.H., Yuan, Y.X.: A nonlinear conjugate gradient with a strong global convergence properties. SIAM J. Optim. 10, 177–182 (1999)

Dai, Y.H., Yuan, Y.X.: Nonlinear Conjugate Gradient Methods. Shanghai Scientific and Technical Publishers, Shanghai (2000)

Dai, Y.H., Yuan, Y.X.: An efficient hybrid conjugate gradient method for unconstrained optimization. Ann. Oper. Res. 103, 33–47 (2001)

Dolan, E., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Fletcher, R.: Practical Methods of Optimization, Volume 1: Unconstrained Optimization , 1st edition. Wiley, New York (1987)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J 7, 149–154 (1964)

Gilbert, J.C., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J Optim. 2(1), 21–42 (1992)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J Optim. 16, 170–192 (2005)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pacific J Optim. 2, 35–58 (2006)

Hestenes, M.R., Stiefel, E.: Methods for conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49, 409–436 (1952)

Hu, Y.F., Storey, C.: Global convergence result for conjugate gradient methods. J. Optim. Theory Appl. 71, 399–405 (1991)

Kou, C.X., Dai, Y.H.: A modified self-scaling memoryless Broyden–Fletcher–Goldfarb–Shanno method for unconstrained optimization. Journal of Optimization Theory and Applications (2014)

Liu, D.C., Nocedal, J.: On the limited memory BFGS method for large scale optimization methods. Math. Program. 45, 503–528 (1989)

Liu, Q.: Two minimal positive bases based direct search conjugate gradient methods for computationally expensive functions. Numer. Algo. 58(4), 461–474 (2011)

Liu, Y., Storey, C.: Efficient generalized conjugate gradient algorithms, part 1: theory. J. Optim. Theory Appl. 69, 129–137 (1991)

Livieris, I.E., Pintelas, P.: A new conjugate gradient algorithm for training neural networks based on a modified secant equation. Appl. Math. Comput. 221, 491–502 (2013)

Livieris, I.E., Pintelas, P.: A limited memory descent Perry conjugate gradient method. Optim. Lett. 10, 17–25 (2016)

Morales, J.L., Nocedal, J.: Enriched methods for large-scale unconstrained optimization. Comput. Optim. Appl. 21, 143–154 (2002)

Nash, S.G.: Newton-type minimization via the Lanczos method. SIAM J Numer. Anal. 21, 770–788 (1984)

Nash, S.G.: Preconditioning of truncated Newton methods. SIAM J Sci. Stat. Comput. 6, 599–616 (1985)

Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35(151), 773–782 (1980)

Nocedal, J.: Theory of algorithms for unconstrained optimization. Acta Numerica 1, 199–242 (1992)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

Nocedal, J., Yuan, Y.: Analysis of a self-scaling quasi-Newton method. Math. Program. 61, 19–37 (1993)

Oren, S.S.: Self-Scaling Variable Metric Algorithms for Unconstrained Minimization. PhD Thesis, Stanford University, California (1972)

Oren, S.S., Luenberger, D.G.: Self-scaling variable metric (SSVM) algorithms, Part I: criteria and sufficient conditions for scaling a class of algorithms. Manag. Sci. 20, 845–862 (1974)

Oren, S.S., Spedicato, E.: Optimal conditioning of self-scaling variable metric algorithms. Math. Program. 10, 70–90 (1976)

Perry, J.M.: A Class of Conjugate Gradient Algorithms with a Two-Step Variable-Metric Memory. Center for Mathematical Studies in Economies and Management Science. Northwestern University Press, Evanston Illiois (1977)

Plato, R.: The conjugate gradient method for linear ill-posed problems with operator perturbations. Numer. Algo. 20(1), 1–22 (1999)

Polak, E., Ribière, G.: Note sur la convergence de methods de directions conjuguees. Revue Francais d’Informatique et de Recherche Operationnelle 16, 35–43 (1969)

Powell, M.J.D.: Some global convergence properties of a variable metric algorithm for minimization without exact line searches. In: Cottle, R.W., Lemke, C.E. (eds.) Nonlinear Programming, SIAM-AMS Proceedings, vol. IX, pp 53–72. SIAM Publications (1976)

Powell, M.J.D.: Restart procedures for the conjugate gradient method. Math. Program. 12, 241–254 (1977)

Powell, M.J.D.: Nonconvex Minimization Calculations and the Conjugate Gradient Method. In: Numerical Analysis, Volume 1066 of Lecture Notes in Mathematics, pp 122–141. Springer, Berlin (1984)

Risler, F., Rey, C.: Iterative accelerating algorithms with Krylov subspaces for the solution to large-scale nonlinear problems. Numerical Algorithms, 23(1) (2000)

Shanno, D.F.: On the convergence of a new conjugate gradient algorithm. SIAM J. Numer. Anal. 15(6), 1247–1257 (1978)

Touati-Ahmed, D., Storey, C.: Efficient hybrid conjugate gradient techniques. J. Optim. Theory Appl. 64, 379–397 (1990)

Wu, X., Silva, B., Yuan, J.: Conjugate gradient method for rank deficient saddle point problems. Numer. Algo. 35(2), 139–154 (2004)

Zhang, L., Zhou, W.: Two descent hybrid conjugate gradient methods for optimization. J. Comput. Appl. Math. 216, 251–164 (2008)

Zhang, L., Zhou, W., Li, D.: Global convergence of a modified Fletcher-Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104, 561–572 (2006)

Zou, X., Navon, I.M., Berger, M., Phua, K.H., Schlick, T., Le Dimet, F.X.: Numerical experience with limited-memory quasi-Newton and truncated Newton methods. SIAM J Optim. 3(3), 582–608 (1993)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Livieris, I.E., Tampakas, V. & Pintelas, P. A descent hybrid conjugate gradient method based on the memoryless BFGS update. Numer Algor 79, 1169–1185 (2018). https://doi.org/10.1007/s11075-018-0479-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-018-0479-1