Abstract

The majority of machine learning algorithms assumes that objects are represented as vectors. But often the objects we want to learn on are more naturally represented by other data structures such as sequences and time series. For these representations many standard learning algorithms are unavailable. We generalize gradient-based learning algorithms to time series under dynamic time warping. To this end, we introduce elastic functions, which extend functions on Euclidean spaces to time series spaces. Necessary conditions are sketched under which generalized gradient learning on time series is consistent. Specifically, four linear classifiers are extended to time series under dynamic time warping and applied to benchmark datasets. Results indicate that generalized gradient learning via elastic functions have the potential to complement the state-of-the-art in pattern recognition on time series.

Similar content being viewed by others

1 Introduction

Statistical pattern recognition on time series finds many applications in diverse domains such as speech recognition, medical signal analysis, and recognition of gestures (Fu 2011; Geurts 2001). A challenge in learning on time series consists in filtering out the effects of shifts and distortions in time. A common and widely applied approach to address invariance of shifts and distortions are elastic transformations such as dynamic time warping (DTW). Following this approach amounts in learning on time series spaces equipped with an elastic proximity measure.

In comparison to Euclidean spaces, mathematical concepts such as the derivative of a function and a well-defined addition under elastic transformations are unknown in time series spaces. Therefore gradient-based algorithms can not be directly applied to time series. The weak mathematical structure of time series spaces bears two consequences: (a) there are only few learning algorithms that directly operate on time series under elastic transformation; and (b) simple methods like the nearest neighbor classifier together with the DTW distance belong to the state-of-the-art and are reported to be exceptionally difficult to beat (Batista et al. 2011; Lines and Bagnall 2014; Xi et al. 2006).

To advance the state-of-the-art in learning on time series, first adaptive methods have been proposed. They mainly devise or apply different measures of central tendency of a set of time series under dynamic time warping (Kruskal and Liberman 1983; Rabiner and Wilpon 1979, 1980; Petitjean et al. 2011). The individual approaches reported in the literature are k-means (Hautamaki et al. 2008; Niennattrakul and Ratanamahatana 2007a, b; Petitjean et al. 2014; Wilpon and Rabiner 1985), self-organizing maps (Somervuo and Kohonen 1999), and learning vector quantization (Somervuo and Kohonen 1999). These methods have been formulated in a problem-solving manner without a unifying theme. Consequently, there is no link to a mathematical theory that allows us to (1) place existing adaptive methods in a proper context, (2) derive adaptive methods on time series other than those based on a concept of mean, and (3) prove convergence of adaptive methods to solutions that satisfy necessary conditions of optimality.

Here we propose generalized gradient methods on time series spaces that combine the advantages of gradient information and elastic transformation such that the above issues (1)–(3) are resolved. The key idea behind this approach is the concept of elastic function. Elastic functions extend functions on Euclidean spaces to time series spaces such that elastic transformations are preserved. Then learning on time series amounts in minimizing piecewise smooth risk functionals using generalized gradient methods proposed by Ermoliev and Norkin (1998), Norkin (1986). Specifically, we investigate elastic versions of logistic regression, (margin) perceptron learning, and linear support vector machine (SVM) for time series under dynamic time warping. We derive update rules and present different convergence results, in particular an elastic version of the perceptron convergence theorem. Though the main treatment focuses on univariate time series under DTW, we also show under which conditions the theory also holds for multivariate time series and sequences with non-numerical elements under arbitrary elastic transformations.

We tested the four elastic linear classifiers to all two-class problems of the UCR time series benchmark dataset (Keogh et al. 2011). The results show that elastic linear classifiers on time series behave similarly to linear classifiers on vectors. Furthermore, our findings indicate that generalized gradient learning on time series spaces have the potential to complement the state-of-the-art in statistical pattern recognition on time series, because the simplest elastic methods are already competitive with the best available methods.

The paper is organized as follows: Sect. 2 introduces background material. Section 3 proposes elastic functions, generalized gradient learning on sequence data, and elastic linear classifiers. In Sect. 4, we relate the proposed approach to previous approaches on averaging a set of time series. Section 5 presents and discusses experiments. Finally, Sect. 6 concludes with a summary of the main results and an outlook for further research.

2 Background

This section introduces basic material. Section 2.1 defines the DTW distance, Sect. 2.2 presents the problem of learning from examples, and Sect. 2.3 introduces piecewise smooth functions.

2.1 Dynamic time warping distance

By [n] we denote the set \(\mathop {\left\{ 1, \ldots , n \right\} }\) for some \(n \in \mathbb {N}\). A time series of length n is an ordered sequence \(\mathbf{x} = (x_1, \ldots , x_n)\) with features \(x_i \in \mathbb {R}\) sampled at discrete points of time \(i \in [n]\).

To define the DTW distance between time series \(\mathbf{x}\) and \(\mathbf{y}\) of length n and m, resp., we construct a grid \({\mathcal {G}} = [n] \times [m]\). A warping path in grid \({\mathcal {G}}\) is a sequence \(\phi = (\mathbf{t}_1, \ldots , \mathbf{t}_p)\) consisting of points \(\mathbf{t}_k = \mathop {\left( i_{k}, j_{k} \right) } \in {\mathcal {G}}\) such that

-

1.

\(\mathbf{t}_1 = (1, 1)\) and \(\mathbf{t}_p = (n, m)\) (boundary conditions)

-

2.

\(\mathbf{t}_{k+1} - \mathbf{t}_k \in \mathop {\left\{ (1,0), (0,1), (1,1) \right\} }\) (warping conditions)

for all \(1 \le k < p\).

A warping path \(\phi \) defines an alignment between sequences \(\mathbf{x}\) and \(\mathbf{y}\) by assigning elements \(x_{i}\) of sequence \(\mathbf{x}\) to elements \(y_{j}\) of sequence \(\mathbf{y}\) for every point \((i, j) \in \phi \). The boundary condition enforces that the first and last element of both time series are assigned to one another accordingly. The warping condition summarizes what is known as the monotonicity and continuity condition. The monotonicity condition demands that the points of a warping path are in strict ascending lexicographic order. The continuity condition defines the maximum step size between two successive points in a path.

The cost of aligning \(\mathbf{x} = (x_1, \ldots , x_n)\) and \(\mathbf{y} = (y_1, \ldots , y_m)\) along a warping path \(\phi \) is defined by

where \(c(x_{i}, y_{j})\) is the local transformation cost of aligning features \(x_i\) and \(y_j\). Unless otherwise stated, we assume that the local transformation costs are given by \(c\mathop {\left( x_{i}, y_{j} \right) =} \mathop {\left( x_i - y_j \right) ^2}\). Then the distance function

is the dynamic time warping (DTW) distance between \(\mathbf{x}\) and \(\mathbf{y}\), where the minimum is taken over all warping paths in \({\mathcal {G}}\).

2.2 The problem of learning

We consider learning from examples as the problem of minimizing a risk functional. To present the main ideas, it is sufficient to focus on supervised learning.

Consider an input space \({\mathcal {X}}\) and output space \({\mathcal {Y}}\). The problem of supervised learning is to estimate an unknown function \(f_*:{\mathcal {X}} \rightarrow {\mathcal {Y}}\) on the basis of a training set

where the examples \((x_i, y_i) \in {\mathcal {X}} \times {\mathcal {Y}}\) are drawn independent and identically distributed according to a joint probability distribution P(x, y) on \({\mathcal {X}} \times {\mathcal {Y}}\).

To measure how well a function \(f{:}{\mathcal {X}} \rightarrow {\mathcal {Y}}\) predicts output values y from x, we introduce the risk

where \(\ell :{\mathcal {Y}} \times {\mathcal {Y}} \rightarrow \mathbb {R}_+\) is a loss function that quantifies the cost of predicting f(x) when the true output value is y.

The goal of learning is to find a function \(f:{\mathcal {X}} \rightarrow {\mathcal {Y}}\) that minimizes the risk. The problem is that we can not directly compute the risk of f, because the probability distribution P(x, y) is unknown. But we can use the training examples to estimate the risk of f by the empirical risk

The empirical risk minimization principle suggests to approximate the unknown function \(f_*\) by a function

that minimizes the empirical risk over a fixed hypothesis space \({\mathcal {F}} \subset {\mathcal {Y^X}}\) of functions \(f:{\mathcal {X}} \rightarrow {\mathcal {Y}}\).

Under appropriate conditions on \({\mathcal {X}}\), \({\mathcal {Y}}\), and \({\mathcal {F}}\), the empirical risk minimization principle is justified in the following sense: (1) a minimizer \(f_N\) of the empirical risk exists, though it may not be unique; and (2) the risk \(R[f_N]\) converges in probability to the risk \(R[f_*]\) of the best but unknown function \(f_*\) when the number N of training examples goes to infinity.

2.3 Piecewise smooth functions

A function \(f:{\mathcal {X}} \rightarrow \mathbb {R}\) defined on a Euclidean space \({\mathcal {X}}\) is piecewise smooth, if f is continuous and there is a finite collection of continuously differentiable functions \({\mathcal {R}}(f) = \mathop {\left\{ f_i: {\mathcal {X}} \rightarrow \mathbb {R}{:} i \in {\mathcal {I}} \right\} }\) indexed by the set \({\mathcal {I}}\) such that

for all \(x \in {\mathcal {X}}\). We call the collection \({\mathcal {R}}(f)\) a representation for f. A function \(f_i \in {\mathcal {R}}(f)\) satisfying \(f_i(x) = f(x)\) is an active function of f at x. The set \({\mathcal {A}}(f, x) = \mathop {\left\{ i \in {\mathcal {I}} \,:\, f_i(x) = f(x) \right\} }\) is the active index set of f at x. By

we denote the set of active gradients \(\nabla f_{i}(x)\) of active function \(f_i\) at x. Active gradients are directional derivatives of f. At differentiable points x the set of active gradients is of the form \(\partial f(x) = \mathop {\left\{ \nabla f(x) \right\} }\).

Piecewise smooth functions are closed under composition, scalar multiplication, finite sums, pointwise max- and min-operations. In particular, the max- and min-operations of a finite collection of differentiable functions allow us to construct piecewise smooth functions. Piecewise functions f are non-differentiable on a set of Lebesgue measure zero, that is f is differentiable almost everywhere.

3 Generalized gradient learning on time series spaces

This section generalizes gradient-based learning to time series spaces under elastic transformations. We first present the basic idea of the proposed approach in Sect. 3.1. Then Sect. 3.2 introduces the new concept of elastic functions. Based on this concept, Sect. 3.3 describes supervised generalized gradient learning on time series. As an example, Sect. 3.4 introduces elastic linear classifiers. In Sect. 3.5, we consider unsupervised generalized gradient learning. Section 3.6 sketches consistency results. Finally, Sect. 3.7 generalizes the proposed approach to other elastic proximity functions and arbitrary sequence data.

3.1 The basic idea

This section presents the basic idea of generalized gradient learning on time series. For this we assume that \({\mathcal {F_X}}\) is a hypothesis space consisting of functions \(F:{\mathcal {X}} \rightarrow \mathbb {R}\) defined on some Euclidean space \({\mathcal {X}}\). For example, \({\mathcal {F_X}}\) consists of all linear functions on \({\mathcal {X}}\). First we show how to generalize functions \(F \in {\mathcal {F_X}}\) defined on Euclidean spaces to functions \(f: {\mathcal {T}} \rightarrow \mathbb {R}\) on time series such that elastic transformations are preserved. The resulting functions f are called elastic. Then we turn the focus on learning an unknown elastic function over the new hypothesis space \({\mathcal {F_T}}\) of elastic functions obtained from \({\mathcal {F_X}}\).

We define elastic functions \(f:{\mathcal {T}}\rightarrow \mathbb {R}\) on time series as a pullback of a function \(F\in {\mathcal {F_X}}\) by an embedding \(\mu : {\mathcal {T}} \rightarrow {\mathcal {X}}\), that is \(f(\mathbf{x}) = F(\mu (\mathbf{x}))\) for all time series \(\mathbf{x} \in {\mathcal {T}}\).

In principle any injective map \(\mu \) can be used. Here, we are interested in embeddings that preserve elastic transformations. For this, we select a problem-dependent base time series \(\mathbf{z} \in {\mathcal {T}}\). Then we define an embedding \(\mu _{\mathbf{z}}: {\mathcal {T}} \rightarrow {\mathcal {X}}\) that is isometric with respect to \(\mathbf{z}\), that is

for all \(\mathbf{x} \in {\mathcal {T}}\). It is important to note that an embedding \(\mu _{\mathbf{z}}\) is distance preserving with respect to \(\mathbf{z}\), only. In general, we will have  showing that an embedding \(\mu _{\mathbf{z}}\) will be an expansion of the time series space. This form of a restricted isometry turns out to be sufficient for our purposes. We call the pullback \(f = F\circ \mu \) of F by \(\mu \) elastic, if embedding \(\mu \) preserves elastic distances with respect to some base time series. Figure 1 illustrates the concept of elastic function.

showing that an embedding \(\mu _{\mathbf{z}}\) will be an expansion of the time series space. This form of a restricted isometry turns out to be sufficient for our purposes. We call the pullback \(f = F\circ \mu \) of F by \(\mu \) elastic, if embedding \(\mu \) preserves elastic distances with respect to some base time series. Figure 1 illustrates the concept of elastic function.

Illustration of elastic function \(f:{\mathcal {T}} \rightarrow \mathbb {R}\) of a function \(F:{\mathcal {X}} \rightarrow \mathbb {R}\). The map \(\mu = \mu _{\mathbf{z}}\) embeds time series space \({\mathcal {T}}\) into the Euclidean space \({\mathcal {X}}\). Corresponding solid red lines indicate that distances between respective endpoints are preserved by \(\mu \). Corresponding dashed red lines show that distances between respective endpoints are not preserved. The diagram commutes, that is \(f(\mathbf{x}) = F(\mu (\mathbf{x}))\) is a pullback of F by \(\mu \) (Color figure online)

Next we show how to learn an unknown elastic function by risk minimization over the hypothesis space \({\mathcal {F_T}}\) consisting of pullbacks of functions from \({\mathcal {F_X}}\) by \(\mu \). For this we assume that \(\varTheta _{{\mathcal {T}}}\) is a set of parameters and the hypothesis space \({\mathcal {F_T}}\) consists of functions \(f_{\varvec{\theta }}\) with parameter \(\varvec{\theta } \in \varTheta _{{\mathcal {T}}}\). To convey the basic idea, we consider the simple case that the parameter set is of the form \(\varTheta _{{\mathcal {T}}} = {\mathcal {T}}\). Then the goal is to minimize a risk functional

as a function of \(\varvec{\theta }\in {\mathcal {T}}\). We cast problem (1) to the equivalent problem

Observe that the risk functional of problem (2) is a function of elements \(\mu (\varvec{\theta })\) from the Euclidean space \({\mathcal {X}}\). Since problem (2) is analytically difficult to handle, we consider the relaxed problem

where the minimum is taken over the whole set \({\mathcal {X}}\), whereas problem (2) minimizes over the subset \(\mu ({\mathcal {T}}) \subset {\mathcal {X}}\). The relaxed problem (3) is not only analytically more tractable but also learns a model from a larger hypothesis space and may therefore provide better asymptotical solutions, but may require more training data to reach acceptable test error rates (Vapnik et al. 1994).

3.2 Elastic functions

This section formally introduces the concept of elastic function, which generalize functions on matrix spaces \({\mathcal {X}} = \mathbb {R}^{n \times m}\) to time series spaces. The matrix space \({\mathcal {X}}\) is the Euclidean space of all real (\(n \times m\))-matrices with inner product

for all \(\mathbf{X}, \mathbf{Y} \in {\mathcal {X}}\). The inner product induces the Euclidean norm

also known as the Frobenius norm.Footnote 1 The dimension \(n \times m\) of \({\mathcal {X}}\) has the following meaning: the number n of rows refers to the maximum length of all time series from the training set \({\mathcal {D}}\). The number m of columns is a problem dependent parameter, called elasticity henceforth. A larger number m of columns admits higher elasticity and vice versa.

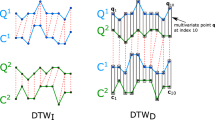

We first define an embedding from time series into the Euclidean space \({\mathcal {X}}\). We embed time series into a matrix from \({\mathcal {X}}\) along a warping path as illustrated in Fig. 2. Suppose that \(\mathbf{x} = (x_1, \ldots , x_k)\) is a time series of length \(k \le n\). By \({\mathcal {P}}(\mathbf{x})\) we denote the set of all warping paths in the grid \({\mathcal {G}} = [k] \times [m]\) defined by the length k of \(\mathbf{x}\) and elasticity m. An elastic embedding of time series \(\mathbf{x}\) into matrix \(\mathbf{Z} = (z_{ij})\) along warping path \(\phi \in {\mathcal {P}}(\mathbf{x})\) is a matrix \(\mathbf{x} \otimes _{\phi } \mathbf{Z} = (x_{ij})\) with elements

Suppose that \(F: {\mathcal {X}} \rightarrow \mathbb {R}\) is a function defined on the Euclidean space \({\mathcal {X}}\). An elastic function of F based on matrix \(\mathbf{Z}\) is a function \(f: {\mathcal {T}} \rightarrow \mathbb {R}\) with the following property: for every time series \(\mathbf{x} \in {\mathcal {T}}\) there is a warping path \(\phi \in {\mathcal {P}}(\mathbf{x})\) such that

The representation set and active set of f at \(\mathbf{x}\) are of the form

The definition of elastic function corresponds to the properties described in Sect. 3.1 and in Fig. 1. To see this, we define an embedding \(\mu _{\mathbf{Z}}: {\mathcal {T}} \rightarrow {\mathcal {X}}\) that first selects for every time series \(\mathbf{x}\) an active warping path \(\phi \in {\mathcal {A}}(f, \mathbf{x})\) and then maps \(\mathbf{x}\) to the matrix \(\mu _{\mathbf{Z}}(\mathbf{x}) = \mathbf{x} \otimes _{\phi } \mathbf{Z}\). Then we have \(F(\mu _{\mathbf{Z}}(\mathbf{x})) = f(\mathbf{x})\) for all \(\mathbf{x} \in {\mathcal {T}}\). Suppose that the rows of matrix \(\mathbf{Z}\) are all equal to \(\mathbf{z}\). Then \(\mu _{\mathbf{z}} = \mu _{\mathbf{Z}}\) is isometric with respect to \(\mathbf{z}\).

Embedding of time series \(\mathbf{x} = (2, 4, 3, 1)\) into matrix \(\mathbf{Z}\) along warping path \(\phi \). From left to right: Time series \(\mathbf{x}\), grid \({\mathcal {G}}\) with highlighted warping path \(\phi \), matrix \(\mathbf{Z}\), and matrix \(\mathbf{x} \otimes _\phi \mathbf{Z}\) obtained after embedding \(\mathbf{x}\) into \(\mathbf{Z}\) along \(\phi \). We assume that the length of the longest time series in the training set is \(n = 7\). Therefore the matrix \(\mathbf{Z}\) has \(n=7\) rows. The number m of columns of \(\mathbf{Z}\) is a problem dependent parameter and set to \(m=5\) in this example. Since time series \(\mathbf{x}\) has length \(k = 4\), the grid \({\mathcal {G}} = [k]\times [m]\) containing all feasible warping paths consists of 4 rows and 5 columns. Grids \({\mathcal {G}}\) vary only in the number k of rows in accordance with the length \(k \le n\) of the time series to be embedded, but always have m columns

Next, we consider examples of elastic functions. The first two examples are fundamental for extending a broad class of gradient-based learning algorithms to time series spaces.

Example 1

(Elastic Euclidean Distance) Let \(\mathbf{Y} \in {\mathcal {X}}\). Consider the function

Then

is an elastic function of \(D_{\mathbf{Y}}\). To see this, observe that from

follows \(\delta _{\mathbf{Y}}(\mathbf{x}) \in {\mathcal {R}}(\delta _{\mathbf{Y}}, \mathbf{x}) = \mathop {\left\{ D_{\mathbf{Y}}(\mathbf{x} \otimes _\phi \mathbf{Y})\,:\, \phi \in {\mathcal {P}}(\mathbf{x}) \right\} }\). See Fig. 3 for an illustration. We call \(\delta _{\mathbf{Y}}\) elastic Euclidean distance with parameter \(\mathbf{Y}\). \(\square \)

Example 2

(Elastic Inner Product) Let \(\mathbf{W} \in {\mathcal {X}}\). Consider the function

Then the function

is an elastic function of \(S_{\mathbf{W}}\), called elastic inner product with parameter \(\mathbf{W}\). \(\square \)

Elastic Euclidean distance \(\delta _{\mathbf{Y}}(\mathbf{x})\). From left to right: time series \(\mathbf{x} = (2, 4, 3, 1)\), matrix \(\mathbf{Y}\), matrix \(\mathbf{x} \otimes _\phi \mathbf{Y}\) obtained by embedding \(\mathbf{x}\) into matrix \(\mathbf{Y}\) along optimal warping path \(\phi \), and distance computation by aggregating the local costs giving \(\delta _{\mathbf{Y}}(\mathbf{x}) = \sqrt{19}\). The optimal path is highlighted in orange in \(\mathbf{Y}\) and in \(\mathbf{x} \otimes _\phi \mathbf{Y}\). Gray shaded areas in both matrices refer to parts that are not used, because the length \(k = 4\) of \(\mathbf{x}\) is less than \(n=7\). Since \(\mathbf{x}\) is embedded into \(\mathbf{Y}\) only elements lying on the path \(\phi \) contribute to the distance. All other local cost between elements of \(\mathbf{Y}\) and \(\mathbf{x} \otimes _\phi \mathbf{Y}\) are zero (Color figure online)

The elastic Euclidean distance and elastic inner product are elastic proximities closely related to the DTW distance, where the elastic Euclidean distance generalizes the DTW distance. The time and space complexity of both elastic proximities are O(n m). If no optimal warping path is required, space complexity can be reduced to \(O(\max (n, m))\). To see this, we refer to Algorithm 1. To obtain an optimal warping path, we can trace-back along the score matrix \(\mathbf{S}\) in the usual way. The procedure in Algorithm 1 applies exactly the same dynamic programming scheme as the one for the standard DTW distance and therefore has the same time and space complexity.

Observe that both elastic proximities embed time series into different matrices. Elastic Euclidean distances embed time series into the parameter matrix and elastic inner products always embed time series into the zero-matrix \(\mathbf{0}\).

Example 3

(Elastic Linear Function) Let \(\varTheta = {\mathcal {X}} \times \mathbb {R}\) be a set of parameters and let \(\varvec{\theta } = (\mathbf{W}, b) \in \varTheta \) be a parameter. Consider the linear function

where \(\mathbf{W}\) is the weight matrix and b is the bias. The function

is an elastic function of \(F_{\varvec{\theta }}\), called elastic linear function. \(\square \)

Example 4

(Single-Layer Neural Network) Let \(\varTheta = {\mathcal {X}}^r \times \mathbb {R}^{2r+1}\) be a set of parameters. Consider the function

where \(\alpha (z)\) is a sigmoid function, \(f_i = f_{\varvec{\theta }_i}\) are elastic linear functions with parameters \(\varvec{\theta }_i = \mathop {\left( \mathbf{W}_{i}, b_i \right) }\), and \(\varvec{\theta } = \mathop {\left( \varvec{\theta }_1, \ldots , \varvec{\theta }_r, w_1, \ldots , w_r, b \right) }\). The function \(f_{\varvec{\theta }}\) implements an elastic neural network for time series with r sigmoid units in the hidden layer and a single linear unit in the output layer. \(\square \)

3.3 Supervised generalized gradient learning

This section introduces a generic scheme of generalized gradient learning for time series under dynamic time warping.

Let \(\varTheta = {\mathcal {X}}^r \times \mathbb {R}^s\) be a set of parameters. Consider a hypothesis space \({\mathcal {F}}\) of functions \(f_{\varvec{\theta }}:{\mathcal {T}} \rightarrow {\mathcal {Y}}\) with parameter \(\varvec{\theta } = \mathop {\left( \mathbf{W}_{1}, \ldots , \mathbf{W}_{r}, \mathbf{b} \right) } \in \varTheta \). Suppose that \({\mathcal {D}} = \mathop {\left\{ \mathop {\left( \mathbf{x}_1, y_1 \right) }, \ldots , \mathop {\left( \mathbf{x}_N \right) }, y_N \right\} } \subseteq {\mathcal {T}} \times {\mathcal {Y}}\) is a training set. According to the empirical risk minimization principle, the goal is to minimize

as a function of \(\varvec{\theta }\). Since \(R_N\) is a function of \(\varvec{\theta }\), we rewrite the loss by interchanging the role of argument \(\mathbf{z} = (\mathbf{x}, y)\) and parameter \(\varvec{\theta }\) such that

We assume that the loss \(\ell _{\mathbf{z}}\) is piecewise smooth with representation set

indexed by r-tuples of warping paths from \({\mathcal {P}}(\mathbf{x})\). The gradient \(\nabla \ell _{\varvec{\Phi }}\) of an active function \(\ell _{\varvec{\Phi }}\) at \(\varvec{\theta }\) is given by

where \(\partial \ell _{\varvec{\Phi }}/\partial \varvec{\theta }_i\) denotes the partial derivative of \(\ell _{\varvec{\Phi }}\) with respect to \(\varvec{\theta }_i\). The incremental update rule of the generalized gradient method is of the form

for all \(i \in [r]\). Section 3.6 discusses consistency of variants of update rule (5) and (6).

3.4 Elastic linear classifiers

Let \({\mathcal {Y}} = \mathop {\left\{ \pm 1 \right\} }\) be the output space consisting of two class labels. An elastic linear classifier is a function of the form

where \(f_{\varvec{\theta }}(\mathbf{x}) = b + \sigma _{\mathbf{W}}(\mathbf{x})\) is an elastic linear function and \(\varvec{\theta } = (\mathbf{W}, b)\) summarizes the parameters. We assign a time series \(\mathbf{x}\) to the positive class if \(f_{\varvec{\theta }}(\mathbf{x}) \ge 0\) and to the negative class otherwise.

Depending on the choice of loss function \(\ell (y, f_{\varvec{\theta }}(\mathbf{x}))\), we obtain different elastic linear classifiers as shown in Table 1. The loss function of elastic logistic regression is differentiable as a function of \(f_{\varvec{\theta }}\) and b, but piecewise smooth as a function of \(\mathbf{W}\). All other loss functions are piecewise smooth as a function of \(f_{\varvec{\theta }}\), b and \(\mathbf{W}\).

From the partial derivatives, we can construct the update rule of the generalized gradient method. For example, the incremental / stochastic update rule of the elastic perceptron is of the form

where \((\mathbf{x}, y)\) is the training example at iteration t, and \(\mathbf{X} = \mathbf{x} \otimes _\phi \mathbf{0}\) with \(\phi \in {\mathcal {A}}(\ell ,\mathbf{x})\). From the factor \(\mathbb {I}_{\mathop {\left\{ \ell > 0 \right\} }}\) shown in Table 1 follows that the update rule given in (8) and (9) is only applied when \(\mathbf{x}\) is misclassified.

We present three convergence results. A proof is given in “Appendix”.

Convergence of the generalized gradient method. The generalized gradient method for minimizing the empirical risk of an elastic linear classifier with convex loss converges to a local minimum under the assumptions of Ermoliev and Norkin (1998, Theorem 4.1).

Convergence of the stochastic generalized gradient method. This method converges to a local minimum of the expected risk of an elastic linear classifier with convex loss under the assumptions of Ermoliev and Norkin (1998, Theorem 5.1).

Elastic margin perceptron convergence theorem. The perceptron convergence theorem states that the perceptron algorithm with constant learning rate finds a separating hyperplane, whenever the training patterns are linearly separable. A similar result holds for the elastic margin perceptron algorithm.

A finite training set \({\mathcal {D}} \subseteq {\mathcal {T}} \times {\mathcal {Y}}\) is elastic-linearly separable, if there are parameters \(\varvec{\theta } = (\mathbf{W}, b)\) such that \(h_{\varvec{\theta }}(\mathbf{x}) = y\) for all examples \((\mathbf{x}, y) \in {\mathcal {D}}\). We say, \({\mathcal {D}}\) is elastic-linearly separable with margin \(\xi > 0\) if

Then the following convergence theorem holds:

Theorem 1

(Elastic Margin Perceptron Convergence Theorem) Suppose that \({\mathcal {D}}\subseteq {\mathcal {T}} \times {\mathcal {Y}}\) is elastic-linearly separable with margin \(\xi > 0\). Then the elastic margin perceptron algorithm with fixed learning rate \(\eta \) and margin-parameter \(\lambda \le \xi \) converges to a solution \((\mathbf{W}, b)\) that correctly classifies the training examples from \({\mathcal {D}}\) after a finite number of update steps, provided the learning rate is chosen sufficiently small.

3.5 Unsupervised generalized gradient learning

Several unsupervised learning algorithms such as, for example, k-means, self-organizing maps, principal component analysis, and mixture of Gaussians are based on the concept of (weighted) mean. Once we know how to average a set of time series, extension of mean-based learning methods to time series follows the same rules as for vectors. Therefore, it is sufficient to focus on the problem of averaging a set of time series.

Suppose that \({\mathcal {D}} = \mathop {\left\{ \mathbf{x}_1, \ldots , \mathbf{x}_N \right\} } \subset {\mathcal {T}}\) is a set of unlabeled time series. Consider the sum of squared distances

A matrix \(\mathbf{Y}_{*}\) that minimizes F is a mean of the set \({\mathcal {D}}\) and the minimum value \(F_* = F(\mathbf{Y}_{*})\) is the variation of \({\mathcal {D}}\). The update rule of the generalized gradient method is of the form

where \(\mathbf{X}_i = \mathbf{x}_i \otimes _{\phi _{i}} \mathbf{Y}^t\) is the matrix obtained by embedding the i-th training example \(\mathbf{x}_i\) into matrix \(\mathbf{Y}^t\) along active warping path \(\phi _i\). Under the conditions of Ermoliev and Norkin (1998, Theorem 4.1), the generalized gradient method for minimizing f using update rule (11) is consistent in the mean and variation.

We consider the special case, when the learning rate is constant and takes the form \(\eta ^t = 1/N\) for all \(t\ge 0\). Then update rule (11) is equivalent to

where \(\mathbf{X}_i\) is as in (11).

3.6 A note on convergence and consistency

Gradient-based methods in statistical pattern recognition typically assume that the functions of the underlying hypothesis space is differentiable. However, many loss functions in machine learning are piecewise smooth, such as, for example, the loss of perceptron learning, k-means, and loss functions using \(\ell _1\)-regularization. This case has been discussed and analyzed by [2].

When learning in elastic spaces, hypothesis spaces consist of piecewise smooth functions, which are pullbacks of smooth functions. Since piecewise smooth functions are closed under composition, the situation is similar as in standard pattern recognition, where hypothesis spaces consist of smooth functions. What has changed is that we will have “more” non-smooth points. Nevertheless, the set of non-smooth points remains negligible in the sense that it forms a set of Lebesgue measure zero.

Piecewise smooth functions are locally Lipschitz and therefore admit a Clarke’s subdifferential Df at each point (Clarke 1975). A Clarke’s subdifferential Df is a set that contains elements, called generalized gradients. At differentiable points, the Clarke subdifferential coincides with the gradient, that is \(Df(x) = \mathop {\left\{ \nabla f(x) \right\} }\). A necessary condition of optimality of f at x is \(0 \in Df(x)\).

Using these and other concepts from non-smooth analysis, we can construct minimization procedures that generalize gradient descent methods. In previous subsections, we presented a slightly simpler variant of the following generalized gradient method: Consider the minimization problem

where f is a piecewise smooth function and \({\mathcal {Z}} \subseteq {\mathcal {X}}\) is a bounded convex constraint set. Let \({\mathcal {Z}}_*\) denote the subset of solutions satisfying the necessary condition of optimality and \(f({\mathcal {Z}}_*) = \mathop {\left\{ f(x) \,:\, x \in {\mathcal {Z}}_* \right\} }\) is the set of solution values. Consider the following iterative method:

where \(g^t \in Df(x^t)\) is a generalized gradient of f at \(x^t\), \(\Pi _{{\mathcal {Z}}}\) is the multi-valued projection onto \({\mathcal {Z}}\) and \(\eta ^t\) is the learning rate satisfying the conditions

The generalized gradient method (14)–(16) minimizes a piecewise smooth function f by selecting a generalized gradient, performing the usual update step, and then projects the updated point to the constraint set \({\mathcal {Z}}\). If f is differentiable at \(x^t\), which is almost always the case, then the update amounts to selecting an active index \(i \in {\mathcal {A}}(f, x)\) of f at the current iterate \(x^t\) and then performing gradient descent along direction \(-\nabla f_i(x^t)\).

Note that the constraint set \({\mathcal {Z}}\) has been ignored in previous subsections. We introduce a sufficiently large constraint set \({\mathcal {Z}}\) to ensure convergence. In a practical setting, we may ignore specifying \({\mathcal {Z}}\) unless the sequence \(\mathop {\left( x^t \right) }\) accidentally goes to infinity.

Under mild additional assumptions, this procedure converges to a solution satisfying the necessary condition of optimality (Ermoliev and Norkin 1998, Theorem 4.1): The sequence \(\mathop {\left( x^t \right) }\) generated by method (14)–(16) converges to the solution of problem (13) in the following sense:

- 1.:

-

the limits points \(\bar{x}\) of \(\mathop {\left( x^t \right) }\) with minimum value \(f(\bar{x})\) are contained in \({\mathcal {Z}}_*\).

- 2.:

-

the limits points \(\bar{f}\) of \(\mathop {\left( f(x^t) \right) }\) are contained in \(f({\mathcal {Z}}_*)\).

Consistency of the stochastic generalized gradient method for minimizing the expected risk functional follows from Ermoliev and Norkin (1998, Theorem 5.1), provided similar assumptions are satisfied.

3.7 Generalizations

This section indicates some generalizations of the concept of elastic functions.

3.7.1 Generalization to other elastic distance functions

Elastic functions as introduced here are based on the DTW distance via embeddings along a set of feasible warping paths with squared differences as local transformation costs. The choice of distance function and local transformation cost is arbitrary. We can equally well define elastic functions based on proximities other than the DTW distance. Results on learning carry over whenever a proximity \(\rho \) on time series satisfies the following sufficient conditions: (1) \(\rho \) minimizes the costs over a set of feasible paths, (2) the cost of a feasible path is a piecewise smooth function as a function of the local transformation costs, and (3) the local transformation costs are piecewise smooth.

With regard to the DTW distance, these generalizations include the Euclidean distance and DTW distances with additional constraints such as the Sakoe-Chiba band (1978). Furthermore, absolute differences as local transformation cost are feasible, because the absolute value function is piecewise smooth.

3.7.2 Generalization to multivariate time series

A multivariate time series is an ordered sequence \(\mathbf{x} = \mathop {\left( \mathbf{x}_1, \ldots , \mathbf{x}_n \right) }\) consisting of feature vectors \(\mathbf{x}_i \in \mathbb {R}^d\). We can define the DTW distance between multivariate time series \(\mathbf{x}\) and \(\mathbf{y}\) as in the univariate case but replace the local transformation cost \(c(x_i, y_j) = (x_i-y_j)^2\) by  .

.

To define elastic functions, we embed multivariate time series into the set \({\mathcal {X}} = (\mathbb {R}^d)^{n \times m}\) of vector-valued matrices \(\mathbf{X} = (\mathbf{x}_{ij})\) with elements \(\mathbf{x}_{ij} \in \mathbb {R}^d\). These adjustment preserve piecewise smoothness, because the Euclidean space \({\mathcal {X}}\) is a direct product of lower-dimensional Euclidean spaces.

3.7.3 Generalization to sequences with symbolic attributes

We consider sequences \(\mathbf{x} = \mathop {\left( x_1, \ldots , x_n \right) }\) with attributes \(x_i\) from some finite set \({\mathcal {A}}\) of d attributes (symbols). Since \({\mathcal {A}}\) is finite, we can represent its attributes \(a \in {\mathcal {A}}\) by d-dimensional binary vectors \(\mathbf{a} \in \mathop {\left\{ 0,1 \right\} }^d\), where all but one element is zero. The unique non-zero element has value one and is related to attribute a. In doing so, we can reduce the case of attributed sequences to the case of multivariate time series.

We can introduce the following local transformation costs

More generally, we can define local transformation costs of the form

where \(k:{\mathcal {A}} \times {\mathcal {A}} \rightarrow \mathbb {R}\) is a positive-definite kernel. Provided that the kernel is an inner product in some finite-dimensional feature space, we can reduce this generalization also to the case of multivariate time series.

4 Relationship to previous approaches

Previous work on adaptive methods either focus on computing or are based on a concept of (weighted) mean of a set of time series. Most of the literature is summarized in Kruskal and Liberman (1983), Petitjean et al. (2011, 2014), Somervuo and Kohonen (1999). To place those approaches into the framework of elastic functions, it is sufficient to consider the problem of computing a mean of a set of time series.

Suppose that \({\mathcal {D}} = \mathop {\left\{ \mathbf{x}_1, \ldots , \mathbf{x}_N \right\} }\) is a set of time series. A mean is any time series \(\mathbf{y}_{*}\) that minimizes the sum of squared DTW distances

Algorithm 2 outlines a unifying minimization procedure of f. The set \({\mathcal {Z}}\) in line 1 of the procedure consists of all matrices with n identical rows, where n is the maximum length of all time series from \({\mathcal {D}}\). Thus, there is a one-to-one correspondence between time series from \({\mathcal {T}}\) and matrices from the subset \({\mathcal {Z}}\). By construction, we have \(f(\mathbf{y}) = F(\mathbf{Y})\), where \(\mathbf{Y} \in {\mathcal {Z}}\) is the matrix with all rows equal to \(\mathbf{y}\) and \(F(\mathbf{Y})\) is as defined in Eq. (10).

In line 2.1, we determine active warping paths of the function \(F(\mathbf{Y})\) that embed \(\mathbf{x}_i\) into matrix \(\mathbf{Y}\). By construction this step is equivalent to computing optimal warping paths for determining the DTW distance between \(\mathbf{x}_i\) and \(\mathbf{y}\). Line 2.2 updates matrix \(\mathbf{Y}\) and line 2.3 projects the updated matrix \(\mathbf{Y}\) to the set \({\mathcal {Z}}\). The last step is equivalent to constructing a time series from a matrix.

Previous approaches differ in the form of update rule \(\upsilon \) in line 2.2 and the projection \(\pi \) in line 2.3. Algorithmically, steps 2.2 and 2.3 usually form a single step in the sense that the composition \(\psi = \pi \circ \upsilon \) can not as clearly decomposed in two separate processing steps as described in Algorithm 2. The choice of \(\upsilon \) and \(\pi \) is critical for convergence analysis. Problems arise when the map \(\upsilon \) does not select a generalized gradient and the projection \(\pi \) does not map a matrix from \({\mathcal {X}}\) to a closest matrix from \({\mathcal {Y}}\). In these cases, it may be unclear how to define necessary conditions of optimality for the function f. As a consequence, even if steps 2.2 and 2.3 minimize f, we do not know whether Algorithm 2 converges to a local minimum of f. The same problems arise when studying the asymptotic properties of the mean as a minimizer of f.

The situation is different for the function F defined in Eq. (10). When minimizing F, the set \({\mathcal {Z}}\) coincides with \({\mathcal {X}}\). Since the function F is piecewise smooth, the map \(\upsilon \) in line 2.2 corresponds to an update step of the generalized gradient method. The projection \(\pi \) in line 2.3 is the identity. Under the conditions of Ermoliev and Norkin (1998), Theorem 4.1 and Theorem 5.1 the procedure described in Algorithm 2 is consistent.

5 Experiments

The goal of this section is to assess the performance and behavior of elastic linear classifiers.We present and discuss results from two experimental studies. The first study explores the effects of the elasticity parameter on the error rate and the second study compares the performance of different elastic linear classifiers. We considered two-class problems of the UCR time series datasets (Keogh et al. 2011). Table 2 summarizes characteristic features of the datasets.

5.1 Exploring the effects of elasticity

The first experimental study explores the effects of elasticity on the error rate by controlling the number of columns of the weight matrix of an elastic perceptron.

5.1.1 Experimental setup

The elastic perceptron algorithm was applied to the Gun_Point, ECG200, and ECGFiveDays dataset using the following setting: The dimension of the matrix space \({\mathcal {X}}\) was set to \(n \times m\), where n is the length of the longest time series in the training set of the respective dataset. Bias and weight matrix were initialized by drawing random numbers from the uniform distribution on the interval \([-0.01, +0.01]\). The elasticity m was controlled via the ratio \(w = m/n\). For every \(w \in \mathop {\left\{ 0, 0.05, 0.1, 0.2, 0.3, 0.4, 0.5, 0.75, 1.0, 2.0, 3.0 \right\} }\) Footnote 2 the learning rate \(\eta \) with the lowest error on the training set was selected from the set \(\eta \in \mathop {\left\{ 1.0, 0.7, 0.3, 0.1, 0.03, 0.01, 0.003, 0.001 \right\} }\). To assess the generalization performance, the learned classifier was applied to the test set. The whole experiment was repeated 30 times for every value w.

5.1.2 Results and discussion

Figure 4 shows the mean error rates of the elastic perceptron as a function of \(w = m/n\). The error rates on the respective training sets were always zero.

Mean error rates of elastic perceptron on Gun_Point, ECG200, and ECGFiveDays. Vertical axes show the mean error rates in % averaged over 30 trials. Horizontal axes show the ratio \(w = m/n\), where m is the elasticity, that is the number of columns of the weight matrix and n is the length of the longest time series of the respective dataset. Ratio \(w = 0\) means \(m = 1\) and corresponds to the standard perceptron algorithm

One characteristic feature of the UCR datasets listed in Table 2 is that the number of training examples is low compared to the dimension of the time series. This explains the low training error rates and the substantially higher test error rates.

The three plots show typical curves also observed when applying the elastic perceptron to the other datasets listed in Table 2. The most important observation to be made is that the parameter w is problem-dependent and need to be selected carefully. If the training set is small and dimensionality is high, a proper choice of w becomes challenging. The second observation is that in some cases, the standard perceptron algorithm (\(w = 0\)) may perform best as in ECGFiveDays. Increasing w results in a classifier with larger flexibiltiy. Intuitively this means that an elastic perceptron can implement more decision boundaries the larger w is. If w becomes too large, the classifier becomes more prone to overfitting as indicated by the results on ECG200 and ECGFiveDays. We hypothesize that elasticity controls the capacity of an elastic linear classifier.

5.2 Comparative study

This comparative study assesses the performance of elastic linear classifiers.

5.2.1 Experimental setup

In this study, we used all datasets listed in Table 2. The four elastic linear classifiers of Sect. 3.4 were compared against different variants of the nearest neighbor (NN) classifier with DTW distance. The variants of the NN classifiers differ in the choice of prototypes. The first variant uses all training examples as prototypes (NN+ALL). The second and third variant learned one prototype per class from the training set using k-means (NN+KME) as second variant and agglomerative hierarchical clustering (NN+AHC) as third variant (Petitjean et al. 2014).

The settings of the elastic linear classifiers were as follows: The dimension of the matrix space \({\mathcal {X}}\) was set to \(n \times m\), where n is the length of the longest time series in the training set and \(m = \lceil n/10 \rceil \) is the elasticity. The elasticity m was set to 10 % of the length n for the following reasons: First, m should be small to avoid overfitting due to high dimensionality of the data and small size of the training set. Second, m should be larger than one, because otherwise an elastic linear classifier reduces to a standard linear classifier.

Bias and weight matrix were initialized by drawing random numbers from the uniform distribution on the interval \([-0.01, +0.01]\). Parameters were selected by k-fold cross validation on the training set of size N. We set \(k = 10\) if \(N > 30\) and \(k = N\) otherwise. The following parameters were selected: learning rate \(\eta \) for all elastic linear classifiers, margin \(\xi \) for elastic margin perceptron, and regularization parameter \(\lambda \) for elastic linear SVM. The parameters were selected from the following values

The final model was obtained by training the elastic linear classifiers on the whole training set using the optimal parameter(s). We assessed the generalization performance by applying the learned model to the test data. Since the performance of elastic linear classifiers depends on the random initialization of the bias and weight matrix, we repeated the last two steps 100 times, using the same selected parameters in each trial.

5.2.2 Results and discussion

Table 3 summarizes the error rates of all elastic linear (EL) classifiers and nearest neighbor (NN) classifiers.

Comparison of EL classifiers and NN methods is motivated by the following reasons: First, NN classifiers belong to the state-of-the-art and are considered to be exceptionally difficult to beat (Batista et al. 2011; Lines and Bagnall 2014; Xi et al. 2006). Second, in Euclidean spaces linear classifiers and nearest neighbors are two simple but complementary approaches. Linear classifiers are computationally efficient, make strong assumptions about the data and therefore may yield stable but possibly inaccurate predictions. In contrast, nearest neighbor methods make very mild assumption about the data and therefore often yield accurate but possibly unstable predictions (Hastie et al. 2001).

The first key observation suggests that overall generalization performance of EL classifiers is comparable to the state-of-the-art NN classifier. This observation is supported by the same same number of green shaded rows (EL is better) and red shaded rows (NN is better) in Table 3. As reported by Lines and Bagnall (2014), ensemble classifiers of different elastic distance measures are assumed to be first approach that significantly outperformed the NN+ALL classifier on the UCR time series dataset. This result is not surprising, because in machine learning it is well known for a long time that ensemble classifiers often perform better than their base classifiers for reasons explained in Dietterich (2000). Since any base classifier can contribute to an ensemble classifier, it is feasible to restrict comparison to base classifiers such as the state-of-the-art NN+ALL classifier.

The second key observation indicates that EL classifiers are clearly superior to NN classifiers with one prototype per class, denoted by NN\(_1\) henceforth. Evidence for this finding is provided by two results: first, AHC and KME performed best among several prototype selection methods for NN classification (Petitjean et al. 2014); and second, error rates of EL classifiers are significantly better than those of NN+AHC and NN+KME for eight, comparable for two, and significantly worse for two datasets.

The third key observation is that EL classifiers clearly better compromise between solution quality and computation time than NN classifiers. Findings reported by Wang et al. (2013) indicate that more prototypes may improve generalization performance of NN classifiers. At the same time, more prototypes increase computation time, though the differences will decrease for larger number of prototypes by applying certain acceleration techniques. At the extreme ends of the scale, we have NN+ALL and NN\(_1\) classifiers. With respect to solution quality, the first key observation states that EL classifiers are comparable to the slowest NN classifiers using the whole training set as prototypes and clearly superior to the fastest NN classifiers using one prototype per class. To compare computational efficiency, we first consider the case without applying any acceleration techniques. We measure computational efficiency by the number of proximity calculations required to classify a single time series. This comparison is justified, because the complexity of computing a DTW distance and an elastic inner product are identical. Then EL classifiers are p-times faster than NN classifiers, where p is the number of prototypes. Thus the fastest NN classifiers effectively have the same computational effort as EL classifiers for arbitrary multi-class problems, but they are not competitive to EL classifiers according to the second key observation. Next, we discuss computational efficiency of both types of classifiers, when one applies acceleration techniques. For NN classifiers, two common techniques to decrease computation time are global constraints such as the Sakoe-Chiba band (1978) and diminishing the number of DTW distance calculations by applying lower bounding technique (Ratanamahatana and Keogh 2004, 2005). Both techniques can equally well be applied to EL classifiers, where lower-bounding techniques need to be converted to upper-bounding techniques. Furthermore, EL classifiers can additionally control the computational effort by the number m of columns of the matrix space. Here m was set to 10 % of the length n of the shortest time series of the training set. The better performance of EL classifiers in comparison to NN\(_1\) classifiers is notable, because the decision boundaries that can be implemented by their counterparts in the Euclidean space are both the set of all hyperplanes. We assume that EL classifiers outperform NN\(_1\) classifiers, because learning prototypes by clustering minimizes a cluster criterion unrelated to the risk functional of a classification problem. Therefore the resulting prototypes may fail to discriminate the data for some problems.

The fourth key observation is that the strong assumption of elastic-linearly separable problems is appropriate for some problems in the time series classification. Error rates of elastic linear classifiers for Coffee, ItalyPowerDemand, and Wafer are below 5 %. For these problems, the strong assumption made by EL classifiers is appropriate. For all other datasets, the high error rates of EL classifiers could be caused by two factors: first, the assumption that the data is elastic-linearly separable is inappropriate; and second, the number of training examples given the length of the time series is too low for learning (see ratio \(\rho \) in Table 2). Here further experiments are required.

The fifth observation is that the different EL classifiers perform comparable with advantages for eLOGR and eLSVM. These findings correspond to similar findings for logistic regression and linear SVM in vector spaces.

To complete the comparison, we contrast the time complexities of all classifiers required for learning. NN+ALL requires no time for learning. The NN+AHC classifier learns a protoype for each class using agglomerative hierarchical clustering. Determining pairwise DTW distances is of complexity \(O(n^2 N(N-1)/2)\), where n is the length of the time series and N is the number of training examples. Given a pairwise distance matrix, the complexity of agglomerative clustering is \(O(N^3)\) in the general case. Efficient variants of special agglomerative methods have a complexity of \(O(N^2)\). Thus, the complexity of NN+AHC is \(O(n^2N^2)\) in the best and \(O(n^2N^2 + N^3)\) in the general case. The NN+KME learns a protoype for each class using k-means under elastic transformations. Its time complexity is \(O(2n^2 N t)\), where t is the number of iterations required until termination. The time complexity for learning an EL classifier is O(nmNt), where m is the number of columns of the weight matrix. This shows that the time complexity for learning an EL classifier is the same as learning two prototypes by KME. However, in this setting, learning an EL classifier is about factor 20 faster than KME, under the assumption that the number of iterations t is the same for both methods. If the number N of training examples is large, NN+AHC becomes prohibitively slow. In contrast, the learning procedures of NN+KME and EL classifiers can be terminated after some pre-specified maximum number of iterations. In doing so, we trade solution quality against feasible computation time.

To summarize, the results show that elastic linear classifiers are simple and efficient methods. They rely on the strong assumption that an elastic-linear decision boundary is appropriate. Therefore, elastic linear classifiers may yield inaccurate predictions when the assumptions are biased towards oversimplification and/or when the number of training examples is too low compared to the length of the time series. These findings are in line with those of linear classifiers in Euclidean space.

6 Conclusion

This paper introduces generalized gradient methods for learning on time series under elastic transformations. This approach combines (a) the novel concept of elastic functions that links elastic proximities on time series to piecewise smooth functions with (b) generalized gradient methods for non-smooth optimization. Using the proposed scheme, we (1) showed how a broad class of gradient-based learning can be applied to time series under elastic transformations, (2) derived general convergence statements that justify the generalizations, and (3) placed existing adaptive methods into proper context. Exemplarily, elastic logistic regression, elastic (margin) perceptron learning, and elastic linear SVM have been tested on two-class problems and compared to nearest neighbor classifiers using the DTW distance. Despite the simplicity in terms of the decision boundary and the computational efficiency, elastic linear classifiers perform convincing. There is still room for improvement by controlling elasticity and by applying different forms of regularization. The results indicate that adaptive methods based on elastic functions may complement the state-of-the-art in statistical pattern recognition on time series, in particular when powerful non-linear gradient-based methods such as deep learning are extended to time series under elastic transformations.

Notes

We call

Euclidean norm to emphasize that we regard \({\mathcal {X}}\) as a Euclidean space.

Euclidean norm to emphasize that we regard \({\mathcal {X}}\) as a Euclidean space.The value \(w = 0\) refers to \(m = 1\). Thus the weight matrix collapses to a column vector and the elastic perceptron becomes the standard perceptron.

References

Batista, G. E., Wang, X., & Keogh, E. J. (2011). A complexity-invariant distance measure for time series. SIAM International Conference on Data Mining, 11, 699–710.

Clarke, F. H. (1975). Generalized gradients and applications. Transactions of the American Mathematical Society, 205, 247–262.

Dietterich, T. G. (2000). Ensemble methods in machine learning. In Proceedings of the first international workshop on multiple classifier systems.

Ermoliev, Y., & Norkin, V. (1998). Stochastic generalized gradient method for nonconvex nonsmooth stochastic optimization. Cybernetics and Systems Analysis, 34(2), 196–215.

Fu, T. (2011). A review on time series data mining. Engineering Applications of Artificial Intelligence, 24(1), 164–181.

Geurts, P. (2001). Pattern extraction for time series classification. In Principles of data mining and knowledge discovery, pp. 115–127.

Hastie, T., Tibshirani, R., & Friedman, J. (2001). The elements of statistical learning. New York: Springer.

Hautamaki, V., Nykanen, P., & Franti, P. (2008). Time-series clustering by approximate prototypes. In International conference on pattern recognition.

Keogh, E., Zhu, Q., Hu, B., Hao, Y., Xi, X., Wei, L., et al. (2011). The UCR time series classification/clustering homepage. www.cs.ucr.edu/~eamonn/time_series_data/.

Kruskal, J. B., & Liberman, M. (1983). The symmetric time-warping problem: From continuous to discrete. In Time warps, string edits and macromolecules: The theory and practice of sequence comparison, pp. 125–161.

Lines, J., & Bagnall, A. (2014). Time series classification with ensembles of elastic distance measures. Data Mining and Knowledge Discovery, 29(3), 565–592.

Nedic, A., & Bertsekas, D. P. (2001). Incremental subgradient methods for nondifferentiable optimization. SIAM Journal on Optimization, 12(1), 109–138.

Niennattrakul, V., & Ratanamahatana, C. A. (2007). Inaccuracies of shape averaging method using dynamic time warping for time series data. In International conference on computational science, pp. 513–520.

Niennattrakul, V., & Ratanamahatana, C. A. (2007). On clustering multimedia time series data using k-means and dynamic time warping. In International conference on multimedia and ubiquitous engineering, pp. 733–738.

Norkin, V. (1986). Stochastic generalized-differentiable functions in the problem of nonconvex nonsmooth stochastic optimization. Cybernetics and Systems Analysis, 22(6), 804–809.

Petitjean, F., Ketterlin, A., & Gancarski, P. (2011). A global averaging method for dynamic time warping, with applications to clustering. Pattern Recognition, 44(3), 678–693.

Petitjean, F., Forestier, G., Webb, G. I., Nicholson, A. E., Chen, Y., & Keogh, E. (2014). Dynamic time warping averaging of time series allows faster and more accurate classification. In International conference on data mining.

Rabiner, L. R., & Wilpon, J. G. (1979). Considerations in applying clustering techniques to speaker-independent word recognition. The Journal of the Acoustical Society of America, 66(3), 663–673.

Rabiner, L. R., & Wilpon, J. G. (1980). A simplified, robust training procedure for speaker trained, isolated word recognition systems. The Journal of the Acoustical Society of America, 68(5), 1271–1276.

Ratanamahatana, C. A., & Keogh, E. J. (2004). Making time- series classification more accurate using learned constraints. In SIAM international conference on data mining, pp. 11–22.

Ratanamahatana, C. A., & Keogh, E. J. (2005). Three myths about dynamic time warping data mining. In SIAM international conference on data mining, pp. 506–510.

Sakoe, H., & Chiba, S. (1978). Dynamic programming algorithm optimization for spoken word recognition. IEEE Transactions on Acoustics, Speech, and Signal Processing, 26(1), 43–49.

Shor, N. Z. (1985). Minimization methods for nondifferentiable functions. Berlin, Heidelberg: Springer.

Somervuo, P., & Kohonen, T. (1999). Self-organizing maps and learning vector quantization for feature sequences. Neural Processing Letters, 10(2), 151–159.

Vapnik, V., Levin, E., & Le Cun, Y. (1994). Measuring the VC-dimension of a learning machine. Neural Computation, 6(5), 851–876.

Wang, X., Mueen, A., Ding, H., Trajcevski, G., Scheuermann, P., & Keogh, E. (2013). Experimental comparison of representation methods and distance measures for time series data. Data Mining and Knowledge Discovery, 26(2), 275–309.

Wilpon, J. P., & Rabiner, L. R. (1985). A modified K-means clustering algorithm for use in isolated work recognition. IEEE IEEE Transactions on Acoustics, Speech and Signal Processing, 33(3), 587–594.

Xi, X., Keogh, E., Shelton, C., Wei, L., & Ratanamahatana, C. A. (2006). Fast time series classification using numerosity reduction. In International conference on machine learning, pp. 1033–1040.

Author information

Authors and Affiliations

Corresponding author

Additional information

Editors: João Gama, Indrė Žliobaitė, Alípio M. Jorge, and Concha Bielza.

Appendix: Proof of convergence results for elastic linear classifiers

Appendix: Proof of convergence results for elastic linear classifiers

Since affine functions are convex and the maximum of convex functions is also convex, the elastic inner product is convex. In addition, the composition of convex functions is convex. Therefore the loss functions of elastic linear classifiers are convex. Then the first convergence results is shown in Shor (1985).

To show the two other convergence statements, we assume that \(\mathop {\left|{\mathcal {D}} \right|} = N\). For each training example \((\mathbf{x}_i, y_i) \in {\mathcal {D}}\) the loss

is real-valued and convex, where \(\varvec{\theta } = (\mathbf{W}, b)\). Then there is a positive scalar \(C_i\) that bounds the subdifferential of \(\ell _i\) at \(\varvec{\theta }\) for all \(i \in [N]\). Suppose that

Then from Nedic and Bertsekas (2001, Prop. 2.2). follows that the incremental generalized gradient method converges to a local minimum.

To show the Elastic Margin Perceptron Convergence Theorem, we assume that

is the error without averaging operation, that is \(E_N = N \cdot R_N\). By assumption, the training set \({\mathcal {D}}\) is elastic-linearly separable. Then the minimum value \(E_*\) of \(E_N\) is zero. From Nedic and Bertsekas (2001, Prop. 2.1) follows

where \(\eta \) is the learning rate. Choosing \(\eta \le \xi /C^2\) gives

Since \(\xi > 0\), this implies that there is a \(t_0\) such that \(\ell _t(\varvec{\theta }^t) < \xi \) for all \(t \ge t_0\). Here, \(\ell _t\) refers to example \((\mathbf{x}_t, y_t) \in {\mathcal {D}}\) presented at iteration t. From this follows that all training examples are classified correctly after a finite number of update steps, provided that \(\lambda \le \xi \). \(\square \)

Rights and permissions

About this article

Cite this article

Jain, B.J. Generalized gradient learning on time series. Mach Learn 100, 587–608 (2015). https://doi.org/10.1007/s10994-015-5513-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-015-5513-0

Euclidean norm to emphasize that we regard

Euclidean norm to emphasize that we regard