Abstract

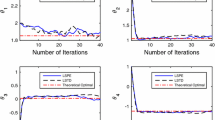

In this paper, a unified policy iteration approach is presented for the optimal control problem of stochastic systems with discounted average cost and continuous state space. The approach combines temporal difference learning-based algorithms for approximating the potential function with policy improvement based on the performance difference formula. The approximation algorithms are derived by solving a fixed-point equation based on the Poisson equation, and can be viewed as continuous versions of the least squares policy evaluation algorithm and the least squares temporal difference algorithm. Simulations are provided to illustrate the effectiveness of the approach.
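To make the policy evaluation step more concrete, the following is a minimal sketch of the standard least squares temporal difference method, LSTD(0), on a continuous state space. It is not the algorithm derived in the paper: it evaluates a plain discounted cost rather than a potential function, and the scalar system `x' = a*x + noise`, the stage cost `x^2`, the features `(1, x^2)`, and all names and parameter values are assumptions chosen only for illustration.

```python
import random


def lstd_quadratic(a=0.5, sigma=1.0, gamma=0.9, n_steps=50000, seed=0):
    """LSTD(0) fit of V(x) ~ w0 + w1 * x^2 along one sample path.

    Hypothetical test system (an assumption, not from the paper):
    x' = a * x + Gaussian noise with std sigma, stage cost x^2,
    discount factor gamma, fixed policy already folded into `a`.
    """
    rng = random.Random(seed)
    # Accumulate the 2x2 system A w = b with features phi(x) = (1, x^2):
    #   A = sum phi(x) (phi(x) - gamma * phi(x'))^T,  b = sum phi(x) * cost(x)
    A = [[0.0, 0.0], [0.0, 0.0]]
    b = [0.0, 0.0]
    x = 1.0
    for _ in range(n_steps):
        x_next = a * x + rng.gauss(0.0, sigma)
        phi = (1.0, x * x)
        phi_next = (1.0, x_next * x_next)
        cost = x * x
        for i in range(2):
            b[i] += phi[i] * cost
            for j in range(2):
                A[i][j] += phi[i] * (phi[j] - gamma * phi_next[j])
        x = x_next
    # Solve the 2x2 linear system with Cramer's rule.
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    w0 = (b[0] * A[1][1] - A[0][1] * b[1]) / det
    w1 = (A[0][0] * b[1] - A[1][0] * b[0]) / det
    return w0, w1


if __name__ == "__main__":
    w0, w1 = lstd_quadratic()
    print("estimated constant term:", w0)
    print("estimated quadratic coefficient:", w1)
```

For this toy system the exact discounted cost is quadratic, V(x) = p x^2 + c with p = 1 / (1 - gamma * a^2) and c = gamma * p * sigma^2 / (1 - gamma), so the estimates can be checked against closed-form values (about 1.29 and 11.6 for the defaults above). In a policy iteration loop, an evaluation step of this kind would be followed by a policy improvement step.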

Acknowledgements

The authors would like to thank the editors and anonymous reviewers for their constructive comments that improved the manuscript. The work was supported by the National Natural Science Foundation of China under Grants Nos. 60874030 and 61374006, and the Major Program of National Natural Science Foundation of China under Grant No. 11190015.

Additional information

Communicated by Qianchuan Zhao.

About this article

Cite this article

Cheng, K., Fei, S., Zhang, K. et al. Temporal Difference-Based Policy Iteration for Optimal Control of Stochastic Systems. J Optim Theory Appl 163, 165–180 (2014). https://doi.org/10.1007/s10957-013-0418-1

DOI: https://doi.org/10.1007/s10957-013-0418-1