Abstract

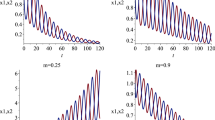

We consider an individual-based model in which agents interact over a random network via first-order dynamics that involve both attraction and repulsion. When all agents in \(\mathbb {R}^{d}\) are coupled to one another, this system has a lowest-energy state in which equal numbers of agents occupy the vertices of the \(d\)-dimensional simplex. The purpose of this paper is to sharpen and extend a line of work initiated in [56], which studies the behavior of this model when the \(N\) agents interact according to an Erdős–Rényi random graph \(\mathcal {G}(N,p)\) instead of all-to-all coupling. In particular, we study the effect of randomness on the stability of these simplicial solutions and provide rigorous results demonstrating that their stability persists as long as \(Np\) is at least of order \(\log N\). In other words, only a relatively small number of interactions is required to maintain the stability of this state. The results rely on basic probability arguments together with spectral properties of random graphs.

References

Aldana, M., Huepe, C.: Phase transitions in self-driven many-particle systems and related non-equilibrium models: a network approach. J. Stat. Phys. 112(1), 135–153 (2003)

Altschuler, E.L., Williams, T.J., Ratner, E.R., Tipton, R., Stong, R., Dowla, F., Wooten, F.: Possible global minimum lattice configurations for Thomson’s problem of charges on a sphere. Phys. Rev. Lett. 78(14), 2681–2685 (1997)

Ballerini, M., Cabibbo, N., Candelier, R., Cavagna, A., Cisbani, E., Giardina, I., Lecomte, V., Orlandi, A., Parisi, G., Procaccini, A., et al.: Interaction ruling animal collective behavior depends on topological rather than metric distance: evidence from a field study. Proc. Natl. Acad. Sci. USA 105(4), 1232–1237 (2008)

Batir, N.: Some new inequalities for gamma and polygamma functions. J. Inequal. Pure Appl. Math. 6(4), 1–9 (2005)

Boi, S., Capasso, V., Morale, D.: Modeling the aggregative behavior of ants of the species Polyergus rufescens. Nonlinear Anal. Real World Appl. 1, 163–176 (2000)

Buhl, J., Sumpter, D.J.T., Couzin, I.D., Hale, J.J., Despland, E., Miller, E.R., Simpson, S.J.: From disorder to order in marching locusts. Science 312(5778), 1402–1406 (2006)

Cao, M., Morse, A.S., Anderson, B.D.O.: Reaching an agreement using delayed information. In: 45th IEEE Conference on Decision and Control, pp. 3375–3380 (2006)

Chuang, Y., Huang, Y.R., D’Orsogna, M.R., Bertozzi, A.L.: Multi-vehicle flocking: scalability of cooperative control algorithms using pairwise potentials. In: IEEE International Conference on Robotics and Automation, pp. 2292–2299 (2007)

Chung, F., Lu, L.: The average distances in random graphs with given expected degrees. Proc. Natl. Acad. Sci. USA 99(2), 15879–15882 (2002)

Chung, F., Lu, L.: Connected components in random graphs with given degree sequences. Ann. Comb. 6, 125–145 (2002)

Chung, F., Lu, L., Vu, V.: The spectra of random graphs with given expected degrees. Internet Math. 1(3), 257–275 (2004)

Chung, F., Radcliffe, M.: On the spectra of general random graphs. Electron. J. Combin. 18, P215 (2011)

Cohn, H., Kumar, A.: Universally optimal distribution of points on spheres. J. Am. Math. Soc. 20(1), 99–148 (2007)

Cohn, H., Kumar, A.: Algorithmic design of self-assembling structures. Proc. Natl. Acad. Sci. USA 106(24), 9570–9575 (2009)

Cucker, F., Smale, S.: Emergent behavior in flocks. IEEE Trans. Autom. Control 52(5), 852–862 (2007)

Degond, P., Motsch, S.: Large scale dynamics of the persistent turning walker model of fish behavior. J. Stat. Phys. 131, 989–1021 (2008). doi:10.1007/s10955-008-9529-8

Delprato, A.M., Samadani, A., Kudrolli, A., Tsimring, L.S.: Swarming ring patterns in bacterial colonies exposed to ultraviolet radiation. Phys. Rev. Lett. 87(15), 158102 (2001)

Edelstein-Keshet, L., Watmough, J., Grunbaum, D.: Do travelling band solutions describe cohesive swarms? An investigation for migratory locusts. J. Math. Biol. 36, 515–549 (1998). doi:10.1007/s002850050112

Feige, U., Ofek, E.: Spectral techniques applied to sparse random graphs. Random Struct. Algorithm 27(2), 251–275 (2005)

Ferrante, E., Turgut, A.E., Huepe, C., Birattari, M., Dorigo, M., Wenseleers, T.: Explicit and implicit directional information transfer in collective motion. Artif. Life 13, 551–577 (2012)

Ferrante, E., Turgut, A.E., Huepe, C., Stranieri, A., Pinciroli, C., Dorigo, M.: Self-organized flocking with a mobile robot swarm: a novel motion control method. Adapt. Behav. 20(6), 460–477 (2012)

Glotzer, S.C.: Some assembly required. Science 306(5695), 419–420 (2004)

Glotzer, S.C., Solomon, M.J.: Anisotropy of building blocks and their assembly into complex structures. Nat. Mater. 6, 557–562 (2007)

Hagan, M.F., Chandler, D.: Dynamic pathways for viral capsid assembly. Biophys. J. 91(1), 42–54 (2006)

Hatano, Y., Mesbahi, M.: Agreement over random networks. IEEE Trans. Autom. Control 50(11), 1867–1872 (2005)

Holm, D.D., Putkaradze, V.: Formation of clumps and patches in self-aggregation of finite-size particles. Phys. D 220(2), 183–196 (2006)

Huepe, C., Zschaler, G., Do, A.L., Gross, T.: Adaptive-network models of swarm dynamics. New J. Phys. 13(7), 073022 (2011)

Israelachvili, J.N.: Intermolecular and Surface Forces, 3rd edn. Academic Press, London (2011)

Jin, Z., Bertozzi, A.L.: Environmental boundary tracking and estimation using multiple autonomous vehicles. In: 46th IEEE Conference on Decision and Control, pp. 4918–4923 (2007)

Kar, S., Moura, J.M.F.: Sensor networks with random links: topology design for distributed consensus. IEEE Trans. Signal Process. 56(7), 3315–3326 (2008)

Kuijlaars, A.B., Saff, E.B.: Asymptotics for minimal discrete energy on the sphere. Trans. Am. Math. Soc. 350(2), 523–538 (1998)

McDiarmid, C.: Concentration. In: Habib, M. (ed.) Probabilistic Methods for Algorithmic Discrete Mathematics, pp. 195–248. Springer, Berlin (1998)

Moreau, L.: Stability of continuous-time distributed consensus algorithms. In: 43rd IEEE Conference on Decision and Control (CDC), vol. 4, pp. 3998–4003 (2004)

Moreau, L.: Stability of multiagent systems with time-dependent communication links. IEEE Trans. Autom. Control 50(2), 169–182 (2005)

Motsch, S., Tadmor, E.: A new model for self-organized dynamics and its flocking behavior. J. Stat. Phys. 144, 923–947 (2011). doi:10.1007/s10955-011-0285-9

Olfati-Saber, R.: Flocking for multi-agent dynamic systems: algorithms and theory. IEEE Trans. Autom. Control 51(3), 401–420 (2006)

Olfati-Saber, R., Murray, R.M.: Consensus problems in networks of agents with switching topology and time-delays. IEEE Trans. Autom. Control 49(9), 1520–1533 (2004)

Oliveira, R.I.: Concentration of the adjacency matrix and of the Laplacian in random graphs with independent edges. arXiv:0911.0600v2 (2010)

Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W. (eds.): NIST Handbook of Mathematical Functions. Cambridge University Press, New York (2010)

Onufriev, A., Bashford, D., Case, D.A.: Exploring protein native states and large-scale conformational changes with a modified generalized Born model. Proteins 55(2), 383–394 (2004)

Pérez-Garrido, A., Dodgson, M.J.W., Moore, M.A.: Influence of dislocations in Thomson’s problem. Phys. Rev. B 56(7), 3640–3643 (1997)

Porfiri, M., Stilwell, D.J.: Consensus seeking over random weighted directed graphs. IEEE Trans. Autom. Control 52(9), 1767–1773 (2007)

Porfiri, M., Stilwell, D.J., Bollt, E.M.: Synchronization in random weighted directed networks. IEEE Trans. Circuits Syst. I 55(10), 3170–3177 (2008)

Porfiri, M., Stilwell, D.J., Bollt, E.M., Skufca, J.D.: Stochastic synchronization over a moving neighborhood network. In: American Control Conference (ACC’07), pp. 1413–1418 (2007)

Ren, W., Beard, R.W.: Consensus seeking in multiagent systems under dynamically changing interaction topologies. IEEE Trans. Autom. Control 50(5), 655–661 (2005)

Ren, W., Atkin, E.M.: Distributed multi-vehicle coordinated control via local information exchange. Int. J. Robust Nonlinear Control 17(10–11), 1002–1033 (2007)

Ren, W., Beard, R.: Distributed Consensus in Multi-vehicle Cooperative Control: Theory and Applications. Communications and Control Engineering. Springer, London (2008)

Ren, W., Moore, K., Chen, Y.: High-order and model reference consensus algorithms in cooperative control of multi-vehicle systems. ASME J. Dyn. Syst. Meas. Control 129(5), 678–688 (2007)

Sigalov, G., Fenley, A., Onufriev, A.: Analytical electrostatics for biomolecules: beyond the generalized Born approximation. J. Chem. Phys. 124, 124902 (2006)

Skufca, J.D., Bollt, E.M.: Communication and synchronization in disconnected networks with dynamic topology: moving neighborhood networks. Math. Biosci. Eng. 1(2), 347 (2004)

Thomson, J.J.: On the structure of the atom. Philos. Mag. 7(39), 237–265 (1904)

Tsimring, L., Levine, H., Aranson, I., Ben-Jacob, E., Cohen, I., Shochet, O., Reynolds, W.N.: Aggregation patterns in stressed bacteria. Phys. Rev. Lett. 75(9), 1859–1862 (1995)

van Oss, C.J.: Interfacial Forces in Aqueous Media, 2nd edn. Taylor & Francis, Boca Raton (2006)

van Oss, C.J., Giese, R.F., Costanzo, P.M.: DLVO and non-DLVO interactions in hectorite. Clays Clay Miner. 38(2), 151–159 (1990)

Vicsek, T., Czirók, A., Ben-Jacob, E., Cohen, I., Shochet, O.: Novel type of phase transition in a system of self-driven particles. Phys. Rev. Lett. 75, 1226–1229 (1995)

von Brecht, J., Kolokolnikov, T., Bertozzi, A.L., Sun, H.: Swarming on random graphs. J. Stat. Phys. 151, 1–24 (2013)

Wales, D.J., McKay, H., Altschuler, E.L.: Defect motifs for spherical topologies. Phys. Rev. B 79(22), 224115 (2009)

Yang, W., Bertozzi, A.L., Wang, X.: Stability of a second order consensus algorithm with time delay. In: 47th IEEE Conference on Decision and Control (CDC 2008), pp. 2926–2931 (2008)

Zandi, R., Reguera, D., Bruinsma, R.F., Gelbart, W.M., Rudnick, J.: Origin of icosahedral symmetry in viruses. Proc. Natl. Acad. Sci. USA 101(44), 15556–15560 (2004)

Zhang, Z., Glotzer, S.C.: Self-assembly of patchy particles. Nano Lett. 4(8), 1407–1413 (2004)

Acknowledgments

JvB and ALB acknowledge funding from NSF Grant DMS-0914856 and AFOSR MURI Grant FA9550-10-1-0569. JvB also acknowledges funding from NSF Grant DMS-1312344. The research of B. Sudakov is supported in part by AFOSR MURI Grant FA9550-10-1-0569, by a USA-Israeli BSF Grant and by SNSF Grant 200021-149111.

Appendix

1.1 Estimates for Generalized Adjacency Matrices

Given a random graph drawn from \(\mathcal {G}(n,p)\), let \(A \in \mathbb {M}_{nd \times nd}(\mathbb {R})\) denote the \(nd \times nd\) generalized adjacency matrix whose \(d \times d\) sub-blocks are built from a fixed symmetric matrix \(M \in \mathbb {M}_{d \times d}(\mathbb {R})\). In other words, if \(E\) denotes the adjacency matrix of the graph then we have

\[ A = E \otimes M, \]

for \(\otimes \) denoting the Kronecker product. Let \(M_{jk} = M_{kj}, \; 1\le j \le n, \; j \le k \le n\) denote the i.i.d. matrix-valued random variables corresponding to the edges in the graph (that is, \(M_{jk} = M\) if \((j,k)\) is an edge and \(M_{jk} = 0\) otherwise), so that \(\mathbb {E}(M_{jk}) = pM\). For a given vector \(\mathbf {x}\in \mathbb {R}^{nd}\) consider the partition \(\mathbf {x}= (\mathbf {x}_{1},\ldots ,\mathbf {x}_{n})^{t}\) with \(\mathbf {x}_{i} \in \mathbb {R}^{d}\), and recall the “mean-zero” hypothesis

\[ \sum_{i=1}^{n} \mathbf{x}_{i} = 0. \tag{48} \]

If we denote the corresponding subset of the unit ball \(\mathcal {S}^{nd} \subset \mathbb {R}^{nd}\) as

\[ \mathcal{S}^{nd}_{0} := \{ \mathbf{x} \in \mathcal{S}^{nd} : \mathbf{x} \text{ satisfies } (48) \}, \]

then our aim lies in proving the following generalization of the theorem due to [19]:

Theorem 6.1

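Let \(\alpha \) and \(c_{0}\) denote arbitrary positive constants. If \(np > c_{0} \log n\) then there exists a constant \(c = c(\alpha ,c_0,d,||M||_{2}) > 0\) so that the estimate

\[ \sup_{(\mathbf{x},\mathbf{y}) \in \mathcal{S}^{nd}_{0} \times \mathcal{S}^{nd}} |\langle \mathbf{x}, A\mathbf{y} \rangle| \le c\sqrt{np} \]

holds with probability at least \(1 - n^{-\alpha }\).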
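The theorem can be probed numerically. The NumPy sketch below is an illustration only, not part of the proof: it samples \(E\) from \(\mathcal {G}(n,p)\) (assumed here to have no self-loops), forms \(A = E \otimes M\) with `np.kron`, and evaluates the supremum in Theorem 6.1 exactly. For fixed \(\mathbf{y}\) the supremum over mean-zero unit vectors \(\mathbf{x}\) equals \(||PA\mathbf{y}||_2\), where \(P\) is the orthogonal projector onto the subspace defined by (48), so the double supremum is the spectral norm of \(PA\). The helper names, the sub-block \(M\) and the choice \(c_0 = 3\) are hypothetical. If the theorem applies, the printed ratio to \(\sqrt{np}\) should stay bounded as \(n\) grows.

```python
import numpy as np


def erdos_renyi_adjacency(n, p, rng):
    """Symmetric 0/1 adjacency matrix E of G(n, p) without self-loops."""
    upper = np.triu(rng.random((n, n)) < p, k=1)   # independent upper-triangular edges
    return (upper | upper.T).astype(float)


def generalized_adjacency(E, M):
    """A = E (x) M: the (j, k) block of A is E[j, k] * M."""
    return np.kron(E, M)


def mean_zero_projector(n, d):
    """Orthogonal projector of R^{nd} onto {x : x_1 + ... + x_n = 0}, i.e. (48)."""
    return np.kron(np.eye(n) - np.ones((n, n)) / n, np.eye(d))


def restricted_norm(A, n, d):
    """sup |<x, A y>| over x in S_0^{nd}, y in S^{nd}, computed as ||P A||_2."""
    P = mean_zero_projector(n, d)
    return np.linalg.norm(P @ A, ord=2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 2
    M = np.array([[1.0, 0.5], [0.5, -1.0]])        # hypothetical symmetric sub-block
    for n in (100, 200, 400):
        p = 3.0 * np.log(n) / n                     # np = 3 log n, i.e. c_0 = 3
        A = generalized_adjacency(erdos_renyi_adjacency(n, p, rng), M)
        print(n, restricted_norm(A, n, d) / np.sqrt(n * p))
```

As a sanity check, for the complete graph \(E = J - I\) the same quantity equals exactly \(||M||_2\): the eigenvalues of \(A\) of size \((n-1)||M||_2\) live in the directions excluded by (48).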

Note carefully that only one of \(\mathbf {x}\) or \(\mathbf {y}\) needs to satisfy the mean-zero property (48). The proof of the theorem essentially reproduces the arguments of [19], with a few scalars replaced by vectors and products replaced by inner products. The first ingredient is the following lemma:

Lemma 6.2

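Fix \((\mathbf {x},\mathbf {y}) \in \mathcal {S}^{nd}_{0} \times \mathcal {S}^{nd}\) and let \(\Lambda = \{ (j,k) : |\langle \mathbf {x}_j , M \mathbf {y}_k \rangle | \le \sqrt{p/n} \}\). Then

\[ \Big| \sum_{(j,k) \in \Lambda} p \langle \mathbf{x}_j, M\mathbf{y}_k \rangle \Big| \le ||M||^{2}_{2} \sqrt{np}. \]

Proof

As \(\mathbf {x}\in \mathcal {S}^{nd}_{0}\) it follows that

\[ \sum_{j,k} p \langle \mathbf{x}_j, M\mathbf{y}_k \rangle = p \Big\langle \sum_{j} \mathbf{x}_j, M \sum_{k} \mathbf{y}_k \Big\rangle = 0, \quad \text{so that} \quad \sum_{(j,k) \in \Lambda} p \langle \mathbf{x}_j, M\mathbf{y}_k \rangle = -\sum_{(j,k) \in \Lambda^{c}} p \langle \mathbf{x}_j, M\mathbf{y}_k \rangle. \]

By definition, whenever \((j,k) \in \Lambda ^{c}\) it follows that \(|\langle \mathbf {x}_{j}, M \mathbf {y}_k \rangle | > \sqrt{p/n}\), or equivalently \(\sqrt{n/p}\,|\langle \mathbf {x}_{j}, M \mathbf {y}_k \rangle | > 1\). Thus \(p|\langle \mathbf {x}_{j}, M \mathbf {y}_k \rangle | < \sqrt{np}|\langle \mathbf {x}_{j}, M \mathbf {y}_k \rangle |^2 \le \sqrt{np} ||M||^{2}_{2}||\mathbf {x}_j||^{2}_{2} ||\mathbf {y}_k||^{2}_{2}\) for any such \((j,k)\), where the last inequality follows from Cauchy–Schwarz. Combining these facts yields

\[ \Big| \sum_{(j,k) \in \Lambda} p \langle \mathbf{x}_j, M\mathbf{y}_k \rangle \Big| \le \sqrt{np}\, ||M||^{2}_{2} \sum_{j,k} ||\mathbf{x}_j||^{2}_{2} ||\mathbf{y}_k||^{2}_{2} = \sqrt{np}\, ||M||^{2}_{2}\, ||\mathbf{x}||^{2}_{2}\, ||\mathbf{y}||^{2}_{2} \le ||M||^{2}_{2}\sqrt{np}, \]

as desired. \(\square \)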
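Lemma 6.2 is deterministic, so it can be checked directly on random data. The NumPy sketch below assumes hypothetical random choices of \(\mathbf{x} \in \mathcal{S}^{nd}_0\) and \(\mathbf{y} \in \mathcal{S}^{nd}\), stored as \(n \times d\) arrays whose rows are the blocks \(\mathbf{x}_j\) and \(\mathbf{y}_k\); it forms the matrix of inner products \(\langle \mathbf{x}_j, M\mathbf{y}_k\rangle\), restricts to \(\Lambda\), and returns both sides of the inequality. The function name and the test matrix are illustrative.

```python
import numpy as np


def light_pair_check(n, d, p, M, rng):
    """Return (|sum over Lambda of p <x_j, M y_k>|, ||M||_2^2 sqrt(np)) for random x, y."""
    # Random y in the unit ball of R^{nd}, rows are the blocks y_1, ..., y_n.
    Y = rng.standard_normal((n, d))
    Y /= np.linalg.norm(Y)
    # Random x satisfying the mean-zero hypothesis, then normalised.
    X = rng.standard_normal((n, d))
    X -= X.mean(axis=0)
    X /= np.linalg.norm(X)
    G = X @ M @ Y.T                        # G[j, k] = <x_j, M y_k>
    light = np.abs(G) <= np.sqrt(p / n)    # membership in Lambda
    lhs = abs(p * G[light].sum())
    rhs = np.linalg.norm(M, ord=2) ** 2 * np.sqrt(n * p)
    return lhs, rhs


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    M = np.array([[2.0, 0.3], [0.3, 0.5]])  # hypothetical symmetric sub-block
    n = 300
    p = 2.0 * np.log(n) / n
    print(light_pair_check(n, 2, p, M, rng))
```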

For a fixed \((\mathbf {x},\mathbf {y}) \in \mathcal {S}^{nd}_{0} \times \mathcal {S}^{nd}\) let \(S(\mathbf {x},\mathbf {y}),L(\mathbf {x},\mathbf {y}),U(\mathbf {x},\mathbf {y})\) denote the random variables defined as

\[ S(\mathbf{x},\mathbf{y}) = \sum_{(j,k) \in \Lambda} \langle \mathbf{x}_j, M_{jk} \mathbf{y}_k \rangle, \qquad L(\mathbf{x},\mathbf{y}) = \sum_{(j,k) \in \Lambda,\, j > k} \langle \mathbf{x}_j, M_{jk} \mathbf{y}_k \rangle, \qquad U(\mathbf{x},\mathbf{y}) = \sum_{(j,k) \in \Lambda,\, j \le k} \langle \mathbf{x}_j, M_{jk} \mathbf{y}_k \rangle. \]

Although \(L\) and \(U\) are dependent because the graph is undirected, each of them, considered in isolation, is simply a sum of independent scaled indicator random variables. Indeed, fix any ordering of the indices \(\Lambda \cap \{ j \le k \}\) and write \(U(\mathbf {x},\mathbf {y}) = \sum _{i} u_{i}\), where the sum ranges from one to the (deterministic) size of \(\Lambda \cap \{ j \le k \}\), and note that each \(u_{i}\) equals zero or \(\langle \mathbf {x}_j , M \mathbf {y}_k \rangle \) with probability \(1-p\) or \(p\), respectively. An analogous statement holds for \(L(\mathbf {x},\mathbf {y})\).
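The representation of \(U(\mathbf{x},\mathbf{y})\) as a sum of independent terms can be simulated directly. The sketch below (hypothetical names and test setup) draws each \(u_i\) as \(\langle \mathbf{x}_j, M\mathbf{y}_k\rangle\) with probability \(p\) and zero otherwise over \(\Lambda \cap \{j \le k\}\), and also returns the exact mean and variance of \(U\). Because \(|\langle \mathbf{x}_j, M\mathbf{y}_k\rangle|^2 \le ||M||_2^2||\mathbf{x}_j||_2^2||\mathbf{y}_k||_2^2\), the variance is at most \(p||M||_2^2\) whenever \(||\mathbf{x}||_2, ||\mathbf{y}||_2 \le 1\), which is the bound that feeds the Chernoff estimate below.

```python
import numpy as np


def simulate_upper_light_sum(X, Y, M, p, trials, rng):
    """Monte Carlo samples of U(x, y) = sum_i u_i over Lambda ∩ {j <= k}.

    Rows of X and Y are the blocks x_j and y_k. Each u_i equals <x_j, M y_k>
    with probability p and 0 otherwise, independently (pairs with j = k are
    included, matching the index range j <= k used in the text).
    Returns the samples together with the exact mean and variance of U.
    """
    n = X.shape[0]
    G = X @ M @ Y.T                                    # G[j, k] = <x_j, M y_k>
    in_lambda = np.abs(G) <= np.sqrt(p / n)            # light pairs
    upper = np.triu(np.ones((n, n), dtype=bool))       # j <= k
    values = G[in_lambda & upper]                      # possible nonzero u_i
    present = rng.random((trials, values.size)) < p    # independent edge indicators
    samples = present.astype(float) @ values
    mean = p * values.sum()
    var = p * (1.0 - p) * np.sum(values ** 2)
    return samples, mean, var


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, p = 60, 2, 0.2
    M = np.array([[1.0, 0.2], [0.2, 0.7]])             # hypothetical sub-block
    X = rng.standard_normal((n, d))
    X -= X.mean(axis=0)                                # enforce (48)
    X /= np.linalg.norm(X)
    Y = rng.standard_normal((n, d))
    Y /= np.linalg.norm(Y)
    samples, mean, var = simulate_upper_light_sum(X, Y, M, p, 2000, rng)
    print(samples.mean(), mean, samples.var(), var, p * np.linalg.norm(M, 2) ** 2)
```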

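Note that \(|u_{i}| \le \sqrt{p/n}\) by definition of \(\Lambda \), and that

\[ \sum_{i} \mathrm{Var}(u_i) \le p \sum_{j,k} |\langle \mathbf{x}_j, M\mathbf{y}_k \rangle|^{2} \le p\, ||M||^{2}_{2} \sum_{j,k} ||\mathbf{x}_j||^{2}_{2} ||\mathbf{y}_k||^{2}_{2} \le p\, ||M||^{2}_{2}. \]
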
By applying the Chernoff bound 2.1 to the random variables \((u_{i} - \mathbb {E}(u_{i}))/(2\sqrt{p/n})\), which are bounded by one in absolute value and whose variances sum to at most \(n||M||^{2}_{2}/4\), with the choices \(\sigma ^2 = nK||M||^{2}_{2}/4\) and \(\lambda ^2 = nK||M||^{2}_{2}\), these facts imply that for any \(K \ge 1\) the estimate

holds. A similar argument shows that the same inequality holds for \(L(\mathbf {x},\mathbf {y})\) as well. As \(S = L+U,\) the triangle inequality and the union bound yield the estimate

Next, given \(0< \delta < 1\) define the finite grid

and its mean-zero variant

The following lemma allows us to control the norm of \(A\) on \(\mathcal {S}^{nd}_{0}\) by controlling \(\langle \mathbf {x}, A \mathbf {y}\rangle \) for pairs of vectors in the finite grid instead:

Lemma 6.3

If \(|\langle \mathbf {x}, A \mathbf {y}\rangle | \le c\) for all \((\mathbf {x},\mathbf {y}) \in T^{\delta }_{0} \times T^{\delta }\) then \(|\langle \mathbf {x}, A \mathbf {y}\rangle | \le \frac{cd}{(1-\delta )^2}\) for all \((\mathbf {x},\mathbf {y}) \in \mathcal {S}^{nd}_{0} \times \mathcal {S}^{nd}\).

Proof

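Let \(\mathbf {z}= (1-\delta ) \mathbf {x}, \mathbf {u}= (1-\delta ) \mathbf {y}\) and note that

\[ \sum_{i=1}^{n} \mathbf{z}_{i} = 0, \qquad ||\mathbf{z}||_{2} \le 1-\delta, \qquad ||\mathbf{u}||_{2} \le 1-\delta. \tag{52} \]

Decompose \(\mathbf {z}\) as \(\mathbf {z}= \mathbf {z}_{1} + \cdots + \mathbf {z}_{d}\) where the non-zero components of \(\mathbf {z}_{k}\) correspond to the \(k^\mathrm{th}\) component of the vector identity in (52); that is, \(\mathbf {z}_k\) contains the \(k^\mathrm{th}\), \((d+k)^\mathrm{th},(2d+k)^\mathrm{th},\) etc. components of \(\mathbf {z}\) and has zeros elsewhere. As

\[ ||\mathbf{z}_k||_{2} \le ||\mathbf{z}||_{2} \le 1-\delta, \]

the vector in \(\mathbb {R}^n\) comprised of the non-zero locations of \(\mathbf {z}_{k}\) has norm at most \(1-\delta \) and entries that sum to zero. By Lemma 2.3 of [19], each \(\mathbf {z}_k\) is therefore a convex combination of points of \(T^{\delta }_0\),

\[ \mathbf{z}_k = \sum_{l=1}^{J_k} \theta^{(k)}_{l} \mathbf{v}^{(k)}_{l}, \qquad \theta^{(k)}_{l} > 0, \quad \sum_{l=1}^{J_k} \theta^{(k)}_{l} = 1. \]

Summing the \(\mathbf {z}_{k}\) then shows that there exist \(N = J_{1} + \cdots + J_d \in \mathbb {N}\), \(\theta _l > 0\) and \(\mathbf {v}_l \in T^{\delta }_{0}\) so that

\[ \mathbf{z} = \sum_{l=1}^{N} \theta_l \mathbf{v}_l, \qquad \sum_{l=1}^{N} \theta_l = d. \]

Lemma 2.3 of [19] also implies that \(\mathbf {u}\) is a convex combination of points in \(T^{\delta }\), so that there exist \(N' \in \mathbb {N}\), \(\eta _{j} > 0\) and \(\mathbf {w}_{j} \in T^{\delta }\) so that

\[ \mathbf{u} = \sum_{j=1}^{N'} \eta_j \mathbf{w}_j, \qquad \sum_{j=1}^{N'} \eta_j = 1. \]

As a consequence,

\[ (1-\delta)^{2} |\langle \mathbf{x}, A\mathbf{y} \rangle| = |\langle \mathbf{z}, A\mathbf{u} \rangle| \le \sum_{l=1}^{N} \sum_{j=1}^{N'} \theta_l \eta_j |\langle \mathbf{v}_l, A\mathbf{w}_j \rangle| \le c \sum_{l=1}^{N} \theta_l \sum_{j=1}^{N'} \eta_j = cd. \]

\(\square \)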
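The splitting \(\mathbf{z} = \mathbf{z}_1 + \cdots + \mathbf{z}_d\) used in the proof of Lemma 6.3 is easy to make concrete. In the NumPy sketch below (hypothetical helper name), \(\mathbf{z}_k\) keeps the entries of \(\mathbf{z}\) in positions \(k, d+k, 2d+k, \ldots\) (1-based) and is zero elsewhere. When \(\mathbf{z}\) satisfies (48), each slice has coordinates summing to zero and norm at most \(||\mathbf{z}||_2\), which is what is needed to apply Lemma 2.3 of [19] slice by slice; since every slice contributes convex weights summing to one, the weights for \(\mathbf{z}\) sum to \(d\), the source of the factor \(d\) in the lemma.

```python
import numpy as np


def coordinate_slices(z, n, d):
    """Split z in R^{nd} into z_1, ..., z_d as in the proof of Lemma 6.3.

    z_k keeps the k-th, (d+k)-th, (2d+k)-th, ... entries of z (1-based) and is
    zero elsewhere. The returned list is 0-indexed, so slices[k - 1] is z_k.
    """
    blocks = z.reshape(n, d)          # row i is the block z_i in R^d
    slices = []
    for k in range(d):
        zk = np.zeros_like(blocks)
        zk[:, k] = blocks[:, k]       # keep only coordinate k of every block
        slices.append(zk.reshape(-1))
    return slices


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, delta = 10, 3, 0.1
    x = rng.standard_normal((n, d))
    x -= x.mean(axis=0)               # mean-zero hypothesis (48)
    x /= np.linalg.norm(x)
    z = (1 - delta) * x.reshape(-1)
    for k, zk in enumerate(coordinate_slices(z, n, d), start=1):
        coords = zk.reshape(n, d)[:, k - 1]
        print(k, coords.sum(), np.linalg.norm(coords))
```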

An estimate of the total numbers of points \(|T^{\delta }|\) and \(|T^{\delta }_{0}|\) in the \(\delta \)-nets follows from a direct appeal to Claim 2.9 of [19], which gives \(|T^{\delta }| \le \mathrm {e}^{cnd}\) for some constant \(c\) that depends on \(\delta \). As \(T^{\delta }_{0} \subset T^{\delta }\), it follows that \(|T^{\delta }_{0} \times T^{\delta }| \le \mathrm {e}^{2cnd}\) as well. Applying the union bound over \(T^{\delta }_{0} \times T^{\delta }\), we may therefore summarize the preceding discussion in the following lemma:

Lemma 6.4

Given any \(c > 0\) there exists \(c' > 0\) so that with probability at least \(1-\mathrm {e}^{-cn}\) the estimate

holds.

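It remains to estimate, for \((\mathbf {x},\mathbf {y}) \in T^{\delta }_0 \times T^{\delta }\), the remaining contribution

\[ \sum_{(j,k) \in \Lambda^{c}} \langle \mathbf{x}_j, M_{jk} \mathbf{y}_k \rangle, \]

where we recall \(\Lambda ^{c} := \{(j,k) : |\langle \mathbf {x}_j, M \mathbf {y}_k \rangle | > \sqrt{p/n} \}\). From Cauchy–Schwarz it follows that

\[ \Big| \sum_{(j,k) \in \Lambda^{c}} \langle \mathbf{x}_j, M_{jk} \mathbf{y}_k \rangle \Big| \le ||M||_{2} \sum_{j,k} E_{jk}\, \mathbf{1}_{\Lambda^{c}}(j,k)\, ||\mathbf{x}_j||_{2}\, ||\mathbf{y}_k||_{2}. \tag{54} \]
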
Here and in what follows, for an index subset \(W\) the notation \(\mathbf {1}_{W}\) denotes the indicator function. If we define the sets

and fix an edge \((k,l)\) corresponding to a non-zero term in the sum (54), then \(k \in X_{i}\) and \(l \in Y_j\) for some \((i,j)\) and an edge \((k,l)\) exists between these two vertex sets. Moreover, as \((k,l) \in \Lambda ^{c}\) it follows that

provided we take \(\delta ||M||_{2} \le 1\). As a consequence,

where \(\mathrm {edge}(X_i,Y_j)\) denotes the number of edges between the sets.
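For experimentation, \(\mathrm{edge}(X,Y)\) and the bounded-degree consequence \(\mathrm{edge}(X,Y) \le (\max_i \mathrm{deg}_i)\min\{|X|,|Y|\}\) used in the next step can be computed as follows. This sketch assumes a counting convention: \(\mathrm{edge}(X,Y)\) is taken to be the number of ordered pairs \((k,l) \in X \times Y\) joined by an edge, so an edge with both endpoints in \(X \cap Y\) is counted twice. The bound holds because every counted pair has an endpoint in \(X\) and one in \(Y\), each of which has at most \(\max_i \mathrm{deg}_i\) neighbours. The function names are hypothetical.

```python
import numpy as np


def edge_count(E, X, Y):
    """Number of ordered pairs (k, l) in X x Y with E[k, l] = 1."""
    return int(E[np.ix_(np.asarray(X), np.asarray(Y))].sum())


def degree_bound(E, X, Y):
    """Upper bound (max degree) * min(|X|, |Y|) on edge_count(E, X, Y)."""
    max_degree = int(E.sum(axis=1).max())
    return max_degree * min(len(X), len(Y))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 80
    upper = np.triu(rng.random((n, n)) < 0.1, k=1)
    E = (upper | upper.T).astype(int)   # symmetric, no self-loops
    X = rng.choice(n, size=15, replace=False)
    Y = rng.choice(n, size=40, replace=False)
    print(edge_count(E, X, Y), degree_bound(E, X, Y))
```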

To bound (55), we let \(c_{1}\) denote an as-yet-undetermined constant and put

then decompose the sum further as

Assuming that each vertex has degree at most \(c_{1}np\) (cf. Lemma 6.5), we have \(\mathrm {edge}(X_i,Y_j) \le c_{1}np\min \{|X_i|,|Y_j|\}\). Thus the first term is bounded by

Noting that

then shows that the first term is \(O(\sqrt{np})\) provided the bounded degree property holds. Fortunately, if \(np > c_{0} \log n\) for any \(c_{0} > 0\), this property holds with probability at least \(1-n^{-\alpha }\) for any \(\alpha > 0\) provided \(c_{1}\) is sufficiently large:

Lemma 6.5

(Bounded Degree) Let \(p \ge c_0 \log n/n\) for some \(c_0 > 0\) and let \(\mathrm{deg}_1,\ldots ,\mathrm{deg}_n\) denote the vertex degrees of an Erdős–Rényi random graph \(\mathcal {G}(n,p)\). For any \(\alpha > 0\) there exists a \(c_1 = c_1(c_0,\alpha ) > 2\) so that

\[ \mathbb{P}\Big( \max_{1 \le i \le n} \mathrm{deg}_{i} > c_{1} np \Big) \le n^{-\alpha}. \]

Proof

Write \(\mathrm{deg}_{i} = \sum _{j} e_{ij}\) where \(e_{ij}\) denote the edges of the graph. As \(\mathbb {E}(\mathrm{deg}_{i}) = np\) and \(\mathrm {Var}(\mathrm{deg}_{i}) = np(1-p) \le (c_{1}-1)np\) it follows from the Chernoff bound and the union bound that

The choice \(\lambda = \sqrt{(c_{1} - 1)np}\) yields

Taking \(c_1\) sufficiently large gives the desired result. \(\square \)
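The phrase "taking \(c_1\) sufficiently large" can be made concrete by evaluating the union bound from the proof exactly. The Python sketch below assumes, as for a simple graph, that each degree is \(\mathrm{Binomial}(n-1,p)\); it computes \(n\,\mathbb{P}(\mathrm{deg}_1 > c_1 np)\) in log-space with `math.lgamma` and scans a hypothetical grid of \(c_1\) values above 2 until the bound drops below \(n^{-\alpha}\). The function names, starting value and step size are illustrative choices, not the authors'.

```python
import math


def log_binom_pmf(m, k, p):
    """log P(Bin(m, p) = k), computed with lgamma to avoid overflow."""
    return (math.lgamma(m + 1) - math.lgamma(k + 1) - math.lgamma(m - k + 1)
            + k * math.log(p) + (m - k) * math.log1p(-p))


def degree_union_bound(n, p, c1):
    """Union bound n * P(Bin(n - 1, p) > c1 * n * p) on P(max_i deg_i > c1 n p)."""
    m = n - 1
    t = math.floor(c1 * n * p)        # deg > c1 n p  <=>  deg >= t + 1
    tail = sum(math.exp(log_binom_pmf(m, k, p)) for k in range(t + 1, m + 1))
    return min(1.0, n * tail)


def smallest_c1(n, c0, alpha, start=2.5, step=0.5):
    """First c1 on the grid start, start + step, ... meeting the n^(-alpha) target."""
    p = c0 * math.log(n) / n
    c1 = start
    while degree_union_bound(n, p, c1) > n ** (-alpha):
        c1 += step
    return c1


if __name__ == "__main__":
    for n in (10**3, 10**4):
        print(n, smallest_c1(n, c0=1.0, alpha=1.0))
```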

Therefore, with probability at least \(1 - n^{-\alpha }\), the bounded degree property holds and the first term in (56) is \(O(\sqrt{np})\) uniformly for \((\mathbf {x},\mathbf {y}) \in T^{\delta }_0 \times T^{\delta }\). It remains to handle the second term. From the definition of \(W^c\), if \(V = \{1,\ldots ,n\}\) and \(2^{V}\) denotes its power set, we need only consider those sets \(X \in 2^{V}\) with \(|X| \le n/\mathrm {e}\),

Before proceeding, we first need to establish an estimate on the random variable \(\mathrm {edge}(X,Y)\) when \((X,Y) \in V_{s}\):

Lemma 6.6

For \((X,Y) \in V_s\) let \(\mathrm {edge}(X,Y)\) denote the random variable corresponding to the number of edges from an Erdős–Rényi random graph \(\mathcal {G}(n,p)\) between \(X\) and \(Y\). Let \(\mu (X,Y) = \mathbb {E}(\mathrm {edge}(X,Y))\) and \(\mathcal {M}(X,Y) := \max \{|X|,|Y| \}\). Given any \(\alpha > 0\) let \(k_{0}(X,Y)\) denote the unique solution to

Then

Proof

From the union bound, it follows (with a slight abuse of notation) that

Using a one-sided concentration inequality (cf. [32]) for sums of indicator variables,

which is valid for any \(k > 1\), shows that

since \(k_{0} > 1\). It therefore follows that

The fact that \(f(x) := x\log (n\mathrm {e}/x)\) is increasing for \(1 \le x \le n\) then implies

Combining this with the previous estimate yields

as desired. \(\square \)
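For completeness, the monotonicity of \(f\) used in the proof above is a one-line derivative computation:

\[ f(x) = x\log\frac{n\mathrm{e}}{x} = x\,(1 + \log n - \log x), \qquad f'(x) = (1 + \log n - \log x) - 1 = \log\frac{n}{x} \ge 0 \quad \text{for } 1 \le x \le n. \]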

The definition of \(k_0(X,Y)\) and the fact \(\mu (X,Y) \le p|X||Y|\) then combine to yield the following corollary, which provides the basic estimate for the remaining contribution of \(W^c\) to the sum.

Corollary 6.7

With probability at least \(1-n^{-\alpha }\), the estimate

holds for all \(X,Y \in V_s\).

Estimating the second term in (56)

now proceeds by decomposing into the following cases:

On \(\mathrm {I}\) we have that

due to (57) as before. On \(\mathrm {II}_{\mathrm {A}}\) and \(\mathrm {II}_{\mathrm {B}}\) the bounded degree property implies that \(\mathrm {edge}(X_i,Y_j) \le c_{1}np|X_i|\) and \(\mathrm {edge}(X_i,Y_j) \le c_{1}np|Y_j|,\) respectively. Therefore

For fixed \(i\) let \(j_{\mathrm{max}}(i)\) denote the largest \(j\) so that \((i,j) \in \mathrm {II}_{\mathrm {A}}\). Then

due to (57) once again. By reversing the roles of \(i\) and \(j\), a similar argument shows

as well. For the remaining set \(\mathrm {III},\) let \(\mathrm {III} = \mathrm {III}^{i>j} \cup \mathrm {III}^{j>i}\) where the notation signifies \(|Y_j| \ge |X_i|\) on \(\mathrm {III}^{j>i}\) and vice-versa. We treat the first set and leave the second as an exercise since it follows analogously. Using the probabilistic estimate (61) as a guide, we decompose further into

For the first set the estimate (61) implies

due to the fact that \(2^{j}\sqrt{np} > 2^{i}\), the geometric series argument from (62) and the estimate (57). For the second set note that if \((i,j) \in \mathrm {III}\) then \((i,j) \notin \mathrm {I},\) so that the inequality

holds on \(\mathrm {III}\) by definition. This combines with the definition of \(\mathrm {III}^{j>i}_{\mathrm {B}}\) to imply that

which shows that \(1 \le \log \frac{\mathrm {edge}(X_i,Y_j)}{\mathrm {e}p |X_i||Y_j| }\) and that \(2^i < \sqrt{np}\) as well. The estimate (61) therefore implies

due to the fact that \(2^{i} > \sqrt{np},\) the geometric series argument (62) and the estimate (57), exactly as before. On the final set the facts that

imply that \(\mathrm {edge}(X_i,Y_j) \le \mathrm {e}^2 np |X_i|2^{-2j},\) whence

Arguing as before, the fact that \(2^i2^j > \sqrt{np}\) implies that each sum over \(j\) is bounded by two, so applying (57) one final time shows that the sum is \(O(\sqrt{np})\).

The following lemma summarizes the preceding arguments:

Lemma 6.8

Let \(c_{0} > 0\) and \(\alpha > 0\) be arbitrary constants and suppose that \(np > c_{0} \log n\). Then there exists a \(c>0\) independent of \(n\) so that with probability at least \(1 - n^{-\alpha }\) the estimate

\[ \Big| \sum_{(j,k) \in \Lambda^{c}} \langle \mathbf{x}_j, M_{jk} \mathbf{y}_k \rangle \Big| \le c\sqrt{np} \]

holds for all \((\mathbf {x},\mathbf {y}) \in T^{\delta }_0 \times T^{\delta }\).

Combining this with the estimate for \(S(\mathbf {x},\mathbf {y})\) (Lemma 6.4) and the reduction to the discrete set \(T^{\delta }_0 \times T^{\delta }\) (Lemma 6.3) yields the theorem.

Cite this article

von Brecht, J.H., Sudakov, B. & Bertozzi, A.L. Swarming on Random Graphs II. J Stat Phys 158, 699–734 (2015). https://doi.org/10.1007/s10955-014-0923-0
