Abstract

In this work, we discuss and compare three methods for the numerical approximation of constant- and variable-coefficient diffusion equations in both single and composite domains with possible discontinuity in the solution/flux at interfaces, considering (i) the Cut Finite Element Method; (ii) the Difference Potentials Method; and (iii) the summation-by-parts Finite Difference Method. First we give a brief introduction for each of the three methods. Next, we propose benchmark problems, and consider numerical tests—with respect to accuracy and convergence—for linear parabolic problems on a single domain, and continue with similar tests for linear parabolic problems on a composite domain (with the interface defined either explicitly or implicitly). Lastly, a comparative discussion of the methods and numerical results will be given.

Similar content being viewed by others

1 Introduction

Designing methods for the high-order accurate numerical approximation of partial differential equations (PDE) posed on composite domains with interfaces, or on irregular and geometrically complex domains, is crucial in the modeling and analysis of problems from science and engineering. Such problems may arise, for example, in materials science (models for the evolution of grain boundaries in polycrystalline materials), fluid dynamics (the simulation of homogeneous or multi-phase fluids), engineering (wave propagation in an irregular medium or a composite medium with different material properties), biology (models of blood flow or the cardiac action potential), etc. The analytic solutions of the underlying PDE may have non-smooth or even discontinuous features, particularly at material interfaces or at interfaces within a composite medium. Standard numerical techniques involving finite-difference approximations, finite-element approximation, etc., may fail to produce an accurate approximation near the interface, leading one to consider and develop new techniques.

There is extensive existing work addressing numerical approximation of PDE posed on composite domains with interfaces or irregular domains, for example, the boundary integral method [11, 56], difference potentials method [3, 6, 26, 27, 58, 65], immersed boundary method [30, 42, 61, 74], immersed interface method [2, 46, 47, 49, 69], ghost fluid method [31, 32, 50, 51], the matched interface and boundary method [82, 84,85,86], Cartesian grid embedded boundary method [19, 41, 57, 83], multigrid method for elliptic problems with discontinuous coefficients on an arbitrary interface [18], virtual node method [9, 39], Voronoi interface method [35, 36], the finite difference method [8, 10, 24, 75, 78, 79] and finite volume method [22, 34] based on mapped grids, or cut finite element method [13,14,15, 37, 38, 71, 76]. Indeed, there have been great advances in numerical methods for the approximation of PDE posed on composite domains with interfaces, or on irregular domains. However, it is still a challenge to design high-order accurate and computationally-efficient methods for PDE posed in these complicated geometries, especially for time-dependent problems, problems with variable coefficients, or problems with general boundary/interface conditions.

The aim of this work is to establish benchmark (test) problems for the numerical approximation of parabolic PDE defined in irregular or composite domains. The considered models (Sect. 2) arise in the study of mass or heat diffusion in single or composite materials, or as simplified models in other areas (e.g., biology, materials science, etc.). The formulated test problems (Sect. 4) are intended (a) to be suitable for comparison of high-order accurate numerical methods—and will be used as such in this study—and (b) to be useful in further research. Moreover, the proposed problems include a wide variety of possibilities relevant in applications, which any robust numerical method should resolve accurately, including constant diffusion; time-varying diffusion; high frequency oscillations in the analytical solution; large jumps in diffusion coefficients, solution, and/or flux; etc. For now, we will consider a simplified geometrical setting, with the intent of setting a “baseline” from which further research, or more involved comparisons, might be conducted. Therefore, in Sect. 2 we will introduce two circular geometries, which are defined either explicitly, or implicitly via a level set function.

In Sect. 3, we briefly introduce the numerical methods we will consider in this work, i.e., second- and fourth-order versions of (i) the Cut Finite Element Method (cut-FEM); (ii) the Difference Potentials Method (DPM), with Finite Difference approximation as the underlying discretization in the current work; and (iii) the summation-by-parts Finite Difference Method combined with the simultaneous approximation term technique (SBP–SAT–FD). These three methods are all modern numerical methods which may be designed for problems in irregular or composite domains, allowing for high-order accurate numerical approximation, even at points close to irregular interfaces or boundaries. We will apply each method to the formulated benchmark problems, and compare results. From the comparisons, we expect to learn what further developments of the methods at hand would be most important.

To resolve geometrical features of irregular domains, both cut-FEM and DPM use a Cartesian grid on top of the domain, which need not conform with boundaries or interfaces. These types of methods are often characterized as “immersed” or “embedded”. In the finite difference framework, embedded methods for parabolic problems are developed in [1, 23]. For comparison with cut-FEM and DPM, however, in this paper we use a finite difference method based on a conforming approach. The finite difference operators we use satisfy a summation-by-parts principle. Then, in combination with the SAT method to weakly impose boundary and interface conditions, an energy estimate of the semi-discretization can be derived to ensure stability. In addition, we use curvilinear grids and transfinite interpolation to resolve complex geometries.

For recent work on SBP–SAT–FD for wave equations in composite domains, see [10, 17, 75, 78], and the two review papers [21, 73]; for recent work in DPM for elliptic/parabolic problems in composite domains with interface defined explicitly, see [3,4,5,6, 26,27,28, 58, 59, 65]; and for recent work in cut-FEM, see [13,14,15, 37, 53, 71].

The paper is outlined as follows. In Sect. 2, we give brief overview of the continuous formulation of the parabolic problems in a single domain or a composite domain. In Sect. 3, we give introductions to the basics of the three proposed methods: cut-FEM, DPM, and SBP–SAT–FD. In Sect. 4, we formulate the numerical test problems. In Sect. 5, we present extensive numerical comparisons of errors and convergence rates, between the second- and fourth-order versions of each method. The comparisons include single domain problems with constant or time-dependent diffusivity; and interface problems with interface defined explicitly, or implicitly by a level set function. In Sect. 6, we give a comparative discussion of the three methods and the numerical results, together with a discussion on future research directions. Lastly, in Sect. 7, we give our concluding remarks.

2 Statement of Problem

In this section, we describe two diffusion problems, which will be the setting for our proposed benchmark (test) problems in Sect. 4. (Recall from Sect. 1 that these models arise, for example, in the study of mass or heat diffusion.) For brevity, in the following discussion, we denote \(u := u(x, y, t)\) and \(u_s := u_s(x, y, t)\), with \(s = 1, 2\).

2.1 The Single Domain Problem

First, we consider the linear parabolic PDE on a single domain \(\varOmega \) (e.g., Fig. 1a), with variable diffusion \(\lambda (t)\):

subject to initial and Dirichlet boundary conditions:

Here, the initial and boundary data \(u^0(x, y)\) and \(\psi (x, y, t)\), the diffusion coefficient \(\lambda (t)\), the forcing function f(x, y, t), and the final time T are known (given) data.

2.2 The Composite Domain Problem

Next, we consider the linear parabolic PDE on a composite domain \(\varOmega := \varOmega _1 \cup \varOmega _2\) (e.g., Fig. 1b), with constant diffusion coefficients \((\lambda _1, \lambda _2)\):

subject to initial conditions:

Dirichlet boundary conditions:

and interface/matching conditions:

In formula (9), \(\frac{\partial u_s}{\partial n}\), \(s = 1, 2\) denotes the normal derivative at the interface \(\varGamma \), i.e., \(\frac{\partial u_s}{\partial n} = \nabla u_s \cdot \mathbf {n}\), where \(\mathbf {n}\) is the outward unit normal vector at the interface \(\varGamma \). The initial, boundary, and interface data \(u_1^0(x, y)\), \(u_2^0(x, y)\), \(\psi (x, y, t)\), \(\mu _1(x, y, t)\), and \(\mu _2(x, y, t)\); the diffusion coefficients \((\lambda _1, \lambda _2)\); the forcing functions \(f_1(x,y,t)\) and \(f_2(x,y,t)\); and the final time T are some known (given) data.

Remark 1

We consider the circular geometries depicted in Fig. 1 as the geometrical setting for our proposed benchmark problems in this work. In applications (Sect. 1), other geometries will likely be considered, some much more complicated than Fig. 1. While our methods can handle more complicated geometry, this is (to the best of our knowledge) the first work looking to establish benchmarks—and compare numerical methods—for parabolic interface problems (3–9). As such, we think that the geometries in Fig. 1 are a good “baseline”—without all the added complexities that more complicated geometries might produce—from which further research, or more involved comparisons, might be done.

To be more specific, we aim to define a simple set of test problems that can be easily implemented and tested for any numerical scheme of interest. With circular domains, it suffices for us to compare/contrast performance of the numerical methods on a simple geometry with smooth boundary versus on a composite domain with fixed interface (explicit or implicit). The approximation of the solution to such composite-domain problems are already challenging for any numerical methods, since (i) the solution may fail to be smooth (or may be discontinuous) at the interface, and (ii) there may be discontinuous material coefficients (\(\lambda _1 \not = \lambda _2\)).

Remark 2

For both the single and composite domain problems, we could also consider other boundary conditions, e.g., a Neumann boundary condition as in [6, 13], etc.

3 Overview of Numerical Methods

3.1 Cut-FEM

In this section, we give a brief presentation of the cut-FEM method. For a more detailed presentation of cut-FEM, see, for example, [13, 14, 53].

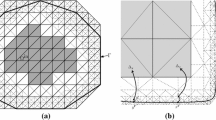

Let \(\varOmega _s\) be covered by a structured triangulation, \(\mathcal {T}_{s}\), so that each element \(T\in \mathcal {T}_{s}\) has some part inside of \(\varOmega _s\); see Fig. 2a and b. Here, \(s = 1,2\) is an index for the composite domain problem (3–9), which will be omitted when referring to the single domain problem (1, 2). (For the latter, note that \(\mathcal {T}\) covers \(\varOmega \).) Typically \(\mathcal {T}_1\) and \(\mathcal {T}_2\) would be created from a larger mesh by removing some of the cells. Further, let \(\mathcal {T}_\varGamma =\{T\in \mathcal {T}: T \cap \varGamma \ne \emptyset \}\) be the set of intersected elements; see Fig. 2c. In the following, we shall use \(\varGamma \) both for the immersed boundary of the single domain problem and for the immersed interface of the composite domain problem, in order to make the connection to the set \(\mathcal {T}_\varGamma \) clearer.

To construct the finite element spaces we use Lagrange elements with Gauss–Lobatto nodes of order p (\(Q_p\)-elements). Let \(V_h^s\) denote a continuous finite element space on \(\varOmega _s\), consisting of \(Q_p\)-elements on the mesh \(\mathcal {T}_s\):

For the single domain problem (1, 2) we solve for the solution \(u \in V_h\); while for the composite domain problem (3–9), we solve for the pair \(\{ {u}_{1} , {u}_{2} \}\in V_h^1 \times V_h^2\). For the latter problem, this means that the degrees of freedom are doubled over elements belonging to \(\mathcal {T}_\varGamma \).

We begin by stating the weak formulation for the single domain problem (1, 2). Let \((\cdot ,\cdot )_X\) and \(\left\langle \cdot ,\cdot \right\rangle _Y\) be the \(L_2\) scalar products taken over the two- and one-dimensional domains \(X\subset \mathbb {R}^2\) and \(Y\subset \mathbb {R}^{1}\), respectively. The present method is based on modifying the weak formulation by using Nitsche’s method [60] to enforce the boundary condition (2). By multiplying (1) with a test function \(v\in V_h\), and integrating by parts, we obtain:

Note that (2) is consistent with the following terms:

where \(\gamma _D\) is a constant, and \(h_T\) is the side length of the quadrilaterals in the triangulation. Now, adding (12, 13) to (11) gives the following weak form: Find \(u \in V_h\) such that

where

For \(\mathcal {T}_\varGamma \) (the elements intersected by \(\varGamma \)), note that one must integrate only over the part of the element that lies inside \(\varOmega \). A problem with this is that one cannot control how the intersections (cuts) between \(\varOmega \) and \(\mathcal {T}\) are made. Depending on how \(\varOmega \) is located with respect to the triangulation, some elements can have an arbitrarily small intersection with the domain—see, for example, Fig. 3a. If \(\varOmega \) is moved with respect to \(\mathcal {T}\) to make the cut arbitrarily small, then the condition numbers of the mass and stiffness matrices can become arbitrarily large.

To mitigate this issue, in this work we add a stabilizing term j—defined shortly in (19)—to the mass and stiffness matrices, so that their condition numbers are bounded, independently of how the domain \(\varOmega \) is located with respect to the triangulation \(\mathcal {T}\) [14, 53]. Adding stabilization to (14) results in the following weak form: Find \(u \in V_h\) such that

where \(\gamma _M\) and \(\gamma _A\) are scalar constants.

In order to state the definition of stabilization (19), denote by \(\mathcal {F}_{s}\) the set of faces, as seen in Fig. 3b and c. That is, \(\mathcal {F}_{s}\) is the set of all faces of the elements in \(\mathcal {T}_\varGamma \), excluding the boundary faces of \(\mathcal {T}_s\):

Then, the stabilization term is defined as:

where \([u] = u|_{F_+}-u|_{F_-}\) is the jump over a face, F; n refers to a normal of F; and \(\partial _n^k u\) denotes the k-th order normal derivative. The scaling with respect to k of the terms in (19) is based on how the stabilization was derived. In particular, the k!-factors come from the Taylor-expansion and the factor \(2k+1\) comes from integrating each term once.

We now consider the composite domain problem (3–9). To derive the weak formulation, one follows essentially the same steps as for the single domain problem, namely:

-

1.

For both (3) and (4), multiply the equation for \({u}_{s}\) with a test function \({v}_{s} \in V_h^s\), and then integrate by parts;

-

2.

Add terms consistent with the interface and boundary conditions; and

-

3.

Add stabilization terms \(j_1\) and \(j_2\) over \(\mathcal {F}_1\) and \(\mathcal {F}_2\), respectively.

This results in the following weak formulation for (3–9). Find \(u=\{ {u}_{1} , {u}_{2} \} \in V_h^1 \times V_h^2 \) such that:

where the bilinear forms M and A correspond to the stabilized mass and stiffness matrices:

\(L_\varOmega \) corresponds to the forcing function:

\(a_\varGamma \) and \(L_\varGamma \) consistently enforce the interface conditions (8, 9):

and the terms \(a_{\partial \varOmega }\) and \(L_{\partial \varOmega }\) enforce the boundary condition (7) along the outer boundary, \(\partial \varOmega \):

In (24–27), n denotes the outward pointing normal at either \(\varGamma \) or \(\partial \varOmega \) (depending on the domain of integration); \(\kappa _1 + \kappa _2 = 1\), so that \(\{ v \} = \kappa _1 v_1+ \kappa _2 v_2\) is a convex combination; and \(\gamma _\varGamma \), \(\kappa _1\), \(\kappa _2\) are chosen as in [13]:

The remaining parameters (appearing in Eqs. 21, 22, 26–28) are given by:

The scaling of \(\gamma _D\) with respect to p follows from an inverse inequality. When \(p=1\) these reduce to the same parameters as the ones used in [71], where \(\gamma _M\) was chosen based on numerical experiments on the condition number of the mass matrix. This also agrees with the choice of \(\gamma _A\) and \(\gamma _D\) in [14], where \(\gamma _A\) was investigated numerically.

In order to use cut-FEM, one needs a way to perform integration over the intersected elements \(\mathcal {T}_\varGamma \). For example, with the interface problem, on each element \(K \in \mathcal {T}_\varGamma \), we need a quadrature rule for the \(K\cap \varOmega _1\), \(K\cap \varOmega _2\) and \(K\cap \varGamma \). For the numerical tests in this work (Sect. 4), we represent the geometry by a level set function, and compute high-order accurate quadrature rules with the algorithm from [68].

Remark 3

Optimal (second-order) convergence was rigorously proven for cut-FEM applied to the Poisson problem in [14]. As far as we know, there is no rigorous proof of higher-order convergence for cut-FEM, though such a proof would likely be similar to the second-order case.

3.2 DPM

We continue in this section with a brief introduction to the Difference Potentials Method (DPM), which was originally proposed by Ryaben’kii (see [64,65,66], and see [29, 33] for papers in his honor). Our aim is to consider the numerical approximation of PDEs on arbitrary, smooth geometries (defined either explicitly or implicitly) using the DPM together with standard, finite-difference discretizations of (1) or (3, 4) on uniform, Cartesian grids, which need not conform with boundaries or interfaces. To this end, we work with high-order methods for interface problems based on Difference Potentials, which were originally developed in [67] and [3,4,5,6, 26, 28]. We also introduce new developments here for handling implicitly-defined geometries. (The reader can consult [65] for the general theory of the Difference Potentials Method.)

Broadly, the main idea of the DPM is to reduce uniquely solvable and well-posed boundary value problems in a domain \(\varOmega \) to pseudo-differential Boundary Equations with Projections (BEP) on the boundary of \(\varOmega \). First, we introduce a computationally simple auxiliary domain as part of the method. The original domain is embedded into the auxiliary domain, which is then discretized using a uniform Cartesian grid. Next, we define a Difference Potentials operator via the solution of a simple Auxiliary Problem (defined on the auxilairy domain), and construct the discrete, pseudo-differential Boundary Equations with Projections (BEP) at grid points near the continuous boundary or interface \(\varGamma \). (This set of grid points is called the discrete grid boundary.) Once constructed, the BEP are then solved together with the boundary/interface conditions to obtain the value of the solution at the discrete grid boundary. Lastly, using these reconstructed values of the solution at the discrete grid boundary, the approximation to the solution in the domain \(\varOmega \) is obtained through the discrete, generalized Green’s formula.

Mathematically, the DPM is a discrete analog of the method of Calderón’s potentials in the theory of partial differential equations. The DPM, however, does not require explicit knowledge of Green’s functions. Although we use an Auxiliary Problem (AP) discretized by finite differences, the DPM is not limited to this choice of spatial discretization. Indeed, numerical methods based on the idea of Difference Potentials can be designed with whichever choice of spatial discretization is most natural for the problem at hand (e.g., see [25]).

Practically, the main computational complexity of the DPM reduces to the required solutions of the AP, which can be done very efficiently using fast, standard \(\mathcal {O}(N \log N)\) solvers. Moreover, in general the DPM can be applied to problems with general boundary or interface conditions, with no change to the discretization of the PDE.

Let us now briefly introduce the DPM for the numerical approximation of parabolic interface models (3–9). First, we must introduce the point-sets that will be used throughout the DPM. (Note that the main construction of the method below applies to the single domain problem (1, 2), after omitting the index s and replacing interface conditions with boundary conditions; see [6].)

Let \(\varOmega _s\) (\(s=1,2\)) be embedded in a rectangular auxiliary domain \(\varOmega _{s}^0\). Introduce a uniform, Cartesian grid denoted \(M_s^0\) on \(\varOmega _s^0\), with grid-spacing \(h_s\). Let \(M_s^+ = M_s^0 \cap \varOmega _s\) denote the grid points inside each subdomain \(\varOmega _s\), and \(M_s^- = M_s^0 {\setminus } M_s^+\) the grid points outside each subdomain \(\varOmega _s\). Note that the auxiliary domains \(\varOmega _1^0\), \(\varOmega _2^0\) and auxiliary grids \(M_1^0\), \(M_2^0\) need not agree, and indeed may be selected completely independently, given considerations regarding accuracy, adaptivity, or efficiency.

Define a finite-difference stencil \(N_{j, k}^{s, \alpha }\), with \(\alpha = 5, 9\), to be the stencil of the standard five-point or a wide nine-point Laplacian, i.e.,

Next, with \(\alpha \) fixed, define the point-sets

The point-set \(N_s^+\) (\(N_s^-\), \(N_s^0\)) enlarges the point-set \(M_s^+\) (\(M_s^-\), \(M_s^0\)), by taking the union of finite-difference stencils at every point in \(M_s^+\) (\(M_s^-\), \(M_s^0\)). Therefore, \(N_s^+\) contains a “thin row” of points belonging to the complement of \(\varOmega _s\), so that \(N_s^+ \not \subset \varOmega _s\), even though \(M_s^+ \subset \varOmega _s\). (Likewise, \(N_s^- \not \subset \varOmega {\setminus } \varOmega _s\), even though \(M_s^- \subset \varOmega {\setminus } \varOmega _s\)).

Lastly, we now define the important point-set

which we call the discrete grid boundary. In words, \(\gamma _s\) is the set of grid points that straddle the continuous interface \(\varGamma \). (See Fig. 4 for an example of these point-sets, given a single elliptical domain \(\varOmega \).) Note that the point-sets \(M_s^+\), \(N_s^+\), and \(\gamma _s\) will be used throughout the Difference Potentials Method.

An example of the point-sets for the second-order Difference Potentials Method, applied to a single domain (s omitted for brevity), with a rotated ellipse \(\varOmega \) and a rectangular auxiliary domain \(\varOmega ^0\), showing (a) the physical grids \(M^+\) (solid dots) and \(N^+\) (open circles), with \(M^+\subset N^+\), and (b) the discrete grid boundary \(\gamma \) (open circles)

Here, we define the fully-discrete finite-difference discretization of (3, 4), and then define the Auxiliary Problem. Indeed, the discretization we consider is

where (i) \(L_{\Delta t, h}^s u_{s}^{i+1} := \lambda _s(t^{i+1}) \Delta _h u_{s}^{i+1} - \sigma u_{s}^{i+1}\), (ii) \(\Delta _h\) is either a five- or nine-point Laplacian in each subdomain, and (iii) \(\sigma \) and \(F_s^{i+1}\) follow from the choice of time- and spatial-discretizations. (Here, we have simplified notation slightly by assuming that \(h := h_1 = h_2\), which need not be the same in general.) For full details of the discretization, including the choice of BDF2 or BDF4 in the time discretization, we refer the reader to “Appendix 8.1”.

The choices of discretization (33) in each subdomain need not be the same. As in [3, 6], one could choose a second- and fourth-order discretization on \(M_1^+\) and \(M_2^+\), respectively, given considerations about accuracy, adaptivity, expected regularity of the analytical solution in each domain, etc.

Next, we define the discrete Auxiliary Problem, which plays a central role in the construction of the Difference Potentials operator, the resulting Boundary Equations with Projection at the discrete grid boundary, and in the numerical approximation of the solution via the discrete, generalized Green’s formula.

Definition 1

(Discrete Auxiliary Problem (AP)) At time \(t^{i+1}\), given the right-hand side grid function \(q_s^{i+1}\): \(M_s^0 \rightarrow \mathbb {R}\), the following difference Eqs. (34, 35) are defined as the discrete AP.

Remark 4

For a given right-hand side \(q_s^{i + 1}\), the solution of the discrete AP (34, 35) defines a discrete Green’s operator \(G_{\Delta t, h}^s q_s^{i + 1}\). The choice of boundary conditions (35) will affect the resulting grid function \(G_{\Delta t, h}^s q_s^{i + 1}\), and thus the Boundary Equations with Projection defined below. However, the choice of boundary conditions (35) in the AP will not affect the numerical approximation of (3–9), so long as the discrete AP is uniquely solvable and well-posed.

Let us denote by \(G_{\Delta t, h}^s F_s^{i + 1}\) the particular solution on \(N^+_s\) of the fully-discrete problem (33), defined by solving the AP (34, 35) with

and restricting the solution from \(N_s^0\) to \(N_s^+\).

Let us also introduce a linear space \(\mathbf {V}_{\gamma _s}\) of all grid functions denoted \(v_{\gamma _s}^{i + 1}\), which are defined on \(\gamma _s\) and extended by zero to the other points of \(N_s^0\). These grid functions are referred to as discrete densities on \(\gamma _s\).

Definition 2

(The Difference Potential of a density.) The Difference Potential of a given density \(v_{\gamma _s}^{i + 1}\) is the grid function \(P_{N_s^+}^{i + 1} v_{\gamma _s}^{i + 1}\) on \(N_s^+\), defined by solving the AP (34, 35) with

and restricting the solution from \(N_s^0\) to the point-set \(N_s^+\).

Note that \(P_{N_s^+}^{i + 1} : \mathbf {V}_{\gamma _s} \rightarrow N_s^+\) is a linear operator on the space \(\mathbf {V}_{\gamma _s}\) of densities. Moreover, the coefficients of \(P_{N_s^+}^{i + 1}\) can be computed by solving the AP (Definition 1) with the appropriate density \(v_{\gamma _s}^{i+1}\) defined at the points \((x_{j}, y_{k}) \in \gamma _s\).

Definition 3

(The Trace operator.) Given a grid function \(v_s^{i + 1}\), we denote by \({\text {Tr}}_{\gamma _s} [v_s^{i + 1}]\) the Trace (or Restriction) from \(N_s^+\) to \(\gamma _s\).

Moreover, for a given density \(v_{\gamma _s}^{i + 1}\), denote the trace of the Difference Potential of \(v_{\gamma _s}^{i + 1}\) by \(P_{\gamma _s} v_{\gamma _s}^{i + 1}\). In other words, \(P_{\gamma _s} v_{\gamma _s}^{i + 1} = {\text {Tr}}_{\gamma _s} [P_{N_s^+}^{i + 1} v_{\gamma _s}^{i + 1}]\).

Now we can state the central theorem of the Difference Potentials Method that will allow us to reformulate the finite-difference Eq. (33) on \(M_s^+\) (without imposing any boundary or interface conditions yet) into the equivalent Boundary Equations with Projections on \(\gamma _s\).

Theorem 1

(Boundary Equations with Projection (BEP)) At time-level \(t^{i+1}\), the discrete density \(u^{i+1}_{\gamma _s}\) (\(s=1,2\)) is the trace of some solution \(u^{i+1}_s\) on domain \(\varOmega _s\) to the Difference Eq. (33), i.e., \(u^{i+1}_{\gamma _s}:={{\text {Tr}}_{\gamma _s}}[u^{i+1}_s]\), if and only if the following BEP holds

with \({\text {Tr}}_{\gamma _s}[\cdot ]\) and \(P_{\gamma _s}\) defined in Definition 3.

Proof

See [65] for the general theory of DPM (including the proof for general elliptic PDE), or one of [3, 5, 6] for the proof in the case of parabolic interface problems. \(\square \)

Remark 5

A given density \(v_{\gamma _s}^{i+1}\) is the trace of some solution of the fully-discrete finite-difference Eq. (33) if and only if it is a solution of the BEP.

However, since boundary or interface conditions have not yet been imposed, the BEP will have infinitely many solutions \(u_{\gamma _s}^{i+1}\). As originally disucssed in [3,4,5,6, 26, 28], in this work we consider the following approach in order to find a unique solution of the BEP.

At each time level \(t^{i + 1}\), one can approximate the solution of (3–9) at the discrete grid boundary \(\gamma _s\), using the Cauchy data of (3–9) on the continuous interface \(\varGamma \), up to the desired second- or fourth-order accuracy. (By Cauchy data, we mean the trace of the solution of (3–9), together with the trace of its normal derivative, on \(\varGamma \).) Below, we will define an Extension Operator which will extend the Cauchy data of (3–9) from \(\varGamma \) to \(\gamma _s\).

As we will see, the Extension Operator in this work depends only on the given parabolic interface model. Moreover, we will use a finite-dimensional, spectral representation for the Cauchy data of (3–9) on \(\varGamma \). Then, we will use the Extension Operator, together with the BEP (38) and the interface conditions (8, 9), to obtain a linear system of equations for the coefficients of the finite-dimensional, spectral representation. Hence, the derived BEP will be solved for the unknown coefficients of the Cauchy data. Using this obtained Cauchy data, we will construct the approximation of (3–9) using the Extension Operator, together with the discrete, generalized Green’s formula.

Let us now briefly discuss the Extension Operator for the second-order numerical method, and refer the reader to “Appendix 8.2” for details (including details for the fourth-order numerical method). For points in the vicinity of \(\varGamma \), we define a coordinate system \((d, \vartheta )\), where \(\vartheta \) is arclength from some reference point, and d is the signed distance in the normal direction from the point to \(\varGamma \). Now, as a first step towards defining the Extension Operator, we define a new function

where n is the unit outward normal vector at \(\varGamma \). We choose \(p = 2\) for the second-order method (which we will discuss now) and \(p = 4\) for the fourth-order method (see “Appendix 8.2”).

As a next step for the second-order method (BDF2–DPM2), we define

where \(u_s^{i + 1} := u_s(x, y, t^{i + 1})\), \(\frac{\partial u_s^{i + 1}}{\partial n} := \frac{\partial u_s(x, y, t^{i + 1})}{\partial n}\), etc. As a last step, a straightforward sequence of calculations (see “Appendix 8.2”) shows that

where \(\kappa \) denotes the curvature of \(\varGamma \). Therefore, with \(v_s^{i+1}(d, \vartheta )\) defined by (39–41), the only unknown data at each time step \(t^{i + 1}\) are the unknown Dirichlet data \(u_s^{i + 1}\) and the unknown Neumann data \(\frac{\partial u_s^{i + 1}}{\partial n}\). The Extension Operator will incorporate the interface conditions (8, 9) when it is combined with the BEP (38), so that the only independent unknowns at each time step \(t^{i + 1}\) will be \(\left. \left( u_1^{i + 1}, \frac{\partial u_1^{i + 1}}{\partial n} \right) \right| _\varGamma \) or \(\left. \left( u_2^{i + 1}, \frac{\partial u_2^{i + 1}}{\partial n} \right) \right| _\varGamma \). (This is also true for the fourth-order numerical method—see Appendices 8.2 and 8.3.)

Now we are ready to define the Extension Operator that extends the Cauchy data of (3–9) from \(\varGamma \) to \(\gamma _s\).

Definition 4

(The Extension Operator.) Let \(v_s^{i + 1}(d, \vartheta )\) be defined by (39–41). Let \(\mathfrak {u}_{s, \varGamma }^{i + 1}\) denote the Cauchy data of (3–9) at \(t^{i + 1}\) on \(\varGamma \), i.e., \(\mathfrak {u}_{s, \varGamma }^{i + 1} = \left. \left( u_s^{i + 1}, \frac{\partial u_s^{i + 1}}{\partial n} \right) \right| _\varGamma \). The extension operator \({\text {Ex}}_s\) that extends \(\mathfrak {u}_{s, \varGamma }^{i + 1}\) from \(\varGamma \) to \(\gamma _s\) is

For a given point \((x_j, y_k) \in \gamma _s\), note that d is the signed distance between \((x_j, y_k)\) and its orthogonal projection on \(\varGamma \), while \(\vartheta \) is the arclength along \(\varGamma \) between a reference point and the orthogonal projection of \((x_j, y_k)\).

Next, we briefly discuss the finite-dimensional, spectral representation of Cauchy data \(\mathfrak {u}_{s, \varGamma }^{i + 1}\). Indeed, we wish to choose a basis \(\phi _{\nu }(\vartheta )\) on \(\varGamma \) (\(\nu = 1, 2, 3, \ldots \)) in order to accurately approximate the two components of the Cauchy data \(\mathfrak {u}_{s, \varGamma }^{i + 1}\). To be specific, whichever basis we choose, we require that

tends to zero as \(\mathcal {N}^0, \mathcal {N}^1 \rightarrow \infty \), for some sequence of real numbers \((c_{1, \nu }^{s, i+1})_{\nu =1}^{\mathcal {N}^0}\) and \((c_{2, \nu }^{s, i+1})_{\nu =1}^{\mathcal {N}^1}\). In other words, we require

Now let us discuss a choice of basis. In this work, recall that we consider interfaces \(\varGamma \) that are at least \(C^2(\varGamma )\) (due to the choice of smooth, circular geometries). Also, as we will see in Sect. 4.1, each function u considered in the test problems on a composite domain (TP–2A, TP–2B, TP–2C) is locally smooth, in the sense that \(u|_{\varOmega _1} = u_1\) and \(u|_{\varOmega _2} = u_2\) are smooth in \(\varOmega _1\) and \(\varOmega _2\), respectively. Moreover, each component of the Cauchy data \(\mathfrak {u}_{1, \varGamma }^{i + 1}\) and \(\mathfrak {u}_{2, \varGamma }^{i + 1}\) are smooth, periodic functions of arclength \(\vartheta \). (Note that \(\mathfrak {u}_{1, \varGamma }^{i + 1}\) and \(\mathfrak {u}_{2, \varGamma }^{i + 1}\) need not agree, and indeed do not—neither \(\mu _1(x, y, t)\) nor \(\mu _2(x, y, t)\) in (8, 9) are identically equal to zero, for any of our test problems on a composite domain.)

Therefore, in this work, we choose a standard trigonometric basis \(\phi _\nu (\vartheta )\), with

and \( K > 1 \). Moreover, at every time step \(t^{i + 1}\), we will discretize the Cauchy data \(\mathfrak {u}_{s, \varGamma }^{i + 1} = \left. \left( u_s^{i + 1}, \frac{\partial u_s^{i + 1}}{\partial n} \right) \right| _\varGamma \) using this basis. Therefore, we let

where \(\Phi ^0_{\nu } = (\phi _{\nu },0)\) and \(\Phi ^1_{\nu } = (0, \phi _{\nu })\) are the set of basis functions used to represent the Cauchy data on the interface \(\varGamma \).

Remark 6

It should be also possible to relax regularity assumption on the domain under consideration. For example, one can consider piecewise-smooth, locally-supported basis functions (defined on \(\varGamma \)) as the part of the Extension Operator. For example, [52] use this approach to design a high-order accurate numerical method for the Helmholtz equation, in a geometry with a reentrant corner. Furthermore, [80, 81] combine the DPM together with the XFEM, and design a DPM for linear elasticity in a non-Lipschitz domain (with a cut).

Next, in “Appendix 8.3”, we derive a linear system for the coefficients \((c_{1, \nu }^{s, i+1})_{\nu =1}^{\mathcal {N}^0}\) and \((c_{2, \nu }^{s, i+1})_{\nu =1}^{\mathcal {N}^1}\), by combining the interface conditions (8, 9), the BEP (38), the Extension Operator (39–41), and the spectral discretization (46). Then, the numerical approximation \(u_s^{i+1} \approx u_s(x_j, y_k, t^{i + 1})\) of (3–9) at all grid-points \((x_j, y_k) \in N_s^+\) follows directly from the discrete, generalized Green’s formula, which we state now.

Definition 5

(Discrete, generalized Green’s formula.) At each time step \(t^{i + 1}\), the numerical approximation \(u_s^{i+1} \approx u_s(x_j, y_k, t^{i + 1})|_{(x_j, y_k) \in N_s^+}\) of (3–9) is given by

Here, \(u_{\gamma _s}^{i + 1} = {\text {Ex}}_s \tilde{\mathfrak {u}}_{s, \varGamma }^{i + 1}\), and \(\tilde{\mathfrak {u}}_{s, \varGamma }^{i + 1}\) is constructed from (i) the solution of the BEP (see “Appendix 8.3”) and (ii) the spectral discretization (46). (Recall that \(P_{N_s^+}^{i + 1} u_{\gamma _s}^{i + 1}\) is the Difference Potential of the density \(u_{\gamma _s}^{i + 1}\), while \(G_{\Delta t, h}^s F_s^{i + 1}\) is the Particular Solution.)

In this work, we also propose a novel feature of DPM, extending the method originally developed in [67] and [3,4,5,6, 26, 28] to the composite domain problem (3–9) with implicitly-defined geometry. The primary difference between Difference Potentials Methods on explicitly-defined versus implicitly-defined composite domains is in the approximation of the interface \(\varGamma \), which must be done accurately and efficiently, in order to maintain the desired second- or fourth-order accuracy.

The main idea of DPM-based methods for implicitly-defined geometry is to seek an accurate and efficient explicit parameterization of the implicit boundary/interface. First, we represent the geometry implicitly via a level set function F(x, y) on \(M^0\). Then we construct a local interpolant \(\tilde{F}(x, y)\) of F(x, y) on a subset of \(M^0\) near the continuous interface \(\varGamma \). Next, we parameterize \(\varGamma \) by arc-length using numerical quadrature. With this parameterization, we (i) compute the Fourier series expansion from initial conditions for the Cauchy data \(\mathfrak {u}_{s, \varGamma }^{i + 1}\) on the implicit interface \(\varGamma \), and (ii) construct the extension operators (Definition 4) with \(p=2\) or \(p=4\).

Conjecture 1

(High-order accuracy of the DPM with implicit geometry) Due to the second- or fourth-order accuracy (in both space and time) of the underlying discretization (33), the extension operator (42) with \(p=2\) or \(p=4\), and the established error estimates and convergence results for the DPM for general linear elliptic boundary value problems on smooth domains (presented in [33, 62, 63, 65]), we expect second- and fourth-order accuracy in the maximum norm for the error in the computed solution (59 or 60) for both the single and composite domain parabolic problems.

Remark 7

Indeed, in the numerical results (Sect. 5) we see that the computed solution (47) at every time level \(t^{i+1}\) has accuracy \(\mathcal {O}(h^2 + \Delta t^2)\) for the second-order method, and \(\mathcal {O}(h^4 + \Delta t^4)\) for the fourth-order method, for both the single and composite domain problems, with explicit or implicit geometry. See [3, 6, 27, 67] for more details and numerical tests involving explicit (circular and elliptical) geometries.

Main Steps of the algorithm Let us summarize the main steps for the Difference Potentials Method.

-

Step 1 Introduce a computationally simple Auxiliary Domain \(\varOmega _s^0\) (\(s = 1,2\)) and formulate the Auxiliary Problem (AP; Definition 1).

-

Step 2 At each time step \(t^{i+1}\), compute the Particular Solution \(u_{s}^{i+1} = G^{i+1}_{\Delta t, h} F^{i+1}_{s}\), \((x_j,y_k)\in N_s^+\), using the AP with the right-hand side (36).

-

Step 3 Construct the matrix in the boundary Eq. (79) (discussed in “Appendix 8.3”), derived from the Boundary Equation with Projection (BEP) (38), via several solutions of the AP. (When the diffusion coefficients \(\lambda _s\) are constant, this is done once, as a pre-processing step before the first time step.)

-

Step 4 Solve boundary Eq. (79) and compute the approximation of the density \(u^{i+1}_{\gamma _s}\), by applying the Extension Operator (42) to the solution of (79).

-

Step 5 Construct the Difference Potentials \(P_{N_s^+\gamma _s} u^{i+1}_{\gamma _s}\) of the density \(u_{\gamma _s}^{i + 1}\), using the AP with the right-hand side (37).

-

Step 6 Compute the numerical approximation \(u_s^{i+1} \approx u_s(x_j, y_k, t^{i + 1})\) of the PDE (3–9) using the discrete, generalized Green’s formula (47).

3.3 SBP–SAT–FD

We continue in this section with a brief presentation of SBP–SAT–FD, for solving the parabolic problems presented in Sect. 2. For more detailed discussions of the SBP–SAT–FD method, we refer the reader to two review papers [21, 73].

The SBP–SAT–FD method was originally used on Cartesian grids. To resolve complex geometries, we consider a grid mapping approach by transfinite interpolation [43]. A smooth mapping requires that the physical domain is a quadrilateral, possibly with smooth, curved sides. If the physical domain does not have the desired shape, we then partition the physical domain into subdomains, so that each subdomain can be mapped smoothly to the reference domain. As an example, the single domain of equation (1, 2), shown in Figure 5a, is divided into five subdomains. The five subdomains consist of one square subdomain, and four identical quadrilateral subdomains (modulo rotation by \(\pi /2\)) with curved sides. Similarly, the composite domain of equation (3–9) is divided into nine subdomains, as shown in Fig. 5b. Suitable interface conditions are imposed to patch the subdomains together.

Although the side-length of the centered square is arbitrary (as long as the square is strictly inside the circle), its size and position have a significant impact on the quality of the curvilinear grid. In a high-quality mesh, the elements should not be skewed too much, and the sizes of the elements should be nearly uniform. In practice, it is usually difficult to know a priori the optimal way of domain division.

A Cartesian grid in the reference domain is mapped to a curvilinear grid in each subdomain. The grids are aligned with boundaries and interfaces, thus avoiding small-cut difficulties sometimes associated with embedded methods. In this paper, we only consider conforming grid interfaces, i.e., the grid points from two adjacent blocks match on the interface. For numerical treatment of non-conforming grid interfaces in the SBP–SAT–FD framework, see [44, 55].

A (a) circular domain (cf. Fig. 1), divided into five subdomains; and (b) composite domain, divided into nine subdomains

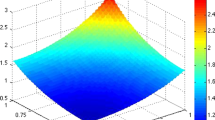

When a physical domain is mapped to a reference domain, the governing equation is transformed to the Cartesian coordinate in the reference domain. The transformed equation is usually in a more complicated form than the original equation. In general, a parabolic problem

in a physical domain will be transformed to

where \((\xi ,\eta )\) is the Cartesian coordinate in the unit square, and \(J(\xi ,\eta )\), \(\alpha (\xi ,\eta )\), \(\beta (\xi ,\eta )\), \(\gamma (\xi ,\eta )\) depend on the geometry of the physical domain and on the chosen mapping. In particular, we use transfinite interpolation for the grid mapping. In this case, the precise form of (49) and the derivation of the grid transformation are presented in Section 3.2 of [7]. Even though the original equation is in the simplest form with unit coefficients, the transformed equation has variable coefficients and mixed derivatives. Therefore, it is important to construct multi-block finite difference methods solving the transformed equation (49). Hence, we need two SBP operators, \(D_1\approx \partial /\partial x\) to approximate a first derivative, and \(D^{(b)}_2\approx \partial /\partial x(b(x)\partial /\partial x)\) to approximate a second derivative with variable coefficient, where \(b(x)>0\) is a known function. Below we discuss SBP properties, and start with the first derivative.

Consider two smooth functions u(x), v(x) on \(x\in [0,1]\). We discretize [0, 1] uniformly by N grid points, and denote the restriction of u(x), v(x) onto the grid by \(\mathbf {u},\mathbf {v}\), respectively. Integration by parts states:

The SBP operator \(D_1\) mimics integration by parts:

where H is symmetric positive definite—thus defining an inner product—and

In fact, H is also a quadrature [20]. It is easy to verify that (51) is equivalent to

which is the SBP property for the first derivative operator. At the grid points in the interior of the domain, standard, central, finite-difference stencils can be used in \(D_1\), and the weights of the standard, discrete \(L_2\)-norm are used in H. At a few points close to boundaries, special stencils and weights must be constructed in \(D_1\) and H, respectively, to satisfy (52).

The SBP operators \(D_1\) were first constructed in [45] and later revisited in [72]. The SBP norm H can be diagonal or non-diagonal. While non-diagonal norm SBP operators have a better accuracy property than diagonal norm SBP operators, when terms with variable coefficients are present in the equation, a stability proof is only possible with diagonal norm SBP operators. Therefore, we use diagonal norm SBP operators in this paper.

For a second derivative with variable coefficients, the SBP operators \(D^{(b)}_2\) were constructed in [54]. We remark that applying \(D_1\) twice also approximates a second derivative, but is less accurate and more computationally expensive than \(D^{(b)}_2\).

Due to the choice of centered difference stencils at interior grid points, the order of accuracy of the SBP operators is even at these points, and is often denoted by 2p. To fulfill the SBP property, at a few grid points near boundaries, the order of accuracy is reduced to p for diagonal norm operators. This detail notwithstanding, such a scheme is often referred to as \(2p^{th}\)-order accurate. In fact, for the second- and fourth-order SBP–SAT–FD schemes used in this paper to solve parabolic problems, we can expect a second- and fourth-order overall convergence rate, respectively [77].

An SBP operator only approximates a derivative. When imposing boundary and interface conditions, it is important that the SBP property is preserved and an energy estimate is obtained. For this reason, we consider the SAT method [16], where penalty terms are added to the semi-discretization, imposing the boundary and interface conditions weakly. This bears similarities with the Nitsche finite element method [60] and the discontinuous Galerkin method [40].

We note that in [75], SBP–SAT–FD methods were developed for the wave equation

with Dirichlet boundary conditions, Neumann boundary conditions, and interface conditions. Comparing Eq. (53) with (49), the only difference is that the wave equation has a second derivative in time, while the heat equation has a first derivative in time. The spatial derivatives of (53) and (49) are the same.

Assuming homogeneous boundary data for simplified notation, we write the SBP–SAT–FD discretization of (53) as

where Q is the spatial discretization operator including the boundary implementation. For the scheme developed in [75], stability is proved by the energy method by multiplying (54) by \(\mathbf {v}_t^TH_2\) from the left,

where \(H_2\) is a diagonal, positive-definite operator, obtained through a tensor product from the corresponding SBP norm, H, in one spatial dimension. It is shown in [75] that \(H_2Q\) is symmetric and negative semi-definite. Therefore, we can write (55) as

where the discrete energy, \(\mathbf {v}^T_tH_2\mathbf {v}_t - \mathbf {v}^TH_2Q\mathbf {v}\), for (53) is conserved.

If we use the same operator Q to discretize the heat equation (49) with the same boundary condition as the wave equation (53), then the scheme

is also stable. To see this, we multiply (56) by \(\mathbf {v}^TH_2\) from the left, and obtain

where \(\mathbf {v}^TH_2\mathbf {v}\) is the discrete energy for (49). In this paper, we use the spatial discretization operators developed in [75] to solve both the single (1, 2) and composite domain problems (3–9).

In [10], SBP–SAT–FD methods are discussed for the one-dimensional heat equation with constant coefficients, both in a single domain and a composite domain. In theory, these schemes can also be generalized to solve Eq. (49), but are different from the ones used in this paper.

4 Test Problems

In this section, we first list the test problems that we will consider (in Sect. 4.1), and then briefly motivate and discuss these choices (in Sect. 4.2). The tests we propose are “manufactured solutions”, in the sense that we state an exact solution u(x, y, t) or \((u_1(x, y, t), \, u_2(x, y, t))\) and a diffusion coefficient \(\lambda (t)\) or \((\lambda _1, \lambda _2)\). From (1, 2) (for the single domain problem) or (3–9) (for the composite domain problem) we compute the (i) right-hand side, (ii) initial conditions, (iii) boundary condition, and (iv) functions \((\mu _1(x, y, t), \, \mu _2(x, y, t))\) for the interface/matching conditions. Then, (i–iv), together with the diffusion coefficient, serve as the inputs for our numerical methods.

4.1 List of Test Problems

-

1.

Single-domain, with an explicitly-defined boundary for DPM and SBP–SAT–FD, or an implicitly-defined boundary for cut-FEM.

-

(a)

Constant diffusion (Test Problem 1A; TP–1A): Consider the PDE (1, 2), with \(\lambda (t) \equiv 1\), \(\varOmega = \{ (x, y) \in \mathbb {R}^2 : x^2 + y^2 \le 1 \}\), and the final time \(T=1.0\). Then, TP–1A (adapted from [6]), is given by

-

(b)

Time-varying diffusion (Test Problem 3A; TP–3A): Same as TP–1A, but with diffusion coefficient

-

(a)

-

2.

Composite-domain, with an explicitly-defined interface (for DPM and SBP–SAT–FD) or implicitly-defined interface (for cut-FEM and DPM). Consider the PDE (3–9), with \(\varOmega = [-2, 2] \times [-2, 2]\), \(\varOmega _2 = \{ (x, y) \in \mathbb {R}^2 : x^2 + y^2 \le 1 \}\), \(\varOmega _1 = \varOmega {\setminus } \varOmega _2\), \(\varGamma = \{ (x, y) \in \mathbb {R}^2 : x^2 + y^2 = 1 \}\), and the final time \(T = 1.0\).

-

(a)

(Test Problem 2A; TP–2A): A modified version of the test adapted from [6, 48]. Let \((\lambda _1, \lambda _2) = (10, 1)\), and

-

(b)

High-frequency oscillations (Test Problem 2B; TP–2B): A modified version of the test adapted from [6]. Let \((\lambda _1, \lambda _2) = (10, 1)\), and

-

(c)

Large contrast in diffusion coefficients, and large jumps in both solution and flux at interface (Test Problem 2C; TP–2C): Let \((\lambda _1, \lambda _2) = (1000, 1)\), and

-

(a)

4.2 Motivation of the Chosen Test Problems

Test Problem 1A (TP–1A) involves a high-degree polynomial, with total degree of 17. This is a rather straightforward test problem, which allows us to establish a good “baseline” with which to compare each method. The choice of high degree ensures that there will be no cancellation of local truncation error, so that we should see—at most—second- or fourth-order convergence for the given methods, barring some type of superconvergence. Next, (TP–3A) adds on (incrementally) the complication of time-varying diffusion.

Likewise, (TP–2A) offers a straightforward “baseline” with which to consider the interface problem: The test problem is piecewise-smooth, and the geometry is simplified (see Remark 1). However, there is a jump in both the analytical solution and its flux, which requires a well-designed numerical method to accurately approximate. Moreover, (TP–2A) was first proposed in [48] (see also [6]), and is a good comparison with the immersed interface method therein.

Then, (TP–2B) adds additional challenges onto (TP–2A) in the form of much higher-frequency oscillations; while (TP–2C) adds onto (TP–2A) in the form of both (i) large contrast in diffusion, and (ii) large jumps in the analytical solution and its flux.

5 Numerical Results

5.1 Time Discretization

The spatial discretization for each method is discussed in Sect. 3. For the time discretization, the backward differentiation formulas of second- and fourth-order (BDF2 and BDF4) are used for the second- and fourth-order methods, respectively. In each case, the time-step is given by

However, note that h in (58) bears different physical meanings for each method. Indeed, for cut-FEM, h is the average distance between the Gauss–Lobatto points; for DPM, h is the grid spacing in the uniform, Cartesian grid \(M^0\) (see the text prior to (33)); and for SBP–SAT–FD, h is the minimum grid spacing in the reference domain.

5.2 Measure for Comparison

Let \(\smash {u_{j, k}^i}\) denote the computed numerical approximation of u(x, y, t) at the grid-point \((x_j, y_k) \in \varOmega \) and time \(t^i = i \Delta t \in (0, T]\). For the three methods, we will compare the size of the maximum error in u at the grid points, with respect to the number of degrees of freedom (DOF). For the single domain problem (1, 2), the maximum error is computed as:

and for the composite domain problem (3–9) as:

5.3 Convergence Results

In the following tables and figures, we state the number of degrees of freedom in the grid, maximum error (59, 60 for the single- and composite-domain problems, respectively), and an estimate of the rate of convergence.

In Tables 1, 2, 3, 4 and 5, the estimate of rate of convergence is computed as follows. Let \((\text {DOF}_{n}, \, E_n)\) be given, with \(n = 1, 2, 3\) referring to the first, second, and third grids (from coarsest to finest). Then, for \(n = 2, 3\), compute the standard estimate

which is the estimated rate of convergence, denoted in Tables 1, 2, 3, 4 and 5 by “Rate”.

In Figs. 6, 7, 10, 11 and 12, the estimate of rate of convergence is computed differently. Computing a least-square linear regression for the data \((\log _{10}(\sqrt{\text {DOF}_n}), \, \log _{10}(E_n))\) gives a line with slope m, where m is the estimate of rate of convergence, reported in the legend on the right side of each figure.

Overall, we see in Tables 1, 2, 3, 4 and 5 that the error for second-order methods (denoted, for brevity, as CUT2, DPM2, SBP2) on the finest mesh is similar, or sometimes larger, than the error for fourth-order methods (denoted CUT4, DPM4, SBP4) on the coarsest mesh—this illustrates the effectiveness of higher-order methods, when high accuracy is important. Additionally, comparing the three methods together, the size of the errors for the single-domain problems (TP–1A, TP–3A) are similar, up to a constant factor; while for the composite-domain problems (TP–2A, TP–2B, TP–2C) we do see differences of one or two orders of magnitude, with the DPM having the smallest errors.

In Table 1 and Fig. 6, we observe that the measured rates of convergence for the numerical approximation of Test Problem 1A (TP–1A) are all \(\approx 2\) (for the second-order versions) or \(\approx 4\) (for the fourth-order versions), except for DPM4, which for this test problem is superconvergent, with fifth-order convergence. Such higher-than-expected convergence might occur due to several reasons—for example, (i) if the geometry is smooth; (ii) if the magnitude of the derivatives have fast decay (effectively reducing the local truncation error by a factor of h); or (iii) if there is cancellation of error due to symmetries in the geometry, or in the analytical solution.

Table 2 and Fig. 7 show the numerical results for (TP–3A). This test problem has the same manufactured solution as (TP–1A), but with a time-varying diffusion coefficient. Despite this added complexity, the numerical results are the same order of accuracy, and in many cases the errors are the same up to seven digits, when compared with the results for (TP–1A). This similarity in the numerical results demonstrates that the three methods can robustly handle time-varying diffusion coefficients.

The plots of spatial error at the final time \(T = 1.0\), shown in Fig. 8, are representative of other tests (not included in this text) on a single circular domain. The error in the cut-FEM solution presents largely at the boundary; the error in the DPM solution typically has smooth error, even for grid points very near \(\varGamma \); while the error in the SBP–SAT–FD solution is not smooth at interfaces introduced by the domain partitioning.

The plots of spatial error at the final time \(T =1.0\) for (TP–2A) are shown in Fig. 9. These plots are fairly representative of the other composite domain tests reported herein, and also of others test problems not included in this work. As in Fig. 8, the cut-FEM has its largest error at degrees of freedom on cut (intersected) elements; the DPM has piecewise smooth error, including even grid points at the boundary/interface; and the SBP–SAT–FD has its largest error at the interfaces between computational subdomains, with particularly pronounced error at the corners of \(\varOmega \), where the grid is most stretched.

Regarding the max-norm error in presented in Table 3 and Fig. 10, we see that the DPM has smaller max-norm by more than an order of magnitude. We also observe that the convergence rate of the fourth-order SBP–SAT–FD is only three. This suboptimal convergence is inline with the error plot in Fig. 9c, which shows that the error at the corners of the domain is significantly larger than elsewhere. In addition, the error is only non-smooth along the interfaces on the two diagonal lines of the domain. We have also measured the \(L_2\) error at the final time \(T=1.0\) (not reported in this work), and fourth-order convergence is obtained.

In Table 4 and Fig. 11, we see the numerical results for (TP–2B). The analytical solution is similar to (TP–2A), though much more oscillatory—this additional challenge is manifested by an increase in error by several orders of magnitude.

In Table 5 and Fig. 12, we see the numerical results for (TP–2C), which shows that our numerical methods are robust to large jumps in diffusion coefficients, the analytical solution, and/or the flux of the true solution. Also, observe that the errors from DPM2/DPM4 (explicit geometry) and DPM2-I/DPM4-I (implicit geometry) in Tables 3, 4 and 5 are almost identical, which demonstrates the robustness and flexibility of the DPM.

6 Discussion

There are many possible methods (Sect. 1) for the numerical approximation of PDE posed on irregular domains, or on composite domains with interfaces. In this work, we consider three such methods, designed for the high-order accurate numerical approximation of parabolic PDEs (1, 2 or 3–9). Each implementation was written, tested, and optimized by the authors most experienced with the method—the cut-Finite Element Method (cut-FEM) by G. Ludvigsson, S. Sticko, G. Kreiss; the Difference Potentials Method (DPM) by K. R. Steffen, Q. Xia, Y. Epshteyn; and the Finite Difference Method satisfying Summation-By-Parts, with a Simultaneous Approximation Term (SBP–SAT–FD) by S. Wang, G. Kreiss. Although we consider only one type of boundary/interface (a circle), we hope that the benchmark problems considered will be a valuable resource, and the numerical results a valuable comparison, for researchers interested in numerical methods for such problems.

The primary differences between the cut-FEM and the standard finite element method are the stabilization terms for near-boundary degrees of freedom, and the quadrature over cut (intersected) elements. Tuning the free parameters in the stabilization terms could mitigate the errors observed in Figs. 8, 9. (We have done some preliminary experiments suggesting that the errors decrease when tuning these parameters, but further investigations are required in order to guarantee robustness.) Given a level-set description of the geometry, there are robust algorithms for constructing the quadrature over cut elements. Together, these differences allow for an immersed (non-conforming) grid to be used. The theoretical base for cut-FEM is well established.

The DPM is based on the equivalence between the discrete system of Eq. (33) and the Boundary Equations with Projection (Theorem 1). The formulation outlined in Sect. 3.2 allows for an immersed (non-conforming) grid; fast \(\mathcal {O}(N \log N)\) algorithms, even for problems with general, smooth geometry; and reduces the size of the system to be solved at each time-step. The convergence theory is well-established for general, linear, elliptic boundary value problems, and we conjecture in Sect. 3.2 that this extends to the current setting. In this work, we have extended DPM to work with implicitly-defined geometries for the first time. This is a first step for solving problems where the interface moves with time.

In the finite difference framework (the SBP–SAT–FD method, in this work), the SBP property makes it possible to prove stability and convergence for high-order methods by an energy method. Combined with the SAT method to impose boundary and interface conditions, the SBP–SAT–FD method can be efficient to solve time-dependent PDE. Geometrical features are resolved by curvilinear mapping, which requires an explicit parameterization of boundaries and interfaces. High quality grid generation is important—our experiments, though not reported in this work, have shown that the error in the solution is sensitive to both the orthogonality of the grid and the grid stretching.

Similarities between the cut-FEM and the DPM (beyond the use of an immersed grid) include the thin layer of cut cells along the boundaries/interfaces (cut-FEM) and the discrete grid boundary \(\gamma \) (DPM); and the use of higher-order normal derivatives in the stabilization term (cut-FEM) and extension operator (in the Boundary Equations with Projection; DPM). A similarity between the cut-FEM and SBP–SAT–FD is the weak imposition of boundary conditions, via Nitsche’s method (cut-FEM) or the SAT method (SBP–SAT–FD). In this work, the DPM and the SBP–SAT–FD method both use an underlying finite-difference discretization, but the DPM is not restricted to this type of discretization.

Although both the cut-FEM and the DPM use higher-order normal derivatives in their treatment of the boundary/interface, the precise usage differs. For cut-FEM, it is the normal of the element interfaces cut by \(\varGamma \), while for DPM, it is the normal of the boundary/interface \(\varGamma \). Moreover, in the cut-FEM, stabilization terms (19) involving higher-order normal derivatives at the boundaries of cut-elements are added to the weak form of the PDE, to control the condition number of the mass and stiffness matrices, with a priori estimation of parameters to guarantee positive-definiteness of these matrices; while in the DPM, the Boundary Equations with Projection is combined with the Extension Operator (Definition 4), which incorporates higher-order normal derivatives at the boundary/interface \(\varGamma \).

Returning to Sect. 5.3, we see (in Tables 1, 2, 3, 4, 5 and Figs. 6, 7, 8, 9, 10, 11, 12) that the expected rate of convergence for the second- and fourth-order versions of DPM and cut-FEM is achieved, while the DPM has the smallest error constant across all tests. For the SBP–SAT–FD method, expected convergence rates are obtained in some experiments. A noticeable exception is Test Problem 2A, for which the fourth-order SBP–SAT–FD method only has a convergence rate of three.

From the error plot in Fig. 9c, we observe that the large error is localized at the four corners of the domain \(\varOmega \), where the curvilinear grid is non-orthogonal and is stretched the most (see Fig. 5b).

As seen in the error plots (Figs. 8, 9), the error for the cut-FEM and the SBP–SAT–FD has “spikes”, while for the DPM the error is smooth. A surprising observation from Fig. 9 is that conforming grids (on which the SBP–SAT–FD method is designed) do not necessarily produce more accurate solutions than immersed grids (on which the cut-FEM and the DPM are designed). Indeed, it is challenging to construct a high-quality curvilinear grid for the considered composite domain problem.

Future directions we hope to consider (in the context of new developments and also further comparisons) include: (i) parabolic problems with moving boundaries/interfaces, (ii) comparison of numerical methods for interface problems involving wave equations [12, 70, 71, 75, 78], (iii) extending our methods to consider PDEs in 3D, (iv) design of fast algorithms, and (v) design of adaptive versions of our methods.

Indeed, for (i), difficulties for the cut-FEM might be the costly construction of quadrature, while for DPM difficulties might be the accurate construction of extension operators. Regarding (iii), this has already been done for the cut-FEM and SBP–SAT–FD; while for the DPM, this is current work, with the main steps extending from 2D to 3D in a straightforward manner.

7 Conclusion

In this work, we propose a set of benchmark problems to test numerical methods for parabolic partial differential equations in irregular or composite domains, in the simplified geometric setting of Sect. 2, with the interface defined either explicitly or implicitly. Next, we compare and contrast three methods for the numerical approximation of such problems: the (i) cut-FEM; (ii) DPM; and (iii) SBP–SAT–FD. Brief introductions of the three numerical methods are given in Sect. 3. It is noteworthy that the DPM has, for the first time, been extended to problems with an implicitly-defined interface.

For the three methods, the numerical results in Sect. 5.3 illustrate the high-order accuracy. Similar errors (different by a constant factor) are observed at grid points away from the boundary/interface, while the observed errors near the boundary/interface vary depending upon the given method. Although we consider only test problems with circular boundary/interface, the ideas underlying the three methods can readily be extended to more general geometries.

In general, all three methods require an accurate and efficient resolution of the explicitly- or implicitly-defined irregular geometry: cut-FEM relies on accurate quadrature rules for cut elements, and a good choice of stabilization parameters; DPM relies on an accurate and efficient representation of Cauchy data using a good choice of basis functions; and SBP–SAT–FD relies on the smooth parametrization to generate a high-quality curvilinear grid.

References

Abarbanel, S., Ditkowski, A.: Asymptotically stable fourth-order accurate schemes for the diffusion equation on complex shapes. J. Comput. Phys. 133, 279–288 (1997). https://doi.org/10.1006/jcph.1997.5653

Adams, L., Li, Z.: The immersed interface/multigrid methods for interface problems. SIAM J. Sci. Comput. 24(2), 463–479 (2002). https://doi.org/10.1137/S1064827501389849

Albright, J.: Numerical Methods Based on Difference Potentials for Models with Material Interfaces. Ph.D. thesis, University of Utah (2016)

Albright, J., Epshteyn, Y., Medvinsky, M., Xia, Q.: High-order numerical schemes based on difference potentials for 2D elliptic problems with material interfaces. Appl. Numer. Math. 111, 64–91 (2017). https://doi.org/10.1016/j.apnum.2016.08.017

Albright, J., Epshteyn, Y., Steffen, K.R.: High-order accurate difference potentials methods for parabolic problems. Appl. Numer. Math. 93, 87–106 (2015). https://doi.org/10.1016/j.apnum.2014.08.002

Albright, J., Epshteyn, Y., Xia, Q.: High-order accurate methods based on difference potentials for 2D parabolic interface models. Commun. Math. Sci. 15(4), 985–1019 (2017). https://doi.org/10.4310/CMS.2017.v15.n4.a4

Almquist, M., Karasalo, I., Mattsson, K.: Atmospheric sound propagation over large-scale irregular terrain. J. Sci. Comput. 61, 369–397 (2014). https://doi.org/10.1007/s10915-014-9830-4

Appelö, D., Petersson, N.A.: A stable finite difference method for the elastic wave equation on complex geometries with free surfaces. Commun. Comput. Phys. 5(1), 84–107 (2009)

Bedrossian, J., Von Brecht, J.H., Zhu, S., Sifakis, E., Teran, J.M.: A second order virtual node method for elliptic problems with interfaces and irregular domains. J. Comput. Phys. 229(18), 6405–6426 (2010). https://doi.org/10.1016/j.jcp.2010.05.002

Berg, J., Nordström, J.: Spectral analysis of the continuous and discretized heat and advection equation on single and multiple domains. Appl. Numer. Math. 62, 1620–1638 (2012). https://doi.org/10.1016/j.apnum.2012.05.002

Bouchon, M., Campillo, M., Gaffet, S.: A boundary integral equation-discrete wavenumber representation method to study wave propagation in multilayered media having irregular interfaces. Geophysics 54(9), 1134–1140 (1989). https://doi.org/10.1190/1.1442748

Britt, S., Tsynkov, S., Turkel, E.: Numerical solution of the wave equation with variable wave speed on nonconforming domains by high-order difference potentials. J. Comput. Phys. (2017). https://doi.org/10.1016/j.jcp.2017.10.049

Burman, E., Claus, S., Hansbo, P., Larson, M.G., Massing, A.: CutFEM: Discretizing geometry and partial differential equations. Int. J. Numer. Methods Eng. 104(7), 472–501 (2015). https://doi.org/10.1002/nme.4823

Burman, E., Hansbo, P.: Fictitious domain finite element methods using cut elements: II. A stabilized Nitsche method. Appl. Numer. Math. 62(4), 328–341 (2012). https://doi.org/10.1016/j.apnum.2011.01.008

Burman, E., Hansbo, P., Larson, M.G., Zahedi, S.: Cut finite element methods for coupled bulksurface problems. Numer. Math. 133(2), 203–231 (2016). https://doi.org/10.1007/s00211-015-0744-3

Carpenter, M.H., Gottlieb, D., Abarbanel, S.: Time-stable boundary conditions for finite-difference schemes solving hyperbolic systems: methodology and application to high-order compact schemes. J. Comput. Phys. 111, 220–236 (1994). https://doi.org/10.1006/jcph.1994.1057

Carpenter, M.H., Nordström, J., Gottlieb, D.: Revisiting and extending interface penalties for multi-domain summation-by-parts operators. J. Sci. Comput. 45(1–3), 118–150 (2009). https://doi.org/10.1007/s10915-009-9301-5

Coco, A., Russo, G.: Second order multigrid methods for elliptic problems with discontinuous coefficients on an arbitrary interface, I: one dimensional problems. Numer. Math. Theory Methods Appl. 5(01), 19–42 (2012). https://doi.org/10.4208/nmtma.2011.m12si02

Crockett, R., Colella, P., Graves, D.T.: A Cartesian grid embedded boundary method for solving the Poisson and heat equations with discontinuous coefficients in three dimensions. J. Comput. Phys. 230(7), 2451–2469 (2011). https://doi.org/10.1016/j.jcp.2010.12.017

Del Rey Fernández, D.C., Boom, P.D., Zingg, D.W.: A generalized framework for nodal first derivative summation-by-parts operators. J. Comput. Phys. 266, 214–239 (2014). https://doi.org/10.1016/j.jcp.2014.01.038

Del Rey Fernández, D.C., Hicken, J.E., Zingg, D.W.: Review of summation-by-parts operators with simultaneous approximation terms for the numerical solution of partial differential equations. Comput. Fluids 95, 171–196 (2014). https://doi.org/10.1016/j.compfluid.2014.02.016

Demirdz̆ić, I., Muzaferija, S.: Finite volume method for stress analysis in complex domains. Int. J. Numer. Methods. Eng. 37(21), 3751–3766 (1994). https://doi.org/10.1002/nme.1620372110

Ditkowski, A., Harness, Y.: High-order embedded finite difference schemes for initial boundary value problems on time dependent irregular domains. J. Sci. Comput. 39, 394–440 (2009). https://doi.org/10.1007/s10915-009-9277-1

Duru, K., Virta, K.: Stable and high order accurate difference methods for the elastic wave equation in discontinuous media. J. Comput. Phys. 279, 37–62 (2014). https://doi.org/10.1016/j.jcp.2014.08.046

Epshteyn, Y.: Upwind-difference potentials method for Patlak–Keller–Segel chemotaxis model. J. Sci. Comput. 53(3), 689–713 (2012). https://doi.org/10.1007/s10915-012-9599-2

Epshteyn, Y.: Algorithms composition approach based on difference potentials method for parabolic problems. Commun. Math. Sci. 12(4), 723–755 (2014). https://doi.org/10.4310/cms.2014.v12.n4.a7

Epshteyn, Y., Medvinsky, M.: On the solution of the elliptic interface problems by difference potentials method. In: Kirby, R.M., Berzins, M., Hesthaven, J.S. (eds.) Spectral and High Order Methods for Partial Differential Equations ICOSAHOM 2014, pp. 197–205. Springer (2015). https://doi.org/10.1007/978-3-319-19800-2_16

Epshteyn, Y., Phippen, S.: High-order difference potentials methods for 1D elliptic type models. Appl. Numer. Math. 93, 69–86 (2015). https://doi.org/10.1016/j.apnum.2014.02.005

Epshteyn, Y., Sofronov, I., Tsynkov, S.: Professor V. S. Ryaben’kii. On the occasion of the 90-th birthday. Appl. Numer. Math. 93(Supplement C), 1–2 (2015). https://doi.org/10.1016/j.apnum.2015.02.001

Fadlun, E., Verzicco, R., Orlandi, P., Mohd-Yusof, J.: Combined immersed-boundary finite-difference methods for three-dimensional complex flow simulations. J. Comput. Phys. 161(1), 35–60 (2000). https://doi.org/10.1006/jcph.2000.6484

Fedkiw, R.P., Aslam, T., Merriman, B., Osher, S.: A non-oscillatory Eulerian approach to interfaces in multimaterial flows (the ghost fluid method). J. Comput. Phys. 152(2), 457–492 (1999). https://doi.org/10.1006/jcph.1999.6236

Gibou, F., Fedkiw, R.: A fourth order accurate discretization for the Laplace and heat equations on arbitrary domains, with applications to the Stefan problem. J. Comput. Phys. 202(2), 577–601 (2005). https://doi.org/10.1016/j.jcp.2004.07.018

Godunov, S.K., Zhukov, V.T., Lazarev, M.I., Sofronov, I.L., Turchaninov, V.I., Kholodov, A.S., Tsynkov, S.V., Chetverushkin, B.N., Epshteyn, Y.Y.: Viktor Solomonovich Ryaben’kii and his school (on his 90th birthday). Russ. Math. Surv. 70(6), 1183 (2015). https://doi.org/10.1070/RM2015v070n06ABEH004981

Gong, J., Xuan, L., Ming, P., Zhang, W.: An unstructured finite-volume method for transient heat conduction analysis of multilayer functionally graded materials with mixed grids. Numer. Heat Transfer, Part B 63(3), 222–247 (2013). https://doi.org/10.1080/10407790.2013.751251

Guittet, A., Lepilliez, M., Tanguy, S., Gibou, F.: Solving elliptic problems with discontinuities on irregular domains—the Voronoi interface method. J. Comput. Phys. 298, 747–765 (2015). https://doi.org/10.1016/j.jcp.2015.06.026

Guittet, A., Poignard, C., Gibou, F.: A Voronoi interface approach to cell aggregate electropermeabilization. J. Comput. Phys. 332, 143–159 (2017). https://doi.org/10.1016/j.jcp.2016.11.048

Hansbo, A., Hansbo, P.: An unfitted finite element method, based on Nitsche’s method, for elliptic interface problems. Comput. Methods Appl. Mech. Eng. 191(47), 5537–5552 (2002). https://doi.org/10.1016/S0045-7825(02)00524-8

Hansbo, P., Larson, M.G., Zahedi, S.: A cut finite element method for a Stokes interface problem. Appl. Numer. Math. 85, 90–114 (2014). https://doi.org/10.1016/j.apnum.2014.06.009

Hellrung, J.L., Wang, L., Sifakis, E., Teran, J.M.: A second order virtual node method for elliptic problems with interfaces and irregular domains in three dimensions. J. Comput. Phys. 231(4), 2015–2048 (2012). https://doi.org/10.1016/j.jcp.2011.11.023

Hesthaven, J.S., Warburton, T.: Nodal Discontinuous Galerkin Methods. Springer, Berlin (2008). https://doi.org/10.1007/978-0-387-72067-8

Johansen, H., Colella, P.: A Cartesian grid embedded boundary method for Poisson’s equation on irregular domains. J. Comput. Phys. 147(1), 60–85 (1998). https://doi.org/10.1006/jcph.1998.5965

Kim, J., Kim, D., Choi, H.: An immersed-boundary finite-volume method for simulations of flow in complex geometries. J. Comput. Phys. 171(1), 132–150 (2001). https://doi.org/10.1006/jcph.2001.6778

Knupp, P., Steinberg, S.: Fundamentals of Grid Generation. CRC Press, Boca Raton (1993)

Kozdon, J.E., Wilcox, L.C.: Stable coupling of nonconforming, high-order finite difference methods. SIAM J. Sci. Comput. 38, A923–A952 (2016). https://doi.org/10.1137/15M1022823

Kreiss, H.O., Scherer, G.: Finite element and finite difference methods for hyperbolic partial differential equations. In: Mathematical Aspects of Finite Elements in Partial Differential Equations, Symposium Proceedings pp. 195–212 (1974). https://doi.org/10.1016/B978-0-12-208350-1.50012-1

Leveque, R.J., Li, Z.: The immersed interface method for elliptic equations with discontinuous coefficients and singular sources. SIAM J. Numer. Anal. 31(4), 1019–1044 (1994). https://doi.org/10.1137/0731054

LeVeque, R.J., Li, Z.: Immersed interface methods for Stokes flow with elastic boundaries or surface tension. SIAM J. Sci. Comput. 18(3), 709–735 (1997). https://doi.org/10.1137/S1064827595282532

Li, Z., Ito, K.: The immersed interface method: numerical solutions of PDEs involving interfaces and irregular domains. Front. Appl. Math. Soc. Ind. Appl. Math. (2006). https://doi.org/10.1137/1.9780898717464

Linnick, M.N., Fasel, H.F.: A high-order immersed interface method for simulating unsteady incompressible flows on irregular domains. J. Comput. Phys. 204(1), 157–192 (2005). https://doi.org/10.1016/j.jcp.2004.09.017

Liu, T., Khoo, B., Yeo, K.: Ghost fluid method for strong shock impacting on material interface. J. Comput. Phys. 190(2), 651–681 (2003). https://doi.org/10.1016/S0021-9991(03)00301-2

Liu, X.D., Sideris, T.: Convergence of the ghost fluid method for elliptic equations with interfaces. Math. Comput. 72(244), 1731–1746 (2003). https://doi.org/10.1090/S0025-5718-03-01525-4

Magura, S., Petropavlovsky, S., Tsynkov, S., Turkel, E.: High-order numerical solution of the Helmholtz equation for domains with reentrant corners. Appl. Numer. Math. 118, 87–116 (2017). https://doi.org/10.1016/j.apnum.2017.02.013

Massing, A., Larson, M.G., Logg, A., Rognes, M.E.: A stabilized Nitsche fictitious domain method for the Stokes problem. J. Sci. Comput. 61(3), 604–628 (2014). https://doi.org/10.1007/s10915-014-9838-9

Mattsson, K.: Summation by parts operators for finite difference approximations of second-derivatives with variable coefficient. J. Sci. Comput. 51, 650–682 (2012). https://doi.org/10.1007/s10915-011-9525-z

Mattsson, K., Carpenter, M.H.: Stable and accurate interpolation operators for high-order multiblock finite difference methods. SIAM J. Sci. Comput. 32, 2298–2320 (2010). https://doi.org/10.1137/090750068

Mayo, A.: The fast solution of Poisson’s and the biharmonic equations on irregular regions. SIAM J. Sci. Comput. 21(2), 285–299 (1984). https://doi.org/10.1137/0721021

McCorquodale, P., Colella, P., Johansen, H.: A Cartesian grid embedded boundary method for the heat equation on irregular domains. J. Comput. Phys. 173(2), 620–635 (2001). https://doi.org/10.1006/jcph.2001.6900

Medvinsky, M., Tsynkov, S., Turkel, E.: The method of difference potentials for the Helmholtz equation using compact high order schemes. J. Sci. Comput. 53(1), 150–193 (2012). https://doi.org/10.1007/s10915-012-9602-y

Medvinsky, M., Tsynkov, S., Turkel, E.: Solving the Helmholtz equation for general smooth geometry using simple grids. Wave Motion 62, 75–97 (2016). https://doi.org/10.1016/j.wavemoti.2015.12.004

Nitsche, J.: Über ein variationsprinzip zur lösung von Dirichlet-problemen bei verwendung von teilrämen, die keinen randbedingungen unterworfen sind. Abhandlungen aus dem mathematischen Seminar der Universität Hamburg 36(9), 9–15 (1971). https://doi.org/10.1007/BF02995904