Abstract

Generating replicable and empirically valid models of human decision-making is crucial for the scientific accuracy and reproducibility of agent-based models. A two-fold challenge in developing models of decision-making is a lack of high resolution and high quality behavioral data and the need for more transparent means of translating these data into models. A common and largely successful approach to modeling is hand-crafting agent decision heuristics from qualitative field interviews. This empirically-based, qualitative approach successfully incorporates contextual decision making, heterogeneous preferences, and decision strategies. However, it is labor intensive and often leads to models that are hard to replicate, thereby limiting the scale and scope over which such methods can be usefully applied. A potential solution to these problems is provided by new approaches in natural language processing, which can use textual sources ranging from field interview transcripts to unstructured data from the web to capture and represent human cognition. Here we use word embeddings, a vector-based representation of language, to create agents that reason using similarity comparison. This approach proves to be effective at mirroring theoretical expectations for human decision biases across a range of natural language decision-making tasks. We provide a proof-of-concept agent-based model that illustrates how the agents we create can be readily deployed to study cultural diffusion. The agent-based model replicates previously found results with the added benefit of qualitative interpretability. The agent architecture we propose is able to mirror human likelihood assessments from natural language and offers a new way to model agent cognitive processes for a broad array of agent-based modeling use cases.


1 Introduction

Agent-based models (ABM) have become a prominent tool to understand the complex dynamics of human and environment interactions related to global sustainability issues [1]. This approach enables researchers to consider feedbacks between heterogeneous agent decision processes and locally dynamic environments, and to formally consider the diverse human factors that drive human behavior and global change [2, 3]. Agent-based models have been applied to the study of land use and land cover change [4], common pool resource management [5], agricultural diffusion of innovations [6], and many other human-environment phenomena that impact long-term global sustainability [7]. Recent reviews have found that the ABM community has an opportunity to leverage other literatures on human decision-making, which could significantly improve model performance, validation, and generalizability [3, 8]. In order to take advantage of the breadth of decision models from other disciplines, the ABM community currently faces the two-fold challenge of 1) gathering high resolution and high quality behavioral data for training and validation, and 2) identifying transparent and valid means of translating these data into theoretically sound decision models.

Agent-based modeling has often been used as a tool to synthesize diverse sets of empirical data [9] ranging from qualitative interviews to streams of information from mobile technologies [10]. Traditionally, ABM have been understood as a way to scale-up case-based data and synthesize results into more generalizable findings [11]. Ethnographic and field interviews can be transcribed and then analyzed qualitatively to generate agent decision heuristics, which are “rules-of-thumb” that agents use to make decisions [12]. These can come in the form of extensive sets of if-then statements or hand-tuned multiple criteria utility functions. In this way, a mixture of qualitative and quantitative data can be used to parameterize theoretical models of human decision-making [11]. These approaches have generated significant advances in ABM and our understanding of complex social systems.

Qualitative data has served a critical role in ABM and the decision sciences more broadly because of its ability to help researchers understand the nuanced and contextual aspects of decision-making [12]. Often, researchers have engaged in in-depth case studies using qualitative interviews with individuals, households, or other decision-making units in order to capture the various frames taken and heuristics used to address complex decision problems. In the context of participatory research, qualitative data sources gathered iteratively from stakeholders throughout the modeling process have formed the primary data input for ABM. Such an iterative, participatory, and language-based process can have dramatic effects on stakeholders’ understanding of the system under study, and thus can play a fundamental role in addressing complex sustainability issues [13].

With the rise of mobile and online technologies, new sources of data are emerging that create a challenge for hand-tuned approaches for generating models of human agents [10]. The volume of data generated by new mobile and online technologies can make it impossible for a human analyst to manage without computer assistance. This creates a new challenge for existing approaches to creating agents that mirror human choices in ABM. While quantitative approaches have been successful in analyzing data such as call logs or email metadata, a significant portion of these new data sources are qualitative and unstructured including online blogs, social media messages, and forums. Without the nuanced and contextual information that qualitative data often provides, overall model performance may be limited.

One potential way forward with qualitative data in ABM is to leverage new approaches from natural language processing to model human cognition. These approaches can help resolve some of the challenges related to the labor intensive nature of translating natural language data sources into agent decision heuristics - arguably, a primary reason that modelers currently use a narrow number of approaches to human behavior in ABM [14]. Unlike hand coding approaches, natural language processing tools are applicable across many scales, capture many of the nuances associated with qualitative research programs including the heuristics approach, and are readily reproducible. What is currently lacking is an explicit connection between these approaches and agent-based modeling.

In this paper, we provide an example of how word embeddings, a natural language processing approach, can be used in combination with agent-based modeling to rapidly generate agents from natural language data. Word embeddings are vector-based representations of words based on word co-occurrence statistics [15]. Similar to many other tasks in natural language processing, we use word embeddings as input features for a downstream language processing task [16], namely agent decision-making in an ABM. After reviewing the relevant literature, we present a new agent architecture that uses word embeddings and natural language text. We provide validation of the agent model across 18 natural language prompts relevant to the domain of human-environment interactions to test for match to theoretical expectation. Finally, we provide a proof-of-concept ABM to illustrate the agents’ performance.

2 Background

2.1 Vector-based representations of agents

Alongside fields, objects, time, and relations, agents play a critical role in modeling individual-level processes in geographic information science (GISci) [17]. Agents have particularly played an important role in representing human processes in geographic information science [18], but building and parameterizing agents remains a challenge for the field [2]. While human decision-making has been represented many different ways as agents, ranging from using sets of if-then statements to utility maximization, a common approach has been to use vectors of integers or real numbers [4].

One of the classic ABMs is Epstein and Axtell’s [19] Sugarscape, which represents agent culture as a binary vector in eight dimensions and builds on earlier work in cultural evolution that relied on agent ‘tags’ by which agents could identify one another. In this model, agents were either of the culture red or blue based upon whether or not the summed vector was above an integer threshold. Homophily (in other words, the degree to which agents were attracted to similar agents) was determined by simple similarity comparison between vectors (namely, Hamming distance). What was surprising about such representations was that, even in such a highly simplified and abstracted form, rich behaviors emerged that qualitatively resembled observed cultural phenomena.
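A minimal sketch of this tag-based representation is given below. It is not the original Sugarscape code: the function names, the threshold value, and the choice of which colour lies above the threshold are assumptions made for illustration, and only the eight-dimensional binary vector and the Hamming distance come from the description above.

```python
import numpy as np

def culture_group(tags, threshold):
    """Label an agent by comparing its summed binary tags to a threshold.

    Assumption: sums above the threshold are labelled 'red', others 'blue'.
    """
    return "red" if int(np.sum(tags)) > threshold else "blue"

def hamming_distance(a, b):
    """Count the positions at which two equal-length tag vectors differ."""
    a, b = np.asarray(a), np.asarray(b)
    if a.shape != b.shape:
        raise ValueError("tag vectors must have the same length")
    return int(np.sum(a != b))

# Two illustrative agents with eight binary culture tags each.
agent_a = np.array([1, 1, 0, 1, 1, 0, 1, 0])
agent_b = np.array([1, 0, 0, 1, 0, 0, 1, 0])
THRESHOLD = 4  # assumed value, half the tag length

group_a = culture_group(agent_a, THRESHOLD)
group_b = culture_group(agent_b, THRESHOLD)
distance = hamming_distance(agent_a, agent_b)
print(group_a, group_b, distance)
```

A smaller Hamming distance means the two agents share more tags, which is the sense in which similarity between vectors can stand in for homophily.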

Following a similar approach, Axelrod [20] developed one of the foundational models of cultural dissemination. Here, culture was represented as a vector containing integers. The probability of agents interacting at a time step was a function of spatial proximity and the proportion of features that agents shared in common. More recently, research has extended the Axelrod model, and has augmented the vector-based approach using the tools of statistical physics. In this literature, agent culture is represented as either a discrete or continuous vector space [21, 22]. Such vector-based statistical models, albeit sophisticated mathematically from the perspective of many social scientists, have largely lacked empirical grounding and have not been evaluated for their cognitive realism [23]. Thus, while these highly abstracted approaches to modeling complex human cultural factors have had considerable theoretical usefulness, it still remains a substantial challenge to ground them in specific cases.
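The similarity-dependent interaction rule can be sketched as follows. The text above states only that interaction probability depends on the proportion of shared features; the trait-copying step in `interact` follows the usual textbook statement of the Axelrod model and should be read as an assumption here, as should all function names. Spatial proximity is left out, so the two vectors are assumed to belong to neighbouring agents.

```python
import numpy as np

def shared_feature_fraction(a, b):
    """Proportion of cultural features on which two agents hold the same trait."""
    a, b = np.asarray(a), np.asarray(b)
    return float(np.mean(a == b))

def interact(a, b, rng):
    """Return agent a's culture after one possible interaction with neighbour b.

    With probability equal to the shared-feature fraction, a copies b's trait
    on one randomly chosen feature where they differ (assumed copying rule).
    """
    a = np.array(a, copy=True)
    b = np.asarray(b)
    differing = np.flatnonzero(a != b)
    if differing.size > 0 and rng.random() < shared_feature_fraction(a, b):
        k = rng.choice(differing)
        a[k] = b[k]
    return a

rng = np.random.default_rng(0)
agent = np.array([1, 2, 3, 4, 5])      # five features, integer-coded traits
neighbour = np.array([1, 2, 3, 0, 0])  # shares three of the five features
before = shared_feature_fraction(agent, neighbour)
after = shared_feature_fraction(interact(agent, neighbour, rng), neighbour)
print(before, after)
```

Agents with no features in common never interact under this rule, and identical agents have nothing left to exchange.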

2.2 Word Embeddings: A way to ground ABMs in natural language

Word embeddings offer a potentially powerful way to advance vector-based representations of humans and more directly ground ABM in data. Word embeddings are vector-based representations of words (a type of vector space model, VSM) that capture critical aspects of semantic meaning [15]. In broad terms, a VSM is a mathematical representation of a text, be it a sentence or something longer, like a document, web page, or interview transcript. In natural language processing (NLP), VSMs in general and word embeddings in particular are an attempt to solve a representational problem: how can language, a highly nonlinear system, be functionally mapped into a linear system that is easily used by a computer while maintaining the semantic relationships between words as understood by the word users? While undoubtedly still a simplification compared to human interpretation of textual data, word embeddings provide a computationally tractable approach to simulate aspects of human decision-making in ABM [16].

A word embedding is one row in a vector space model representation of multiple words (Fig. 1). These models capture aspects of semantic meaning through distance measures between vectors, such as cosine similarity:

$$\text{similarity}(V_1, V_2) = \cos(\theta) = \frac{V_1 \cdot V_2}{\lVert V_1 \rVert \, \lVert V_2 \rVert} \tag{1}$$

where V1 and V2 are word vectors (i.e. embeddings) of an equal length. This means that if two words are close together in vector space, they have a more similar meaning. Semantic relationships are also maintained under certain linear algebra operations such as addition and subtraction. Word embeddings have been used in this way to model associative reasoning [24]; famously, vector addition and subtraction reproduce analogies such as king - man + woman = queen [25].
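The cosine similarity measure and the analogy arithmetic can be illustrated with a short NumPy sketch. The three-dimensional vectors below are invented for the example (real embeddings have tens to hundreds of dimensions and are learned from text), and the function and variable names are hypothetical.

```python
import numpy as np

def cosine_similarity(v1, v2):
    """Cosine of the angle between two equal-length vectors."""
    v1 = np.asarray(v1, dtype=float)
    v2 = np.asarray(v2, dtype=float)
    if v1.shape != v2.shape:
        raise ValueError("vectors must have the same length")
    norm_product = np.linalg.norm(v1) * np.linalg.norm(v2)
    if norm_product == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return float(np.dot(v1, v2) / norm_product)

# Invented toy vectors; axes can be read loosely as (royalty, male, female).
toy_vectors = {
    "king":   np.array([1.0, 1.0, 0.0]),
    "man":    np.array([0.0, 1.0, 0.0]),
    "woman":  np.array([0.0, 0.0, 1.0]),
    "queen":  np.array([1.0, 0.0, 1.0]),
    "prince": np.array([0.8, 1.0, 0.0]),
}

# king - man + woman, then look for the closest remaining word.
query = toy_vectors["king"] - toy_vectors["man"] + toy_vectors["woman"]
candidates = [w for w in toy_vectors if w not in ("king", "man", "woman")]
nearest = max(candidates, key=lambda w: cosine_similarity(query, toy_vectors[w]))
print(nearest)
```

With learned embeddings the result of the arithmetic is only approximately equal to the target word's vector, which is why the comparison is made by nearest neighbour rather than by exact equality.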

Intuitively, word embeddings are able to capture the related meanings of words because they represent a word by its context. For example, the words cow and chicken are more semantically similar than cow and motorcycle because they more often share similar contexts within language use such as “The ____ is in the pasture” and “I’m going to take the _____ to the meat market”. Although stylized examples, they illustrate the point that it’s more likely that cow and chicken would be observed in these contexts than motorcycle. It is this relationship - called the distributional hypothesis in linguistics [26] - that word embeddings capture in a vector that allow them to be useful for assessing similarity between words.

In addition to assessing word similarity, vectors can be crudely combined to create a representation of multiple words and phrases by averaging them together. Then, direct similarity comparisons can be made at the textual level (though more sophisticated approaches exist, for example Mikolov et al. [27]). For the purposes of ABM, word embeddings could be averaged across a phrase that an individual gave in response to a prompt to create a single embedding. This embedding would represent the meaning of that person’s language and could be compared to other vectors based on distance measures such as Eq. (1) [24]. Even when vectors have been averaged together, the closer together in semantic space they are, the more similar their meanings. This means that when the vectors are associated with individual people, they capture one interpretation of the meanings associated with the language produced by that person (Fig. 2). In this way, the meaning of the language used by different people can be directly compared through distance measures such as Eq. (1).
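As a rough sketch of this averaging step, the snippet below builds one vector per respondent from the words they used and compares respondents by cosine similarity. The two-dimensional vectors, the respondents, and all names are invented for illustration; words missing from the embedding table are simply skipped, which is an assumption rather than something stated in the text.

```python
import numpy as np

def phrase_embedding(tokens, embeddings):
    """Average the vectors of all tokens that have an embedding."""
    vectors = [embeddings[t] for t in tokens if t in embeddings]
    if not vectors:
        raise ValueError("none of the tokens have an embedding")
    return np.mean(vectors, axis=0)

def cosine_similarity(v1, v2):
    """Cosine of the angle between two non-zero vectors of equal length."""
    return float(np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2)))

# Invented 2-dimensional embeddings, for illustration only.
toy_embeddings = {
    "cow":        np.array([0.9, 0.1]),
    "chicken":    np.array([0.8, 0.2]),
    "pasture":    np.array([0.7, 0.3]),
    "motorcycle": np.array([0.1, 0.9]),
    "highway":    np.array([0.2, 0.8]),
}

person_a = phrase_embedding(["cow", "pasture"], toy_embeddings)
person_b = phrase_embedding(["chicken", "pasture"], toy_embeddings)
person_c = phrase_embedding(["motorcycle", "highway"], toy_embeddings)

sim_ab = cosine_similarity(person_a, person_b)
sim_ac = cosine_similarity(person_a, person_c)
print(sim_ab > sim_ac)
```

Because a plain mean ignores word order, "cow pasture" and "pasture cow" receive the same vector, which is the sense in which the combination is crude.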

2.3 Word embedding construction

Word embeddings are typically constructed using either deep learning or statistical natural language processing approaches, and are learned mathematical representations of language [15, 16]. Broadly, word embeddings are constructed by assessing the statistical co-occurrence of words in a set of texts (corpus). Thus, if words appear in similar contexts, i.e. the words may not occur together, but often share the same neighbors, then it is inferred that the words have similar meanings.

This conception of semantics has been called the distributional hypothesis [28]. Such an approach in part represents how the mind operates in parallel and through weighted connections [25]. Vector and matrix algebra are the principal tools to utilize word embeddings, and the technologies have become a common input into other downstream natural language processing tasks. While there are multiple approaches for constructing VSMs, they all rely on tabulating word co-occurrence within a moving window of some arbitrary size [15]. As detailed by Baroni et al. [29], two potential methods exist to generate VSMs from the underlying co-occurrence data, namely prediction-based or count-based approaches.

Prediction-based VSMs are created through a (semi)supervised learning process. The word2vec software has two prominent models that rely on a simple, single layer neural network architecture [25]. The algorithms work by predicting the target word within the moving window based on its context (continuous bag of words, CBOW) or by predicting the context based on the target word (Skip-gram) [25]. The CBOW and Skip-gram models represent two sides of the same coin with slightly different performance depending on the size of the training data set. Specifically, CBOW smooths the embeddings by treating contexts as linked observations whereas Skip-gram treats each instance as a separate observation. As a result, CBOW works better on smaller datasets and Skip-gram on larger. When compared directly to human performance, CBOW and Skip-gram achieve between 24 and 64% match with human behavior, depending on the specific task at hand. While this may seem low, they perform well as inputs to other downstream application tasks such as identifying formal names of people and places and tagging different parts of speech [30].
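The difference between the two training setups is easiest to see in the examples each one draws from the same moving window. The sketch below only generates those training examples and does not train a neural network; the function names and the window size are hypothetical choices, not part of the word2vec software.

```python
def window_contexts(tokens, window):
    """Yield (target, context words) for each position in a token list."""
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield target, left + right

def cbow_examples(tokens, window):
    """CBOW: the whole context jointly predicts the target (one example per position)."""
    return [(context, target)
            for target, context in window_contexts(tokens, window) if context]

def skipgram_examples(tokens, window):
    """Skip-gram: the target predicts each context word separately (one example per pair)."""
    return [(target, c)
            for target, context in window_contexts(tokens, window) for c in context]

tokens = ["the", "cow", "is", "in", "the", "pasture"]
cbow = cbow_examples(tokens, window=1)
skipgram = skipgram_examples(tokens, window=1)
print(len(cbow), len(skipgram))
```

CBOW produces one example per position with the context words grouped together, while Skip-gram produces one example per target and context pair, which matches the description of linked versus separate observations.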

Count-based VSMs are created by counting the raw co-occurrences of words within a moving window of a document. Using raw co-occurrence counts is most often not accurate because words with very low information content carry a larger than appropriate weight [29]. In order to improve the vector representations, vector transformations are used to reweight word co-occurrences. Often this is done in accord with information theory and through dimensionality reduction, which means condensing a vector from 20,000 or more dimensions (depending on the number of words in the texts) to a representation that has better performance with only 50 to 1000 dimensions. Major advancements have been made recently by making insightful transformations to the raw counts in the input space, leading to state-of-the-art results [31].
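A count-based pipeline of this kind can be sketched in three steps: count co-occurrences in a window, reweight the counts, and reduce the dimensionality. The text above does not name a specific reweighting or reduction method, so positive pointwise mutual information (PPMI) and a truncated singular value decomposition are used here as assumed stand-ins; the corpus, window size, and function names are likewise illustrative.

```python
import numpy as np

def cooccurrence_matrix(tokens, window):
    """Count how often each pair of words appears within `window` positions."""
    vocab = sorted(set(tokens))
    index = {w: i for i, w in enumerate(vocab)}
    counts = np.zeros((len(vocab), len(vocab)))
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                counts[index[target], index[tokens[j]]] += 1
    return vocab, counts

def ppmi(counts):
    """Reweight raw counts by positive pointwise mutual information."""
    total = counts.sum()
    row = counts.sum(axis=1, keepdims=True)
    col = counts.sum(axis=0, keepdims=True)
    expected = row * col / total
    with np.errstate(divide="ignore", invalid="ignore"):
        pmi = np.log(counts / expected)
    pmi[~np.isfinite(pmi)] = 0.0  # unseen pairs get zero weight
    return np.maximum(pmi, 0.0)

def reduce_dimensions(matrix, k):
    """Keep the k leading singular directions of the weighted matrix."""
    u, s, _ = np.linalg.svd(matrix, full_matrices=False)
    return u[:, :k] * s[:k]

tokens = "the cow is in the pasture the chicken is in the pasture".split()
vocab, counts = cooccurrence_matrix(tokens, window=2)
weights = ppmi(counts)
vectors = reduce_dimensions(weights, k=2)  # one 2-dimensional row per word
print(vocab, vectors.shape)
```

The reweighting step is what lowers the influence of very frequent, low-information words such as "the", whose raw counts would otherwise dominate every row.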

One of the most prominent approaches to generating word embeddings is a count-based model, the Global Vectors for Word Representation (GloVe) model [31]. This approach was developed through the realization that concepts become differentiable from one another once the ratio between co-occurrence probabilities is considered. For example, the probability of a context word k given ice, P(k | ice), or given steam, P(k | steam), is not highly differentiable when each probability is considered on its own. It is only once the ratio P(k | ice) / P(k | steam) is found that word attributes such as solid and gas become much greater or much less than 1, whereas words related to both ice and steam (such as water) or to neither remain close to 1, which subsequently allows for easy differentiation. Compared to human similarity assessments, alignment with human behavior ranges from ~50 to ~80% depending on the task.
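The ratio argument can be made concrete with a few lines of arithmetic. The probabilities below are round, made-up numbers chosen only to show the pattern; they are not the values reported for any real corpus, and the variable names are hypothetical.

```python
# Hypothetical co-occurrence probabilities P(k | ice) and P(k | steam).
p_given_ice = {"solid": 2e-4, "gas": 6e-5, "fashion": 2e-5}
p_given_steam = {"solid": 2e-5, "gas": 8e-4, "fashion": 2e-5}

# Ratio P(k | ice) / P(k | steam) for each context word k.
ratios = {k: p_given_ice[k] / p_given_steam[k] for k in p_given_ice}

for word, ratio in ratios.items():
    print(f"{word}: {ratio:.3f}")
```

All of the raw probabilities are small and hard to tell apart, but the ratio is far above 1 for a word tied to ice, far below 1 for a word tied to steam, and near 1 for an unrelated word.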

Depending on how the word embeddings are utilized within an agent, they may or may not account for significant modifiers such as “not.” This is due to the fact that word embeddings do not in-and-of-themselves handle the issue of compositionality, because they do not account for how the rules of grammar influence how smaller sections of text combine with larger sections of text to produce meaning. Tuning word embeddings to account for compositionality is an open area of research in deep learning and natural language processing research [32]. Because our objective is not to advance these fields, but instead translate existing methods for spatial agent-based modeling, we rely on this well-established approach for combining words. The agent architecture described below ignores grammar and does not account for compositionality. As a result, our approach should be understood as a first attempt at using word vectors and is not able to account for modifiers.

2.4 Word Embeddings and representing decision-making

In addition to their use in information retrieval, word embeddings have been used to understand and match multiple complex human processes. Across a diverse range of research fields from psychology to computer science, word embeddings have a proven track record at addressing complex representational challenges for studying human cognitive and social processes through language. Word embeddings have been successfully applied to contexts including language learning [33]; language test taking; reflecting psychological priming effects on decision-making [16]; capturing gender and racial biases [34]; understanding perceptions of political candidates in news media [35]; and matching human likelihood assessment patterns [24].

The primary reason that word embeddings generalize to multiple different domains is their ability to mirror human associative memory. Associative memory has been theorized to underlie many of the documented human decision biases, perhaps even forming a general cognitive mechanism by which different types of decision-making emerge [36]. In particular, associative memory is understood to cause the conjunction fallacy, which is the tendency for humans to judge two events occurring together as more likely than one of those events occurring on its own [37]. This tendency has been observed across a wide range of contexts ranging from decision under risk to personality judgement, and thus underlies many of the evaluative decisions human agents make in routine and high-stakes decisions that are of concern for ABM, which can be modeled using word embeddings.

Using pre-trained embeddings, Bhatia [24] was able to reproduce the conjunction fallacy across both classic behavioral experiments [37] and hundreds of crowdsourced decision tasks with similar structure, illustrating the generalizability of word embeddings to human cognition. To apply the pre-trained embeddings to these tasks, Bhatia assumed that the choice options could be aggregated as a weighted average to produce a phrase embedding. This technique draws on long-standing theories of information accumulation in decision science more generally [38]. This research suggests that word embeddings may form the basis of an easy-to-use tool to translate language into agent decision processes.

3 Data collection and analysis

To assess the ability of word embeddings to ground vector-based representations of humans in ABM, we built an agent architecture and validated its correspondence to associative memory theory, first on its own and then in a simple ABM of cultural diffusion. First, we describe the agent architecture in line with the expectations of the Modeling Human Behavior framework, which suggests that models of human behavior clearly define agent routines for perception, evaluation, state storage, decision-making, and behavior [2]. To assess the agent architecture’s performance, we compared its choices to those expected under the conjunction fallacy using a series of known and new natural language decision problems. Importantly, while our standard of comparison is the expectation of the conjunction fallacy, word embeddings are understood to mirror more general mechanisms of associative memory [24]. Second, we describe our ABM (see Supplemental Materials for Overview, Design, and Details + Decisions protocol [39]) and use multiple scenarios to illustrate the performance of the agent architecture in spatial simulations. The methods and results for the individual agent architecture and the full ABM are separated into two sections because we wanted to clearly validate the models across levels. We first validated the agent at the individual level in Section 4; then, building on the individual-level validation results, we validated the agent architecture in an ABM in Section 5. This multi-level, multi-pattern approach is considered more rigorous than single-level validation alone [40]. All models were built using Python 3.5 in Jupyter 1.0.0 with Numpy 1.14.0. Visualizations were created using Matplotlib 2.1.0. All code and data – except for the word embeddings files – can be found at https://github.com/runck014/2018-geoinformatica-submission.

4 Agent architecture validation

4.1 Approach

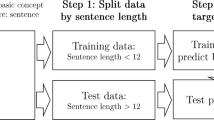

To test word embeddings as a basis for human decision making, we use algorithms and operations for querying, averaging, and similarity comparison that have been shown to be useful in human psychology experiments [24]. The model of choice works as follows: the agent perceives two streams of input data, namely a decision prompt and a set of decision options (Fig. 3). Each input consists of a string of natural language. The agent architecture cleans the raw natural language text by removing stopwords (e.g. “a”, “the”, etc.) and punctuation, and translates the string into an array of words (i.e. tokenization). Then, the agent intersects each word with the pre-trained word embeddings, which involves matching each word in the input string with the string associated with each word embedding. The embeddings of the matched words are averaged to give one vector for the prompt and one for each option. Finally, the agent computes the cosine similarity according to Eq. (1) between the prompt and each option and selects the option with the highest similarity.

In this case, the GloVe 6B Gigaword word embeddings were used [31]. These embeddings were created using the GloVe model described above, with the 2014 Wikipedia and the English Gigaword Fifth Edition corpora of texts used for training. Because word embeddings capture the semantic meanings present in the text they are trained on, these embeddings can be understood to capture broad-based understandings of the world and are not tuned for informal contexts, unlike embeddings trained on social media data.
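The choice procedure described in this section can be sketched end to end as below. This is an illustrative reconstruction and not the code in the linked repository: the stopword list, the lowercasing step, the function names, and the two-dimensional toy vectors are all assumptions, and a real run would load the pre-trained GloVe vectors in place of `toy_embeddings`.

```python
import string
import numpy as np

# Small illustrative stopword list (assumed; the paper's list is not given here).
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in"}

def tokenize(text):
    """Lowercase, strip punctuation, split on whitespace, drop stopwords."""
    table = str.maketrans("", "", string.punctuation)
    words = text.lower().translate(table).split()
    return [w for w in words if w not in STOPWORDS]

def embed(text, embeddings):
    """Average the embeddings of the words in `text` that are in the vocabulary."""
    vectors = [embeddings[w] for w in tokenize(text) if w in embeddings]
    if not vectors:
        raise ValueError("no words of the text are in the embedding vocabulary")
    return np.mean(vectors, axis=0)

def cosine_similarity(v1, v2):
    """Cosine of the angle between two non-zero vectors of equal length."""
    return float(np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2)))

def choose(prompt, options, embeddings):
    """Return the index of the option most similar to the prompt, plus all scores."""
    prompt_vec = embed(prompt, embeddings)
    scores = [cosine_similarity(prompt_vec, embed(o, embeddings)) for o in options]
    return int(np.argmax(scores)), scores

# Invented 2-dimensional vectors standing in for pre-trained embeddings.
toy_embeddings = {
    "cow":        np.array([0.9, 0.1]),
    "pasture":    np.array([0.7, 0.3]),
    "chicken":    np.array([0.8, 0.2]),
    "motorcycle": np.array([0.1, 0.9]),
}

choice, scores = choose("The cow is in the pasture.",
                        ["A chicken.", "A motorcycle."], toy_embeddings)
print(choice, scores)
```

Only the ranking of the scores matters for the choice, so any monotone rescaling of the similarities would lead to the same selected option.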

4.2 Validation data sources

To test this agent architecture, we developed 18 different natural language prompts of relevance to human-environment interactions and agriculture. These prompts were developed to cover a variety of aspects related to risk and social perception in food and agriculture for farmers and consumers. The main requirement for each prompt is that it needed to have an expected theoretical response according to the conjunction fallacy [37]. An axiom of probability theory states that the probability that one thing is true, P(A), will always be greater than or equal to the probability of that thing being true in conjunction with another thing, B, i.e. P(A) ≥ P(A & B) and P(B) ≥ P(A & B). Many models of human choice assume that humans follow the axioms of probability theory [12]. Yet, substantial experimental evidence has consistently shown that humans deviate from this theoretical expectation and instead select options that indicate P(A & B) > P(A) [36, 37]. This widely observed deviation from probability theory is known as the conjunction fallacy.
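For reference, the probability rule that the fallacy violates follows in one line from the definition of conditional probability. This is the standard textbook argument, assuming P(A) > 0 so that the conditional probability is defined; the subscripted symbol for a respondent's subjective judgement is notation introduced only for this note.

```latex
\begin{align}
P(A \wedge B) &= P(A)\, P(B \mid A) \\
              &\le P(A), \qquad \text{since } 0 \le P(B \mid A) \le 1 . \\
\intertext{A respondent commits the conjunction fallacy when their judged likelihoods satisfy}
P_{\text{judged}}(A \wedge B) &> P_{\text{judged}}(A) .
\end{align}
```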

In human experiments, the general experimental setup used to assess the conjunction fallacy is as follows. The researcher generates a series of questions that ask respondents to read a description of something, such as a person or an event. Then, the respondent is asked to select one of two or more options based on what seems to be most likely to them. In the case of two options, one of the options is specified as consisting of the likelihood of only one item and the other option is the conjunction of two items. For example, two of the most widely used questions to study the conjunction fallacy are those of the Linda and Bill problems, both of which are listed in Appendix Table 1. Here, human respondents consistently choose option 2 - the conjunction - instead of options 1 or 3.

The conjunction fallacy is important for multiple different domains modeled with ABM including risk perception and social perception. Because risk perceptions and social perceptions are thought to be in part influenced by likelihood perceptions [36], the conjunction fallacy is an important factor to consider in these domains. For example, likelihood assessments are important for understanding risk perceptions related to human health [41], environmental harm [42], and economic and policy risk [43]. Likelihood assessments are also important for social similarity assessments [44] that have been found to influence human choices related to a wide range of aspects of importance for food and agriculture including land and water management [45], perceptions of the need for regulation [46], and consumer food purchasing behavior [47].

In many cases, people do not exhibit the conjunction fallacy. This can be because they have been trained to overcome the bias or because the specific decision context does not lend itself to the structure of this bias [36]. Importantly though, the approach deployed here has been previously validated against other naturalistic decision-making contexts, where the conjunction fallacy would not be expected to occur but associative memory may play a role in determining choice patterns [24]. Thus, while theory establishes a baseline expectation, it does not establish an absolute gold standard for validation across every decision problem.

The validation prompts deployed here assess the agent architecture’s ability to model risk perceptions (Appendix Table 1; UID 3–9) and social perception (Appendix Table 1; UID 10–18) related to food and agriculture. Food and agriculture are common systems modeled using ABM, and likelihood assessments underlie perceptions that drive a diverse array of agents ranging from farmers to consumers [2, 4, 9]. This agent architecture is intended to eventually be applied to these domains because of the widespread importance of ABM and likelihood assessments in modeling these decision contexts. Inputs were given to the agent as presented in Appendix Table 1.

4.3 Validation results and discussion

The agent architecture matches theoretical expectation on both of the classic psychology questions (Appendix Table 1; UID 1 and 2) related to the conjunction fallacy. Similar to human performance, the proposed agent architecture violates the laws of probability and reproduces the conjunction fallacy. This result is similar to the results of Bhatia [24]. Of the 18 prompts, 50% matched the theoretical expectation (compared to a ~33% chance of random matching). This result is similar to what has been observed in studies related to the ability of vector space models to answer trivia questions or do general knowledge tests [16, 24]. In simple terms, agents using word-embeddings mimic a key feature of human decision-making.

This validation step suggests that language-based, data-driven approaches can produce decision patterns that reproduce key features of decision-making. At times, word embeddings match theoretical expectation, which in theory is because word embeddings mirror the underlying properties of associative memory that in turn drive likelihood perceptions and thus influence risk assessment [36]. In the following, we provide additional interpretation of the validation results. It is important to note that the absolute measure of cosine similarity is not meaningful for the decision-making agent. This is because likelihood perceptions, similar to utility and value perceptions, are considered to be on an ordinal scale, meaning the results can only be compared relative to one another. In cases where only small differences appear to exist between options, the size of the difference does not have any intrinsic meaning from a decision theoretic perspective. From an information retrieval perspective, it suggests that the meanings of all of the phrases were relatively close to the prompt. It is possible, given a stochastic implementation of the agent, that if the variance of choice were larger than the difference between options, reversal of choice may occur; this represents an extension to our current agent architecture and a possibility for future research.
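The stochastic extension mentioned above is not part of the agent architecture validated here. Purely as a hypothetical illustration of how choice reversal could arise, the sketch below turns similarity scores into choice probabilities with a softmax rule; the softmax form, the `temperature` parameter, the function names, and the example scores are all assumptions.

```python
import numpy as np

def softmax_choice_probabilities(similarities, temperature):
    """Map similarity scores to choice probabilities; higher temperature means noisier choice."""
    s = np.asarray(similarities, dtype=float)
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    weights = np.exp((s - s.max()) / temperature)  # subtract max for numerical stability
    return weights / weights.sum()

def stochastic_choice(similarities, temperature, rng):
    """Sample one option index according to the softmax probabilities."""
    p = softmax_choice_probabilities(similarities, temperature)
    return int(rng.choice(len(p), p=p))

similarities = [0.80, 0.78, 0.60]  # made-up cosine similarities for three options
noisy = softmax_choice_probabilities(similarities, temperature=0.05)
sharp = softmax_choice_probabilities(similarities, temperature=0.001)

rng = np.random.default_rng(42)
draws = [stochastic_choice(similarities, 0.05, rng) for _ in range(1000)]
share_second_option = draws.count(1) / len(draws)
print(noisy, sharp, share_second_option)
```

When the noise is large relative to the gap between the two best options, the second option is chosen a substantial share of the time; as the temperature shrinks, the rule approaches the deterministic highest-similarity choice used in the current architecture. Adding a constant to every score leaves the probabilities unchanged, in keeping with the point that absolute similarity values carry no meaning.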

On deeper inspection of the questions where the model does not match the theoretical expectation, it appears to be picking up on other factors relevant to ABM studies (Appendix Table 1; UID 6–8, 10–14, 17). On these questions, the agent chooses options that appear consistent with some stereotypical social descriptions. For example, for UID 8 – related to human health and the efficacy of applying insecticides to orchards – the agent selects the option that it is more likely to make people sick, as opposed to kill the pests and make people sick (the answer predicted by the conjunction fallacy). This presents an interesting result when compared to the agent response for UID 9, where the only difference in the prompt and options is substituting corn crop for apple orchard. In the case of corn crop, the agent matches the theoretical expectation predicted by the conjunction fallacy. This point suggests both a strength and a weakness of such data-driven approaches: they will reflect whatever biases exist in the training data, which in this case is a large corpus of text scraped from the internet.

In line with how data-driven approaches will reflect the inherent biases embedded in the training data, the agent similarly chooses options that are out of line with the conjunction fallacy on questions related to social perceptions. For UID 11 and 13, which had the prompts Urban Farm and Organic Farm, the agent more closely associates these phrases with option 1, The farmer values environmental sustainability, whereas for UID 12, Conventional Farm, the agent more closely associates the phrase with The farmer values money. Similar to the difference between UID 8 and 9, the agent does not choose in line with the conjunction fallacy, but appears to choose in line with certain sub-groups’ perceptions of different types of farms. This fits with the expectation that data-driven approaches will reflect the biases of the data.

Similarly, the model deviates from the conjunction fallacy when asked to associate descriptions of people’s consumption patterns with underlying identities and values (Appendix Table 1; UID 16–18). Here, our agent architecture associates meat consumption, driving large vehicles, and drinking soda with valuing convenience more than environmentalism, whereas a description of someone who hunts and fishes and eats kale is associated with environmentalism more than convenience. The person description in UID 16 – which includes outdoor-clothing line Patagonia, kale, tea drinking, and bike riding – causes a similar agent response. In considering the agent ordering of options, for UID 16, the agent selects option 2 – the person values convenience – and then option 3 – environmentalist – as the next most likely responses. Whether or not humans would choose similarly is an open question for future research.

In these cases where word embeddings do not match the theoretical expectation, they are possibly also reflecting the underlying properties of associative memory, though further research will be needed to unpack whether or not this is true. It may be that people learn patterns of association through their larger cultural and environmental context, which are subsequently captured in word usage patterns. These ideas, that language meaning and learning are a function of co-occurrence, have been described in different behavioral and cognitive theories of language, even though no consensus has yet emerged around how memory, learning, and decision-making interact to produce choice [16].

As noted above in the section “Word Embeddings and Representing Decision-making”, word embeddings offer a machine learning approach to modeling important facets of human cognition. Because the approach is data-driven, it will reflect the inherent biases that exist in the training data. These results suggest that, in order for the approach to match real-world cases, the training data will need to come from the target population being studied. The first round of experiments suggests that this approach allows the agent to develop realistic social perceptions of different types of farms and of how different consumption patterns signal human identity and values, both of which are important to using ABM to model land change and agriculture. Importantly, even when the agent fails to match the conjunction fallacy, it deviates from this expectation in a way that further behavioral research may reveal to mirror human choice patterns more closely. These results suggest that this approach to agent construction should be further explored as a way to generate agents that have a high level of empirical validity and are easily constructed using natural language. The value of word embeddings for spatial modeling is that, once deployed in a spatial simulation, they provide a way to begin to capture aspects of how cultural processes interact in specific locations to generate spatial patterns.

5 Simulation experiments with a proof of concept agent-based model

5.1 Methods

The results of the agent architecture validation suggested that an agent built on top of pre-constructed word embeddings has potential use for studying factors related to social comparison and cultural diffusion. To assess the performance of the agent architecture in spatial simulation, we deployed the agent in a variation of Axelrod’s [20] model of cultural diffusion, where social comparison determines agent interactions and cultural flow. Axelrod’s model sought to deal with the general theoretical challenge that, despite a general tendency for cultures to become more similar the more they interact, differences continue to exist in the world between individuals and groups. Here, we adapted the Axelrod model to illustrate the potential of this language-based agent architecture for ABM.

5.2 Model description

In the Axelrod model, each agent was a vector of n dimensions. Each dimension contained an integer and represented a specific type of cultural feature. For example, dimension 1 might hold the integer 5. This dimension could stand for a cultural feature such as language, dress, food, or religion, and the integer 5 represented the particular type of culture within that broader cultural category. Agents were immobile and placed randomly at a location on a 10 × 10 grid. For each model event, a target agent was selected at random to evaluate whether or not to interact with a random neighbor in its Moore neighborhood (i.e., all adjacent cells, including diagonals). The probability of interacting was proportional to the similarity of the target agent to the random neighbor. If the agents interacted, the target agent selected at random a cultural feature on which the agents differed and copied that feature, which mimics the real-world process of people from different cultures interacting and adopting different cultural practices. This process was simulated until the model converged.
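The model event described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the original implementation: the number of possible traits per feature (`N_TRAITS`), the bounded (non-wrapping) grid edges, the fixed number of events, and all function names are assumptions introduced here.

```python
import random

def moore_neighbors(x, y, size):
    """Coordinates of the surrounding cells (up to eight) on a bounded grid."""
    return [(x + dx, y + dy)
            for dx in (-1, 0, 1) for dy in (-1, 0, 1)
            if (dx, dy) != (0, 0)
            and 0 <= x + dx < size and 0 <= y + dy < size]

def axelrod_step(grid, size, rng):
    """One model event: a random target may copy one differing feature."""
    x, y = rng.randrange(size), rng.randrange(size)
    nx, ny = rng.choice(moore_neighbors(x, y, size))
    target, neighbor = grid[(x, y)], grid[(nx, ny)]
    differing = [i for i, (a, b) in enumerate(zip(target, neighbor)) if a != b]
    # Similarity is the share of features on which the two agents agree.
    similarity = 1 - len(differing) / len(target)
    if differing and rng.random() < similarity:
        i = rng.choice(differing)
        target[i] = neighbor[i]  # copy the neighbor's trait for that feature

rng = random.Random(0)
SIZE, N_FEATURES, N_TRAITS = 10, 5, 10  # N_TRAITS is an assumed value
grid = {(x, y): [rng.randrange(N_TRAITS) for _ in range(N_FEATURES)]
        for x in range(SIZE) for y in range(SIZE)}

# A fixed number of events stands in for "until the model converges".
for _ in range(10_000):
    axelrod_step(grid, SIZE, rng)
```

Agents that share no features never interact in this sketch, which is the mechanism that allows distinct cultural regions to persist.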

In order to apply our general agent architecture to this model, we modified the model routine and the agent architecture described above. First, we replaced the integers in each cultural dimension with a word. Second, the probability that a target agent interacts with a random neighbor was made proportional to the similarity between the agents’ average word vectors. If the agents interacted, the target agent selected at random a word that it did not previously know and added it to its collection of words. We did not limit the size of the agents’ culture, but instead allowed the agents to learn new words as the model progressed. See the Supplemental Materials for a full model description according to the Overview, Design Concepts and Details + Decision (ODD + D) protocol [39].
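A minimal sketch of the modified interaction rule follows. It makes several assumptions that the text does not specify: the new word is drawn from the neighbor's words, negative similarities are clamped to zero before being used as a probability, and the two-dimensional `toy_embeddings` are invented for illustration rather than taken from a trained model.

```python
import math
import random

def mean_vector(words, embeddings):
    """Average the word vectors of an agent's bag of words."""
    vecs = [embeddings[w] for w in words]
    return [sum(component) / len(vecs) for component in zip(*vecs)]

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) *
                  math.sqrt(sum(b * b for b in v)))

def interact(target_words, neighbor_words, embeddings, rng):
    """Target learns one unknown word with probability tied to similarity."""
    sim = cosine_similarity(mean_vector(target_words, embeddings),
                            mean_vector(neighbor_words, embeddings))
    probability = max(0.0, sim)  # assumption: clamp negative similarity to 0
    unknown = [w for w in neighbor_words if w not in target_words]
    if unknown and rng.random() < probability:
        target_words.append(rng.choice(unknown))  # culture size is unbounded
        return True
    return False

# Toy 2-dimensional embeddings (assumed values, not real word vectors).
toy_embeddings = {
    "husbandry": [0.9, 0.1], "cereals": [0.8, 0.2],
    "liability": [0.1, 0.9], "registration": [0.2, 0.8],
}
target = ["husbandry", "cereals"]
neighbor = ["liability", "registration"]
rng = random.Random(42)
for _ in range(50):
    interact(target, neighbor, toy_embeddings, rng)
print(target)
```

Because learned words are appended rather than overwritten, each agent's word list doubles as a record of what it learned and in what order.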

In order to understand the ability of the new agent architecture to qualitatively match the results from Axelrod [20], we used language from two Wikipedia articles, on agriculture and on corporations. To instantiate the model, agents drew randomly from a common pool of natural language text collected from these articles. We ran the model with three different starting bag-of-words sizes for each agent, namely 5, 10, and 15, which was the same as the original Axelrod [20] experiment. Bag-of-words representations were used because they simplify how language is represented, treating it as an unordered “bag” or set of words, and closely mirror the way Axelrod [20] used integers as disconnected cultural features. The goal of this set of simulations was to understand the extent to which readily available language can be used as an input into ABM. Descriptions scraped from Wikipedia provide some of the most easily collected text available. We used this easily collected text to provide a proof of concept for the agent architecture.

5.3 Agent-based model results and discussion

Simulation results illustrate that word embeddings are able to match the qualitative pattern of cultural diffusion described by Axelrod [20]. Axelrod [20] illustrated that, given sufficient time for interaction, few distinct cultural regions would remain in the model. Here, we find that a similar dynamic emerges with an adapted model built with the proposed agent architecture (Fig. 4). Agents’ local similarity, calculated as the average cosine similarity in line with Eq. (1) between an agent and its surrounding neighbors, shows the impact of initial conditions on model development. Agents that start with dissimilar words maintain pockets of dissimilarity longer in the model. This illustrates that word embeddings may be useful for understanding how aspects of cultural processes are set within and constrained by a spatial context, which results in emergent spatial patterns. Because the model relies on qualitative data, we can look more deeply into the agents to understand this path dependency of different locations.

Fig. 4 Time series of model development in space for a starting culture size (i.e., bag of words) of 5 words. Heat maps are calculated by computing the cosine similarity between each agent and its neighbors. Similar to Axelrod [20], cultural similarity increases over time as agents interact, though spatial pockets of differentiation remain where large initial differences existed. In the lower right corner, the average similarity of each agent to every other agent in the model is plotted.
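The local similarity shown in the heat maps can be sketched as below. This is an illustrative reading, assuming that Eq. (1) is the standard cosine similarity, that each agent is summarized by one vector (for example its average word vector), and that grid edges are bounded; `local_similarity` and the 2 × 2 toy grid are hypothetical.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) *
                  math.sqrt(sum(b * b for b in v)))

def local_similarity(agent_vecs):
    """Map each cell to its mean cosine similarity with its Moore neighbors."""
    heat = {}
    for (x, y), vec in agent_vecs.items():
        sims = [cosine_similarity(vec, agent_vecs[(x + dx, y + dy)])
                for dx in (-1, 0, 1) for dy in (-1, 0, 1)
                if (dx, dy) != (0, 0) and (x + dx, y + dy) in agent_vecs]
        heat[(x, y)] = sum(sims) / len(sims)
    return heat

# Toy 2 x 2 grid: three identical agents and one orthogonal "pocket".
agent_vecs = {
    (0, 0): [1.0, 0.0], (0, 1): [1.0, 0.0],
    (1, 0): [1.0, 0.0], (1, 1): [0.0, 1.0],
}
heat = local_similarity(agent_vecs)
print(heat)
```

In the toy grid the dissimilar agent scores lowest, which corresponds to the pockets of differentiation visible in the heat maps.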

Because agents learn language by adding it to the end of their bag-of-words, it is possible to see this learning process over time as a function of who agents interact with. Consider the agent at location (x = 5, y = 5). The initial set of words in this agent was “monarch”, “husbandry”, “105,000”, “(grains)”, and “cereals”, whereas its neighbor at location (x = 4, y = 5) started with “although”, “registration”, “Around”, “to”, and “at”. As time progresses, the agents learn new words. The agent at location (x = 5, y = 5) begins to learn words such as “development”, “thousands”, “divided”, and “deforestation”, whereas the agent at location (x = 4, y = 5) begins to learn words such as “depletion”, “foods”, “(grains)”, “liability”, and “fibers”. We see that the agents begin to share the same cognitive models, as represented by word embeddings, as the model progresses. The agent at location (x = 4, y = 5) eventually learns “(grains)” and “105,000”, and the agent at location (x = 5, y = 5) learns “although” and eventually “registration”.

Looking across the three different starting agent conditions, we see that the smaller the starting cultural size, the longer it takes for the global similarity to converge to ~1 (Fig. 5). The results qualitatively resemble those of Axelrod [20], who found that smaller cultures with greater features resulted in more durable groups. Here, we see that with word embeddings of 300 dimensions, the smaller cultural set takes longer to converge toward 1. The difference in convergence time between starting sizes of 10 and 15, though, appears to be less dramatic, which appears to indicate a strong averaging effect of additional words on the overall similarity between agents.

Fig. 5 Average global similarity, calculated by averaging the cosine similarity (Eq. 1) between each agent and all other agents in the model. The figure illustrates the impact of the initial size of culture (i.e., bag of words) on the rate of similarity convergence.
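The global measure can be sketched in the same way. The sketch assumes that Eq. (1) is the standard cosine similarity and that each agent is summarized by a single vector; `global_similarity` is a hypothetical name. Averaging over unordered pairs gives the same value as averaging each agent against all others.

```python
import math
from itertools import combinations

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) *
                  math.sqrt(sum(b * b for b in v)))

def global_similarity(agent_vecs):
    """Mean cosine similarity over every unordered pair of agents."""
    pairs = list(combinations(agent_vecs, 2))
    return sum(cosine_similarity(u, v) for u, v in pairs) / len(pairs)

# Toy population: two identical agents and one orthogonal agent.
vectors = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
score = global_similarity(vectors)
print(score)
```

A value near 1 means that all agents' vectors point in nearly the same direction, which is the convergence state the time series approaches.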

These preliminary results suggest that word embeddings are an effective way to represent the simple learning dynamics of word diffusion and, more broadly, certain aspects of learning and cognition. This approach to agent communication and learning is clearly a simplification of real-world dynamics, but it serves as a proof of concept of an agent architecture that employs word embeddings. This second experiment extends a well-reviewed and broadly accepted model of human cognition and offers qualitative interpretability by using words instead of integers. The approach is promising because agents use language to represent culture, so one-to-one comparisons are possible with empirically observed textual data, which in turn allows for more direct validation and parameterization of new agent architectures for specific real-world contexts. Future work could use this modeling approach to directly assess the flow of opinion dynamics in online contexts, test different decision-making and interaction rules, and consider the impacts of different word embeddings.

Jointly, the validation results from Section 4 and Section 5 suggest that the proposed agent architecture is capable of representing both individual-level and group-level behaviors that are of interest to ABM of human-environment interactions. The individual-level validation results illustrated that the agent architecture is able to reproduce the conjunction fallacy on 50% of the prompts. Where the architecture deviates from theoretical expectation, it appears to do so in ways that are consistent with some sub-groups’ social perceptions of agriculture, which may be validated by further behavioral research. The ABM illustrates that even with simple learning dynamics, agents develop similar cultural features and cognitive models over time and show sensitivity to the starting number of features that exist in a culture. Together, this multi-level validation of the agent architecture illustrates the potential for natural language processing tools in general, and particularly word embeddings, to advance ABM.

6 Conclusions

Generating agents from natural language has traditionally relied on qualitative human interpretation and translation into hand-tuned agent decision heuristics. While this approach has generated meaningful scientific advances in our understanding of complex human-environment systems, human-driven approaches are time intensive, struggle to scale to larger data sources, and can be difficult to reproduce or replicate. Here, we presented a new agent architecture based on word embeddings that can successfully replicate many of the dynamics predicted by the conjunction fallacy and associative memory theory. We then deployed this agent architecture in a model of cultural diffusion to illustrate the agents’ potential for ABM and show that as a proof-of-concept the model matches previous theoretical results. Jointly, these results suggest that natural language processing, and particularly word embeddings, have potential to aid in agent construction and simulation.

Future work should focus on three areas. First, additional validation is needed at the systems level for the general agent architecture. Because the agent architecture is able to reproduce individual-level dynamics, opportunities exist to validate modeled opinion dynamics against observed ones, which would improve confidence that it is capturing the appropriate social factors of relevance. Second, different theories for learning, memory querying, and decision-making should be tested. Agents’ concepts could be tagged with additional information related to each concept, which could be further integrated into the decision-making model. Furthermore, because the associative relationships between concepts in a vector space model inherently imply a weighted graph structure, there may be direct connections to the use of alternative approaches such as causal maps [48] and Bayesian belief networks [49]. While this proof of concept suggests that word embeddings may be an approach to ground ABM in empirical data, significant work remains to accomplish the objective of understanding influence dynamics in real-world contexts [23].

References

National Research Council (2014) Advancing land change modeling: opportunities and research requirements. The National Academies Press, Washington, D.C.

Schlüter M, Baeza A, Dressler G, Frank K, Groeneveld J, Jager W, Janssen MA, McAllister RRJ, Müller B, Orach K, Schwarz N, Wijermans N (2017) A framework for mapping and comparing behavioural theories in models of social-ecological systems. Ecol Econ 131:21–35. https://doi.org/10.1016/j.ecolecon.2016.08.008

Schulze J, Müller B, Groeneveld J, Grimm V (2017) Agent-based modelling of social-ecological systems: achievements, challenges, and a Way Forward. J Artif Soc Soc Simul 20. https://doi.org/10.18564/jasss.3423

Parker DC, Manson SM, Janssen MA, Hoffman MJ, Deadman P (2003) Multi-agent Systems for the Simulation of land-use and land-cover change: a review. Ann Am Assoc Geogr 93:314–337

Bousquet F, Bakam I, Proton H, Le Page C (1998) Cormas: common-pool resources and multi-agent systems. In: Tasks and methods in applied artificial intelligence, pp 826–837

Berger T (2001) Agent-based spatial models applied to agriculture: a simulation tool for technology diffusion, resource use changes and policy analysis. Agric Econ 25:245–260. https://doi.org/10.1016/S0169-5150(01)00082-2

Ligmann-Zielinska A, Jankowski P (2007) Agent-based models as laboratories for spatially explicit planning policies. Environ Plan B Plan Des 34:316–335. https://doi.org/10.1068/b32088

Groeneveld J, Müller B, Buchmann CM, Dressler G, Guo C, Hase N, Hoffmann F, John F, Klassert C, Lauf T, Liebelt V, Nolzen H, Pannicke N, Schulze J, Weise H, Schwarz N (2017) Theoretical foundations of human decision-making in agent-based land use models – a review. Environ Model Softw 87:39–48. https://doi.org/10.1016/j.envsoft.2016.10.008

Robinson DT, Brown DG, Parker DC, Schreinemachers P, Janssen MA, Huigen M, Wittmer H, Gotts N, Promburom P, Irwin E, Berger T, Gatzweiler F, Barnaud C (2007) Comparison of empirical methods for building agent-based models in land use science. J land use Sci 2:31–55. https://doi.org/10.1080/17474230701201349

Bell AR (2017) Informing decisions in agent-based models — a mobile update. Environ Model Softw 93:310–321. https://doi.org/10.1016/j.envsoft.2017.03.028

Janssen MA, Ostrom E (2006) Empirically Based, Agent-based models. Ecol Soc 11:art37. https://doi.org/10.5751/ES-01861-110237

Baron J (2008) Thinking and deciding, 4th edn. Cambridge University Press, Cambridge

Zellner ML, Lyons LB, Hoch CJ, Weizeorick J, Kunda C, Milz DC (2012) Modeling, learning, and planning together: an application of participatory agent-based modeling to environmental planning. Urisa J 24:77–92

Barberis N (2012) Thirty years of Prospect theory in economics: a review and assessment. SSRN Electron J 27:173–195. https://doi.org/10.2139/ssrn.2177288

Turney PD, Pantel P (2010) From frequency to meaning: vector space models of semantics. J Artif Intell Res 37:141–188. https://doi.org/10.1613/jair.2934

Clark S (2015) Vector Space Models of Lexical Meaning. In: The Handbook of Contemporary Semantic Theory, pp 493–522. Chichester: John Wiley & Sons, Ltd

Yu C, Peuquet DJ (2009) A GeoAgent-based framework for knowledge-oriented representation: embracing social rules in GIS. Int J Geogr Inf Sci 23:923–960. https://doi.org/10.1080/13658810701602104

Heppenstall AJ, Crooks AT, See LM, Batty M (2012) Agent-based models of geographical systems. Springer

Epstein JM, Axtell R (1996) Growing artificial societies: social science from the bottom up. Brookings Institution Press

Axelrod R (1997) The dissemination of culture. J Confl Resolut 41:203–226. https://doi.org/10.1177/0022002797041002001

Battiston F, Nicosia V, Latora V, Miguel MS (2017) Layered social influence promotes multiculturality in the Axelrod model. Sci Rep 7:1809. https://doi.org/10.1038/s41598-017-02040-4

Lorenz J (2007) Continuous opinion dynamics under bounded confidence: a survey. Int J Mod Phys C 18:1819–1838. https://doi.org/10.1142/S0129183107011789

Flache A, Mäs M, Feliciani T, Chattoe-Brown E, Deffuant G, Huet S, Lorenz J (2017) Models of social influence: towards the next frontiers. J Artif Soc Soc Simul 20(2). https://doi.org/10.18564/jasss.3521

Bhatia S (2017) Associative judgment and vector space semantics. Psychol Rev 124:1–20. https://doi.org/10.1037/rev0000047

Mikolov T, Chen K, Corrado G, Dean J (2013) Efficient estimation of word representations in vector space. Proc Int Conf learn represent (ICLR 2013) 1–12. https://doi.org/10.1162/153244303322533223

Cruse DA (2017) The Lexicon. In: The Handbook of Linguistics, 2nd ed. John Wiley & Sons, Ltd

Mikolov T, Sutskever I, Chen K, Corrado G, Dean J (2013) Distributed Representations of Words and Phrases and their Compositionality. https://doi.org/10.1162/jmlr.2003.3.4-5.951

Clark S, Pulman S (2007) Combining symbolic and distributional models of meaning. Proc AAAI Spring Symp Quantum Interact 52–55

Baroni M, Dinu G, Kruszewski G (2014) Don’t count, predict! A systematic comparison of context-counting vs. context-predicting semantic vectors. In: Proceedings of the 52nd annual meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics, Stroudsburg, PA, USA, pp 238–247

Lai S, Liu K, He S, Zhao J (2016) How to generate a good word embedding. IEEE Intell Syst 31:5–14. https://doi.org/10.1109/MIS.2016.45

Pennington J, Socher R, Manning C (2014) Glove: global vectors for word representation. Proc 2014 Conf Empir methods Nat Lang process 1532–1543. https://doi.org/10.3115/v1/D14-1162

Scheepers T, Kanoulas E, Gavves E (2018) Improving Word Embedding Compositionality using Lexicographic Definitions. Proc 2018 World wide web Conf world wide web - WWW ‘18 1083–1093. https://doi.org/10.1145/3178876.3186007

Landauer TK (2002) On the computational basis of learning and cognition: Arguments from LSA. In: psychology of learning and motivation. Advances in research and theory. pp 43–84

Caliskan-Islam A, Bryson JJ, Narayanan A (2016) Semantics derived automatically from language corpora necessarily contain human biases. arXiv:1608.07187v2 [cs.AI]

Bhatia S, Goodwin GP, Walasek L (2018) Trait associations for Hillary Clinton and Donald Trump in news media. Soc Psychol Personal Sci 9:123–130. https://doi.org/10.1177/1948550617751584

Morewedge CK, Kahneman D (2010) Associative processes in intuitive judgment. Trends Cogn Sci 14:435–440. https://doi.org/10.1016/j.tics.2010.07.004

Tversky A, Kahneman D (1983) Extensional versus intuitive reasoning: the conjunction fallacy in probability judgment. Psychol Rev 90:293–315

Hastie R, Dawes RM (2010) Rational choice in an uncertain world, 2nd ed. Sage Publications

Müller B, Bohn F, Dreßler G, Groeneveld J, Klassert C, Martin R, Schlüter M, Schulze J, Weise H, Schwarz N (2013) Describing human decisions in agent-based models – ODD + D, an extension of the ODD protocol. Environ Model Softw 48:37–48. https://doi.org/10.1016/j.envsoft.2013.06.003

Grimm V, Revilla E, Berger U, Jeltsch F, Mooij WM, Railsback SF, Thulke H-H, Weiner J, Wiegand T, DeAngelis DL (2005) Pattern-oriented modeling of agent-based complex systems: lessons from ecology. Science 310:987–991. https://doi.org/10.1126/science.1116681

Saba A, Messina F (2003) Attitudes towards organic foods and risk/benefit perception associated with pesticides. Food Qual Prefer 14:637–645. https://doi.org/10.1016/S0950-3293(02)00188-X

Morgan MG, Keith DW (2008) Improving the way we think about projecting future energy use and emissions of carbon dioxide. Clim Chang 90:189–215. https://doi.org/10.1007/s10584-008-9458-1

Camerer CF, Kunreuther H (1989) Decision processes for low probability events: policy implications. J Policy Anal Manag 8:565. https://doi.org/10.2307/3325045

Funder DC (1987) Errors and mistakes: evaluating the accuracy of social judgment. Psychol Bull 101:75–90. https://doi.org/10.1037/0033-2909.101.1.75

Siegrist M, Earle TC, Gutscher H (2012) Trust in cooperative risk management: Uncertainty and scepticism in the public mind

Slovic P (1987) Perception of risk. Science 236:280–285

Yeung RMW, Morris J (2001) Food safety risk consumer perception and purchase behaviour. Br Food J 103:170–186. https://doi.org/10.1108/00070700110386728

Gray S, Jordan R, Crall A, Newman G, Hmelo-Silver C, Huang J, Novak W, Mellor D, Frensley T, Prysby M, Singer A (2016) Combining participatory modelling and citizen science to support volunteer conservation action. Biol Conserv 208:76–86. https://doi.org/10.1016/j.biocon.2016.07.037

Sun Z, Müller D (2013) A framework for modeling payments for ecosystem services with agent-based models, Bayesian belief networks and opinion dynamics models. Environ Model Softw 45:15–28. https://doi.org/10.1016/j.envsoft.2012.06.007

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Runck, B.C., Manson, S., Shook, E. et al. Using word embeddings to generate data-driven human agent decision-making from natural language. Geoinformatica 23, 221–242 (2019). https://doi.org/10.1007/s10707-019-00345-2
