Abstract

Iodine and selenium deficiencies are common worldwide. We assessed the iodine and selenium status of Gilgit-Baltistan, Pakistan. We determined the elemental composition (ICP-MS) of locally grown crops (n = 281), drinking water (n = 82), urine (n = 415) and salt (n = 76), correcting urinary analytes for hydration (creatinine, specific gravity). We estimated dietary iodine, selenium and salt intake. Median iodine and selenium concentrations were 11.5 (IQR 6.01, 23.2) and 8.81 (IQR 4.03, 27.6) µg/kg in crops and 0.24 (IQR 0.12, 0.72) and 0.27 (IQR 0.11, 0.46) µg/L in water, respectively. Median iodised salt iodine was 4.16 (IQR 2.99, 10.8) mg/kg. Population mean salt intake was 13.0 g/day. Population median urinary iodine (uncorrected 78 µg/L, specific gravity-corrected 83 µg/L) was below WHO guidelines; creatinine-corrected median was 114 µg/L but was unreliable. Daily selenium intake (from urinary selenium concentration) was below the EAR in the majority (46–90%) of individuals. Iodine and selenium concentrations in all crops were low, but no health-related environmental standards exist. Iodine concentration in iodised salt was below WHO-recommended minimum. Estimated population average salt intake was above WHO-recommended daily intake. Locally available food and drinking water together provide an estimated 49% and 72% of EAR for iodine (95 µg/day) and selenium (45 µg/day), respectively. Low environmental and dietary iodine and selenium place Gilgit-Baltistan residents at risk of iodine deficiency disorders despite using iodised salt. Specific gravity correction of urine analysis for hydration is more consistent than using creatinine. Health-relevant environmental standards for iodine and selenium are needed.


Introduction

Iodine (I) and selenium (Se) are important micronutrients for human health and play a vital role in the synthesis of thyroid hormones and wider aspects of metabolism, including brain development in early pregnancy (Jin et al., 2019; Schomburg & Köhrle, 2008; Velasco et al., 2018; Wu et al., 2015). Low dietary I causes a variety of health complications, collectively known as iodine deficiency disorders (IDD) (Hetzel, 1983; Zimmermann, 2009), which are among the most important and common preventable public health problems worldwide (Kapil, 2007; Gizak et al., 2017; IGN, 2020a). Iodine deficiency can affect any stage of human development, including foetus, neonate, child, adolescent and adult (Hetzel, 2000; Zimmermann et al., 2008). However, the consequences are more serious during foetal development and childhood (Bath et al., 2013; Hay et al., 2019; Obican et al., 2012; Velasco et al., 2018), and pre-pregnancy deficiency is thought to be deleterious to brain development (Velasco et al., 2018). Globally, approximately two billion people are living at risk of IDD, including 240–285 million school-age children (Anderson et al., 2007; Biban & Lichiardopol, 2017; IGN, 2020b). Iodine deficiency was widespread in Pakistan prior to the start of the Universal Salt Iodisation programme in 1994, with goitre prevalence of > 70% in some parts of the country (Khattak et al., 2017). The Universal Salt Iodisation programme is reported to have reduced IDD by up to 50%, but this decline is suspected to be non-uniform and possibly over-estimated (Khattak et al., 2017). Our study area, Gilgit-Baltistan in the remote mountainous north-east, has long been known for endemic IDDs (Chapman et al., 1972; McCarrison, 1909; Stewart, 1990), which are still considered a major public health problem there (Shah et al., 2014).
The 2011 national nutritional survey of Pakistan reported median urinary I concentrations (UIC) of 64 and 68 µg/L in mothers (n = 34) and 6–12-year-old children (n = 29), respectively, from Gilgit-Baltistan (GoP, 2011), well below the adequate range of 100–199 µg/L and classed as mild iodine deficiency by WHO (Benoist et al., 2004), although the sample sizes were small.

Selenium deficiency is associated with a range of health problems including cardiovascular and myodegenerative diseases, infertility and cognitive decline (Rayman, 2000, 2012; Shreenath & Dooley, 2019). There are approximately 0.5–1.0 billion people affected by Se deficiency globally (Haug et al., 2007; Jones et al., 2017). A coexisting deficiency of both I and Se aggravates hypothyroidism and may result in myxoedematous cretinism and other thyroid disorders (Arthur et al., 1999; Rayman, 2000; Ventura et al., 2017). Currently, there appears to be no literature available on the Se status of the Gilgit-Baltistan population.

Both I and Se deficiencies occur when there is inadequate dietary intake of these essential elements (Bath et al., 2014; Kapil, 2007; Rayman, 2008; Shreenath & Dooley, 2019). The I nutritional status of a population can be estimated from UIC, a well-established, cost-effective and easily obtainable biomarker (WHO, 2013). Selenium concentration in blood plasma is an established biomarker for assessing Se status at both individual and population levels (Fairweather-Tait et al., 2011), but blood plasma samples are demanding to obtain. However, urinary Se concentration (USeC) has been reported as a plausible alternative to blood plasma for assessing population-level Se status (Middleton et al., 2018; Phiri et al., 2020).

The population of Gilgit-Baltistan consumes mainly locally grown foods. Therefore, we aimed to (1) measure I and Se concentration in locally grown agricultural produce, (2) evaluate the I and Se status of residents of the Gilgit district of Gilgit-Baltistan using urinary concentrations (UIC, USeC) with corrections for hydration, and (3) study the role of iodised salt in the I status of the local population using urinary sodium concentration as an indicator of salt intake.

Methods

Sampling overview

Two separate sets of samples were collected: (1) environmental samples comprising edible plants and drinking water, and (2) population samples including individual urine samples, and family-based culinary salt samples supported by a salt intake questionnaire.

A wide range of locally grown edible plants (n = 281), representing 34 different crop types from various villages across five districts (Fig. 1) of Gilgit-Baltistan and covering approximately 65% of the food items consumed daily, were sampled. The selection of villages for plant sampling was part of a wider study that included soil and irrigation water sampling (Ahmad et al., 2021), for which the selection criteria were the accessibility and availability of fertile agricultural land. Mountain Agriculture Research Centre (MARC) staff, who are familiar with the area and have multiple research stations across different districts of Gilgit-Baltistan, identified sampling villages. Accessibility was important in our sampling design because Gilgit-Baltistan is very mountainous with rough terrain, and some villages are difficult to reach. Water was sampled in the villages where plant sampling was undertaken and also between villages (Fig. 1). Samples (n = 82) were collected from a mix of surface water (rivers/streams/lakes) and groundwater (springs/wells), all of which were used as drinking water by the local population throughout the year.

The University of Nottingham Research Ethics Committee granted ethical approval for (1) urine sampling of individuals representing different age groups and (2) a household questionnaire survey to estimate local salt consumption. MARC staff obtained verbal permission from community elders, and informed written consent was obtained from each adult participant and from the parents of each participating child. For urine sampling, the study was advertised only in the district of Gilgit, with the support of MARC staff and community elders, through verbal announcements and notices in community centres. Our survey team then organised community gatherings and provided verbal and written information about the study to recruit volunteers. Only healthy local volunteers (n = 415) of different age groups and both genders, who declared no known history of medically diagnosed thyroid disease, were recruited. The participants came from 76 different households, and a single salt sample was obtained from each participating household. In addition, the female member of each household responsible for cooking and other food preparation was asked to attend a face-to-face interview and answer a standard salt intake questionnaire.

Sample preparation

Plants

Plants were oven-dried at 40 °C and then milled in an ultra-centrifugal mill to a fine powder for analysis by ICP-MS (iCAP-Q; Thermo-Fisher, Bremen, Germany). Approximately 0.2 g of the finely ground plant sample was microwave acid digested in 6 mL of 70% HNO3 in a pressurised PFA vessel at 140 °C. Grain samples were digested in 3 mL HNO3 (70%), 3 mL Milli-Q water and 2 mL H2O2 (30%). Digests of plants and grain were diluted to 2% HNO3 prior to Se analysis by ICP-MS. For I analysis, microwave-assisted tetramethylammonium hydroxide (TMAH) extraction of plant samples (c. 0.2 g) was undertaken at 110 °C in 5 mL of 5% TMAH. The extracts were diluted to 1% TMAH with Milli-Q water, centrifuged, syringe-filtered (< 0.22 µm) and analysed by ICP-MS.

Water

Water samples were filtered at the sampling point, using syringe filters (< 0.22 µm), into a universal tube and kept in the dark at 4 °C. Duplicate samples were adjusted to 1% TMAH or acidified to 2% HNO3 prior to I and Se analysis, respectively.

Urine

A first-morning void spot urine sample was collected from each participant in an 8-mL polypropylene tube, kept in a cool box without filtration and transported to the MARC laboratory, where the samples were stored at −20 °C. Frozen urine samples were shipped to the University of Nottingham for elemental and hydration adjustment analyses.

Salt

Upon collection in a sealed plastic bag from each participating household, domestic salt samples were kept in the dark at the MARC in Gilgit before shipping to the University of Nottingham where they were stored at 20 °C pending chemical analysis.

Salt intake survey

We designed a questionnaire specifically to obtain information on the frequency and amount of salt (iodised/non-iodised) purchased, its storage and daily consumption. It was piloted on ~ 20% (n = 15) of surveyed households before the study began, and the pilot results were excluded from the final analysis. The survey team members spoke the local language (Shina) fluently and were familiar with local culture, which minimised communication barriers with residents. Individual daily salt consumption was estimated by dividing the amount of salt purchased per day (i.e. allowing for the frequency of purchase) by the number of household members aged > 5 years.
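The purchase-based estimate spreads each household's purchase over the purchase interval and the number of members aged > 5 years. A minimal sketch, assuming purchases are recorded as a mass bought every fixed number of days (the exact questionnaire format is not given here); the function name and example household are hypothetical.

```python
def per_capita_salt_g_per_day(salt_purchased_g: float,
                              days_between_purchases: float,
                              household_members_over_5: int) -> float:
    """Spread one household purchase evenly over days and members aged > 5 years."""
    if days_between_purchases <= 0 or household_members_over_5 <= 0:
        raise ValueError("purchase interval and household size must be positive")
    return salt_purchased_g / days_between_purchases / household_members_over_5


# Hypothetical household: 2 kg bought every 30 days, 6 members older than 5 years
print(round(per_capita_salt_g_per_day(2000, 30, 6), 1))  # 11.1
```

This estimates salt *use*, not salt *intake*: any salt spilled, discarded or used for other purposes is counted as eaten.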

Chemical analysis

Plant and water samples

Analysis of I in all plant and water samples was undertaken in 1% TMAH with the ICP-MS operating in standard mode (no cell gas) and an internal standard of 5 µg/L rhenium (Re) to correct for instrumental drift. For Se analysis in an acidic matrix (2% HNO3), the ICP-MS (iCAP Q) instrument was in ‘hydrogen cell’ mode with internal standards of 5 µg/L rhodium (Rh) and Re in 4% methanol and 2% HNO3.

Salt samples

Duplicate samples (1.25 g) were dissolved in 25 mL of Milli-Q water and diluted 1-in-10 with 1% TMAH prior to I analysis by ICP-MS.
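Converting the ICP-MS reading for a salt solution back to mg I per kg salt requires only the two dilution steps described above. A minimal sketch, assuming the reading is already blank-corrected and that "1-in-10" means a total dilution factor of 10; the function name is hypothetical.

```python
def salt_iodine_mg_per_kg(measured_ug_per_l: float,
                          salt_mass_g: float = 1.25,
                          dissolved_volume_ml: float = 25.0,
                          dilution_factor: float = 10.0) -> float:
    """Back-calculate salt iodine (mg/kg) from the I concentration in the analysed solution."""
    # Salt in the initial solution: 1.25 g / 0.025 L = 50 g/L
    salt_g_per_l = salt_mass_g / (dissolved_volume_ml / 1000.0)
    # After the further 1-in-10 dilution in 1% TMAH: 5 g salt per litre analysed
    salt_g_per_l_analysed = salt_g_per_l / dilution_factor
    # (µg I / L) / (g salt / L) = µg I per g salt, numerically equal to mg I per kg salt
    return measured_ug_per_l / salt_g_per_l_analysed


print(round(salt_iodine_mg_per_kg(21.0), 2))  # 4.2
```

With these defaults the salt concentration in the analysed solution is 5 g/L, so a reading of 21 µg/L corresponds to 4.2 mg/kg.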

Urine samples

Elemental analyses (I, Se and Na) were made on all urine samples using ICP-MS. Iodine and Se were measured to confirm population I and Se status while Na was measured to estimate salt intake. Iodine was measured in an alkaline matrix following a 1-in-20 dilution (0.5 mL urine + 9.5 mL 1% TMAH), whereas Se and Na were measured in an acidic matrix (0.5 mL + 9.5 mL 2% HNO3).

About 90% of Na consumed is excreted in urine (McLean, 2014). Accordingly, participants’ salt intake was calculated using the method of Chen et al. (2018), who used 24 h urine samples to estimate Na intake. However, we used spot urine samples as these are a plausible alternative in determining population salt intake (Huang et al., 2016).

Adjustment of urinary analyte concentrations was undertaken both with urinary creatinine concentration and specific gravity to allow for the effect of hydration status. Urinary creatinine was measured spectrophotometrically, and specific gravity was measured with a handheld pocket refractometer (PAL-10S, Atago, Japan) on 300 µL of urine (Phiri et al., 2020; Watts et al., 2019).

Equation 1 was used to adjust urinary analyte concentration for variation in creatinine concentration:

$$C_{\text{cre-adj}} = \frac{C_{m}}{C_{\text{cre}}} \qquad (1)$$

where $C_{\text{cre-adj}}$ is the adjusted concentration (µg/g creatinine), $C_{m}$ is the uncorrected concentration (µg/L) of analyte in urine and $C_{\text{cre}}$ is the creatinine concentration (g/L). For each sample, correction with specific gravity was also undertaken, using Eq. 2:

$$C_{\text{sg-adj}} = C_{m} \times \frac{sg_{\text{mean}} - 1}{sg_{\text{meas}} - 1} \qquad (2)$$

where $C_{\text{sg-adj}}$ is the adjusted concentration (µg/L), $sg_{\text{mean}}$ is the average specific gravity of all samples analysed in the study and $sg_{\text{meas}}$ is the measured specific gravity.
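The two hydration corrections can be applied per sample as below. A minimal sketch, assuming Eq. 2 takes the widely used (sg − 1) ratio form shown above; the function names and the three example samples are hypothetical, not study data.

```python
from statistics import mean


def creatinine_adjust(c_m_ug_per_l: float, creatinine_g_per_l: float) -> float:
    """Eq. 1: analyte concentration per gram of creatinine (µg/g)."""
    return c_m_ug_per_l / creatinine_g_per_l


def sg_adjust(c_m_ug_per_l: float, sg_meas: float, sg_mean: float) -> float:
    """Eq. 2: analyte concentration normalised to the study-mean specific gravity (µg/L)."""
    return c_m_ug_per_l * (sg_mean - 1.0) / (sg_meas - 1.0)


# Hypothetical samples: (uncorrected UIC in µg/L, creatinine in g/L, specific gravity)
samples = [(60.0, 0.5, 1.010), (120.0, 1.5, 1.025), (90.0, 1.0, 1.015)]
sg_mean = mean(sg for _, _, sg in samples)  # the study-wide mean SG is the reference point
for c_m, cre, sg in samples:
    print(f"{c_m:6.1f} µg/L -> cre-adj {creatinine_adjust(c_m, cre):6.1f} µg/g, "
          f"sg-adj {sg_adjust(c_m, sg, sg_mean):6.1f} µg/L")
```

Because Eq. 2 rescales to the study mean, SG-corrected values stay in µg/L and remain on the same scale as uncorrected UIC. Creatinine-corrected values are in µg/g and shift whenever creatinine output differs between groups.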

Calculating iodine and selenium dietary intake

We estimated the individual daily I and Se intake from locally grown food using typical consumption patterns from the Pakistani national food basket as no local food basket has been published. For the food types which were not sampled in this study but were part of the food basket, we obtained I and Se concentrations from published literature. As an alternative approach to estimating daily I and Se intake, we assumed that the entire diet originated locally and simply scaled up to 100% the 65% for which we could account from the survey data. Individual I and Se intake from drinking water was calculated based on a drinking water consumption of 2–4 L/day. The recommended value for adults and children is to drink at least 2 L water per day, but individual’s daily drinking water requirements vary from 2 to 4 L/day depending on the nature of their work and lifestyle and the climate of the area (WHO, 2016).
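The intake arithmetic combines a food estimate, a water term (concentration × 2–4 L/day) and, for the alternative approach, a scale-up of the sampled 65% of the diet to 100%. A minimal sketch; the helper names are hypothetical. The Se food value (17.7 µg/day) is taken from the Results, and the water concentration used here (the 0.27 µg/L median) is illustrative only, so the output need not match the reported water contribution exactly.

```python
EAR_UG_PER_DAY = {"I": 95.0, "Se": 45.0}  # EAR values used in this study


def water_intake_ug(conc_ug_per_l: float, litres_per_day: float) -> float:
    """Daily element intake from drinking water (µg/day)."""
    return conc_ug_per_l * litres_per_day


def scale_to_whole_diet(local_intake_ug: float, diet_fraction_covered: float = 0.65) -> float:
    """Assume the unsampled share of the diet has the same element density as the sampled share."""
    return local_intake_ug / diet_fraction_covered


def percent_of_ear(intake_ug: float, element: str) -> float:
    return 100.0 * intake_ug / EAR_UG_PER_DAY[element]


scaled_se = scale_to_whole_diet(17.7)  # Se from sampled local foods, scaled to 100% of the diet
for litres in (2.0, 4.0):
    total = scaled_se + water_intake_ug(0.27, litres)  # illustrative water Se concentration
    print(f"{litres} L/day: {total:.1f} µg/day = {percent_of_ear(total, 'Se'):.0f}% of Se EAR")
```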

Estimating iodine and selenium nutrition from urine

We used the WHO UIC epidemiological criteria (Zimmermann & Andersson, 2012; WHO, 2013) for evaluating population I nutrition and severity of I deficiency. There are no approved guidelines for estimating Se nutrition status from USeC, and different approaches are reported. We used three different reported approaches to estimate population Se nutrition status from USeC: (1) 50–70% of dietary Se is excreted in urine (Alaejos & Romero, 1993), (2) 40–60% of Se intake is excreted in urine (EFSA NDA, 2014), (3) 73 and 77% of ingested Se is excreted via urine in men and women, respectively (Nakamura et al., 2019; Yoneyama et al., 2008). We used a published age group classification (IOM, 2000) to describe Se nutrition.
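Each of the three approaches inverts the same relation: daily urinary Se output divided by the fraction of intake excreted in urine. A minimal sketch, assuming a daily urine volume of 1.4 L (the value this study uses for Na; the volume used for Se is not stated here). The function and dictionary names are hypothetical. The comparison uses the adult EAR of 45 µg/day, whereas the IOM (2000) age groups have lower EARs for children.

```python
EAR_SE_UG_PER_DAY = 45.0  # adult EAR used in this study (IOM, 2000)
URINE_L_PER_DAY = 1.4     # assumption: same daily urine volume as used for Na

# Share of ingested Se excreted in urine under each approach, as (low, high)
EXCRETION_FRACTIONS = {
    "Alaejos & Romero (1993)": (0.50, 0.70),
    "EFSA NDA (2014)": (0.40, 0.60),
    "Nakamura/Yoneyama, men": (0.73, 0.73),
    "Nakamura/Yoneyama, women": (0.77, 0.77),
}


def se_intake_range(usec_ug_per_l, fractions, urine_l_per_day=URINE_L_PER_DAY):
    """Return (min, max) daily Se intake in µg implied by a urinary Se concentration."""
    excreted_ug = usec_ug_per_l * urine_l_per_day
    low_frac, high_frac = fractions
    # A higher excreted fraction implies a lower intake, hence the order of the bounds
    return excreted_ug / high_frac, excreted_ug / low_frac


for name, fractions in EXCRETION_FRACTIONS.items():
    lo_i, hi_i = se_intake_range(17.0, fractions)  # 17 µg/L: median USeCuncor in this study
    print(f"{name}: {lo_i:.1f}-{hi_i:.1f} µg/day; whole range below EAR: {hi_i < EAR_SE_UG_PER_DAY}")
```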

Quality control

Certified reference materials (CRM) wheat flour (NIST 1567b) and tomato leaves (NIST 1573a) were digested/extracted alongside plant samples. For I analysis in urine samples, two reference materials (Seronorm™ Trace Elements Urine, L-1 and L-2) were analysed simultaneously with urine samples.

Statistical analysis

Statistical calculations (mean, median, standard deviation and interquartile range) were performed in Microsoft Excel 2016, and Minitab (version 18.1) was used for the Anderson-Darling normality test, Pearson correlation and ANOVA.

Results

Population demographics

Of the sampled population (n = 415) (Table 1), 44% were male and 56% female; 32% were women of reproductive age (15–49 years), of whom 10% (n = 13) were reported to be pregnant (n = 7) and/or lactating (n = 7). Although the number of pregnant and nursing women is small, we cannot be sure that they are the only pregnant or nursing women in the study, so we have included them in the wider group of women of reproductive age.

Iodine and selenium concentrations in edible plants and water

Plant samples were divided into six groups: leafy vegetables (n = 56), grains (n = 55), crop vegetables (n = 75), tubers (n = 30), fruits (n = 63) and nuts (n = 2). The concentrations of I and Se (Tables 2 and 3, respectively) decreased across plant groups in the order: leafy vegetables > grains > crop vegetables > tubers > nuts > fruits. Iodine and Se concentrations across all water samples ranged from 0.01 to 10 µg/L and from 0 to 3.0 µg/L, with median values of 0.24 (IQR 0.12, 0.72) and 0.27 (IQR 0.11, 0.46) µg/L, respectively (supplementary material Table B1).

Salt iodine concentration

Iodine concentration in salt samples ranged from 0.03 to 45.8 mg/kg (median 4.2; IQR 2.99, 10.8) and was not normally distributed (p < 0.005) even when log-transformed (supplementary material Fig. A1). There were five different brands of salt available locally, but all lacked information on I concentration or species (iodide or iodate).

Questionnaire

The household (n = 76) questionnaire showed 85% (n = 65) of households use iodised salt; the majority (95%) stored salt in lidded containers. Based on the amount of salt purchased, per capita salt use was estimated as 52.8 g/day.

Urinary sodium concentration (UNaC)

The median UNaC, uncorrected (UNaCuncor), corrected for creatinine (UNaCcre) or specific gravity (UNaCsg) were 133, 198 and 143 mmol/L, respectively (Table 4), which are equivalent to 186, 277 and 200 mmol/day based on a urinary volume of 1.4 L in 24 h (Karim, 2018; Medlineplus, 2019). From UNaC, it was estimated that the median salt intake in the study area was 12, 18 and 13 g/day based on UNaCuncor, UNaCcre and UNaCsg, respectively.
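The conversion from spot UNaC to salt intake can be reproduced from the stated assumptions: 1.4 L of urine per day, about 90% of ingested Na excreted in urine, and 1 mmol Na corresponding to 1 mmol (58.44 mg) NaCl. A minimal sketch; it reproduces the 12, 18 and 13 g/day above, but it is not necessarily the exact formulation of Chen et al. (2018), and the names are hypothetical.

```python
NACL_MG_PER_MMOL = 58.44    # molar mass of NaCl; 1 mmol Na pairs with 1 mmol NaCl
URINE_L_PER_DAY = 1.4       # assumed 24-h urine volume
URINARY_NA_FRACTION = 0.90  # assumed share of ingested Na excreted in urine


def salt_intake_g_per_day(unac_mmol_per_l: float) -> float:
    na_mmol_per_day = unac_mmol_per_l * URINE_L_PER_DAY           # e.g. 133 -> 186 mmol/day
    salt_excreted_g = na_mmol_per_day * NACL_MG_PER_MMOL / 1000.0  # mmol -> g NaCl
    return salt_excreted_g / URINARY_NA_FRACTION                   # add back non-urinary losses


for label, unac in [("uncorrected", 133), ("creatinine", 198), ("specific gravity", 143)]:
    print(f"{label}: {salt_intake_g_per_day(unac):.0f} g/day")
# uncorrected: 12 g/day
# creatinine: 18 g/day
# specific gravity: 13 g/day
```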

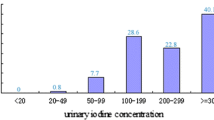

Urinary iodine concentration (UIC)

Figure A2 (supplementary material) shows the distribution of uncorrected UIC (UICuncor), creatinine-corrected UIC (UICcre) and specific gravity-corrected UIC (UICsg). The median UICuncor for all participants (n = 415) was 78 µg/L, similar to median UICsg (83 µg/L) but smaller than UICcre (114 µg/L) (Table 5). No significant difference was observed between the UIC of male and female participants at the household level (p > 0.05). No correlation (p > 0.05) was observed between UIC values and age for all participants together. The median concentration of UICcre differed across the age groups while those of UICuncor and UICsg were consistent (Table 5).

Urinary selenium concentration (USeC)

Figure A3 (supplementary material) shows the distribution of USeC for all participants (n = 415). The median USeCuncor was 17 µg/L, the same as the median USeCsg (17 µg/L) but lower than USeCcre (23 µg/L) (Table 6). No significant difference was observed between the USeC of male and female participants at the household level (p > 0.05), and there was no correlation (p > 0.05) between age (whole dataset) and USeC. As for UIC, median USeCcre varied across age groups, whereas median USeCuncor and USeCsg were consistent (Table 6).

Hydration correction

Uncorrected concentrations of analytes (Na, I and Se) were positively correlated with both creatinine and specific gravity (Figs. 2, 3 and 4), indicating that correction is needed to remove hydration-driven variation. The effectiveness of correction is shown by the weaker correlations in the corrected data (Figs. 2, 3 and 4). Specific gravity correction removed a greater proportion of the dilution-related variation in UIC than creatinine correction (Figs. 2, 3 and 4).

Iodine and selenium daily dietary intake

Daily I and Se intakes, based on a typical Pakistani national food basket, were estimated as 46.4 and 32.3 µg/day, respectively, using a combination of measured I and Se concentrations in locally grown foods and literature values for the remaining food items (Table 7). The I and Se intakes from drinking water were 1.28–2.56 and 0.78–1.17 µg/day, respectively, based on 2–4 L daily consumption. Combined estimated intakes of I and Se from food and water were therefore 47.7–49.0 and 33.1–33.5 µg/day, respectively. Comparison between reported I and Se contents of foods in the wider literature with the values measured for locally grown produce in this study indicates that locally sourced foods are typically lower in I and Se (Tables 2 and 3). The use of literature values may therefore overestimate I and Se intake. If, instead, we scale the intakes measured for the local foods (65% of the diet; I = 8.8 µg/day and Se = 17.7 µg/day) to 100%, then I and Se intake is estimated as 14.1–15.7 and 28.0–28.4 µg/day, respectively.

Iodine and selenium nutritional status

Against the WHO UIC criteria, 63% of the sampled Gilgit-Baltistan population had UICuncor < 100 µg/L and 6% had UICuncor > 300 µg/L. By UICcre, only 42% of participants were < 100 µg/L and 13% > 300 µg/L, whereas by UICsg 64% were < 100 µg/L and 6% > 300 µg/L. We cannot comment on pregnant and nursing women, as their numbers were too small to be considered separately.
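The proportions above and the population category both come from one UIC distribution. A minimal sketch: the category thresholds are the WHO (2013) median-UIC criteria for school-age children and non-pregnant adults, the < 100 and > 300 µg/L cut-offs follow the text, and the function names and input values are hypothetical.

```python
from statistics import median


def who_uic_category(median_uic: float) -> str:
    """WHO (2013) population criteria based on median UIC (µg/L), non-pregnant groups."""
    if median_uic < 20:
        return "insufficient (severe deficiency)"
    if median_uic < 50:
        return "insufficient (moderate deficiency)"
    if median_uic < 100:
        return "insufficient (mild deficiency)"
    if median_uic < 200:
        return "adequate"
    if median_uic < 300:
        return "above requirements"
    return "excessive"


def uic_summary(uic_values):
    n = len(uic_values)
    share_below_100 = sum(v < 100 for v in uic_values) / n
    share_above_300 = sum(v > 300 for v in uic_values) / n
    med = median(uic_values)
    return med, who_uic_category(med), share_below_100, share_above_300


# Hypothetical UIC values (µg/L), not study data
med, category, low, high = uic_summary([40, 70, 78, 90, 150, 320])
print(med, category, f"{low:.0%} < 100 µg/L", f"{high:.0%} > 300 µg/L")
```

The WHO category applies to the population median, not to individuals. The shares below or above a cut-off describe how the distribution is spread.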

For Se, compared with the ‘Estimated Average Requirement’ (EAR) (IOM, 2000), a large proportion (46–90%) of individuals in age groups ≥ 14 years had insufficient Se intake based on USeCuncor and USeCsg, whereas USeCcre indicated that 14–68% of individuals had insufficient Se intake (Table 8). The mean Se intake in pregnant and lactating women, estimated from USeC (USeCuncor, USeCcre, USeCsg) assuming that 50–70% of dietary Se is excreted in urine, was ≤ 54 µg/day.

Quality control recoveries

The recoveries of I and Se in the wheat flour and tomato leaf CRMs are provided in supplementary material Table B2. The recoveries (%) of I in the urine CRMs Seronorm L-1 (certified value: 105 µg/L, measured value: 98.7 ± 2.9 µg/L, n = 4) and Seronorm L-2 (certified value: 297 µg/L, measured value: 297 ± 9.7 µg/L, n = 4) were 94% and 100%, respectively.
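The urine CRM recoveries are simply measured ÷ certified × 100. A minimal sketch using the values reported above; the function name is hypothetical.

```python
def recovery_percent(measured: float, certified: float) -> float:
    """Recovery of a certified reference material, in percent."""
    return 100.0 * measured / certified


for name, measured, certified in [("Seronorm L-1", 98.7, 105.0), ("Seronorm L-2", 297.0, 297.0)]:
    print(f"{name}: {recovery_percent(measured, certified):.0f}%")
# Seronorm L-1: 94%
# Seronorm L-2: 100%
```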

Discussion

This study is the first investigation to evaluate local population I and Se status in NE Pakistan in relation to their dietary intake and to use urine as a biomarker for population Se status. It is apparent that the Gilgit-Baltistan population is at risk of both dietary I and Se deficiency.

There is no recommended range of I concentrations in drinking water for human consumption (WHO, 2008), but the concentrations found in Gilgit-Baltistan (0.01–10 µg/L) are at the bottom of internationally reported ranges: drinking water I concentrations of 0.2–304, < 1–139 and 55–545 µg/L have been reported in Kenya, Denmark and Algeria, respectively (Watts et al., 2019). Selenium concentrations in drinking water (0–3.0 µg/L) were also at the lower end of the global range of 0.06 to > 6000 µg/L (WHO, 2011), although Se concentrations in public water supplies usually do not exceed 10 µg/L (WHO, 2011).

Crop types in Gilgit-Baltistan typically had lower I and Se concentrations than published values for crops from other areas (Tables 2 and 3), possibly because of low concentrations of plant-available I and Se in soil (Ahmad et al., 2021). Gilgit-Baltistan is a remote mountainous area, where the population largely consumes locally grown agricultural produce. Assuming a typical Pakistani national food basket (Hussain, 2001), consumption of locally produced food items would provide only 49% and 72% of the I and Se EAR, respectively, based on concentrations measured in this study combined with literature values (Hussain, 2001; Fordyce, 2003; Iqbal et al., 2008; USDA, 2019). If only the measured local foods (65% of the diet) are used to calculate daily intake, the diet would provide only 9% and 39% of the I and Se EAR, respectively. The daily intake of I and Se from drinking water would be < 3% of the I and Se EAR, much smaller than the 10% I contribution from drinking water suggested by Gao et al. (2021). The small contribution of Se from drinking water is not unusual, as most drinking water has < 10 µg/L of Se (WHO, 2011). Thus, estimated intakes of I and Se in Gilgit-Baltistan fall substantially below recommended levels.

Iodised salt is a proven approach to the prevention of IDD. The I concentration in the majority of Gilgit-Baltistan salt samples (83%) was well below the minimum of the WHO-recommended range of 20–40 mg I per kg salt (WHO/UNICEF/ICCID, 2007). Our results are comparable with the fortification assessment coverage toolkit survey undertaken in Pakistan in 2017, in which 87% of iodised salt brands (n = 30) collected from marketplaces across Pakistan were inadequately iodised (IGN, 2020c). Our observed salt I concentrations were lower than those reported in the 2011 national nutrition survey, in which 30% of Gilgit-Baltistan iodised salt had ‘inadequate’ I, using 15 mg I per kg of salt as the ‘adequate’ threshold (GoP, 2011). The reasons for this are not known, but possible causes include inadequate initial addition of I, poor mixing and/or storage conditions that may promote volatilisation (light, humidity, temperature). Most households stored salt in lidded containers at home, which may help to reduce salt moisture content and volatilisation of I. In the current study, no information was collected from participating households on the storage time of the salt, and the manufacturers’ labels gave no dates of production. Iodine losses of 8.4–23% have been reported from different types of iodised salt stored in different lidded containers over 15 days (Jayasheree & Naik, 2000), and only 59% of the I was retained in iodised salt stored at room temperature and a relative humidity of 30–45% for ~ 3.5 years (Biber et al., 2002). Further investigations are required to establish the reasons for such low salt I concentrations, which put the health of the local community at risk and challenge the integrity of the national salt iodisation programme.

The widespread use (85%) of iodised salt in the region suggests either that the need for iodised salt has been successfully communicated in this remote region or that the supply of non-iodised salt has been reduced significantly; either would be a good result for the national iodised salt programme. The current use of iodised salt at household level (85%) was slightly lower than previously reported (91%) for Gilgit-Baltistan but higher than the national average (80%) and the average for rural areas of Pakistan (GoP/UNICEF, 2018). Based on the amount of salt purchased by each household, per capita salt use was estimated as 52.8 g/day, far greater than the normal salt consumption of 5 to 15 g/day across different cultures (Medeiros-Neto & Rubio, 2016) and more than ten times the WHO-recommended salt intake of 5 g/day (WHO, 2012). Estimating individual salt intake from monthly household purchases is therefore wholly unreliable. Salt may be lost through spillage, discarded in cooking water or used for other purposes such as preserving meat, particularly during the religious festival of Eid ul Adha when animals are slaughtered.

About 85–90% of ingested Na is excreted in urine (WHO, 2007; Cogswell et al., 2018). Hence, daily salt intake estimated from UNaC is more reliable than that calculated from monthly or weekly salt purchases. However, UNaC still underestimates actual salt intake by about 10–15%, as it accounts only for electrolyte loss via the kidneys and excludes other routes of excretion (Amarra & Khor, 2015). High salt (Na) intake is associated with adverse health effects, including high blood pressure and cardiovascular diseases (Cappuccio, 2013; Partearroyo et al., 2019). The salt intake estimated from UNaCcre (18.0 g/day) was higher than that from UNaCuncor (12.0 g/day) or UNaCsg (13.0 g/day). The salt intake calculated from UNaCuncor or UNaCsg fell within the normal range for daily salt consumption (5–15 g) but was much lower than the consumption estimated from the salt intake questionnaire survey. However, it still exceeds the WHO-recommended intake of 5 g/day (WHO, 2012) and is slightly higher than the average global salt intake of 10.1 g/day (Powles et al., 2013). The salt intake estimated from UNaC (UNaCuncor, UNaCsg) is comparable with salt consumption reported in other countries in the region with a similar cuisine, such as Bangladesh and India: a daily salt consumption of 13.4 g (UNaC) was reported for Bangladesh (Zaman et al., 2017) and 11 g (24-h recall) for India (Johnson et al., 2019). The daily salt consumption found for Gilgit-Baltistan is higher than that reported for several other countries; for example, UNaC-based salt consumption rates of 6.5, 8.0, 8.3, 8.4 and 10 g/day have been reported for Malaysia, the UK, Singapore, Thailand and Vietnam, respectively (Amarra & Khor, 2015; Bates et al., 2016). One reason for the higher salt intake could be the consumption of salty tea, a local tradition. Whether there is evidence of hypertension or other disorders associated with salt intake was not investigated.

Average daily iodised salt intake estimated from UNaCuncor or UNaCsg in Gilgit-Baltistan would provide ≤ 54 µg/day of I to an adult, based on the median I concentration (4.2 mg/kg) in locally available iodised salts. The combined I intake from all food sources, drinking water and iodised salt was therefore at most approximately 103 µg/day, still below the RDA (150 µg/day). Thus, although an iodised salt programme is running in the area, the local population may still be at risk of I deficiency and the resulting disorders. If the I contribution from iodised salt is based on the salt intake estimated from UNaCcre, the total dietary I intake from all sources would be 124 µg/day (but see the discussion of creatinine correction below), which is still below the RDA. Even then, the population remains at risk from low Se intake, so the risk of IDD remains.
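The salt contribution follows from a unit identity: mg I per kg salt equals µg I per g salt, so µg I/day = g salt/day × mg I/kg. A minimal sketch, assuming no iodine loss during storage or cooking. It uses the upper food-plus-water estimate of 49 µg/day, so the totals land close to (not exactly on) the rounded 103 and 124 µg/day above. Names are hypothetical.

```python
RDA_I_UG_PER_DAY = 150.0
FOOD_WATER_I_MAX = 49.0  # upper estimate of I from food + drinking water (µg/day)


def iodine_from_salt_ug(salt_g_per_day: float, salt_i_mg_per_kg: float) -> float:
    """mg I per kg salt is numerically µg I per g salt."""
    return salt_g_per_day * salt_i_mg_per_kg


for label, salt in [("UNaCsg", 13.0), ("UNaCcre", 18.0)]:
    from_salt = iodine_from_salt_ug(salt, 4.2)  # median salt I concentration (mg/kg)
    total = FOOD_WATER_I_MAX + from_salt
    print(f"{label}: salt {from_salt:.1f} + food/water {FOOD_WATER_I_MAX:.0f} "
          f"= {total:.0f} µg/day ({100 * total / RDA_I_UG_PER_DAY:.0f}% of RDA)")
```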

These estimates are put in context by the urinary I aspect of the study. According to the WHO, a population median UIC of < 100 µg/L represents I deficiency, and ≥ 300 µg/L indicates excessive I intake, which can also result in health complications, including hyperthyroidism and autoimmune thyroid diseases (Zimmermann & Andersson, 2012; WHO, 2013; Sun et al., 2014). The UIC values (UICuncor, UICsg) confirm that the surveyed population has an inadequate I intake [mild deficiency (WHO, 2013)], leaving them at risk of a range of IDD. This is not surprising, given that I concentrations in most locally grown agricultural produce and drinking waters were at the lower end of global concentrations and that the population consumes locally grown food. Whether this deficiency is seasonal in Gilgit-Baltistan is unclear, given possible variations in a diet that largely depends on locally grown produce. The median UICcre values indicate sufficiency in some parts of the community (Table 5). However, there are reasons to doubt the creatinine correction.

The hydration correction with creatinine significantly influenced the rate of I deficiency or sufficiency in different age groups (Table 5), which is concerning since UICuncor and UICsg remained consistent across age groups. It is important to apply hydration correction to urinary analytes in order to compare data with other investigations, which may have examined populations with differing hydration statuses (Watts et al., 2015, 2019), which can distort comparisons between populations (Remer et al., 2006)—although WHO uses uncorrected UIC. The variation in UIC attributable to hydration status (dilution) is consistent with other investigations that creatinine correction is less useful as a corrective measure for hydration than specific gravity (Watts et al., 2019), despite the fact that specific gravity measurements made with a refractometer are prone to interference by urinary solutes such as protein, glucose and ketones (Middleton et al., 2016). Furthermore, creatinine excretion varies among demographic groups depending on protein intake, incidence of malnutrition, muscle mass, exercise, age and gender (Cockell 2015; Middleton et al., 2016; Watts et al., 2015, 2019). This would explain the differences found in our results (Table 5) between UICcre and UICuncor or UICsg. In the context of this study, corrections with creatinine may be questionable due to the prevalence of malnutrition, with low protein intake within the Pakistani population (Aziz & Hosain, 2014; GoP/UNICEF, 2018). By contrast, correction with specific gravity may be a more reliable approach as it is also considered a proxy for osmolality—the most reliable approach for urinary dilution correction (Middleton et al., 2018, 2019; Watts et al., 2019). However, there are limited data available on, or discussion about, utilising alternative correction methods to creatinine. Standard guidelines on the proper use of dilution corrections are also lacking, and would benefit from further research. 
Nevertheless, we do not recommend the use of creatinine as a corrective factor in UIC surveys.
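The two hydration corrections discussed above can be sketched as follows. The sketch makes several assumptions:

* It uses the widely applied Levine–Fahy form for specific gravity, C × (SG_ref − 1)/(SG − 1).
* It uses a ratio normalisation to a reference creatinine, C × Cr_ref/Cr.
* It takes both reference values as the sample medians.

The function names and the three samples are hypothetical, and the exact formulas and reference values used in this study may differ.

```python
from statistics import median


def sg_correct(conc, sg, sg_ref):
    """Specific-gravity adjustment (Levine-Fahy form): C * (SG_ref - 1) / (SG - 1)."""
    if sg <= 1.0:
        raise ValueError("specific gravity must exceed 1.000")
    return conc * (sg_ref - 1.0) / (sg - 1.0)


def creatinine_correct(conc, creat, creat_ref):
    """Scale a concentration to a reference creatinine: C * Cr_ref / Cr."""
    if creat <= 0:
        raise ValueError("creatinine must be positive")
    return conc * creat_ref / creat


# Hypothetical spot-urine samples: (UIC in µg/L, specific gravity, creatinine in g/L).
samples = [(60.0, 1.010, 0.6), (90.0, 1.020, 1.2), (150.0, 1.030, 0.9)]

# Assumption: the sample medians serve as the reference values.
sg_ref = median(s[1] for s in samples)
cr_ref = median(s[2] for s in samples)

for uic, sg, cr in samples:
    print(uic, round(sg_correct(uic, sg, sg_ref), 1), round(creatinine_correct(uic, cr, cr_ref), 1))
```

Both corrections can raise a dilute sample, but by different amounts. The first hypothetical sample doubles under specific gravity correction but rises only 1.5-fold under creatinine correction. The concentrated third sample falls under specific gravity correction and is unchanged by creatinine correction. A creatinine value lowered by low muscle mass or low protein intake would inflate the creatinine-corrected concentration regardless of hydration.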

The daily Se intake estimated from USeC (USeCuncor, USeCsg) was below the EAR of 45 µg/day (IOM, 2000) in a large proportion of the population (46–90%, depending on the method of evaluation). However, because UIC is a population measure, we were unable to investigate how I and Se intakes were related in any one individual. The creatinine-corrected UIC was above the WHO guideline value (Table 5). Even if that value is accepted as reliable, it should not give rise to complacency, as low Se status is likely to counteract it, adding to the picture of a community at risk of IDD.

Urinary Se excretion is one of the most effective indicators of Se intake (Rodríguez et al., 1995; Hawkes et al., 2003; Yoneyama et al., 2008). This suggests that USeC can be used to predict the Se intake of different populations (Longnecker et al., 1996; Nakamura et al., 2019). The median USeC values for school-age children and women of reproductive age were comparable to, or lower than, those reported by Phiri et al. (2020) for a Malawian population that was Se deficient based on plasma Se status. A strong correlation (r = 0.962, p < 0.001) has been found between USeC and dietary Se intake across a wide range of diets worldwide, and approximately 50–70% of ingested Se is reported to be excreted in urine (Alaejos & Romero, 1993). On this basis, 24–81% of Gilgit-Baltistan participants aged ≥ 14 years had inadequate Se intake across all measures of USeC. With the same approach, smaller proportions of participants aged 4–8 and 9–13 years were Se deficient by all measures of USeC. After hydration correction with creatinine, however, the number of participants with inadequate Se intake decreased. This creatinine-driven effect was more pronounced in participants aged 4–8 and 9–13 years than in those aged ≥ 14 years. It may reflect the variation in creatinine concentration with age, sex and body weight, which decreases the reliability of the creatinine correction and again highlights the problems of this approach to hydration correction. We cannot recommend creatinine correction for hydration.
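The intake estimate behind these percentages can be expressed as intake ≈ USeC × daily urine volume ÷ fraction of ingested Se excreted in urine. The share of individuals whose estimate falls below the EAR (the EAR cut-point approach) is then taken as the prevalence of inadequate intake. The sketch below assumes a fixed daily urine volume of 1.5 L and treats each spot sample as representative of 24-h output. The USeC values and function names are hypothetical, and this is not the authors' code.

```python
def se_intake_from_urine(usec_ug_per_l, urine_l_per_day, fraction_excreted):
    """Estimate daily Se intake (µg/day), assuming urine carries a fixed fraction of intake."""
    if not 0 < fraction_excreted <= 1:
        raise ValueError("fraction_excreted must lie in (0, 1]")
    return usec_ug_per_l * urine_l_per_day / fraction_excreted


def proportion_below_ear(usec_values, urine_l_per_day, fraction_excreted, ear):
    """EAR cut-point approach: share of individuals whose estimated intake is below the EAR."""
    intakes = [se_intake_from_urine(c, urine_l_per_day, fraction_excreted) for c in usec_values]
    return sum(intake < ear for intake in intakes) / len(intakes)


# Hypothetical USeC values (µg/L) for adults; 1.5 L urine per day is an assumption.
usec = [8.0, 12.0, 15.0, 20.0, 25.0, 30.0]

# Range of urinary excretion quoted by Alaejos & Romero (1993).
for fraction in (0.5, 0.7):
    share = proportion_below_ear(usec, 1.5, fraction, ear=45.0)
    print(f"fraction excreted {fraction}: {share:.0%} below the EAR")
```

With these invented values, raising the assumed excreted fraction from 0.5 to 0.7 lowers every intake estimate. The share below the EAR rises from one-third to two-thirds, which helps explain why the ranges reported above are so wide.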

USeC reflects Se intake, and approximately 40–60% of Se intake is excreted in urine (EFSA NDA Panel, 2014). For the current study, this means that approximately 32–54% of participants are at risk of Se deficiency based on USeCuncor and USeCsg, but only 7–30% when USeCcre is used. A study of daily Se intake in a Japanese population based on 24-h urinary Se reported that 73% of ingested Se was excreted via urine in men and 77% in women (Nakamura et al., 2019; Yoneyama et al., 2008). Suppose the urine samples collected in our study are equivalent to 24-h urine samples. Then the mean Se intake based on USeCuncor and USeCsg would be below the EAR (45 µg/day) for males and females in all age groups ≥ 14 years. Using USeCuncor and USeCsg, participants aged 4–8 years had sufficient Se intake, and those aged 9–13 years had an intake slightly below their EAR (35 µg/day). The intake estimated from USeCcre met the EAR for all age groups. As noted earlier, however, hydration correction with creatinine may not be robust.
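The literature values for urinary excretion of Se differ: 40–60%, 50–70% and 73–77%. An intake estimate is therefore better treated as a range. The sketch below converts a group mean USeC and an assumed urine volume into an intake range for each set of fractions. It then compares each range with the IOM (2000) EARs for the age groups used here: 23, 35 and 45 µg/day for 4–8, 9–13 and ≥ 14 years. The higher EARs for pregnancy and lactation are ignored. The function names and example inputs are hypothetical.

```python
# IOM (2000) Estimated Average Requirements for Se (µg/day) for the age groups
# used in this study; the higher EARs for pregnancy and lactation are ignored.
SE_EAR = {"4-8": 23.0, "9-13": 35.0, ">=14": 45.0}

# Urinary excretion fractions (low, high) quoted in the text.
FRACTIONS = {
    "EFSA NDA Panel (2014)": (0.40, 0.60),
    "Alaejos & Romero (1993)": (0.50, 0.70),
    "Japanese 24-h urine": (0.73, 0.77),
}


def intake_range(usec_ug_per_l, urine_l_per_day, fractions):
    """Return (low, high) Se intake in µg/day for a (low, high) excreted fraction."""
    low_f, high_f = fractions
    excreted = usec_ug_per_l * urine_l_per_day
    # A larger excreted fraction implies a smaller intake for the same urinary output.
    return excreted / high_f, excreted / low_f


def ear_status(intake_low, intake_high, ear):
    """Classify an intake range against an EAR."""
    if intake_high < ear:
        return "below EAR"
    if intake_low >= ear:
        return "meets EAR"
    return "uncertain"


# Hypothetical adult group: mean USeC 14 µg/L and an assumed 1.5 L urine per day.
for source, fractions in FRACTIONS.items():
    low, high = intake_range(14.0, 1.5, fractions)
    print(f"{source}: {low:.1f}-{high:.1f} µg/day -> {ear_status(low, high, SE_EAR['>=14'])}")
```

With these inputs, the EFSA-based range straddles the adult EAR, whereas the other two ranges fall wholly below it. Conclusions about a group can therefore depend on which excretion fraction is adopted.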

We conclude that Se intake in the majority of the Gilgit population appears to be low. However, further research in Gilgit is required to quantify Se status and daily Se intake from the consumption of different food items. It should also compare intake estimates derived from blood plasma Se with those derived from urinary Se. Currently, there are no accepted thresholds for assessing the severity of Se deficiency from USeC. If such thresholds can be established, USeC could provide an effective, non-invasive and low-cost alternative to blood plasma assays.

No correlation (p > 0.05) was found between uncorrected or corrected UIC and USeC. This conflicts with the findings of Karim (2018), who found a correlation between UICuncor and USeCuncor in spot urine (n = 410) in the Kurdistan region of Iraq. It also conflicts with the correlation between UICuncor and USeCuncor in both spot and 24-h urine (n = 62) reported for a New Zealand population (Thomson et al., 1996). The lack of correlation between UIC and USeC in our study may indicate that these essential nutrients are supplied by different food sources in northern Pakistan.
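The text does not state which correlation test was used. A rank-based (Spearman) coefficient is a common choice for skewed urinary data, so the sketch below assumes it. It uses only the Python standard library, assigns tied values their average rank and operates on hypothetical paired values. In practice a p-value would come from a library routine such as `scipy.stats.spearmanr`, which is not reproduced here.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need paired samples of length >= 3")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined when all values are tied")
    return cov / (sx * sy)


# Hypothetical paired spot-urine values: UIC and USeC in µg/L.
uic = [45, 78, 120, 60, 95, 150, 30, 88]
usec = [12, 9, 15, 20, 8, 11, 14, 10]
print(round(spearman_rho(uic, usec), 3))
```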

This study is a snapshot in time. That is not ideal for describing I deficiency in a population, because individual UIC can vary from day to day and season to season as diet changes. The 2011 national nutritional survey of Pakistan found that 76% of women from Gilgit-Baltistan (n = 34) and 48% of women nationwide (n = 1460) were I deficient (GoP, 2011). In 2018, the national nutrition survey reported that 18% of women of reproductive age in Pakistan were I deficient (GoP/UNICEF, 2018). Our study suggests that I deficiency in this area is more common than the 2018 national figure indicates. We included more participants from a more geographically confined area than the national nutrition survey, so our findings are more representative locally. Our findings on the I status of women of reproductive age in Gilgit-Baltistan (median UIC < 100 µg/L) are concerning, particularly given the adverse effects of such deficiency on foetal brain development (Toloza et al., 2020). Furthermore, optimal I nutrition in pregnancy corresponds to a median UIC of 150–249 µg/L, well above the median found in these women. Children born to women entering pregnancy in Gilgit-Baltistan are therefore at risk of reduced cognition (Velasco et al., 2018).

No correlation was observed between salt intake and I status at household or individual level (p > 0.05). The reason for this is unknown. It implies that iodised salt may not be the main source of I in the study population, a disappointing result given the national salt iodisation programme. If iodised salt were the primary source of I for individuals, a relationship between UNaC and UIC would be expected, because up to 90% of ingested Na and I is excreted in urine. Such a relationship has been found in a Kurdish population in which iodised salt was the main source of I (Karim, 2018).
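If iodised salt were the main I source, the I it supplies could be estimated directly from salt intake. The sketch below shows this arithmetic under the following assumptions:

* Salt intake is derived from urinary Na using the molar-mass ratio of NaCl to Na (58.44/22.99) and an assumed 90% urinary excretion of Na.
* The I supplied equals salt intake in g/day multiplied by salt iodine in mg/kg, which is numerically equal to µg/g.
* An optional loss fraction for storage and cooking can reduce that amount; this parameter is hypothetical.

The function names are our own.

```python
NA_TO_NACL = 58.44 / 22.99  # g NaCl per g Na, from the molar masses
EAR_IODINE_UG = 95.0        # EAR for iodine (µg/day) used in this study


def salt_intake_g(urinary_na_mg_per_day, fraction_excreted=0.9):
    """Estimate salt intake (g/day) from daily urinary Na (mg), assuming a fixed excreted fraction."""
    return urinary_na_mg_per_day / fraction_excreted * NA_TO_NACL / 1000.0


def iodine_from_salt_ug(salt_g_per_day, salt_iodine_mg_per_kg, loss_fraction=0.0):
    """Iodine supplied by iodised salt (µg/day); mg/kg is numerically equal to µg/g."""
    return salt_g_per_day * salt_iodine_mg_per_kg * (1.0 - loss_fraction)


# Mean salt intake and median salt iodine reported in the Abstract.
supplied = iodine_from_salt_ug(13.0, 4.16)
print(f"{supplied:.1f} µg/day, {supplied / EAR_IODINE_UG:.0%} of the EAR")
```

Combining the population mean salt intake (13.0 g/day) with the median salt iodine (4.16 mg/kg) gives roughly 54 µg/day, about 57% of the 95 µg/day EAR. This figure assumes that all salt consumed is iodised and ignores cooking and storage losses. It also mixes a mean with a median, so it is a rough illustration only.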

The water and crop data indicate a limited supply of both I and Se from the local environment in Gilgit-Baltistan. However, there are no recognised standards for defining an I-deficient environment, whether for water, plants or soil. Environments may be classed as deficient simply because IDD is present locally, or because the concentrations in water, crops or soils are low compared with other locations. An I concentration in water below 5–10 µg/L may indicate deficiency. Very few situations, though, have been investigated using the full ‘source-pathway-receptor’ approach to demonstrate the deficiency pathway. It is plausible, perhaps likely, that water I concentrations below 5–10 µg/L contribute to local IDD. This needs further proof, since other factors (goitrogens) may also antagonise the local I supply. On the one hand, there is clear evidence that increasing I intake decreases the incidence and prevalence of many IDD. On the other, there are many reports of local goitrogens (antithyroid agents) affecting the incidence and prevalence of IDD (Chandra et al., 2016; Eastman & Zimmermann, 2018; Gaitan, 1989; Langer & Greer, 1977; Mondal et al., 2016). In Gilgit-Baltistan, both I and Se intakes are low. Selenium is necessary for the proper functioning of the deiodinase enzymes, and low Se intake impairs thyroid hormone function. Se itself is not goitrogenic, but low Se intake has a goitrogenic effect. The aetiology of IDD in locations such as Gilgit-Baltistan is therefore likely to be more complex than a low environmental I concentration can confirm, and the same applies to other areas with low Se concentrations or known goitrogens. Even a low (or normal) median UIC cannot confirm it. This is an area ripe for research, since most communities with IDD are now only mildly or moderately I deficient. Any antagonism of thyroid hormone synthesis and action may have a greater adverse effect on health than the UIC would predict. 
If such causes are not investigated, they will be missed, making it difficult to devise effective preventive programmes.
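To put the 5–10 µg/L indicative range in context, the sketch below converts water I concentrations into daily intake and a share of the 95 µg/day EAR. It assumes consumption of 2 L of drinking water per day, which is an assumption rather than a measured value. It uses the water I quartiles reported in the Abstract (0.12, 0.24 and 0.72 µg/L), and the function name is hypothetical.

```python
EAR_IODINE_UG = 95.0                # EAR for iodine (µg/day) used in this study
THRESHOLDS_UG_PER_L = (5.0, 10.0)   # indicative deficiency range quoted in the text


def water_iodine_share(conc_ug_per_l, litres_per_day=2.0):
    """Daily iodine from drinking water (µg/day) and its share of the EAR.

    The default of 2 L/day is an assumed consumption, not a measured value.
    """
    supplied = conc_ug_per_l * litres_per_day
    return supplied, supplied / EAR_IODINE_UG


# Lower quartile, median and upper quartile of water iodine from the Abstract.
for label, conc in [("Q1", 0.12), ("median", 0.24), ("Q3", 0.72)]:
    supplied, share = water_iodine_share(conc)
    below = [t for t in THRESHOLDS_UG_PER_L if conc < t]
    print(f"{label}: {supplied:.2f} µg/day ({share:.1%} of EAR); below thresholds {below}")
```

Even at the upper quartile, drinking water would supply under 2 µg/day, about 1.5% of the EAR. Under the same assumption, water at the 5 µg/L lower bound of the indicative range would supply 10 µg/day.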

Strengths of the current study include the analysis of the I and Se content of dietary sources alongside related outcome measures at the population (I) and individual (Se) levels. The study had a large sample (n = 450) drawn from across the community, whereas previous studies in Gilgit-Baltistan had fewer than 40 participants (GoP, 2011). We also compared different approaches to correcting for hydration status in this large dataset, which raises further questions about the utility of creatinine correction. Weaknesses include the small number of samples in some food classes (Table 2) and the lack of seasonal repetition. Nor were we able to compare plasma Se with urinary Se concentrations to demonstrate robustly that our USeC results are reliable. Nevertheless, we do not believe that these weaknesses undermine the findings of the study. Despite these caveats, the Gilgit-Baltistan population remains at risk of I deficiency and the resulting range of IDD. There is an urgent need to improve the I nutrition of the population beyond what has already been achieved.

Conclusions

In summary, this study suggests that the Gilgit-Baltistan population is I deficient and at continuing risk of IDD. This status is compounded by Se deficiency, which can act as a goitrogenic factor and further decreases the body's ability to utilise the low I supply from the local diet. There is some uncertainty regarding hydration correction of UIC using creatinine in developing countries, and correction with specific gravity may be more robust. Further research is required to establish guidelines for hydration correction of urinary analytes.

The problem of I deficiency could be addressed if the local or regional government ensured adequate iodisation of salt. It should also establish an annual UIC monitoring programme so that population iodine intake can be checked regularly. Furthermore, an awareness programme supported by health professionals could encourage a more varied diet among women of childbearing age, which would help to reduce the incidence of iodine deficiency. To address Se deficiency, biofortification of wheat with selenate-enriched fertilisers would probably be the best solution. Wheat is the primary staple carbohydrate crop in Gilgit-Baltistan and an essential and consistent item in all daily meals.

More work is needed to define the role of antithyroid substances (goitrogens) in I-deficient and borderline sufficient areas. Similarly, more work is needed to understand what environmental I concentrations are needed to supply adequate dietary I to the local community. It is not currently possible to infer population deficiency from any indices of ‘environmental iodine’. The use of the source-pathway-receptor approach would help achieve this objective.

Availability of data and material

The authors confirm that summary data supporting the findings of this study are available within the article. Detailed data are available from the corresponding author upon request.

Code availability

Not applicable.

References

Ahmad, et al. (2021). Multiple geochemical factors may cause iodine and selenium deficiency in Gilgit-Baltistan, Pakistan.

Alaejos, M., & Romero, C. (1993). Urinary selenium concentrations. Clinical Chemistry, 39, 2040–2052.

Al-Ahmary, K. M. (2009). Selenium content in selected foods from the Saudi Arabia market and estimation of the daily intake. Arabian Journal of Chemistry, 2, 95–99.

Amarra, M. S., & Khor, G. L. (2015). Sodium consumption in Southeast Asia: An updated review of intake levels and dietary sources in six countries. In A. Bendich & R. J. Deckelbaum (Eds.), Preventive nutrition. Springer.

Andersson, M., de Benoist, B., Darnton-Hill, I., & Delange, F. (2007). Iodine deficiency in Europe: A continuing public health problem. https://www.who.int/nutrition/publications/VMNIS_Iodine_deficiency_in_Europe.pdf. Accessed 20 August 2020.

Anke, M., Groppel, B., & Bauch, K. H. (1993). Iodine in the food chain. In F. Delange, J. T. Dunn, & D. Glinoer (Eds.), Iodine deficiency in Europe. Springer.

Aquaron, R., Zarrouck, K., Jarari, M. E., Ababou, R., Talibi, A., & Ardissone, J. P. (1993). Endemic goiter in Morocco (Skoura-Toundoute areas in the high atlas). Journal of Endocrinological Investigation, 16, 9–14.

Arthur, D. (1972). Selenium content of Canadian foods. Canadian Institute of Food Science and Technology Journal, 5, 165–169.

Arthur, J. R., Beckett, G. J., & Mitchell, J. H. (1999). The interactions between selenium and iodine deficiencies in man and animals. Nutrition Research Reviews, 12, 55–73.

Askar, A., & Bielig, H. J. (1983). Selenium content of food consumed by Egyptians. Food Chemistry, 10, 231–234.

Aziz, S., & Hosain, K. (2014). Carbohydrate (CHO), protein and fat intake of healthy Pakistani school children in a 24 hour period. Journal of Pakistan Medical Association, 6, 1255–1259.

Barclay, M. N. I., Macpherson, A., & Dixon, J. (1995). Selenium content of a range of UK foods. Journal of Food Composition and Analysis, 8, 307–318.

Barr, D. B., Wilder, L. C., Caudill, S. P., Gonzalez, A. J., Needham, L. L., & Pirkle, J. L. (2005). Urinary creatinine concentrations in the U.S. population: Implications for urinary biologic monitoring measurements. Environmental Health Perspectives, 113, 192–200.

Bates, B., Cox, L., Maplethorpe, N., Mazumder, A., Nicholson, S., Page, P., Prentice, A., Rooney, K., Ziauddeen, N., & Swan, G. (2016). National diet and nutrition survey: Assessment of dietary sodium. Adults (19 to 64 years) in England, 2014. Public Health England publications gateway number: 2015756. London, UK.

Bath, S. C., Steer, C. D., Golding, J., Emmett, P., & Rayman, M. P. (2013). Effect of inadequate iodine status in UK pregnant women on cognitive outcomes in their children: Results from the Avon longitudinal study of parents and children (ALSPAC). The Lancet, 382, 331–337.

Bath, S. C., Walter, A., Taylor, A., Wright, J., & Rayman, M. P. (2014). Iodine deficiency in pregnant women living in the South East of the UK: The influence of diet and nutritional supplements on iodine status. British Journal of Nutrition, 111, 1622–1631.

Biban, B. G., & Lichiardopol, C. (2017). Iodine deficiency, still a global problem? Current Health Sciences Journal, 43, 103–111.

Biber, F. Z., Ünak, P., & Yurt, F. (2002). Stability of iodine content in iodized salt. Isotopes in Environmental and Health Studies, 38, 87–93.

Bratakos, M. S., Zafiropoulos, T. F., Siskos, P. A., & Ioannou, P. V. (1987). Selenium in foods produced and consumed in Greece. Journal of Food Science, 52, 817–822.

Cappuccio, F. P. (2013). Cardiovascular and other effects of salt consumption. Kidney International Supplements, 3, 312–315.

Chandra, A. K., Debnath, A., Tripathy, S., Goswami, H., Mondal, C., Chakraborty, A., & Pearce, E. N. (2016). Environmental factors other than iodine deficiency in the pathogenesis of endemic goiter in the basin of river Ganga and Bay of Bengal, India. BLDE University Journal of Health Sciences, 1, 33–38.

Chapman, J. A., Grant, I. S., Taylor, G., Mahmud, K., Mulk, S. U., & Shahid, M. A. (1972). Endemic goitre in the Gilgit Agency, West Pakistan. Philosophical Transactions of the Royal Society B: Biological Sciences, 263, 459–490.

Chen, S. L., Dahl, C., Meyer, H. E., & Madar, A. A. (2018). Estimation of salt intake assessed by 24-hour urinary sodium excretion among Somali adults in Oslo, Norway. Nutrients, 10, 900.

Cockell, K. A. (2015). Measuring iodine status in diverse populations. British Journal of Nutrition, 114 (4), 499–500.

Cogswell, M. E., Loria, C. M., Terry, A. L., Zhao, L., Wang, C.-Y., Chen, T.-C., Wright, J. D., Pfeiffer, C. M., Merritt, R., Moy, C. S., & Appel, L. J. (2018). Estimated 24-hour urinary sodium and potassium excretion in US adults. JAMA, 319, 1209–1220.

de Benoist, B., Andersson, M., Egli, I., Takkouche, B., & Allen, H. (2004). Iodine status worldwide: WHO global database on iodine deficiency. https://apps.who.int/iris/bitstream/handle/10665/43010/9241592001.pdf. Accessed 17 June 2020.

De Temmerman, L., Waegeneers, N., Thiry, C., Du Laing, G., Tack, F., & Ruttens, A. (2014). Selenium content of Belgian cultivated soils and its uptake by field crops and vegetables. Science of the Total Environment, 468, 77–82.

Díaz-Alarcón, J. P., Navarro-Alarcón, M., López-García, S. H., & López-Martínez, M. C. (1996). Determination of selenium in cereals, legumes and dry fruits from southeastern Spain for calculation of daily dietary intake. Science of the Total Environment, 184, 183–189.

Eastman, C. J., & Zimmermann, M. B. (2018). The iodine deficiency disorders. https://www.ncbi.nlm.nih.gov/books/NBK285556/. Accessed 7 June 2020.

Eckhoff, K. M., & Maage, A. (1997). Iodine content in fish and other food products from East Africa analyzed by ICP-MS. Journal of Food Composition and Analysis, 10, 270–282.

EFSA NDA Panel (European Food Safety Authority Panel on Dietetic Products, Nutrition and Allergies). (2014). Scientific opinion on dietary reference values for selenium. EFSA Journal, 12, 3846.

Fairweather-Tait, S. J., Bao, Y., Broadley, M. R., Collings, R., Ford, D., Hesketh, J. E., & Hurst, R. (2011). Selenium in human health and disease. Antioxidants and Redox Signaling, 14, 1337–1383.

Fordyce, F. (2003). Database of the iodine content of food and diets populated with data from published literature. British Geological Survey report no. CR/03/84N. Nottingham, UK. http://nora.nerc.ac.uk/id/eprint/8354/1/CR03084N.pdf. Accessed 11 January 2020.

Gaitan, E. (1989). Environmental goitrogenesis. CRC Press.

Gao, M., Chen, W., Dong, S., Chen, Y., Zhang, Q., Sun, H., Zhang, Y., Wu, W., Pan, Z., Gao, S., & Lin, L. (2021). Assessing the impact of drinking water iodine concentrations on the iodine intake of Chinese pregnant women living in areas with restricted iodized salt supply. European Journal of Nutrition, 60, 1023–1030.

Gizak, M., Gorstein, J., & Andersson, M. (2017). Epidemiology of iodine deficiency. In E. N. Pearce (Ed.), Iodine deficiency disorders and their elimination. Springer.

GoP (Government of Pakistan). (2011). National nutritional survey 2011. https://pndajk.gov.pk/uploadfiles/downloads/NNS%20Survey.pdf. Accessed 11 March 2020.

GoP/UNICEF (Government of Pakistan/United Nations International Children's Emergency Fund). (2018). National nutritional survey 2018. https://www.unicef.org/pakistan/reports/national-nutrition-survey-2018-full-report-3-volumes-key-findings-report. Accessed December 2019.

Haldimann, M., Alt, A., Blanc, A., & Blondeau, K. (2005). Iodine content of food groups. Journal of Food Composition and Analysis, 18, 461–471.

Haug, A., Graham, R. D., Christophersen, O. A., & Lyons, G. H. (2007). How to use the world’s scarce selenium resources efficiently to increase the selenium concentration in food. Microbial Ecology in Health and Disease, 19, 209–228.

Hawkes, W. C., Alkan, F. Z., & Oehler, L. (2003). Absorption, distribution and excretion of selenium from beef and rice in healthy North American men. The Journal of Nutrition, 133, 3434–3442.

Hay, I., Hynes, K. L., & Burgess, J. R. (2019). Mild-to-moderate gestational iodine deficiency processing disorder. Nutrients, 11, 1974.

Hetzel, B. S. (1983). Iodine deficiency disorders (IDD) and their eradication. The Lancet, 322 (8359), 1126–1129.

Hetzel, B. S. (2000). Iodine and neuropsychological development. The Journal of Nutrition, 130, 493S-495S.

Huang, L., Crino, M., Wu, J. H. Y., Woodward, M., Barzi, F., Land, M.-A., McLean, R., Webster, J., Enkhtungalag, B., & Neal, B. (2016). Mean population salt intake estimated from 24-h urine samples and spot urine samples: A systematic review and meta-analysis. International Journal of Epidemiology, 45, 239–250.

Hussain, T. (2001). Proximate composition mineral and vitamin content of food. In Ministry of Planning and Development, Government of Pakistan (Ed.), Food composition table for Pakistan. Islamabad.

Hussein, L., & Bruggeman, J. (1999). Selenium analysis of selected Egyptian foods and estimated daily intakes among a population group. Food Chemistry, 65, 527–532.

IGN (Iodine Global Network). (2020a). Resources. 5. What happens if we don’t get enough iodine. https://www.ign.org/5-what-happens-if-we-dont-get-enough-iodine.htm. Accessed 6 November 2020.

IGN (Iodine Global Network). (2020b). The world observes global IDD prevention day. https://www.ign.org/the-world-observes-global-idd-prevention-day.htm. Accessed 7 June 2020.

IGN (Iodine Global Network). (2020c). FACT survey: Salt iodization in Pakistan needs strengthening. https://www.ign.org/newsletter/idd_aug18_pakistan.pdf. Accessed 5 October 2020.

IOM (Institute of Medicine). (2000). Dietary reference intakes for vitamin C, vitamin E, selenium, and carotenoids. Washington DC: National Academies Press. https://www.ncbi.nlm.nih.gov/books/NBK225470/.

Iqbal, S., Kazi, T. G., Bhanger, M. I., Akhtar, M., & Sarfraz, R. A. (2008). Determination of selenium content in selected Pakistani foods. International Journal of Food Science and Technology, 43, 339–345.

Jayashree, S., & Naik, R. K. (2000). Iodine losses in iodised salt following different storage methods. The Indian Journal of Pediatrics, 67, 559–561.

Jin, Y., Coad, J., Weber, J. L., Thomson, J. S., & Brough, L. (2019). Selenium Intake in iodine-deficient pregnant and breastfeeding women in New Zealand. Nutrients, 11, 69.

Johnson, C., Santos, J. A., Sparks, E., Raj, T. S., Mohan, S., Garg, V., Rogers, K., Maulik, P. K., Prabhakaran, D., Neal, B., & Webster, J. (2019). Sources of dietary salt in North and South India estimated from 24 hour dietary recall. Nutrients, 11, 318.

Johnson, C. C. (2003). The geochemistry of iodine and its application to environmental strategies for reducing the risks from iodine deficiency disorders (IDD). British Geological Survey report no. CR/03/057N. Nottingham, UK. https://www.bgs.ac.uk/research/international/DFID-KAR/CR03057N_COL.pdf. Accessed 11 July 2020.

Johnson, C. C., Strutt, M. H., Hmeurras, M., & Mounir, M. (2002). Iodine in the environment of the High Atlas Mountain, Morocco. British Geological Survey report no. CR/02/196N, Nottingham, UK. https://www.bgs.ac.uk/research/international/dfid-kar/CR02196N_col.pdf. Accessed 11 July 2020.

Jones, G. D., Droz, B., Greve, P., Gottschalk, P., Poffet, D., Mcgrath, S. P., et al. (2017). Selenium deficiency risk predicted to increase under future climate change. Proceedings of the National Academy of Sciences, 114, 2848–2853.

Kapil, U. (2007). Health consequences of iodine deficiency. Sultan Qaboos University Medical Journal, 7, 267–272.

Karim, A. B. (2018). Selenium and iodine status in the Kurdistan region of Iraq. PhD Thesis. University of Nottingham.

Khattak, R. M., Khattak, M. N. K., Ittermann, T., & Völzke, H. (2017). Factors affecting sustainable iodine deficiency elimination in Pakistan: A global perspective. Journal of Epidemiology, 27, 249–257.

Kopp, W. (2004). Nutrition, evolution and thyroid hormone levels—A link to iodine deficiency disorders? Medical Hypotheses, 62, 871–875.

Langer, P., & Greer, M. A. (1977). Antithyroid substances and naturally occurring goitrogens. Karger.

Longnecker, M. P., Stram, D. O., Taylor, P. R., Levander, O. A., Howe, M., Veillon, C., McAdam, P. A., Patterson, K. Y., Holden, J. M., Morris, J. S., Swanson, C. A., & Willett, W. C. (1996). Use of selenium concentration in whole blood, serum, toenails, or urine as a surrogate measure of selenium intake. Epidemiology, 7, 384–390.

Longvah, T., & Deosthale, Y. G. (1998). Iodine content of commonly consumed foods and water from the goitre-endemic northeast region of India. Food Chemistry, 61, 327–331.

Mahesh, D. L., Deosthale, Y. G., & Rao, B. S. N. (1992). A sensitive kinetic assay for the determination of iodine in foodstuffs. Food Chemistry, 43, 51–56.

Mangan, B. N., Lashari, M. S., Hui, L., Ali, M., Baloch, A. W., & Song, W. (2016). Comparative analysis of the selenium concentration in grains of wheat and barley species. Pakistan Journal of Botany, 48, 2289–2296.

McCarrison, R. (1909). Observations on endemic cretinism in the Chitral and Gilgit valleys. Proceedings of the Royal Society of Medicine, 1, 36.

Mclean, R. M. (2014). Measuring population sodium intake: A review of methods. Nutrients, 6, 4651–4662.

Medeiros-Neto, G., & Rubio, I. G. S. (2016). Iodine-deficiency disorders. In J. L. Jameson, L. J. De Groot, D. M. De Kretser, L. C. Giudice, A. B. Grossman, S. Melmed, J. T. Potts, & G. C. Weir (Eds.), Endocrinology: Adult and pediatric. W. B. Saunders.

Medlineplus. (2019). Urine 24-hour volume. https://medlineplus.gov/ency/article/003425.htm. Accessed 25 March 2020.

Middleton, D. R., McCormack, V. A., Munishi, M. O., Menya, D., Marriott, A. L., Hamilton, E. M., Mwasamwaja, A. O., Mmbaga, B. T., Samoei, D., Osano, O., & Schüz, J. (2018). Intra-household agreement of urinary elemental concentrations in Tanzania and Kenya: Potential surrogates in case–control studies. Journal of Exposure Science and Environmental Epidemiology, 29, 335–343.

Middleton, D. R. S., Watts, M. J., Lark, R. M., Milne, C. J., & Polya, D. A. (2016). Assessing urinary flow rate, creatinine, osmolality and other hydration adjustment methods for urinary biomonitoring using NHANES arsenic, iodine, lead and cadmium data. Environmental Health, 15, 68.

Middleton, D. R. S., Watts, M. J., & Polya, D. A. (2019). A comparative assessment of dilution correction methods for spot urinary analyte concentrations in a UK population exposed to arsenic in drinking water. Environment International, 130, 104721.

Mondal, C., Sinha, S., Chakraborty, A., & Chandra, A. K. (2016). Studies on goitrogenic/antithyroidal potentiality of thiocyanate, catechin and after concomitant exposure of thiocyanate-catechin. International Journal of Pharmaceutical and Clinical Research, 8, 108–116.

Nakamura, Y., Fukushima, M., Hoshi, S., Chatt, A., & Sakata, T. (2019). Estimation of daily selenium intake by 3- to 5-year-old Japanese children based on selenium excretion in 24-h urine samples. Journal of Nutritional Science, 8, e24.

Navarro-Alarcon, M., & Cabrera-Vique, C. (2008). Selenium in food and the human body: A review. Science of the Total Environment, 400, 115–141.

Obican, S. G., Jahnke, G. D., Soldin, O. P., & Scialli, A. R. (2012). Teratology public affairs committee position paper: Iodine deficiency in pregnancy. Birth Defects Research Part A: Clinical and Molecular Teratology, 94, 677–682.

Olson, O. E., & Palmer, I. S. (1984). Selenium in foods purchased or produced in South Dakota. Journal of Food Science, 49, 446–452.

Partearroyo, T., Samaniego-Vaesken, M., Ruiz, E., Aranceta-Bartrina, J., Gil, Á., González-Gross, M., Ortega, R. M., Serra-Majem, L., & Varela-Moreiras, G. (2019). Sodium intake from foods exceeds recommended limits in the Spanish population: The ANIBES study. Nutrients, 11, 2451.

Phiri, F. P., Ander, E. L., Lark, R. M., Bailey, E. H., Chilima, B., Gondwe, J., et al. (2020). Urine selenium concentration is a useful biomarker for assessing population level selenium status. Environment International, 134, 105218.

Powles, J., Fahimi, S., Micha, R., Khatibzadeh, S., Shi, P., Ezzati, M., Engell, R. E., Lim, S. S., Danaei, G., & Mozaffarian, D. (2013). Global, regional and national sodium intakes in 1990 and 2010: A systematic analysis of 24 h urinary sodium excretion and dietary surveys worldwide. BMJ Open, 3, e003733.

Rayman, M. P. (2000). The importance of selenium to human health. The Lancet, 356, 233–241.

Rayman, M. P. (2008). Food-chain selenium and human health: Emphasis on intake. British Journal of Nutrition, 100, 254–268.

Rayman, M. P. (2012). Selenium and human health. The Lancet, 379, 1256–1268.

Remer, T., Fonteyn, N., Alexy, U., & Berkemeyer, S. (2006). Longitudinal examination of 24-h urinary iodine excretion in schoolchildren as a sensitive, hydration status–independent research tool for studying iodine status. The American Journal of Clinical Nutrition, 83, 639–646.

Rodríguez, E. M., Sanz Alaejos, M. T., & Díaz Romero, C. (1995). Urinary selenium status of healthy people. Clinical Chemistry and Laboratory Medicine, 33, 127–133.

Salau, B. A., Ajani, E. O., Soladoye, M. O., & Bisuga, N. A. (2011). Evaluation of iodine content of some selected fruits and vegetables in Nigeria. African Journal of Biotechnology, 10, 960–964.

Schomburg, L., & Köhrle, J. (2008). On the importance of selenium and iodine metabolism for thyroid hormone biosynthesis and human health. Molecular Nutrition and Food Research, 52, 1235–1246.

Shah, N., Uppal, A. M., & Ahmed, H. (2014). Impact of a school-based intervention to address iodine deficiency disorder in adolescent girls in Gilgit, Pakistan. https://ecommons.aku.edu/aku_symposium/2014_nhsrs/PP/71/. Accessed 15 November 2019.

Shinonaga, T., Gerzabek, M. H., Strebl, F., & Muramatsu, Y. (2001). Transfer of iodine from soil to cereal grains in agricultural areas of Austria. Science of the Total Environment, 267, 33–40.

Shreenath, A. P., & Dooley, J. (2019). Selenium deficiency. https://www.ncbi.nlm.nih.gov/books/NBK482260/. Accessed 10 April 2020.

Simonoff, M., Hamon, C., Moretto, P., Llabador, Y., & Simonoff, G. (1988). Selenium in foods in France. Journal of Food Composition and Analysis, 1, 295–302.

Singh, V., & Garg, A. N. (2006). Availability of essential trace elements in Indian cereals, vegetables and spices using INAA and the contribution of spices to daily dietary intake. Food Chemistry, 94, 81–89.

Stewart, A. (1990). Drifting continents and endemic goitre in northern Pakistan. British Medical Journal, 300, 1507–1512.

Sun, X., Shan, Z., & Teng, W. (2014). Effects of increased iodine intake on thyroid disorders. Endocrinology and Metabolism, 29, 240–247.

Thomson, C. D., Smith, T. E., Butler, K. A., & Packer, M. A. (1996). An evaluation of urinary measures of iodine and selenium status. Journal of Trace Elements in Medicine and Biology, 10, 214–222.

Toloza, F. J. K., Motahari, H., & Maraka, S. (2020). Consequences of severe iodine deficiency in pregnancy: Evidence in humans. Frontiers in Endocrinology, 11, 409.

USDA (United States Department of Agriculture). (2019). Food data central. https://fdc.nal.usda.gov/. Accessed 17 August 2019.

Velasco, I., Bath, S. C., & Rayman, M. P. (2018). Iodine as essential nutrient during the first 1000 days of life. Nutrients, 10, 290.

Ventura, M., Melo, M., & Carrilho, F. (2017). Selenium and thyroid disease: From pathophysiology to treatment. International Journal of Endocrinology, 2017, 1297658.

Waheed, S., Zaidi, J. H., Ahmad, S., & Saleem, M. (2002). Instrumental neutron activation analysis of 23 individual food articles from a high altitude region. Journal of Radioanalytical and Nuclear Chemistry, 254, 597–605.

Watts, M. J., Joy, E. J. M., Young, S. D., Broadley, M. R., Chilimba, A. D. C., Gibson, R. S., Siyame, E. W. P., Kalimbira, A. A., Chilima, B., & Ander, E. L. (2015). Iodine source apportionment in the Malawian diet. Scientific Reports, 5, 15251.

Watts, M. J., Middleton, D. R. S., Marriott, A., Humphrey, O. S., Hamilton, E., Mccormack, V., et al. (2019). Iodine status in western Kenya: A community-based cross-sectional survey of urinary and drinking water iodine concentrations. Environmental Geochemistry and Health, 42, 1141–1151.

WHO/UNICEF/ICCIDD (World Health Organisation/United Nations International Children's Emergency Fund/International Council for Control of Iodine Deficiency Disorders). (2007). Assessment of iodine deficiency disorders and monitoring their elimination: A guide for programme managers. https://www.who.int/nutrition/publications/micronutrients/iodine_deficiency/9789241595827/en/. Accessed July 2020.

WHO (World Health Organisation). (2007). Reducing salt intake in populations. https://www.who.int/dietphysicalactivity/reducingsaltintake_EN.pdf. Accessed September 2020.

WHO (World Health Organisation). (2008). Guidelines for drinking-water quality (3rd ed.). Geneva: WHO. https://www.who.int/water_sanitation_health/publications/gdwq3rev/en/. Accessed 15 March 2020.

WHO (World Health Organisation). (2011). Selenium in drinking-water: Background document for development of WHO guidelines for drinking-water quality. Geneva: WHO. https://apps.who.int/iris/handle/10665/75424. Accessed 15 March 2020.

WHO (World Health Organisation). (2012). Guideline: Sodium intake for adults and children. https://www.who.int/publications/i/item/9789241504836. Accessed 8 February 2020.

WHO (World Health Organisation). (2013). Urinary iodine concentrations for determining iodine status in populations. Vitamin and Mineral Nutrition Information System. https://apps.who.int/iris/bitstream/handle/10665/85972/WHO_NMH_NHD_EPG_13.1_eng.pdf?ua=1. Accessed 10 March 2020.

WHO (World Health Organisation). (2016). Be smart drink water: A guide for school principals in restricting the sale and marketing of sugary drinks in and around schools. Manila: WHO. http://iris.wpro.who.int/handle/10665.1/13218. Accessed 3 February 2020.

Wu, Q., Rayman, M. P., Lv, H., Schomburg, L., Cui, B., Gao, C., Chen, P., Zhuang, G., Zhang, Z., Peng, X., & Li, H. (2015). Low population selenium status is associated with increased prevalence of thyroid disease. The Journal of Clinical Endocrinology and Metabolism, 100, 4037–4047.

Yoneyama, S., Miura, K., Itai, K., Yoshita, K., Nakagawa, H., Shimmura, T., Okayama, A., Sakata, K., Saitoh, S., Ueshima, H., & Elliott, P. (2008). Dietary intake and urinary excretion of selenium in the Japanese adult population: The INTERMAP Study Japan. European Journal of Clinical Nutrition, 62, 1187–1193.

Zaman, M. M., Choudhury, S. R., Ahmed, J., Khandaker, R. K., Rouf, M. A., & Malik, A. (2017). Salt intake in an adult population of Bangladesh. Global Heart, 12, 265–266.

Zia, M. H., Watts, M. J., Gardner, A., & Chenery, S. R. (2014). Iodine status of soils, grain crops, and irrigation waters in Pakistan. Environmental Earth Sciences, 73, 7995–8008.

Zimmermann, M. B. (2009). Symposium on ‘Geographical and geological influences on nutrition’: Iodine deficiency in industrialised countries. Proceedings of the Nutrition Society, 69, 133–143.

Zimmermann, M. B., & Andersson, M. (2012). Assessment of iodine nutrition in populations: Past, present, and future. Nutrition Reviews, 70, 553–570.

Zimmermann, M. B., Jooste, P. L., & Pandav, C. S. (2008). Iodine-deficiency disorders. The Lancet, 372, 1251–1262.

Acknowledgements

The University of Nottingham provided financial support for this study. We would like to thank Dr Muhammad Jaffar Khan of Khyber Medical University and Abaseen Foundation for assistance in transporting urine samples from Pakistan to the University of Nottingham. Thanks also to Simon Welham and Lisa Coneyworth at the School of Biosciences, University of Nottingham for creatinine measurements. AGS acknowledges the continuing support of Khaliq O Malik.

Funding

Financial support for this study was provided by the University of Nottingham.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmad, S., Bailey, E.H., Arshad, M. et al. Environmental and human iodine and selenium status: lessons from Gilgit-Baltistan, North-East Pakistan. Environ Geochem Health 43, 4665–4686 (2021). https://doi.org/10.1007/s10653-021-00943-w

DOI: https://doi.org/10.1007/s10653-021-00943-w