Abstract

Digital advances in the learning space have changed the contours of student engagement as well as how it is measured. Learning management systems and other learning technologies now provide information about student behaviors with course materials in the form of learning analytics. In the context of a large, integrated and interdisciplinary Core curriculum course in a graduate school of public health, this study undertook a pilot randomized controlled trial testing the effect of providing a “behavioral nudge” in the form of digital images containing specific information derived from learning analytics about past student behaviors and performance. The study found that student engagement varied significantly from week to week, but nudges linking coursework completion to assessment grade performance did not significantly change student engagement. While the a priori hypotheses of this pilot trial were not upheld, this study yielded significant findings that can guide future efforts to increase student engagement. Future work should include a robust qualitative assessment of student motivations, testing of nudges that tap into these motivations and a richer examination of student learning behaviors over time using stochastic analyses of data from the learning management system.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Background

Student engagement, a complex multi-dimensional construct, reflects the investment of a student’s time, energy, and other resources in course content. Dimensions include behavioral engagement (i.e., observable actions that represent investment in learning, including attendance, coming to class prepared, and completing tasks) as well as the cognitive efforts that drive behavioral engagement, and a broader sense of connectedness or belonging students feel to the course and the academic institution (Trowler, 2010; Axelson & Flick 2010; Henrie et al., 2015). Fincham et al. (2019) define academic engagement as “a student’s time on task, credit accrual, and homework completion” and operationalize it through attention to analytics related to video watching, problem submissions and weeks active in the learning management system (LMS) that houses the course. Engagement is a critical construct as it has been shown to correlate with satisfaction (Rajabalee & Santally, 2021), improve course persistence (Fincham et al., 2019), and predict student success (including grades, degree completion), educational attainment, and occupational attainment (Reeve et al., 2020; Kahu & Nelson, 2018; Henrie et al., 2015).

A promising strategy to promote student engagement is the use of nudges, inexpensive, easily avoidable changes in the “choice architecture,” the environment in which people make decisions (Thaler, 2018) that seek to help people make beneficial changes in behavior without significantly changing incentives (Szaszi et al., 2018). Nudges are increasingly being used in education to influence the underlying cognitive processes that lead to a change in behaviors building toward an end goal (Damgaard & Nielsen, 2018; Weijers et al., 2020). In education, nudge approaches used to increase engagement have included reframing (focusing on gains rather than losses, or presenting performance relative to other students), adding interim deadlines, and setting individualized goals (Damgaard & Nielsen, 2018).

Digital advances in the learning space have changed the contours of student engagement as well as how it is measured. LMSs – now nearly ubiquitous in higher education -- have presented new opportunities for students to interact with course content (Vogt, 2016). The rapid deployment of digital technology in the COVID-19 pandemic surfaced and enabled the examination of how students engage with learning in digital spaces (Nkomo et al., 2021); as students returned to live instruction, many are in blended learning environments, with digital course content including videos, interactive modules, and readings hosted on an LMS. Traditionally, student engagement has been assessed using self-report via surveys, with some students over-reporting their engagement (Henrie et al., 2015). LMSs represent an opportunity to overcome the drawbacks of self-reports by data mining to assess student engagement objectively (Nkomo & Nat, 2021) using learning analytics. Learning analytics has been defined as “the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimising [sic] learning and the environments in which it occurs” (Long & Siemens, 2011, p. 33). Research on learning analytics produced in an LMS examines faculty and student behaviors through digital interactions such as clicks, views, downloads, submissions and communications.

In the context of a large, integrated and interdisciplinary Core curriculum course in a graduate school of public health, we undertook a pilot randomized controlled trial testing the effect of providing a “behavioral nudge” in the form of digital images containing specific information about past student behaviors and included in weekly to-do lists modules on the online course management system. This nudge intervention is the latest initiative in an ongoing effort to implement active learning strategies in our interdisciplinary Core curriculum (Garbers et al., 2022) using design-based research criteria by (1) addressing the complex problem of designing for broad-scale integration of an educational innovation; (2) integrating user-centered design principles; (3) using an iterative design process that incorporates rigorous methods for usability testing; (4) moving to the implementation stage of a long-term project with refined design questions; (5) collaborating with interdisciplinary researchers and practitioners; and (6) exploring emerging design principles related to scalability (Reeves et al., 2005). Prior published work describes the rationale and development (Fried et al., 2014), short-term impact (Begg et al., 2015), implementation and long-term impacts of these efforts (Garbers et al., 2022). This pilot trial assesses the impact of using behavioral nudges that make explicit to students the interconnection between course materials and assessments on course engagement and subsequent learning outcomes.

2 Methods

2.1 Study design

This was a prospective, two-arm interventional randomized controlled trial carried out within a public health graduate school Core curriculum course to test the impact of nudges on student engagement with course content. Participants were randomized to receive nudges (or not) based on the section they were enrolled in. The course had four separate sections: two sections received the nudge intervention and two sections did not receive the nudges (control condition). Given the small number of sections, sections were assigned sequentially: control, intervention, control, intervention.

2.2 Setting

All candidates in the Masters of Public Health (MPH) program spend their first semester in an interdisciplinary Core curriculum designed to provide an interlocking foundation of public health knowledge. Public Health Interventions is one course in this Core curriculum; the course includes three units (referred to as “concentrations”) with different faculty and specific learning objectives for each concentration. The course is taught in hybrid format, with some content delivered through asynchronous lecture videos and interactive modules, and some live lectures and hands-on lab sessions. The faculty across all concentrations within the course meet regularly to integrate content. Grading across the three concentrations was conducted by a single team of 14 graduate teaching assistants who were blind to the project status.

2.3 Subjects

There was no direct recruitment of participants; this study included all 563 students from the school’s six departments who were enrolled in the Public Health Interventions in Fall 2021. Three students were excluded from analyses because they withdrew from the program during the Fall semester, leaving a final sample size of 560 (Fig. 1). This research was approved by the Institutional Review Board of the authors’ institution following expedited review.

2.4 Measures

The primary hypothesis was that, for each nudge, exposure to the nudge intervention would be associated with increased engagement with related course content. Secondarily, we hypothesized that increased engagement would in turn improve student learning outcomes for the assessment related to the nudge and improve course-wide outcomes. The primary outcome, engagement with course content, was collected via the learning management system data. Engagement was operationalized as a dichotomous (yes/no) variable, with any student who engaged with all of the course content mapped to that week’s nudge was classified as engaging. For each element within a week, students who accessed a mapped reading (by clicking on it) and/or who watched at least 97% of the total minutes of that week’s asynchronous video(s) and/or 100% of that week’s asynchronous module were labeled as engaging with that specific course content. Learning outcomes for each nudge were operationalized as the numerical grade (on a scale of 0-100) earned on the assessment mapped to the nudge. Course-wide learning outcomes were operationalized as agreeing (strongly or somewhat) with the following statements: I feel prepared to apply the course content to public health problems; I feel prepared to demonstrate the [course] competencies; and I am able to integrate concepts across the Core with ease (vs. with difficulty, or not able). Course-wide student experience outcomes included course rating for each concentration (excellent vs. good or very good vs. poor or very poor); and satisfaction with the Public Health Interventions course as a whole (very and somewhat satisfied vs. somewhat or very dissatisfied).

These outcomes were derived from three data sources. Data on engagement (and data to develop the nudge wording) used learning analytics associated with academic engagement from two LMS tools, Canvas and Panopto, as evidence of past student behaviors. Student engagement data and assessment data was exported from the LMS, cleaned and merged. The other data source were two course evaluations implemented by the Office of Educational Initiatives (OEI): a course evaluation that asked about each concentration, implemented in November 2021, and a Core Experience Survey (implemented in January 2022), which asks about students’ experience overall in the Core. The LMS data were transmitted to the OEI to merge with course evaluation data, with all identifiers removed before transmission to the study team for analyses. Estimated time to complete the work in each week (summarized in Table 2) was provided by the school’s instructional design team which completed the design analysis and mapping (Russell et al., 2022).

2.5 Content and implementation of the intervention

Four nudges were implemented in the nudge intervention group; the control group did not receive any nudges (their course messaging was delivered as usual). Each nudge was associated with a course assessment (assignment), implemented 3–5 days before the assessment due date. The nudge messages were digital images containing specific information about past student behaviors and placed in the to-do lists in the week’s module on the LMS, and in a to-do list transmitted as an LMS announcement. These to-do lists became available weekly to prepare students for the following week.

The content of the nudges was informed by two preparatory tasks as part of a systematic review of course content including an instructional design mapping (Russell et al., 2022). First, for all courses in the Core, each week’s course materials (readings, asynchronous videos, or asynchronous interactive modules using Rise) were mapped to a formative or summative assessment, and the estimated courseload (in hours) was calculated. Then, within the course, bivariate analyses compared the grades on the course’s four primary assessments between those who did and did not engage with all of the course content (as described above) that was mapped to that assignment. The following nudges were implemented: 1) Applying Theory to Interventions (Week 2: “We checked the data….Students who read the key readings for Weeks 1 and 2 scored 3 points higher on this week’s quiz compared to students who didn’t.” 2) Program Planning & Evaluation − 1 (Week 3): “What needs to get done this week? Students who completed the readings and videos on this to-do list scored 4.26 points higher than students who did not.” 3) Program Planning & Evaluation − 2 (Week 5): “Do I need to watch this? Students who watched this week’s lecture videos scored 7 points higher on this assignment than students who didn’t watch the videos. Yes, you do.” 4) Systems Thinking (Week 9/10): “Triaging your to-do’s? Students who reviewed the Rise and watched all the videos for this week scored 5 points higher on their final assignment compared to students who didn’t.”

2.6 Data analyses

Exposure to the intervention was conceptualized in two ways: (1) using an intent-to-treat design, in which exposure to the nudges was classified as allocated to the intervention vs. control sections, independent of actual exposure to the deployed nudges; (2) using an as-treated design, classifying students on their actual exposure to each nudge, based on whether the student had or had not accessed the weekly to-do list (the to-do list included the nudge for those in the nudge sections) through the LMS (via module and/or announcement).

Bivariate analyses (Chi-square, Fisher’s exact, and independent t tests) compared the sociodemographic (age; sex; and academic program) characteristics of those allocated to the nudge intervention sections versus those in control sections. The effect of the nudges was assumed to be non-cumulative, meaning each exposure to a week’s nudge was independent. Bivariate analyses compared engagement and assessment grades for each of the 4 nudges across those exposed vs. not exposed to the nudge intervention, for the intent-to-treat groups and the as-treated groups. Course-wide learning outcomes, student experience and metacognition, as assessed in course evaluations, were compared between those allocated and not-allocated to the nudge sections (intent-to-treat). Finally, logistic regression models compared the odds of completing all of the assigned work for each nudge, among intent-to-treat sample, adjusting for age and program type (characteristics that differed significantly between those in the nudge vs. no nudge sections).

3 Results

The sample (N = 560) of graduate MPH students in this study ranged in age from 18 to 51 years (mean = 24), was racially and ethnically diverse, and predominantly female (82.6%) and enrolled in a full-time program (87%) (Table 1). Students in the sections randomized to receive the nudge interventions were disproportionately in the full-time program, and were younger, reflecting the structure of the course to put students in an accelerated program for students more advanced in their careers in one section (a section randomly allocated to not receive the nudge).

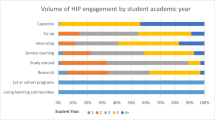

Engagement with course content (Table 2) varied substantially by week and was generally low: the overall proportion of students engaging with all of the content for each of the nudges was 49.3% in Week 2, 29.5% in Week 3, 44.3% in Week 5, and 24.1% in Week 9/10. In the as-treated analyses, engagement differed significantly (Chi square p = 0.035) by nudge exposure status only for the first Program Planning & Evaluation nudge in Week 3, with 48% of those in the nudge sections and 40.6% in the no-nudge sections completing all of the work (watching three asynchronous videos and reading one article).

Recognizing that the sections allocated to receive the nudge intervention differed significantly from those not allocated with regard to age and program type, we used logistic regression models adjusting for these factors to assess the odds of completing engagement for each week. After adjusting for these confounders (Table 3), no significant relationship remained between intervention status and engagement.

We also repeated the unadjusted comparisons of engagement in as-treated analyses, restricting the sample to students who clicked on the to-do list in each week. In the as-treated nudge groups, exposure to the deployed nudges by viewing the to-do list was initially high but declined steadily as the semester progressed, from 96% (Week 2) to 72% (Weeks 9–10). Within the as-treated analyses (Table 2), there were no significant differences in engagement between those who viewed the nudge and those who did not.

Given the high variation in engagement in each week (from 24 to 49% overall), we explored the extent to which contextual factors in the course might explain the variation (Table 2). While we did not observe a relationship between engagement and the amount of time required to complete the work mapped to the nudge, or the type of work, engagement was substantially higher in the weeks in which the students had a live session with their faculty member and classmates in the week in which the nudge was deployed (49.3% and 44.3% overall) compared to the weeks in which they did not (29.5% and 24.1%).

The assignments mapped to each nudge varied in type, and grades were generally high, with mean grades for all students ranging from 93 to 97 for the four major assessments (Table 4). We found no significant differences in grades between those who did and didn’t receive a nudge in the intent-to-treat or the as-treated analyses.

In intent-to-treat analyses of course-wide outcomes (Table 5, above), including learning outcomes, student experience, and meta-cognition, we found only one significant difference between those allocated to receive or not receive nudges: students in the nudge sections rated the Systems Thinking concentration significantly lower, including a lower proportion rating the concentration as excellent (27.1% vs. 38.6%, Chi square p = 0.018).

4 Discussion

In this evaluation of behavioral expectancy nudge intervention within a graduate-level interdisciplinary, integrated public health Core curriculum, we found that student engagement varied significantly from week-to-week, but our overall hypothesis was not upheld. Nudges linking coursework completion to assessment grade performance did not significantly change student engagement. Students in this Core curriculum undertake a rigorous course of study, with an estimated 40 or more hours of coursework per week. As a result, engagement in some weeks was quite low, with only a quarter of students completing all the out-of-class work in some weeks. This rate of engagement, while low, is similar to those observed in other graduate courses, in which students did about half of the assigned reading (McMinn et al., 2009), though directly comparable data on student engagement rates for graduate schools of public health are not reported in the literature. Still, the 44.3% of students watching all of the lecture videos in Week 5 is substantially higher engagement than reported for online video viewing in other higher education courses using asynchronous videos (Ozan & Ozarslan, 2016; Radunovich, 2018).

This inquiry has some methodological limitations. LMS data have a number of constraints, including the lack of a “shared understanding of what constitutes student engagement” with this learning technology (Nkomo et al., 2021, p. 21). Numerous factors in students’ lives affect behavioral engagement, including access to technology and students’ lived experience; data assessed via LMS is best understood in the context of these factors (Olson, 2021). Measurement of student engagement is complex – here, we examined only behavioral engagement, using a crude proxy for engagement with material. The absolute levels of engagement with readings in this analysis may lead to an over-estimate of engagement for reading-based content, as LMS data can only assess whether the student accessed the reading, not whether they read the entire document. Recent research suggests that the shift to LMS-hosted reading – on one hand, a boon to students, as it reduces the cost burden of purchasing textbooks -- has led to changes in how students read the material (online, rather than in hard copy) and the types of readings faculty assign (shorter readings), resulting in a decline in longform reading (Baron & Mangen, 2021). While our measurement of engagement with online videos is more precise, we were unable to measure the extent to which students were actively engaged in the videos while they were playing using existing technologies such as eye tracking or screen capture.

Our a priori hypotheses were that nudges may increase student engagement, in turn improving assignment-specific and course-wide learning outcomes, learning experience, and meta-cognition. Our overall null findings are consistent with a review of nudges by Daamgard & Nielsen (2018) which found that nudges may not have positive effects for all users exposed to them. Nudges may work best when they consider the underlying behavioral mechanisms. This study did yield information that can guide the deployment of future nudge interventions to increase student engagement, which has been shown in observational and interventional settings to be associated with better learning outcomes (Baker et al., 2019; Fincham et al., 2019). Nudges may be more effective if they relate to behavioral expectancies that drive student engagement, rely on self-regulatory tools (such as reminders and deadlines), and are deployed with sufficient time to allow students to change their behavior (Daamgard & Neilsen, 2018). The substantially higher engagement in weeks in which the students and faculty met in person suggest that what drives graduate student engagement may be social presence or social identity rather than grades on assessments. Doo and Bonk (2020) found that social presence is an important factor to enhance student engagement in large university classes using a flipped learning model. Future work should include a robust assessment of the behavioral mechanisms and motivations that underlie student behaviors, testing of nudges that tap into these motivations, and a richer examination of student learning behaviors over time using stochastic analyses of data from the learning management system (Jovanovic et al., 2017; Kelly et al., 2017).

Data availability

The datasets generated and analyzed during the current study are not publicly available as educational records are covered under the Family Educational Rights and Privacy Act. De-identified data may be available upon request from the authors, with permission from the Institutional Review Board.

References

Axelson, R. D., & Flick, A. (2010). Defining student engagement. Change: The Magazine of Higher Learning, 43(1), 38–43.

Baker, R., Evans, B., Li, Q., & Cung, B. (2019). Does inducing students to schedule lecture watching in online classes improve their academic performance? An experimental analysis of a time management intervention. Research in Higher Education, 60(4), 521–552.

Baron, N. S., & Mangen, A. (2021). Doing the reading: The decline of long long-form reading in higher education. Poetics Today, 42(2), 253–279.

Begg, M. D., Fried, L. P., Glover, J. W., Delva, M., Wiggin, M., Hooper, L., et al. (2015). Columbia public health core curriculum: Short-term impact. American Journal of Public Health, 105(12), e7–e13.

Damgaard, M. T., & Nielsen, H. S. (2018). Nudging in education. Economics of Education Review, 64, 313–342.

Doo, M. Y., & Bonk, C. (2020). The effects of self-efficacy, self-regulation and social presence on learning engagement in a large university class using flipped learning. Journal of Computer Assisted Learning, 36(6), 997–1010.

Fincham, E., Whitelock-Wainwright, A., Kovanović, V., Joksimović, S., van Staalduinen, J. P., & Gašević, D. (2019). Counting clicks is not enough: Validating a theorized model of engagement in learning analytics. In Proceedings of the 9th international conference on learning analytics & knowledge (pp. 501–510). LAK19. ACM.

Fried, L. P., Begg, M. D., Bayer, R., & Galea, S. (2014). MPH education for the 21st century: Motivation, rationale, and key principles for the new Columbia public health curriculum. American Journal of Public Health, 104(1), 23–30.

Garbers, S., March, D., Kornfeld, J., Baumgartner, S. R., Wiggin, M., Westley, L. A., Ballasteros-Gonzalez, D., Delva, M., Fried, L. P. (2022). Columbia University master of public health core curriculum: implementation, student experience, and learning outcomes, 2013–2018. Public Health Reports, 137(1), 168–178.

Henrie, C. R., Halverson, L. R., & Graham, C. R. (2015). Measuring student engagement in technology-mediated learning: A review. Computers & Education, 90, 36–53.

Jovanović, J., Gašević, D., Dawson, S., Pardo, A., & Mirriahi, N. (2017). Learning analytics to unveil learning strategies in a flipped classroom. The Internet and Higher Education, 33(4), 74–85.

Kelly, N., Montenegro, M., Gonzalez, C., Clasing, P., Sandoval, A., Jara, M., Saurina, E., & Alarcón, R. (2017). Combining event- and variable-centred approaches to institution-facing learning analytics at the unit of study level. International Journal of Information and Learning Technology, 34(1), 63–78. https://doi.org/10.1108/IJILT-07-2016-0022

Kahu, E. R., & Nelson, K. (2018). Student engagement in the educational interface: Understanding the mechanisms of student success. Higher Education Research & Development, 37(1), 58–71.

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in learning and education. Educause Review, 46(5), 30–40.

McMinn, M. R., Tabor, A., Trihub, B. L., Taylor, L., & Dominguez, A. W. (2009). Reading in graduate school: A survey of doctoral students in clinical psychology. Training and Education in Professional Psychology, 3(4), 233.

Nkomo, L. M., Daniel, B. K., & Butson, R. J. (2021). Synthesis of student engagement with digital technologies: A systematic review of the literature. International Journal of Educational Technology in Higher Education, 18(1), 1–26.

Nkomo, L. M., & Nat, M. (2021). Student Engagement patterns in a blended learning environment: An Educational Data Mining Approach. TechTrends, 65(5), 808–817.

Olson, L. (2021). How can learning management systems be used effectively to improve student engagement?. Center on Reinventing Public Education.

Ozan, O., & Ozarslan, Y. (2016). Video lecture watching behaviors of learners in online courses. Educational Media International, 53(1), 27–41.

Prochaska, J. O., et al. (2015). Chapter 7: The transtheoretical model and stages of change. In K. Glanz (Ed.), Health Behavior: Theory, Research, and practice (5th ed., pp. 125–143). Jossey Bass.

Radunovich, H. L., & Acharya, S. (2018). If you flip it, will they watch? Case evaluation of a hybrid course. NACTA Journal, 62(1), 84–88.

Rajabalee, Y. B., & Santally, M. I. (2021). Learner satisfaction, engagement and performances in an online module: Implications for institutional e-learning policy. Education and Information Technologies, 26(3), 2623–2656.

Reeve, J., Cheon, S. H., & Jang, H. (2020). How and why students make academic progress: Reconceptualizing the student engagement construct to increase its explanatory power. Contemporary Educational Psychology, 62, 101899.

Reeves, T. C., Herrington, J., & Oliver, R. (2005). Design research: A socially responsible approach to instructional technology research in higher education. Journal of Computing in Higher Education, 16, 96–115.

Russell, L. R., Kane Sample, L., Bhaskar, S., & Lewis, P. (2022, April 21). A systematic approach to quality online course design and development. Educause Review.

Saunders, R. P., Evans, M. H., & Joshi, P. (2005). Developing a process-evaluation plan for assessing health promotion program implementation: A how-to guide. Health Promotion Practice, 6(2), 134–147.

Skinner, C. S., et al. (2015). Chapter 5: The health belief model. In K. Glanz (Ed.), Health behavior: theory, research, and practice (5th ed., pp. 75–94). Jossey Bass.

Szaszi, B., Palinkas, A., Palfi, B., Szollosi, A., & Aczel, B. (2018). A systematic scoping review of the choice architecture movement: Toward understanding when and why nudges work. Journal of Behavioral Decision Making, 31(3), 355–366.

Thaler, R. H. (2018). From cashews to nudges: The evolution of behavioral economics. American Economic Review, 108(6), 1265–1287.

Trowler, V. (2010). Student engagement literature review. The Higher Education Academy, 11(1), 1–15.

Vogt, K. L. (2016). Measuring student engagement using learning management systems (Doctoral dissertation, University of Toronto (Canada)).

Weijers, R., de Koning, B., & Paas, F. (2020). Nudging in education: From theory towards guidelines for successful implementation. European Journal of Psychology of Education, 36. https://doi.org/10.1007/s10212-020-00495-0

Acknowledgements

The authors thank members of the Office of Educational Initiatives who contributed to data management, Lauren Westley and Maggie Wiggin; and Lauren Kane-Sample of the Digital Learning Studio.

Funding

This research was supported by an internal grant from the Science of Learning Research (SOLER) Initiative at Columbia University. The funding agency contributed to formulation of the study hypotheses and design but was not involved in data collection or analysis.

Author information

Authors and Affiliations

Contributions

All authors made substantial contributions to the manuscript, in accordance with the National Academy of Sciences guidelines. Specifically, the authors had the following roles:

SG – Study design and conception, human subjects protection protocol, data analysis supervision, interpretation and writing.

ADC – Data management and analysis, data interpretation, writing.

ASB – Study design and conception, substantive revision.

RR – Study design and conception, interpretation, writing & substantive revision.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Garbers, S., Crinklaw, A.D., Brown, A.S. et al. Increasing student engagement with course content in graduate public health education: A pilot randomized trial of behavioral nudges. Educ Inf Technol 28, 13405–13421 (2023). https://doi.org/10.1007/s10639-023-11709-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10639-023-11709-5