Abstract

This paper proposes a framework to investigate the influence of physical interactions on sensory information during robotic palpation. We embed a capacitive tactile sensor on a robotic arm to probe a soft phantom and to detect and classify hard inclusions within it. A combination of PCA and K-Means clustering is used to: first, reduce the dimensionality of the spatiotemporal data obtained through the probing of each area in the phantom; second, categorize the re-encoded data into a given number of categories. Results show that appropriate probing interactions can be useful in compensating for the quality of the data, or lack thereof. Finally, we test the proposed framework on a palpation scenario where a Support Vector Machine classifier is trained to discriminate amongst different types of hard inclusions. We show the proposed framework is capable of predicting the best-performing motion strategy, as well as the relative classification performance of the SVM classifier, solely based on unsupervised cluster analysis methods.

1 Introduction

In the last decades, substantial efforts have been made in enhancing the sensing capabilities of robots by providing them with a sense of touch (Dahiya et al. 2010; Drimus et al. 2014). Haptic sensing differs from other modalities, such as vision, in virtue of its tight coupling with, and need of, physical interactions. Haptic sensing requires direct physical contacts with sensing targets, inducing spatio-temporal force patterns on the contact surface, which may or may not be the consequence of motor behaviors of the robots. Furthermore, force patterns are significantly related to the shape and mechanical properties of sensing surfaces (e.g. stiffness) and the target objects (Scimeca et al. 2018; Iida and Nurzaman 2016).

In medical palpation diagnosis, for example, given the nature of soft tissues in the human body, haptic perception plays a fundamental role (Puangmali et al. 2008). Here, practitioners need to use different palpation strategies according to the task, whether this is examining an organ, finding cancerous inclusions or investigating their characteristics. In this context, contacts and physical interactions are the basis of rich sensory stimuli, with which practitioners can judge the conditions of target areas (Bendtsen et al. 1995; Eberman and Finn 2010; Wang et al. 2010). Indeed, previous research has focused on the use of haptics for RMIS and medical training (McLaughlin et al. 2002). These systems, currently based on vision, can be augmented with tactile information, improving the ability of surgeons to detect the mechanical properties of touched organs, and helping in the localization of tumors and lumps (Konstantinova et al. 2014a).

In the past, many robotic palpation systems have been developed for the detection of hard inclusions in soft tissue. In the early 1980s, the development of the WAPRO-4 showed how a robotic palpation system could be capable of performing simple breast palpation to identify relatively large inclusions (Kato et al. 1988). This has been followed in more recent years by increasingly anthropomorphic palpation systems (Dario and Bergamasco 1988) and abdominal palpation systems (Davaria et al. 2014). Over a decade prior to this work, Trejos et al. investigated the ability to use TSI to augment the abilities of surgeons to perform internal organ palpation under minimally invasive surgery (Trejos et al. 2009). In Hui et al. (2016) and Hui and Kuchenbecker (2014), Hui et al. investigated the use of the SynTouch BioTac tactile sensor, coupled with a Gaussian inference model or Support Vector Machine classifiers, for the detection of soft plastic inclusions within a simulated silicone tissue sample. In Gwilliam et al. (2010), Gwilliam et al. compared the ability of an artificial tactile sensor to that of a human finger, showing key differences between the two, and the ability of the former to outperform the latter in specific scenarios. In Li et al. (2017), Li et al. proposed the use of a compliant capacitive tactile sensor array, between the tissue and a probing apparatus, to capture tissue properties during palpation. They show the technology is capable of imaging lumps, if somewhat dependent on their depth within the tissue.

In all previous research, there has been little focus on the physical palpation techniques employed by the robot during the palpation examination. The robotic palpation techniques employed, in fact, were mainly simple vertical displacement, mostly with the tactile sensor constantly normal to the surface of the tissue under palpation. However, it is generally possible to positively influence sensory response through appropriate physical interaction, as advocated by the sensory–motor coordination framework. In the context of palpation, the efficacy of diagnosis could be significantly improved by influencing the sensory response through appropriate palpation techniques (Lungarella et al. 2003; Scimeca et al. 2018).

The strong dependence between the somatosensory system and motor actions in human palpation has been investigated in relation to the development of robotic palpation systems for detection of hard inclusions (Konstantinova et al. 2014b; Sornkarn and Nanayakkara 2016; Yen 2003; Konstantinova et al. 2017; Herzig et al. 2018). In the context of hard inclusion detection, the structure of the sensory stimuli generated by physical palpation helps to understand similarities or differences amongst the palpated objects. Through pertinent physical interactions, sensory stimuli of similar objects will maintain strong invariant similarities in the sensing space, whilst increasing their difference with dissimilar objects. In this context, the invariances allow for the dissociation of stimuli originated from different objects and the association, instead, of stimuli derived from similar objects. This fundamental process, corresponding to the separation and association of sensor stimuli into groups, will be referred to as categorization.

The importance of categorization has previously been emphasized in Hoffmann and Pfeifer (2012), and the use of active interactions to solve the categorization problem has been explored in previous research (Pfeifer and Scheier 1997; Nolfi and Marocco 2002; Tuci et al. 2009). Considering the problem of categorization in the scenario of robotic palpation systems, much is still unclear. This paper addresses two related problems. First, we wish to investigate how motor actions can aid in the separation and categorization of tactile sensor information. Research has previously shown that motor actions can introduce structure in sensory information (Lungarella et al. 2005; Sporns and Lungarella 2006; Pfeifer et al. 2007), but it is yet to be understood which principles guide the emergence of such structure. Second, as later shown in this paper, knowing the task to solve may not be enough to understand which physical interaction strategy is appropriate to use, or to predict its effects on the tactile information. Here, instead, it is first necessary to understand the properties of the objects in interaction with the agent and the level of abstraction intended for the categorization.

In order to address the above problems this paper investigates the processing of sensor signals based on dimensionality reduction and clustering. We propose a framework to explore the way active physical interactions with a soft body affect the structure of haptic spatio-temporal information. Through the proposed framework it is thus possible to choose in which way it is most appropriate to interact with objects, to improve their categorization and thus solve a classification task. The task, in this scenario, is the detection and classification of hard inclusions within a robotic palpation system.

The paper is organized as follows: In Sect. 2 we describe the methods used, starting from the experimental set-up in Sect. 2.1, to the acquisition of tactile data through various probing strategies in Sects. 2.2 and 2.3. In Sect. 3 we describe the proposed framework. In Sect. 4 we report the results of the experiments followed by a case study in Sect. 5 and the conclusion in Sect. 6.

2 Methods

We arrange an experimental scenario where a robotic arm, equipped with an end-effector and a tactile sensor, probes the soft tissue of a soft phantom organ to detect hard inclusions within it. The properties of the phantom organ are designed to test the ability of the robotic agent to detect hard inclusions by their depth and size, as shown to be important in previous systems (Herzig et al. 2018).

2.1 Soft phantom and robot set-up

We built two \(160\times 160\times 40\) mm soft phantom organs using Ecoflex 00-10 from Smooth-on. The phantom organs are divided into 16 locations arranged in a coarse-grained grid, as shown in Fig. 1e. Each location in the phantoms may or may not contain a hard inclusion. An inclusion consists of a 3D-printed hard, spherical bead, embedded in the phantoms at a depth of either 5 mm or 15 mm, and having a diameter of 7 mm or 20 mm (Fig. 1d). Hereafter we refer to a 7 mm inclusion placed at a depth of 5 mm as SS (Small-Shallow), a 20 mm inclusion placed at 5 mm as BS (Big-Shallow), a 7 mm inclusion placed at 15 mm as SD (Small-Deep), a 20 mm inclusion placed at 15 mm as BD (Big-Deep) and an area containing no hard inclusions as NA.

The experiments were performed on two phantoms: \(Ph\text {-}1\), containing \(12\times \)NA, \(1\times \)SD, \(1\times \)SS, \(1\times \)BS, \(1\times \)BD (Fig. 1b); and \(Ph\text {-}2\), containing \(4\times \)NA, \(3\times \)SD, \(3\times \)SS, \(3\times \)BS, \(3\times \)BD (Fig. 1c).

We 3D-printed a custom-made end-effector and integrated a capacitive tactile sensor onto its surface to retrieve \(tactile\ images\) during the probing experiments (Fig. 2b). The printed end-effector, coupled with the tactile sensor, was mounted onto an ST-Robotics R12/5 robotic arm (Fig. 1a).

2.2 Tactile sensor technology and data acquisition

High spatial resolution is a crucial component of the sensor technology necessary for the analysis in this paper. The tactile sensor used is described in Schmitz et al. (2011). The adopted sensing mode is based on the capacitive transduction principle. A capacitive transducer (i.e., a tactile element, or taxel) is organized in a layered structure: the lower layer consists of the positive electrode, which is mounted on a Flexible Printed Circuit Board (FPCB); a small air chamber acts as the dielectric; and the upper layer is a ground plane made with conductive lycra. The tactile sensor is made up of a number of taxels geometrically organized in triangular modules.

In the current prototype, each module hosts 7 taxels (Fig. 2b), as well as the Capacitance to Digital Converter (CDC) chip (namely, the AD7147 from Analog Devices) for converting capacitance values to digital. The CDC chip can measure variations in capacitance values with 16 bits of resolution. All the modules are interconnected and communicate through an SPI bus to a read-out board, which performs a preliminary processing of the tactile sensor data and sends them to the PC through a CAN bus (Fig. 2a) with a sensitivity of 0.32 fF.

In this context, the normal forces exerted on the sensor produce variations in capacitance values reflecting the varied pressure over the taxel positions. A sensor reading, or tactile image, from the tactile sensor described is produced at 20 Hz, and corresponds to a 7-dimensional array, where each element contains the capacitance variation value of the corresponding taxel.

2.3 Probing strategies

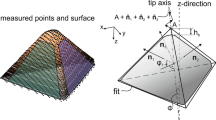

We control the R12/5 robotic arm open-loop in Cartesian coordinates. A teach-pendant was used to manually teach the robot the x-y location of the areas to probe. We use the stored end-effector positions in the subsequent control algorithm, where the robot automatically probes each location using the preferred probing strategy. We differentiate between two qualitatively different types of probing strategies, summarized in Fig. 3: vertical and rotatory.

First, the vertical probing strategy is performed with the probe aligned vertically and plunged directly down into the phantom at 0.5 mm increments. After each increment, the robot briefly pauses to allow a tactile image to be recorded before continuing with the next movement. This continues until the probe is at a depth d below the surface of the silicone, whereupon it stops recording and returns to a neutral position 10 mm above the surface in a single movement.

Second, the rotatory motion is performed with the robot d mm below the surface of the silicone, rotating about a nexus point r mm away in the vertical direction. To reach the initial position of this motion strategy, the robot moves vertically downward from its rest position until it reaches the position set by d. A nexus point at a distance r from the end effector is then assumed, and the robot rotates about it in the \(+\theta \) direction until it is at an angle of \(30^{\circ }\) from its initial, vertical, position. Here, the palpation action can begin. The probe rotates in the \(-\theta \) direction at \(1^{\circ }\) increments, recording a tactile image after each step. Once the probe has rotated by \(60^{\circ }\) it stops recording, and returns to its rest position 10 mm above the surface of the silicone.

In general, a probing strategy can be uniquely identified by a depth d and a radius r, thus:

\[\varTheta = \begin{Bmatrix} d \\ r \end{Bmatrix} \tag{1}\]

where if \(r=0\), the probing motion will be vertical, while if \(r>0\) the probing takes place via the rotatory strategy (Fig. 3).
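The parameterisation above can be sketched in a few lines of Python. This is only an illustration, not the controller used in the experiments: the names `Theta`, `vertical_depths`, `rotatory_angles` and `recording_poses` are hypothetical, and the sketch assumes that vertical recording starts at the phantom surface, so that images are taken at 0.5 mm, 1.0 mm, and so on down to d.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Theta:
    """Probing strategy: depth d (mm) and rotation radius r (mm)."""
    d: float
    r: float  # r == 0 selects the vertical strategy, r > 0 the rotatory one


def vertical_depths(theta, step_mm=0.5):
    """Depths (mm) at which a tactile image is recorded while plunging.

    Assumption: the first image is taken one increment below the surface.
    """
    n_steps = int(round(theta.d / step_mm))
    return [step_mm * k for k in range(1, n_steps + 1)]


def rotatory_angles(start_deg=30, sweep_deg=60, step_deg=1):
    """Angles (deg) at which an image is recorded while sweeping in -theta."""
    n_steps = sweep_deg // step_deg
    return [start_deg - step_deg * k for k in range(1, n_steps + 1)]


def recording_poses(theta):
    """List of (depth_mm, angle_deg) pairs at which images are recorded."""
    if theta.r == 0:
        return [(depth, 0.0) for depth in vertical_depths(theta)]
    return [(theta.d, float(angle)) for angle in rotatory_angles()]
```

Under these assumptions a vertical probe to 6.5 mm records 13 images, while any rotatory probe records 60 images regardless of d and r, which is why the length of a tactile image sequence depends on the strategy.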

3 Analytical framework

In this paper we consider the framework in Fig. 4. In the framework, an agent retrieves tactile sensor information while interacting with samples of objects, defined by a task. Here, the tactile information is directly influenced by the interactions with the samples. A categorization system allows for the information to be: first, re-encoded into a meaningful, lower-dimensional space (Cognitive Mapping); second, differentiated into useful categories (Category Formation). The abstraction level corresponds to the number of categories that should be observed in the sensor information and has a direct influence on the significance of the formed categories. At one extreme, 2 categories might be too coarse to be useful in capturing differences amongst different types of objects; at the other, a number of categories equal to the number of object samples cannot identify any similarities amongst the samples, and therefore amongst similar objects. The direct influence of the physical interactions on the tactile information, if substantial, should be observable in the category formation process.

3.1 Task and physical interactions

Within the considered framework, the agent is an embodied system equipped with a tactile sensor, and capable of performing probing actions. The interactions consist of physical probing, through different strategies, of target areas in a soft phantom, as was described in Sect. 2.3. As exemplified in Fig. 5, an experiment consists of an agent probing a preselected phantom with a chosen probing strategy. The agent iteratively selects a target area in the phantom to probe, and performs the chosen probing strategy for the experiment (described by \(\varTheta \)) while acquiring and storing tactile information. After probing all intended areas the stored sensor information can undergo categorization.

3.2 Categorization

3.2.1 Cognitive mapping

A process is needed to reduce the high dimensionality of the spatiotemporal data acquired through the tactile sensor while interacting with the environment. We define a tactile image sequence as a series of tactile sensor readings taken at set intervals, and concatenated into a single array. After acquiring tactile image sequences for each probed location, we use Principal Component Analysis (PCA) projection (Tipping and Bishop 1999) to reduce the dimensionality of the acquired data (Lloyd 1982).

For a set of N different locations in a phantom, let \(\mathbf {X}\) be a \((N\times D)\) matrix where each unique tactile image sequence for a probed location is a D-dimensional row (\(D\gg 2\)) in the matrix. The value of D, then, will be strictly dependent on the probing strategy and on the interval at which the agent captures each tactile image within the sequence.
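As an illustration of how such a matrix could be assembled, the sketch below concatenates the 7-taxel images recorded at each location into one row. The function names and the constant `TAXELS` are hypothetical, and the code only assumes what the text states: every location in an experiment is probed with the same strategy, so all rows share the same length D (the number of images in the sequence times 7).

```python
TAXELS = 7  # taxel values per tactile image (one triangular module)


def flatten_sequence(images):
    """Concatenate a list of 7-taxel tactile images into one flat row."""
    row = []
    for image in images:
        if len(image) != TAXELS:
            raise ValueError("each tactile image must hold 7 taxel values")
        row.extend(float(value) for value in image)
    return row


def build_sequence_matrix(sequences):
    """Stack one flattened sequence per probed location into an N x D list."""
    rows = [flatten_sequence(sequence) for sequence in sequences]
    if len({len(row) for row in rows}) > 1:
        raise ValueError("all locations must be probed with the same strategy")
    return rows
```

For example, 16 locations probed with a strategy that records 13 images each would give a matrix with N = 16 rows and D = 91 columns.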

After obtaining the tactile image sequences matrix \(\mathbf {X}\), we begin the process by finding the average tactile sequence \({\varvec{\mu }}\) as:

\[{\varvec{\mu }} = \frac{1}{N}\sum _{i=1}^{N} \mathbf {x}_i \tag{2}\]

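where \(\mathbf {x}_i\) is a column vector corresponding to the ith row in \(\mathbf {X}\). We compute a (\(D\times D\)) scatter matrix \(\mathbf {S}\) as:

\[\mathbf {S} = \sum _{i=1}^{N} (\mathbf {x}_i - {\varvec{\mu }})(\mathbf {x}_i - {\varvec{\mu }})^{T} \tag{3}\]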

and use Singular Value Decomposition to factorize \(\mathbf {S}\) into

\[\mathbf {S} = \mathbf {Q}\varLambda \mathbf {Q}^{-1} \tag{4}\]

where \(\mathbf {Q}\) is a matrix such that each column \(q_j\) corresponds to an eigenvector of \(\mathbf {S}\), and each element \(\lambda _{jj}\) in the diagonal matrix \(\varLambda \) is its corresponding eigenvalue. We list the eigenvectors in descending order of eigenvalue and select the first two in the list, i.e. the two directions of largest variance. Let \(\mathbf {p}_1\) and \(\mathbf {p}_2\) be the selected eigenvectors obtained from PCA.

We form a \((D\times 2)\) projection matrix \(\mathbf {P}\) as:

\[\mathbf {P} = \begin{bmatrix} \mathbf {p}_1^{T}&\mathbf {p}_2^{T} \end{bmatrix} \tag{5}\]

where \(\mathbf {p}_1^{T}\) and \(\mathbf {p}_2^{T}\) are column vectors in \(\mathbf {P}\).

Finally, we project the D-dimensional row vectors in \(\mathbf {X}\) onto a 2-dimensional subspace by:

\[\mathbf {W} = \mathbf {X}\mathbf {P} \tag{6}\]

where \(\mathbf {W}\) is a \((N\times 2)\) matrix. Each row in the matrix is a 2-dimensional encoding of a tactile image sequence for a probed location.
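The cognitive mapping steps can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `pca_project` is hypothetical, the scatter matrix is taken as the unnormalised sum of outer products of the centred rows, and `numpy.linalg.eigh` is used for the factorization because the scatter matrix is symmetric.

```python
import numpy as np


def pca_project(X, n_components=2):
    """Project the rows of X (N x D) onto the leading principal components.

    Returns the encoding W (N x n_components), the projection matrix P
    (D x n_components) and all eigenvalues sorted in descending order.
    """
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)                    # average tactile sequence
    centred = X - mu
    S = centred.T @ centred                # (D x D) scatter matrix
    eigvals, eigvecs = np.linalg.eigh(S)   # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]      # re-sort to descending
    P = eigvecs[:, order[:n_components]]   # leading eigenvectors as columns
    # The rows of X are projected as described in the text. Projecting the
    # centred rows instead would only translate W by a constant offset,
    # which leaves distances between encodings unchanged.
    W = X @ P
    return W, P, eigvals[order]
```

A call such as `W, P, lam = pca_project(X)` returns one 2-dimensional encoding per probed location in `W`, and the sorted eigenvalues in `lam` can be reused to assess how much variance the projection retains.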

3.2.2 Category formation and abstraction level

To observe the effects of the probing strategies on the tactile sensor information we need a process to categorize the re-encoded sensor information. We use K-Means Clustering (KMC) to find clusters in the data, where each found cluster will represent a potential category of inclusion types. The abstraction level is set by the number of clusters we wish to find in the data. We initialize the KMC algorithm with random centroids, and split the re-encoded sequences in \(\mathbf {W}\) into K clusters by:

\[\mathbf {v} = \mathrm {KMC}(\mathbf {W}, K) \tag{7}\]

The resulting \(\mathbf {v}\) is an N-dimensional array, where each element \(\mathbf {v}_i\in \{1, \ldots , K\}\), and \(\forall i\in \{1,\ \ldots \ , N\}\ \exists j\in \{1,\ \ldots \ , N\}:\ i\ne j\ \wedge \ v_i\ne v_j\) (Fig. 5); in other words, none of the resulting clusters can contain all the sample areas in the phantom.

In general \(\mathbf {v}_i=k\) only if the ith tactile image sequence belongs to cluster k, thus the \(\mathbf {v}\) vector contains the cluster membership of each probed location in the initial set.

Fig. 6 Raw spatiotemporal tactile image sequences, as captured when probing \(Ph\text {-}2\) vertically at varying depths, in an area containing no hard inclusion, and an area containing a 20 mm inclusion placed 15 mm deep. Panel a shows the spatial layout of the taxels in the Cyskin sensor, while each tactile image sequence in b and c corresponds to a re-shaped \(x_i\)

To avoid cluster anomalies due to the random centroid initializations we run the KMC algorithm three times and discard the clustering attempt if, after convergence, any of the three cluster guess vectors differs from any other. At the end of the clustering process a list of centroids C is obtained, uniquely dividing the space into K categories (5). In this context, the cluster assignment for each probed location is largely dependent on the probing strategy employed.
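The three-run consistency check can be sketched in plain Python as below. This is an illustrative sketch rather than the implementation used in the paper: the function names are hypothetical, the K-means loop is the standard Lloyd iteration, labels run from 0 to K-1 rather than 1 to K, and two runs are treated as equal when they induce the same partition, since the cluster indices themselves depend on the random initialization.

```python
import random


def sqdist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, rng, max_iter=100):
    """Plain Lloyd iteration with centroids initialised on random samples."""
    centroids = rng.sample(points, k)
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda c: sqdist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:      # assignments stable: converged
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:               # keep the old centroid if a cluster empties
                centroids[c] = tuple(sum(x) / len(members) for x in zip(*members))
    return labels


def canonical(labels):
    """Relabel clusters by order of first appearance, so partitions compare."""
    mapping = {}
    return [mapping.setdefault(l, len(mapping)) for l in labels]


def stable_kmeans(points, k, seed=0, runs=3):
    """Return the partition if all runs agree, otherwise None (discarded)."""
    rng = random.Random(seed)
    partitions = [canonical(kmeans(points, k, rng)) for _ in range(runs)]
    if any(p != partitions[0] for p in partitions[1:]):
        return None
    return partitions[0]
```

Here `points` would be the rows of the 2-dimensional encoding, passed as a list of tuples; a `None` result corresponds to a discarded clustering attempt.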

The category formation is an unsupervised analysis of the data, and it is thus useful if performed on all available data at the time of analysis.

3.3 Motion strategy scoring

At the end of the clustering process it is necessary to be able to assess the usefulness of the probing in generating meaningful data for classification. For the unsupervised clustering algorithm to be able to find meaningful clusters in the re-encoded tactile data, it is necessary that the data exhibits structure. Therefore we score the probing strategy that generated the data via a metric of structure tightly connected to the type of clustering utilized in this paper, i.e. the silhouette score (or coefficient) (Rousseeuw 1987).

The silhouette score s(i) for cluster i can be computed as:

\[s(i) = \frac{b(i) - a(i)}{\max \{a(i),\ b(i)\}} \tag{8}\]

where a(i) is the mean intra-cluster distance of cluster i, and b(i) is its mean nearest-cluster distance. We will refer to the silhouette score s as the average score for each cluster found by KMC, i.e.:

\[s = \frac{1}{K}\sum _{i=1}^{K} s(i) \tag{9}\]

The score will thus be a number \(s \in [-1,1]\), where data exhibiting more structure will score higher s values.
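A minimal sketch of this score in plain Python is given below. It is one possible reading of the definition, not the authors' code: the silhouette of Rousseeuw (1987) is computed per sample, averaged within each cluster, and then averaged across clusters. The function names are hypothetical, and samples in singleton clusters are given a score of 0, which is a common convention rather than something stated in the text.

```python
import math


def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


def silhouette_score(points, labels):
    """Average of the per-cluster mean silhouette values, in [-1, 1]."""
    clusters = sorted(set(labels))
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    by_cluster = {c: [p for p, l in zip(points, labels) if l == c]
                  for c in clusters}
    per_cluster = {c: [] for c in clusters}
    for p, own in zip(points, labels):
        n_others = len(by_cluster[own]) - 1
        if n_others == 0:
            per_cluster[own].append(0.0)   # singleton cluster convention
            continue
        # a: mean distance to the other members of the same cluster
        # (the distance of p to itself is 0 and does not affect the sum)
        a = sum(math.dist(p, q) for q in by_cluster[own]) / n_others
        # b: mean distance to the members of the nearest other cluster
        b = min(mean(math.dist(p, q) for q in by_cluster[c])
                for c in clusters if c != own)
        denom = max(a, b)
        per_cluster[own].append((b - a) / denom if denom > 0 else 0.0)
    return mean(mean(scores) for scores in per_cluster.values())
```

Tight, well-separated clusters give values close to 1, while assignments that mix distant samples in the same cluster score markedly lower.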

After probing the selected phantom through various probing strategies, the maximum observed silhouette score can identify which probing strategy is capable of generating structured data for hard inclusion detection and classification. The analysis as described thus far can be done without any prior labelling, and can thus be applied to all available data at the time of analysis. Afterwards, a supervised method can, for example, be used to perform the classification.

3.4 Experimental procedure

We execute 180 experiments, each of which sees the robot probing all 16 areas of \(Ph\text {-}1\) or \(Ph\text {-}2\) with the preferred \(\varTheta \) parameters. The experiments are carried out for all combinations of \(d\in [6.5\,\text {mm}, \ldots , 20.5\,\text {mm}]\) at 1 mm increments and \(r\in [0~\text {mm}, 10~\text {mm}, 12~\text {mm}, 14~\text {mm}, 16~\text {mm}]\). The bounds were chosen to reach the minimal/maximal experimentally feasible probing depth and rotation with the robotic arm, and the devised soft phantoms.

Following this procedure, two datasets are collected, each consisting of time-series tactile data from one of the two phantoms under examination (Ph-1 or Ph-2). Each dataset contains 90 experiments, each of which is composed of 16 data-points (one per probed area), so 1440 data-points are present in each dataset. Each data-point is a time series of tactile images with variable length (each tactile image is a 7-dimensional array). For the rotatory motion, each tactile image was taken at \(1^{\circ }\) increments, while for the vertical motion each image was taken at 0.5 mm increments.

For each of the experiments, after the probing has ended, the time-concatenated data is used to form the tactile image sequence matrix described (see Sect. 3.2.1). The matrix can then be used to re-encode the tactile sensor information for each probed location into a lower dimensional space (\(Cognitive\ Mapping\)). After clustering, each probed location will be differentiated into one of a predetermined number of categories (\(Category\ Formation\)).

4 Results

The following sections will progressively analyse the described framework, starting from the dimensionality reduction process (PCA), to the repercussions of physical interactions on categorization (KMC).

4.1 Sound dimensionality reduction

One of the principal components of the proposed framework is the reduction of the high dimensional spatiotemporal tactile information, into re-encoded lower dimensional data. An example of the acquired tactile information is shown in Fig. 6. Without knowing which category each tactile sequence vector \(\mathbf {x_i}\) belongs to, it is impossible to assess the quality of dimensionality reduction from \(\mathbf {X}\) to \(\mathbf {W}\). However, it is feasible to maximize the information retention in the original tactile sensor data.

Fig. 8 The change in explained variance by the 2D PCA subspace projection, when probing vertically (a) and through the rotatory motion (b), changing the number of samples used to find the principal components (N in \(\mathbf {X}\), see Sect. 3.2.1)

The explained variance can be thought of as a measure of the information captured by the PCA subspace after projection. As the eigenvalues in \(\varLambda \) (see Eq. 4) are proportional to the variance captured by the corresponding PCA principal components, we can compute the explained variance \(\tau _i\) for the principal component \(\mathbf {p_i}\) as:

\[\tau _i = \frac{\lambda _i}{\sum _{j=1}^{D} \lambda _j} \tag{10}\]

where \(\lambda _i\) is the eigenvalue corresponding to the ith principal component. Here, \(\tau _i\) is a measure of the proportion of variance in the data captured along the direction of the principal component \(\mathbf {p_i}\) in the original sensor space.
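The explained variance ratio is straightforward to compute once the eigenvalues are known. The sketch below is a hypothetical helper pair (`explained_variance` and `retained_information` are illustrative names); the second function sums the ratios of the leading components, which for a 2D projection gives the share of variance kept after dimensionality reduction.

```python
def explained_variance(eigenvalues):
    """Ratio of each eigenvalue to the sum of all eigenvalues."""
    total = sum(eigenvalues)
    if total <= 0:
        raise ValueError("the eigenvalues must have a positive sum")
    return [value / total for value in eigenvalues]


def retained_information(eigenvalues, n_components=2):
    """Share of variance kept by the n_components largest eigenvalues."""
    ratios = explained_variance(sorted(eigenvalues, reverse=True))
    return sum(ratios[:n_components])
```

For instance, eigenvalues of 6, 3 and 1 give ratios of 0.6, 0.3 and 0.1, so a projection onto the first two components would retain 90% of the variance.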

Figure 7 shows the explained variance of each \(\mathbf {p}_i\), after the robot probed \(Ph\text {-}2\) in two different experiments where both \(\varTheta \) and the number of probed areas used for the projection (N) were varied. As is clear from the figure, the number of probed areas and the choice of \(\varTheta \) significantly affect the distribution of the sensor data in its original D space. In one case, the sensor data is mainly spread along 7 axes (\(\mathbf {p}_1-\mathbf {p}_7\)) (Fig. 7b), making it unsuitable for dimensionality reduction. In the other, instead, \(\mathbf {p}_1\) captures the majority of the information in the data (Fig. 7a). The figure suggests that the suitability of the tactile information for such a drastic reduction in dimensionality depends both on the properties of the probed areas and on the probing strategy employed.

We further explore the way the probing strategy, and the properties of the probed areas in the phantom, affect the amount of information retained after dimensionality reduction. The explained variance achieved prior to categorization is \(I = \tau _1+\tau _2\). Fig. 8b shows the explained variance trends when the number of probed areas used for PCA projection varies. When the number of probed areas is maximal (16 areas, red plot in Fig. 8b), the influence of \(\varTheta \) is negligible. Conversely, with less data to base the PCA projection on (2 areas, blue plot in Fig. 8b), the choice of \(\varTheta \) can be the sole determinant to induce structure in the data. A second interesting phenomenon can be observed in Fig. 8a, when comparing the explained variance obtained after projecting \(\mathbf {X}\) based on 4 vs 8 probing areas in the phantom (yellow vs green plots). Here, the agent retains more information, even when basing the projection on less data, if the employed probing is vertical and at a depth of at least 17.5 mm. This result suggests that proper physical interaction can help information retention in the absence of enough data.

Finally, we observe the influence of the quality of the data samples on the information retention after PCA projection. Fig. 9a shows how, in the presence of very diverse inclusion types (left triangle plot), the effect of the vertical probing strategy \(\varTheta \) on I is negligible. The presence of very diverse data, in fact, is useful for PCA to find good projection axes. In the absence of good data, or with non-diverse inclusion types, appropriate interaction can instead minimize information loss (peaks in Fig. 9a, b). In the figures, it is possible to see how even the least diverse set of samples can induce the tactile information to retain most of the information when the phantom is appropriately probed (peak in triangle plot, Fig. 9a).

The explained variance analysis shown allows for a preliminary assessment of the robot palpation action employed. For any one palpation we show that good palpation actions correspond to information that can be safely projected onto a two dimensional space, preserving most of the information in but a few principal components.

4.2 Information structure and silhouette coefficient

Similarly to the previous sections we wish to observe the effects of changing the \(\varTheta \) parameters on the structure of the information after PCA projection. The silhouette coefficient, as explained in Sect. 3.3, depends on the mean intra-cluster distance and the mean nearest-cluster distance for each pair of clusters (Fig. 10).

Fig. 10 The change in position for the 2D PCA projected NA and BS samples when probing the phantom vertically at a depth of 6.5 mm and 19.5 mm. The yellow and blue lines show the two parameters on which the silhouette score is based, i.e. intra-cluster distance and nearest-cluster distance respectively (Color figure online)

Figures 11 and 12 both show how the change in \(\varTheta \) influences the silhouette score. This influence, however, is primarily dependent on N and the diversity of the inclusions probed, as suggested by the change in trends of the plots in each of the figures. Fig. 11a shows that little structure emerges when probing \(Ph\text {-}2\) vertically too superficially or too deeply. In both cases, in fact, the sensor response is uniformly too moderate or too steep to have any variation from an area of the phantom to another, thus inducing no variation in the information. Fig. 11b, instead, shows how, when in absence of enough data samples (2 areas, blue plot), a correct choice of \(\varTheta \) can be the sole determinant for good or bad structure in the information. In Fig. 12a and b, interestingly, it is shown how even without much diversity in the inclusion types, good structure can emerge when the phantom is probed appropriately (\(\varTheta = \small {\begin{Bmatrix}16.5\\ 0\end{Bmatrix}}\) or \(\varTheta = \small {\begin{Bmatrix}14.5\\ 16\end{Bmatrix}}\) ).

Fig. 11 The change in silhouette coefficient by the 2D PCA subspace projection, when probing vertically (a) and through the rotatory motion (b), changing the number of samples used to find the principal components (N in \(\mathbf {X}\), see Sect. 3.2.1)

Lastly, we investigate the influence of the number of clusters K on the structure of the information s. The number of clusters sets the level of abstraction that the robot may wish to have to make use of the tactile information, and directly affects the interpretation of the emerging clusters. We choose three different numbers of clusters: \(K=2\), presence vs. absence of a hard inclusion; \(K=3\), absence vs. small vs. large inclusion; \(K=5\), all inclusion types. Fig. 13 shows the trends when probing the soft phantom vertically at varying depths and changing K in the KMC algorithm. The emerging clusters present different structural properties. The different trends in the figure suggest that K directly affects the way the probing strategy influences the structure of the data. Interestingly, probing at a greater depth increasingly helps to sense inclusions, or detect their size. To dissociate between all different inclusion types, instead, an optimal probing depth is found for d = 14.5 mm, after which the increasingly high sensor response converges, and renders the clusters less separable, thus decreasing the values of s.

4.3 Motion influence on cognitive maps

Predicting the effects of \(\varTheta \) on the low-level encoding of the information in \(\mathbf {W}\) is a highly complex process. Understanding such effects, however, would allow an agent to appropriately choose a \(\varTheta \) when solving the probing task.

To understand this relationship we plot the cognitive maps for each set of motion parameters in \(\varTheta \) and observe how the encoding of each probed area changes according to the probing strategy used. Here, to have a better understanding of the motion effects, we perform the experiment on the least cluttered phantom, i.e. \(Ph\text {-}1\) (Fig. 1b), which would suffer less from disturbances due to the vicinity of adjacent inclusions. Figures 14a and b show the plots corresponding to probing the phantom vertically at the minimal and maximal experimental depth. By increasing the depth of probing, two very interesting effects take place: one, the nearest-cluster distance b(i) between almost all types of inclusions increases, allowing for better dissociation of diverse tactile information; two, the intra-cluster distance a(i) between any two probing areas with the same type of hard inclusion decreases, allowing for each possible phantom inclusion type to be better represented.

Extending the analysis to the rotational probing strategy, we can similarly observe the effects of changing the parameters in \(\varTheta \) from their minimal to their maximal experimental values. Interestingly, when employing the rotational strategy, the generated tactile information presents a structured layout, by which it is already possible to dissociate one stimulus type from another. In this scenario, then, the rotational parameter r appears to affect the structure of the data s only mildly, through the nearest-cluster distance (Fig. 15a, b). The effect of increasing d, instead, confirms the hypothesis that the probing depth acts upon the intra-cluster distance of each stimulus type.

The effect of the depth parameter can be attributed to the strength in response of the sensorised probe. The tactile sensor, in fact, detects pressure levels on its surface. When probing the phantom at the minimum depth, the pressure registered by the sensor is mostly due to the elastic response of the Ecoflex 00-10 soft phantom, almost independently from the presence or absence of inclusions in the probed area. As the depth increases, the elastic response is influenced by the non-elasticity of the hard inclusion, should there be one in the probed area. We hypothesize this influence can be captured by the sensor response in three ways: first, the response should be higher when inclusions are present in the probed area; second, the sensor’s increase in detected pressure should arise at slightly different sample intervals depending on where the inclusion is placed in the phantom (deep vs shallow inclusion); third, the area of the response should vary depending on the size and depth of the inclusion.

In this framework, an acceptable probing depth is one which neither saturates the sensor response in each area, nor fails to detect changes in pressure when the probed area contains a non-elastic inclusion. The task of dissociating amongst all different types of inclusions is optimized (i.e. maximal silhouette score) for \(\varTheta = \tiny {\begin{Bmatrix}12.5\\ 0\end{Bmatrix}}\) in \(Ph\text {-}1\) and \(\varTheta = \tiny {\begin{Bmatrix}14.5\\ 0\end{Bmatrix}}\) in \(Ph\text {-}2\). This analysis can be applied to any dataset to explore how the robot action has influenced the haptic data in terms of information structure. The action parameters generating the data with the maximal silhouette score can thus be used to perform palpation on the tissue under examination.
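The selection step described above amounts to an argmax over the tested motion parameters. A minimal sketch follows, in which the dictionary keys stand for (depth in mm, rotation) pairs. The score values are placeholders invented for illustration and are not results from the experiments.

```python
def best_motion_parameters(scores):
    """Return the (depth_mm, rotation) pair with the highest silhouette score."""
    return max(scores, key=scores.get)

# Placeholder silhouette scores per motion parameter set (NOT measured values).
scores = {
    (9.5, 0): 0.31,
    (12.5, 0): 0.58,
    (14.5, 0): 0.52,
    (15.5, 0): 0.40,
}
best = best_motion_parameters(scores)  # the parameters to reuse for palpation
```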

4.4 Categorization and similarity abstractions

In robotic palpation, proper physical interaction can help in the dissociation of tactile information, such that the emerging clusters are meaningful with respect to solving a task (e.g. finding hard inclusions in a soft phantom). Besides dissociating amongst different object types, however, another fundamental, yet usually neglected, piece of information is the similarity association between clusters. The distances between the clusters found in the 2D re-encoded tactile information subspace, in fact, allow the agent to associate types of objects, and to order or rank them based on such associations.

In the context of probing a soft phantom to find hard inclusions, for example, the agent might need to prioritize possible findings based on the depth of the inclusion, e.g. [NA, SD/BD, SS/BS]; we refer to this ordering as rank-1. In a different scenario where the size of the hard inclusion should take priority over its depth, the ranking might instead be [NA, SD/SS, BD/BS], or rank-2. In both scenarios, the physical interactions with the soft phantom may induce the agent to see some inclusion types as more similar to others, depending on which property is deemed more important.

To assess the performance of category formation in each experiment, we first need to match the clusters found by the KMC algorithm to any set of target classes for the phantom under analysis. We devise a cluster matching process based on maximal accuracy.

Given the previously computed guess vector \(\mathbf {v}\) and classes \(\mathbf {C}\), we first define a function \(\varGamma \) such that

\[ \varGamma (\mathbf {v}, \mathbf {C}) = [\mathbf {C}_{\mathbf {v}_1}, \mathbf {C}_{\mathbf {v}_2}, \ldots , \mathbf {C}_{\mathbf {v}_n}] \]

where \(\mathbf {v}_i\) is the ith element in \(\mathbf {v}\), n is the length of \(\mathbf {v}\), \(\mathbf {v}_i\in \mathbf {C}\), and \(\mathbf {C}_{\mathbf {v}_i}\) is the \(\mathbf {v}_i^{th}\) element in C. The function remaps the elements in \(\mathbf {v}\) based on \(\mathbf {C}\).

Given a target vector \(\mathbf {t}\) we define a function \(\varPsi \) to re-associate the classes in C such that the distance between the target and the guess vector is minimal, thus:

\[ \varPsi (\mathbf {v}, \mathbf {t}, \mathbf {C}) = \mathop {\mathrm {arg\,min}}\limits _{C^{\ '}\in S(\mathbf {C})} ||\mathbf {t} - \varGamma (\mathbf {v}, C^{\ '})|| \]

where \(C^{\ '}\in S(\mathbf {C})\), S(C) is the set of all permutations of C, and \(||\cdot ||\) is the Euclidean norm of a vector. Finally we define the cluster-matching as the composition of the two functions, and use it to re-associate the cluster memberships:

\[ \mathbf {v}\ ' = \varGamma (\mathbf {v}, \varPsi (\mathbf {v}, \mathbf {t}, \mathbf {C})) \]

Here \(\mathbf {v}\ '\) is a new vector maximizing accuracy for a given task (specified by the target vector \(\mathbf {t}\)). A vector \(\mathbf {v}=[2\ 2\ 1\ 0\ 0]\) for a task \(\mathbf {t}=[1\ 1\ 0\ 2\ 2]\), for example, would be re-associated as \(\mathbf {v}^{\ \prime }=[1\ 1\ 0\ 2\ 2]\). We utilize the cluster memberships in \(\mathbf {v}\ '\) to compute each cluster center and retrieve the mutual distances between clusters.
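The cluster-matching process can be sketched in a few lines. The version below is one possible reading of the description: the remapping function looks up each cluster index in a candidate class ordering, and the re-association searches all permutations of the classes for the one whose remapped guess is closest, in Euclidean norm, to the target. The function names `gamma`, `psi` and `cluster_match` are hypothetical.

```python
from itertools import permutations
import math

def gamma(v, classes):
    """Remap each cluster index v_i to the class stored at position v_i."""
    return [classes[v_i] for v_i in v]

def psi(v, t, classes):
    """Permutation of `classes` minimising the Euclidean distance to target t."""
    return min(permutations(classes), key=lambda c: math.dist(t, gamma(v, c)))

def cluster_match(v, t, classes):
    """Re-associated guess vector v' for the task specified by t."""
    return gamma(v, psi(v, t, classes))

# Example from the text: v = [2 2 1 0 0], t = [1 1 0 2 2].
v = [2, 2, 1, 0, 0]
t = [1, 1, 0, 2, 2]
v_prime = cluster_match(v, t, [0, 1, 2])
```

The exhaustive search over permutations grows factorially with the number of classes. This is unproblematic for the two to five classes considered here, but would need a different matching strategy for many more.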

In this analysis we consider two scenarios in which we may want to associate the clusters by depth or by size of inclusion. Using the NA type as the reference, we consider the distance between the cluster-matched NA inclusion type and each of the remaining types (Fig. 16). As is clear from Fig. 16, by appropriately interacting with the soft phantom, the distance between each cluster type and the NA cluster changes drastically. In this context, then, it is possible to induce a ranked understanding of the robot’s perceived similarities between different inclusion types by simply acting on the \(\varTheta \) parameters.
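The distance-from-NA analysis can be sketched as follows, assuming each probed location is a 2D point with a cluster-matched label. The helper name and the coordinates are invented for illustration; the points are arranged so that the resulting order happens to agree with rank-1.

```python
import math

def centroid_distances_from(reference, points, labels):
    """Distance of every cluster centre from the centre of `reference`."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    centers = {lab: tuple(sum(axis) / len(ps) for axis in zip(*ps))
               for lab, ps in groups.items()}
    ref = centers[reference]
    return {lab: math.dist(c, ref) for lab, c in centers.items() if lab != reference}

# Invented 2D descriptors, two locations per inclusion type (NOT measured data).
points = [(0, 0), (0, 2), (2, 1), (4, 1), (0, 4), (0, 6), (8, 1), (10, 1), (0, 11), (0, 13)]
labels = ["NA", "NA", "SD", "SD", "BD", "BD", "SS", "SS", "BS", "BS"]

dist = centroid_distances_from("NA", points, labels)
ranking = sorted(dist, key=dist.get)  # closest to NA first
```

Here the deep inclusions (SD, BD) end up closer to NA than the shallow ones (SS, BS), as in rank-1. A different set of motion parameters would move the points and could change this order.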

We demonstrate the ability to achieve similarity relationships of the kind previously described by finding the parameters for which the agent can rank the system according to rank-1 or rank-2. We perform the experiments in \(Ph\text {-}2\), and we use the experimental data gathered through the probing of the soft phantom to find the parameters by which we can solve the ranking. We find the robot capable of abstracting similarity relationships according to rank-1 for \(\varTheta = \tiny {\begin{Bmatrix}9.5\\ 0\end{Bmatrix}}\) (Fig. 17a), and according to rank-2 for \(\varTheta = \tiny {\begin{Bmatrix}15.5\\ 0\end{Bmatrix}}\) (Fig. 17b).

5 Palpation test case

We perform experiments to test the ability of the developed framework to assess and identify the motion control which best allows an agent to differentiate among different types of inclusions. For this purpose, the robot is set to perform palpation on a phantom containing \(4\times \)NA, \(3\times \)SD, \(3\times \)SS, \(3\times \)BS, \(3\times \)BD. The sensorized robotic arm is made to palpate the phantom vertically at each location, as described in Sect. 2.3. At this point, dimensionality reduction is used to pass from a high-dimensional sensor description of each palpated phantom location to a two-dimensional descriptor based on PCA (see Sect. 3.2.1).

After dimensionality reduction it is possible to utilize Equations (7) through (9) to assess the quality of each motion strategy with respect to the collected data. The motion strategy parameters generating the highest structure in the data can thus be saved.

Here we make use of a standard classification procedure to dissociate amongst the different types of inclusions, and we assess the ability of the framework described in this paper to assist in determining which motion would have generated the best data for palpation classification. We use an off-the-shelf multi-class Support Vector Machine (SVM) (Cortes and Vapnik 1995) classifier, as implemented in the scikit-learn Python library (Pedregosa et al. 2011).

The dataset utilized for this test scenario consists of 224 data-points, each consisting of a sequence of tactile images. The data corresponds to vertical palpations performed at 14 different depths spaced 0.5 mm apart; for each depth, 16 data samples are thus present. An SVM classifier is trained on a single sample for each type of inclusion (one-shot learning), at each different depth. We thus fit 14 different SVM classifiers, and we show how the unsupervised analysis run on the same data is capable of faithfully predicting the best-performing action parameter before any supervised learning is necessary.

Three different types of classification are executed, following the same qualitative analysis as in Sect. 4.2. First, a classification with two classes, where the SVM classifier is trained to discriminate between locations containing hard inclusions and locations with no inclusions. Second, three classes, where the classifier is trained to discriminate between large inclusions, small inclusions or no inclusions. Third, five classes, where all inclusion types are considered. For each of the three classification types, the classifier is trained on the minimal possible number of samples per class, i.e. 1 sample, and the data-set is split into training and test set accordingly. In the five-class case, for example, the 16 data-points at each probed depth are divided into 5 samples for training and 11 for testing. This is done at all 14 different depths. The split was purposefully chosen to observe the classifier performance when lacking large amounts of data.
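The three label groupings and the one-sample-per-class split can be sketched as below. The sketch assumes that the type codes read as S/B for small/big and D/S for deep/shallow, and it arbitrarily takes the first sample encountered of each class as the training sample. Both choices, along with the function names, are assumptions made for illustration.

```python
# Inclusion types per phantom location in the test case:
# 4x NA, 3x SD, 3x SS, 3x BS, 3x BD (16 locations in total).
TYPES = ["NA"] * 4 + ["SD"] * 3 + ["SS"] * 3 + ["BS"] * 3 + ["BD"] * 3

def relabel(inclusion_type, n_classes):
    """Map one of the five inclusion types onto a 2-, 3- or 5-class label."""
    if n_classes == 2:
        return "none" if inclusion_type == "NA" else "inclusion"
    if n_classes == 3:
        if inclusion_type == "NA":
            return "none"
        # Assumed reading of the codes: first letter S = small, B = big.
        return "small" if inclusion_type[0] == "S" else "large"
    return inclusion_type  # five classes: keep every type distinct

def one_shot_split(types, n_classes):
    """First sample seen of each class goes to training; the rest to testing."""
    train, test, seen = [], [], set()
    for idx, inclusion_type in enumerate(types):
        label = relabel(inclusion_type, n_classes)
        if label in seen:
            test.append((idx, label))
        else:
            seen.add(label)
            train.append((idx, label))
    return train, test

# Training and test set sizes for each of the three classification types.
split_sizes = {k: tuple(len(part) for part in one_shot_split(TYPES, k)) for k in (2, 3, 5)}
```

Under these assumptions the five-class split reproduces the 5 training and 11 test samples stated above, while the two- and three-class splits leave 14 and 13 test samples respectively.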

After training, the SVM classifier separates the two dimensional space according to the two, three or five classes, maximizing the distance to the nearest training data points of any class. Once the classifier has been fit to the training samples, we test the ability of the SVM to classify a new inclusion correctly by testing it on the unseen phantom test locations.

Figure 18 shows the resulting accuracy of the classifier at different probing depths and when classifying the inclusions following the three different sets of classes described. Given the difficulty of the classification task with the limited amount of data, the classifier can only achieve an average classification accuracy of 68.78% when detecting hard inclusions, 36.26% when detecting inclusions based on size and 47.40% when discriminating inclusions based on all their properties. Even in this scenario, the motion strategy detected by the proposed framework achieves accuracies of 78.57%, 69.23% and 63.63% respectively in the same tasks, improving on the average classification accuracy by roughly 10 to 33 percentage points, as shown by the black circles in Fig. 18. More significantly, when comparing Figs. 13 and 18, it becomes clear that the general performance of the classification can indeed be predicted by the proposed framework, relying solely on information structure. In fact, in addition to the best-performing motion strategy, both the motion parameters resulting in the least accurate classification and the general shape of the accuracy graph in Fig. 18 can be almost faithfully predicted based on the scores in Fig. 13.
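The reported improvements can be checked with a short calculation using only the accuracies quoted above. The second part of the sketch rests on an inference rather than a statement in the text: it assumes that one training sample per class leaves 16 − K test locations per depth, and checks which whole number of correct test samples each best accuracy would correspond to.

```python
average = {"2 classes": 68.78, "3 classes": 36.26, "5 classes": 47.40}
selected = {"2 classes": 78.57, "3 classes": 69.23, "5 classes": 63.63}

# Improvement of the selected motion strategy over the average, in percentage points.
gain = {task: round(selected[task] - average[task], 2) for task in average}

# Assumption: with one training sample per class, 16 - K locations remain for testing.
test_sizes = {"2 classes": 14, "3 classes": 13, "5 classes": 11}
correct = {task: round(selected[task] / 100 * test_sizes[task]) for task in selected}
```

The gains come out at about 9.79, 32.97 and 16.23 percentage points. Under the stated assumption, the best accuracies agree, to within rounding, with 11 of 14, 9 of 13 and 7 of 11 correctly classified test locations.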

Fig. 18 The classification test accuracy of a multi-class SVM trained on a single sample for each inclusion type, when performing a vertical probing action at different depths, and over varying numbers of clusters. The highlighted black circles correspond to the maximal silhouette score computed through the proposed framework (see Fig. 13)

6 Conclusion

In this paper we investigated the effects of various motion strategies on the response of a capacitive tactile sensor, for the task of detecting hard inclusions in a soft body. Actively choosing an interaction strategy to optimize sensory reception for a specific task has the potential to be a powerful tool. Such a tool could endow robots with the ability to dynamically filter properties of touched objects, actively helping in the completion of a task (Olsson et al. 2004; Bohg et al. 2017) even before the sensor information arrives at a central processing unit.

The experiments were performed by embedding a capacitive tactile sensor onto a 3D-printed end-effector, and probing two soft phantoms with various hard inclusions through different probing strategies. The sequential sensor data obtained through the probing of each area in the phantom was clustered, and the change in information due to each strategy was observed and analysed.

We found the amount of information retained after PCA projection to be highly dependent both on the probing strategy and on the properties of the sample areas in interaction. More interestingly, we found that appropriate probing strategies can help retain information even when the data is limited in quantity or quality. Using the explained variance as a measure of information is useful in ensuring that a large amount of heterogeneity is kept in the data, but it cannot ensure the quality of the information retained. In fact, the projection could make the information relative to highly distinct objects indistinguishable. However, under the assumption of no prior knowledge of target labels, keeping variance in the data is usually a sensible choice. The proposed analysis can therefore help choose those actions which allow sound dimensionality reduction, with minimum loss of information variance in a low-dimensional inference space.

Furthermore, we analysed the impact of motion on cognitive maps and extracted how the motion influenced the tactile information. This analysis is useful in understanding the effects of motion on the perception of the probed areas, and can be used to appropriately choose an interaction strategy that generates structure. To make full use of such effects, however, it would be ideal to predict such change before interaction takes place. Here, the change in position of each point within a cognitive map could be interpreted as a transformation in the same domain. The transformation function could be learned from initial interaction and used in future tasks to optimize the sensor response for a specific task. The transformation function, however, would depend not only on the motion parameters employed, but also on the properties of the sample objects in interaction, as demonstrated in the results.

It is also possible to take categorization one step further and abstract similarities between object types from cognitive maps. Here we have shown that the physical interaction can drive the similarity relationship between objects. In an unsupervised scenario, the abstractions can be highly informative and can, for example, be useful to fix an ordering, via mutual distances, on the sensed object types. The object ordering can be purposefully fixed to the agent’s advantage. In a real scenario a practitioner might diagnose the gravity of a detected inclusion based on various features. In our fictitious example we show how it is possible for an agent to prioritize between two features by simply changing the palpation strategy.

The unsupervised analysis framework proposed in this work is meant to assess how the robot’s palpation technique may influence its ability to diagnose hard inclusions in soft tissue. Without need for explicit labels or knowledge of whether the tissue under palpation has abnormal lumps, the framework can still inform a robotic agent on what type of haptic interaction is likely to be most discriminative. As such, the framework is most useful when used as a pre-learning step, before any actual supervised learning takes place. We show this with a simple supervised test case, where the most discriminative palpation actions are found to be coherent with the unsupervised analysis proposed. In the test case application of the proposed framework a robot is made to palpate a clustered phantom, and an SVM multi-class classifier is trained on the minimal possible number of samples per class. The classifier is shown to perform best when employing the highest scoring motion strategy, as detected by the proposed framework. The chosen strategy is shown to improve the classification accuracy by up to 33 percentage points over the average. More interestingly, we observe that the silhouette analysis based on our method can predict the general relative performance of the classification a priori. The SVM-based classifier utilized is effective in showing the usefulness of the analysis; however, it is too simplistic to outperform any other state-of-the-art supervised learning system. The SVM, in fact, performs simple one-shot learning, and tries to classify lumps based on only one example of each type. Although not in the scope of this research, more complex inference methods can also be considered, and are likely to still benefit from the analysis shown in this paper.

As a parametric discretization of the sizes of the lumps was necessary for the analysis in this paper, the work described palpation on 5 different types of spherical inclusions; no evidence was therefore shown for other types or sizes. As the analysis itself is independent of the size or type of lump, we believe it can extend to any lump parametrization necessary, as suggested by the consistency of the results when grouping the lumps into 2, 3 or 5 types (see Sect. 5). However, a second assumption concerns the location of the inclusion, should one be present in the tissue under palpation. Here it is key that both the type of lump and its location with respect to the examining probe are approximately the same across experiments. Should this not hold true, the unsupervised clustering method may assign two lumps of the same type to different clusters. Additional research is therefore necessary on haptic search algorithms that locate, rather than discriminate between, lumps in soft tissue.

Finally, the parameters were optimized with respect to the actual tissue under palpation, and are thus likely to be valid only for the phantom devised for these experiments. For any new tissue under palpation, however, the same analysis can be applied, and new optimized parameters retrieved without knowledge of whether an inclusion is or is not present under the palpated tissue. This can serve as a first filtering procedure before carrying out more expensive, supervised experiments, where the breadth of possible robot actions may be too large to be employed in full. Moreover, the analysis can retrieve motion parameters which maximize information retention in a lower-dimensional sensor inference space, information structure within the retrieved haptic data, or both.

References

Bendtsen, L., Jensen, R., Jensen, N., & Olesen, J. (1995). Pressure-controlled palpation: A new technique which increases the reliability of manual palpation. Cephalalgia, 15(3), 205–210.

Bohg, J., Hausman, K., Sankaran, B., Brock, O., Kragic, D., Schaal, S., et al. (2017). Interactive perception: Leveraging action in perception and perception in action. IEEE Transactions on Robotics, 33(6), 1273–1291.

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Dahiya, R., Metta, G., Valle, M., & Sandini, G. (2010). Tactile sensing-from humans to humanoids. IEEE Transactions on Robotics, 26(1), 1–20.

Dario, P., & Bergamasco, M. (1988). An advanced robot system for automated diagnostic tasks through palpation. IEEE Transactions on Biomedical Engineering, 35(2), 118–126.

Davaria, S., Najafi, F., Mahjoob, M., & Motahari-Bidgoli, S. (2014). Design and fabrication of a robotic tactile device for abdominal palpation. In 2014 second RSI/ISM international conference on robotics and mechatronics (ICRoM), IEEE, (pp. 339–344).

Drimus, A., Kootstra, G., Bilberg, A., & Kragic, D. (2014). Design of a flexible tactile sensor for classification of rigid and deformable objects. Robotics and Autonomous Systems, 62(1), 3–15.

Eberman, L. E., & Finn, M. E. (2010). Enhancing clinical evaluation skills: Palpation as the principal skill. Athletic Training Education Journal, 5(4), 170–175.

Gwilliam, J. C., Pezzementi, Z., Jantho, E., Okamura, A. M., & Hsiao, S. (2010). Human vs. robotic tactile sensing: Detecting lumps in soft tissue. In 2010 IEEE haptics symposium, IEEE (pp. 21–28).

Herzig, N., Maiolino, P., Iida, F., & Nanayakkara, T. (2018). A variable stiffness robotic probe for soft tissue palpation. IEEE Robotics and Automation Letters, 3(2), 1168–1175.

Hoffmann, M., & Pfeifer, R. (2012). The implications of embodiment for behavior and cognition: animal and robotic case studies. arXiv preprint arXiv:1202.0440.

Hui, J. C., Block, A. E., Taylor, C. J., & Kuchenbecker, K. J. (2016). Robust tactile perception of artificial tumors using pairwise comparisons of sensor array readings. In 2016 IEEE Haptics symposium (HAPTICS), IEEE (pp. 305–312).

Hui, J. C., & Kuchenbecker, K. J. (2014). Evaluating the BioTac’s ability to detect and characterize lumps in simulated tissue. In International conference on human haptic sensing and touch enabled computer applications (pp. 295–302). Berlin: Springer.

Iida, F., & Nurzaman, S. (2016). Adaptation of sensor morphology: An integrative view of perception from biologically inspired robotics perspective. Interface Focus, 6, 20160016.

Kato, I., Koganezawa, K., & Takanishi, A. (1988). Automatic breast cancer palpation robot: Wapro-4. Advanced Robotics, 3(4), 251–261.

Konstantinova, J., Cotugno, G., Dasgupta, P., Althoefer, K., & Nanayakkara, T. (2017). Palpation force modulation strategies to identify hard regions in soft tissue organs. PLoS ONE, 12(2), e0171706.

Konstantinova, J., Jiang, A., Althoefer, K., Dasgupta, P., & Nanayakkara, T. (2014a). Implementation of tactile sensing for palpation in robot-assisted minimally invasive surgery: A review. IEEE Sensors Journal, 14(8), 2490–2501.

Konstantinova, J., Li, M., Mehra, G., Dasgupta, P., Althoefer, K., & Nanayakkara, T. (2014b). Behavioral characteristics of manual palpation to localize hard nodules in soft tissues. IEEE Transactions on Biomedical Engineering, 61(6), 1651–1659.

Li, B., Shi, Y., Fontecchio, A., & Visell, Y. (2017). Mechanical imaging of soft tissues with a highly compliant tactile sensing array. IEEE Transactions on Biomedical Engineering, 65(3), 687–697.

Lloyd, S. (1982). Least squares quantization in PCM. IEEE Transactions on Information Theory, 28(2), 129–137. https://doi.org/10.1109/TIT.1982.1056489.

Lungarella, M., Metta, G., Pfeifer, R., & Sandini, G. (2003). Developmental robotics: A survey. Connection Science, 15(4), 151–190.

Lungarella, M., Pegors, T., Bulwinkle, D., & Sporns, O. (2005). Methods for quantifying the informational structure of sensory and motor data. Neuroinformatics, 3(3), 243–262.

McLaughlin, M. L., Hespanha, J. P., & Sukhatme, G. S. (2002). Introduction to haptics. In Touch in virtual environments (pp. 1–31). MISC Press Multimedia Series (Prentice Hall).

Nolfi, S., & Marocco, D. (2002). Active perception: A sensorimotor account of object categorization. In From animals to animats 7: Proceedings of the VII international conference on simulation of adaptive behavior, et al., Cambridge, MA (pp. 266–271).

Olsson, L., Nehaniv, C. L., & Polani, D. (2004). Sensory channel grouping and structure from uninterpreted sensor data. In Proceedings of NASA/DoD conference on evolvable hardware, IEEE, (pp. 153–160).

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., et al. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Pfeifer, R., Lungarella, M., Sporns, O., & Kuniyoshi, Y. (2007). On the information theoretic implications of embodiment–principles and methods. In 50 years of artificial intelligence, Berlin: Springer (pp. 76–86).

Pfeifer, R., & Scheier, C. (1997). Sensory-motor coordination: The metaphor and beyond. Robotics and Autonomous Systems, 20(2–4), 157–178.

Puangmali, P., Althoefer, K., Seneviratne, L., Murphy, D., & Dasgupta, P. (2008). State-of-the-art in force and tactile sensing for minimally invasive surgery. IEEE Sensors Journal, 8(4), 371–381.

Rousseeuw, P. J. (1987). Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. Journal of Computational and Applied Mathematics, 20, 53–65.

Schmitz, A., Maiolino, P., Maggiali, M., Natale, L., Cannata, G., & Metta, G. (2011). Methods and technologies for the implementation of large-scale robot tactile sensors. IEEE Transactions on Robotics, 27(3), 389–400. https://doi.org/10.1109/TRO.2011.2132930.

Scimeca, L., Maiolino, P., & Iida, F. (2018). Soft morphological processing of tactile stimuli for autonomous category formation. In IEEE international conference on soft robotics, Robosoft.

Sornkarn, N., & Nanayakkara, T. (2016). The efficacy of interaction behavior and internal stiffness control for embodied information gain in haptic perception. In IEEE international conference on robotics and automation (ICRA), IEEE (pp. 2657–2662).

Sporns, O., & Lungarella, M. (2006). Evolving coordinated behavior by maximizing information structure. In Artificial life X: Proceedings of the tenth international conference on the simulation and synthesis of living systems, Citeseer (vol. 10, p 323).

Tipping, M. E., & Bishop, C. M. (1999). Probabilistic principal component analysis. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 61(3), 611–622. https://doi.org/10.1111/1467-9868.00196.

Trejos, A. L., Jayender, J., Perri, M., Naish, M. D., Patel, R. V., & Malthaner, R. (2009). Robot-assisted tactile sensing for minimally invasive tumor localization. The International Journal of Robotics Research, 28(9), 1118–1133.

Tuci, E., Massera, G., & Nolfi, S. (2009). On the dynamics of active categorisation of different objects shape through tactile sensors. In European conference on artificial life, Berlin: Springer (pp. 124–131).

Wang, N., Gerling, G. J., Childress, R. M., & Martin, M. L. (2010). Quantifying palpation techniques in relation to performance in a clinical prostate exam. IEEE Transactions on Information Technology in Biomedicine, 14(4), 1088–1097.

Yen, P. L. (2003). Palpation sensitivity analysis of exploring hard objects under soft tissue. In Proceedings of IEEE/ASME international conference on advanced intelligent mechatronics, AIM, IEEE (vol. 2, pp. 1102–1106).

This work was funded by the UK Agriculture and Horticulture Development Board and by The United Kingdom Engineering and Physical Sciences Research Council (EPSRC) MOTION Grant [EP/N03211X/2].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scimeca, L., Maiolino, P., Bray, E. et al. Structuring of tactile sensory information for category formation in robotics palpation. Auton Robot 44, 1377–1393 (2020). https://doi.org/10.1007/s10514-020-09931-y

DOI: https://doi.org/10.1007/s10514-020-09931-y