Abstract

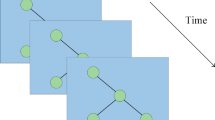

As an essential part of traffic management, traffic flow prediction has attracted worldwide attention in the field of intelligent transportation systems (ITSs). Complicated spatial dependencies arising from well-connected road networks, together with time-varying traffic dynamics, make this problem extremely challenging. Recent works model this complicated spatial-temporal dependence with graph neural networks that use either a fixed weighted graph or an adaptive adjacency matrix. However, fixed-graph methods cannot address data drift caused by changes in the road network structure, while adaptive methods are time-consuming and prone to overfitting because the learning algorithm thoroughly optimizes the adaptive matrix. To address these issues, we propose a principal graph embedding convolutional recurrent network (PGECRN) for accurate traffic flow prediction. First, we propose the adjacency matrix graph embedding (AMGE) generation algorithm to solve the data drift problem. AMGE models the distribution of spatiotemporal series after data drift by extracting the principal components of the original adjacency matrix and applying an adaptive transformation to them. It also has fewer parameters, which alleviates overfitting. Second, beyond spatial correlations, traffic flow data are also temporally dynamic, so we capture temporal variation by integrating gated recurrent units (GRUs) with AMGE to form the proposed model. Finally, PGECRN is evaluated on two real-world highway datasets, PeMSD4 and PeMSD8. Compared with existing baselines, our model achieves better prediction accuracy, showing that it models traffic flow accurately and efficiently.
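The abstract describes AMGE only at a high level: extract the principal components of the original adjacency matrix, then adapt them with a learned transformation, and combine the result with GRUs. The sketch below is one plausible reading under stated assumptions, not the authors' algorithm. It assumes a symmetric adjacency matrix, uses the top-k eigenvectors (scaled by the square root of the absolute eigenvalue) as node embeddings, applies a small k×k matrix `W` as the only trainable part of the graph, and rebuilds a row-normalized adjacency as softmax(ReLU(E Eᵀ)) in the style of AGCRN's adaptive graph. That adjacency then drives one GRU step whose gates are one-hop graph convolutions. The names `amge` and `graph_gru_step`, the parameter dictionary, and all shapes are hypothetical.

```python
import numpy as np

def amge(adj: np.ndarray, k: int, W: np.ndarray) -> np.ndarray:
    """Hypothetical AMGE-style adaptive adjacency (a sketch, not the paper's method).

    adj: (N, N) symmetric adjacency matrix of the road network.
    k:   number of principal components kept.
    W:   (k, k) trainable transformation (assumption: the only learned graph part).
    """
    # Eigen-decomposition of the symmetric matrix; keep the k components
    # with the largest absolute eigenvalues.
    vals, vecs = np.linalg.eigh(adj)
    idx = np.argsort(-np.abs(vals))[:k]
    emb = vecs[:, idx] * np.sqrt(np.abs(vals[idx]))      # (N, k) node embeddings
    emb = emb @ W                                         # adaptive transformation
    scores = np.maximum(emb @ emb.T, 0.0)                 # ReLU(E E^T)
    scores = scores - scores.max(axis=1, keepdims=True)   # numerically stable softmax
    expd = np.exp(scores)
    return expd / expd.sum(axis=1, keepdims=True)         # row-stochastic (N, N)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def graph_gru_step(a_hat, x, h, params):
    """One GRU step whose linear maps are one-hop graph convolutions a_hat @ [x, h] @ W."""
    xh = np.concatenate([x, h], axis=1)                     # (N, F + H)
    z = sigmoid(a_hat @ xh @ params["Wz"] + params["bz"])   # update gate
    r = sigmoid(a_hat @ xh @ params["Wr"] + params["br"])   # reset gate
    xrh = np.concatenate([x, r * h], axis=1)
    c = np.tanh(a_hat @ xrh @ params["Wc"] + params["bc"])  # candidate state
    return z * h + (1.0 - z) * c                            # GRU state update


# Usage: 5 nodes, 1 input feature, 4 hidden units, 3 principal components.
rng = np.random.default_rng(0)
N, F, H, k = 5, 1, 4, 3
adj = rng.random((N, N))
adj = (adj + adj.T) / 2                                     # make it symmetric
a_hat = amge(adj, k, np.eye(k))
params = {name: rng.normal(scale=0.1, size=(F + H, H)) for name in ("Wz", "Wr", "Wc")}
params.update({name: np.zeros(H) for name in ("bz", "br", "bc")})
h = np.zeros((N, H))
for _ in range(12):                                         # 12 input time steps
    h = graph_gru_step(a_hat, rng.random((N, F)), h, params)
print(a_hat.shape, h.shape)  # (5, 5) (5, 4)
```

The design choice illustrated here is the parameter count: the graph is controlled by a k×k matrix rather than a full N×d embedding table, which is the kind of saving the abstract credits with reducing overfitting.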

References

Zhang J, Wang F-Y, Wang K, Lin W-H, Xu X, Chen C (2011) Data-driven intelligent transportation systems: a survey. IEEE Trans Intell Transp Syst 12(4):1624–1639

Cui Z, Henrickson K, Ke R, Wang Y (2019) Traffic graph convolutional recurrent neural network: a deep learning framework for network-scale traffic learning and forecasting. IEEE Trans Intell Transp Syst 21(11):4883–4894

Wang Z, Su X, Ding Z (2020) Long-term traffic prediction based on lstm encoder-decoder architecture. IEEE Trans Intell Transp Syst 22(10):6561–6571

Jia T, Yan P (2020) Predicting citywide road traffic flow using deep spatiotemporal neural networks. IEEE Trans Intell Transp Syst 22(5):3101–3111

Yu B, Yin H, Zhu Z (2018) Spatio-temporal graph convolutional networks: a deep learning framework for traffic forecasting. In: Proceedings of the 27th international joint conference on artificial intelligence, pp 3634–3640

Guo S, Lin Y, Feng N, Song C, Wan H (2019) Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 922–929

Jia W, Tan Y, Liu L, Li J, Zhang H, Zhao K (2019) Hierarchical prediction based on two-level gaussian mixture model clustering for bike-sharing system. Knowl-Based Syst 178:84–97

Wu Z, Pan S, Long G, Jiang J, Zhang C (2019) Graph wavenet for deep spatial-temporal graph modeling. In: Proceedings of the 28th international joint conference on artificial intelligence, pp 1907–1913

Bai L, Yao L, Li C, Wang X, Wang C (2020) Adaptive graph convolutional recurrent network for traffic forecasting. Adv Neural Inf Process Syst 33:17804–17815

Lin Z, Feng J, Lu Z, Li Y, Jin D (2019) Deepstn+: context-aware spatial-temporal neural network for crowd flow prediction in metropolis. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 1020–1027

Geng X, Li Y, Wang L, Zhang L, Yang Q, Ye J, Liu Y (2019) Spatiotemporal multi-graph convolution network for ride-hailing demand forecasting. In: Proceedings of the AAAI conference on artificial intelligence, vol 33, pp 3656–3663

Wang H, Zhang R, Cheng X, Yang L (2022) Hierarchical traffic flow prediction based on spatial-temporal graph convolutional network. IEEE Trans Intell Transp Syst

Koesdwiady A, Soua R, Karray F (2016) Improving traffic flow prediction with weather information in connected cars: a deep learning approach. IEEE Trans Veh Technol 65(12):9508–9517

Guo J, Williams BM (2010) Real-time short-term traffic speed level forecasting and uncertainty quantification using layered kalman filters. Transp Res Rec 2175(1):28–37

Gu Y, Lu W, Xu X, Qin L, Shao Z, Zhang H (2019) An improved bayesian combination model for short-term traffic prediction with deep learning. IEEE Trans Intell Transp Syst 21(3):1332–1342

Zheng H, Lin F, Feng X, Chen Y (2020) A hybrid deep learning model with attention-based conv-lstm networks for short-term traffic flow prediction. IEEE Trans Intell Transp Syst 22(11):6910–6920

Cheng G, Yang C, Yao X, Guo L, Han J (2018) When deep learning meets metric learning: remote sensing image scene classification via learning discriminative cnns. IEEE Trans Geosci Remote Sens 56(5):2811–2821

Wang J, Yang Y, Mao J, Huang Z, Huang C, Xu W (2016) Cnn-rnn: a unified framework for multi-label image classification. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2285–2294

Zhong Z, Li J, Luo Z, Chapman M (2017) Spectral–spatial residual network for hyperspectral image classification: a 3-d deep learning framework. IEEE Trans Geosci Remote Sens 56(2):847–858

Baevski A, Hsu W-N, Conneau A, Auli M (2021) Unsupervised speech recognition. Adv Neural Inf Process Syst 34:27826–27839

Saon G, Tüske Z, Bolanos D, Kingsbury B (2021) Advancing rnn transducer technology for speech recognition. In: ICASSP 2021-2021 IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 5654–5658. IEEE

Gu Y, Tinn R, Cheng H, Lucas M, Usuyama N, Liu X, Naumann T, Gao J, Poon H (2021) Domain-specific language model pretraining for biomedical natural language processing. ACM Trans Comput Healthc 3(1):1–23

Hong W-C, Dong Y, Zheng F, Wei SY (2011) Hybrid evolutionary algorithms in a svr traffic flow forecasting model. Appl Math Comput 217(15):6733–6747

Qi T, Li G, Chen L, Xue Y (2021) Adgcn: an asynchronous dilation graph convolutional network for traffic flow prediction. IEEE Internet Things J 9(5):4001–4014

Li Y, Yu R, Shahabi C, Liu Y (2018) Diffusion convolutional recurrent neural network: data-driven traffic forecasting. In: International conference on learning representations

Chen Z, Lu Z, Chen Q, Zhong H, Zhang Y, Xue J, Wu C (2022) A spatial-temporal short-term traffic flow prediction model based on dynamical-learning graph convolution mechanism. arXiv:2205.04762

Lai Q, Tian J, Wang W, Hu X (2022) Spatial-temporal attention graph convolution network on edge cloud for traffic flow prediction. IEEE Trans Intell Transp Syst

Kong X, Zhang J, Wei X, Xing W, Lu W (2022) Adaptive spatial-temporal graph attention networks for traffic flow forecasting. Appl Intell 52(4):4300–4316

Belhadi A, Djenouri Y, Djenouri D, Lin JC-W (2020) A recurrent neural network for urban long-term traffic flow forecasting. Appl Intell 50(10):3252–3265

Zhao Z, Chen W, Wu X, Chen PC, Liu J (2017) Lstm network: a deep learning approach for short-term traffic forecast. IET Intell Transp Syst 11(2):68–75

Fu R, Zhang Z, Li L (2016) Using lstm and gru neural network methods for traffic flow prediction. In: 2016 31st Youth academic annual conference of chinese association of automation (YAC), pp 324–328. IEEE

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Cho K, van Merriënboer B, Bahdanau D, Bengio Y (2014) On the properties of neural machine translation: encoder–decoder approaches. In: Proceedings of SSST-8, eighth workshop on syntax, semantics and structure in statistical translation, pp 103–111

Zhao L, Song Y, Zhang C, Liu Y, Wang P, Lin T, Deng M, Li H (2019) T-gcn: a temporal graph convolutional network for traffic prediction. IEEE Trans Intell Transp Syst 21(9):3848–3858

Zhang J, Zheng Y, Qi D (2017) Deep spatio-temporal residual networks for citywide crowd flows prediction. In: Thirty-first AAAI conference on artificial intelligence

Guo S, Lin Y, Li S, Chen Z, Wan H (2019) Deep spatial–temporal 3d convolutional neural networks for traffic data forecasting. IEEE Trans Intell Transp Syst 20(10):3913–3926

Pan S, Hu R, Fung S-f, Long G, Jiang J, Zhang C (2019) Learning graph embedding with adversarial training methods. IEEE Trans Cybern 50(6):2475–2487

Kipf TN, Welling M (2017) Semi-supervised classification with graph convolutional networks. In: International conference on learning representations

Wang C, Pan S, Long G, Zhu X, Jiang J (2017) Mgae: marginalized graph autoencoder for graph clustering. In: Proceedings of the 2017 ACM on conference on information and knowledge management, pp 889–898

Yan S, Xiong Y, Lin D (2018) Spatial temporal graph convolutional networks for skeleton-based action recognition. In: Thirty-second AAAI conference on artificial intelligence

Zhang M, Chen Y (2018) Link prediction based on graph neural networks. Adv Neural Inf Process Syst, vol 31

Niepert M, Ahmed M, Kutzkov K (2016) Learning convolutional neural networks for graphs. In: International conference on machine learning, pp 2014–2023. PMLR

Hammond DK, Vandergheynst P, Gribonval R (2011) Wavelets on graphs via spectral graph theory. Appl Comput Harmon Anal 30(2):129–150

Drucker H, Burges CJ, Kaufman L, Smola A, Vapnik V (1996) Support vector regression machines. Adv Neural Inf Process Syst, vol 9

Ethics declarations

This work is supported in part by the National Key Research and Development Project under Grant 2020YFB2103900, in part by the National Natural Science Foundation of China under Grant 61936014, in part by the Shanghai Municipal Science and Technology Major Project under Grant 2021SHZDZX0100, in part by the Shanghai Science and Technology Innovation Action Plan Project No. 22511105300, and in part by the Fundamental Research Funds for the Central Universities.

Appendix A: Baseline parameter settings

We list the experimental parameter settings for the baseline methods as follows:

- HA: Historical Average, which models traffic flow as a seasonal process and uses the weighted average of previous seasons as the prediction. The period is 1 week, and the prediction is based on aggregated data from previous weeks.
- SVR: Linear Support Vector Regression, with penalty term C = 1 and 12 historical observations as input.
- GRU: Gated Recurrent Unit with 16 hidden layers of 512 units each. The model is trained with a batch size of 64, a learning rate of 0.001, and MAE as the loss function.
- LSTM: Long Short-Term Memory with 16 hidden layers of 512 units each. The model is trained with a batch size of 64, a learning rate of 0.001, and MAE as the loss function.
- T-GCN: Temporal Graph Convolutional Network, with a learning rate of 0.001, a batch size of 32, 5000 training epochs, and 100 hidden units.
- STGCN: Spatio-Temporal Graph Convolutional Network. The channels of the three layers in the ST-Conv block are 64, 16, and 64. Both the graph convolution kernel size K and the temporal convolution kernel size Kt are set to 3.
- ASTGCN: Attention Based Spatial-Temporal Graph Convolutional Network. The Chebyshev polynomial order K and the kernel size along the temporal dimension are both set to 3. All graph convolution layers and temporal convolution layers use 64 convolution kernels.
- DCRNN: Diffusion Convolutional Recurrent Neural Network. The encoder and decoder each contain two recurrent layers with 64 units per layer, the initial learning rate is 0.01, and the maximum number of random-walk steps is set to 3.
- Graph WaveNet: eight layers with the dilation factor sequence 1, 2, 1, 2, 1, 2, 1, 2. The diffusion step in the graph convolution layer is set to 2. Node embeddings of size 10 are randomly initialized from a uniform distribution. The model uses the Adam optimizer with an initial learning rate of 0.001.
- AGCRN: Adaptive Graph Convolutional Recurrent Network, with two layers and 64 hidden units in every AGCRN cell. The embedding dimension is 10. The model is optimized with the L1 loss and the Adam optimizer for 100 epochs, with a learning rate of 0.003.
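The HA baseline and the 12-observation input windows used for SVR can be sketched in a few lines. This is an illustration under assumptions the appendix does not state: the series is sampled every 5 minutes (so one weekly period is 2016 steps), and the per-season weights default to uniform because the appendix says "weighted average" without giving the weights. The helper names `historical_average` and `sliding_windows` are hypothetical.

```python
import numpy as np

STEPS_PER_DAY = 24 * 12        # assumption: 5-minute sampling interval
PERIOD = 7 * STEPS_PER_DAY     # one weekly season = 2016 steps


def historical_average(series, t, n_seasons, weights=None, period=PERIOD):
    """Predict series[t] as a weighted average of series[t - period], series[t - 2*period], ...

    weights: optional per-season weights, most recent season first; uniform if None.
    """
    lags = [t - s * period for s in range(1, n_seasons + 1)]
    if lags[-1] < 0:
        raise ValueError("not enough history for the requested number of seasons")
    past = series[lags]
    w = np.ones(n_seasons) if weights is None else np.asarray(weights, dtype=float)
    return float(np.dot(w, past) / w.sum())


def sliding_windows(series, n_hist=12):
    """Build (inputs, targets): n_hist past steps predict the next step."""
    X = np.stack([series[i:i + n_hist] for i in range(len(series) - n_hist)])
    y = series[n_hist:]
    return X, y


# Usage on a perfectly weekly synthetic signal.
weeks = 3
series = np.tile(np.arange(PERIOD, dtype=float), weeks + 1)
t = weeks * PERIOD + 100
print(historical_average(series, t, n_seasons=weeks))  # 100.0
X, y = sliding_windows(series[:50])
print(X.shape, y.shape)  # (38, 12) (38,)
```

The windows from `sliding_windows` could then be passed to a linear SVR, for example scikit-learn's `LinearSVR(C=1.0)`, to mirror the C = 1 setting listed above.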
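STGCN and ASTGCN above both set the Chebyshev graph convolution parameter K to 3. The generic NumPy sketch below shows what that means: the scaled Laplacian L̃ = 2L/λ_max − I, the recursion T₀ = I, T₁ = L̃, T_k = 2L̃T_{k−1} − T_{k−2} (the Chebyshev approximation described by Hammond et al.), and the filter Σ_k T_k(L̃) X Θ_k with K terms. It is not the baselines' own code; the path graph, random weights, and 64 output channels (matching the ASTGCN kernel count) are illustrative assumptions.

```python
import numpy as np


def scaled_laplacian(adj):
    """L~ = 2 L / lambda_max - I, with normalized Laplacian L = I - D^-1/2 A D^-1/2."""
    n = adj.shape[0]
    deg = adj.sum(axis=1)
    # Guard against isolated nodes (degree 0) to avoid division by zero.
    d_inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.where(deg > 0, deg, 1.0)), 0.0)
    lap = np.eye(n) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    lam_max = np.linalg.eigvalsh(lap).max()
    return 2.0 * lap / lam_max - np.eye(n)


def cheb_polynomials(l_tilde, K):
    """Return [T_0, ..., T_{K-1}] with T_k = 2 L~ T_{k-1} - T_{k-2}."""
    polys = [np.eye(l_tilde.shape[0]), l_tilde.copy()]
    for _ in range(2, K):
        polys.append(2.0 * l_tilde @ polys[-1] - polys[-2])
    return polys[:K]


def cheb_conv(x, polys, thetas):
    """sum_k T_k(L~) x Theta_k for x: (N, F_in) and thetas: (K, F_in, F_out)."""
    return sum(t @ x @ th for t, th in zip(polys, thetas))


# Usage: a 4-node path graph, 2 input features, K = 3, 64 output channels.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
polys = cheb_polynomials(scaled_laplacian(adj), K=3)
x = np.random.default_rng(0).random((4, 2))
thetas = np.random.default_rng(1).normal(size=(3, 2, 64))
print(cheb_conv(x, polys, thetas).shape)  # (4, 64)
```

With K = 3 each output node mixes information from nodes at most two hops away, since T₂(L̃) is a second-degree polynomial in L̃.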

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Han, Y., Zhao, S., Deng, H. et al. Principal graph embedding convolutional recurrent network for traffic flow prediction. Appl Intell 53, 17809–17823 (2023). https://doi.org/10.1007/s10489-022-04211-x

DOI: https://doi.org/10.1007/s10489-022-04211-x