Abstract

We present an algorithm for the rigorous integration of delay differential equations (DDEs) of the form \(x'(t)=f(x(t-\tau ),x(t))\). As an application, we give a computer-assisted proof of the existence of two attracting periodic orbits (before and after the first period-doubling bifurcation) in the Mackey–Glass equation.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The goal of this paper is to present an algorithm for the rigorous integration of delay differential equations (DDEs) of the form

where \(0 < \tau \in \mathbb {R}\).

Despite its apparent simplicity, Eq. (1) generates all kinds of possible dynamical behaviors: from simple stationary solutions to chaotic attractors. For example, this happens for the well-known Mackey–Glass equation:

for which numerical experiments show the existence of a series of period-doubling bifurcations which lead to the creation of an apparent chaotic attractor [16, 17]. Later in the paper, we will apply our rigorous integrator to this equation.

There are many important works that establish the existence and the shape of a (global) attractor under various assumptions on f in Eq. (1). Much is known about systems of the form \(\dot{x} = -\mu x(t) + f\left( x(t-1)\right) \) when f is strictly monotonic, either positive or negative [9]. Let us mention here a few developments in this direction. Mallet-Paret and Sell used discrete Lyapunov functionals to prove a Poincaré–Bendixson-type theorem for special kind of monotone systems [19]. Krisztin et al. have conducted a thorough study on systems having a monotone positive feedback, including studies on the conditions needed to obtain the shape of a global attractor; see [11] and references therein. In the case of a monotonic positive feedback f and under some assumptions on the stationary solutions, Krisztin and Vas proved that there exist large amplitude slowly oscillatory periodic solutions (LSOPs) which revolve around more than one stationary solution. Together with their unstable manifolds, connecting them with the classical spindle-like structure, they constitute the full global attractor for the system [10]. In a recent work, Vas showed that f may be chosen such that the structure of the global attractor may be arbitrarily complicated (containing an arbitrary number of unstable LSOPs) [30].

Lani-Wayda and Walther were able to construct systems of the form \(\dot{x} = f\left( x(t-1)\right) \) for which they proved the existence of transversal homoclinic trajectory, and a hyperbolic set on which the dynamics are chaotic [13].

Srzednicki and Lani-Wayda proved, by the use of the generalized Lefshetz fixed point theorem, the existence of multiple periodic orbits and the existence of chaos for some periodic, tooth-shaped (piecewise linear) f [12].

The results from [10, 12, 13, 30], while impressive, are established for functions which are close to piecewise affine ones. The authors of these works construct equations where an interesting behavior appears; however, it is not clear how to apply their techniques for some well-known equations.

In recent years, there appeared many computer-assisted proofs of various dynamical properties for ordinary differential equations and (dissipative) partial differential equations by an application of arguments from the geometric theory of dynamical systems plus the rigorous integration; see, for example, [2, 7, 20, 29, 32, 36] and references therein. By the computer-assisted proof, we understand a computer program which rigorously checks assumptions of abstract theorems. This paper is an attempt to extend this approach to the case of DDEs by creating a rigorous forward-in-time integration scheme for Eq. (1). By the rigorous integration we understand a computer procedure which produces rigorous bounds for the true solution. In the case of DDEs, the integrator should reflect the fact that, after the integration time longer than the delay \(\tau \), the solution becomes smoother, which gives the compactness of the evolution operator. Having an integrator, one should be able to directly apply standard tools from dynamics such as Poincaré maps, various fixed point theorem. In this paper, as an application, we present computer-assisted proofs of the existence of two stable periodic orbits for Mackey–Glass equation; however, we do not prove that these orbits are attracting.

There are several papers that deal with computer-assisted proofs of periodic solutions to DDEs [8, 14, 33], but the approach used there is very different from our method. These works transform the question of the existence of periodic orbits into a boundary value problem (BVP), which is then solved by using the Newton–Kantorovich theorem [8, 14] or the local Brouwer degree [33]. It is clear that the rigorous integration may be used to obtain more diverse spectrum of results. There are also several interesting results that apply rigorous numerical computations to solve problems for DDEs [3, 4], but they do not rely on the rigorous, forward-in-time integration of DDEs.

The rest of the paper is organized as follows. Section 2 describes the theory and algorithms for the integration of Eq. (1). Section 3 defines the notion of the Poincaré map and discusses computation of the Poincaré map using the rigorous integrator. Section 4 presents an application of the method to prove the existence of two stable periodic orbits in the Mackey–Glass equation (Eq. (2)). Here, we investigate case for \(n = 6\) (before the first period-doubling bifurcation) and for \(n = 8\) (after the first period-doubling bifurcation). To the best of our knowledge, these are the first rigorous proofs of the existence of these orbits. The presented method has been also successfully used by the first author to prove the existence of multiple periodic orbits in some other nonlinear DDEs [25].

1.1 Notation

We use the following notation. For a function \(f : \mathbb {R} \rightarrow \mathbb {R}\), by \(f^{(k)}\), we denote the kth derivative of f. By \(f^{[k]}\), we denote the term \(\frac{1}{k!}\cdot f^{(k)}\). In the context of piecewise smooth maps by \(f^{(k)}(t^-)\) and \(f^{(k)}(t^+)\), we denote the one-sided derivatives f w.r.t. t.

For \(F : \mathbb {R}^m \rightarrow \mathbb {R}^n\) by DF(z), we denote the matrix \(\left( \frac{\partial F_i}{\partial x_j}(z) \right) _{i \in \{1, \ldots , n\}, j \in \{1, \ldots , m\}}\).

For a given set A, by \(\mathrm{cl}\,(A)\) and \(\mathrm{int}\,(A)\), we denote the closure and interior of A, respectively (in a given topology, e.g., defined by the norm in the considered Banach space).

Let \(A = \varPi _{i=1}^n [a_i, b_i]\) for \(a_i \le b_i\), \(a_i, b_i \in \mathbb {R}\). Then, we call A an interval set (a product of closed intervals in \(\mathbb {R}^n\)). For any \(A \subset \mathbb {R}^n\), we denote by hull(A) a minimal interval set, such that \(A \subset hull(A)\). If \(A \subset \mathbb {R}\) is bounded then \(hull(A) = [\inf (A), \sup (A)]\). For sets \(A \subset \mathbb {R}\), \(B \subset \mathbb {R}\), \(a \in \mathbb {R}\) and for some binary operation \(\diamond : \mathbb {R} \times \mathbb {R} \rightarrow \mathbb {R}\) we define \(A \diamond B = \left\{ a \diamond b: a \in A, b \in B\right\} \) and \(a \diamond A = A \diamond a = \{ a \} \diamond A\). Analogously, for \(g : \mathbb {R} \rightarrow \mathbb {R}\) and a set \(A \in \mathbb {R}\) we define \(g(A) = \{g(a) \ | \ a \in A\}\).

For \(v \in \mathbb {R}^n\) by \(\pi _i v\) for \(i \in \{1, 2, \ldots , n \}\), we denote the projection of v onto the ith coordinate. For vectors \(u, v \in \mathbb {R}^n\) by \(u \cdot v\), we denote the standard scalar product: \(u \cdot v = \sum _{i=1}^{n} \pi _i v \cdot \pi _i u\)

We denote by \(C^r\left( D, \mathbb {R}\right) \) the space of all functions of class \(C^r\) over a compact set \(D \subset \mathbb {R}\), equipped with the supremum \(C^r\) norm: \(\Vert g \Vert = \sum _{i=0}^{r} \sup _{x \in D} | g^{(i)}(x) |\). In case \(D = [-\tau , 0]\), when \(\tau \) is known from the context, we will write \(C^k\) instead of \(C^k\left( [-\tau ,0], \mathbb {R}\right) \).

For a given function \(x : [-1, a) \rightarrow \mathbb {R}\), \(a \in \mathbb {R}_+ \cup \{ \infty \}\) for any \(t \in [0, a)\) we denote by \(x_t\) a function such that \(x_t(s) = x(t+s)\) for all \(s \in [-1, 0]\).

We will often use a symbol in square brackets, e.g., [r], to denote a set in \(\mathbb {R}^m\). Usually it will happen in formulas used in algorithms, when we would like to stress the fact that a given variable represents a set. If both variables r and [r] are used simultaneously, then usually r represents a value in [r]; however, this is not implied by default and it will be always stated explicitly. Please note that the notation [r] does not impose that the set [r] is of any particular shape, e.g., an interval box. We will always explicitly state if the set is an interval box.

For any set X by mid(X), we denote the midpoint of hull(X) and by diam(X) the diameter of hull(X).

1.2 Basic Properties of Solutions to DDEs

For the convenience of the reader, we recall (without proofs) several classical results for DDEs [5].

We define the semiflow \(\varphi \) associated with Eq. (1) by:

where \(x^\psi : [-\tau , a_\psi ) \rightarrow \mathbb {R}\) is a solution to a Cauchy problem:

for a maximal \(a_\psi \in \mathbb {R}_+ \cup \{ \infty \}\) such that the solution exists for all \(t < a_\psi \).

Lemma 1

[Continuous (local) semiflow] If f is (locally) Lipshitz, then \(\varphi \) is a (local) continuous semiflow on \(C^0([-\tau , 0], \mathbb {R})\).

Lemma 2

[Smoothing property] Assume f is of class \(C^m\), \(m > 0\). Let \(n \in \mathbb {N}\) be given and let \(t \ge n \cdot \tau \). If \(x_0 \in C^0\) then \(x_t = \varphi (t, x_0)\) is of class at least \(C^{\min (m+1, n)}\).

The smoothing of solutions gives rise to some interesting objects in DDEs [31]. Assume for a while that \(f \in C^\infty \). Then for any \(n \ge 0\), there exists a set (in fact a manifold) \(M^n \subset C^n\), such that \(M^n\) is forward invariant under \(\varphi \).

It is easy to see that for \(n=1\) we have:

and the conditions for \(M^n\) with \(n > 1\) can be simply obtained by differentiating both sides of (1). We follow [31] and we call \(M^n\) a \(C^n\) solution manifold.

Notice that \(M^n \subset M^k\) for \(k \le n\) and \(\varphi (k \tau , \cdot ) : M^n \rightarrow M^{n+k}\).

2 Rigorous Integration of DDEs

This section is a reorganized excerpt from the Ph.D. dissertation of the first author (Robert Szczelina). A detailed analysis of results from numerical experiments with the proposed methods, more elaborate description of the algorithms, and detailed pseudo-codes of the routines can be found in the original dissertation [24].

2.1 Finite Representation of “Sufficiently Smooth” Functions

Here, we would like to present the basic blocks used in the algorithm for the rigorous integration of Eq. (1). The idea is to implement the Taylor method for Eq. (1) based on the piecewise polynomial representation of the solutions plus a remainder term. We will work on the equally spaced grid and we will fix the step size of the Taylor method to match the selected grid.

Remark 1

In this section, for the sake of simplicity of presentation, we assume that \(\tau = 1\). All computations can be easily redone for any delay \(\tau \).

We also assume that r.h.s. f of Eq. (1) is “sufficiently smooth” for various expressions to make sense. The class of f in (1) restricts the possible order of the Taylor method that can be used in our algorithms, that is, if f is of class \(C^n\), then we can use Taylor method of order at most n. Therefore, thorough the paper it can be assumed that \(f \in C^\infty \). This is a reasonable assumption in the case of applications of computer-assisted proofs where r.h.s. of equations are usually presented as a composition of elementary functions. The Mackey–Glass equation (2) is a good example (away from \(x = -1\)).

We fix two integers \(n \ge 0\) and \(p > 0\) and we set \(h = \frac{1}{p}\).

Definition 1

By \(C^{n}_{p}\), we denote the set of all functions \(g : [-1, 0] \rightarrow \mathbb {R}\) such that, for \(i \in \left\{ 1,\ldots ,p\right\} \), we have:

-

g is \((n+1)\)-times differentiable on \((-i \cdot h, -i \cdot h + h)\),

-

\(g^{(k)}\left( -i \cdot h^+\right) \) exists for all \(k \in \{0,\ldots ,n+1\}\) and \(\lim \limits _{\xi \rightarrow 0^+} g^{(k)}(-i \cdot h+\xi ) = g^{(k)}(-i \cdot h^+)\),

-

\(g^{(n+1)}\) is continuous and bounded on \((-i \cdot h, -i \cdot h + h)\).

From now on, we will abuse the notation and we will denote the right derivative \(g^{(k)}(-i \cdot h^+)\) by \(g^{(k)}(-i \cdot h)\) unless explicitly stated otherwise. The same holds for \(g^{[k]}(-i \cdot h^+)\). Under this notation, it is clear that we can represent \(g \in C^n_p\) by a piecewise Taylor expansion on each interval \(\left[ -i \cdot h, -i \cdot h + h\right) \). For \(t = - i \cdot h + \varepsilon \) and \(1 \le i \le p\), \(0 \le \varepsilon < h\) we can write:

with \(\xi (\varepsilon ) \in [0, h]\).

In our approach, we store the piecewise Taylor expansion as a finite collection of coefficients \(g^{[k]}(- i \cdot h)\) and interval bounds on \(g^{[n+1]}(\cdot )\) over the whole interval \([-i \cdot h, -i \cdot h + h]\) for \(i \in \{1,\ldots ,p\}\). Our algorithm for the rigorous integration of (1) will then produce rigorous bounds on the solutions to (1) for initial functions defined by such piecewise Taylor expansion.

Please note that we are using here a word functions instead of a single function, as, because of the bounds on \(g^{[n+1]}\) over intervals \([-i\cdot h, -i \cdot h + h]\), the finite piecewise Taylor expansion describes an infinite set of functions in general. This motivates the following definitions.

Definition 2

Let \(g \in C^n_p\) and let \(\mathbb {I} : \{ 1, \ldots , p\} \times \{ 0, \ldots , n\} \rightarrow \{1, \ldots , p \cdot (n+1) \}\) be any bijection.

A minimal (p, n)-representation of g is a pair \(\bar{g} = (a, B)\) such that

Please note, that the index function \(\mathbb {I}\) should be simply understood as an ordering in which, during computations, we store coefficients in a finite-dimensional vector—its precise definition is only important from the programming point of view, see Sect. 2.4 for a particular example of \(\mathbb {I}\). So, in this paper for theoretical considerations, we would like to use the following \({\bar{g}}_{}^{i,[k]}\) notation instead:

-

\({\bar{g}}_{}^{0,[0]} := \pi _{1} a\),

-

\( {\bar{g}}_{}^{i,[k]} := \pi _{1+\mathbb {I}(i,k)} a\),

-

\({\bar{g}}_{}^{i,[n+1]} := \pi _i B\)

We call \({\bar{g}}_{}^{i,[k]}\) the (i,k)th coefficient of the representation and \({\bar{g}}_{}^{i,[n+1]}\) the ith remainder of the representation. The interval set B is called the remainder of the representation. We will call the constant \(m = p \cdot (n+2) + 1\) the size of the (p, n)-representation. When parameters n and p are known from the context, we will omit them and we will call \(\bar{g}\) the minimal representation of g.

Definition 3

We say that \(G \subset C^n_p\) is a (p, n)-f-set (or (p, n)-functions set) if there exists bounded set \([\bar{g}] = (A, C) \subset \mathbb {R}^{p\cdot (n+1)+1} \times \mathbb {R}^p = \mathbb {R}^m\) such that

As the set \([\bar{g}]\) contains the minimal representation of f for any \(f \in G\), we will also say that \([\bar{g}]\) is a (p, n)-representation of G. We will also use G and \([\bar{g}]\) interchangeably and we will write \(f \in [\bar{g}]\) for short, if the context is clear.

Please note that the minimal (p, n)-representation \(\bar{g}\) of g defines (p, n)-f-set \(G \subset C^n_p\), which, in general, contains more than the sole function g. Also, in general, for any (p, n)-f-set G there are functions \(g \in G\) which are discontinuous at grid points \(-i \cdot h\) (see (5)). Sometimes, we will need to assume higher regularity, therefore we define:

Definition 4

Let \(G=[\bar{g}]\) be a (p, n)-f-set. The \(C^k\) -support of G is defined as:

For convenience we also set:

Please note that, in general, \(C^n_p \supset Supp(G) \ne Supp^{(0)}(G) \subset C^0\). It may also happen that \(Supp^{(k)}(G)=\emptyset \) for non-empty G even for \(k=0\).

Now we present three simple facts about the convexity of the support sets. These properties will be important in the context of the computer-assisted proofs and in an application of Theorem 11 to (p, n)-f-sets in Sect. 4.

Lemma 3

For (p, n)-representations \([\bar{g}], [\bar{f}] \subset \mathbb {R}^m\), \(m = p \cdot (n+2) + 1\), the following statements hold true:

-

If \([\bar{g}] \subset [\bar{f}]\), then \(Supp([\bar{g}]) \subset Supp([\bar{f}])\).

-

If \([\bar{g}]\) is a convex set in \(\mathbb {R}^m\), then \(Supp([\bar{g}])\) is a convex set in \(C^n_p\).

-

If \([\bar{g}]\) is a convex set in \(\mathbb {R}^m\), then \(Supp([\bar{g}]) \cap C^k\) is a convex set for any \(k \ge 0\).

We omit the easy proof.

To extract information on \(g^{[k]}\left( -{i \over p} + \varepsilon \right) \) for any i and k having only information stored in a (p, n)-representation, we introduce the following definition.

Definition 5

Let (p, n)-representation \(\bar{g}\) be given. We define

for \(0< \varepsilon < \frac{1}{p}\), \(1 \le i \le p\) and \(0 \le k \le n+1\).

We will omit subscript \(\bar{g}\) in \(c^{i,[k]}_{\bar{g}}(\varepsilon )\) if it is clear from the context. The following lemma follows immediately from the Taylor formula, so we skip the proof:

Lemma 4

Assume \(g \in C^n_p\) and its (p, n)-representation \(\bar{g}\) are given. Then for \(0< \varepsilon < \frac{1}{p}\), \(1 \le i \le p\) and \(0 \le k \le n+1\)

holds.

Before proceeding to the presentation of the integration procedure, we would like to discuss the problem of obtaining Taylor coefficients of a solution x to Eq. (1) at a given time t (whenever they exist). From Eq. (1), we have (we remind that, at grid points, by the derivative we mean the right derivative):

For example, in case of \(k=1\), we obviously have:

and in case \(k=2\), by applying the chain rule, we get:

If we define a function \(F_{(1)} : \mathbb {R}^4 \rightarrow \mathbb {R}\) as

then we see that

Now, by a recursive application of the chain rule, we can obtain a family of functions \(\mathbb {F}_f = \left\{ F_{(k)} : \mathbb {R}^{2 \cdot (k+1)} \rightarrow \mathbb {R}\right\} _{k \in \mathbb {N}}\) such that:

By setting

we can write similar identity in terms of the Taylor coefficients \(x^{[k]}\):

As we are using the Taylor coefficients instead of derivatives to represent our (p, n)-f-sets, this notation would be more suitable to describe computer algorithms. From now on, we will also slightly abuse the notation and we will denote \(F_{[k]}\) by \(F^{[k]}\) and \(F_{(k)}\) by \(F^{(k)}\). This is reasonable, since, for a function \(F : \mathbb {R}\rightarrow \mathbb {R}\) defined by \(F(t) := f(x(t-1), x(t))\), we have:

Remark 2

The task of obtaining family \(\mathbb {F}_f\) by directly and analytically applying the chain rule may seem quite tedious, especially, if one will be required to supply this family as implementations of computer procedures. It turns out, that this is not the case for a wide class of functions. In fact, only the r.h.s. of Eq. (1) needs to be implemented and the derivatives may be obtained by the means of the automatic differentiation (AD) [21, 26]. We use Taylor coefficients \(x^{[k]}\) to follow the notation and implementation of AD in the CAPD library [1] which provide a set of rigorous interval arithmetic routines used in our programs.

2.2 One Step of the Integration with Fixed-Size Step \(h = \frac{1}{p}\)

We are given (p, n)-f-set \(\bar{x}_0\) and the task is to obtain \(\bar{x}_h\)—a (p, n)-f-set such that \(\varphi (h, \bar{x}_0) \subset \bar{x}_h\). We will denote the procedure of computing \(\bar{x}_h\) by \(I_h\), that is:

First of all, we consider how \(\bar{x}_0\) and \(\bar{x}_h = I_h(\bar{x}_0)\) relate to each other. Their mutual alignment is shown in Fig. 1.

A graphical presentation of the integrator scheme. We set \(n=2\) and \(p=4\). A (p, n)-representation is depicted as dots at grid points and rectangles stretching on the whole intervals between consecutive grid points. The dot is used to stress the fact that the corresponding coefficient represents the value at a given grid point. Rectangles are used to stress the fact that remainders are bounds for derivative over whole intervals. Below the time line we have an initial (p, n)-representation. Above the time line, we see a representation after one step of size \(h = \frac{1}{p}\). Black solid dots and gray rectangles represent the values we do not need to compute—this is the shift part. The forward part, i.e., the elements to be computed, are presented as empty dots and an empty rectangle. The doubly bordered dot represents the exact value of the solution at the time \(t = h = \frac{1}{p}\) (in practical rigorous computations it is an interval bound on the value). The doubly bordered empty rectangle is an enclosure for the \(n+1\)th derivative on the interval \(\left[ 0, h\right] \)

We see that \({\bar{x}}_{h}^{i,[k]}\) overlap with \({\bar{x}}_{0}^{i-1,[k]}\), so they can be simply shifted to the new representation—we call this procedure the shift part. Other coefficients need to be estimated using the dynamics generated by Eq. (1). We call this procedure the forward part. This procedure will be divided into three subroutines:

-

1.

computing coefficients \({\bar{x}}_{h}^{1,[k]}\) for \(k \in \{1, \ldots , n\}\),

-

2.

computing the remainder \({\bar{x}}_{h}^{1,[n+1]}\),

-

3.

computing the estimate for \(x_h(0)\) (stored in \({\bar{x}}_{h}^{0,[0]}\)).

Forward Part: Subroutine 1

This procedure is immediately obtained by a recursive application of Eq. (7):

where \(1 \le k \le n\).

Forward Part: Subroutine 2

This subroutine can be derived from the mean value theorem. We have for \(\varepsilon < h\):

for some \(0 \le \xi \le \varepsilon \). Let us look at the two terms that appear on the r.h.s. of Eq. (8). The question is: Can we estimate them by having only \(\bar{x}_0\) and already computed \({\bar{x}}_{h}^{1,[k]}\) from Subroutine 1? Let us discuss each of these terms separately.

By Lemma 4, we have for \(0 \le k \le n+1\):

Moreover, by Definition 2, we know that:

So those terms can be easily obtained. The problem appears when it comes to \(x(\xi )\) and \(x^{[k]}(\xi )\), for \(\xi \in (0,h)\)

Assume for a moment that we have some a priori estimates for x([0, h]), i.e., a set \(Z \subset \mathbb {R}\) such that \(x(\left[ 0, h]\right) \subset Z\). We call this set the rough enclosure of x on the interval [0, h]. Having rough enclosure Z, we could apply Eq. (7) (as in the case of Subroutine 1) to obtain the estimates on \(x^{[k]}\left( [0, h]\right) \) for \(k > 0\). So the question is: how to find a candidate Z and prove that \(x(\left[ 0, h]\right) \subset Z\)? The following lemma gives a procedure to test the later.

Lemma 5

Let \(Y \subset \mathbb {R}\) be a closed interval and let \(x_0\) be a function defined on \([-1, 0]\). Assume that the following holds true:

Then the solution x(t) of Eq. (1) with the initial condition \(x_0\) exists on the interval \(\left[ 0, h\right] \) and

Proof

We can treat Eq. (1) on the interval \(\left[ 0, h\right] \) as a non-autonomous ODE of the form:

where \(a(t) = x(t)\) for \(t \in [-1, -1+h]\) is a known function. Now the conclusion follows from the proof of the analogous theorem for ODEs. The proof can be found in [35]. \(\square \)

Using Lemma 5, a heuristic iterative algorithm may be designed such that it starts by guessing an initial Y, and then it applies Eq. (8) to obtain Z. In a case of failure of the inclusion, i.e., \(Z \not \subset Y\), a bigger Y is taken in the next iteration. Please note that this iteration may never stop or produce unacceptably big Y, especially when the step size h is large. Finding a rough enclosure is the only place in the algorithm of the integrator that can in fact fail to produce any estimates. In such a case, we are not able to proceed with the integration, and we signalize an error.

Now we can summarize the algorithm for Subroutine 2 as follows:

Remark 3

Please note that the term \(a^*\) is computed the same way as other coefficients in Subroutine 1 and the rough enclosure do not influence this term. In fact this is the \(n+1\)th derivative of the flow w.r.t. time. It is possible to keep track of those coefficients during the integration and after p steps (full delay) those coefficients may be used to build a \((p,n+1)\)-representation of the solutions—this is a direct reflection of consequences of Lemma 2.

This fact is also important for the compactness of the evolution operator—an essential property that allows for an application of the topological fixed point theorems in infinite-dimensional spaces.

Forward Part: Subroutine 3

The last subroutine of the forward part can be simply obtained by using Definition 2 and Eq. (5):

Notice that the possible influence of the usually overestimated rough enclosure Z is present only in the last term of the order \(h^{n+1}\), so for small h (large enough p), it should not be a problem.

The Integrator: Altogether

Strictly speaking, the mapping \(I_h\) does not produce a (p, n)-f-set which exactly represents \(\bar{x}_h = \varphi (h, \bar{x}_0)\). Instead, it returns some bigger set \([\bar{x}_h]\) such that \(\bar{x}_h\) is contained in it. Of course, we are interested in obtaining a result as close as possible to the set of true solutions represented by \(\varphi (h, \bar{x}_0)\). So, for technical reasons which will be apparent in Sect. 2.3, we decompose \(I_h\) into \(I_h = \varPhi + R\) such that \(\varPhi : \mathbb {R}^m \rightarrow \mathbb {R}^m\) and \(R : \mathcal {P}(\mathbb {R}^m) \rightarrow \mathcal {P}(\mathbb {R}^m)\). Let \(\varPhi (\bar{x}) = \bar{\phi }\) and put:

Let \(R(\bar{x}) = \bar{r}\) and put:

The map \(\varPhi \) is called the Taylor part, while the map R is called the Remainder part. This decomposition is important for an efficient reduction of negative effects caused by using interval arithmetic, primarily the wrapping effect, but also the dependency problem to some extent.

2.3 Reducing the Wrapping Effect

A representation of objects in computations as the interval sets has its drawbacks. Possibly the most severe of them are the phenomena called the wrapping effect and the dependency problem. Their influence is so dominant that they are discussed in virtually every paper in the field of rigorous computations (see [35] and references therein). The dependency problem arises in interval arithmetic when two values theoretically representing the same (or dependent) value are combined. The most trivial example is an operation \(x--x\) which is always 0, but it is not the case for intervals. For example, applying the operation to the interval \(x = [-1,1]\) gives as the result the interval \([-2,2]\) which contains 0, but it is far bigger than we would like it to be.

An illustration of the wrapping effect problem for a classical, idealized mathematical pendulum ODE \(\ddot{x} = -x\). The picture shows a set of solutions in a phase space \((x, \dot{x})\). The gray boxes present points of initial box moved by the flow. The colored boxes present the wrapping effect occurring at each step when we want to enclose the moving points in a product of intervals in the basic coordinate system. For example, the blue square on the left encloses the image of the first iteration. Its image is then presented with blue rhombus which is enclosed again by an orange square. Then the process goes on (Color figure online)

The wrapping effect arises when one intends to represent a result of some evaluation on sets as a simple interval set. Figure 2 illustrates this when we consider the rotation of the square.

One of the mostly used and efficient methods for reducing the impact of the wrapping effect and the dependency problem was proposed by Lohner [15]. In the context of the iteration of maps and the integration of ODEs, he proposed to represent sets by parallelograms, i.e., interval sets in other coordinate systems. In the sequel, we follow [35], and we sketch the Lohner methods briefly.

By J we denote a computation of \(I_h(\cdot )\) using point-wise evaluation of the Taylor part, i.e.,

Let us consider an iteration:

with initial set \([x_0]\).

Let us denote \(x_k = mid([x_k])\) and \([r_k] = [x_k] - x_k\). By a simple argument based on the mean value theorem [35], it can be shown that:

We can reformulate the problem of computing \([x_{k+1}]\) to the following system of equations:

Now the reduction in the wrapping effect could be obtained by choosing suitable representations of sets \([r_k]\) and a careful evaluation of Eq. (16). The terminology used for this in [35] is the rearrangement computations. We will briefly discuss possible methods of handling Eq. (16).

Method 0 (Interval Set): Representation of \([r_k]\) by an interval box and the direct evaluation of (16) is equivalent to directly computing \(I_h([x_{k+1}])\). This method is called an interval set and is the least effective.

Method 1 (Parallelepiped): we require that \([r_k] = B_k \cdot [\tilde{r}_k]\) for \([\tilde{r}_k]\) being an interval box and \(B_k\) being an invertible matrix. Then (16) becomes:

Since it is difficult to obtain the exact matrix inverse in computer calculations, we will use interval matrices \([B_k]\) and \([B_k^{-1}]\) that contain \(B_k\) and \(B_k^{-1}\), respectively. Thus the equation on \([\tilde{r}]\) becomes:

If \([B_k]\)’s are well-chosen, then the formula in brackets can be evaluated to produce a matrix close to identity with very small diameter, thus the wrapping effect reduction is achieved. The Parallelepiped method is obtained when \(B_{k+1}\) is chosen such that \(B_{k+1} \in [A_k][B_{k+1}]\). This approach is of limited use because of the need to compute the matrix inverse of a general matrix \(B_{k+1}\), which may fail or produce unacceptable results if \(B_{k+1}\) is ill-conditioned.

Method 2 (Cuboid): this is a modification of Method 1. In this method, we choose \(U \in [A_k][B_{k+1}]\), and we do the floating point approximate QR decomposition of \(U = Q \cdot R\), where Q is close to an orthogonal matrix. Next we obtain matrix [Q] by applying the interval (rigorous) Gram–Schmidt method to Q, so there exist orthogonal matrix \(\tilde{Q} \in [Q]\) and \(Q^{-1} = Q^{T} \in [Q]^T\). We set \(B_{k+1} = [Q]\), \(B_{k+1}^{-1} = [Q]^{T}\).

Method 3 (Doubleton): this representation is used in our computations as it proved to be the most efficient in numerical tests [24] and in other applications; see [35] and references therein. The original idea by Lohner is to separate the errors introduced due to the large size of initial data and the local errors introduced by the numerical method at every step. Namely, we set:

where \([\tilde{r}_k]\) is evaluated by any method 0–2. To reduce the possible wrapping effect in the product \({[A_k]}{[E_k]}\), Lohner proposed the following:

Again, \([\tilde{r}]\) is evaluated by any method 0–2. Please note that there is no need to inverse a matrix in the doubleton representation when \([\tilde{r}]\) is evaluated either by Method 0 or Method 2, so this approach is suitable for the case where \(A_k\) may be close to singular. In the computer-assisted proofs presented in this paper, we use Method 0 to represent \([\tilde{r}]\) because it is less computationally expensive and, in our current setting, using the other methods have not improved the results. This is puzzling, as it contradicts our experience with ODEs where Method 2 is preferable, and thus it might be worthwhile to investigate this phenomena in some later study.

2.4 Optimization Exploiting the Block Structure of \(D\varPhi (x)\)

In our setting, \([A_k] = D\varPhi (\bar{x}_k)\), where \(\bar{x}_k\) is the (p, n)-f-set in the kth step of integration. As \([A_k]\) is \(m \times m\) matrix, where m is the size of (p, n)-representation (\(m = p \cdot (n+2) + 1\)), and therefore, if we decide to represent the error part \([\tilde{r}]\) in doubleton by interval box (Lohner Method 0), then the matrix multiplications involving the matrix \([A_k]\) take the most of the execution time in one step of integration in the Lohner algorithm, especially for large n and/or p. From Eqs. (9–12), we see that \(D\varPhi (x)\) has a nice block structure and contains a large number of zero entries, i.e., it is a sparse matrix. This structure is well visible when we use the following index function \(\mathbb {I}\):

Under this index function, \([A_k]\) is of the form:

where \(A_{11}\) is \(n+1 \times 1\) matrix (column vector), \(A_{13}\) is \(n+1 \times n+2\), and Id is an identity matrix of size \((p-1) \cdot (n+1)\). Therefore, we use this index function to define blocks for all matrices and vectors appearing in all methods discussed in Sect. 2.3, as, with such block representation of matrices and vectors, we can easily program the multiplication by a matrix or a vector so that all the operations on any zero block are avoided. We will refer to this as the optimized algorithm.

If we have an arbitrary matrix C, then the cost of computing \([A_k] \cdot C\) by a standard algorithm for the matrix multiplication is of order \(O(n^3 \cdot p^3)\) in both the scalar addition and multiplication operations (we remind, that p, n are the parameters of (p, n)-representation). In the case of the optimized algorithm, the block structure and sparseness of \([A_k]\) reduce the computational cost to \(O(n^2 \cdot p^2)\) in scalar additions and \(O(n^3)\) in scalar multiplications.

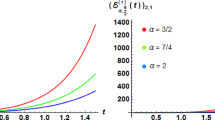

The computation times for the computer-assisted proofs discussed in Sect. 4 on the 2.50 GHz processor (see Sect. 4 for a detailed specification) are presented in Table 1. We see that the optimized algorithm is much faster than the direct multiplication, the speed-up is evident especially for the larger (p, n)-representations.

3 Poincaré Map for Delay Differential Equations

3.1 Definition of a Poincaré Map

We begin with the definition of the (transversal) section of the semiflow \(\varphi \) associated with (1). First, we would like to recall the ODE setting where, for a flow \(\varphi : \mathbb {R}\times \mathbb {R}^m \rightarrow \mathbb {R}^m\), a (local) transversal section S is usually defined as a (subset of) smooth manifold \(\mathcal {M}\) of codimension one satisfying the transversality condition:

where \(T_x\mathcal {M}\) denotes the tangent bundle at x. If \(\mathcal {M}\) is a hyperplane

for some given normal vector \(s \in R^m\), \(\Vert s \Vert \ne 0\) and \(a \in \mathbb {R}\), then condition (17) becomes

We will use a similar approach in the context of the semiflow \(\varphi \) associated with (1). We will restrict ourselves to the linear sections, and we will use the fact that from Eq. (4) and Lemma 2, it follows

for any \(t_0 \ge \tau \). Moreover, \(\dot{x}_{t_0}\) is of class \(C^{n-1}\) wherever \(t_0 \ge n \cdot \tau \). This observation will be crucial for the definition of a transversal section in the DDE context and, later, for the rigorous computation of Poincaré maps.

Definition 6

Let \(\varphi \) be the semiflow associated with the system (1). Let \(n \in \mathbb {N}\), \(s : C^n \rightarrow \mathbb {R}\) be a continuous affine mapping, i.e., \(s(x)=l(x)+a\), where l is a bounded linear functional and \(a \in \mathbb {R}\). We define a global \(C^n\)-section as a hyperplane:

Any convex and bounded subset \(S \subset \mathcal {S}\) is called a local \(C^n\) -section (or simply a section).

A section S is said to be transversal if there exists a convex open set \(W \subset C^n\) such that

where

satisfying the condition

We will refer to (18) as the transversality condition.

Remark 4

Please note that the requirement \(x \in W \cap C^{n+1}\) in (18) is essential to guarantee that \(\dot{x}\) and thus \(l(\dot{x})\) in (18) are well defined, as, for \(x_0 \in C^{n+1}\) and \(t > 0\), it might happen that \(\varphi (t, x_0)\) is of class \(C^k\), \(k < n+1\) (the loss of regularity), but Lemma 2 states that we only need to “long enough” integrate the initial functions to get rid of this problem completely. These two phenomena are illustrated in the following example.

Let \(x_0 \in W\) for W as in Definition 6. In general, it happens that \(\varphi (\varepsilon , x_0) \not \in C^n\) for small \(\varepsilon > 0\). This may seem at first to contradict intuition from Lemma 2, but in fact it is not. Consider the following r.h.s. of Eq. (1):

Let \(x_0 \equiv 1\) be an initial function and let x be a solution to Eq. (1) with \(x|_{[-1,0]} \equiv x_0\) and delay \(\tau = 1\). We see that x(t) is \(C^\infty \) on \([-1, 0)\). However, at \(t=0\), we have

so \(x_t : [-1,0] \rightarrow \mathbb {R}\) is only of class \(C^0\) for any \(t \in (0, 1)\). This is a very undesirable phenomena, but the solution will be smoothed after a full delay, according to Lemma 2. As \(x(t) = 1+t\) on [0, 1], we have at \(t = 1\):

One can show again that \(x^{(2)}(1^-) \ne x^{(2)}(1^+)\), therefore x is of class \(C^1\) on (1, 2), and the smoothing of solutions goes on with the increasing t.

This shows for any \(x_0 \in C^n\), if \(\omega > n \cdot \tau \), then we have “only” \(\varphi (\omega , x_0) \in C^n\) in a general case. On the other hand, “long enough” integration time \(\omega \) can be used to guarantee that every initial function \(x \in C^0\) has a well defined image in \(C^n\) under mapping \(\varphi (\omega , \cdot )\). This is essential in the following construction of a Poincaré map for DDEs (Theorem 5 and Definition 7).

Theorem 5

Assume \( n \in \mathbb {N}\), \(V \subset C^0\). Let S be a local transversal \(C^n\)-section for (1) and let W be as in Definition 6. Let \(\omega = (n+1) \cdot \tau \).

Assume that there exist \(t_1,t_2 \in \mathbb {R}\), \(\omega \le t_1 < t_2\), such that the following conditions hold for all \(x \in V\):

Then, for each \(x_0 \in V\), there exists unique \(t_{S}(x_0) \in (t_1, t_2)\) such that \(\varphi \left( t_{S}(x_0), x_0\right) \in S\). Also, \(t_{S} : V \rightarrow [t_1, t_2]\) is continuous.

Proof

Let \(x_0 \in V\). By assumptions, \(\varphi (t, x_0) = x_t \in W\) for \(t \in [t_1, t_2]\) but also \(x_t \in C^{n+1}\), by the assumption on constants \(\omega \), \(t_1\), \(t_2\) (by Lemma 2). So \(s(\dot{x}_t)\) is well defined and Condition (18) guarantees that

Therefore, the function defined by \(\hat{s}(t) := s(x_t)\) is continuous and strictly increasing on \([t_1, t_2]\). Now, from (19), it follows there exists unique \(t_0 \in (t_1, t_2)\) such that \(s\left( \varphi (t_0, x_0)\right) = c\). Together with continuity of \(\varphi \) (Lemma 1), this implies continuity of \(t_{S}:V \rightarrow (t_1,t_2)\). \(\square \)

Definition 7

The same assumptions as in Theorem 5, in particular assume \(t_1 \ge \tau \cdot (n+1) = \omega \). We define the transition map to the section S after (at least) \(\omega \) by

for \(t_S\) defined as in Theorem 5. If \(V \subset S\), then the map \(P_{\ge \omega }\) will be called the Poincaré return map on the section S after \(\omega \).

Finally, we state the last and the most important theorem that will allow us to apply topological fixed point theorems to \(P_{\ge \omega }\).

Theorem 6

Consider Poincaré map (after \(\omega \)) \(P_{\ge \omega }: S \supset V \rightarrow S\) for some section S under the same assumptions as in Theorem 5, especially assume \((n+1) \cdot \tau \le \omega \le t_S(V) \in [t_1, t_2]\).

Assume additionally that \(\varphi ([\omega ,t_2],V)\) is bounded in \(C^n\).

Then the map \(P_{\ge \omega }\) is continuous and compact in \(C^n\), i.e., if \(K \subset V\) is bounded, then \(\mathrm{cl}\,(P_{\ge \omega }(K))\) is compact in \(C^n\).

Proof

By Theorem 5, \(P_{\ge \omega }\) is well defined for any \(x_0 \in V\) since \(t_1 \ge \omega \ge (n+1) \cdot \tau \) and \(P_{\ge \omega }(x_0) \in C^{n+1}\).

The continuity follows immediately from the continuity of \(t_{S}\) (Theorem 5) and \(\varphi \) (Lemma 1).

Let \(D = P_{\ge \omega }(V)\). From our assumptions, it follows that D is bounded in \(C^n\). A known consequence of the Arzela–Ascoli Theorem is that, if \(D \subset C^{n}\) is closed and bounded, \(x \in D\), \(x^{(n+1)}\) exists, and there is M such that \(\sup _{t} \left| x^{(n+1)}(t)\right| \le M\) for all \(x \in D\), then D is compact (in \(C^{n}\)-norm). Therefore, to finish the proof, it is enough to show that there is a uniform bound on \(P_{\ge \omega }(x)^{(n+1)}\). For this, it is sufficient to have a uniform bound on \((\varphi (t,x))^{(n+1)}\) for \(t \in [\omega ,\sup _{x \in V} t_S]\). The existence of this bound follows from boundedness of derivatives up to order n and formula (7). \(\square \)

The restriction on the transition time may seem a bit unnatural since each solution becomes \(C^\infty \) eventually, as discussed in Remark 4. In fact, it should be possible to work directly with the solutions on the \(C^n\) solution manifold \(M^n\) (i.e., \(M^n \subset C^n\) and \(\varphi (t, M^n) \subset M^n\) for all \(t \ge 0\)). When we restrict the flow to the solutions manifold \(M^n\), then we do not need to demand that the transition time to the section is bigger than \(\omega = (n+1) \cdot \tau \). Instead, to obtain the compactness, we need to shift the set forward only by one full delay. Therefore, we obtain the following theoretical result:

Theorem 7

Consider Poincaré map (after \(\tau \)) \(P_{\ge \tau }: S \supset V \rightarrow S\) for some section S, where \(V \subset M^n\). Let \(t_1\) and \(t_2\) be like in Theorem 5.

Assume that \(\varphi ([0,t_2],V)\) is bounded in \(C^n\).

Then the map \(P_{\ge \tau }: V \rightarrow S \cap M^n\) is continuous and compact in \(C^n\), i.e., if \(K \subset V\) is bounded, then \(\mathrm{cl}\,(P_{\ge \omega }(K))\) is compact in \(C^n\).

At the present stage of the development of our algorithm, we do not have the constructive parametrization of the manifold \(M^n\), therefore we need to use the “long enough” integration time \(\omega \) in the rigorous numerical computations.

3.2 Rigorous Computation of Poincaré Maps

The restriction of the integration procedure \(I_h\) (Sect. 2) to the fixed-size step \(h = \frac{1}{p}\) is a serious obstacle when we consider computation of Poincaré map \(P_{\ge \omega } : V \rightarrow S\). Obviously, if we assume for simplicity that \(\omega = q \cdot {\tau \over p}\), \(q \in \mathbb {N}\) and \(t_S(V) \subset \omega + [\varepsilon _1, \varepsilon _2]\) with \(0< \varepsilon _1< \varepsilon _2< {\tau \over p}\), then we have to find a method to compute image of the set after small time \(\varepsilon \in [\varepsilon _1, \varepsilon _2]\). The definition of the (p, n)-representation together with Eq. (5) give a hint how to compute the value of the function (and the derivatives up to the order n) for some intermediate time \(0< \varepsilon < h\). But again, we face yet another obstacle, as computing the (p, n)-f-set representing \(\varphi (\varepsilon , x_0)\) for all initial functions \(x_0\) in some given (p, n)-f-set turns out to be impossible. It can be seen from the very same example as in Remark 4. In the example, \(x_\varepsilon \) would be only \(C^0\) at \(t = -\varepsilon \). So if \(\varepsilon \) is not a multiple of h, then, for any \(n > 0\), there is no (p, n)-representation of \(x_\varepsilon \), unless we restrict the computations to the set \(C^n_p \cap C^{n+1}\) (or to the solutions manifold \(M^n\)). This is again a reason for an appearance of the “long enough” integration time in Definition 7.

This discussion motivates the following definition and lemma.

Definition 8

Let \(\bar{x}_0\) be a (p, n)-representation, and let \(\bar{x}_h = I_h(\bar{x}_0)\) and \(c_{\bar{x}_0}\) be as in Definition 5. For \(\varepsilon \in [0, h]\) we define (p, n)-f-set \(\bar{x}_\varepsilon \) by the following (p, n)-representation:

For a given \(\bar{x}_0\) we denote:

Function \(I_\varepsilon \) will be called the shift by \(\varepsilon \) or the \(\varepsilon \) -step integrator.

Remark 8

\(I_\varepsilon (\bar{x}_0)\) is constructed in such a way that it contains all solutions to (4) for initial functions \(x_0 \in Supp(\bar{x}_0) \cap M^{n+1}\) after time \(t = \varepsilon \).

Theorem 9

Assume that \(\varepsilon \in [0, h]\), \(\bar{x}_0\) is a (p, n)-f-set. Let define

If \(n \cdot p \le q \in \mathbb {N}\), then

for all \(x \in \bar{x}_0\).

Proof

Since \(q \ge n \cdot p\), then \(q \cdot h \ge n \cdot \tau \) and the proof follows from Lemmas 2, 4 and Definition 2. \(\square \)

Now, the application of \(I_h\) and \(I_\varepsilon \) to compute \(P_{\ge \omega }\) is straightforward.

Input:

-

1.

a section S;

-

2.

a (p, n)-f-set \(\bar{x}_0 \subset S\);

-

3.

\(\omega = (n+1) \cdot \tau \);

Output:

-

1.

\(q \in \mathbb {N}\), \(0< \varepsilon _1< \varepsilon _2 < {\tau \over p}\) such that \(t_S(\bar{x}_0) \subset q \cdot {\tau \over p} + [\varepsilon _1, \varepsilon _2]\) for \(\omega \le q \cdot {\tau \over p}\);

-

2.

(p, n)-f-set \(\bar{x}_{\ge \omega }\) such that \(P_{\ge \omega }(x_0) \in \bar{x}_{\ge \omega }\) for all \(x_0 \in \bar{x}_0\);

Algorithm:

-

1.

do at least \((n+1) \cdot p\) iterations of \(I_h\) to guarantee the \(C^{n+1}\) regularity of solutions for all initial functions (so the map \(P_{\ge (n+1) \cdot \tau }\) is well defined and compact).

-

2.

find \(q \ge (n+1) \cdot p\) and \(\varepsilon _1\), \(\varepsilon _2 < h\) (for example by the binary search algorithm) such that for the assumptions of Theorem 5 are guaranteed for section S, \(t_1 = q \cdot h + \varepsilon _1\), \(t_2 = q \cdot h + \varepsilon _2\) and set W defined by

$$\begin{aligned} W := C^n \cap \left( I_{[\varepsilon _1, \varepsilon _2]} \circ I_h^q (\bar{x}_0) \right) . \end{aligned}$$ -

3.

By assumptions and by Theorems 5 and 9, we know that we have \(P_{t_1}(x_0) \in W \cap S\) for each \(x_0 \in V\). Moreover, by Theorem 6, the map \(P_{t_1}\) is compact (in \(C^n\)).

Please note, that the operator \(I_{[\varepsilon _1, \varepsilon _2]}\) should be interpreted as computation of the sum \(\bigcup _{\varepsilon \in [\varepsilon _1, \varepsilon _2]} I_\varepsilon (\cdot )\) or as any reasonable bound on this sum. In our program, we just evaluate \(I_\varepsilon \) with \(\varepsilon =[\varepsilon _1, \varepsilon _2]\).

Remark 10

We can use the decomposition of \(I_\varepsilon \) into \(\varPhi _\varepsilon \) and \(R_\varepsilon \) such that the Lohner algorithm can again be used in the last step of the integration as described in Sect. 2.3. We skip the details and refer to the source code documentation of the library available at [24].

Now, the question arises: How to represent the section S in a manner suitable for computation of the program \(P_\ge \omega \)?

3.3 (p, n)-Sections

Since we are using the (p, n)-representations to describe functions in \(C^n\), it is advisable to define sections in such a way that it would be easy to rigorously check whether \(x \in S\) for all functions represented by a given (p, n)-f-set. The straightforward way is to require l in the definition of S to depend only on representation coefficients \({\bar{x}}_{}^{i,[k]}\).

Definition 9

Let \(l_{i,k}\in \mathbb {R}\) for \((i,k) \in \mathcal {C}=\{1,\dots ,p\} \times \{0,\dots ,n\} \cup \{(0,0)\}\). We assume that at least one \(l_{i,k}\) is not equal to zero. Let \(a \in \mathbb {R}\) be given. For \(x \in C^n\) we define a linear continuous map \(l : C^n \rightarrow \mathbb {R}\) by

The section \(S=\{x \in C^n \ | \ l(x) - a = 0\}\) is called a (p, n)-section.

3.4 Choosing an Optimal Section

Numerical experiments with the rigorous integrator have shown that the choice of a good section is a key factor to obtain sufficiently good bounds on images of the Poincaré map to be used in computer-assisted proofs reported in Sect. 4. The section has to be chosen so that the diameter of the bounds on transition time \(t_S\) should be as small as possible; see Fig. 3.

Left: an illustration of the problem with big difference in transition time \(t_S\) for poorly chosen section S. If \(t_S \in q \cdot {\tau \over p} + [\varepsilon _1, \varepsilon _2]\) and \(|\varepsilon _1 - \varepsilon _2|\) is large, then \(I_{[\varepsilon _1, \varepsilon _2]}\) produces estimates on solutions distant from the section (blue rectangles), so the interval enclosure W of all solutions tend to be very large (green rectangle). Right: if the section is chosen carefully, then all the solution obtained from \(I_{[\varepsilon _1, \varepsilon _2]}\) are close to the section, so the set W is small

We will discuss the problem of choosing optimal section in the ODEs case. Later, in Sect. 4, we will apply a heuristic procedure based on this discussion to obtain a good candidate for an optimal section in the DDEs setting.

Let us consider an ODE of the form:

Let \(x_0\) be a periodic orbit of period T of the flow \(\varphi \) induced by (21). Then, \(f(x_0)\) is a right eigenvector of the matrix \(M = \frac{\partial \varphi }{\partial x}(T,x_0)\) with eigenvalue \(\lambda =1\). Let l be a row vector which is a left eigenvector of M corresponding to \(\lambda =1\). Let us assume, that the periodic orbit passing through \(x_0\) is hyperbolic. In such a case, the left and right eigenvectors corresponding to the eigenvalue \(\lambda =1\) are uniquely defined up to a multiplier and we have

We normalize l so that

For any given row vector \(v \in \mathbb {R}^n\), let us consider a section \(S_v=\{ x \ | \ v \cdot x - v \cdot x_0=0 \}\). We define

hence, \(v^\bot \) is the tangent space to the section \(S_v\).

Under the above assumptions, we have the following lemma.

Lemma 6

If \(v \cdot f(x_0) \ne 0\), then \(S_v\) is locally transversal and

where \(t_{S_v}\) is the transition time to the section \(S_v\), defined in some neighborhood of \(x_0\).

Moreover,

iff \(v = \alpha l\) for some \(\alpha \ne 0\)

Proof

The transition time to section \(S_v\) is defined by the following implicit equation

From this, we immediately obtain (22).

The second assertion is obtained as follows. At first, assume (23). We have

Therefore, v is proportional to l.

The other direction of the second assertion is obvious. \(\square \)

In simple words, Lemma 6 states that choosing the left eigenvector of the monodromy matrix \(\frac{\partial \varphi }{\partial x}(T,x_0)\) gives a section such that the return time to this section is constant in the first order approximation.

4 The Existence of Periodic Orbits in Mackey–Glass Equation

The Mackey–Glass system (2) is one of the best known delay differential equations. The original work of Mackey and Glass [17] spawned wide attention, being cited by many papers with a broad spectrum of topics: from theoretical mathematical works to neural networks and electrical engineering. Numerical experiments show that, as either parameter \(\tau \) [17] or n [16] is increased, the system undergoes a series of period-doubling bifurcations, and they lead to the creation of an apparent chaotic attractor.

In this section, we present computer-assisted proofs of the existence of attracting periodic orbits in Mackey–Glass system (2). We use the classical values of parameters: \(\tau = 2\), \(\beta = 2\) and \(\gamma = 1\), and we investigate the existence of periodic orbits with \(n = 6\) (before the first period doubling) and \(n = 8\) (after the first period doubling) [16]. We would like to stress, that we are not proving that these orbits are attracting. This would require some \(C^1\)-estimates for the Poincaré map defined by (2).

4.1 Outline of the Method for Proving Periodic Orbits

The scheme of a computer-assisted proof of a periodic orbit consists of several steps:

-

1.

find a good, finite representation of bounded sets in the phase space \(C^k\) (or in other suitable function space),

-

2.

choose suitable section S and some a priori initial set V on the section,

-

3.

compute image of V by Poincaré map \(P_{\ge \omega }\) on section S,

-

4.

prove that the map \(P_{\ge \omega }\), the set V, and the set \(W := P_{\ge \omega }(V)\) all satisfy assumptions of some fixed point theorem so that it implies the existence of a fixed point for \(P_{\ge \omega }\) in V. This gives rise to the periodic orbit in Eq. (1).

To this point, we have presented ingredients needed in steps 1 and 3. In Step 4, we will use the Schauder fixed point theorem [27, 34]:

Theorem 11

Let X be a Banach space, let \(V \subset X\) be non-empty, convex, bounded set and let \(P : V \rightarrow X\) be continuous mapping such that \(P(V) \subset K \subset V\) and K is compact. Then the map P has a fixed point in V.

Theorem 11 is suitable for proving the existence of periodic orbits for which there is a numerical evidence that they are attracting. The unstable periodic orbits can be treated by adopting the covering relations approach from [6], which may be applied in the context of infinite-dimensional phase space (for such an adaptation in the context of dissipative PDEs; see [37]).

In Sect. 3.4, we have presented some theoretical background on the selection of a suitable section that is the foundation of Step 2. Now, we would like to put more emphasis on technical details, as the procedure in Step 2 introduces some difficulties due to large size of the data defining (p, n)-representations. In the proofs we use (32, 4)- and (128, 4)-f-sets with representation sizes \(m = 193\) and \(m = 769\), respectively. Thus we are not able to simply “guess” good coordinates or refine them “by hand”—we need an automated way to do that.

The following discussion is a bit technical and involves some heuristics, and thus it is probably relevant only for people interested in implementing their own version of the software. Those interested only in the actual proofs of the existence of periodic orbits should move to Sect. 4.3.

4.2 Finding Suitable Section and Good Initial Set for a Computer-Assisted Proof

Here we give an outline for the selection of a good initial data for the proof of the existence of an apparently attracting orbit. It consists of the following steps:

-

1.

find a good numerical approximation \(x_0\) of a periodic solution to Eq. (1),

-

2.

find a good section S—by this we mean the difference between transition times \(t_1\) and \(t_2\) (as defined in Theorem 5) is as small as possible (in the vicinity of \(x_0\)),

-

3.

choose a good coordinate frame in S for the initial (p, n)-representation, and then choose the (p, n)-f-set \(V \subset S\), such that \(x_0 \in V\) and \(P_{\ge \omega }(V) \subset V\).

We will now describe shortly how each of the above steps was implemented. In this description, we refer to non-rigorous computations, that is algorithms: \(\hat{I}_h\) defined as in Sect. 2, and \(\hat{I}_\varepsilon \) defined as in Sect. 3, but with the remainder terms depending on the rough enclosure ignored and explicitly set to 0. Using non-rigorous integrators \(\hat{I}_h\) and \(\hat{I}_\varepsilon \), we construct a finite-dimensional semiflow \(\hat{\varphi }\) that approximates \(\varphi \) by

Now, the procedure for finding good initial conditions can be described as follows:

Step 1. Since we are looking for an attracting orbit, we start by non-rigorously integrating forward in time an initial function \(\hat{x}_0 \equiv 1.1\) for some arbitrary, long time \(T_{iter}\), until we see that \(\hat{x}_{T_{iter}}\) approach the apparently stable periodic orbit. Then, we refine \(\hat{x}_{T_{iter}}\) by the Newton algorithm applied to \(x \mapsto \hat{P}_{\ge \omega }(x) - x\), where the map \(\hat{P}_{\ge \omega }\) is a non-rigorous version of \(P_{\ge \omega }\) defined as a first return map for semiflow \(\hat{\varphi }\) to a simple section \(S = \{x \ | \ x(0) = \hat{x}_{T_{iter}}(0)\}\). The output of this step is a numerical candidate for the periodic solution \(x_0\), given by its (p, n)-representation \(\bar{x}_0\) such that \(\bar{x}_0\) and \(P_{\ge \omega }(\bar{x}_0)\) are close.

Step 2. This is an essential step, as numerical experiments with the rigorous integrator have shown that the choice of a good section is the key factor to obtain tight bounds on the image of the Poincaré map. We use an observation from Sect. 3.4, and we find the left eigenvector \(\hat{l}\) of the matrix \(\frac{\partial \hat{\varphi }}{\partial x}(T,x_0)\) corresponding to eigenvalue 1, where T is an apparent period of the approximate periodic orbit for the non-rigorous semiflow \(\hat{\varphi }\).

Please note that \(\hat{l}\) might be considered a (p, n)-representation with remainder part set to 0, and therefore, we can define a (p, n)-section by

where the dot product is computed using the coordinates of (p, n)-representation, i.e., in the vector space \(\mathbb {R}^m\), where m is the size of a (p, n)-representation, \(m = (n+2) \cdot p + 1\).

Step 3. Having a good candidate for the section S (defined by (24)), we need to introduce the coordinates on it. For this, we create the following matrix:

Now, let C denote the matrix obtained after orthonormalization of columns of A. Please note that matrix C acts on the variables corresponding to the remainder terms as an identity. This follows from the fact that \(\hat{l}_{i,[n+1]}\equiv 0\). It is easy to see that all (p, n)-representations that lie on the section S are given by:

for all y such that \(\pi _1 y = 0\).

Now, on section S, using the coordinates defined by the matrix C, we define a candidate set [V], in a form of (p, n)-f-set in a following manner. Let \([r] \subset \mathbb {R}^{m-p}\) (these correspond to variables \(x^{i,[k]}\) for \(k \le n\)) and \([B] \subset \mathbb {R}^{p}\) (these are bounds for \(x^{i,[n+1]}\)—the remainders) be two interval boxes centered at 0 such that \(diam(\pi _1 [r]) = 0\). We put \([\bar{r}_0] := [r] \times [B]\), and we define (p, n)-f-set [V] by:

Diameters of \(\pi _i [\bar{r}_0]\) for \(i \ge 2\) are selected experimentally to follow some exponential law in parameter k (i.e., \(diam(\pi _{\mathbb {I}(i, k)}[\bar{r}_0]) \approx a^k\) for \(1 \le i \le n\)), as the periodic solutions to Eq. (2) are at least of class \(C^\infty \) and, if \(x(t) > -1\) for all t, then they should be analytic [18, 22]. The remainder [B] is chosen initially such that \(diam\left( B\right) \gg diam\left( [r_0]\right) \). Therefore, the initial selection of \([\bar{r}_0]\) may not be good enough to satisfy assumptions of Theorem 11 right away. As the dynamics of the system is strongly contracting, we hope to obtain a good initial condition by the following iteration. We start with \([V]_0 = [V]\) and we compute \([V]_{i+1} = P_{\ge \omega }([V]_i) \cap [V]_i\), until the condition \(P_{\ge \omega }([V]_i) \subset [V]_i\) is eventually met at some \(i_{stop}\). Then, the initial set for the computer-assisted proof is \([\tilde{V}] = V_{i_{stop}}\). Both initial sets that are used in computer-assisted proofs in this paper were generated with such procedure (see source codes).

Observe that we are not very careful in the choice of coordinates on the section—we simply choose some basis orthonormal to the normal vector \(\hat{l}\) of the section hyperplane. Definitely better choice would be to use approximate eigenvectors of the Poincaré map, but in the case of strongly attracting periodic orbits, it is enough to choose a good section. Observe also, that the orthonormal matrix is easy to invert rigorously, which is an important step in the comparison of the initial set and its image by the Poincaré map.

4.3 Attracting Periodic Orbits in Mackey–Glass Equation for \(n = 6\) and \(n = 8\)

In this section we present two theorems about the existence of periodic orbits in Mackey–Glass equation. As they depend heavily on the estimates obtained from the rigorous numerical computations, we would like to discuss first the textual presentation of numbers used in this section and how they are related to the input/output values used in rigorous computations.

In the rigorous numerics, we use intervals with ends being representable computer numbers. The representable numbers are implemented as binary32 or binary64 data types defined in IEEE Standard for Floating Point Arithmetic (IEEE 754) [28], so that they are stored (roughly speaking) as \(s \cdot m \cdot 2^e\), where s is the sign bit, m is the mantissa and e the exponent. Such a representation means that most numbers with a finite representation in the decimal base are not representable (e.g., number 0.3333). In this paper, for better readability, we are going to use the decimal representation of numbers with the fixed precision (usually 4 decimal places), so we have rewritten computer programs to handle those values rigorously. For example, if we write in the text that \(a = 0.3333\), then we put the following rigorous operation in the code:

That is, all numbers presented here in theorems and/or proofs should be regarded by the reader as the real, rigorous values, even if they are not representable in the sense of IEEE 754 standard.

In the proofs, we refer to computer programs mg_stable_n6 and mg_stable_n8. Their source codes, together with instructions on the compilation process, can be downloaded from [23]. The codes were tested on a laptop with Intel® Core™ I7-2860QM CPU (2.50 GHz), 16 GB RAM under 64-bit Linux operating system (Ubuntu 12.04 LTS) and C/C++ compiler gcc version 4.6.3.

4.3.1 Case \(n=6\)

Our first result is for the periodic orbit for the parameter value before the first period-doubling bifurcation.

With \(n=6\), numerical experiments clearly show that the minimal period of the periodic orbit is around 5.58. In our proof, however, due to the problem with the loss of the regularity at the grid points, thus the need to use the “long enough” transition time, we consider the second return to the section.

Numerical experiments indicate that the orbit is attracting with the most significant eigenvalues of the map \(P_{\ge \omega }\) (again, this is the second return to the Poincaré section) estimated to be:

\(Re \lambda \) | − 0.0437 | − 0.0437 | 0.0030 | 0.0030 | − 0.0028 | 0.0019 | − 0.0003 | − 0.0003 | 0.0005 |

\(Im \lambda \) | 0.0793 | − 0.0793 | 0.0097 | − 0.0097 | 0.0018 | − 0.0018 | |||

\( |\lambda \)| | 0.0905 | 0.0905 | 0.0102 | 0.0102 | 0.0028 | 0.0019 | 0.0019 | 0.0019 | 0.0005 |

Therefore, the contraction appears to be quite strong, so the choice of good coordinates on the section appears to be not important.

We obtained the following theorem.

Theorem 12

There exists a T-periodic solution x with period \(T \in \left[ 10.9671, 10.9673\right] \) to Eq. (2) for parameters \(\gamma = 2\), \(\alpha = 1\), \(\tau = 2\) and \(n = 6\). Moreover,

for \(\hat{x}\) defined by

Proof

Verification of assumptions of the Schauder theorem is done with the computer assistance in the program mg_stable_n6. It uses the (p, n)-representation of the phase space with \(p = 32\) and \(n = 4\). Initial (p, n)-f-set \([\bar{x}_0]\) is provided directly in the source code, and it was selected with procedure described in Sect. 4.2. For the map \(P_{\ge \omega }\), \(\omega = (n+1) \cdot \tau = 10\), which represents the second return to the section S, we obtained:

which guarantees the \(C^{n+1}\)-regularity of the solutions and the compactness of the map \(P_{\ge \omega }\) (in \(C^k\) norm for \(k \le n\)). The inclusion condition \(P_{\ge \omega }\left( [\bar{x}_0]\right) \subset [\bar{x}_0]\) of the Schauder fixed point theorem is checked rigorously; see output of the program for details. Together, these two facts guarantee the non-emptiness of \(Supp^{(n+1)}([\bar{x}_0])\). The transversality is guaranteed with \(l(\dot{x}) \ge 0.2828\) for all \(x \in C^{n+1} \cap P(\bar{x}_0)\). The distance in \(C^{0}\) norm is rigorously estimated to

Similarly, we have verified the other norms; see output of the program. \(\square \)

The execution of the program realizing this proof took around 12 seconds on 2.50 GHz machine.

The diameter of the estimation for period T (also for the last step \([\varepsilon _1, \varepsilon _2]\)) obtained from the computer-assisted proof is close to \(1.15 \cdot 10^{-4}\).

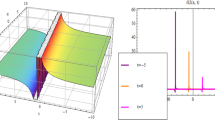

A graphical representation of the estimates obtained in the proof is found in Fig. 4.

4.3.2 Case \(n=8\)

For \(n=8\) we consider the periodic orbit after the first period doubling. This time the period of the orbit is long enough to overcome the initial loss of regularity, so we consider the first return Poincaré map.

Numerical computation shows that the orbit is attracting with the 10 most significant eigenvalues of the map \(P_{\ge \omega }\) estimated to be:

\(Re \lambda \) | 0.3090 | − 0.1359 | − 0.0067 | \(-\,7.58 \cdot 10^{-4}\) | \(6.58 \cdot 10^{-4}\) | \(-1.23 \cdot 10^{-4}\) | \( 2.184 \cdot 10^{-5}\) |

\(Im \lambda \) | \( 6.265 \cdot 10^{-6}\) | ||||||

\( |\lambda \)| | 0.3090 | 0.1359 | 0.0067 | \(7.58 \cdot 10^{-4}\) | \(6.58 \cdot 10^{-4}\) | \(1.23 \cdot 10^{-4}\) | \( 2.272 \cdot 10^{-5}\) |

Theorem 13

There exists a T-periodic solution x with period \(T \in \left[ 11.1350, 11.1353\right] \) to Eq. (2) for parameters \(\gamma = 2\), \(\alpha = 1\), \(\tau = 2\) and \(n = 8\). Moreover,

for \(\hat{x}\) defined by

Proof

The proof follows the same lines as in the case of Theorem 12 (except this time we consider the first return to the section). Therefore, we just list the parameters from the proof.

\(\square \)

The diameter of the estimation for period T (also for the last step \([\varepsilon _1, \varepsilon _2]\)) obtained from the computer-assisted proof is close to \(3.899 \cdot 10^{-6}\). A graphical representation of the estimates obtained in the proof is found in Fig. 5.

The execution time was around 12 min. This increase when compared to \(n=6\) is due to much larger representation size in this case which affects the complexity of matrix and automatic differentiation algorithms which we are using.

5 Outlook and Future Directions

The results presented in this work might be improved in several ways:

-

An extension of the integration algorithm to the systems of delay equations in \(\mathbb {R}^k\) for \(k>1\). This is rather straightforward and it does not require any new ideas;

-

A different representation of function sets. Currently, we use the piecewise Taylor expansions, but other approaches, like the Chebyshev polynomials, might be better as they may produce better approximations on longer intervals;

-

avoiding the loss of the regularity at the beginning of the integration, which imposes the requirement for the transition time to section \(t_S\) to be “long enough.” The complete solution would be to confine the initial condition to the invariant set \(M^n \subset C^n\). We are currently working on this matter;

Other goal would be to apply the integrator to prove the existence of hyperbolic periodic orbits with one or more unstable directions, for example to establish the existence of LSOPs [10] in some general smooth DDEs, or unstable periodic solutions to Mackey–Glass equation. Good theorems, suitable for that task, already exist; see [37] for the analogous question in the dissipative PDEs setting.

The ultimate goal is to establish tools to prove chaotic dynamics in general DDEs, such as Mackey–Glass equation.

References

CAPD DynSys library. http://capd.ii.uj.edu.pl, 2014. Accessed: 2017-07-11.

G. Arioli and H. Koch. Integration of dissipative partial differential equations: A case study. SIAM J. Appl. Dyn. Syst., 9:1119–1133, 2010.

B. Bánhelyi, T. Csendes, A. Neumaier, and T. Krisztin. Global attractivity of the zero solution for Wright’s equation. J. Appl. Dyn. Syst., 13:537–563, 2014.

F.A. Bartha, A. Garab, and T. Krisztin. Local stability implies global stability for the 2-dimensional Ricker map. J. Difference Equ. Appl., 19:2043–2078, 2013.

R.D. Driver. Ordinary and Delay Differential Equations. Springer-Verlag, New York, 1977.

M. Gidea and P. Zgliczyński. Covering relations for multidimensional dynamical systems. J. Differential Equations, 202(1):32–58, 2004.

T. Kapela and P. Zgliczyński. The existence of simple choreographies for the n -body problem-a computer-assisted proof. Nonlinearity, 16(6):1899, 2003.

G. Kiss and J.P. Lessard. Computational fixed-point theory for differential delay equations with multiple time lags. J. of Differential Equations, 252(4):3093–3115, 2012.

T. Krisztin. Global dynamics of delay differential equations. Period. Math. Hungar., 56(1):83–95, 2008.

T. Krisztin and G. Vas.Large-Amplitude Periodic Solutions for Differential Equations with Delayed Monotone Positive Feedback. J. Dyn. Diff. Eq., 23(4):727–790, 2011.

T. Krisztin, H.O. Walther, and J. Wu. Shape, smoothness and invariant stratification of an attracting set for delayed monotone positive feedback, volume 11. American Mathematical Society, Providence, RI, 1999.

B. Lani-Wayda and R. Srzednicki. A generalized lefschetz fixed point theorem and symbolic dynamics in delay equations. Ergodic Theory Dynam. Systems, 22:1215–1232, 8 2002.

B. Lani-Wayda and H-O. Walther. Chaotic motion generated by delayed negative feedback part ii: Construction of nonlinearities. Math. Nachr., 180(1):141–211, 1996.

J.P. Lessard. Recent advances about the uniqueness of the slowly oscillating periodic solutions of Wright’s equation. J. of Differential Equations, 248(5):992–1016, 2010.

R.J. Lohner, J.R. Cach (ed), and I. Gladwel (ed). Computation of Guaranteed Enclosures for the Solutions of Ordinary Initial and Boundary Value Problems: in Computational Ordinary Differential Equations. Clarendon Press, Oxford, New York, 1992.

M. C. Mackey and L. Glass. Mackey–Glass equation, article on scholarpedia. http://www.scholarpedia.org/article/Mackey-Glass_equation.

M. C. Mackey and L. Glass. Oscillation and chaos in physiological control systems. Science, 197(4300):287–289, 1977.

J. Mallet-Paret and R. D. Nussbaum. Analyticity and nonanalyticity of solutions of delay-differential equations. SIAM J. Math. Anal., 46(4):2468–2500, 2014.

J. Mallet-Paret and G. R. Sell. The Poincaré-Bendixson Theorem for Monotone Cyclic Feedback Systems with Delay. J. of Differential Equations, 125(2):441–489, 1996.

K. Mischaikow, M. Mrozek, and A. Szymczak. Chaos in the lorenz equations: A computer assisted proof part iii: Classical parameter values. J. of Differential Equations, 169(1):17–56, 2001.

R.E. Moore. Interval Analysis. Prentice Hall, 1966.

R. D. Nussbaum. Periodic solutions of analytic functional differential equations are analytic. Michigan Math. J., 20:249–255, 1973.

Szczelina R. Source codes for the computer assisted proofs. http://scirsc.org/p/mackeyglass. Accessed: 2017-07-11.

Szczelina R. Rigorous Integration of Delay Differential Equations. PhD Thesis, Jagiellonian University, Kraków, Poland, 2015, materials published online: http://scirsc.org/p/phd, 2015. Accessed: 2017-07-11.

Szczelina R. A computer assisted proof of multiple periodic orbits in some first order non-linear delay differential equation. Electron. J. Qual. Theory Differ. Equ., 83:1–19, 2016.

L.B. Rall. Automatic Differentiation: Techniques and Applications. In: Lecture Notes in Computer Science, vol 120. Springer Verlag, 1981.

J. Schauder. Der Fixpunktsatz in Funktionalräumen. Studia Math., 2:171–189, 1930.

IEEE Computer Society. IEEE Standard for Floating-Point Arithmetic. published online: http://ieeexplore.ieee.org/servlet/opac?punumber=4610933, https://doi.org/10.1109/IEEESTD.2008.4610935, ISBN 978-0-7381-5753-5, 2008. Accessed: 2017-07-11.

W. Tucker. A rigorous ODE solver and Smale’s 14th problem. Found. Comput. Math., 2(1):53–117, 2002.

Gabriella Vas. Configurations of periodic orbits for equations with delayed positive feedback. J. of Differential Equations, 262(3):1850–1896, 2017.

H.-O. Walther. The solution manifold and \(C^1\)-smoothness for differential equations with state-dependent delay. J. of Differential Equations, 195(1):46–65, 2003.

D. Wilczak and P. Zgliczyński. Computer Assisted Proof of the Existence of Homoclinic Tangency for the Hénon Map and for the Forced Damped Pendulum. SIAM J. Appl. Dyn. Syst., 8(4):1632–1663, 2009.

M. Zalewski. Computer-assisted proof of a periodic solution in a nonlinear feedback DDE. Topol. Methods Nonlinear Anal., 33(2):373–393, 2009.

E. Zeidler. Applied Functional Analysis: Applications to Mathematical Physics. Springer New York, New York, NY, 1995.

P. Zgliczyński. \(C^1\)-Lohner algorithm. Found. Comput. Math., 2, 2002.

P. Zgliczyński. Rigorous Numerics for Dissipative Partial Differential Equations II. Periodic Orbit for the Kuramoto-Sivashinsky PDE; A Computer-Assisted Proof. Found. Comput. Math., 4(2):157–185, April 2004.

P. Zgliczyński. Rigorous Numerics for Dissipative Partial Differential Equations III. An effective algorithm for rigorous integration of dissipative PDEs. Topol. Methods Nonlinear Anal., 36:197–262, 2010.

Acknowledgements

Research has been supported by Polish National Science Centre Grants 2011/03B/ST1/04780 and 2016/22/A/ST1/00077.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Hans Munthe-Kaas.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Szczelina, R., Zgliczyński, P. Algorithm for Rigorous Integration of Delay Differential Equations and the Computer-Assisted Proof of Periodic Orbits in the Mackey–Glass Equation. Found Comput Math 18, 1299–1332 (2018). https://doi.org/10.1007/s10208-017-9369-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10208-017-9369-5

Keywords

- Computer-assisted proofs

- Delay differential equations

- Periodic orbit

- Topological methods

- Interval arithmetic