Abstract

We address the exact computation of two joint spectral characteristics of a family of linear operators: the joint spectral radius (JSR) and the lower spectral radius (LSR), two well-known and distinct generalizations of the spectral radius of a single linear operator to a set of operators. We develop a method which, under suitable assumptions, computes the JSR and the LSR of a finite family of matrices exactly. We remark that so far no algorithm has been available in the literature to compute the LSR exactly.

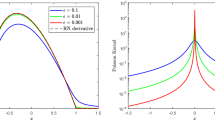

The paper presents the necessary theoretical results on extremal norms (and on extremal antinorms) of linear operators, which constitute the basic tools of our procedures, and a detailed description of the corresponding algorithms for the computation of the JSR and LSR (the latter restricted to families sharing an invariant cone). The algorithms are easily implemented, and their descriptions are short. If the algorithms terminate in finite time, then they construct an extremal norm (in the JSR case) or antinorm (in the LSR case) and find the exact value of the JSR or LSR; otherwise, they provide upper and lower bounds that both converge to the exact values. A theoretical criterion for termination in finite time is also derived. According to numerical experiments, the algorithm for the JSR finds the exact value for the vast majority of matrix families in dimensions up to 20. For nonnegative matrices it works faster and finds the JSR in dimensions of order 100 within a few iterations; the same is observed for the algorithm computing the LSR. To illustrate the efficiency of the new method, we apply it to give answers to several conjectures which have been recently stated in combinatorics, number theory, and formal language theory.
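As a rough illustration of what two-sided bounds on the JSR look like, the following Python sketch computes the elementary estimates \(\max_{P}\rho(P)^{1/k} \le \mathrm{JSR} \le \max_{P}\|P\|^{1/k}\) over all products \(P\) of length \(k\). This is a brute-force enumeration, not the polytope algorithm of the paper; the restriction to 2×2 matrices, the infinity norm, and all function names are assumptions made for the example.

```python
import cmath
import itertools
import math


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def spectral_radius(m):
    """Spectral radius of a 2x2 matrix from its characteristic polynomial."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return max(abs((tr + disc) / 2), abs((tr - disc) / 2))


def norm_inf(m):
    """Operator infinity norm (maximum absolute row sum)."""
    return max(abs(row[0]) + abs(row[1]) for row in m)


def jsr_bounds(family, k):
    """Lower and upper bounds on the JSR from all products of length <= k."""
    lower, upper = 0.0, math.inf
    for length in range(1, k + 1):
        worst_norm = 0.0
        for word in itertools.product(family, repeat=length):
            p = word[0]
            for factor in word[1:]:
                p = matmul(factor, p)
            # Every product of this length yields a lower bound.
            lower = max(lower, spectral_radius(p) ** (1.0 / length))
            worst_norm = max(worst_norm, norm_inf(p))
        # The largest norm over all products of this length yields an upper bound.
        upper = min(upper, worst_norm ** (1.0 / length))
    return lower, upper
```

Calling `jsr_bounds([[[1, 1], [0, 1]], [[1, 0], [1, 1]]], 4)` returns a pair `(lower, upper)` that brackets the JSR of this hypothetical pair. The gap typically closes slowly as `k` grows, which is why a method that certifies the exact value is of interest.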

References

F. Alizadeh, D. Goldfarb, Second-order cone programming, Math. Program. 95(1), 3–51 (2003).

E.D. Andersen, C. Roos, T. Terlaky, On implementing a primal-dual interior-point method for conic quadratic optimization, Math. Program. 95(2), 249–277 (2003).

T. Ando, M.-H. Shih, Simultaneous contractibility, SIAM J. Matrix Anal. Appl. 19(2), 487–498 (1998).

N.E. Barabanov, Lyapunov indicator for discrete inclusions, I–III, Autom. Remote Control 49(2), 152–157 (1988).

M.A. Berger, Y. Wang, Bounded semigroups of matrices, Linear Algebra Appl. 166, 21–27 (1992).

J. Berstel, Growth of repetition-free words—a review, Theor. Comput. Sci. 340(2), 280–290 (2005).

V.D. Blondel, R.M. Jungers, V.Yu. Protasov, On the complexity of computing the capacity of codes that avoid forbidden difference patterns, IEEE Trans. Inf. Theory 52(11), 5122–5127 (2006).

V.D. Blondel, Yu. Nesterov, Computationally efficient approximations of the joint spectral radius, SIAM J. Matrix Anal. Appl. 27(1), 256–272 (2005).

V.D. Blondel, Y. Nesterov, J. Theys, On the accuracy of the ellipsoid norm approximation of the joint spectral radius, Linear Algebra Appl. 394, 91–107 (2005).

V.D. Blondel, J. Theys, A.A. Vladimirov, An elementary counterexample to the finiteness conjecture, SIAM J. Matrix Anal. Appl. 24(4), 963–970 (2003).

V. Blondel, J. Tsitsiklis, Approximating the spectral radius of sets of matrices in the max-algebra is NP-hard, IEEE Trans. Autom. Control 45(9), 1762–1765 (2000).

J. Cassaigne, Counting overlap-free binary words, in STACS 93. Lecture Notes in Comput. Sci., vol. 665 (Springer, Berlin, 1993), pp. 216–225.

A. Cicone, N. Guglielmi, S. Serra-Capizzano, M. Zennaro, Finiteness property of pairs of 2×2 sign-matrices via real extremal polytope norms, Linear Algebra Appl. 432(2–3), 796–816 (2010).

I. Daubechies, J. Lagarias, Two-scale difference equations. II. Local regularity, infinite products of matrices and fractals, SIAM J. Math. Anal. 23(4), 1031–1079 (1992).

S. Finch, private communication, 2008.

S. Finch, P. Sebah, Z.-Q. Bai, Odd entries in Pascal’s trinomial triangle, arXiv:0802.2654 (2008).

E. Fornasini, M.E. Valcher, Stabilizability of discrete-time positive switched systems, in Proceedings of the 49th IEEE Conference on Decision and Control—CDC (2010), pp. 432–437.

J. Goldwasser, W. Klostermeyer, M. Mays, G. Trapp, The density of ones in Pascal’s rhombus, Discrete Math. 204, 231–236 (1999).

G. Gripenberg, Computing the joint spectral radius, Linear Algebra Appl. 234, 43–60 (1996).

N. Guglielmi, C. Manni, D. Vitale, Convergence analysis of \(C^{2}\) Hermite interpolatory subdivision schemes by explicit joint spectral radius formulas, Linear Algebra Appl. 434, 784–902 (2011).

N. Guglielmi, F. Wirth, M. Zennaro, Complex polytope extremality results for families of matrices, SIAM J. Matrix Anal. Appl. 27(3), 721–743 (2005).

N. Guglielmi, M. Zennaro, An algorithm for finding extremal polytope norms of matrix families, Linear Algebra Appl. 428(10), 2265–2282 (2008).

N. Guglielmi, M. Zennaro, Finding extremal complex polytope norms for families of real matrices, SIAM J. Matrix Anal. Appl. 31(2), 602–620 (2009).

N. Guglielmi, M. Zennaro, Balanced complex polytopes and related vector and matrix norms, J. Convex Anal. 14, 729–766 (2007).

N. Guglielmi, M. Zennaro, On the asymptotic properties of a family of matrices, Linear Algebra Appl. 322(1–3), 169–192 (2008).

L. Gurvits, Stability of discrete linear inclusions, Linear Algebra Appl. 231, 47–85 (1995).

J. Hechler, B. Mößner, U. Reif, \(C^{1}\)-continuity of the generalized four-point scheme, Linear Algebra Appl. 430(11–12), 3019–3029 (2009).

R. Horn, C.R. Johnson, Matrix analysis (Cambridge University Press, Cambridge, 1985).

R.M. Jungers, The Joint Spectral Radius: Theory and Applications, Lecture Notes in Control and Information Sciences, vol. 385 (Springer, Berlin, 2009).

R.M. Jungers, V.Yu. Protasov, Counterexamples to the complex polytope extremality conjecture, SIAM J. Matrix Anal. Appl. 31(2), 404–409 (2009).

R.M. Jungers, V.Yu. Protasov, V.D. Blondel, Efficient algorithms for deciding the type of growth of products of integer matrices, Linear Algebra Appl. 428(10), 2296–2312 (2008).

R.M. Jungers, V.Yu. Protasov, V.D. Blondel, Overlap-free words and spectra of matrices, Theor. Comput. Sci. 410(38–40), 3670–3684 (2009).

V.S. Kozyakin, Algebraic unsolvability of problem of absolute stability of desynchronized systems, Autom. Remote Control 51(6), 754–759 (1990).

V.S. Kozyakin, On the computational aspects of the theory of joint spectral radius, Dokl. Math. 80(1), 487–491 (2009).

M. Maesumi, An efficient lower bound for the generalized spectral radius, Linear Algebra Appl. 240, 1–7 (1996).

G.G. Magaril-Il’yaev, V.M. Tikhomirov, Convex analysis: theory and applications, Translations of Mathematical Monographs, vol. 222 (2001). Transl. from the Russian by Dmitry Chibisov.

O. Mason, R.N. Shorten, Quadratic and copositive Lyapunov functions and the stability of positive switched linear systems, in Proceedings of the American Control Conference (ACC 2007) (2007), pp. 657–662.

P.A. Parrilo, A. Jadbabaie, Approximation of the joint spectral radius using sum of squares, Linear Algebra Appl. 428(10), 2385–2402 (2008).

V.Yu. Protasov, The joint spectral radius and invariant sets of linear operators, Fundam. Prikl. Mat. 2(1), 205–231 (1996).

V.Yu. Protasov, The generalized spectral radius. A geometric approach, Izv. Math. 61(5), 995–1030 (1997).

V.Yu. Protasov, Asymptotic behaviour of the partition function, Sb. Math. 191(3–4), 381–414 (2000).

V.Yu. Protasov, On the regularity of de Rham curves, Izv. Math. 68(3), 27–68 (2004).

V.Yu. Protasov, Invariant functionals for random matrices, Funct. Anal. Appl. 44(3), 230–233 (2010).

V.Yu. Protasov, R.M. Jungers, V.D. Blondel, Joint spectral characteristics of matrices: a conic programming approach, SIAM J. Matrix Anal. Appl. 31(4), 2146–2162 (2010).

B. Reznick, Some binary partition functions, in Analytic Number Theory: Proceedings of a Conference in Honor of P.T. Bateman, ed. by B.C. Berndt, H.G. Diamond, H. Halberstam, A. Hildebrand (Birkhäuser, Boston, 1990), pp. 451–477.

G.C. Rota, G. Strang, A note on the joint spectral radius, Kon. Ned. Acad. Wet. Proc. 63, 379–381 (1960).

W.R. Rudin, Principles of Mathematical Analysis, 3rd edn. (McGraw-Hill, New York, 1976).

G.W. Stewart, J.G. Sun, Matrix perturbation theory (Academic Press, New York, 1990).

G. Strang, The joint spectral radius, Commentary by Gilbert Strang, Collected Works of Gian-Carlo Rota, 2000.

J.N. Tsitsiklis, V.D. Blondel, The Lyapunov exponent and joint spectral radius of pairs of matrices are hard—when not impossible—to compute and to approximate, Math. Control Signals Syst. 10(1), 31–40 (1997).

C. Vagnoni, M. Zennaro, The analysis and the representation of balanced complex polytopes in 2D, Found. Comput. Math. 9(3), 259–294 (2009).

L. Villemoes, Wavelet analysis of refinement equations, SIAM J. Math. Anal. 25(5), 1433–1460 (1994).

J.S. Vandergraft, Spectral properties of matrices which have invariant cones, SIAM J. Appl. Math. 16, 1208–1222 (1968).

Acknowledgements

The authors are grateful to both anonymous referees for their attentive reading and many valuable comments and suggestions. The research of the first author is supported by Italian M.I.U.R. and G.N.C.S. The research of the second author is supported by RFBR grants No. 11-01-00329 and No. 10-01-00293, and by a grant of the Dynasty foundation.

Additional information

Communicated by Michael L. Overton.

Appendix

10.1 Proof of Proposition 3

We denote by \(\|\cdot\|\) the Minkowski norm in \(\mathbb{R}^{d}\) generated by the polytope \(P_{k-1}\), and the corresponding operator norm. We have

Let us recall that \(\|A\|_{1} = \max_{j=1, \ldots, d}\sum_{i=1}^{d} |A_{ij}|\) for every \(d\times d\) matrix \(A\). First of all, we show that \(\tilde{A}_{j}P_{k-1} \subset (1+h) P_{k-1}\). If \(v\) is a vertex of \(P_{k-1}\), then \(\tilde{A}_{j}v \in P_{k-1}\), unless \(j=d_{n}\), \(v=v_{n}\). Indeed, \(\tilde{A}_{j}v\) is either a vertex, and hence belongs to \(P_{k-1}\), or a “dead” point, in which case \((1 + \varepsilon- \delta_{3})\tilde{A}_{j} v \in P_{k-1}\), and therefore \(\tilde{A}_{j}v \in P_{k-1}\), because \(\delta_{3}<\varepsilon\). The only exception may occur in the case \(j=d_{n}\), \(v=v_{n}\), since the equality \(\tilde{A}_{d_{n}}v_{n} = v_{1}\) may fail for the approximate Algorithm (R). In this case, however, \(\tilde{A}_{d_{n}}v_{n} \in\frac{1}{t_{0}-\delta_{3}}P_{k-1} \subset (1+h)P_{k-1}\), where \(t_{0}\) is the value of LP problem (8) with \(z=\tilde{A}_{d_{n}}v_{n}\). Thus, \(\|\tilde{A}_{j}\| \le 1+h\) for all \(\tilde{A}_{j} \in \tilde{M}\).

From (12) it follows that \(\delta_{3}<\varepsilon/4<1/200\). On the other hand, the radius of the inscribed ball of \(P_{k-1}\) does not exceed \(\|v_{1}\|_{1}=1\), hence \(\frac{r}{1+r\delta_{3}} \le1 \), and therefore \(1+r\delta_{3} \le 1 + \frac{\delta_{3}}{1-\delta_{3}} < 1.01\). Furthermore, \(\|\tilde{A}_{j} - \tilde{A}_{j}'\|_{1} \le d \delta_{1}\) for all \(j\), and \(\| v_{1} - v_{1}'\|_{1} \le d\delta_{2}\); hence, applying (34), we get

and similarly \(\| v_{1} - v_{1}'\| \le \frac{1.01 d \delta_{2}}{r}\). This yields that for every \(N\le n+k\) and for every product \(\tilde{A}_{q_{N}}\ldots\tilde{A}_{q_{1}}\) we have

Since \(h<1/(4N)\) (by the assumption) and \(\frac{1.01 d R \delta_{1}}{r} < \varepsilon/4 < 1/(4N) \) (by (12)), applying the inequality \((1+\frac {1}{2N} )^{N} < \sqrt{e}\), we conclude that both matrix products in (35) are bounded above by \(\sqrt{e} = 1.648\ldots\). Furthermore,

which is proved easily by writing \(\tilde{A}_{q_{i}}' = \tilde{A}_{q_{i}} + B_{q_{i}} \) with \(\|B_{q_{i}}\| < \frac{1.01 d R \delta_{1} }{r}\) for all \(i=1,\ldots,N\), expanding the product \(\prod_{i=1}^{N} ( \tilde{A}_{q_{i}} + B_{q_{i}} )\), and using the submultiplicativity of the norm. Applying now the inequality \((1+x+y)^{N} - (1+x)^{N}\le \sqrt{e}\, N y\), valid for all \(x,y\ge 0\) such that \(x+y \le\frac{1}{2N}\), we obtain

Furthermore, \(\| \tilde{A}_{q_{N}}'\ldots\tilde{A}_{q_{1}}'v_{1}' - \tilde{A}_{q_{N}}\ldots\tilde{A}_{q_{1}}v_{1} \| \le\)

The first term is bounded above by \(\frac{1.7 N d R \delta_{1}}{r}\), the second one is bounded by \(\sqrt{e} \| v_{1}' - v_{1}\| \le \sqrt{e} \frac{1.01 d \delta_{2}}{r} \le \frac{1.7 d \delta_{2}}{r}\) (we used (35) to estimate the norm of the product, and (34) to estimate \(\|v_{1}' - v_{1}\|\)). Thus,

The right-hand side will be denoted by \(\gamma\). Every vertex obtained by the approximate Algorithm (R) has the form \(v = \tilde{A}_{q_{N}}\ldots\tilde{A}_{q_{1}}v_{1}\) for some \(N<k+n\). The distance from that vertex to the corresponding vertex \(v' = \tilde{A}_{q_{N}}'\ldots\tilde{A}_{q_{1}}'v_{1}'\) does not exceed \(\gamma\). Thus, when we pass from the polytope \(P_{k-1}\) to the polytope \(P_{k-1}'\), each vertex moves by a distance (in the norm \(\|\cdot\|\)) of at most \(\gamma\). Hence, every point \(x\) such that \(\|x\|<1-\gamma\) stays inside the polytope. Consequently, if \(\|x\|<1-2\gamma\) and \(\|y\|<\gamma\), then \((x + y) \in P_{k-1}'\). For every dead vertex \(v\) removed by the approximate algorithm we have \(\|v'-v\|<\gamma\). Since \(v\) is removed, it follows that \((1+\varepsilon-\delta_{3})v\in P_{k-1}\), and so \(\|v\| \le \frac{1}{1+ \varepsilon- \delta_{3}} < 1 - 2 \gamma\) (the last inequality is checked directly). Hence \(v' \in P_{k-1}'\), and the exact Algorithm (R) will sort out this vertex. Thus, if a vertex is dead in the approximate algorithm, then the corresponding vertex is dead for the exact algorithm. Therefore, \(P_{k-1}'\) is an extremal polytope for \(M'\), and so \(\varPi'\) is an s.m.p. □
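The proof relies on two elementary estimates: \((1+\frac{1}{2N})^{N} < \sqrt{e}\), and a bound of order \(\sqrt{e}\,N y\) on the difference \((1+x+y)^{N} - (1+x)^{N}\) when \(x,y\ge 0\) and \(x+y\le\frac{1}{2N}\). The following Python sketch checks them numerically on a grid, testing the second estimate in the form \((1+x+y)^{N} - (1+x)^{N} \le \sqrt{e}\,N y\). It is a sanity check under assumed grid sizes and a hypothetical function name, not a proof.

```python
import math


def check_elementary_bounds(max_n=50, grid=20):
    """Check both estimates for N = 1..max_n on a grid of admissible (x, y)."""
    root_e = math.sqrt(math.e)
    for n in range(1, max_n + 1):
        # First estimate: (1 + 1/(2N))^N < sqrt(e).
        if (1 + 1 / (2 * n)) ** n >= root_e:
            return False
        # Second estimate on the triangle x, y >= 0, x + y <= 1/(2N).
        for i in range(grid + 1):
            for j in range(grid + 1 - i):
                x = i / (2 * n * grid)
                y = j / (2 * n * grid)
                lhs = (1 + x + y) ** n - (1 + x) ** n
                if lhs > root_e * n * y + 1e-12:
                    return False
    return True
```

The second estimate also follows from the mean value theorem: the difference is at most \(N y (1+x+y)^{N-1}\), and the last factor is below \(\sqrt{e}\) on the admissible region.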

We next give the proofs of two main results of this paper (Theorems 4 and 7) and details about the proof of Theorem 8.

10.2 Proof of Theorem 4

We give the proof for Algorithm (R); the proofs for Algorithms (C) and (P) are analogous. We use two auxiliary results.

Lemma 12

Let \({\mathcal {T}}\) be a cyclic tree with root \(B\) generated by an irreducible word \(b=d_{n}\ldots d_{1}\). Then for any word \(a\) that is not a power of \(b\), we have \(a^{n}\notin B\).

Proof

Let \(l\) be the length of \(a\) and let \(p\) be the greatest common divisor of \(l\) and \(n\). If \(l=kn\) for some integer \(k\), then the words \(a\) and \(b^{k}\) must have different letters at some position, otherwise \(a=b^{k}\). Therefore, \(a\notin B\), and so \(a^{n}\notin B\).

If \(l\) is not divisible by \(n\), then \(p<n\), and there exists an index \(j\) such that \(d_{j+p} \ne d_{j}\), otherwise \(b\) is a power of the word \(d_{p}\ldots d_{1}\), which contradicts the irreducibility. The Diophantine equation \(lx-ny=p\) has a solution \((x,y)\in\mathbb{Z}^{2}\) such that \(0\le x\le n-1\). Since the words \(a^{x+1}\) and \(b^{y+2}\) have different letters at the position \(lx+j\), we have \(a^{x+1}\notin B\), and hence \(a^{n}\notin B\), because \(n\ge x+1\). □
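The statement of Lemma 12 can be checked by brute force on short words. The Python sketch below assumes the following reading, which is an interpretation rather than the paper's formal definition: a word lies in the root exactly when it is a prefix of the periodic word \(bbb\ldots\), with letters listed in the order in which they are applied. All function names are hypothetical.

```python
import itertools


def is_irreducible(word):
    """True if `word` is not a power of a strictly shorter word."""
    n = len(word)
    return not any(n % p == 0 and word == word[:p] * (n // p)
                   for p in range(1, n))


def is_power_of(a, b):
    """True if a = b^q for some integer q >= 1."""
    q, r = divmod(len(a), len(b))
    return r == 0 and q >= 1 and a == b * q


def stays_in_root(word, b):
    """True if `word` is a prefix of the periodic word b b b ... (assumed reading)."""
    reps = len(word) // len(b) + 1
    return (b * reps)[:len(word)] == word


def find_counterexample(max_n=4, max_l=6, alphabet="01"):
    """Search for (a, b) with b irreducible, a not a power of b, a^n in the root."""
    for n in range(1, max_n + 1):
        for b in map("".join, itertools.product(alphabet, repeat=n)):
            if not is_irreducible(b):
                continue
            for l in range(1, max_l + 1):
                for a in map("".join, itertools.product(alphabet, repeat=l)):
                    if not is_power_of(a, b) and stays_in_root(a * n, b):
                        return a, b
    return None
```

Under this reading, `find_counterexample()` finds no counterexample for the default word lengths over a binary alphabet, in agreement with the lemma.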

Lemma 13

Let \({\mathcal {T}}\) be the cyclic tree generated by the product \(\tilde{\varPi}\). If Algorithm (R) terminates within \(k\) steps, then there is \(\varepsilon>0\) such that all nodes of \({\mathcal {T}}\) of level \(\ge k\) are in the polytope \((1-\varepsilon)P_{k-1}\).

Proof

If the algorithm terminates after \(k\) steps, then \(\tilde{A}_{j}P_{k-1} \subset P_{k-1}\) for all \(j=1,\ldots,m\). Moreover, we have \({\mathcal {U}}_{k} = \emptyset\), which means that every node \(v\in {\mathcal {T}}\) of level \(k\) belongs to a dead branch, and therefore \(v \in \operatorname {int}P_{k-1}\). The total number of nodes of level \(k\) is finite; hence all of them are in \((1-\varepsilon)P_{k-1}\) for some \(\varepsilon>0\). If \(v\) is a node of a higher level \(r>k\), then \(v=Rv_{0}\), where \(R \in \tilde{{\mathcal {M}}}^{ r-k}\) and \(v_{0}\) is some node of the \(k\)th level. Since \(v_{0}\in(1-\varepsilon)P_{k-1}\), we have \(v\in(1-\varepsilon)RP_{k-1}\subset(1-\varepsilon)P_{k-1}\), because \(RP_{k-1}\subset P_{k-1}\). □

Proof of Theorem 4

Necessity. Consider the cyclic tree \({\mathcal {T}}\) generated by the product \(\tilde{\varPi}\). Assume the algorithm terminates after \(k\) steps. By Lemma 13 all nodes of levels at least \(k\) belong to \((1-\varepsilon)P_{k-1}\), where \(\varepsilon>0\) is fixed. For every product \(C\) that is not a power of \(\tilde{\varPi}_{i}\), the node \(C^{n} v_{i}\) does not belong to the root (Lemma 12). Hence for each \(v_{i}\) from the root and for every product \(C\in{\tilde{M}}^{l}\) that is not a power of a cyclic permutation of \(\tilde{\varPi} \), the level of the node \(C^{n+k} v_{i}\) is bigger than \(k\). If \(v\) is not in the root, then this level is bigger than \(ln+k>k\). Thus, \(C^{n+k} v\in(1-\varepsilon)P_{k-1}\) for each node \(v \in {\mathcal {T}}\), and hence for each vertex \(v\) of the polytope \(P_{k-1}\). This yields that \(C^{n+k} P_{k-1}\subset(1-\varepsilon)P_{k-1}\). Therefore, \(\rho(C^{n+k})<1-\varepsilon\), and so \(\rho(C)<(1-\varepsilon)^{1/(n+k)}\). Consequently, \(\tilde{\varPi}\) is dominant.

Let us now show that 1 is its unique and simple leading eigenvalue. Since for \(i\ne 1\) the product \(\tilde{\varPi}_{1}\) is not a power of \(\tilde{\varPi}_{i}\), it follows that the node \(\tilde{\varPi}_{1}^{n}v_{i}\) does not belong to the root (Lemma 12). Hence, the level of the node \(\tilde{\varPi}_{1}^{ n+k}v_{i}\) is bigger than \(k\). If \(v\) is not in the root, then the level of \(\tilde{\varPi}_{1}^{ n+k}v\) is bigger than \(k\) as well. Thus, \(\tilde{\varPi}_{1}^{ n+k}v \in (1-\varepsilon) P_{k-1}\) for all vertices \(v\) of \(P_{k-1}\), except for \(v=\pm v_{1}\). For any eigenvector \(u\ne v_{1}\) of the operator \(\tilde{\varPi}_{1}\), take the one-dimensional subspace \(U\subset\mathbb{R}^{d}\) spanned by \(u\) (if \(u\) is complex, then \(U\) is the two-dimensional subspace spanned by \(u\) and by its conjugate). Since \(v_{1}\notin U\), it follows that \(\tilde{\varPi}_{1} (P_{k-1}\cap U) \subset \operatorname {int}(P_{k-1}\cap U)\), where the interior is taken in \(U\). This implies that the spectral radius of \(\tilde{\varPi}_{1}\) on the subspace \(U\) is smaller than 1. Thus, all eigenvalues of \(\tilde{\varPi}_{1}\) different from 1 are smaller than 1 in modulus, and the eigenvalue 1 has a unique eigenvector. Hence, the leading eigenvalue 1 is unique and has only one Jordan block. The dimension of this block cannot exceed 1, otherwise \(\|\tilde{\varPi}_{1}^{k}\|\to\infty\) as \(k\to\infty\), which contradicts the nondefectivity of the family \(\tilde{{\mathcal {M}}}\). Therefore, the eigenvalue 1 is simple.

Sufficiency. The proof uses arguments similar to those given for the Small CPE Theorem in [21], to which we refer the reader.

Assume \(\tilde{\varPi}\) is dominant and its leading eigenvalue is unique and simple. If the algorithm does not terminate, then the tree \({\mathcal {T}}\) has an infinite path of living leaves \(v^{(0)}\to v^{(1)}\to\cdots\) (the node \(v^{(i)}\) is on the \(i\)th level) starting at a node \(v^{(0)}=v_{p}\) from the root. For every \(r\) we have \(v^{(r)} \notin \operatorname {int}P_{r-1}\). Hence, \(v^{(r)} \notin \operatorname {int}P_{k}\) for all \(k<r\). Since the family \(\tilde{{\mathcal {M}}}\) is irreducible, it follows that \(0 \in \operatorname {int}P_{k}\) for some \(k\), and hence the polytope \(P_{k}\) defines a norm \(\|\cdot\|_{k}\) in \(\mathbb{R}^{d}\). For this norm \(\|v^{(r)}\|\ge 1\) for all \(r>k\). On the other hand, \(\tilde{{\mathcal {M}}}\) is nondefective, hence the sequence \(\{v^{(r)}\}\) is bounded. Thus, there is a subsequence \(\{v^{(r_{i})}\}_{i\in \mathbb {N}} , r_{1} \ge k\), that converges to some point \(v\in\mathbb{R}^{d}\). Clearly, \(\|v\|_{k}\ge 1\). For every \(i\) we have \(v^{(r_{i})} = R_{i}v^{(r_{1})}\) and \(v^{(r_{i+1})} = C_{i} v^{(r_{i})}\), where \(R_{i} \in\tilde{{\mathcal {M}}}^{ r_{i}-r_{1}} , C_{i} \in\tilde{{\mathcal {M}}}^{ r_{i+1}-r_{i}}\). Denote by \(\mathrm {c}\ell [\tilde{M}]\) the closure of the semigroup of all products of operators from \(\tilde{{\mathcal {M}}}\). Since this semigroup is bounded, after possible passage to a subsequence, it may be assumed that \(R_{i}\) and \(C_{i}\) converge to some \(R, C \in \mathrm {c}\ell [\tilde{M}]\), respectively, as \(i\to\infty\). We have \(Cv=v\), hence \(\rho(C)\ge 1\), which, by the domination assumption, implies that there is \(j\in\{1,\ldots,n\}\) such that \(C\) belongs to \(\mathrm {c}\ell [\tilde{\varPi}_{j}]\), which is the closure of the semigroup \(\{(\varPi_{j})^{q}\}_{q\in\mathbb{N}}\). Moreover, since the leading eigenvalue of \(\tilde{\varPi}\) is unique and simple, we see that \(v=\lambda v_{j}\), where \(\lambda\in\mathbb{R}\). We have \(\|v_{j}\|_{k}=1\) and \(\|v\|_{k}\ge 1\), hence \(|\lambda|\ge 1\). Thus, \(Rv^{(r_{1})} = \lambda v_{j}\). The nodes \(v_{j}\) and \(v^{(0)}=v_{p}\) are both from the root, hence there is a product \(S\) such that \(Sv_{j}=v^{(1)}\). Taking into account that \(v^{(r_{1})} = R_{1}v^{(1)} \), we obtain \(R_{1} S R v^{(1)}=\lambda v^{(1)}\). Hence, \(\rho(R_{1} S R)\ge|\lambda|\), and we conclude that \(\lambda=\pm 1\) and that \(R_{1}SR \in \mathrm {c}\ell [\tilde{\varPi}_{i}]\) for some \(i\). This yields \(v^{(1)}=\mu v_{i}\), where \(v_{i}\) is the corresponding vector from the root, \(\mu\in\mathbb{R}\). Since \(\|v_{i}\|=1\) and \(\|v^{(1)}\|\ge 1\), we have \(|\mu|\ge 1\). The elements \(v_{i}\) and \(v^{(0)}=v_{p}\) are both from the root, hence \(\tilde{A}_{p}\cdots\tilde{A}_{i} v_{i} = v_{p}\), and consequently \(\tilde{A}_{s}\tilde{A}_{p}\cdots\tilde{A}_{i} v_{i} = v^{(1)}\) for some \(\tilde{A}_{s} \in {\mathcal {M}}\). Note that \(d_{s}\ne d_{p+1}\), because the node \(v^{(1)}\) is not in the root. Therefore, the product \(Q = \tilde{A}_{s} \tilde{A}_{p}\cdots \tilde{A}_{i}\) does not coincide with \(\varPi_{i}\), and its length is at most \(n\). Thus, \(Qv_{i}=\mu v_{i}\), hence \(\rho(Q)=1\) and \(\mu=\pm 1\). Thus, \(Q\) has spectral radius 1 and the leading eigenvector \(v_{i}\); therefore \(Q \in \mathrm {c}\ell [\tilde{\varPi}_{i}]\). On the other hand, the length of \(Q\) does not exceed \(n\), hence \(Q = \tilde{\varPi}_{i}\), which is a contradiction. Hence, the algorithm terminates within finitely many steps. □

10.3 Proof of Theorem 7

We use several auxiliary results. The proof of the following lemma is similar to the proof of Lemma 13.

Lemma 14

Let \({\mathcal {T}}\) be the cyclic tree generated by the product \(\tilde{\varPi}\). If the algorithm terminates within \(k\) steps, then there is \(\varepsilon>0\) such that all vertices of the tree of level \(\ge k\) belong to the infinite polytope \((1+\varepsilon)P_{k-1}\).

Lemma 15

[53] If an operator \(B\) has an invariant cone \(K\), then for each of its eigenvectors in \(\operatorname {int}K\) the corresponding eigenvalue equals \(\rho(B)\).
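Lemma 15 can be illustrated on a small example. The Python sketch below takes a hypothetical entrywise positive 2×2 matrix, for which the nonnegative orthant is an invariant cone, computes its eigenpairs in closed form, and keeps the eigenvalues whose eigenvectors lie in the interior of the orthant (up to sign). The matrix and the function names are assumptions made for the illustration.

```python
import math


def eigenpairs_2x2(m):
    """Eigenpairs of a 2x2 matrix with real spectrum and m[0][1] != 0."""
    (a, b), (c, d) = m
    tr, det = a + d, a * d - b * c
    disc = math.sqrt(tr * tr - 4 * det)
    # For an eigenvalue lam, the vector (b, lam - a) solves (m - lam*I)v = 0.
    return [(lam, (b, lam - a)) for lam in ((tr + disc) / 2, (tr - disc) / 2)]


def in_open_orthant(v):
    """True if v or -v has strictly positive components."""
    return all(x > 0 for x in v) or all(x < 0 for x in v)


def interior_eigenvalues(m):
    """Eigenvalues whose eigenvectors lie in the interior of the orthant."""
    return [lam for lam, v in eigenpairs_2x2(m) if in_open_orthant(v)]
```

For the matrix `[[2, 1], [1, 3]]` only one eigenvector lies in the interior of the orthant, and its eigenvalue is the larger of the two, that is, the spectral radius.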

Proof of Theorem 7

Necessity. Consider the cyclic tree \({\mathcal {T}}\) generated by the product \(\tilde{\varPi}\). If the algorithm terminates after \(k\) steps, then by Lemma 14 all vertices of levels at least \(k\) belong to \((1+\varepsilon)P_{k-1}\). For an arbitrary product \(C\) that is not a power of \(\tilde{\varPi}_{i}\), the node \(C^{n} v_{i}\) does not belong to the root (Lemma 12). Hence, for every \(v_{i}\) from the root the level of the node \(C^{n+k} v_{i}\) is bigger than \(k\). If \(v\) is not in the root, then the level of \(C^{n+k} v\) is bigger than \(k\) as well, and consequently \(C^{n+k} v\in(1+\varepsilon)P_{k-1}\) for each node \(v \in {\mathcal {T}}\) and hence for every vertex of \(P_{k}\). This yields that \(C^{n+k} P_{k-1}\subset(1+\varepsilon)P_{k-1}\); therefore \(\rho(C^{n+k})>1+\varepsilon\), and so \(\rho(C)>(1+\varepsilon)^{1/(n+k)}\). This holds for every product \(C\) that is not a power of \(\tilde{\varPi}\) or of its cyclic permutations, which completes the proof.

Sufficiency. Assume the converse: the product \(\tilde{\varPi}\) is under-dominant, but the algorithm does not produce an extremal infinite polytope. This means that the tree \({\mathcal {T}}\) has an infinite path of living leaves \(v^{(0)}\to v^{(1)}\to\cdots\) starting at a vertex \(v^{(0)}=v_{p}\) from the root. Since the family \({\mathcal {M}}\) is eventually positive, it follows from Lemma 9 that there exists an internal invariant cone \(\tilde{K}\), which, moreover, contains all leading eigenvectors of products of operators from \({\mathcal {M}}\). Hence, \(\tilde{K}\) contains the root of \({\mathcal {T}}\), and therefore it contains all the nodes \(v^{(k)}\). For every \(r\) we have \(v^{(r)} \notin \operatorname {int}P_{r-1}\). Hence, \(v^{(r)} \notin \operatorname {int}P_{k}\) for all \(k<r\). Let \(g(\cdot)\) be the antinorm defined by the infinite polytope \(P_{k}\): \(g(x)=\sup\{\lambda \mid \lambda^{-1} x\in P_{k}\}\). Since a concave function is continuous at every interior point of its domain (see, for instance, [36]), it follows that \(g\) is equivalent to every norm and to every antinorm on the interior cone \(\tilde{K}\). In particular, there are positive constants \(c_{1},c_{2}\) such that

\[ c_{1} f(x) \le g(x) \le c_{2} f(x), \qquad x \in \tilde{K}, \]

where \(f\) is an invariant antinorm for \(\tilde{{\mathcal {M}}}\) (Theorem 6). For arbitrary \(r\) we have \(g(v^{(r)})\le 1\). On the other hand, since \(f\) is invariant and \(f(v_{k})=1\) for all \(k\), we have \(f(v)\ge 1\) for every node \(v\) of the tree. In particular, \(f(v^{(r)})\ge 1\). Thus, \(c_{1}\le g(v^{(r)})\le 1\) for all \(r\). Since \(g\) is equivalent to each norm on \(\tilde{K}\), we see that the sequence \(\{v^{(r)}\}\) is bounded, and hence there is a subsequence \(\{v^{(r_{i})}\}_{i\in \mathbb {N}}\), \(r_{1}\ge 1\), that converges to some point \(v\in\mathbb{R}^{d}\). Clearly, \(v \in\tilde{K}\) and \(c_{1}\le g(v)\le 1\). For every \(i\) we have \(v^{(r_{i})} = R_{i}v^{(r_{1})}\) and \(v^{(r_{i+1})} = C_{i}v^{(r_{i})}\), where \(R_{i} \in\tilde{{\mathcal {M}}}^{ r_{i}-r_{1}} , C_{i} \in\tilde{{\mathcal {M}}}^{ r_{i+1}-r_{i}}\). The sequence \(\{v^{(r_{i})}\}\) is contained in \(\tilde{K}\), bounded, and separated from zero; hence by Lemma 11 the sequences of operators \(\{R_{i}\}\) and \(\{C_{i}\}\) are both bounded. Therefore, after a passage to subsequences it may be assumed that these two sequences converge to some \(R, C \in \mathrm {c}\ell [\tilde{M}]\), respectively, as \(i\to\infty\) (see the proof of Theorem 4 for the definition of \(\mathrm {c}\ell [\tilde{{\mathcal {M}}}]\) and \(\mathrm {c}\ell [\tilde{\varPi}]\)). We have \(Cv=v\). Since \(v \in \operatorname {int}\mathbb {R}^{d}_{+}\), it follows from Lemma 15 that \(v\) is the leading eigenvector of \(C\). Consequently, \(\rho(C)=1\), which, by the domination assumption, implies \(C \in \mathrm {c}\ell [\tilde{\varPi}_{j}]\) and \(v=\lambda v_{j}\) for some \(j=1,\ldots,n\), and \(\lambda>0\). Since \(g(v_{j})=1\) and \(g(v)\le 1\), it follows that \(\lambda\le 1\). Thus, \(Rv^{(r_{1})} = \lambda v_{j}\). The elements \(v_{j}\) and \(v^{(0)}=v_{p}\) are both from the root; hence there is a product \(S\) such that \(Sv_{j}=v^{(1)}\). Taking into account that \(v^{(r_{1})} = R_{1}v^{(1)} \), we obtain \(R_{1} S R v^{(1)}=\lambda v^{(1)}\). Again invoking Lemma 15, we conclude that \(v^{(1)}\) is the leading eigenvector of \(R_{1} S R\). Hence, \(\rho(R_{1} S R)=\lambda\), and we see that \(\lambda=1\); hence \(R_{1}SR \in \mathrm {c}\ell [\tilde{\varPi}_{i}]\) for some \(i\). This yields \(v^{(1)}=\mu v_{i}\), where \(v_{i}\) is the corresponding vector from the root, \(\mu>0\). Since \(g(v_{i})=1\) and \(g(v^{(1)})\le 1\), we have \(\mu\le 1\). Elements \(v_{i}\) and \(v^{(0)}=v_{p}\) are both from the root; hence \(\tilde{A}_{p}\cdots\tilde{A}_{i} v_{i} = v_{p}\), and consequently \(\tilde{A}_{s}\tilde{A}_{p}\cdots\tilde{A}_{i} v_{i} = v^{(1)}\) for some \(A_{s} \in {\mathcal {M}}\). Note that \(d_{s}\ne d_{p+1}\), because the vertex \(v^{(1)}\) is not in the root. Therefore, the product \(Q = \tilde{A}_{s} \tilde{A}_{p}\cdots\tilde{A}_{i}\) does not coincide with \(\varPi_{i}\), and its length is at most \(n\). Thus, \(Qv_{i}=\mu v_{i}\), and by Lemma 15 \(\rho(Q)=\mu\). Consequently, \(\mu=1\) and \(v_{i}\) is the leading eigenvector of the operator \(Q \in \mathrm {c}\ell [\tilde{\varPi}_{i}]\). On the other hand, the length of the product \(Q\) does not exceed \(n\), and therefore \(Q = \tilde{\varPi}_{i}\), which is a contradiction. □

10.4 The 20×20 matrices \(A_{1}, A_{2}\) for the Problem of Overlap-Free Words of Sect. 8.1 and the Proof of Theorem 8

We write the two matrices associated to the problem discussed in Sect. 8.1:

and

To give a rigorous proof of the theorem, it now suffices to list all the vertices of the extremal polytopes obtained by applying Algorithms (P) and (L).

Proof of Theorem 8

Denote \(\varPi=A_{1} A_{2}\). To show that

it suffices to present an extremal polytope \(P\) for the operators \(\tilde{A}_{1} = [ \rho(\varPi) ]^{-1/2}A_{1} \) and \(\tilde{A}_{2} = [ \rho(\varPi) ]^{-1/2}A_{2}\). This polytope is \(P = \mathrm {co}_{-} ( \{v_{i}\}_{i=1}^{54} )\), where the first vertex \(v_{1}\) is the leading eigenvector of \(\varPi\), and the other vertices are:

Now let \(\varPi= A_{1}A_{2}^{10}\). To prove that

it suffices to present an extremal infinite polytope \(P\) for the operators \(\tilde{A}_{1} = [ \rho(\varPi) ]^{-1/11}A_{1} \) and \(\tilde{A}_{2} = [ \rho(\varPi) ]^{-1/11}A_{2}\). This polytope is \(P = \mathrm {co}( \{v_{i}\}_{i=1}^{104} ) + K_{{\mathcal {H}}}\), where the first vertex \(v_{1}\) is the leading eigenvector of \(\varPi\), and the other vertices are:

The proof is then completed by routine computations. □
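The normalization used in both parts of the proof, dividing every operator by \([\rho(\varPi)]^{1/n}\) where \(n\) is the length of the candidate product \(\varPi\), can be sketched in Python as follows. The sketch uses hypothetical 2×2 matrices in place of the 20×20 matrices of Sect. 8.1, and all function names are assumptions; it only checks that the rescaled product has spectral radius 1, which is the starting point for constructing the polytope.

```python
import cmath


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def spectral_radius(m):
    """Spectral radius of a 2x2 matrix from its characteristic polynomial."""
    tr = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return max(abs((tr + disc) / 2), abs((tr - disc) / 2))


def product_along(family, word):
    """Product family[word[0]] * family[word[1]] * ... along a list of indices."""
    p = family[word[0]]
    for idx in word[1:]:
        p = matmul(p, family[idx])
    return p


def normalize_family(family, word):
    """Scale each matrix by rho(P)^(-1/len(word)), P being the product along `word`."""
    scale = spectral_radius(product_along(family, word)) ** (-1.0 / len(word))
    return [[[scale * x for x in row] for row in m] for m in family]
```

With `word = [0, 1]` the scaling exponent is \(-1/2\), as in the first part of the proof; with `word = [0] + [1] * 10` it is \(-1/11\), as in the second part.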

Guglielmi, N., Protasov, V. Exact Computation of Joint Spectral Characteristics of Linear Operators. Found Comput Math 13, 37–97 (2013). https://doi.org/10.1007/s10208-012-9121-0

DOI: https://doi.org/10.1007/s10208-012-9121-0