Abstract

Nearly 100,000 deaf patients worldwide have had their hearing restored by a cochlear implant (CI) fitted to one ear. However, although many patients understand speech well in quiet, even the most successful experience difficulty in noisy situations. In contrast, normal-hearing (NH) listeners achieve improved speech understanding in noise by processing the differences between the waveforms reaching the two ears. Here we show that a form of binaural processing can be achieved by patients fitted with an implant in each ear, leading to substantial improvements in signal detection in the presence of competing sounds. The stimulus in each ear consisted of a narrowband noise masker, to which a tonal signal was sometimes added; this mixture was half-wave rectified, lowpass-filtered, and then used to modulate a 1000-pps biphasic pulse train. All four CI users tested showed significantly better signal detection when the signal was presented out of phase at the two ears than when it was in phase. This advantage occurred even though subjects only received information about the slowly varying sound envelope to be presented, contrary to previous reports that waveform fine structure dominates binaural processing. If this advantage generalizes to multichannel situations, it would demonstrate that envelope-based CI speech-processing strategies may allow patients to exploit binaural unmasking in order to improve speech understanding in noise. Furthermore, because the tested patients had been deprived of binaural hearing for eight or more years, our results show that some sensitivity to time-varying interaural cues can persist over extended periods of binaural deprivation.

Introduction

Since the introduction of the single-channel cochlear implant (CI) in the 1970s, implant users' speech perception in quiet has improved to the stage where many previously deaf patients can converse confidently over the telephone. These improvements have been largely produced by two major developments: the introduction of multichannel implants, and improvements in speech-processing strategies (McDermott et al. 1992; Wilson et al. 1991). One major remaining challenge is to improve speech perception in noisy situations, where even the most successful CI users experience great difficulty. Because most CI users have only one implant, and because normal-hearing (NH) listeners use binaural hearing to improve speech perception in noise (Akeroyd and Summerfield 2000; Bronkhorst and Plomp 1988), the introduction of bilateral CIs seems a strong candidate for improving the performance of CI users. However, to date it appears that bilateral CI users exploit only one of the two major cues available to two-eared NH listeners. The “better ear effect” refers to the fact that, when two talkers occupy different locations, attenuation by the listener's head will improve the signal-to-noise ratio (SNR) at one or the other ear. This cue is available to both NH and CI listeners (Litovsky et al. 2004; Schleich et al. 2004; van Hoesel and Tyler 2003). In addition, the presence of a signal from a different location than a masker can reduce the correlation between the right-ear and left-ear acoustic signals. This “decorrelation” can allow a substantial threshold (THR) reduction when an NH listener detects a signal against a masker (Palmer and Shackleton 2002). It can also provide a basis for speech identification (Akeroyd and Summerfield 2000; Culling and Colburn 2000) and provides about half the benefit NH users obtain from listening through two ears (Zurek 1993). 
The standard measure of this benefit, the binaural masking level difference (BMLD), is the threshold difference between the case where both the masker and signal are identical at the two ears and the one where the signal is inverted at one ear (Hirsh 1948).

In contrast to NH listeners, there has been limited evidence that bilateral CI users are sensitive to cues beyond better ear effects (van Hoesel 2004). We can think of three reasons for this. First, CI users often have extensive periods without bilateral hearing, and this could lead to degeneration of the brainstem structures known to process decorrelation (Vollmer et al. 2005). Second, NH studies have usually demonstrated sensitivity to binaural cues in the waveform fine structure (Smith et al. 2002), whereas present CI processors only extract the envelope in each channel. Third, bilateral implants are usually fitted without care being taken to ensure that a given frequency region of the audio input is mapped onto electrodes innervating “matched” regions of the two cochleas. This matching has been shown to be crucial for binaural processing in the NH system and CIs (Long et al. 2003; Nuetzel and Hafter 1981).

Our experiments were motivated by recent psychoacoustical evidence (van der Par and Kohlrausch 1997) that NH subjects are sensitive to decorrelation solely in the envelope, at least for some stimuli. We reasoned that, if analogous processing could be demonstrated in CI users, this would show that the absence of interaural fine-structure information does not preclude sensitivity to interaural decorrelation. Furthermore, by testing patients who had been deprived of binaural hearing for several years, we could determine whether binaural sensitivity can survive prolonged periods without binaural input. Our results show that CI users are indeed sensitive to envelope-based interaural differences, and potentially have important implications for the fitting and future design of bilateral CIs.

Methods

We measured the BMLD for a 125-Hz pure tone masked by a 50-Hz-wide band of noise (centered on the tone), processed in a way similar to that of the continuous interleaved sampling (CIS) strategy implemented by the speech processors of the majority of CIs worldwide (Wilson et al. 1991). The processing scheme is illustrated in Figure 1, which also shows example waveforms for the condition where the signal was out of phase at the two ears. The input noise was generated by randomly selecting a 400-ms interval from a 2000-ms noise whose frequency components outside the passband had been zeroed after generation in the time domain. The noise was identical on the left and right sides, whereas the signal was either identical across sides, or phase-shifted by π radians or time-delayed by 600 μs on one side. Signal-to-noise ratios from −25 to 20 dB were used. Stimuli were half-wave rectified, low-pass filtered (at 500 Hz by a second-order Butterworth filter), and used to modulate a 1000-pps electric pulse train. Each pulse was biphasic, had a duration of 25 μs per phase with an interphase gap of 7.4 μs (listener CI2) or 45 μs (listeners CI1, CI3, CI4), and was presented to an electrode near the apex (CI4), middle (CI1 and CI2), or basal end (CI3) of the array in monopolar (MP1+2) mode. The total stimulus duration was 400 ms (with the signal duration equal to 300 ms in the middle of the stimulus).
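The processing chain described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' software: the sample rate, the sum-of-sinusoids noise generator (used here in place of zeroing spectral components of a 2000-ms noise), the definition of SNR as an RMS ratio, and all function names are hypothetical choices made for the example. The output is one amplitude per carrier pulse; the biphasic pulse shape and the mapping to current levels are omitted.

```python
import math
import random

FS = 20000          # sample rate in Hz (assumed; not stated in the text)
DUR = 0.4           # total stimulus duration in seconds
SIG_DUR = 0.3       # signal duration, centered in the stimulus
F_SIG = 125.0       # signal frequency in Hz
PULSE_RATE = 1000   # carrier pulse rate in pulses per second


def narrowband_noise(n, rng, lo=100.0, hi=150.0, step=2.5):
    """Unit-RMS noise built from random-phase sinusoids between lo and hi Hz.

    Assumption: this stands in for the spectrally zeroed noise of the text.
    """
    freqs = [lo + k * step for k in range(int((hi - lo) / step) + 1)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in freqs]
    noise = [sum(math.sin(2.0 * math.pi * f * i / FS + p)
                 for f, p in zip(freqs, phases)) for i in range(n)]
    rms = math.sqrt(sum(v * v for v in noise) / n)
    return [v / rms for v in noise]


def tone(n, snr_db, phase):
    """125-Hz tone at snr_db re the unit-RMS noise, gated to the middle 300 ms."""
    amp = math.sqrt(2.0) * 10.0 ** (snr_db / 20.0)
    start = round((DUR - SIG_DUR) / 2.0 * FS)
    stop = start + round(SIG_DUR * FS)
    return [amp * math.sin(2.0 * math.pi * F_SIG * i / FS + phase)
            if start <= i < stop else 0.0 for i in range(n)]


def butter2_lowpass(x, fc):
    """Second-order Butterworth lowpass (bilinear transform), applied once."""
    k = math.tan(math.pi * fc / FS)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b1, b2 = 2.0 * b0, b0
    a1 = 2.0 * (k * k - 1.0) * norm
    a2 = (1.0 - math.sqrt(2.0) * k + k * k) * norm
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for v in x:
        out = b0 * v + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, v
        y2, y1 = y1, out
        y.append(out)
    return y


def pulse_amplitudes(noise, signal):
    """Half-wave rectify, lowpass at 500 Hz, then sample once per carrier pulse."""
    rectified = [max(n + s, 0.0) for n, s in zip(noise, signal)]
    smoothed = butter2_lowpass(rectified, 500.0)
    stride = FS // PULSE_RATE
    # Clipping small negative filter overshoot is an assumption of this sketch.
    return [max(v, 0.0) for v in smoothed[::stride]]


rng = random.Random(0)
n = round(DUR * FS)
noise = narrowband_noise(n, rng)            # identical noise at both ears (No)
left = pulse_amplitudes(noise, tone(n, -8.0, 0.0))
right_noso = pulse_amplitudes(noise, tone(n, -8.0, 0.0))        # signal in phase
right_nospi = pulse_amplitudes(noise, tone(n, -8.0, math.pi))   # signal inverted
```

In the NoSo case the two amplitude sequences are identical, whereas in the NoSπ case they differ only during the 300 ms in which the signal is present.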

In experiment 1, current levels were set as a fraction of the most comfortable level linearly derived from the input signal. In experiment 2, the top 30 dB of the acoustic input dynamic range was compressed into the subject's electric dynamic range in a method based on the standard mapping of a CI24M device (Cochlear Limited 1999). Our fit to the standard loudness growth map used the following equation:

$$y = \operatorname{round}\left[\left(1 - e^{-5.09x}\right)(\mathrm{MCL} - \mathrm{THR}) + \mathrm{THR}\right]$$

where x is the input level normalized to 1 and y is the output expressed in clinical units (CUs) from 0 to 255.

Levels 30 dB down from the top of the input dynamic range are dropped as is done in the clinical mapping (Seligman, personal communication). This equation provides a good fit to the clinical map. Over the average dynamic range of 77 CUs, our map deviates from the standard clinical map by a root mean square (RMS) difference of 5 CUs. In the top 10 dB of the acoustic dynamic range, our mapping elevates the levels slightly compared to the standard clinical map and below that point our levels are reduced. Given that different devices have used different compression schemes (e.g., logarithmic and power function), and that measures of THR and most comfortable loudness (MCL) can differ with repeated testing of the same patients, we consider these differences to be negligible.
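As a quick check of how the fitted loudness growth equation behaves, it can be written as a small Python function. The THR and MCL values below are hypothetical (chosen to span the 77-CU average dynamic range mentioned above), and the function name is an assumption of this sketch; how input levels in dB are normalized to x is not specified here, so x is taken as given.

```python
import math


def loudness_map(x, thr, mcl):
    """Map a normalized input level x (0..1) to clinical units between thr and mcl."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be normalized to the range 0..1")
    return round((1.0 - math.exp(-5.09 * x)) * (mcl - thr) + thr)


# Hypothetical subject: threshold at 100 CU, most comfortable level at 177 CU.
THR, MCL = 100, 177
for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f} -> {loudness_map(x, THR, MCL)} CU")
```

The function is compressive: most of the electric dynamic range is used up by the lower part of the normalized input range, and the output saturates near MCL as x approaches 1.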

Four bilateral CI users with the CI24M device were tested. The subjects had been without binaural hearing before their second CI for between 8 and 13 years (Table 1). Approval from the local research ethics committee had been previously obtained. Synchronized stimuli (<1 μs resolution) were presented to the two devices using custom software driving the SPEAR3 experimental processor (HearWorks Pty Ltd). All subjects showed thresholds for detecting interaural time differences (ITDs) of less than 500 μs on the electrode pair used for all testing. A three-alternative forced-choice task, with a fixed SNR per block of 72 trials, was used. Feedback was provided.

Experiments 2b and 2c investigated the nature of the interaural time-varying cues introduced by the signal. Examination of the right-hand part of Figure 1 reveals that these cues operate on two broad time scales. One of these, which we have already referred to, is indicated by the solid vertical line; the Sπ signal introduces a timing difference between the individual pulses in the two ears. These differences are produced by the changes in the fine structure of the narrowband noise modulator. In addition, changes in the modulator envelope produce time differences over a longer time scale; this is most easily seen in Figure 2a, which shows the NoSπ condition over a 200-ms period. Experiments 2b and 2c examined the contribution of these slowly varying cues by introducing additional processing (Fig. 3). In experiment 2b, the additional stages involved (1) extracting the low-frequency (below 50 Hz) envelope of the left and right ear signals and (2) multiplying the right ear signal by the left ear envelope divided by the right ear envelope. As shown in Figure 2b, this caused the slowly fluctuating aspects of the stimulus to become more similar in the two ears (compare with Fig. 2a). This is not surprising because the bandwidth of the noise modulator was only 50 Hz, and so the fluctuations in the modulator envelope would have been greatest for rates below 25 Hz. Experiment 2c was similar, except that the filter cutoff was set to 10 Hz rather than 50 Hz (Fig. 2c). Only the NoSπ condition was tested in experiments 2b and 2c, because the NoSo condition is not altered by the above processing.

Figure 2. (a) An NoSπ-compressed stimulus shown at the threshold (SNR=−8 dB) demonstrates the presence of ITDs and time-varying interaural level differences. (b) Envelope of the right-electrode stimulus after processing to remove interaural envelope amplitude variations below 50 Hz more closely resembles that of the left in panel (a). (c) Envelope of the right-electrode stimulus after processing to remove interaural envelope amplitude variations below 10 Hz bears an intermediate resemblance to that on the left in panel (a). Horizontal lines represent the THR and MCL. The envelope was obtained from the smoothed local maxima (n=3) on the nonzero values.

Figure 3. Schematic layout of processing and representation of signals presented to both ears with additional envelope information removed. This is a modified version of the block diagram in Figure 1.
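The envelope-equalization step used in experiments 2b and 2c (multiplying the right-ear signal by the ratio of the left-ear to the right-ear low-frequency envelope) can be illustrated with the following minimal Python sketch. It operates on per-pulse amplitude sequences sampled at 1000 per second. The one-pole lowpass used to extract the slow envelope, the small floor that guards against division by zero, and the function names are all assumptions made for this example; the filter actually used in the study is not described in that detail here.

```python
import math

PULSE_RATE = 1000.0  # amplitude samples per second (one per carrier pulse)


def one_pole_lowpass(x, fc, fs=PULSE_RATE):
    """First-order lowpass, used here as a stand-in slow-envelope extractor."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * fc / fs)
    y, state = [], x[0]
    for v in x:
        state += alpha * (v - state)
        y.append(state)
    return y


def equalize_slow_envelope(left, right, fc, floor=1e-9):
    """Scale the right-ear signal so its slow envelope (below fc) matches the left."""
    env_left = one_pole_lowpass(left, fc)
    env_right = one_pole_lowpass(right, fc)
    return [r * el / max(er, floor)
            for r, el, er in zip(right, env_left, env_right)]


# Toy input: a 4-Hz fluctuating envelope, with the right ear 6 dB lower.
left = [1.0 + 0.5 * math.sin(2.0 * math.pi * 4.0 * i / PULSE_RATE)
        for i in range(400)]
right = [0.5 * v for v in left]

right_2b = equalize_slow_envelope(left, right, fc=50.0)  # cutoff as in experiment 2b
right_2c = equalize_slow_envelope(left, right, fc=10.0)  # cutoff as in experiment 2c
```

For this toy input the level difference is constant, so either cutoff removes it entirely and the processed right-ear sequence matches the left. With real NoSπ stimuli, the 10-Hz cutoff leaves interaural fluctuations between 10 and 50 Hz in place, which is the contrast the two experiments were designed to test.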

Results

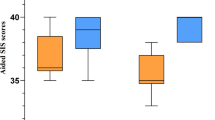

For all results presented in this article, decibels are defined in terms of the stimulus level prior to any compression. Figure 4a shows subject CI2's psychometric functions for the detection of an in-phase and an out-of-phase signal in experiment 1, in which the stimuli were not compressed. We fitted a probit function to each psychometric function and defined the BMLD as the difference in dB between the SNRs at which the two best-fitting functions reached 70% correct. A comparison of the open and gray boxes in Figure 4b shows that this BMLD was very large and highly significant (p ≪ 0.01) for all four subjects. The average value was 29 dB. In addition, instead of inverting the signal to one ear, we delayed it by 600 μs, an interaural time delay that occurs in real life, and obtained a BMLD of 15 dB for the three subjects (CI2, CI3, CI4) tested.

Figure 4. (a) Difference between the percent correct scores with NoSo (dashed line) and NoSπ (solid line) is large and significant as shown in these psychometric functions for detection of a signal for subject CI2. (b) Each subject's NoSo threshold is significantly higher than his NoSπ threshold. The vertical axis shows the 70% correct threshold as derived from the probit fit (with 95% confidence intervals). (c) With the inputs compressed, each subject's NoSo threshold (NoSoCompress) is significantly higher than his NoSπ threshold (NoSπCompress). The vertical axis shows the 70% correct threshold as derived from the probit fit (with 95% confidence intervals).
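The BMLD definition used here, the dB difference between the 70%-correct points of two fitted probit functions, can be made concrete with a short Python sketch. It assumes each fit is summarized by the mean and standard deviation (in dB) of a cumulative normal, with no correction for the one-in-three chance level of the three-alternative task; the exact parameterization of the fits is not given in the text, and the fit values below are hypothetical.

```python
from statistics import NormalDist


def probit_threshold(mu, sigma, p_correct=0.70):
    """SNR (dB) at which a cumulative-normal psychometric function reaches p_correct."""
    return mu + sigma * NormalDist().inv_cdf(p_correct)


def bmld(fit_noso, fit_nospi, p_correct=0.70):
    """Threshold difference (dB) between the NoSo and NoSπ fits, each a (mu, sigma) pair."""
    return (probit_threshold(*fit_noso, p_correct)
            - probit_threshold(*fit_nospi, p_correct))


# Hypothetical (mu, sigma) pairs in dB, not taken from the data.
fit_noso = (5.0, 6.0)
fit_nospi = (-24.0, 6.0)
print(f"BMLD = {bmld(fit_noso, fit_nospi):.1f} dB")
```

When the two functions have the same slope, the BMLD is simply the horizontal shift between them; when the slopes differ, the value depends on the percent-correct criterion chosen, which is why the 70% point is stated explicitly.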

However, the stimuli used in experiment 1 lack the compression normally used in CIs, which is required by the difference between the acoustic dynamic range (120 dB) and the dynamic range in electric hearing (14 dB for these subjects on average, cf. Table 1). When we modified the processing to be more like that used in a CIS strategy, by compressing the top 30 dB of the input waveform into each subject's dynamic range, the BMLD was reduced (Fig. 4c), but remained substantial (mean 9 dB) and significant (p < 0.01). This value of 9 dB corresponds to the binaural advantage that subjects would obtain for an acoustic signal in the real world presented to the inputs of CI speech processors.

The results of experiments 2b and 2c, which removed the more slowly varying interaural cues, are shown in Figure 5. When fluctuations slower than 50 Hz were equated between the two ears (Fig. 2b), the BMLD was reduced to an average of 1.3 dB, which was not significantly different from zero. However, when only those fluctuations slower than 10 Hz were removed, a substantial BMLD of 7.4 dB remained. This value was not significantly different from that obtained with no additional envelope processing.

Figure 5. With the inputs compressed, each subject's NoSo threshold (NoSoCompress) is similar to NoSπ with ILD cues below 50 Hz removed (NoSπCompressEnvF50Hz), but significantly higher than his NoSπ threshold (NoSπCompress) and NoSπ with ILD cues below 10 Hz removed (NoSπCompressEnvF10Hz). The vertical axis shows the 70% correct threshold as derived from the probit fit (with 95% confidence intervals).

Discussion

High-rate limitations on binaural processing

It is important to consider what interaural cues the subjects were using to detect the signal. One possibility that can be easily ruled out is that, on any given trial, the addition of the signal could introduce an average ILD that differed from zero, and which, because of the odd-man-out procedure, could be extracted by subjects even though its sign varied from trial to trial. One reason for rejecting this explanation comes from some additional analyses (not shown), which revealed that the absolute size of the ILD was not a function of SNR. A more direct test comes from our finding that the BMLD remained when fluctuations slower than 10 Hz were equated across the two ears. Furthermore, the fact that the BMLD obtained with this processing (experiment 2c) did not differ from that obtained with no such processing is evidence that fluctuations faster than 10 Hz were more important for the effect than were interaural fluctuations slower than that value.

A consideration of the highest envelope rates that subjects could exploit is hampered by the fact that the modulator bandwidth of 50 Hz meant that, when fluctuations slower than 50 Hz were equated across the two ears, nearly all envelope differences were eliminated. So, for example, we do not know whether, if we had used a modulator bandwidth of say 200 Hz, some BMLD would have remained in experiment 2b. Some hint of the upper limit comes from the fact that, even when the modulator envelope was equated between the two ears, fluctuations due to the noise band fine structure, centered on 125 Hz, remained. Because the BMLD was abolished under this condition, it is tempting to conclude that the interaural differences that vary at a rate of 125 Hz are too fast for the listener to exploit. However, it is worth noting that, in experiment 2b, these “fast” differences were always accompanied by slower fluctuations that had been equated in the two ears. Hence our conclusions should be restricted to the observation that a rate of 125 Hz is too fast for CI listeners to use in the presence of slower, diotic, contradicting fluctuations. It is possible that setting the time-varying ILD to zero dominated the percept such that the residual ITD information was not usable. Finally, it should be noted that the dominance of relatively slow fluctuations may be responsible for van Hoesel's (2004) finding of only a small (1.8 dB) BMLD for two listeners detecting a 500-Hz signal in a narrowband noise, processed by the Pulse Derived Timing (PDT) strategy (van Hoesel and Tyler 2003). Unfortunately, the noise bandwidth was not specified in his study, and so we do not know what envelope fluctuations would have been preserved in his stimuli.

Existing evidence for an upper limit on binaural processing comes both from research with CI listeners, and from experiments in which NH listeners are presented with transposed stimuli (Bernstein and Trahiotis 2002; van der Par and Kohlrausch 1997). Although ITD sensitivity with low-frequency pure tones in NH improves as frequency is increased from 200 to 500 Hz (Durlach and Colburn 1978), the opposite trend is observed with transposed stimuli—a finding that has also been observed in the responses of neurons in the inferior colliculus of the guinea pig (Griffin et al. 2005; Shackleton et al., 2005). In tests of ITD sensitivity to electric pulse trains presented to bilateral CIs, van Hoesel and Tyler (2003) found that performance was worse with pulse rates higher than 200 pps, compared to a rate of 50 pps. What these results point to is that there appears to be an “upper rate” limitation for binaural processing that is somehow bypassed when NH listeners are presented with low-frequency pure tones. This limitation has been successfully modeled by Bernstein and Trahiotis (2002) by using a 135-Hz lowpass filter. Although its physiological basis remains to be determined, it is worth noting that it may share a basis with a finding in pitch perception: rate discrimination of pulse trains by CI listeners is usually limited to rates below about 300 Hz, and a slightly higher limit of about 600 Hz applies to NH listeners presented with acoustic pulse trains that have been filtered so as to remove resolved harmonics (Carlyon and Deeks 2002).

Implications for binaural hearing in real life

Current clinical practice usually involves fitting the two CIs independently to the two ears. This independence means that corresponding frequency regions of the sound reaching the two ears may not drive electrodes that innervate matching regions of the two cochleae. Because the binaural system is tonotopically organized, this mismatch is disastrous for the processing of interaural timing information (Long et al. 2003; Nuetzel and Hafter 1981). It is therefore perhaps not surprising that, when wearing their speech processors, bilateral CI users do not reliably benefit from the interaural decorrelation processing that is so useful for NH listeners. The main scientific reason for the continued use of this “independent fitting” strategy has been the lack of evidence that CI users can exploit time-varying interaural differences. Our results provide a crucial “proof of concept” that such an advantage is possible. Although the binaural advantage observed here appears to be driven by relatively slow fluctuations, envelope rates in the 2–50 Hz range provide segmental cues to the manner of articulation, to voicing, and to prosody (Rosen 1992). Speech arising from different spatial locations and differing in these features will produce interaural differences that fluctuate in this range of rates, and which, according to our results, could potentially be exploited by CI users. Certainly, the size of the detection advantage observed here is comparable to the BMLDs typically obtained with NH listeners. Our results suggest the possibility that, if clinical practice were modified so that the same frequency range excited “matched” electrodes in the two ears, then the binaural advantage observed here could generalize to a multichannel, suprathreshold task such as speech understanding in noise. A major caveat is that such a generalization has yet to be clearly demonstrated. Additional requirements may include ensuring that no misleading level differences are introduced by the automatic gain controls in each device; using carrier pulse rates of at least 1000 pps, so as to reduce sensitivity to the fine-structure cues of the carrier; and using relatively low modulation filter cutoffs.

Plasticity and deprivation in binaural auditory processing

As with many other forms of sensory processing, the binaural auditory system has been shown to adapt to changes in the input it receives. Hofman et al. (1998) required NH listeners to listen through modified pinnae, which altered the mapping between the filtered waveform reaching the inner ear and source location, for a period of up to six weeks. Subsequently, subjects were sensitive to location cues based on both the new and the old maps. Florentine (1976) showed that an earplug in one ear produced shifts in azimuthal localization after the plug had been removed. Robinson and Gatehouse (1995) reported that patients with symmetrical bilateral hearing loss, who wore an aid in one ear only, were subsequently better at intensity discrimination at low levels in their unaided ear, and at high levels in their aided ear. Perhaps of most relevance to the present results, Hogan et al. (1996) reported that children who had experienced extended periods of unilateral otitis media showed reduced BMLDs initially, but not when tested 6 years later. What our results show is that adult patients can either maintain some sensitivity to time-varying interaural cues over long periods of binaural deprivation (between 8 and 13 years for the subjects tested) or recover this ability after binaural hearing has been restored. As noted above, this sensitivity is either restricted to fluctuations slower than about 50 Hz, or is at least dominated by these slower fluctuations when they exist. Whether subjects retain or regain binaural sensitivity could be explored in future studies, by testing patients at different times after their second implant. In this regard, it is worthwhile to take note of Eddington's (2005) work, which indicated that the subset of electrode pairs that produce a fused image can change over the months after activation of a second implant.

References

Akeroyd MA, Summerfield AQ. Integration of monaural and binaural evidence of vowel formants. J. Acoust. Soc. Am. 107:3394–3406, 2000.

Bernstein LR, Trahiotis C. Enhancing sensitivity to interaural delays at high frequencies by using “transposed stimuli.” J. Acoust. Soc. Am. 112:1026–1036, 2002.

Bronkhorst A, Plomp R. The effect of head-induced interaural time and level differences on speech intelligibility in noise. J. Acoust. Soc. Am. 83:1508–1517, 1988.

Carlyon RP, Deeks JM. Limitations on rate discrimination. J. Acoust. Soc. Am. 112:1009–1025, 2002.

Cochlear Limited. Nucleus Technical Reference (Manual Z43470 Issue I), 1999.

Culling JF, Colburn HS. Binaural sluggishness in the perception of tone sequences and speech in noise. J. Acoust. Soc. Am. 107:517–527, 2000.

Durlach N, Colburn H. Binaural phenomena. In: Carterette E and Friedman M (eds.) Handbook of Perception, Volume IV: Hearing. New York, Academic Press, pp. 365–466, 1978.

Eddington D, Poon B, Noel V. Changes in fusion and localization performance when transitioning from monolateral to bilateral listening, Conference on Implantable Auditory Prostheses; Pacific Grove, California, 2005.

Florentine M. Relation between lateralization and loudness in asymmetrical hearing losses. J. Am. Audiol. Soc. 1:243–251, 1976.

Griffin SJ, Bernstein LR, Ingham NJ, McAlpine D. Neural sensitivity to interaural envelope delays in the inferior colliculus of the guinea pig. J. Neurophysiol. 93:3463–3478, 2005.

Hirsh IJ. The influence of interaural phase on interaural summation and inhibition. J. Acoust. Soc. Am. 20:536–544, 1948.

Hofman PM, Van Riswick JG, Van Opstal AJ. Relearning sound localization with new ears. Nat. Neurosci. 1:417–421, 1998.

Hogan SC, Meyer SE, Moore DR. Binaural unmasking returns to normal in teenagers who had otitis media in infancy. Audiol. Neurootol. 1:104–111, 1996.

Litovsky RY, Parkinson A, Arcaroli J, Peters R, Lake J, Johnstone P, Yu G. Bilateral cochlear implants in adults and children. Arch. Otolaryngol. Head Neck Surg. 130:648–655, 2004.

Long CJ, Eddington DK, Colburn HS, Rabinowitz WM. Binaural sensitivity as a function of interaural electrode position with a bilateral cochlear implant user. J. Acoust. Soc. Am. 114:1565–1574, 2003.

McDermott HJ, McKay CM, Vandali AE. A new portable sound processor for the University-of-Melbourne nucleus limited multielectrode cochlear implant. J. Acoust. Soc. Am. 91:3367–3371, 1992.

Nuetzel J, Hafter E. Discrimination of interaural delays in complex waveforms: spectral effects. J. Acoust. Soc. Am. 69:1112–1118, 1981.

Palmer AR, Shackleton TM. The physiological basis of the binaural masking level difference. Acta Acustica 88:312–319, 2002.

Robinson K, Gatehouse S. Changes in intensity discrimination following monaural long-term use of a hearing aid. J. Acoust. Soc. Am. 97:1183–1190, 1995.

Rosen S. Temporal Information in speech: acoustic, auditory, and linguistic aspects. In: Carlyon R, Darwin C and Russell I (eds.) Processing of Complex Sounds by the Auditory System. Oxford, Clarendon Press, pp. 73–79, 1992.

Schleich P, Nopp P, D'Haese P. Head shadow, squelch, and summation effects in bilateral users of the MED-EL COMBI 40/40+ cochlear implant. Ear Hear 25:197–204, 2004.

Shackleton TM, Arnott RH, Palmer AR. Sensitivity to interaural correlation of single neurons in the inferior colliculus of guinea pigs. J. Assoc. Res. Otolaryngol. 1–16, 2005.

Smith ZM, Delgutte B, Oxenham AJ. Chimaeric sounds reveal dichotomies in auditory perception. Nature 416:87–90, 2002.

van der Par Z, Kohlrausch A. A new approach to comparing binaural masking level differences at low and high frequencies. J. Acoust. Soc. Am. 101:1671–1680, 1997.

van Hoesel R. Exploring the benefits of bilateral cochlear implants. Audiol. Neurootol. 9:234–246, 2004.

van Hoesel R, Tyler R. Speech perception, localization, and lateralization with bilateral cochlear implants. J. Acoust. Soc. Am. 113:1617–1630, 2003.

Vollmer M, Leake PA, Beitel RE, Rebscher SJ, Snyder RL. Degradation of temporal resolution in the auditory midbrain after prolonged deafness is reversed by electrical stimulation of the cochlea. J. Neurophysiol. 93:3339–3355, 2005.

Wilson BS, Finley CC, Lawson DT, Wolford RD, Eddington DK, Rabinowitz WM. Better speech recognition with cochlear implants. Nature 352:236–238, 1991.

Zurek PM. Binaural advantages and directional effects in speech intelligibility. In: Hockberg I (ed.) Acoustical Factors Affecting Hearing Aid Performance, Boston, Allyn and Bacon, pp. 255–276, 1993.

Acknowledgments

We thank H. Cooper, C. Fielden, and the team at the Hearing Assessment and Rehabilitation Centre in Birmingham for access to patients and for their help. Fan-Gang Zeng and an anonymous reviewer provided helpful comments on a previous version of this article. We thank the patients for their hard work. In addition, we acknowledge the support of the Royal National Institute for Deaf People and Deafness Research, UK.

Cite this article

Long, C.J., Carlyon, R.P., Litovsky, R.Y. et al. Binaural Unmasking with Bilateral Cochlear Implants. JARO 7, 352–360 (2006). https://doi.org/10.1007/s10162-006-0049-4
