Abstract

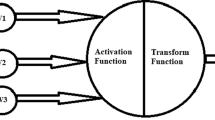

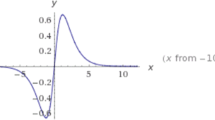

Activation functions (AFs) are a basic building block of the neural network architectures used in real-world problems to model and learn complex relationships between variables. They process the input arriving at a network and produce the corresponding output. The kernel-based activation function (KAF) is an extended version of the ReLU and sigmoid AFs. Consequently, KAF faces the same problems during learning: bias shift originating from the negative region, vanishing gradients, limited adaptability and flexibility, and neuron death. In this study, the hybrid AFs KAF + RSigELUS and KAF + RSigELUD, which are extended versions of KAF, are proposed. Both use the Gaussian kernel function and are effective in the positive, negative, and linear activation regions. Their performance was evaluated on the MNIST, Fashion MNIST, CIFAR-10, and SVHN benchmark datasets. The experimental results show that the proposed AFs overcome the problems listed above and outperform the ReLU, LReLU, ELU, PReLU, and KAF AFs.
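For readers unfamiliar with kernel-based activations, the following is a minimal sketch of a plain KAF with a Gaussian kernel, written as a weighted sum of kernel evaluations over a fixed dictionary, f(s) = Σ_i α_i exp(−γ (s − d_i)²). It is not the proposed KAF + RSigELU hybrid, whose exact form is not given in this abstract. The function name `gaussian_kaf`, the dictionary size, the bandwidth `gamma`, and the random mixing coefficients are all assumptions chosen for illustration; in practice the coefficients would be learned.

```python
import numpy as np

def gaussian_kaf(s, alpha, dictionary, gamma=1.0):
    """Evaluate f(s) = sum_i alpha_i * exp(-gamma * (s - d_i)**2) elementwise."""
    s = np.asarray(s, dtype=float)
    # Broadcast each input against every dictionary point: shape (..., D).
    diff = s[..., None] - dictionary
    kernel = np.exp(-gamma * diff ** 2)
    # Weighted sum over the dictionary axis.
    return kernel @ alpha

# Illustrative (assumed) settings: 20 dictionary points spread over [-3, 3].
dictionary = np.linspace(-3.0, 3.0, 20)
gamma = 1.0

# Mixing coefficients would normally be trainable; random values stand in here.
rng = np.random.default_rng(0)
alpha = rng.normal(scale=0.3, size=dictionary.shape)

x = np.linspace(-2.0, 2.0, 5)
y = gaussian_kaf(x, alpha, dictionary, gamma)
```

Because the shape of the activation is determined by `alpha`, the same function can approximate different nonlinearities in each neuron, which is the flexibility that kernel-based AFs aim for.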

Ethics declarations

Conflict of interest

We, the authors, have no conflict of interest and have no financial or personal relationships with other people or organizations that could inappropriately influence the presented work.

About this article

Cite this article

Kiliçarslan, S., Celik, M. KAF + RSigELU: a nonlinear and kernel-based activation function for deep neural networks. Neural Comput & Applic 34, 13909–13923 (2022). https://doi.org/10.1007/s00521-022-07211-7

DOI: https://doi.org/10.1007/s00521-022-07211-7