Abstract

Background

Automatic surgical workflow recognition is a key component in developing context-aware computer-assisted surgery (CA-CAS) systems. However, automatic surgical phase recognition focused on colorectal surgery has not been reported. We aimed to develop a deep learning model for automatic surgical phase recognition based on laparoscopic sigmoidectomy (Lap-S) videos, which could be used for real-time phase recognition, and to clarify the accuracy of automatic surgical phase and action recognition using visual information.

Methods

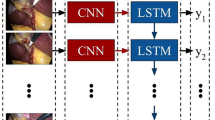

The dataset comprised 71 Lap-S cases. Each video was split into static frames at 1/30-s intervals. Every Lap-S video was manually divided into 11 surgical phases (Phases 0–10), and surgical actions were manually annotated on every frame. A convolutional neural network (CNN)-based deep learning model was generated from the training data and validated on a set of unseen test data.
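As a rough illustration of the frame-level labeling described above, the following sketch expands manually marked phase segments into one phase label per frame at 30 frames per second. The segment format, the example times, and the helper name `segments_to_frame_labels` are hypothetical and are not taken from the study.

```python
FPS = 30          # one frame every 1/30 s, as in the dataset description
NUM_PHASES = 11   # Phases 0-10


def segments_to_frame_labels(segments, fps=FPS):
    """Expand (start_s, end_s, phase) segments into one phase label per frame.

    Assumes (hypothetically) that segments are ordered, contiguous,
    and given in seconds from the start of the video.
    """
    labels = []
    for start_s, end_s, phase in segments:
        if not 0 <= phase < NUM_PHASES:
            raise ValueError(f"phase {phase} is outside 0-{NUM_PHASES - 1}")
        # Number of frames that fall inside this segment.
        n_frames = round(end_s * fps) - round(start_s * fps)
        labels.extend([phase] * n_frames)
    return labels


# Made-up example: 4 s of video covering Phase 0, Phase 1, then Phase 0 again.
example_segments = [(0.0, 2.0, 0), (2.0, 3.5, 1), (3.5, 4.0, 0)]
frame_labels = segments_to_frame_labels(example_segments)
print(len(frame_labels))  # 120 frames for 4 s of video
```

A per-frame label list of this kind is one plausible input format for training and evaluating a frame-wise classifier; the actual annotation format used in the study is not described in the abstract.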

Results

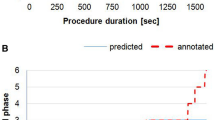

The mean surgical time was 175 min (± 43 min SD), and the duration of individual surgical phases also varied widely between cases. Each surgery started in the first phase (Phase 0) and ended in the last phase (Phase 10), with phase transitions occurring 14 (± 2 SD) times per procedure on average. The accuracy of automatic surgical phase recognition was 91.9%, and the accuracies of automatic surgical action recognition for extracorporeal action and irrigation were 89.4% and 82.5%, respectively. Moreover, the system could perform real-time automatic surgical phase recognition at 32 fps.
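The quantities reported above can be illustrated with a small sketch. It assumes that accuracy means the fraction of frames whose predicted label matches the manual annotation (the abstract does not state the exact definition), that a phase transition is any change of label between consecutive frames, and that "real-time" means processing at least as fast as the 30-fps source video. The function names and toy label sequences are hypothetical.

```python
def frame_accuracy(predicted, annotated):
    """Fraction of frames where the predicted label equals the annotation."""
    if len(predicted) != len(annotated) or not annotated:
        raise ValueError("label sequences must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predicted, annotated))
    return correct / len(annotated)


def count_phase_transitions(labels):
    """Number of times the phase label changes between consecutive frames."""
    return sum(prev != cur for prev, cur in zip(labels, labels[1:]))


def keeps_up_with_video(inference_fps, video_fps=30.0):
    """True if frames are processed at least as fast as they arrive."""
    return inference_fps >= video_fps


# Toy per-frame phase labels (made up for illustration only).
annotated = [0, 0, 1, 1, 1, 2, 2, 1, 1, 10]
predicted = [0, 0, 1, 1, 2, 2, 2, 1, 1, 10]

print(frame_accuracy(predicted, annotated))   # 0.9
print(count_phase_transitions(annotated))     # 4
print(keeps_up_with_video(32.0))              # True
```

Under these assumptions, 32 fps corresponds to 1/32 s = 31.25 ms per frame, which is within the roughly 33.3 ms available between frames of 30-fps video.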

Conclusions

The CNN-based deep learning approach enabled the recognition of surgical phases and actions in 71 Lap-S cases based on manually annotated data. The system performed automatic surgical phase recognition and automatic target surgical action recognition with high accuracy. Moreover, this study showed the feasibility of real-time automatic surgical phase recognition at a high frame rate.

Funding

This research was supported by the Japan Agency for Medical Research and Development (AMED) under Grant Number JP18he1802002.

Ethics declarations

Disclosures

Daichi Kitaguchi, Nobuyoshi Takeshita, Hiroki Matsuzaki, Hiroki Takano, Yohei Owada, Tsuyoshi Enomoto, Tatsuya Oda, Hirohisa Miura, Takahiro Yamanashi, Masahiko Watanabe, Daisuke Sato, Yusuke Sugomori, Seigo Hara, and Masaaki Ito have no conflicts of interest or financial ties to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Kitaguchi, D., Takeshita, N., Matsuzaki, H. et al. Real-time automatic surgical phase recognition in laparoscopic sigmoidectomy using the convolutional neural network-based deep learning approach. Surg Endosc 34, 4924–4931 (2020). https://doi.org/10.1007/s00464-019-07281-0

DOI: https://doi.org/10.1007/s00464-019-07281-0