Abstract

The inhomogeneous Poisson point process is a common model for time series of discrete, stochastic events. When an event from a point process is detected, it may trigger a random dead time in the detector, during which subsequent events will fail to be detected. It can be difficult or impossible to obtain a closed-form expression for the distribution of intervals between detections, even when the rate function (often referred to as the intensity function) and the dead-time distribution are given. Here, a method is presented to numerically compute the interval distribution expected for any arbitrary inhomogeneous Poisson point process modified by dead times drawn from any arbitrary distribution. In neuroscience, such a point process is used to model trains of neuronal spikes triggered by the detection of excitatory events while the neuron is not refractory. The assumptions of the method are that the process is observed over a finite observation window and that the detector is not in a dead state at the start of the observation window. Simulations are used to verify the method for several example point processes. The method should be useful for modeling and understanding the relationships between the rate functions and interval distributions of the event and detection processes, and how these relationships depend on the dead-time distribution.

Similar content being viewed by others

1 Introduction

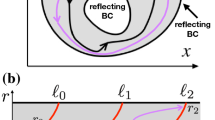

The inhomogeneous Poisson point process is commonly used to model time series of discrete, stochastic events. It is applied to diverse phenomena, such as in the fields of neuroscience (e.g., Siebert 1970; Srulovicz and Goldstein 1983; Brown et al. 1998; Liu et al. 2001; Amarasingham et al. 2006), optical communications (e.g., Vannucci and Teich 1978; Drost et al. 2015; Verma and Drost 2017), and particle physics (e.g., Müller 1981b). An inhomogeneous Poisson point process has a time-varying rate and generates a sequence of events that occur at random times (Snyder 1975; Cox and Isham 1980). In the ideal case, all of the events can be observed using a suitable detector. In reality, a subset of events often fails to be observed due to a dead time in the detector (Müller 1981a; Grupen and Shwartz 2008; Picinbono 2009). One must therefore distinguish between two point processes: one of which describes the “events” themselves and the other of which describes the “detections” (i.e., the subset of events that is detected). An example from biology is the firing of spikes (i.e., action potentials) by a postsynaptic neuron in response to the release of excitatory neurotransmitter by a presynaptic neuron (with the further simplifying assumption that each neurotransmitter release event triggers a spike unless the postsynaptic neuron is in a dead state): the event process corresponds to the times at which neurotransmitter is released by the presynaptic neuron, the detection process corresponds to the times at which the released neurotransmitter triggers spikes in the postsynaptic neuron, and the dead time arises from a physiological property of neurons, known as the refractory period, that prevents spikes from being generated too soon after the previous spike (Hodgkin and Huxley 1952). An example from physics is the counting of photons by a sensor: the event process corresponds to the times at which photons arrive at the sensor, the detection process corresponds to the times at which the sensor detects photons, and the dead time arises from a property of the sensor that prevents photons from being detected if they arrive too soon after the previous detection. For simplicity, it is often assumed that all dead times in a given process have the same fixed duration (e.g., Müller 1981a; Teich 1985; Teich and Khanna 1985; Picinbono 2009), but some processes are better described by dead times with random durations drawn from a fixed distribution (e.g., Teich et al. 1978; Müller 1981a; Young and Barta 1986; Deger et al. 2010; Peterson and Heil 2018). A distinction is made between “paralyzable” and “nonparalyzable” detectors (Müller 1981a). With a paralyzable detector, an event that occurs during a dead time causes the dead time to be prolonged. With a nonparalyzable detector, an event that occurs during a dead time simply fails to be detected, but the dead time is not prolonged. Only the nonparalyzable case, as would apply to neurons, is considered here. Although a detection is synonymous with a spike in the context of a neuronal spike train, the more general term “detection” is used here to emphasize the broader applicability of the work.

Figure 1 demonstrates how an inhomogeneous event process can be modified by random dead times to yield a detection process. Figure 1a shows the time-varying event rate that defines the process. This rate function (also known as the intensity function) determines the probability of an event occurring at each time point in the observation window. Figure 1b shows stochastic event times in such a process. Figure 1c shows random dead times and indicates the subset of events (marked by “x”) that fall into each dead time and are therefore not detected. For illustrative purposes, the dead times are relatively long so that many events fail to be detected. Figure 1d shows all events that do not fall into a dead time and are therefore detected. Each detection triggers the start of a new random dead time. Figure 1e shows the first-order intervals between detections. The statistical properties of point processes are often investigated by computing the distributions of such intervals (e.g., Johnson 1978; Wilbur and Rinzel 1983; Gummer 1991; Shcherbakov et al. 2005; Kroó et al. 2007; Nawrot et al. 2008; Arkani and Raisali 2015; Pommé et al. 2015). However, except in very specific cases, typically involving a homogeneous Poisson point process or other simple renewal processes, it is difficult or impossible to obtain a closed-form expression to describe the interval distribution (Picinbono 2009).

modified by random dead times. a Time-varying event rate of the point process. b Sequence of stochastic events generated by the process. c Random dead times, with each undetected event marked by an “x.” d Sequence of stochastic detections. e First-order intervals between detections

An inhomogeneous Poisson point process

Here, a numerical method is presented for computing the interval distribution expected for any arbitrary inhomogeneous Poisson point process modified by dead times drawn from any arbitrary distribution. The interval distributions obtained with this method are intended to be analogous to distributions computed from experimental data. Because experimental data are collected over a finite observation window (or several repetitions of a finite window), empirical interval distributions are distorted by the fact that an interval between detections cannot exceed the time remaining in the observation window and therefore cannot be arbitrarily long. This distortion effect is known as right censoring and leads to an overrepresentation of short intervals and underrepresentation of long intervals (e.g., Wiener 2003; Nawrot et al. 2008). Nonetheless, to maintain equivalence with experimentally obtained interval distributions, the numerical method must also reproduce the right censoring caused by the finite observation window. The numerical method presented here does this and is therefore applicable to a variety of experimentally observed phenomena in several fields of study.

2 Methods

The numerical method presented below was implemented in MATLAB R2019b and is publicly available in an online code repository (Peterson 2020). The method has been divided into several algorithms, with the goal of presenting each algorithm as straightforwardly as possible without regard for computational demand. Those algorithms found to be computationally demanding were then reimplemented using more optimized but less comprehensible MATLAB code. One particularly demanding computation (Eq. 8) was reimplemented using C code and compiled to a MATLAB MEX file to enable faster execution. All implementations of each algorithm are included in the online repository, along with a demonstration of their equivalence.

The following notations and conventions are used throughout:

-

1.

The observation window of the point process spans the interval from tmin to tmax and is divided into bins of width Δt. Each bin is referenced by the time point at its right edge, denoted ti, where i is the index of the bin within the observation window. For an observation window starting at tmin = 0 s, the time point marking the first bin is t1 = Δt.

-

2.

Durations in the dead-time distribution are denoted dj, where j is the index of a bin within the distribution. For the numerical method presented here, the shortest duration that needs to be considered is d1 = Δt.

-

3.

Waiting times in the forward recurrence distributions or intervals in the interval distributions are denoted wk, where k is the index of a bin within the distribution. For the numerical method presented here, the shortest waiting time or interval that needs to be considered is w1 = Δt.

-

4.

For simplicity, the bin indices i, j, and k are omitted from the figures, captions, and occasionally elsewhere.

Before describing each step of the numerical method below, it is necessary to define several prerequisite functions. For clarity, all prerequisites and algorithm steps are demonstrated using one example point process of events and one example dead-time distribution.

2.1 Prerequisite 1: The rate (or probability) function of the detection process

To compute the interval distribution expected for an inhomogeneous Poisson point process modified by random dead times, it is necessary to know the rate of the detection process over the observation window (i.e., the rate obtained from experimental measurements). The numerical method presented here will work for any arbitrary rate function. Figure 2 shows the example rate Rdetection (in detections/s) of the detection process used to demonstrate the method. Here, the observation window is 5 ms long and the time step is Δt = 0.1 ms. Note that multiplying the rate function by Δt (in seconds) will convert it to an equivalent probability function pdetection, which is also shown (right axis).

2.2 Prerequisite 2: The distribution of dead times

To compute the interval distribution expected for an inhomogeneous Poisson point process modified by random dead times, it is also necessary to know the distribution from which the dead times are drawn. The numerical method presented here will work for any arbitrary distribution, as long as the random dead times are independent and drawn from the same distribution. Throughout the demonstrations below, the dead time is a random variable ddead equal to the sum of a fixed duration dfixed and a random duration drand drawn from the geometric distribution with a mean of μrand. This is the discrete equivalent of a continuous distribution of dead times consisting of a fixed portion and a random portion drawn from the exponential distribution, such as was used by Young and Barta (1986) and Peterson and Heil (2018). The cumulative distribution function (CDF) for ddead (i.e., the probability that ddead is shorter than or equal to duration dj) is given by

This distribution is equivalent to the cumulative geometric distribution \(G(k)=1-{\left(1-p\right)}^{k}\) (with success probability p and trial k ∈ {1, 2, 3,…}) but is parameterized in terms of time and is delayed by dfixed. Figure 3a shows the CDF for the example dead-time distribution (with dfixed = 0.5 ms and μrand = 0.5 ms). The survivor function for ddead (i.e., the probability that ddead is longer than duration dj) is given by the complement of the CDF,

Figure 3b shows the survivor function for the example dead-time distribution.

The probability mass function (PMF) for ddead (i.e., the probability that ddead is equal to duration dj) is given by

Figure 3c shows the PMF for the example dead-time distribution. Note that, as the time step decreases toward 0 ms, the PMF approaches the continuous probability density function (PDF) for a dead time having a random portion drawn from the exponential distribution (not shown).

With the rate of the detection process and the dead-time distribution specified, it is now possible to proceed to the first step in the numerical method.

2.3 Step 1: Computing the rate (or probability) function of the event process

For the numerical method presented here, it is necessary to know the probability pevent of an event at each time point in the observation window. If the dead-time distribution is known, then it is possible to compute pevent from pdetection. This computation requires knowing the probability pdead that the detector is in a dead state at each time point in the observation window, given by

where Sdead(d) is the survivor function for the dead times (i.e., the probability that ddead is longer than duration d), th = h⋅Δt is a time point of a bin preceding bin i, and di-h = (i−h)⋅Δt is a dead-time duration. For simplicity, the process is assumed to be free from dead-time effects at the first time point in the observation window. For each subsequent time point ti, the value of pdead(ti) can be obtained from the probability pdetection of a detection at each previous time point th and the probability Sdead(di-h) that the dead time following each previous detection would extend through time point ti. More precisely, the contribution of a previous time point th to pdead at each subsequent time point ti is given by the joint probability pdetection(th)·Sdead(di-h). Figure 4a shows how each time point in the example process (marked by a symbol) contributes to pdead at all later time points (corresponding gray line). The summation of the joint probabilities in Eq. 4 is equivalent to the binwise sum of the individual joint probability functions in Fig. 4a. Figure 4b shows the resultant pdead(ti) computed for the example process. Note that Eq. 4 is nothing other than the convolution of pdetection and Sdead and could be replaced by a more efficient algorithm such as convolution based on the fast Fourier transform.

Computing the event rate. a Each time point (marked by symbols) contributes to the probability pdead of the detector being in a dead state during all time points that follow. The contribution of each time point (gray lines) is given by the dead-time survivor function Sdead (Fig. 3b) scaled by probability pdetection of a detection at the preceding time point. b The overall pdead function during the observation window, computed with Eq. 4, is equivalent to the binwise sum of the individual contributions in a. c The event probability pevent (right axis) computed with Eq. 6, which can be scaled to yield the event rate Revent (left axis)

Recall that two conditions must be met for a detection to occur: an event must occur, and the detector must not be in a dead state. The detection probability pdetection(ti) is therefore given by the joint probability

Equation 5 can be rearranged to obtain the probability pevent of an event at each time point in the observation window,

Figure 4c shows pevent(ti) computed for the example process. It is higher than pdetection(ti) (Fig. 2) at all time points except the first one. At the first point, pevent and pdetection are identical because it is assumed that the detector is not in a dead state at the start of the observation window (i.e., it is assumed that pdead(t1) = 0). Note that Eq. 6 is undefined in the unlikely case that pdead(ti) = 1, which would occur only when ti falls into the fixed portion of a dead time following a time point for which a detection is guaranteed to occur (i.e., for which pdetection = 1). In such a case, pdetection(ti) = 0 regardless of the value of pevent(ti), and the event probability would therefore be unknowable and unrecoverable.

Note that if pevent were known, rather than pdetection, then pdead and pdetection could be computed using Eqs. 4 and 5. For all time points ti following the initial point, the computation of pdead (Eq. 4) depends on the value of pdetection from all previous time points th, whereas the computation of pdetection (Eq. 5) depends on pdead from the current time point ti. This interdependence of pdead and pdetection requires that both values be computed at a given time point before proceeding to the next point. For the numerical method presented here, it is necessary to know both pevent and pdetection, no matter which of the two was known initially.

2.4 Step 2: Computing forward recurrence distributions for events

The numerical method presented here makes heavy use of forward recurrence distributions. The distribution of forward recurrence times, fevent(ti,wk), specifies the probability that, at time point ti, the waiting time (or recurrence interval) to the next event is equal to wk. More precisely, the probability that the next event will occur with a waiting time of wk equals the joint probability that there is an event at time ti + wk and that there are no events between times ti and ti + wk. At time point ti in the observation window, the forward recurrence probability fevent that the waiting time to the next event is equal to wk is given by

Here, for each time point ti, the product given by the ∏ notation computes the survivor function of the event process over the waiting times wk. Figure 5 shows selected forward recurrence distributions computed for the example process in Fig. 2. Figure 5a shows the forward recurrence distribution fevent computed for the first time point (t1 = 0.1 ms), Fig. 5b shows fevent computed for the tenth time point (t10 = 1 ms), and Fig. 5c shows fevent computed for the twentieth time point (t20 = 2 ms). For each recurrence distribution, potential waiting times range from as short as a single bin (w1 = Δt) to as long as the total number of bins remaining in the observation window (wmax = tmax − ti). Recurrence probabilities are not needed for waiting times that would extend the process beyond the edge of the observation window and are omitted; each distribution shown is therefore incomplete because the sum of its probabilities will be less than 1. For each recurrence distribution in Fig. 5, the upper time axis shows the corresponding time within the observation window. The forward recurrence distribution in Fig. 5a is one bin shorter than the observation window, with this edge marked by the gray shading beginning at w = 4.9 ms (i.e., t = 5 ms on the upper axis). As ti increases, the corresponding forward recurrence distribution becomes increasingly incomplete. Although results are shown for only three example time points, the method requires fevent to be computed for all time points in the observation window except for the final point (there is no recurrence possible after the final point, so there is no need to compute its recurrence distribution here). Note that when the event rate is periodic, as in the example (Fig. 4c), the distributions of forward recurrence times to the next event are identical for any two points separated by an integer number of periods, except that the distribution for the later point is more incomplete. This means that, for periodic event rates, the forward recurrence distributions only need to be computed for the points within the first cycle in the observation window; they can then be duplicated (incompletely) to obtain corresponding distributions for the points in each subsequent cycle. For aperiodic event rates, the forward recurrence distributions must be computed for all points in the observation window.

Example forward recurrence distributions, fevent(t,w), showing the probability that, at time point t in the process, the waiting time to the next event will equal w. a fevent for time t1 = 0.1 ms. b fevent for time t10 = 1 ms. c fevent for time t20 = 2 ms. Each distribution is computed with Eq. 7. The top axis in each panel shows the time relative to the 5-ms observation window. Gray shading marks the region missing from each distribution (i.e., the region that would extend beyond the edge of the observation window). Each distribution is therefore incomplete

2.5 Step 3: Computing forward recurrence distributions for detections

Due to the random dead times in the detection process, the distribution of forward recurrence times to the next detection cannot be computed as straightforwardly as the distribution of forward recurrence times to the next event. In the method presented here, recurrence times to the next detection after time ti are computed under the assumption that a detection has occurred at time ti. Note that, with the assumption of a detection at ti, the forward recurrence times actually represent intervals (unlike forward recurrence times in the event process, for which there was no assumption of an event at time ti). Assuming a detection at time ti, the distribution of forward recurrence times to the next detection can be computed by considering the effect of each possible dead-time duration. If the dead time following a detection at ti ends after duration dj (i.e., at time ti+j = ti + dj), two facts are apparent about the distribution of forward recurrence times to the next detection, fdetection(ti,wk). First, for any waiting time wk shorter than dj, the recurrence probability fdetection = 0. Second, for any waiting time wk longer than or equal to dj, the recurrence probability fdetection must follow the time course of fevent (Eq. 7) computed for time point ti+j (i.e., for the first point in the observation window free from the effects of the dead time). More specifically, the joint probability that a detection at time ti is followed by a dead time with duration dj (i.e., ending at time ti+j) and that the next detection occurs after waiting time wk following the detection is given by gdead(dj)·fevent(ti+j,wk). For each time point in the observation window, such a joint probability function is computed over all remaining bins. For time point ti, the forward recurrence probability fdetection is obtained by summing the respective contributions from each of the joint probability functions,

Here, wk is the waiting time following a detection at time ti and gdead(dj) is the probability that the dead time ddead has duration dj (Eq. 3 and Fig. 3c). Figure 6 shows selected results for the example process. Figure 6a shows all joint probability functions contributing to the forward recurrence distribution for the first time point (t1 = 0.1 ms), each of which originates at a time point marked by a symbol. Figure 6b shows the corresponding fdetection, equivalent to the binwise sum of all functions in Fig. 6a (note that the vertical axes differ in scaling). Figure 6c, d shows the contributing joint probability functions and fdetection for the tenth time point (t10 = 1 ms), and Fig. 6e, f shows the contributing joint probability functions and fdetection for the twentieth time point (t20 = 2 ms). Although results are shown for only three example time points, the method requires fdetection to be computed for all time points in the observation window except for the final point (there is no recurrence possible after the final point, so there is no need to compute its recurrence distribution here). Note that when the event rate is periodic, as in the example (Fig. 4c), the forward recurrence distributions only need to be computed for the points within the first cycle in the observation window and can then be duplicated (incompletely) to obtain corresponding distributions for the points in each subsequent cycle.

Example forward recurrence distributions, fdetection(t,w), showing the probability that, given a detection at time point t in the process, the waiting time to the next detection will equal w. a Contributions to fdetection following a detection at time t1 = 0.1 ms. Each possible waiting time (marked by symbols) contributes to fdetection for all time points that follow. The contribution of each waiting time w (gray lines) is given by the portion of fevent (Fig. 5a) over the interval [w, wmax], scaled by the probability gdead that the dead time has duration d = w (Fig. 3c). b The overall fdetection function for t1 = 0.1 ms, computed with Eq. 8, is equivalent to the binwise sum of all individual contributions in a. c Contributions to fdetection following a detection at time t10 = 1 ms. d The overall fdetection function for t10 = 1 ms. e Contributions to fdetection following a detection at time t20 = 2 ms. f The overall fdetection function for t20 = 2 ms. The top axis in each panel shows the time relative to the 5-ms observation window. Gray shading marks the region missing from each distribution (i.e., the region that would extend beyond the edge of the observation window). Each distribution is therefore incomplete

2.6 Step 4: Computing the interval distributions for the event process and for the detection process

It is now possible to compute the distribution of interevent intervals (IEIs) and the distribution of interdetection intervals (IDIs). The former requires knowing pevent(ti) (Eq. 6 and Fig. 4c) and the distribution of forward recurrence times to the next event for each time point in the observation window, fevent(ti,wk) (Eq. 7 and Fig. 5). The latter requires knowing pdetection(ti) (Eq. 5 and Fig. 2) and the distribution of forward recurrence times to the next detection for each time point in the observation window, fdetection(ti,wk) (Eq. 8 and Fig. 6). For an observation window having m time points, the probability pIEI of observing an IEI with duration wk is given by

Here, nIEIs = nevents − 1 + pzero is the expected number of IEIs per observation window, with nevents being the expected number of events per observation window and pzero being the probability that an observation window contains zero events. Note that if every observation window were guaranteed to contain at least one event, then the expected number of IEIs would simply be nIEIs = nevents − 1. However, observation windows containing zero events typically can occur and will yield zero IEIs rather than zero minus one IEIs, such that some portion of the 1 which was subtracted must be added back. This portion is equal to the probability that an observation window contains zero events. Here, \({n}_{\text{events}}=\left({t}_{\text{max}}-{t}_{\text{min}}\right)\cdot\left(\bar{p}_{\text{event}}/\Delta t\right)\) and \({p}_{\text{zero}}=\prod_{i=1}^{m}\left(1-{p}_{\text{event}}\left(t_i\right)\right)\), with tmax − tmin being the duration of the observation window (in seconds), \(\bar{p}_{\mathrm{event}}\) being the mean event probability in the observation window, and Δt being the time step (in seconds). Note that if pevent is a row vector with column index i and fevent is a matrix with row index i and column index k, then pIEI in Eq. 9 is given by the matrix product of pevent and fevent, normalized by nIEIs.

The corresponding probability pIDI of observing an IDI with duration wk is given by

Here, nIDIs = ndetections − 1 + pzero is the expected number of IDIs per observation window, with \({n}_{\mathrm{detections}}=\left({t}_{\mathrm{max}}-{t}_{\mathrm{min}}\right)\cdot \left(\bar{p}_{\mathrm{detection}}/\Delta t\right)\). The assumption that the detector is not in a dead state at the start of the observation window means the probability that an observation window contains zero detections is identical to the probability that it contains zero events: \({p}_{\mathrm{zero}}=\prod_{i=1}^{m}\left(1-{p}_{\mathrm{event}}\left(t_i\right)\right)\). Note that if pdetection is a row vector with column index i and fdetection is a matrix with row index i and column index k, then pIDI in Eq. 10 is given by the matrix product of pdetection and fdetection, normalized by nIDIs.

The IEI distribution (in the form of a probability mass function as in Eq. 9) can be converted to rate RIEI (in IEIs/s), by dividing each probability by the time step Δt (in seconds):

Naturally, the IDI distribution (Eq. 10) can be scaled in the same way to yield rate RIDI (in IDIs/s):

Numerically computed IEI and IDI distributions are shown below for the example process and several additional processes. Note that, in the context of a neuronal spike train, the IDI distribution can be equated with the interspike interval (ISI) distribution.

3 Results

The correctness of the method presented above will now be demonstrated for several point processes by comparing results obtained with the numerical method to results computed from stochastic simulations. Although relatively high rates are used in these demonstrations, the numerical method yields equally precise results for low rates.

3.1 Inhomogeneous periodic Poisson point process modified by random dead times

For the example inhomogeneous Poisson point process above (see Methods and Figs. 2, 3, 4, 5, 6), the event rate Revent (in events/s) is given by a sinusoid passed through an exponential function,

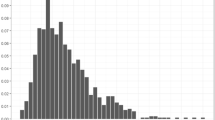

where A is a scale factor (in events/s) that specifies the event rate when the instantaneous value of the sinusoid equals zero, B is a slope factor, f is the frequency (in Hertz) of the sinusoid, and ti is a time point (in seconds) in the observation window. For the example process in Fig. 4c, A = 600 events/s, B = 1, and f = 400 Hz. Figure 7a shows an IEI distribution computed from one million simulations of the event process (gray line) and the IEI distribution obtained using the numerical method (dashed black line). Figure 7b shows the corresponding IDI distributions of the detection process. For each interval distribution, the numerical result and the simulation result are in close agreement. The other functions obtained using the numerical method (i.e., pdetection, pdead, fevent, and fdetection) are in correspondingly close agreement with the simulation results (not shown). These results show that the numerical method works correctly for the example process having a periodic event rate.

Comparison of numerical and simulation results for the example inhomogeneous Poisson point process with a periodic event rate given by Eq. 13 with A = 600 events/s, B = 1, and f = 400 Hz. a IEI distributions computed from one million simulations (gray line) and from the numerical method (dashed black line). b IDI distributions computed from simulations and from the numerical method after taking the dead-time effects into consideration, with dfixed = 0.5 ms and μrand = 0.5 ms

3.2 Inhomogeneous random-walk Poisson point process modified by random dead times

Figure 8 shows results for an inhomogeneous Poisson point process whose event rate over the observation window is aperiodic and equal to one realization of a random walk. Figure 8a shows the particular random walk used for this example. The initial rate at time t1 was 600 events/s, and the rates at later time points were obtained by cumulatively summing random values from the uniform distribution on [− 75, 75] events/s. Figure 8b shows the detection rate expected if this process were modified by dead times drawn from the example distribution (Fig. 3c). Figure 8c shows an IEI distribution computed from one million simulations of the event process (gray line) and the IEI distribution obtained using the numerical method (dashed black line). Figure 8d shows the corresponding IDI distributions. The numerical results and the simulation results are in close agreement.

Comparison of numerical and simulation results for an inhomogeneous Poisson point process with an aperiodic event rate equal to one realization of a random walk. a The particular random walk used for this example. b The expected detection rate after taking the dead-time effects into consideration, with dfixed = 0.5 ms and μrand = 0.5 ms. c IEI distributions computed from one million simulations (gray line) of the same random walk shown in a, and from the numerical method (dashed black line). d IDI distributions computed from the simulations and from the numerical method

3.3 Homogeneous Poisson point process modified by random dead times

Figure 9 shows results for a homogeneous Poisson point process. Figure 9a shows its constant event rate of 1000 events/s. Figure 9b shows the detection rate expected if the process were modified by dead times drawn from the example distribution (Fig. 3c). Figure 9c shows an IEI distribution computed from one million simulations of the event process (gray line) and the IEI distribution obtained using the numerical method (dashed black line). The IEIs in the homogeneous Poisson point process have a geometric distribution (or, equivalently, an exponential distribution in the case of a continuous-time process). The inset in Fig. 9c shows the IEI distribution from the example process plotted with a logarithmic rate axis. Due to right censoring caused by the finite observation window, this distribution deviates at longer IEIs from the straight line that would be expected for a geometric distribution. Figure 9d shows the corresponding IDI distributions. The numerical results and the simulation results are in close agreement. Although a closed-form expression is available for the continuous counterpart to this distribution (Young and Barta 1986; Heil et al. 2007; Neubauer et al. 2009), it has been derived assuming an infinitely long observation window which avoids the effects of right censoring. This closed-form solution would therefore account poorly for any experimentally obtained interval distributions that are strongly affected by right censoring. As the observation window becomes shorter and right censoring becomes more pronounced, the mean and standard deviation of the observed intervals decrease relative to those expected for the underlying process (Nawrot et al. 2008). Because a distribution computed with the numerical method presented here includes the effects of right censoring, it can be preferable to a distribution computed from a known closed-form expression.

Comparison of numerical and simulation results for a homogeneous event process. a The constant event rate of Revent = 1000 events/s. b The expected detection rate after taking the dead-time effects into consideration, with dfixed = 0.5 ms and μrand = 0.5 ms. c IEI distributions computed from one million simulations (gray line) and from the numerical method (dashed black line). d IDI distributions computed from the simulations and from the numerical method. Insets in c and d show the same distributions with a logarithmic rate axis

Although a homogeneous event process (Fig. 9a) is in equilibrium, the detection process (Fig. 9b) that arises after applying random dead times is not. The nonequilibrium nature of the detection process manifests as a detection rate that initially equals the event rate but then decreases and settles into a lower steady-state rate. The higher detection rate at the start of the observation window results from the assumption that the detector is not initially in a dead state, such that the distribution of forward recurrence times to the first detection is identical to the distribution of forward recurrence times to the first event. The lower detection rate in the steady state results from the fact that, for all detections after the first one, dead-time effects cause the probability mass in the distribution of forward recurrence times to the next detection to be shifted toward later times than in the corresponding distribution of forward recurrence times to the next event. For a homogeneous event process, the steady-state detection rate equals the inverse of the sum of the mean IEI and the mean dead time. This yields a steady-state rate of 526.3 detections/s for the example process (Fig. 9b), which has a mean IEI of (1−pevent)·(Δt/pevent) = 0.9 ms and a mean dead time of dfixed + μrand = 1 ms.

4 Discussion

The numerical method presented here yields the expected distribution of intervals between detections of events in an inhomogeneous Poisson point process, assuming the detector is nonparalyzable and each detection is followed by a dead time drawn from a fixed probability distribution. There are, however, several limitations and considerations to keep in mind, as described below.

4.1 The method models the process in discrete time

Although point processes in nature operate in continuous time, numerical computation methods necessarily treat them as though that they operate in discrete time. For a stochastic process that operates in continuous time, the IDI distribution obtained with the discrete numerical method is therefore an estimate which converges to the exact solution as the time step approaches zero. There are, however, practical limits to how brief the time step can be in the numerical method. Shortening the time step or prolonging the rate function increases the number of time points in the observation window, and the computation time of the method grows super-linearly with the number of time points. The main computational bottleneck is Eq. 8, which has a computation time that grows approximately in proportion to the cube of the number of time points. Nonetheless, to obtain an adequate description of a continuous point process, the time step in the numerical method must be sufficiently brief. At a minimum, it should be brief enough that each time step would be expected to have either zero events or one event, but not several events. It should also be brief enough that fluctuations in the rate function are not undersampled. For example, to model a process in which the rate fluctuates sinusoidally with a frequency of f Hz, the author suggests a time step of Δt ≤ (200⋅f)−1 s, corresponding to a sampling rate of at least 200 times f.

4.2 The method describes a nonequilibrium detection process

It has been shown that the numerical method can be used to compute IDI distributions for either homogeneous (Fig. 9) or inhomogeneous (Figs. 1, 2, 3, 4, 5, 6, 7, 8) event processes. In either case, the detection process is not in equilibrium, because there are transient changes in the detection rate at the start of the process before it settles into a steady state (Deger et al. 2010). This effect is most apparent for the process with a constant event rate (Fig. 9a, b), although it also occurs for the process with a periodic event rate (Figs. 4c and 2). For a process to be in equilibrium at the start of the observation window, it must have both begun and settled into its steady state prior to the start of the observation window. However, if a process began prior to the start of the observation window, then it might be in a dead state at the start of the observation window, which violates an assumption of the numerical method presented here. Adapting the method to describe an equilibrium detection process would therefore require considering the probability that each time point in the observation window is in a dead state resulting from a detection occurring prior to the observation window. The numerical method presented here is therefore suitable only for processes that begin at the start of the observation window or processes that have a negligible event probability prior to the start of the observation window. An example of such a process would be a train of spikes recorded from a neuron in response to a stimulus, provided the neuron fires no (or negligibly few) spikes in the absence of a stimulus prior to the observation window.

4.3 The method requires that the detection probability be independent of the process history prior to the previous detection

The numerical method presented here works only for processes in which the instantaneous probability of a detection depends only on the current time point in the observation window and on the time elapsed since the most recent detection (necessary for knowing the probability that the process is still in a dead state). The detection process can be either an “ordinary renewal process” (which results if the underlying event process is homogeneous such that the intervals between detections are independent and identically distributed) or an “inhomogeneous renewal process” (which results if the underlying event process is inhomogeneous such that the distribution of intervals to the next detection will depend on the rate function following the most recent detection). The numerical method can be applied in either case, so long as the probability of detection is not influenced by the process history prior to the most recent detection. The method does not work for nonrenewal processes.

4.4 The method operates in one direction only

Given a particular dead-time distribution, the numerical method can be used to work forward from the rate function to the IDI distribution. It is not possible, however, to work backward from the IDI distribution to the rate function that gave rise to it because the interval distribution contains little information about the timing of detections within the observation window (Turcott et al. 1994; Bi et al. 1988). Indeed, many different rate functions can give rise to virtually identical interval distributions. This can be demonstrated for a simple case by comparing a homogeneous Poisson point process to a “doubly stochastic” point process in which the event rate varies stochastically over time (Cox 1955). For sufficiently long observation windows, both processes can yield interval distributions that are essentially identical, despite the processes having different rate functions (Teich et al. 1990; Lowen and Teich 1991).

4.5 Previous approaches

Several investigators have presented results that relate, to varying degrees, to the present work. To the author’s knowledge, however, none has presented a method to compute the IDI distribution expected for any arbitrary inhomogeneous Poisson point process of events modified by dead times drawn from any arbitrary distribution. For example, closed-form expressions have been presented to describe the IDI distribution for the dead-time-modified homogeneous (i.e., constant-rate) Poisson point process, which in the absence of right censoring is given by the convolution of the IEI distribution and the dead-time distribution (Young and Barta 1986; Li and Young 1993; Picinbono 2009; Prijs et al. 1993; Heil et al. 2007; Neubauer et al. 2009). Although Turcott et al. (1994) provide an expression for the IDI distribution of an inhomogeneous Poisson point process, it is for the very specific case in which the rate function decays exponentially and dead times are nonrandom and inversely proportional to the instantaneous detection rate. Deger et al. (2010) present a method to compute the time-dependent hazard function (their Eq. 22) for an inhomogeneous Poisson point process modified by random dead times. If converted to the corresponding probability distribution, this hazard function is equivalent to the distribution of forward recurrence times to the next detection obtained in the present study (Eq. 8). However, Deger et al. (2010) did not proceed to compute the expected IDI distribution from this result. Yakovlev et al. (2005) present a method to compute the expected IEI distribution for any arbitrary inhomogeneous Poisson point process, but not for one that has been modified by dead times. Deriving the expected IDI distribution is therefore a novel contribution of the present study.

In the neuroscience literature, there exist two common implementations for applying dead-time effects to a point process of events. One implementation treats the dead time as a random variable, as in the present study, and any events which occur during a dead time fail to be detected (e.g., Teich et al. 1978; Young and Barta 1986; Li and Young 1993; Franklin and Bair 1995; Liu et al. 2001; Heil et al. 2007; Neubauer et al. 2009; Deger et al. 2010; Peterson et al. 2014; Peterson and Heil 2018). In the alternative implementation, the dead time has the effect of transiently and deterministically reducing the rate of the process following each detection (e.g., Gray 1967; Lütkenhöner et al. 1980; Teich and Diament 1980; Gaumond et al. 1982; Johnson and Swami 1983; Jones et al. 1985; Miller 1985; Bi et al. 1988; Bi 1989; Miller and Mark 1992; Carney 1993; Prijs et al. 1993; Delgutte 1996; Johnson 1996; Berry and Meister 1998; Zhang et al. 2001; Sumner et al. 20022003; Meddis 2006; Zilany and Bruce 2006; Zilany et al. 2009). In this implementation, the detection rate is conceived of as the product of two independent components, one of which is the stimulus-dependent excitation function, s(t), describing the rate of the event process without dead-time effects, and the other of which is the recovery function, r(t-t’), describing how the rate is to be scaled down following a detection at time t’ (or, equivalently, describing the probability that an event which has occurred based on the original event-rate function will be detected). In the notation of the present study, the detection rate following a detection at time ti would be given by Rdetection(ti+j) = Revent(ti+j)·Gdead(dj), where Gdead is, for example, given by Eq. 1 but is interpreted as a recovery function rather than as a cumulative dead-time distribution. Although the difference between the two implementations can be quite small when the refractory periods are short and the rate is low, the implementations are not equivalent (Peterson et al. 2014). The results obtained using the numerical method presented here are valid only for the implementation in which the dead time is treated as a random variable. Jones et al. (1985) present a procedure to obtain the IDI distribution for the alternative implementation.

4.6 Applications and future work

Although the numerical method is presented as though the rate function of the detection process is known from experimental observation and can therefore be used to compute the expected IDI distribution, this is not the primary application of the method envisioned by the author. After all, an investigator who has observed a process experimentally to obtain the rate function would be well positioned to simply compute an empirical IDI distribution directly from the data. Rather, the more interesting application of the method is in modeling. One can use the method to answer the question of how a given dead-time distribution will affect the IDI distribution of a process characterized by a given rate function. This is true whether the rate function assumed by the investigator describes the event process or the detection process, because the method enables each one to be obtained from the other. It is not necessary that the rate function or the dead-time distribution be representable by a closed-form expression, and it is certainly not necessary that a closed-form expression exists for either the IEI or IDI distributions. The method could also be useful in cases for which the dead-time distribution giving rise to a particular experimentally observed IDI distribution is unknown; in such a case, it can be used to model how several candidate dead-time distributions would affect the shape of the IDI distribution and thereby aid in selecting a dead-time distribution that can account well for the observed data.

Future work could include adapting the method to also account for equilibrium processes, although this would require knowledge of the rate function prior to the start of the observation window so that the probability that the process is in a dead state due to a detection occurring prior to the window can be taken into consideration. This would require that a steady state can be derived for the rate function prior to the observation window (e.g., if the function is constant or periodic). Otherwise, the equilibrium process can only be approximated using the current method by extending the observation window backward in time to allow the process to reach a steady state prior to the start of the original observation window, and then computing the IDI distribution using only the time points within the original observation window. The method can perhaps also be adapted to describe non-Poisson point processes modified by random dead times, or even multiple non-Poisson point processes which are superposed and then modified by random dead times as has been suggested previously to account for nonrenewal properties of spontaneous spike trains of auditory-nerve fibers (Peterson and Heil 2018). However, the longer-term history effects present in a nonrenewal event process would make such an extension of the method substantially more complex than the comparatively simple renewal event process presented here.

Availability of data and material

Not applicable.

References

Amarasingham A, Chen T-L, Geman S, Harrison MT, Sheinberg DL (2006) Spike count reliability and the Poisson hypothesis. J Neurosci 26:801–809

Arkani M, Raisali G (2015) Measurement of dead time by time interval distribution method. Nucl Instrum Methods Phys Res A 774:151–158

Berry MJ, Meister M (1998) Refractoriness and neural precision. J Neurosci 18:2200–2211

Bi Q (1989) A closed-form solution for removing the dead time effects from the poststimulus time histograms. J Acoust Soc Am 85:2504–2513

Bi Q, Brown JL Jr, Lachs G (1988) Statistical expectation of the interval histograms from marginal time statistics of the Poisson process. J Acoust Soc Am 83:1186–1189

Brown EN, Frank LM, Tang D, Quirk MC, Wilson MA (1998) A statistical paradigm for neural spike train decoding applied to position prediction from ensemble firing patterns of rat hippocampal place cells. J Neurosci 18:7411–7425

Carney LH (1993) A model for the responses of low-frequency auditory-nerve fibers in cat. J Acoust Soc Am 93:401–417

Cox DR (1955) Some statistical methods connected with series of events. J R Stat Soc B 17:129–157

Cox DR, Isham V (1980) Point processes. Chapman and Hall, London

Deger M, Helias M, Cardanobile S, Atay FM, Rotter S (2010) Nonequilibrium dynamics of stochastic point processes with refractoriness. Phys Rev E 82:021129

Delgutte B (1996) Physiological models for basic auditory percepts. In: Hawkins HL, McMullen TA, Popper AN, Fay RR (eds) Auditory computation. Springer, New York, pp 157–220

Drost RJ, Sadler BM, Chen G (2015) Dead time effects in non-line-of-sight ultraviolet communications. Opt Express 23:15748–15761

Franklin J, Bair W (1995) The effect of a refractory period on the power spectrum of neuronal discharge. SIAM J Appl Math 55:1074–1093

Gaumond RP, Molnar CE, Kim DO (1982) Stimulus and recovery dependence of cat cochlear nerve fiber spike discharge probability. J Neurophysiol 48:856–873

Gray PR (1967) Conditional probability analyses of the spike activity of single neurons. Biophys J 7:759–777

Grupen C, Shwartz B (2008) Particle detectors. Cambridge monographs on particle physics, nuclear physics, and cosmology 26, 2nd edn. Cambridge University Press, New York

Gummer AW (1991) Probability density function of successive intervals of a nonhomogeneous Poisson process under low-frequency conditions. Biol Cybern 65:23–30

Heil P, Neubauer H, Irvine DRF, Brown M (2007) Spontaneous activity of auditory-nerve fibers: insights into stochastic processes at ribbon synapses. J Neurosci 27:8457–8474

Hodgkin AL, Huxley AF (1952) A quantitative description of membrane current and its application to conduction and excitation in nerve. J Physiol 117:500–544

Johnson DH (1978) The relationship of post-stimulus time and interval histograms to the timing characteristics of spike trains. Biophys J 22:413–430

Johnson DH (1996) Point process models of single-neuron discharges. J Comput Neurosci 3:275–299

Johnson DH, Swami A (1983) The transmission of signals by auditory-nerve fiber discharge patterns. J Acoust Soc Am 74:493–501

Jones K, Tubis A, Burns EM (1985) On the extraction of the signal-excitation function from a non-Poisson cochlear neural spike train. J Acoust Soc Am 78:90–94

Kroó N, Varró S, Farkas G, Oszetzky D, Nagy A, Czitrovszky A (2007) Quantum metal optics. J Mod Opt 54:2679–2688

Li J, Young ED (1993) Discharge-rate dependence of refractory behavior of cat auditory-nerve fibers. Hearing Res 69:151–162

Liu RC, Tzonev S, Rebrik S, Miller KD (2001) Variability and information in a neural code of the cat lateral geniculate nucleus. J Neurophysiol 86:2789–2806

Lowen SB, Teich MC (1991) Doubly stochastic Poisson point process driven by fractal shot noise. Phys Rev A 43:4192–4215

Lütkenhöner B, Hoke M, Bappert E (1980) Effect of recovery properties on the discharge pattern of auditory nerve fibres. In: Cochlear and brainstem evoked response audiometry and electrical stimulation of the VIIIth nerve (Hoke M, Kauffmann C, Bappert E, eds). Scand Audiol Suppl 11:25–43.

Meddis R (2006) Auditory-nerve first-spike latency and auditory absolute threshold: a computer model. J Acoust Soc Am 119:406–417

Miller MI (1985) Algorithms for removing recovery-related distortion from auditory-nerve discharge patterns. J Acoust Soc Am 77:1452–1464

Miller MI, Mark KE (1992) A statistical study of cochlear nerve discharge patterns in response to complex speech stimuli. J Acoust Soc Am 92:202–209

Müller JW (1981a) Bibliography on dead time effects. Report BIPM-81/11, Bureau International des Poids et Mesures, Sèvres, France

Müller JW (1981) Counting statistics of short-lived nuclides. J Radioanal Nucl Chem 61:345–359

Nawrot MP, Boucsein C, Molina VR, Riehle A, Aertsen A, Rotter S (2008) Measurement of variability dynamics in cortical spike trains. J Neurosci Methods 169:374–390

Neubauer H, Köppl C, Heil P (2009) Spontaneous activity of auditory nerve fibers in the barn owl (Tyto alba): analyses of interspike interval distributions. J Neurophysiol 101:3169–3191

Peterson AJ (2020) MATLAB code for the numerical computation of interval distributions. GitHub repository. https://github.com/PetersonHeil/MATLAB-code-for-the-numerical-computation-of-interval-distributions

Peterson AJ, Heil P (2018) A simple model of the inner-hair-cell ribbon synapse accounts for mammalian auditory-nerve-fiber spontaneous spike times. Hear Res 363:1–27

Peterson AJ, Irvine DRF, Heil P (2014) A model of synaptic vesicle-pool depletion and replenishment can account for the interspike interval distributions and nonrenewal properties of spontaneous spike trains of auditory-nerve fibers. J Neurosci 34:15097–15109

Picinbono B (2009) Output dead-time in point processes. Commun Stat Simul Comput 38:2198–2213

Pommé S, Fitzgerald R, Keightley J (2015) Uncertainty of nuclear counting. Metrologia 52:S3–S17

Prijs VF, Keijzer J, Versnel H, Schoonhoven R (1993) Recovery characteristics of auditory nerve fibres in the normal and noise-damaged guinea pig cochlea. Hear Res 71:190–201

Shcherbakov R, Yakovlev G, Turcotte DL, Rundle JB (2005) Model for the distribution of aftershock interoccurrence times. Phys Rev Lett 95:218501

Siebert WM (1970) Frequency discrimination in the auditory system: place or periodicity mechanisms? Proc IEEE 58:723–730

Snyder DL (1975) Random point processes. Wiley, New York

Srulovicz P, Goldstein JL (1983) A central spectrum model: A synthesis of auditory-nerve timing and place cues in monaural communication of frequency spectrum. J Acoust Soc Am 73:1266–1276

Sumner CJ, Lopez-Poveda EA, O’Mard LP, Meddis R (2002) A revised model of the inner-hair cell and auditory-nerve complex. J Acoust Soc Am 111:2178–2188

Sumner CJ, Lopez-Poveda EA, O’Mard LP, Meddis R (2003) Adaptation in a revised inner-hair cell model. J Acoust Soc Am 113:893–901

Teich MC (1985) Normalizing transformations for dead-time-modified Poisson counting distributions. Biol Cybern 53:121–124

Teich MC, Diament P (1980) Relative refractoriness in visual information processing. Biol Cybern 38:187–191

Teich MC, Khanna SM (1985) Pulse number distribution for the neural spike train in the cat’s auditory nerve. J Acoust Soc Am 77:1110–1128

Teich MC, Matin L, Cantor BI (1978) Refractoriness in the maintained discharge of the cat’s retinal ganglion cell. J Opt Soc Am 68:386–402

Teich MC, Turcott RG, Lowen SB (1990) The fractal doubly stochastic Poisson point process as a model for the cochlear neural spike train. In: Dallos P, Geisler CD, Matthews JW, Ruggero MA, Steele CR (eds) The mechanics and biophysics of hearing. Springer, New York, pp 354–361

Turcott RG, Lowen SB, Li E, Johnson DH, Tsuchitani C, Teich MC (1994) A nonstationary Poisson point process describes the sequence of action potentials over long time scales in lateral-superior-olive auditory neurons. Biol Cybern 70:209–217

Vannucci G, Teich MC (1978) Effects of rate variation on the counting statistics of dead-time-modified Poisson processes. Opt Commun 25:267–272

Verma G, Drost RJ (2017) Inhomogeneous Poisson process rate function inference from dead-time limited observations. J Opt Soc Am A 34:770–782

Wiener MC (2003) An adjustment of the time-rescaling method for application to short-trial spike train data. Neural Comput 15:2565–2576

Wilbur WJ, Rinzel J (1983) A theoretical basis for large coefficient of variation and bimodality in neuronal interspike interval distributions. J Theor Biol 105:345–368

Yakovlev G, Rundle JB, Shcherbakov R, Turcotte DL (2005) Inter-arrival time distribution for the non-homogeneous Poisson process. arXiv:cond-mat/0507657

Young ED, Barta PE (1986) Rate responses of auditory nerve fibers to tones in noise near masked threshold. J Acoust Soc Am 79:426–442

Zhang X, Heinz MG, Bruce IC, Carney LH (2001) A phenomenological model for the responses of auditory-nerve fibers: I. Nonlinear tuning with compression and suppression. J Acoust Soc Am 109:648–670

Zilany MS, Bruce IC (2006) Modeling auditory-nerve responses for high sound pressure levels in the normal and impaired auditory periphery. J Acoust Soc Am 120:1446–1466

Zilany MS, Bruce IC, Nelson PC, Carney LH (2009) A phenomenological model of the synapse between the inner hair cell and auditory nerve: long-term adaptation with power-law dynamics. J Acoust Soc Am 126:2390–2412

Acknowledgements

The author thanks Peter Heil for helpful discussions and feedback on the manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was supported by a grant of the Deutsche Forschungsgemeinschaft (Priority Program 1608 “Ultrafast and temporally precise information processing: Normal and dysfunctional hearing,” He1721/11-2 to Peter Heil).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

No conflicts of interest or competing interests.

Code availability

The custom MATLAB code used in this study is publicly accessible in an online repository.

Additional information

Communicated by Benjamin Lindner.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Peterson, A.J. A numerical method for computing interval distributions for an inhomogeneous Poisson point process modified by random dead times. Biol Cybern 115, 177–190 (2021). https://doi.org/10.1007/s00422-021-00868-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00422-021-00868-8