Abstract

Purpose

To evaluate the diagnostic performance and reproducibility of a computer-aided diagnosis (CAD) system for thyroid cancer diagnosis on ultrasonography (US) according to the operator’s experience.

Materials and methods

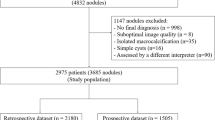

Between July 2016 and October 2016, 76 consecutive patients with 100 thyroid nodules (≥ 1.0 cm) were prospectively included. An experienced radiologist performed the US examinations with a real-time CAD system integrated into the US machine, and three operators with different levels of US experience (0–5 years) independently applied the CAD system. We compared the diagnostic performance of the CAD system according to the operators’ experience and calculated the interobserver agreement for cancer diagnosis and for each US descriptor.
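As an illustration of how agreement among three operators can be quantified, the following is a minimal sketch of Fleiss' kappa in plain Python. The abstract does not state which agreement statistic was used, so the choice of Fleiss' kappa, the Landis and Koch verbal labels, the function names, and the six example nodules are all assumptions made for illustration. They are not study data.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for a list of items, each rated once by every rater.

    ratings: list of lists; ratings[i] holds one category label per rater.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("every item needs the same number (>= 2) of ratings")

    counts = [Counter(r) for r in ratings]
    categories = sorted({label for r in ratings for label in r})

    # Observed agreement: share of agreeing rater pairs, averaged over items.
    p_items = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(p_items) / n_items

    # Chance agreement from the overall proportion of each category.
    total = n_items * n_raters
    p_expected = sum((sum(c[cat] for c in counts) / total) ** 2 for cat in categories)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when only one category is used")

    return (p_observed - p_expected) / (1 - p_expected)


def agreement_label(kappa):
    """Verbal label on the Landis and Koch scale (assumed, not from the abstract)."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical final diagnoses from three operators for six nodules.
example = [
    ["malignant", "malignant", "malignant"],
    ["benign", "benign", "benign"],
    ["malignant", "malignant", "benign"],
    ["benign", "benign", "benign"],
    ["malignant", "malignant", "malignant"],
    ["benign", "benign", "malignant"],
]
kappa = fleiss_kappa(example)
print(f"kappa = {kappa:.3f} ({agreement_label(kappa)})")
```

The same function could be applied per US descriptor by passing each operator's category for that descriptor instead of the final diagnosis.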

Results

The sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and accuracy of the CAD system were 88.6%, 83.9%, 81.3%, 90.4%, and 86.0%, respectively. The sensitivity and accuracy of the CAD system were not significantly different from those of the experienced radiologist (p > 0.05), while the specificity was higher for the experienced radiologist (p = 0.016). For the less-experienced operators, the sensitivity was 68.8–73.8%, specificity 74.1–88.5%, PPV 68.9–73.3%, NPV 72.7–80.0%, and accuracy 71.0–75.0%. The less-experienced operators showed lower sensitivity and accuracy than the experienced radiologist. The interobserver agreement was substantial for the final diagnosis and for each US descriptor except margin and composition, for which it was moderate.
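The five measures reported for the CAD system all derive from a single 2 × 2 table, which the sketch below makes explicit. The abstract gives only percentages, so the counts used here (39 true positives, 5 false negatives, 9 false positives, 47 true negatives) are an assumption: they are back-calculated from the reported values and the total of 100 nodules and are not taken from the paper. The class and function names are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """2 x 2 table of test result against the reference standard."""
    tp: int  # malignant nodules called malignant
    fn: int  # malignant nodules called benign
    fp: int  # benign nodules called malignant
    tn: int  # benign nodules called benign


def diagnostic_metrics(c):
    """Return the five standard measures as percentages."""
    total = c.tp + c.fn + c.fp + c.tn
    return {
        "sensitivity": 100 * c.tp / (c.tp + c.fn),
        "specificity": 100 * c.tn / (c.tn + c.fp),
        "ppv": 100 * c.tp / (c.tp + c.fp),
        "npv": 100 * c.tn / (c.tn + c.fn),
        "accuracy": 100 * (c.tp + c.tn) / total,
    }


# Assumed counts, inferred from the reported percentages (not from the paper).
cad = Confusion(tp=39, fn=5, fp=9, tn=47)
metrics = diagnostic_metrics(cad)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}%")
```

With these assumed counts, each computed value agrees with the reported figure to within rounding, which suggests 44 malignant and 56 benign nodules. That split remains an inference.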

Conclusions

The CAD system may have a potential role in thyroid cancer diagnosis. However, its performance remains operator dependent, and this needs improvement.

Key Points

• The sensitivity and accuracy of the CAD system did not differ significantly from those of the experienced radiologist (88.6% vs. 84.1%, p = 0.687; 86.0% vs. 91.0%, p = 0.267) while the specificity was significantly higher for the experienced radiologist (83.9% vs. 96.4%, p = 0.016).

• However, the diagnostic performance varied according to the operator’s experience (sensitivity 70.5–88.6%, accuracy 72.0–86.0%), and both measures were lower for the less-experienced operators than for the experienced radiologist.

• The interobserver agreement was substantial for the final diagnosis and for each US descriptor except margin and composition, for which it was moderate.

Abbreviations

- AUC: Area under receiver operating characteristic curve
- CAD: Computer-aided diagnosis
- CI: Confidence interval
- FNA: Fine-needle aspiration
- NPV: Negative predictive value
- PPV: Positive predictive value
- PTC: Papillary thyroid carcinoma
- ROC: Receiver operating characteristic
- US: Ultrasonography

Funding

The authors state that this work was supported by the National Research Foundation of Korea (# 2017R1C1B5016217).

Ethics declarations

Guarantor

The scientific guarantor of this publication is Eun Ju Ha.

Conflict of interest

The authors of this manuscript declare no relationships with any companies whose products or services may be related to the subject matter of the article.

Statistics and biometry

No complex statistical methods were necessary for this paper.

Informed consent

Written informed consent was obtained from all patients before they underwent US.

Ethical approval

This study was approved by our institutional review board.

Methodology

• Prospective case-control study

Cite this article

Jeong, E.Y., Kim, H.L., Ha, E.J. et al. Computer-aided diagnosis system for thyroid nodules on ultrasonography: diagnostic performance and reproducibility based on the experience level of operators. Eur Radiol 29, 1978–1985 (2019). https://doi.org/10.1007/s00330-018-5772-9

DOI: https://doi.org/10.1007/s00330-018-5772-9