Abstract

Adaptation to visual motion can induce marked distortions of the perceived spatial location of subsequently viewed stationary objects. These positional shifts are direction specific and exhibit tuning for the speed of the adapting stimulus. In this study, we sought to establish whether comparable motion-induced distortions of space can be induced in the auditory domain. Using individually measured head related transfer functions (HRTFs) we created auditory stimuli that moved either leftward or rightward in the horizontal plane. Participants adapted to unidirectional auditory motion presented at a range of speeds and then judged the spatial location of a brief stationary test stimulus. All participants displayed direction-dependent and speed-tuned shifts in perceived auditory position relative to a ‘no adaptation’ baseline measure. To permit direct comparison between effects in different sensory domains, measurements of visual motion-induced distortions of perceived position were also made using stimuli equated in positional sensitivity for each participant. Both the overall magnitude of the observed positional shifts, and the nature of their tuning with respect to adaptor speed were similar in each case. A third experiment was carried out where participants adapted to visual motion prior to making auditory position judgements. Similar to the previous experiments, shifts in the direction opposite to that of the adapting motion were observed. These results add to a growing body of evidence suggesting that the neural mechanisms that encode visual and auditory motion are more similar than previously thought.


Introduction

Physical objects moving in the environment are rarely perceived by a single sensory system in isolation. Rather, when an object translates across external space in front of an observer, salient sources of motion information are typically available to both the visual and auditory systems. It is well established that the human visual system contains specialised and dedicated mechanisms for analysing object motion (Van Essen and Maunsell 1983; Albright and Stoner 1995). In contrast, our understanding of the processes and structures underlying auditory motion processing remains comparatively poor. Unlike the visual system, where the inputs for position and motion coding are derived from the pattern of light falling on the retina, input to the auditory system contains no inherent spatial organisation. Instead, the position of a sound source is computed based on frequency and timing information transmitted by the cochlea. Three cues are used to encode auditory spatial position and its change over time (motion): interaural timing and level differences that arise from the separation of the ears by the solid mass of the head (Rayleigh 1907), and spectral cues that arise from frequency filtering caused by the interaction of sound waves with the trunk, shoulders and pinnae (Békésy 1960). As a result, any map of auditory space is fundamentally computational, requiring reconstruction based on these cues (King 1993).

Two alternative accounts of how the auditory system might encode motion have emerged. The first, termed the ‘snapshot’ hypothesis, suggests that motion information is derived indirectly by tracking changes in object position over time (e.g. Grantham 1986). This could be achieved using the same mechanisms involved in the localisation of stationary auditory stimuli, without recourse to dedicated motion sensors. According to this hypothesis, auditory motion perception should be limited by the sensitivity of the auditory system to detecting auditory features and the precision by which they are localised in space and time. Proponents of the ‘snapshot’ hypothesis point to the fact that both detectability (Wilcott and Gales 1954; Grantham and Leuthke 1988; Xiao and Grantham 1997) and spatial resolution (Harris and Sargeant 1971; Grantham 1986) of moving targets tend to be similar to, or poorer than, comparable measures for stationary targets.

Alternatively, it has been argued that the auditory system does possess dedicated neural structures which directly encode attributes of motion in a manner analogous to the visual system (Perrott and Marlborough 1989; Warren et al. 2002). Two of the fundamental properties of neurons comprising the cortical substrate of visual motion analysis are selectivity to the scalar components of velocity: direction and speed. Directional selectivity is present as early as the primary visual cortex (V1) but is a dominant characteristic of extra-striate cortical area V5/MT, where in excess of 90% of neurons show marked directional preferences (Zeki 1974; Albright and Desimone 1987). V5/MT neurons also exhibit speed tuning that is invariant to changes in the stimulus pattern, such as spatial frequency, orientation or temporal frequency (Maunsell and Van Essen 1983; Perrone and Thiele 2001; Liu and Newsome 2003; Priebe et al. 2003). If auditory motion is encoded in a similar manner to its visual counterpart, one might expect to find neurons with comparable tuning properties in the auditory system.

A number of physiological studies have provided evidence consistent with the existence of motion sensitive mechanisms in the auditory systems of both cat and monkey. Neurons exhibiting directional selectivity to moving auditory stimuli have been shown to exist, both subcortically (Altman 1968; Altman et al. 1970) and in the primary auditory cortex (AI) (Ahissar et al. 1992). Additionally, recent evidence suggests that some AI neurons also show properties that could be considered consistent with speed tuning (Jenison et al. 2001). However, the proportion of cells exhibiting these qualities is extremely modest compared with the visual system, and it remains to be seen whether a true auditory analogue of V5/MT exists—i.e. an area dedicated to the analysis of motion.

Perhaps the most compelling psychophysical support for direct motion encoding in the visual system comes from the visual motion aftereffect (vMAE). Following prolonged exposure to motion in a particular direction, subsequent viewing of a stationary object elicits the non-veridical percept of motion in the opposite direction. This effect is readily demonstrable, extremely robust and is widely accepted as evidence for adaptation of specialised motion detecting mechanisms (Barlow and Hill 1963). By manipulating the characteristics of adapting and test stimuli, it is possible to demonstrate aftereffects with at least two distinct sets of properties. The first becomes apparent when a stationary test stimulus is used and is thought to reflect adaptation of motion sensitive cells in V1 (Maffei et al. 1973). The second occurs when a dynamic (or flickering) test stimulus is used and displays properties consistent with adaptation in extra-striate areas of visual motion analysis, most likely V5/MT (Van Wezel and Britten 2002).

Efforts to demonstrate an auditory motion aftereffect (aMAE) have met with mixed results. Early studies typically failed to demonstrate an effect that was both convincing and reliable between observers (e.g. Grantham and Wightman 1979; Grantham 1989; Reinhardt-Rutland 1992). In contrast, the results of recent studies provide more compelling evidence that a robust and replicable aMAE does indeed exist (Grantham 1998; Dong et al. 2000; Neelon and Jenison 2003, 2004). Critically, studies showing a strong aMAE have used stimuli containing all possible sources of motion information, produced either by physically moving a sound source through space (Dong et al. 2000), by filtering sounds through generic head-related transfer functions (HRTFs) (Grantham 1998), or by filtering sounds with individually measured HRTFs (Neelon and Jenison 2003, 2004). In contrast, studies failing to find a substantial aMAE have typically opted to simulate auditory motion via dynamic manipulation of interaural timing and loudness cues (Grantham and Wightman 1979; Ehrenstein 1994; Reinhardt-Rutland 1992). Although these stimuli can produce a sensation of lateral motion, it is possible that they provide suboptimal stimulation to the auditory system.

In recent years, it has been further demonstrated that adaptation to visual motion produces shifts in the perceived position of subsequently presented objects in a direction opposite to that of the adapting stimulus (Snowden 1998; McGraw et al. 2002, 2004; Whitney 2005). These effects demonstrate a direct interaction between neural mechanisms that encode motion and those that contribute to the representation of object position. Shifts in perceived visual position exhibit many tuning properties consistent with dynamic MAEs, but can also be induced using stimulus configurations that do not directly result in the perception of illusory motion (Whitney et al. 2003; McGraw et al. 2004). This has led to the suggestion that these effects constitute a new and distinct class of motion aftereffect (Whitney and Cavanagh 2003).

In this study, we ask if analogous positional distortions also exist in the auditory domain. We demonstrate that adapting to auditory motion induces shifts in the perceived position of stationary auditory stimuli (Experiment 1). We then compare the properties of these shifts with those found in the visual system (Experiment 2). Finally, we investigate whether adapting to motion in one sensory domain (visual) results in shifts in perceived position in another sensory domain (auditory) (Experiment 3).

Methods

Participants

Four participants (three male) between the ages of 24 and 40 took part. Participants RWD, NWR and PVM were the authors of this study. Participant LKS was naïve to the purposes of the experiment. Each participant gave their informed consent prior to their inclusion in the study. All participants had normal hearing as assessed by standard audiological techniques and normal visual acuity.

Experiment 1

HRTF Measurements

HRTF measurements were made for each participant using Knowles electret condenser microphones (EK-3133) positioned flush with the entrance of the ear canal. Microphones were embedded in a viscous silicone audiological impression material (Egger-flex/AB-40 Shore), which acted both to hold the microphone in place and to completely block the ear canal (see also Pralong and Carlile 1996; Wightman and Kistler 2005). Tenth-order maximum length sequences (MLS) were computer generated and delivered via a stand-mounted Bose Acoustimass cube speaker adjusted to participants’ ear-height. Observers sat 2 m from the speaker on a custom-built rotating chair in an anechoic chamber, with their heads co-aligned with the centre of rotation and held in place via a small chin cup. HRTF measurements were made with the speaker located at 91 different azimuth positions relative to the observer (2° step size from −90° to +90°). Minimum-phase versions of the time-domain head-related impulse response functions (HRIR) were piecewise cubic Hermite interpolated in order to increase the spatial resolution of the filter set to 0.1° azimuth steps. Interaural time differences (ITDs) were independently derived at each location (by comparing the time delay of the maximum in the cross-correlation function between measured and minimum-phase HRIRs for each ear) and interpolated to the same resolution. Figure 1 shows an example of a full HRTF set as measured for one observer.
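The ITD derivation described above can be illustrated with a minimal sketch. It assumes toy impulse responses (a short hypothetical pulse delayed by a whole number of samples) in place of measured and minimum-phase HRIRs, and an arbitrary sign convention (left-ear delay minus right-ear delay). The function names are illustrative only and do not reproduce the analysis code used in the study.

```python
SAMPLE_RATE_HZ = 44_100  # sampling rate quoted for the stimuli


def delayed(pulse, delay, length):
    """Place a toy pulse in a zero-padded list after `delay` samples."""
    return [0.0] * delay + list(pulse) + [0.0] * (length - delay - len(pulse))


def delay_by_xcorr(measured, reference, max_lag):
    """Return the lag (in samples) at which the cross-correlation peaks."""
    n = len(reference)
    best_lag, best_val = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        val = sum(
            measured[i + lag] * reference[i]
            for i in range(n)
            if 0 <= i + lag < n
        )
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag


# Hypothetical data: the "minimum-phase" response has no onset delay,
# while the "measured" responses arrive 10 and 30 samples later.
pulse = [1.0, 0.6, 0.3, 0.1]
min_phase = delayed(pulse, 0, 64)
left_measured = delayed(pulse, 10, 64)
right_measured = delayed(pulse, 30, 64)

left_delay = delay_by_xcorr(left_measured, min_phase, max_lag=40)
right_delay = delay_by_xcorr(right_measured, min_phase, max_lag=40)

# Assumed convention: negative ITD means the left ear leads.
itd_us = (left_delay - right_delay) / SAMPLE_RATE_HZ * 1e6
print(left_delay, right_delay, round(itd_us, 1))
```

With real HRIRs, the per-ear delays would be fractional and would typically be estimated after upsampling; the integer-lag search here is kept deliberately simple.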

Example HRTF set for one observer. The left and central panels illustrate the HRTF measured at the left and right ear, respectively, plotted as a function of stimulus position in the horizontal plane (azimuth). Lighter shading represents regions of relatively higher gain. The rightmost panel displays interaural time differences (ITDs) derived from the same set of measurements. Convolution of a broadband stereo stimulus with the pair of HRTFs for a given location, coupled with the delay of each channel by the appropriate ITD, creates the compelling percept of a sound localised in external space

Stimuli

Static auditory localisation stimuli were generated by convolving 200 ms bursts of bandpass filtered Gaussian noise (8th order Butterworth filter, 200 Hz–12 kHz passband; 44.1 kHz sampling rate) with a given pair of HRIRs, and delaying each channel by the appropriate ITD. Adapting stimuli were created in an equivalent fashion, with the exception that HRIRs and ITDs were sequentially updated in 0.1° steps to produce motion in the azimuthal plane. Each motion stimulus traversed a fixed angular extent of 20° azimuth, centred immediately in front of the participant. A range of velocities (2, 4, 8, 16, and 32°/s) were produced by systematically manipulating the length of each noise burst. Both static and moving stimuli were presented via Sennheiser HD 250 headphones at 84 dB SPL and cosine ramped at onset and offset over 5 ms. These stimuli mimicked the complete set of localisation cues normally available to each observer in the free field and produced compelling percepts of sound externalised in space.
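The relationship between adaptor speed and burst length can be made concrete with a short calculation. This sketch assumes that each 0.1° step is rendered as one noise burst whose duration is the step size divided by the speed; that reading of "length of each noise burst" is an interpretation, and the helper name `sweep_timing` is hypothetical.

```python
SAMPLE_RATE_HZ = 44_100   # from the stimulus description
EXTENT_DEG = 20.0         # angular extent of each sweep
STEP_DEG = 0.1            # azimuth step between successive HRIR pairs
SPEEDS_DEG_PER_S = [2, 4, 8, 16, 32]


def sweep_timing(speed_deg_per_s):
    """Return (steps, sweep duration in s, burst duration in s, samples per burst)."""
    n_steps = round(EXTENT_DEG / STEP_DEG)
    sweep_s = EXTENT_DEG / speed_deg_per_s
    burst_s = STEP_DEG / speed_deg_per_s
    samples_per_burst = burst_s * SAMPLE_RATE_HZ
    return n_steps, sweep_s, burst_s, samples_per_burst


for speed in SPEEDS_DEG_PER_S:
    n_steps, sweep_s, burst_s, samples = sweep_timing(speed)
    print(f"{speed:>2} deg/s: {n_steps} steps, sweep {sweep_s:.3f} s, "
          f"burst {burst_s * 1000:.3f} ms ({samples:.1f} samples)")
```

Under these assumptions a single 20° sweep lasts between 10 s (2°/s) and 0.625 s (32°/s), so the number of sweeps within a fixed adaptation period grows with speed.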

Procedure

Participants were required to complete a simple auditory localisation task, with and without prior adaptation to motion in a particular direction (see Fig. 2a). A single-interval forced-choice paradigm was employed, whereby participants judged the position of a stationary noise burst relative to their internal midline (i.e. did the sound appear to originate from the right or left?). Stimulus location was manipulated via a method of constant stimuli, comprising 40 trials at each of 7 different locations. Data were collected in two blocks per condition. The proportion of sounds at each position that was perceived as originating from the right of the participant’s midline was fitted with a logistic function of the form:

Experimental procedure used to examine the influence of motion adaptation on the perceived position of subsequently presented stationary stimuli. a Auditory motion adaptation and subsequent localisation judgements, b visual adaptation and subsequent visual localisation judgements, and c visual motion adaptation and subsequent auditory localisation judgements. In each experiment, participants were initially exposed to the adapting motion for 60 s. Following a 0.2 s inter-stimulus interval, participants were presented with a stationary test stimulus and made spatial localisation judgements. Prior to the next trial, participants were exposed to 10 s of top up adaptation

where β refers to the just noticeable difference (JND) and is an index of the participant’s localisation sensitivity, and α refers to the point of subjective equality (PSE), which defines the physical location at which participants were equally likely to report that the sound came from their left as their right (i.e. the physical location of their subjective midline).
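For reference, a standard two-parameter logistic that is consistent with these definitions of α and β can be written as below. This is an assumed parameterisation: the exact expression used for the fits may differ, for example in scaling to percent or in how β maps onto the JND.

```latex
P_{\mathrm{right}}(x) = \frac{1}{1 + \exp\!\left(-\dfrac{x - \alpha}{\beta}\right)}
```

Here x is the azimuth of the test stimulus. In this form P = 0.5 at x = α (the PSE), and P = 1/(1 + e^{-1}) ≈ 0.73 at x = α + β, so a smaller β corresponds to a steeper function and finer localisation sensitivity.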

In adaptation conditions, each trial on the localisation task was preceded by the repeated presentation of unidirectional (leftward or rightward) auditory motion (60 s initial adaptation, 10 s top up adaptation between each trial). For each period of adapting motion, the start position (and consequently also the end position) was randomly selected within the 20° interval, ensuring that the position of the adaptor immediately prior to the localisation judgment did not systematically differ between conditions. Motion-induced shifts in perceived position were quantified by calculating the shift in PSE relative to a no-adaptation baseline (see Fig. 3).
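The PSE-shift measure can be sketched as follows. The response counts are hypothetical, the logistic form is an assumed standard parameterisation, and the coarse maximum-likelihood grid search stands in for whatever fitting routine was actually used.

```python
import math


def logistic(x, alpha, beta):
    """Assumed psychometric function: alpha = PSE, beta = slope parameter (JND)."""
    return 1.0 / (1.0 + math.exp(-(x - alpha) / beta))


def neg_log_likelihood(alpha, beta, positions, n_right, n_trials):
    """Binomial negative log-likelihood of the 'right' counts."""
    nll = 0.0
    for x, k in zip(positions, n_right):
        p = min(max(logistic(x, alpha, beta), 1e-9), 1.0 - 1e-9)
        nll -= k * math.log(p) + (n_trials - k) * math.log(1.0 - p)
    return nll


def fit_pse_jnd(positions, n_right, n_trials):
    """Grid search over alpha (-3 to 3 deg) and beta (0.2 to 3 deg) in 0.02 deg steps."""
    best = None
    for a in range(-300, 301, 2):
        for b in range(20, 301, 2):
            alpha, beta = a / 100.0, b / 100.0
            nll = neg_log_likelihood(alpha, beta, positions, n_right, n_trials)
            if best is None or nll < best[0]:
                best = (nll, alpha, beta)
    return best[1], best[2]


# Hypothetical data: 7 test locations (deg azimuth), 40 trials each.
positions = [-3, -2, -1, 0, 1, 2, 3]
baseline_right = [2, 5, 11, 20, 29, 35, 38]   # no adaptation
adapted_right = [0, 1, 3, 7, 15, 25, 33]      # after unidirectional adaptation

base_pse, base_jnd = fit_pse_jnd(positions, baseline_right, 40)
adapt_pse, adapt_jnd = fit_pse_jnd(positions, adapted_right, 40)
pse_shift = adapt_pse - base_pse
print(f"baseline PSE {base_pse:.2f}, adapted PSE {adapt_pse:.2f}, shift {pse_shift:.2f} deg")
```

A gradient-based optimiser would be the more usual choice in practice; the grid search is used here only to keep the sketch dependency-free.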

Quantifying the effect of unidirectional motion adaptation on the perceived position of a subsequently presented stimulus. The figure shows example psychometric functions for one observer (PVM, 8°/s adapting velocity condition). Motion adaptation induces a marked lateral displacement of the psychometric function. The magnitudes of positional aftereffects are inferred from the shift in PSE (the physical position perceived to be straight ahead of the observer) relative to a no adaptation baseline condition

Experiment 2

Stimuli

Visual stimuli were generated via a Cambridge Research Systems ViSaGe and displayed on a Mitsubishi Diamond Pro 2045U 22-inch CRT monitor (1,024 × 768 resolution, 100 Hz framerate). The non-linear luminance response of the display was corrected using the inverse function of the luminance response as measured with a Spectrascan PR650 spectra-photometer. Participants’ heads were stabilised using a chin rest set to a viewing distance of 32.66 cm. At this distance, 1 pixel subtended 4 arcminutes of visual angle. As depicted in Fig. 2b, localisation stimuli consisted of two luminance-defined patches, one above the other with a centre-to-centre vertical separation of 16.67°. Stimuli had a rectangular vertical profile (height 12.87°) and a Gaussian horizontal profile, with the equation:

where L_background refers to the background luminance, which was 47 cd/m², C refers to peak Michelson contrast (fixed at 1) and σ refers to the standard deviation of the Gaussian envelope. Localisation stimuli were presented for 100 ms. Visual motion adaptation stimuli consisted of two luminance-defined sine wave gratings (Fig. 2b), with a centre-to-centre vertical separation of 16.67°, presented in a rectangular vertical window (height 12.87°) with the equation:

where L_background refers to the background luminance, which was set to 47 cd/m², C refers to contrast, which was set to 100%, and f refers to spatial frequency, which was set to 0.5 cycle/deg. The adapting gratings were presented above and below fixation and drifted horizontally in opposite directions at velocities of 1, 2, 4, 8, 16, 32 and 48°/s.
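The viewing geometry quoted for this experiment can be checked with a short calculation. The sketch below derives the pixel pitch implied by 4 arcminutes per pixel at 32.66 cm and converts the stated angular sizes to pixels using the fixed 4 arcmin/pixel scale (a linear approximation). The function names are hypothetical.

```python
import math

VIEWING_DISTANCE_MM = 326.6   # 32.66 cm, as stated
ARCMIN_PER_PIXEL = 4.0        # as stated


def pixel_pitch_mm():
    """Physical pixel size implied by the stated distance and angular pixel size."""
    angle_rad = math.radians(ARCMIN_PER_PIXEL / 60.0)
    return VIEWING_DISTANCE_MM * math.tan(angle_rad)


def deg_to_pixels(angle_deg):
    """Convert visual angle to pixels using the fixed 4 arcmin/pixel scale."""
    return angle_deg * 60.0 / ARCMIN_PER_PIXEL


print(f"pixel pitch: {pixel_pitch_mm():.3f} mm")
print(f"16.67 deg separation: {deg_to_pixels(16.67):.2f} px")
print(f"12.87 deg height:     {deg_to_pixels(12.87):.2f} px")
```

On this scale the 16.67° separation and 12.87° height correspond to roughly 250 and 193 pixels, which suggests the angular values were derived from whole-pixel dimensions.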

Procedure

Participants were required to judge the relative horizontal positions of the two localisation patches (i.e. did the upper or lower patch appear offset to the right?). A comparable procedure to Experiment 1 was used, whereby the physical offset of the patches was controlled in a method of constant stimuli (7 offsets, 40 trials per offset) and logistic fits to participants’ psychometric functions allowed quantification of both the PSE and JND. To further aid comparison with the results from Experiment 1, a preliminary experiment was conducted in which baseline performance was measured for a range of different visual patch sizes. Changing the standard deviation of the Gaussian envelope (σ) provides a robust means of manipulating positional sensitivity (Toet and Koenderink 1988; Whitaker et al. 2002). Mapping out this relationship allowed us to individually tailor the visual localisation stimuli for each participant such that positional sensitivity was equivalent to that obtained on the auditory task (Experiment 1). In subsequent adaptation conditions, visual motion stimuli were presented for identical periods to those used in Experiment 1 (60 s initial adaptation plus 10 s top up adaptation) and motion-induced shifts in perceived visual position were measured using the methods described previously. Because simultaneous motion adaptation was induced in opposite directions above and below fixation, the magnitude of the induced positional offsets was halved in order to allow comparison with the results of Experiment 1.
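The normalisation steps described above (halving the visual offset and expressing shifts in units of sensitivity) can be sketched as below. The numerical inputs are hypothetical and the function name is illustrative.

```python
def shift_in_jnd_units(pse_adapted, pse_baseline, jnd, opposing_adaptors=False):
    """Express a motion-induced PSE shift in units of the observer's JND.

    When two adaptors drift in opposite directions (as in the visual task),
    the measured relative offset reflects two shifts, so it is halved first.
    """
    shift = pse_adapted - pse_baseline
    if opposing_adaptors:
        shift /= 2.0
    return shift / jnd


# Hypothetical values in degrees (not taken from the results).
auditory = shift_in_jnd_units(pse_adapted=1.5, pse_baseline=0.0, jnd=1.0)
visual = shift_in_jnd_units(pse_adapted=3.0, pse_baseline=0.0, jnd=1.0,
                            opposing_adaptors=True)
print(auditory, visual)
```

Expressing both effects in JND units is what allows auditory and visual shifts to be compared directly once positional sensitivity has been matched.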

Experiment 3

Stimuli

Localisation stimuli consisted of the same auditory localisation stimuli used in Experiment 1. Viewing conditions were the same as those in Experiment 2. The visual adapting stimulus consisted of a single luminance-defined sine wave grating with the equation:

where L_background refers to the background luminance, which was set to 47 cd/m², C refers to contrast, which was set to 100%, and f refers to spatial frequency, which was set to 0.5 cycle/deg. The adapting grating was presented across fixation and drifted in a single (azimuthal) direction at a fixed velocity of 16°/s.

Procedure

Participants were required to complete the auditory localisation task (7 positions, 40 trials per position, manipulated within a method of constant stimuli) employed in Experiment 1, with the important difference that instead of adapting to auditory motion prior to making auditory localisation judgements, participants adapted to unidirectional (leftwards or rightwards) visual motion. A single adapting velocity (16°/s) was selected, as this velocity successfully induced shifts in Experiments 1 and 2. Adaptation durations were consistent with those used in Experiments 1 and 2 (60 s initial adaptation, 10 s top up adaptation between each trial). Data was analysed in the same way as in Experiments 1 and 2.

Results

Experiment 1

Auditory localisation thresholds were first obtained for the baseline localisation task (without motion adaptation) to allow comparison between HRTF techniques and free-field procedures. Localisation thresholds were 0.63° (SEM ± 0.10) for PVM, 1.14° (SEM ± 0.25) for RWD, 0.51° (SEM ± 0.11) for NWR and 1.20° (SEM ± 0.23) for LKS. These values compare favourably with localisation thresholds obtained in free-field environments, which are commonly in the order of 1° (Middlebrooks and Green 1991; Chandler and Grantham 1992).

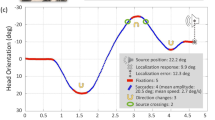

Figure 4a–d plots the PSEs for each participant following adaptation to leftward and rightward auditory motion, at each of the adaptor velocities tested. Adaptation to a unidirectional motion stimulus produced systematic shifts in the PSE in the direction of motion, indicative of a distortion of perceived auditory location in the opposing direction. These effects were roughly equivalent for leftward and rightward adapting motion and exhibit clear speed tuning—adaptors moving between 4 and 16°/s produced sizeable shifts, whereas adaptors that were slower (2°/s), or faster (32°/s) than this did not. Whilst the pattern of band-pass speed tuning holds for each of the participants, some variation in the absolute magnitude of the effect can be seen. Figure 4e displays average positional shifts for each participant, which have been re-plotted in units of sensitivity (JND units). When individual differences in localisation sensitivity are taken into account, the peak effect for each participant is similar (~1.5 JND units).

Shifts in the perceived location of stationary auditory stimuli following motion adaptation. a–d Individual participants’ points of subjective equality (PSE) for leftwards and rightwards adapting motion, plotted as a function of speed. For comparison, PSEs are also shown for the no-adaptation baseline condition (dashed line). e Comparison of positional shifts between participants, once averaged across direction and normalised with respect to the individual’s just-noticeable-difference (JND)

Experiment 2

Figure 5 shows visual positional sensitivity for different envelope sizes, in the absence of motion adaptation. From the best-fitting power function to each dataset (RWD: 0.13 + x^1.24, R² = 0.98; PVM: 0.15 + x^0.97, R² = 0.99; NWR: 0.10 + x^1.03, R² = 0.99; LKS: 0.03 + x^1.32, R² = 0.99), envelope sizes were selected for each individual so as to match positional sensitivity to that obtained with auditory stimuli in Experiment 1 (σ = 7.60, 3.90, 3.57 and 5.60 for RWD, PVM, NWR and LKS, respectively). The influence of motion adaptation on the perceived position of these individually tailored stimuli is shown in Fig. 6a–d, plotted as a function of adaptor speed. Motion adaptation produced shifts in perceived position in the opposite direction to the adaptor which, when expressed in units of individual sensitivity (Fig. 6e), are of a similar magnitude to those found in Experiment 1. However, whereas the auditory positional aftereffect exhibited clear band-pass speed tuning, such a profile is not evident in the visual data. Participant LKS is the only participant who shows band-pass tuning. For the remaining participants, there is little evidence of visual positional shifts dissipating at high adaptor speeds. In contrast to the results presented here, Snowden (1998) reported that adaptation to a visual grating drifting at 32°/s produced little or no shift in perceived position. As the adaptor spatial frequency used by Snowden (1 cycle/deg) differed from that used in the present study (0.5 cycle/deg), we decided to repeat the experiment for two participants (RWD and LKS) using a range of spatial frequencies (0.0625 to 4 cycle/deg) in an attempt to reconcile these conflicting results. Although the range of speeds that can be implemented for a given spatial frequency is limited by the experimental setup, Fig. 7a–b shows that this manipulation produces a family of nested band-pass tuning functions. At lower speeds, the magnitude of shifts in perceived position is largely independent of spatial frequency. However, the peak and upper limits of each curve are inversely related to spatial frequency, consistent with the known falloff in spatiotemporal contrast sensitivity (Kelly 1979).
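The link between adaptor speed, spatial frequency and temporal frequency can be made explicit with a small calculation. For a drifting grating, temporal frequency is the product of speed and spatial frequency. The frame-rate ceiling used below (half the 100 Hz refresh rate) is an assumption about what limits the usable speed range; the setup constraint is not specified in detail.

```python
FRAME_RATE_HZ = 100.0  # display refresh rate quoted in the Methods


def temporal_frequency_hz(speed_deg_per_s, spatial_freq_cpd):
    """Temporal frequency of a drifting grating: speed times spatial frequency."""
    return speed_deg_per_s * spatial_freq_cpd


def max_speed_deg_per_s(spatial_freq_cpd, frame_rate_hz=FRAME_RATE_HZ):
    """Fastest drift representable without temporal aliasing (assumed limit)."""
    return (frame_rate_hz / 2.0) / spatial_freq_cpd


# The same 32 deg/s adaptor at the two spatial frequencies being compared.
print(temporal_frequency_hz(32, 0.5))   # present study
print(temporal_frequency_hz(32, 1.0))   # Snowden (1998)

for sf in [0.0625, 0.5, 1.0, 4.0]:
    print(f"{sf} cycle/deg: max speed {max_speed_deg_per_s(sf)} deg/s")
```

At a fixed speed, doubling the spatial frequency doubles the temporal frequency, which pushes the adaptor further along the high-frequency falloff in contrast sensitivity and is consistent with higher spatial frequencies peaking at lower speeds.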

Matching positional sensitivity to allow direct comparison between auditory and visual domains. Visual sensitivity (JNDs) in the absence of motion adaptation is shown, plotted as a function of the stimulus envelope size (σ). For comparison, horizontal dashed lines show baseline auditory JNDs obtained in Experiment 1

Perceived alignment of stationary visual stimuli following adaptation to visual motion over the range of adapting velocities. a–d PSE values for the localisation task following motion adaptation over the range of speeds tested for each participant. e Shifts in perceived visual position plotted in units of sensitivity (JND units) for all four participants

Experiment 3

The influence of visual motion adaptation on the perceived position of auditory localisation stimuli is shown in Fig. 8 for each of the four participants. Data are plotted in units of sensitivity. Consistent with Experiments 1 and 2, motion adaptation produced direction-specific shifts in perceived position. Furthermore, the shifts are of similar magnitude to those observed in Experiments 1 and 2 (~1.5 JND units).

The demonstration of an auditory aftereffect following adaptation to visual motion is consistent with previous findings from Kitagawa and Ichihara (2002) and Ehrenstein and Reinhardt-Rutland (1996). Kitagawa and Ichihara examined the effect of adapting to auditory and visual stimuli, moving in depth, on stationary test stimuli. Following adaptation to visual motion, the authors found that subsequently presented auditory test stimuli of fixed volume appeared to modulate in loudness. However, adapting to an auditory stimulus moving in depth had no measurable effect on static visual test stimuli. It is unclear whether this is because auditory motion adaptation does not induce visual aftereffects, or whether the lack of an aftereffect was a consequence of the paucity of motion cues present in the adapting stimulus used. Our current experimental setup does not easily allow us to resolve this issue. Adapting to auditory motion may shift both the visual test stimulus itself and the visual reference stimulus against which its position is judged. It is for this reason that we used a stable internal auditory reference (perceived midline) for our cross modal experiment. Ensuring the stability of an internal visual reference is considerably more challenging and requires that eye position is held constant. This is impossible to guarantee without the use of image stabilisation devices. The effect of auditory motion adaptation on perceived visual position is certainly an interesting question, and one that will be addressed in greater detail in future work.

Discussion

Taken together, these experiments demonstrate that adaptation to auditory motion produces shifts in the perceived position of stationary test stimuli that are comparable with those observed in the visual domain. The observed shifts in perceived position are direction specific and band-pass tuned for adaptor speed. The localisation shifts that occur following adaptation to both visual and auditory motion demonstrate some striking similarities. Apart from the fact that both are direction specific, the magnitude of each shift is similar when expressed in units of sensitivity, and shows band-pass tuning that is largely governed by adaptor speed. The shape of speed tuning for visual stimuli is governed by the spatial frequency of the adapting pattern and consists of two distinct regions. Initially, the magnitude of the motion-induced positional offset increases with adaptor speed and is essentially independent of adaptor spatial frequency. However, for each individual spatial frequency the peak offset occurs at a specific adaptor speed. Beyond this peak, stimulus visibility is reduced and the magnitude of the positional offset declines. The reduction in the size of the positional offset is entirely consistent with changes in spatiotemporal contrast sensitivity which occur as stimulus velocity is increased (Kelly 1979). The fact that auditory stimuli show similar band-pass speed tuning is somewhat surprising given the superior temporal processing properties of the auditory system. Differences in acoustic and photochemical transduction times in each sensory system set very different limits to the upper velocity of motion that can be encoded (Lennie 1981; King and Palmer 1985). Thresholds for discriminating ITDs are in the region of 9 μs (Klumpp and Eady 1956) and psychoacoustical studies demonstrate that human observers are more than capable of detecting velocities up to 360°/s (Chandler and Grantham 1992), well beyond the 32°/s upper limit of our auditory shifts in position. This suggests that the locus of our effect might lie in cortical areas with poorer temporal processing capabilities than those typically encountered in the auditory system. Further support for this notion can be drawn from our third experiment where we demonstrated cross-modal effects (adapting to visual motion and testing with spatially localised auditory stimuli) that were also direction specific and similar in magnitude.

It is well known that the perceived location of an auditory stimulus can be influenced by stationary sounds presented in other regions of auditory space. Following a period of adaptation to a stationary sound located in a particular region of the auditory field, human listeners typically misperceive the location of subsequent sounds in a direction consistent with them appearing spatially repulsed from the adapted location (Taylor 1962; Thurlow and Jack 1973; Carlile et al. 2001). These effects are thought to reflect selective adaptation of auditory neurons tuned to particular regions of auditory space. Indeed, analogous effects are also found in the visual system where adaptation to a static visual target has a marked influence upon the perceived position of subsequently presented stimuli (Whitaker et al. 1997). However, static adaptation effects of this type are extremely unlikely to explain the present findings. Although each period of auditory motion adaptation was centred on the listener’s midline, the starting position of the first motion sweep in the series was randomised on each presentation. This meant that whilst both leftward and rightward adapting sequences traversed identical spatial extents, importantly, the start and end points were randomly distributed across the adapted region of auditory space. Therefore, no single auditory location was systematically subjected to the adapting stimuli either at onset or offset. Nonetheless, we found robust direction-dependent shifts in perceived position. Clearly these results are a consequence of adaptation to coherent unidirectional motion, rather than to any individual spatial components contributing to the motion sequence.

The neural processes that mediate motion-induced positional shifts have recently been investigated in both the visual and auditory systems. Studies of neurones in the cat (Fu et al. 2004) and monkey (Sundberg et al. 2006) visual cortex have suggested that motion signals have the capacity to induce dynamic shifts in the spatial representation of receptive fields (RF). Similarly, a very recent study examining auditory neurons in the owl’s optic tectum has also demonstrated a dynamic shift of spatial RFs in the presence of auditory motion (Witten et al. 2006). Critically however, in each sensory domain, the reported RF shift is in opposite directions. In the visual studies, the induced displacement in RF spatial representation occurs in a direction opposite to that of the motion signals inducing it. At first glance this seems surprising since it is in exactly the opposite direction to behavioural measurements of motion-induced positional shifts in human subjects, where the perceived location of a stationary object is displaced in the direction of motion (Ramachandran and Anstis 1990; De Valois and De Valois 1991; Arnold et al. 2007). However, as retinotopic location is derived from a population response (e.g. a vector average code, Georgopoulos et al. 1986), in order to produce a shift in the positional average derived from all active neurons, individual neurons would need to modify their spatial profiles in the opposite direction, whilst retaining their original positional labels within the map. In this scheme, shifts in the spatial profiles of individual neurons in one direction result in a mislocalisation of the vector average response in the opposite direction (Sundberg et al. 2006). 
Although visual RF shift models are compatible with the psychophysical observations we report here, where the spatial displacement of both visual and auditory test stimuli is in the opposite direction to that of the adapting stimulus, recent attempts to replicate the observations from animal studies using human functional neuroimaging techniques (fMRI) have failed. Specifically, rather than showing a displacement in the spatial preference of cortical RFs when motion signals are present, the retinotopic map of visual space found in the primary visual cortex (V1) remains invariant when measured with stimuli moving in different directions (Liu et al. 2006).
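
The population-coding argument attributed above to Sundberg et al. (2006) can be illustrated with a toy simulation. The sketch below is not taken from any of the cited studies: the Gaussian RF profile, the 5° RF width, the 1° spacing of positional labels and the function name `decode_position` are all illustrative assumptions. Each model neuron keeps a fixed positional label while its spatial profile is displaced by a common amount, and position is read out as the response-weighted average of the labels.

```python
import math

def decode_position(stimulus_deg, rf_shift_deg, sigma_deg=5.0):
    """Vector-average readout from a bank of Gaussian receptive fields.

    All parameter values are assumptions chosen for illustration only.
    """
    labels = range(-60, 61)  # fixed positional label of each model neuron (deg)
    weighted_sum = 0.0
    total = 0.0
    for label in labels:
        # The spatial profile moves by rf_shift_deg; the label does not.
        rf_centre = label + rf_shift_deg
        response = math.exp(-((stimulus_deg - rf_centre) ** 2) / (2 * sigma_deg ** 2))
        weighted_sum += response * label
        total += response
    return weighted_sum / total

# A stimulus at 0 deg, decoded without and with a +2 deg shift of every RF.
baseline = decode_position(0.0, 0.0)
shifted = decode_position(0.0, 2.0)
print(f"no RF shift: {baseline:.3f} deg, +2 deg RF shift: {shifted:.3f} deg")
```

Under these assumptions, displacing every RF profile in one direction moves the decoded position by approximately the same amount in the opposite direction, because the neurons now responding most strongly to the stimulus carry labels on the other side of its true location.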

The RF shifts reported in the auditory domain, on the other hand, are qualitatively different to their visual counterparts (Witten et al. 2006). That is, the receptive field is displaced in the direction of motion, and presumably in the direction opposite to this following a period of adaptation, though this has not been explicitly tested. Furthermore, in the auditory domain, the behavioural and neurophysiological measures differ considerably in their speed tuning. We show that auditory motion-induced shifts in perceived location are band-pass tuned for speed, becoming minimal by 32°/s. In contrast, auditory motion-induced RF shifts recorded in the owl tectum are about twice as large and increase systematically as a function of speed, reaching a maximum value by 32°/s (Witten et al. 2006). Given this difference in operational characteristics it seems likely that these motion-induced shifts serve different functional roles. Witten and colleagues argue that the speed-dependent nature of their RF shifts suggests they play an important role in adaptively compensating for unavoidable processing delays that are inherent to the analysis of moving objects. The band-pass tuning of our behavioural effects is obviously inconsistent with such a role. Moreover, auditory RF shifts are mediated by a displacement of both edges of the receptive field (Witten et al. 2006), whilst motion-induced spatial misperceptions measured psychophysically, in the visual domain at least, are brought about by changes in apparent contrast at only one edge of the stimulus (Arnold et al. 2007; Tsui et al. 2007). Clearly, the physiological processes that mediate motion-induced positional shifts remain uncertain. What we do know is that despite reported differences in the underlying neurophysiology gathered from different species, the perceived displacement of stationary visual and auditory stimuli following motion adaptation is very similar in humans.

Within the visual domain, virtually every strand of previous research places the locus of interaction between motion and position at the level of area V5/MT. Adaptation-induced positional shifts display a lack of specificity for basic stimulus properties such as spatial frequency, orientation and contrast, consistent with adaptation occurring at this level (McGraw et al. 2002; Whitney 2005). In addition, disrupting ongoing cortical activity with transcranial magnetic stimulation immediately after motion adaptation dramatically reduces the magnitude of the perceived spatial shifts when the stimulation is delivered to V5/MT, but has little or no effect when it is delivered to earlier cortical areas (V1) (McGraw et al. 2004). As yet, no auditory analogue of V5/MT has been firmly established. Candidate areas that have been investigated include the planum temporale (PT) (Baumgart et al. 1999), the premotor cortex (PMC) (Griffiths et al. 2000) and right parietal cortex (rPC) (Griffiths et al. 1998). However, most studies that have implicated these areas have contrasted neuronal activity associated with auditory motion with that of stationary control stimuli. Such a comparison is problematic, since selective cortical activity might still simply represent a response to source location, or changes therein, rather than motion per se. Indeed, when coherent motion is compared with a more appropriate control, which presents stationary stimuli that randomly change location over time, neuronal activity in areas such as PT and rPC is very similar, arguing against each being the seat of a specialised auditory motion area (Smith et al. 2004; Smith et al. 2007).

The inability to identify a dedicated auditory motion complex is not incompatible with the existence of a specialised auditory motion processing system. The visual and auditory systems may simply display differences in the spatial concentration of direction-selective neurons. In area V5/MT virtually all neurons are direction selective, whereas in AI motion-selective neurons are typically interspersed with non-motion-selective neurons (Ahissar et al. 1992). The fact that motion-selective neurons are fewer in number and dispersed over wider cortical areas may make any auditory motion processing system more difficult to detect using current neuroimaging techniques. An alternative and intriguing possibility is that auditory positional shifts are mediated by cortical areas traditionally thought to analyse visual motion. One candidate is area V5/MT, which has been shown to be active when subjects are presented with both auditory (Poirier et al. 2005) and tactile (Hagen et al. 2002) motion. Such a mechanism might explain our results by updating auditory position with respect to ongoing motion activity in the visual cortex. Results from our third experiment are consistent with this possibility, as the magnitude of auditory position shifts is similar regardless of whether participants adapt to visual or to auditory motion. However, alternative interpretations are possible. A recent investigation has shown that another form of auditory aftereffect (loudness aftereffect), resulting from exposure to radial visual motion, is mediated by a high-level attention-guided integrative spatial mechanism that operates between the visual and auditory systems (Hong and Papathomas 2006).

The existence of a specialised auditory motion processing system remains a matter of some debate. Psychoacoustical studies have produced conflicting evidence. Some support a system that infers motion direction and speed from a series of positional estimates accumulated over time—the so-called snapshot hypothesis (Grantham 1986). Others point to the reliable perception of motion properties such as acceleration and deceleration, where the time and distance travelled by motion stimuli are equated, as evidence for more sophisticated motion processing than that offered by a simple position-based mechanism (Perrott and Marlborough 1989; Perrott et al. 1992). Our results are clearly more compatible with the latter proposal. In the visual domain it is well established that motion detectors can be influenced by adaptation and this process results in a variety of perceptual aftereffects, such as the motion aftereffect (Barlow and Hill 1963) and adaptation-induced changes in spatial position (Snowden 1998; McGraw et al. 2002; Whitney and Cavanagh 2003; McGraw et al. 2004; Whitney 2005). However, systems that track features over time are not thought to induce such effects in static test stimuli (Anstis 1980; Badcock and Derrington 1985). Here we show that the perceived location of static auditory stimuli is directly modified by motion adaptation. We also show that these effects share similar properties with their visual counterparts. These results add to a growing literature highlighting considerable overlap in the processing mechanisms that encode visual and auditory motion.

References

Ahissar M, Ahissar E, Bergman H, Vaadia E (1992) Encoding of sound-source location and movement: activity of single neurons and interactions between adjacent neurons in the monkey auditory cortex. J Neurophysiol 67:203–215

Albright TD, Desimone R (1987) Local precision of visuotopic organization in the middle temporal area (MT) of the macaque. Exp Brain Res 65:582–592

Albright TD, Stoner GR (1995) Visual motion perception. Proc Natl Acad Sci USA 92:2433–2440

Altman JA (1968) Are there neurons detecting direction of sound source motion? Exp Neurol 22:13–25

Altman JA, Syka J, Shmigidina GN (1970) Neuronal activity in the medial geniculate body of the cat during monaural and binaural stimulation. Exp Brain Res 10:81–93

Anstis SM (1980) The perception of apparent motion. Philos Trans R Soc Lond B Biol Sci 290:153–168

Arnold DH, Thompson M, Johnston A (2007) Motion and position coding. Vision Res 47:2403–2410

Badcock DR, Derrington AM (1985) Detecting the displacement of periodic patterns. Vision Res 25:1253–1258

Barlow HB, Hill RM (1963) Evidence for a physiological explanation of the waterfall phenomenon and figural after-effects. Nature 200:1345–1347

Baumgart F, Gaschler-Markefski B, Woldorff MG, Heinze H, Scheich H (1999) A movement sensitive area in auditory cortex. Nature 400:724–726

Békésy GV (1960) Experiments in hearing. McGraw Hill, New York

Carlile S, Hyams S, Delaney S (2001) Systematic distortions of auditory space perception following prolonged exposure to broadband noise. J Acoust Soc Am 110:416–424

Chandler DW, Grantham DW (1992) Minimum audible movement angle in the horizontal plane as a function of stimulus frequency and bandwidth, source azimuth, and velocity. J Acoust Soc Am 91:1624–1636

De Valois RL, De Valois KK (1991) Vernier acuity with stationary moving gabors. Vision Res 31:1619–1626

Dong C, Swindale NV, Zakaruskas P, Hayward V, Cynader MS (2000) The auditory motion aftereffect: its tuning and specificity in the spatial and frequency domains. Percept Psychophys 62:1099–1111

Ehrenstein WH (1994) Auditory aftereffects following simulated motion produced by varying interaural intensity or time. Perception 23:1249–1259

Ehrenstein WH, Reinhardt-Rutland AH (1996) A cross-modal aftereffect: auditory displacement following adaptation to visual motion. Percept Mot Skills 82:23–26

Fu Y, Shen Y, Gao H, Dan Y (2004) Asymmetry in visual cortical circuits underlying motion-induced perceptual mislocalization. J Neurosci 24:2165–2171

Georgopoulos AP, Schwartz AB, Kettner RE (1986) Neuronal population coding of movement direction. Science 233:1416–1419

Grantham DW (1986) Detection and discrimination of simulated motion of auditory targets in the horizontal plane. J Acoust Soc Am 79:1939–1949

Grantham DW (1989) Motion aftereffects with horizontally moving sound sources in the free field. Percept Psychophys 45:129–136

Grantham DW (1998) Auditory motion aftereffects in the horizontal plane: the effects of spectral region, spatial sector and spatial richness. Acta Acustica 84:337–347

Grantham DW, Leuthke LE (1988) Detectability of tonal signals with changing interaural phase differences in noise. J Acoust Soc Am 83:1117–1123

Grantham DW, Wightman FL (1979) Auditory motion aftereffects. Percept Psychophys 26:403–408

Griffiths TD, Green GGR, Rees A, Rees G (2000) Human brain areas involved in the analysis of auditory movement. Hum Brain Mapp 9:72–80

Griffiths TD, Rees G, Rees A, Green GGR, Witton C, Rowe D, Büchel C, Turner R, Frackowiak RSJ (1998) Right parietal cortex is involved in the perception of sound movement in humans. Nat Neurosci 1:74–78

Hagen MC, Franzen O, McGlone F, Essick G, Dancer C, Pardo JV (2002) Tactile motion activates the human middle temporal/V5 (MT/V5) complex. Eur J Neurosci 16:957–964

Harris JD, Sargeant RL (1971) Monaural-binaural minimum audible angles for a moving sound source. J Speech Hear Res 14:618–629

Hong J, Papathomas TV (2006) Influences of attention on auditory aftereffects following purely visual adaptation. Spat Vis 19:569–580

Jenison RL, Schnupp JWH, Reale RA, Brugge JF (2001) Auditory space-time receptive field dynamics revealed by spherical white-noise analysis. J Neurosci 21:4408–4415

Kelly DH (1979) Motion and vision. II. Stabilized spatio-temporal threshold surface. J Opt Soc Am 69:1340–1349

King AJ (1993) A map of auditory space in the mammalian brain: neural computation and development. Exp Physiol 78:559–590

King AJ, Palmer AR (1985) Integration of visual and auditory information in bimodal neurones in the guinea-pig superior colliculus. Exp Brain Res 60:492–500

Kitagawa N, Ichihara S (2002) Hearing visual motion in depth. Nature 416:172–174

Klumpp RG, Eady HR (1956) Some measurements of interaural time difference thresholds. J Acoust Soc Am 28:859–860

Lennie P (1981) The physiological basis of variations in visual latency. Vision Res 21:815–824

Liu J, Newsome WT (2003) Functional organization of speed tuned neurons in visual area MT. J Neurophysiol 89:246–256

Liu JV, Ashida H, Smith AT, Wandell BA (2006) Assessment of stimulus-induced changes in human V1 visual field maps. J Neurophysiol 96:3398–3408

Maffei L, Fiorentini A, Bisti S (1973) Neural correlate of perceptual adaptation to gratings. Science 182:1036–1038

Maunsell JH, Van Essen DC (1983) The connections of the middle temporal visual area (MT) and their relationship to a cortical hierarchy in the macaque monkey. J Neurosci 3:2563–2586

McGraw PV, Whitaker D, Skillen J, Chung ST (2002) Motion adaptation distorts perceived visual position. Curr Biol 12:2042–2047

McGraw PV, Walsh V, Barrett BT (2004) Motion-sensitive neurones in V5/MT modulate perceived spatial position. Curr Biol 14:1090–1093

Middlebrooks JC, Green DM (1991) Sound localization by human listeners. Annu Rev Psychol 42:135–159

Neelon MF, Jenison RL (2003) The effect of trajectory on the auditory motion aftereffect. Hear Res 180:57–66

Neelon MF, Jenison RL (2004) The temporal growth and decay of the auditory motion aftereffect. J Acoust Soc Am 115:3112–3123

Perrone JA, Thiele A (2001) Speed skills: measuring the visual speed analyzing properties of primate MT neurons. Nat Neurosci 4:526–532

Perrott DR, Marlborough K (1989) Minimum audible movement angle: marking the end points of the path traveled by a moving sound source. J Acoust Soc Am 85:1773–1775

Perrott DR, Constantino B, Ball J (1992) Discrimination of moving events which accelerate or decelerate over the listening interval. J Acoust Soc Am 93:1053–1057

Poirier C, Collignon O, DeVolder AG, Renier L, Vanlierde A, Tranduy D, Scheiber C (2005) Specific activation of the V5 brain area by auditory motion processing: an fMRI study. Brain Res Cogn Brain Res 25:650–658

Pralong D, Carlile S (1996) Generation and validation of virtual auditory space. In: Carlile S (ed) Virtual auditory space: generation and applications. Landes, Austin, pp 109–152

Priebe NJ, Cassanello CR, Lisberger SG (2003) The neural representation of speed in macaque area MT/V5. J Neurosci 23:5650–5661

Ramachandran VS, Anstis SM (1990) Illusory displacement of equiluminous kinetic edges. Perception 19:611–616

Rayleigh L (1907) On our perception of sound direction. Philos Mag 13:214–232

Reinhardt-Rutland AH (1992) Changing-loudness aftereffect following simulated movement: implications for channel hypotheses concerning sound level change and movement. J Gen Psychol 119:113–121

Smith KR, Okada K, Saberi K, Hickok G (2004) Human cortical auditory motion areas are not motion selective. Neuroreport 15:1523–1526

Smith KR, Saberi K, Hickok G (2007) An event-related fMRI study of auditory motion perception: No evidence for a specialized cortical system. Brain Res 1150:94–99

Snowden RJ (1998) Shifts in perceived position following adaptation to visual motion. Curr Biol 8:1343–1345

Sundberg K, Fallah M, Reynolds J (2006) A motion-dependent distortion of retinotopy in area V4. Neuron 49:447–457

Taylor MM (1962) The distance paradox of the figural after-effect in auditory localization. Can J Psychol 16:278–282

Thurlow WR, Jack CE (1973) Some determinants of localization-adaptation effects for successive auditory stimuli. J Acoust Soc Am 53:1573–1577

Toet A, Koenderink JJ (1988) Differential spatial displacement discrimination thresholds for gabor patches. Vision Res 28:133–143

Tsui SY, Khuu SK, Hayes A (2007) The perceived position shift of a pattern that contains internal motion is accompanied by a change in the pattern’s apparent size and shape. Vision Res 47:402–410

Van Essen DC, Maunsell JHR (1983) Hierarchical organization and functional streams in the visual cortex. Trends Neurosci 6:370–375

Van Wezel RJA, Britten KH (2002) Motion adaptation in area MT. J Neurophysiol 88:3469–3476

Warren JD, Zielinski BA, Green GGR, Rauschecker JP, Griffiths TD (2002) Perception of sound-source motion by the human brain. Neuron 34:139–148

Whitaker D, McGraw PV, Levi DM (1997) The influence of adaptation on perceived visual location. Vision Res 37:2207–2216

Whitaker D, Bradley A, Barrett BT, McGraw PV (2002) Isolation of stimulus characteristic contributing to Weber’s law for position. Vision Res 42:1137–1148

Whitney D (2005) Motion distorts perceived position without awareness of motion. Curr Biol 15:R324–R326

Whitney D, Cavanagh P (2003) Motion adaptation shifts apparent position without the motion aftereffect. Percept Psychophys 65:1011–1018

Whitney D, Goltz HC, Thomas CG, Gati JS, Menon RS, Goodale MA (2003) Flexible retinotopy: motion-dependent position coding in the visual cortex. Science 302:878–881

Wightman F, Kistler D (2005) Measurement and validation of human HRTFs for use in hearing research. Acta Acustica 91:429–439

Wilcott RC, Gales RS (1954) Comparison of the masked thresholds of a simulated moving and stationary auditory signal. J Exp Psychol 26:136

Witten IB, Bergan JF, Knudsen EI (2006) Dynamic shifts in the owl’s auditory space map predict moving sound location. Nat Neurosci 9:1439–1445

Xiao X, Grantham DW (1997) The effect of a free-field auditory target’s horizontal motion on its detectability. J Acoust Soc Am 102:1907–1910

Zeki SM (1974) Functional organization of a visual area in the posterior bank of the superior temporal sulcus of the rhesus monkey. J Physiol (Lond) 236:549–573

Acknowledgments

This work was funded by The Wellcome Trust, UK. We are grateful to Bose Ltd., Knowles Europe and Oto-tech Limited for providing equipment for this experiment.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Cite this article

Deas, R.W., Roach, N.W. & McGraw, P.V. Distortions of perceived auditory and visual space following adaptation to motion. Exp Brain Res 191, 473–485 (2008). https://doi.org/10.1007/s00221-008-1543-1

DOI: https://doi.org/10.1007/s00221-008-1543-1