Abstract

Summary

Fracture risk assessments on bone mineral density reports guide family physicians’ treatment decisions but are subject to inaccuracy. Qualitative analysis of interviews with 22 family physicians illustrates their pervasive questioning of reported assessment accuracy and independent assumption of responsibility for assessment. Assumption of responsibility is common despite duplicating specialists’ work.

Introduction

Fracture risk is the basis for recommendations of treatment for osteoporosis, but assessments on bone mineral density (BMD) reports are subject to known inaccuracies. This creates a complex situation for referring physicians, who must rely on assessments to inform treatment decisions. This study was designed to broadly understand physicians’ current experiences with and preferences for BMD reporting; the present analysis focuses specifically on their interpretation and use of the fracture risk assessments on reports.

Methods

A qualitative, thematic analysis of one-on-one interviews with 22 family physicians in Ontario, Canada was performed.

Results

The first major theme identified in interview data reflects questioning by family physicians of reported fracture risk assessments’ accuracy. Several major subthemes related to this included questioning of: 1) accuracy in raw bone mineral density measures (e.g., g/cm²); 2) accurate inclusion of modifying risk factors; and 3) the fracture risk assessment methodology employed. A second major theme identified was family physicians’ independent assumption of responsibility for risk assessment and its interpretation. Many participants reported that they computed risk assessments in their practice to ensure accuracy, even when provided with assessments on reports.

Conclusions

Results indicate family physicians question accuracy of risk assessments on BMD reports and often assume responsibility both for revising and relating assessments to treatment recommendations. This assumption of responsibility is common despite the fact that it may duplicate the efforts of reading physicians. Better capture of risk information on BMD referrals, quality control standards for images and standardization of risk reporting may help attenuate some inefficiency.

Introduction

In recent years, assessment of fracture risk has emerged as the basis for treatment recommendations for osteoporosis. The 2010 Osteoporosis Canada guidelines explicitly recommend that physicians who refer patients for a bone mineral density (BMD) test make decisions about pharmacological therapy based on risk [1]. Organizations like the International Society for Clinical Densitometry (ISCD) [2] and the Canadian Association of Radiologists (CAR) [3] recommend that these risk assessments appear, when they are applicable, on BMD reports.

However, assessment of risk is nuanced for several reasons. Most significantly, risk assessment depends on information above and beyond raw BMD results [1]. Specialists who generate BMD reports must attend to additional risk factors, such as corticosteroid and fragility fracture history, in order to ensure risk assessment accuracy [4]. These additional risk factors, however, may not readily be available to reading specialists, resulting in assessments that are based on incomplete information. As an example, in a 2008 survey of BMD reports for individuals with a known history of fragility fracture, more than 50 % of the reports made no mention of previous fracture; reported risk assessments were underestimated on these reports as a result [5]. A 2006 survey of more than 700 clinician members of the ISCD found that 71 % had seen BMD reports with interpretation errors; 25 % reported that they saw errors more than once a week [6].

In addition to their dependence on modifying clinical factors, fracture risk assessments are complicated by the fact that they can be computed using a variety of heuristics from different clinical practice guidelines. A recent systematic review of fracture assessment methods identified 12 different externally validated fracture risk assessment tools available for use [7]. The Canadian Association of Radiologists (CAR) has sanctioned the use of the Canadian Association of Radiologists and Osteoporosis Canada tool (CAROC) for fracture risk assessment in the Canadian population [8], but a commonly used and similarly sanctioned alternative is the Canadian version of the FRAX assessment tool [9].

An accurate risk assessment therefore requires the reporting clinician not only to accurately image bone but also to accurately determine modifying clinical factors corresponding to an assessment heuristic; these are independent of imaging results. This makes BMD reporting a relatively time-consuming process, as clinical factors must be both gathered and verified by imaging facilities. Reimbursement for the time to create BMD reports, however, has been increasingly scrutinized both in the US [10] and Canada [11]. While this scrutiny has curbed rates of potentially unnecessary BMD testing in Ontario [12], it may also be encouraging diagnostic imaging facilities to limit the resources dedicated to the BMD reporting process. Indeed, while detail-rich “consultative reports” have been found to be both appreciated by reading physicians and to positively influence care [13], they are understood to be time-consuming to produce and are therefore vulnerable to funding limitations.

The purpose of this study was to understand family physicians’ current experiences with and preferences for BMD reporting in Ontario, Canada [1]; the present analysis focuses on their experiences receiving and interpreting the fracture risk assessments on reports, specifically. In Ontario, Canada, the majority of BMD tests are ordered by family physicians.

Materials and methods

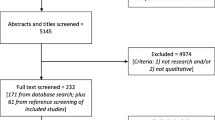

Study design and overview

In order to identify BMD-reporting issues commonly encountered by referring physicians, a qualitative methodology was used involving thematic analysis of data from one-on-one interviews [14]. The purpose of the interviews was to yield rich, but simple descriptions of problems and issues encountered so that recommendations for BMD referrals and reports could be adjusted accordingly. While interviews covered a broad range of topics related to reporting and referral, results presented here focus on findings related to the interpretation and use of fracture risk assessments, in particular. The pragmatic nature of the work draws from qualitative description, which is a qualitative research approach known to yield practical answers of relevance to policy makers and health practitioners [15, 16].

Recruitment

Family physicians were recruited between November 2011 and June 2012 through an event on osteoporosis held by the Ontario College of Family Physicians. Recruited physicians were asked to identify additional physicians who might be interested in participating; these additional physicians were also approached to participate as the study progressed [17]. Requirements for participation were that the physicians be English-speaking, in active family practice in Ontario, and have a history of ordering and receiving results of BMD tests for patients. Approval for the study was obtained from the Research Ethics Board at Women’s College Research Institute (Protocol Reference #2008-0064-E).

Recruitment ceased when the researchers determined that data saturation had been reached, that is, the point at which successive interviews became repetitive and no significant new responses or major themes emerged [17].

Data collection

Interviews consisted of questions related to the following topic areas: (1) ordering or referring for BMD exams; (2) interpreting and utilizing BMD exam results; and (3) communicating results to patients. Probes for each topic area were developed to explore issues in depth and to verify interviewers’ understanding of the information being collected. The interview guide was pilot tested with both a family physician and a researcher experienced in qualitative research in osteoporosis (JS).

Two members of the research team (SM, LC) were responsible for conducting interviews. All interviews took place either in person or over the telephone. Interviews lasted an average of 45 min, were digitally recorded, and were subsequently transcribed. All interviews closed with questions designed to capture basic demographic information about each participant, including roster size and the location of practice.

Data analysis

To summarize the data, an inductive thematic analysis of transcripts was performed [18]. To do this, the researchers periodically met to review incoming interview data and become familiar with the themes of interviews as they were being consolidated. Two of the researchers (SM, LC) labeled or “coded” transcripts so as to highlight themes; the themes were then clustered and clusters refined as analysis progressed. To facilitate coding and reflection upon themes, notes from interviews and transcripts were analyzed. NVivo 9 (QSR, Victoria, Australia), a qualitative data storage software package, was used to facilitate both coding and analysis of transcripts.

Results

Study participants

Twenty-two family physicians with practices located in urban or suburban Ontario participated in interviews. Of these, five were from the Ontario College of Family Physicians’ event on osteoporosis, and the remainder were identified by prior participants through iterative sampling. The mean roster size of the physicians was 1,208, and participants reported seeing an average of four BMD reports in a week.

Overview of findings

“Fracture risk” was identified early in the analysis as an organizing theme bridging several clusters of codes and spanning many topic areas (i.e., referral, report interpretation, and report communication). Under the umbrella of this theme, two major subthemes were developed: (1) questioning by family physicians of reported fracture risk assessments’ accuracy and (2) family physicians’ independent assumption of responsibility for risk assessment and its interpretation. Results that illustrate these themes were organized by the lead author (SA) and verified by some members of the research team (SA, SM, LC, and SJ).

Questioning by family physicians of reported fracture risk assessments’ accuracy

During interviews, the majority of family physicians indicated that they questioned the accuracy of the risk assessments on BMD reports. The specific manner in which the accuracy of fracture risk assessments was questioned, however, varied significantly. Three major subcategories were identified in an effort to capture this variation; these reflected questioning by family physicians of: (1) accuracy in raw bone mineral density measures (e.g., g/cm²); (2) accurate inclusion of modifying risk factors; and (3) the fracture risk assessment methodology employed.

Among physicians that questioned accuracy of raw bone mineral density measures, some identified technical factors as probable sources of error while others identified factors associated with interpretation of images with artifacts. Accuracy concerns related to technical factors were, in general, very broadly described by participants and related either to antiquated or ill-maintained equipment. As an example, Participant #7 had several patients call a particular scanning facility “dirty,” and added:

“…because it was a private [facility], I’m not really sure how old the scanner was. I mean, if patients complain the place isn’t clean, you kind of wonder about the equipment.”

Participant #19 also questioned the accuracy of raw BMD measures on reports, but located a probable source of error in the interface between the BMD scanning machine and reports. This participant described skimming attachments to reports to verify raw BMD data:

“I do skim the actual absolute numbers because sometimes… you know, we’re all human. Sometimes they report [raw BMD]… incorrectly.”

Other participants, by contrast, identified compromising issues related to the clinical interpretation of raw BMD measures, particularly for patients with osteoarthritis or manifest degenerative change in the spine. As Participant #2 explained:

“I say ‘this patient has osteoarthritis, I don’t care what the report tells me’…. [On the report] the bone density is normal and people will say ‘but I’m normal!’ I say ‘no, but you fractured so you’re not normal’. This is my problem with bone density.”

Rather than commenting on inaccuracy due to artifacts, Participant #7 commented on inaccuracy due to inappropriate choices of regions of interest:

“Some facilities… do not report the femoral neck T-score at all. They report total hip. If you don’t have your eyes open and look carefully, you could use the wrong T-score. I have to go through the subsequent pages and find femoral neck…”

A more substantial number of participants, however, focused their questioning around reports’ inclusion of modifying risk factor information. Participants pointed specifically to the fact that age constraints, fracture history, treatment status, or other clinical variables were often missing from the BMD reports they received. Participant #18 explained:

“Sometimes they’re wrong…. I’ve seen people not put in fractures [on reports] when I know that [the patient] had them.”

Similarly, Participant #13 noted missing risk factors on reports, as well as missing assessments on reports, where fracture risk was applicable:

“Risk factors are not incorporated into reports consistently…. If you look at the various reports, there are some whereby they’re still reporting just the T-scores.”

Opinions regarding why and how modifying risk factors might be incorrectly factored into assessments varied. Three participants explained that, when patients are asked about risk factors, the resulting information is generally low in quality. As an example of this, Participant #6 described a study in which a resident compared risk factors reported by patients on patient questionnaires to clinical data stored in patient charts:

“It was kind of interesting that even when you get patients to fill [the questionnaires], a lot of times they’re either incomplete or they’re actually not accurate.”

Several physicians, however, indicated that they felt specific clinicians played a role in inaccurate capture of modifying patient risk factors. For example, a few family physicians questioned technologists’ ability to gather accurate or relevant information about modifying risk factors.

“It really depends on who’s taking the [patient] history at the bone density reporting facility in terms of the consistency of the information that you’re going to get from the patient, because not all fractures are fragility fractures. You need a… technologist educated around fragility fractures and other risk factors.” (Participant #13)

Participant #18 also focused questioning around technologists’ ability to accurately collect patient information:

“[Technologists] don’t always have stuff about falls. Sometimes they’re wrong…. you know, someone’s forgotten they’ve had a fracture or they think they had a fracture when they actually didn’t or something like that. There’s a whole lot of different kinds of things that make you just kind of wonder if they really know the person… like, we probably know them better.”

Like Participant #18, many of the interviewed family physicians identified themselves as better sources of risk factor information than the clinicians at scanning facilities. Participant #8, for example, posited that:

“[Reports] will have comments…. ‘this person just recently had a fracture’, because they asked through the history. But I know a little bit more.”

To address the information gap between the family physicians and the radiologists, Participant #19 described modifying the standard BMD requisitions in an effort to better communicate risk factors to reading radiologists:

“I provide whatever I think they need [on the requisition] instead of just circling high risk. My radiology colleagues and friends, they often go like ‘yeah we are blind’… because the radiologists don’t always have a chance to see the patient.”

A relatively small number of participants, by contrast, felt that modifying risk factor information assembled by BMD scanning facilities might actually be more trustworthy than the same information assembled by family doctors. The reasons for this revolved around the hectic pace of family doctors’ offices as well as the relative unfamiliarity of many family physicians with relevant risk factors. As an example, Participant #21 noted, “if the office is crazy then it may be hard for us to look at all the risk factors.” Participant #11 explained that older family doctors, in particular, may not be familiar with all the modifying risk factors emphasized by recent clinical guidelines [1]. This participant volunteered that, upon returning to practice after a hiatus:

“I had a conversation with my residents and my medical students about [recent guidelines] and… it wasn’t anything new to them. It was relatively new to me. I think people in my generation, the message isn’t getting out… I’m going to have to look [the new guidelines] up a few times [before] it’s going to be stuck in my brain.”

As illustrated above, questioning of reported risk accuracy related to raw BMD measures or missing modifying factors was extensively represented in the interview data. In addition, a relatively small but rich subcategory of questioning focused on fracture risk assessment methodologies. This encapsulated both questioning of the CAROC as a potentially incomplete or excessively abbreviated assessment tool and questioning of fracture risk assessments’ application to older patients with multiple, complex conditions. As an example, two participants (#7 and #3) expressed a basic skepticism of the CAROC assessment tool due to the fact that it incorporates a limited number of modifying risk factors relative to competing assessment tools, like the FRAX. As Participant #7 explained:

“The CAROC doesn’t incorporate the smoking or the alcohol or the parental hip fracture or whatever. It only incorporates glucocorticoids and a previous fracture…. They said it’s just as good [as the FRAX], but it’s not.”

Questioning of fracture assessment methodology also focused on assessment tools’ applicability to older, more “fragile” individuals and those with multiple conditions. Examples of this variety of questioning follow:

“My patient population is 75 plus. The amount of research that goes into just baseline figuring out what the prognosis is of someone at the age of 75, 80 or 85 is nonexistent. They’re excluded from almost every randomized controlled trial. So I’m not sure what business we have of prognosticating 10-year risks on people over the age of 80…. 10-year risks are ridiculous. Nobody knows what’s going to happen 10 years down the line.” (Participant #17)

“If the person is 85 and they’re likely not to live more than a few years, maybe it doesn’t matter if the risk is high over 10 years.” (Participant #15)

In summary, while questioning of the accuracy of the components used to produce risk assessments (i.e. raw measures of BMD and information about modifying factors) was pervasive, a small but vocal group of physicians also questioned the basic methodology used to arrive at 10-year fracture risk assessments. Some felt the CAROC, which is commonly used by radiologists in Canada, to be incomplete or inferior to the FRAX while others felt the 10-year horizon on risk assessments to be inapplicable to their older patients, in particular.

Family physicians’ independent assumption of responsibility for risk assessment and its interpretation

Many family physicians also described independently taking steps either to recompute reported assessments entirely or to verify components of fracture risk. While only a few physicians reported exhaustively recomputing fracture risk assessments, a relatively large number reported verifying elements like raw BMD measures or modifying factors. Others described recomputing assessments using tools like the FRAX, but only when practical constraints allowed.

As examples of physicians that regularly recomputed reported fracture risks in their entirety, Participants #3 and #7 both described deriving FRAX assessments using reported BMD. Participant #7, for example, reported scanning BMD reports to find femoral neck T-scores and extracting these to “put in [the] FRAX.” While Participant #7 acknowledged that this practice added substantial time to the reporting process, the participant strongly asserted the need for family physicians to dedicate this additional time in order to ensure reporting accuracy:

“[A fracture risk assessment] does take time to work out; I’m not going to pretend it doesn’t. But… we need to focus on the main priorities in medicine. When osteoporosis kills more people than so many other major diseases like strokes and heart attacks and even breast cancer, really there’s no excuse to say ‘I don’t have the time to do a FRAX or a CAROC’.”

Similarly, Participant #3 described regularly calculating FRAX assessments for patients using the BMD measures contained in reports. This participant stressed recomputation to be particularly important for patients labeled “at risk:”

“If [the report] is at moderate and also if it’s at high risk, I would still want to confirm it’s at high risk by doing a FRAX score…. I basically just go into it and do the calculation when I’m presented with the BMD.”

Like Participant #7, Participant #3 noted that the practice of recomputing assessments added time-consuming steps to the task of report interpretation, explaining: “I have to put the numbers in [to the FRAX calculator], get the calculation, print it and then scan it into the EMR.”

Participant #13 also described recomputation of risk as a routine:

“I don’t trust the actual summaries [on BMD reports] anymore. I still look at the T-scores. I still use the CAROC method for assessing risk.”

And like Participant #7, this participant emphasized the need for family physicians to assume assessment responsibility despite practical constraints:

“I liken [10-year fracture risk assessment] to the Framingham Risk Assessment that we’ve been doing for years. I don’t think anything of getting the cholesterol back and then punching in the other risk factors… and coming up with an assessment. Assessing 10-year fracture risk is really no different than that. Rather than a cholesterol value, you’ve got a T-score, you know.”

Other physicians, by contrast, did not describe consistently dedicating the time to completely recompute fracture risk assessments. Rather, a substantial number reported verifying components of reported risk assessments (generally modifying risk factors). For example, Participant #8 detailed the steps taken to ensure consistent inclusion of risk factors on reports:

“What I am doing now and I find it very helpful is I copy the 10-year risk factors, I throw them in the chart, and then every time I plot them as well. The first time [a patient gets a BMD] I’ll put all their risk factors, the minor and the major. [I] tick [risk factors] off and see on the next one… if it’s changed.”

Participants #5 and #10 similarly described consulting charts after receiving a BMD exam to validate modifying risk factors; as Participant #10 explained:

“I pull the chart. Sometimes I know the patient well and I sort of say, ok, I don’t remember anything like that… so I don’t bother looking. If I don’t know the patient well I pull [the chart] and start looking through it quickly, to make sure that there are no fractures that I can find.”

Participants #14 and #20, by contrast, explained that they would sometimes recalculate assessments, but relatively infrequently. As Participant #20 explained:

“I will plug things into the FRAX… but it takes a little time, so I usually don’t.”

This same participant expressed mixed feelings about assuming responsibility for risk assessment calculations, explaining: “I should [do the FRAX] because I know it’s there. I don’t know, there is no good reason why I’m not using it.”

In total, more than half of the participants indicated that they either recomputed reported risk assessments or took steps to verify risk assessments in some fashion. Those that made it a practice of routinely and exhaustively recomputing risk assessments acknowledged the process to be time-consuming, but most shared a feeling that dedication of the time was a responsibility. Other participants reported less time-consuming methods to verify components of assessments or to gauge overall accuracy. The sense of personal responsibility for ensuring assessment accuracy was less forcefully expressed in this group.

Those physicians that routinely recomputed assessments also tended to assume sole responsibility for arriving at treatment recommendations. Several in the group, in fact, described their ideal reports as devoid of treatment recommendations. Examples follow:

“For me, ideally, one page is fine. It means less scanning. Just the T-scores of the spine and the femoral neck and that’s it because I do my own fracture risk anyway.” (Participant #7)

“The way the report is [right now], it doesn’t help me to make the decision about treatment… [but] I’m not saying it doesn’t help me. I need the T-score from it.” (Participant #3)

Participant #3, however, acknowledged that treatment recommendations were theoretically useful on reports for “at-risk” patients:

“What you should be sending me is ‘high risk should mean a patient should be treated’… moderate risk, help us out a bit, [say] ‘treat or don’t treat’, you know.”

Participants who described less exhaustive verification of risk assessments rather than overt recomputation, by contrast, expressed more mixed opinions as to the value of treatment recommendations on reports. Participant #19, for example, expressed appreciation for recommendations as follows:

“If they dictate a recommendation to start something based on what they see, it’s great… whether it’s a reminder or because they read so much they know the guidelines better or something. At least then it’s like a checkmark for us, too, so it sort of helps share the care.”

Participant #18, however, who described validating risk assessments, claimed that in treatment recommendations:

“… I see a lot of variations in what I think are really basically the same clinical scenario and I get different recommendations from two different people. So [the recommendations] don’t really make sense to me.”

Similarly, Participant #5, who described consulting charts to verify risk, asked of reading radiologists:

“Don’t recommend anything to me. I’m the one who has to make the judgment as to if a patient should be treated and with what.”

In summary, participants that reported routinely recomputing risk assessments in their entirety also described assuming responsibility for treatment recommendations; many of these same individuals explained that they relied on the BMD report for T-scores alone. Some, however, added that treatment recommendations would theoretically be of use on reports, particularly for patients categorized as ‘at risk’. By contrast, most participants that either verified parts of assessments or recomputed them only on occasion were less dismissive of treatment recommendations. While a few claimed not to value treatment recommendations, others described responsibility for treatment recommendations as shared between them and the reading radiologist. Many in this latter group explained that they would weigh radiologists’ treatment recommendations, even while they sometimes questioned the accuracy of radiologists’ overall assessments.

Discussion

Questioning by family physicians of reported fracture risk assessments’ accuracy

In the current qualitative study of family physicians, questioning of fracture risk accuracy on reports was pervasive with three underlying themes: questioning of raw BMD measures/images, accurate inclusion of risk factors, and the validity of specific fracture risk assessment tools.

Results here indicate substantial concern among family physicians as to the raw image quality of bone mineral density exams. This reflects the pervasive concern about BMD report quality documented in a 2006 survey of ISCD members [6] and the frequency of positioning errors, particularly at the spine and hip [19]. The ISCD has, in fact, developed an accreditation program for facilities that addresses several of the imaging quality concerns raised by participants here [20, 21]. Other imaging modalities have benefited from accreditation; the image quality of mammograms, for example, has notably improved since the implementation of the Mammography Quality Standards Act (MQSA) [22] and its requirement for US-based mammography facilities to meet uniform standards. In Canada, similar improvements to mammograms have been noted, due to factors that include improved technology and related measures for quality control [23]. The ISCD accreditation program for BMD exams, however, has yet to penetrate Canada, and the ISCD Website lists fewer than 40 accredited imaging facilities in the US as of 2013 [24].

Results also indicate some family physicians lack confidence that imaging artifacts, most notably those due to spinal osteoarthritis or spine degeneration, are reliably addressed in BMD reports. According to CAR’s Technical Standards for BMD reporting, such artifacts should be clearly noted and considered on BMD reports when and where they exist [3]. It is not possible, however, given the data in this study, to determine if consideration of artifacts was in fact lacking on reports received and described by participants.

Questioning related to the accurate inclusion of modifying factors reflects recent evidence suggesting factors like fracture history [5] may not, in fact, reliably be captured on BMD reports. Our results indicate many family physicians are independently taking steps to ensure such factors are reliably incorporated into reported fracture risk assessments. Not all physicians in the study, however, felt that they could confidently or reliably recall factors that modify risk. The CAR 2010 Technical Standards for BMD indicate that the “specific history employed in risk determination” should appear on BMD reports for all patients [3]; our results indicate that an exhaustive list of relevant modifying factors may additionally serve to remind physicians of factors that require attention. An exhaustive list, moreover, may help physicians better identify when and where the FRAX, as opposed to the CAROC, has been used.

In addition, results indicate that the sources of modifying risk factor information may influence family physicians’ acceptance of the accuracy of this information. Several questioned information provided on patient questionnaires or collected by technologists. Questioning of information provided on patient questionnaires is, in fact, well founded; in a recent survey of more than 6,500 post-menopausal female patients in the US, for example, almost 50 % of documented spine fractures and 20 % of documented hip fractures were missed in patient self-reports [25]. A potential solution to this issue would be to provide modifying risk factor information on referral forms. Participant #19, in fact, described modifying existing referral forms to better communicate modifying factors to reading radiologists, as is detailed in the study results. Explicit capture of clinical factors relevant to risk assessment has, moreover, already become standard practice on the BMD referral forms in the provinces of British Columbia [26], Nova Scotia, and Manitoba. Whether information from referral forms reliably propagates to reports, however, remains to be determined.

Finally, results demonstrate that a small group of family physicians are skeptical of the CAROC’s equivalence to the FRAX assessment tool, particularly when applied to individuals at the boundary of moderate and high risk. Indeed, when the CAROC and FRAX were validated in two Canadian cohorts, the discordance between the risk classifications assigned by the two tools was found to be greater than 10 % [27]. Clearer guidelines or physician education on the significance of such discrepancies and their clinical implications may therefore be warranted.

Family physicians’ independent assumption of responsibility for risk assessment and its interpretation

The most notable result in this study is that a substantial proportion of participants reported actively dedicating time to recalculating or verifying fracture risk assessments on BMD reports. The amount of time they dedicated was related to their interest in treatment recommendations. Those who recalculated risk entirely also tended to disregard radiologists’ treatment recommendations, whereas those who verified components of assessments expressed relatively mixed opinions about the utility of these recommendations.

Prior research has indicated that referring physicians are strongly divided in their views of treatment recommendations on BMD reports [28, 29]. In a study of referring physicians performed by Binkley and Krueger [28], 20 % of participants indicated that treatment recommendations were “not required” or “definitely unnecessary” on BMD reports while 66 % found them either “essential” or “useful.” In a similar survey of physicians referring for a wide range of ultrasounds, 9 % indicated that “recommendations of further nonradiological investigation” were not of use while 71 % expressed that they valued these recommendations [29]. Results presented here, however, suggest that questioning of the accuracy of reported risk assessments may moderate physicians’ interest in the treatment recommendations that follow.

More importantly, however, the present study suggests a substantial inefficiency in the current BMD reporting process. About half of the participants explained that they were dedicating some time to the reporting process (e.g., to recalculate the 10-year fracture risk assessment). Some participants additionally explained that they disregarded treatment recommendations on reports, meaning any work performed by the radiologist to produce an assessment and relate it to a recommendation was lost. By contrast, other participants explained that they spent time checking assessments yet still weighed treatment recommendations on reports in their final decisions. In essence, these individuals expressed a desire for the kind of detail-rich, “consultative” BMD reports that have been demonstrated to improve care but have also been found to be time-consuming for specialists to produce [13]. These same participants, however, contributed their own time to the reporting process even in the presence of these consultative reports. In many reporting scenarios, then, substantial duplication of efforts was found to be taking place.

Limitations

A significant limitation of the current study is that participants were initially recruited via an educational event related to osteoporosis and subsequently recruited through peer networks. As such, most of the participants had an active interest in the area of osteoporosis and a high degree of expertise related to the subject. Awareness of reporting errors in this sample then may not reflect awareness on the part of most family physicians in Ontario. In fact, it is relatively safe to assume that any gaps in the knowledge or practices of the interviewed “experts” would be magnified in a sample of family physicians from the general population. Similarly, the interviewed participants were all from urban or suburban areas. It is unknown whether differences in knowledge and practice would have been observed between physician participants here and those with practices in rural areas.

Nevertheless, this study does suggest there is an ongoing problem with the way fracture risk assessments are produced and translated into treatment recommendations. Fracture risk assessments on BMD reports are often inaccurate as they depend on clinical information that radiologists may find difficult to access [5]; obtaining reliable risk factor information is time-consuming, as is translating this information into assessments and treatment recommendations. At the same time, reimbursement for BMD testing is on the decline internationally [10, 11], which offers clinicians less incentive to assume responsibility for risk reporting and the provision of guideline-driven recommendations. Despite this, obtaining an accurate fracture risk assessment and corresponding treatment recommendations, especially for those at high risk, is of paramount importance to the goal of reducing fractures. The current situation appears to be one in which some family physicians are modifying their behaviors in order to fill care gaps, despite a lack of compensation, duplication of efforts, and other practical constraints. Better capture of risk information on BMD referrals, quality control standards for images, and standardization of risk reporting may help attenuate some of this ambiguity and inefficiency.

References

Papaioannou A, Morin S, Cheung AM, Atkinson S, Brown JP, Feldman S, Hanley DA, Hodsman A, Jamal SA, Kaiser SM, Kvern B, Siminoski K, Leslie WD, Scientific Advisory Council of Osteoporosis Canada (2010) 2010 clinical practice guidelines for the diagnosis and management of osteoporosis in Canada: summary. Can Med Assoc J 182(17):1864–1873

Writing Group for the ISCD Position Development Conference (2004) Indications and reporting for dual-energy X-ray absorptiometry. J Clin Densitom 7(1):37–44

Siminoski K, O’Keeffe M, Lévesque J, Hanley D, Brown JP (2010) CAR technical standards for bone mineral densitometry reporting. http://www.car.ca/uploads/standards%20guidelines/201001_en_car_bmd_technical_standards.pdf. Accessed 24 Jan 2014.

Siminoski K, Leslie WD, Frame H, Hodsman A, Josse RG, Khan A, Lentle BC, Lévesque J, Lyons DJ, Tarulli G, Brown JP, Canadian Association of Radiologists (2005) Recommendations for bone mineral density reporting in Canada. Can Assoc Radiol J 56(3):178–188

Allin S, Munce S, Schott AM, Hawker G, Murphy K, Jaglal SB (2013) Quality of fracture risk assessment in post-fracture care in Ontario, Canada. Osteoporos Int 24(3):899–905

Lewiecki EM, Binkley N, Petak SM (2006) DXA quality matters. J Clin Densitom 9(4):388–392

Rubin KH, Friis-Holmberg T, Hermann AP, Abrahamsen B, Brixen K (2013) Risk assessment tools to identify women with increased risk of osteoporotic fracture. Complexity or simplicity? A systematic review. J Bone Miner Res 28(8):1701–1717

Lentle B, Cheung AM, Hanley DA, Leslie WD, Lyons D, Papaioannou A, Atkinson S, Brown JP, Feldman S, Hodsman AB, Jamal AS, Josse RG, Kaiser SM, Kvern B, Morin S, Siminoski K, Scientific Advisory Council of Osteoporosis Canada (2011) Osteoporosis Canada 2010 guidelines for the assessment of fracture risk. Can Assoc Radiol J 62(4):243–250

Kanis JA, on behalf of the World Health Organisation Scientific Group (2007) Assessment of osteoporosis at the primary health care level. WHO Collaborating Centre for Metabolic Bone Diseases. http://www.iofbonehealth.org/sites/default/files/WHO_Technical_Report-2007.pdf. Accessed 24 Jan 2014.

Zhang J, Delzell E, Zhao H, Laster AJ, Saag KG, Kilgore ML, Morrisey MA, Wright NC, Yun H, Curtis J (2012) Central DXA utilization shifts from office-based to hospital-based settings among medicare beneficiaries in the wake of reimbursement changes. J Bone Miner Res 27(4):858–864

Ministry of Health and Long-Term Care, Ontario, Canada. Changes to schedule of facility fees. Bulletin Number 2053, 2008 April 18. http://www.health.gov.on.ca/en/pro/programs/ohip/bulletins/2000/bul2053.pdf. Accessed 24 Jan 2014.

Jaglal S, Hawker G, Croxford R, Cameron C, Munce S, Allin S. (2012) Impact of a reimbursement change on bone mineral density testing in Ontario, Canada. Plenary Poster Presentation, American Society for Bone Mineral Research 2012 Annual Meeting. http://www.asbmr.org/Meetings/AnnualMeeting/AbstractDetail.aspx?aid=4505c913-a92c-4b15-b913-20eb2fe9ac01. Accessed 3 Mar 2014.

Oppermann B, Ayoub W, Newman E, Wood GC, Olenginski TP (2010) Consultative DXA reporting improves guideline-driven quality of care-implications for increasing DXA reimbursement. J Clin Densitom 13(3):315–319

Braun V, Clarke V (2006) Using thematic analysis in psychology. Qual Res Psychol 3(2):77–101

Sandelowski M (2010) What’s in a name? Qualitative description revisited. Res Nurs Health 33:77–84

Sandelowski M (2000) Whatever happened to qualitative description? Res Nurs Health 23:334–340

Creswell JW (2003) Research design: qualitative, quantitative and mixed method approaches. Sage, Thousand Oaks

Pope C, Mays N (eds) (2006) Qualitative research in health care, 3rd edn. BMJ Books, London

Çetin A, Özgüçlü E, Özçakar L, Akıncı A (2008) Evaluation of the patient positioning during DXA measurements in daily clinical practice. Clin Rheumatol 27(6):713–715

The ISCD Certification Program. http://www.iscd.org/certification/. Accessed 24 Jan 2014.

Lewiecki EM, Lane N (2008) Common mistakes in the clinical use of bone mineral density testing. Nat Rev Rheumatol 4:667–674

Spelic D, Kaczmarek R, Hilohi M, Belella S (2007) United States radiological health activities: inspection results of mammography facilities. Biomed Imaging Interv J 3(2):e35

Suleiman OH, Spelic DC, McCrohan JL, Symonds GR, Houn F (1999) Mammography in the 1990s: the United States and Canada. Radiology 210(2):345–351

List of United States facilities accredited by the ISCD. http://www.iscd.org/accreditation/accredited-facilities/. Accessed 24 Jan 2014.

Chen Z, Kooperberg C, Pettinger MB, Bassford T, Cauley JA, LaCroix AZ, Lewis CE, Kipersztok S, Borne C, Jackson RD (2004) Validity of self-report for fractures among a multiethnic cohort of postmenopausal women: results from the Women’s Health Initiative observational study and clinical trials. Menopause 11(3):264–274

HLTH1905 Standard Outpt Bone Densitometry Requisition. British Columbia Radiological Society. http://medstaff.providencehealthcare.org/media/HLTH1905%20Standard%20Outpatient%20Bone%20Densitometry%20Requisition%20(Jun-06)scan.pdf. Accessed 3 March 2014.

Leslie WD, Berger C, Langsetmo L, Lix LM, Adachi JD, Hanley DA, Ioannidis G, Josse RG, Kovacs CS, Towheed T, Kaiser S, Olszynski WP, Prior JC, Jamal S, Kreiger N, Goltzman D, Canadian Multicentre Osteoporosis Study Research Group (2011) Construction and validation of a simplified fracture risk assessment tool for Canadian women and men: results from the CaMos and Manitoba cohorts. Osteoporos Int 22(6):1873–1883

Binkley N, Krueger D (2009) What should DXA reports contain? Preferences of ordering health care providers. J Clin Densitom 12(1):5–10

Plumb AA, Grieve FM, Khan SH (2009) Survey of hospital clinicians’ preferences regarding the format of radiology reports. Clin Radiol 64(4):386–394; 395–396

Acknowledgments

This study was supported by Osteoporosis Program, which is funded by the Ministry of Health and Long Term Care, Ontario, Canada.

Conflicts of interest

None.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Allin, S., Munce, S., Carlin, L. et al. Fracture risk assessment after BMD examination: whose job is it, anyway?. Osteoporos Int 25, 1445–1453 (2014). https://doi.org/10.1007/s00198-014-2661-1
