Abstract

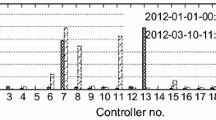

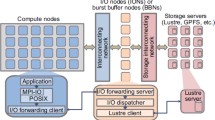

In petascale systems with a million CPU cores, scalable and consistent I/O performance is becoming increasingly difficult to sustain, mainly because of I/O variability. This variability is caused by concurrently running processes or jobs competing for I/O, or by a RAID rebuild after a disk drive fails. In this paper, we propose a probing mechanism that enables application-level dynamic file striping to mitigate I/O variability. At runtime, the mechanism stripes data across a selected subset of I/O nodes with the lightest workload to achieve the highest I/O bandwidth available in the system. We implement the proposed mechanism in a high-level I/O library that enables memory-to-file data layout transformation and allows transparent file partitioning using subfiling. Subfiling is a technique that partitions data into a set of smaller files and manages access to them, so that users can treat the data as a single, normal file. We demonstrate that our bandwidth probing mechanism can successfully identify temporally slower I/O nodes without noticeable runtime overhead. Experimental results on NERSC’s systems also show that our approach isolates I/O variability effectively on shared systems and improves overall collective I/O performance with less variation.
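As a rough illustration of the idea summarized above, the following Python sketch probes a set of storage targets, keeps the fastest ones, and maps logical offsets onto per-target subfiles. It is not the authors' implementation. The function names (`probe_bandwidth`, `select_targets`, `locate`), the use of plain directories as stand-ins for I/O nodes, the 1 MiB probe size, and the round-robin stripe layout are all assumptions made for this example.

```python
import os
import tempfile
import time


def probe_bandwidth(directory, nbytes=1 << 20):
    """Time one small synchronous write into `directory`; return bytes/second.

    `directory` is a hypothetical stand-in for a storage target or I/O node.
    """
    payload = b"\0" * nbytes
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            start = time.perf_counter()
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())  # push data to storage so the timing is meaningful
            elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return nbytes / max(elapsed, 1e-9)


def select_targets(bandwidths, k):
    """Return the k target names with the highest probed bandwidth."""
    ranked = sorted(bandwidths, key=lambda name: bandwidths[name], reverse=True)
    return ranked[:k]


def locate(offset, stripe_size, targets):
    """Map a logical byte offset to (target, offset inside that target's subfile).

    Assumes stripes are assigned to the selected targets round-robin.
    """
    stripe = offset // stripe_size
    target = targets[stripe % len(targets)]
    local = (stripe // len(targets)) * stripe_size + offset % stripe_size
    return target, local


if __name__ == "__main__":
    # Made-up bandwidth readings (MB/s); "ost1" plays the temporarily slow target.
    readings = {"ost0": 400.0, "ost1": 90.0, "ost2": 380.0, "ost3": 410.0}
    chosen = select_targets(readings, 3)
    print(chosen)
    print(locate(13, 4, chosen))
```

In this sketch the slow target is simply left out of the stripe set, so the logical file is spread over the remaining subfiles while callers keep addressing it by a single logical offset. A real deployment would probe from the application's I/O aggregators and re-probe periodically, since the slow targets change over time.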

Acknowledgments

This work is supported in part by the following grants: NSF awards CCF-0833131, CNS-0830927, IIS-0905205, CCF-0938000, CCF-1029166, and OCI-1144061; DOE awards DE-FG02-08ER25848, DE-SC0001283, DE-SC0005309, DE-SC0005340, and DE-SC0007456; AFOSR award FA9550-12-1-0458. This research used resources of the National Energy Research Scientific Computing Center, which is supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231.

Cite this article

Son, S.W., Sehrish, S., Liao, Wk. et al. Reducing I/O variability using dynamic I/O path characterization in petascale storage systems. J Supercomput 73, 2069–2097 (2017). https://doi.org/10.1007/s11227-016-1904-7

DOI: https://doi.org/10.1007/s11227-016-1904-7