Abstract

Firms often include summaries with earnings releases. However, manager-generated summaries may be prone to strategic tone and content management, compared to the underlying disclosures they summarize. In contrast, computer algorithms can summarize text without human intervention and may provide useful summary information with less bias. We use multiple methods to provide evidence regarding the characteristics of algorithm-based summaries of earnings releases compared to those provided by managers. Results suggest that automatic summaries are generally less positively biased, often without sacrificing relevant information. We then conduct an experiment to test whether these differing attributes of automatic and management summaries affect individual investors’ judgments. We find that investors who receive an earnings release accompanied by an automatic summary arrive at more conservative (i.e., lower) valuation judgments and are more confident in those judgments. Overall, our results suggest that summaries affect investors’ judgments and that these effects differ for management and automatic summaries.

Notes

Throughout the paper, we use the term “bias” in a directional and descriptive sense to indicate more or less positive reporting of performance and not necessarily to indicate suboptimal or deceptive reporting.

LexRank, developed by Erkan and Radev (2004), is short for Lexical PageRank. PageRank is a method developed by Larry Page, one of the founders of Google.

We provide detail on the summarization algorithms used in this study in the online appendix. For further details on the LexRank algorithm specifically, we refer the reader to Section 2.1.

LexRank’s superior performance is consistent with findings in previous studies (e.g., Verma and Om 2016).

See Henry (2008) for the regulatory context. In June 2016, the SEC adopted an interim final rule that allows issuers to include, at their option, a summary page in Form 10-K. As noted therein, summary information must be presented “fairly and accurately.”

In our tests, we employ a variety of frequently used sentence-extraction-based approaches for generic summarization, applicable for settings in which no additional information or knowledge (e.g., about user need) is needed. We exclude from our analysis genre-specific (e.g., academic journal articles) and domain-specific (e.g., medical) approaches; see Nenkova and McKeown (2011) for an overview.

While management has incentives to positively bias summaries (e.g., by downplaying bad news or adding positive tone), it is difficult to define what optimal tone should be. For example, management might use more negative tone in the underlying earnings release to reduce litigation risk. If this negative tone is reflected in an automatic summary, then automatic summaries may be negatively biased. Further, if the overall interpretation of the news in an earnings release drifts negative after the news is released, for example as analysts scrutinize the results and question management (e.g., Chen et al. 2018), then a more positive summary may be a rational starting point for management. Thus, although we expect automatic summaries to be less positive than management summaries and to better reflect the content and tone of the underlying text, we stop short of predicting that either summary type will be more or less optimal.

The online appendix compares the tone and readability of these two earnings releases, as well as the earnings release used in the experiment reported in Section 4, to the sample of 78 earnings releases used in our archival analyses. Results suggest that the three earnings releases have net tone (i.e., number of positive tone words minus number of negative tone words) and Gunning-Fog scores that are reasonably close (within one standard deviation) to the sample mean. Thus, these three earnings releases exhibit characteristics that represent earnings releases more generally.

In pretests, reported in the online appendix, we tested two additional algorithms, known as Luhn (Luhn 1958) and TextRank (Mihalcea and Tarau 2004), which produced summaries that were significantly longer than either the management summaries or the other automatic summaries. Therefore we exclude them from our main tests and focus instead on algorithms that produce summaries that are more similar in length to management summaries.

Because we used the same procedures to recruit and screen participants for the pretest in the online appendix, the user evaluation study reported here, and the experiment reported in Section 4, we report aggregate demographics here. A large majority of our participants (88%) had taken college courses, and 71% held a bachelor’s degree or higher. Participants reported having taken an average of 3.8 accounting or finance classes and had an average of 14.5 years of full-time work experience. According to Elliott et al. (2007), nonprofessional individual investors on average have taken 3.5 accounting or finance courses, and 97% have experience with financial statements. Our participants thus had profiles similar to those of the nonprofessional individual investors studied by Elliott et al. (2007), suggesting that they were appropriate proxies for nonprofessional individual investors.

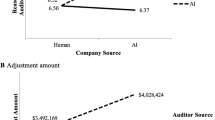

Compared to the management summary, for each earnings release, LexRank has lower “Bias” scores and slightly higher “Capture,” “Reliance,” and “Should be included” scores. Statistically, however, only participants assigned to Target rated “Bias” as significantly lower for the LexRank summary compared to the management summary (F(1, 56) = 12.45, p < 0.01). An explanation for this difference is that Target gave too little attention to two major news events (a credit-card breach and a struggling Canadian segment) in the management summary. Three raters (two co-authors and an independent rater) coded how often participants indicated that information related to these events was missing from each summary. In untabulated analyses for the LexRank (management) summary, we find that 19.30% (35.09%) of the participants indicated that important information related to these events was missing. This difference is statistically significant (F(1, 56) = 4.53, p = 0.04) and significantly related to the difference in “Bias” (F(1, 56) = 5.81, p = 0.02). This evidence suggests that Target’s management avoided highlighting important negative news events in its summary.

As reported in pretests in the online appendix, Table OA.1, LexRank has the highest readability score of all automatic summaries and does not differ from management summaries in terms of readability. User evaluation scores further suggest that participants rank LexRank significantly higher than the management summary on credibility, informativeness, and usefulness.

For each earnings release, a list of fundamentals was agreed upon by two of the authors, who independently identified fundamental terms mentioned in the underlying text.

In the accounting and finance literature, out of several sentiment word lists, two have been used extensively: Henry (2008), developed in the context of earnings releases, and Loughran and McDonald (2011), created in the context of 10-K filings. For a discussion of these and other sentiment word lists—e.g., Harvard IV-4, Diction—see Loughran and McDonald (2016). To empirically motivate our choice of word list, we use regression analysis to correlate participants’ “Bias” judgments, from the user evaluation test reported earlier, with an earnings release’s net sentiment score (positive word frequencies minus negative word frequencies), calculated using either the Henry (2008) or the Loughran and McDonald (2011) sentiment word list. As reported in the online appendix, Table OA.2, the coefficients on Net sentiment are statistically significantly positive only when using the Henry (2008) word list.
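The net sentiment score described in this note can be illustrated with a minimal sketch. The two word sets below are tiny placeholders chosen for illustration; they are not the Henry (2008) or Loughran and McDonald (2011) lists, and the function name `net_sentiment` is hypothetical. The sketch counts exact word matches only, so any stemming or scaling by document length used in the study is not reproduced here.

```python
import re

# Placeholder word lists for illustration only; NOT the Henry (2008)
# or Loughran and McDonald (2011) sentiment word lists.
POSITIVE = {"increase", "growth", "strong", "improved", "record"}
NEGATIVE = {"decrease", "decline", "weak", "loss", "offset"}


def tokenize(text):
    """Lowercase the text and return its alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def net_sentiment(text, positive=POSITIVE, negative=NEGATIVE):
    """Count of positive-list words minus count of negative-list words."""
    tokens = tokenize(text)
    pos = sum(1 for t in tokens if t in positive)
    neg = sum(1 for t in tokens if t in negative)
    return pos - neg
```

A text with more positive-list than negative-list words receives a positive score, and a text in which the two counts match receives zero.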

This analysis yields several insights regarding firm and corporate governance characteristics. For instance, when earnings surprises are larger, the management summary contains fewer negative words than the automatic summary. For firms operating in litigious industries, management uses more cautious language (i.e., fewer negative tone words) than the algorithm summary yet also uses more positive tone words, suggesting that the tone of the algorithm-generated summary is more neutral. The algorithm is more likely to extract a positively toned sentence from the underlying text of the earnings release for larger firms, whose disclosures tend to be more reliable (e.g., DeFond and Jiambalvo 1991). Finally, management summaries tend to be more negatively toned for firms with higher leverage and larger boards.

Media reports on Target’s first quarter of 2016 performance also indicate that the sales decrease was interpreted as both significant and negative (e.g., CNBC 2016; Oyedele 2016; Zacks 2016). To help us better understand why the sentence that describes the sales decrease is included in the LexRank summary, we conducted qualitative analysis. The word “sales” and the term “sale of the pharmacy and clinic business” are quite prominent in the underlying earnings release and are also contained in the selected sentence. If we drop the first part of the sentence (“First quarter 2016 sales decreased 5.4% to $16.2 billion from $17.1 billion last year”) or the second part of the sentence (“as a 1.2% increase in comparable sales was more than offset by the impact of the sale of the pharmacy and clinic businesses”), LexRank no longer includes the sentence. Likewise, if we delete “sales,” “pharmacy,” or other words from elsewhere in the underlying text (but not in the selected sentence), LexRank no longer includes the sentence. In line with the sentence similarity approach taken by LexRank, as described in Section 2.1, these analyses confirm that, if the respective sentence becomes less similar to other sentences or if other sentences become less similar, the likelihood of including the sentence in the LexRank summary decreases.
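The sensitivity of sentence selection to word overlap can be illustrated with a simplified, similarity-based centrality sketch in the spirit of LexRank. This is not the implementation used in the study: it uses raw term-frequency cosine similarity rather than the idf-modified cosine of Erkan and Radev (2004), and the function names, the damping value of 0.85, and the iteration count are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def bag_of_words(sentence):
    """Term-frequency vector of a sentence (lowercased alphabetic tokens)."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a, b):
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def centrality_scores(sentences, damping=0.85, iterations=50):
    """PageRank-style scores on the sentence similarity graph.

    Assumption: edges are weighted by raw cosine similarity; damping and
    iterations are illustrative defaults, not values from the study.
    """
    n = len(sentences)
    bags = [bag_of_words(s) for s in sentences]
    sim = [
        [cosine(bags[i], bags[j]) if i != j else 0.0 for j in range(n)]
        for i in range(n)
    ]
    row_sums = [sum(row) for row in sim]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        updated = []
        for i in range(n):
            # Weight received from every sentence j that resembles sentence i.
            received = sum(
                sim[j][i] / row_sums[j] * scores[j]
                for j in range(n)
                if row_sums[j] > 0
            )
            updated.append((1 - damping) / n + damping * received)
        scores = updated
    return scores
```

In this sketch, a sentence that shares no words with the rest of the text receives only the baseline score, so removing the overlapping words from a sentence lowers its rank. This mirrors the deletion analysis described in this note.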

An untabulated analysis shows that both summaries have college-level readability scores. Specifically, the Gunning-Fog score, calculated using Python package textstat (https://github.com/shivam5992/textstat), is 14.24 for the automatic summary and 16.54 for the management summary. This suggests that, in relative terms, the automatic summary is slightly more readable.
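For readers unfamiliar with the Gunning-Fog index, the following minimal sketch shows the standard formula: 0.4 times the sum of the average sentence length and the percentage of words with three or more syllables. It is not the textstat implementation. The syllable counter is a crude vowel-group heuristic and the function names are hypothetical, so scores will differ from those reported in this note.

```python
import re


def count_syllables(word):
    """Rough syllable estimate: number of vowel groups (heuristic assumption)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def gunning_fog(text):
    """Gunning-Fog index: 0.4 * (words per sentence + 100 * complex share)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        return 0.0
    # "Complex" words are those with three or more estimated syllables.
    complex_words = [w for w in words if count_syllables(w) >= 3]
    average_sentence_length = len(words) / len(sentences)
    percent_complex = 100.0 * len(complex_words) / len(words)
    return 0.4 * (average_sentence_length + percent_complex)
```

Higher values indicate text that is harder to read, which is why the lower score of the automatic summary is interpreted as slightly better readability.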

Results for valuation judgments are inferentially identical if we instead compare final valuation judgments across summary conditions, controlling for initial valuation judgments.

SEM has advantages over regression in testing mediation, especially in cases that deviate from simple X➔M➔Y relationships, as is the case in our models with their multiple potential mediators (e.g., Iacobucci et al. 2007).

We also allow error terms for the mediators to covary. (These covariance paths are omitted from Fig. 1 to simplify the presentation.)

SEM tests all four mediators simultaneously. Mediation results are inferentially identical when testing each potential mediator separately, with one exception. Risk judgments partially mediate the effect of summary type (automatic versus management) when risk judgments are tested without the other potential mediators in the model.

For example, the company distributed an unusually large amount of cash—more than $1.2 billion, representing more than twice net income from continuing operations from the quarter—to shareholders during the quarter, either as dividends or share repurchases. This information was included in the “Capital Returned to Shareholders” section.

We note, however, that these results should be interpreted with caution, as we do not examine qualifiers that accompany these words, and some participants mention sales in an ambiguous way (e.g., without stating explicitly whether sales influenced their judgments positively or negatively).

The online appendix discusses additional insights from factors important to participants’ valuation judgments.

Compared to earnings releases, research suggests managers use more boilerplate language (e.g., DeFranco et al. 2016) and have less opportunity to exercise discretion (Davis and Tama-Sweet 2012) in the MD&A. While these characteristics seem likely to reduce the effectiveness of the algorithms we test, technological advances may overcome these issues.

References

Ahern, K. R., & Sosyura, D. (2014). Who writes the news? Corporate press releases during merger negotiations. The Journal of Finance, 69, 241–291.

Allee, K., & DeAngelis, M. (2015). The structure of voluntary disclosure narratives: Evidence from tone dispersion. Journal of Accounting Research, 53, 241–274.

Arslan-Ayaydin, O., Boudt, K., & Thewissen, J. (2016). Managers set the tone: Equity incentives and the tone of earnings press releases. Journal of Banking & Finance, 72, S132–S147.

Asay, H. S., Elliott, W. B., & Rennekamp, K. M. (2017). Disclosure readability and the sensitivity of investors’ valuation judgments to outside information. The Accounting Review, 92, 1–25.

Asch, S. E. (1946). Forming impressions of personality. The Journal of Abnormal and Social Psychology, 41, 258–290.

Baron, R. M., & Kenny, D. (1986). The moderator-mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology, 51, 1173–1182.

Barth, M. E. (2015). Financial accounting research, practice, and financial accountability. ABACUS: A Journal of Accounting, Finance and Business Studies, 51, 499–510.

Blankespoor, E., Hendricks, B. E., & Miller, G. S. (2017a). Perceptions and price: Evidence from CEO presentations at IPO roadshows. Journal of Accounting Research, 55, 275–327.

Blankespoor, E., deHaan, E., Wertz, J., & Zhu, C. (2017b). Why do some investors disregard earnings news? Awareness costs versus processing costs. Working paper.

Blankespoor, E., deHaan, E., & Zhu, C. (2018). Capital market effects of media synthesis and dissemination: Evidence from robo-journalism. Review of Accounting Studies, 23, 1–36.

Bonner, S. E., Clor-Proell, S., & Koonce, L. (2014). Mental accounting and disaggregation based on the sign and relative magnitude of income statement items. The Accounting Review, 89, 2087–2114.

Brandow, R., Mitze, K., & Rau, L. E. (1995). Automatic condensation of electronic publications by sentence selection. Information Processing & Management, 31, 675–685.

Chen, J. V., Nagar, V., & Schoenfeld, J. (2018). Manager-analyst conversations in earnings conference calls. Review of Accounting Studies, 23, 1315–1354.

Clerwall, C. (2014). Enter the robot journalist. Journalism Practice, 8, 519–531.

CNBC. (2016). Target earnings top expectations, but revenue is light. Retrieved from http://www.cnbc.com/2016/05/18/target-q1-earnings-report.html.

Daniel, K., Hirshleifer, D., & Teoh, S. H. (2002). Investor psychology in capital markets: Evidence and policy implications. Journal of Monetary Economics, 49, 139–209.

Davis, A., & Tama-Sweet, I. (2012). Managers’ use of language across alternative disclosure outlets: Earnings press releases versus MD&A. Contemporary Accounting Research, 29, 804–837.

DeFond, M., & Jiambalvo, J. (1991). Incidence and circumstances of accounting errors. The Accounting Review, 66, 643–655.

DeFranco, G., Fogel-Yaari, H., & Li, H. (2016). MD&A textual similarity and auditors. Working paper.

Desai, H., Rajgopal, S., & Yu, J. J. (2016). Were information intermediaries sensitive to the financial statement-based leading indicators of bank distress prior to the financial crisis? Contemporary Accounting Research, 33, 576–606.

Dyck, A., & Zingales, L. (2003). The media and asset prices. Working paper.

Dyer, T., Lang, M., & Stice-Lawrence, L. (2017). The evolution of 10-K textual disclosure: Evidence from latent Dirichlet allocation. Journal of Accounting and Economics, 64, 221–245.

Elliott, W. B., Hodge, F. D., Kennedy, J. J., & Pronk, M. (2007). Are MBA students a good proxy for nonprofessional investors? The Accounting Review, 82, 139–168.

Elliott, W. B., Hobson, J. L., & White, B. J. (2015). Earnings metrics, information processing, and price efficiency in laboratory markets. Journal of Accounting Research, 53, 555–592.

Erkan, G., & Radev, D. R. (2004). LexRank: Graph-based lexical centrality as salience in text summarization. Journal of Artificial Intelligence Research, 22, 457–479.

Farrell, A., Grenier, J. H., & Leiby, J. (2017). Scoundrels or stars? Theory and evidence on the quality of workers in online labor markets. The Accounting Review, 92, 93–114.

FASB. (2015). FASB’s simplification initiative: An update. Retrieved from http://www.fasb.org/jsp/FASB/Page/SectionPage&cid=1176165963019.

Francis, J., Schipper, K., & Vincent, L. (2002). Expanded disclosures and the increased usefulness of earnings announcements. The Accounting Review, 77, 515–546.

Frederickson, J., & Miller, J. (2004). The effects of pro forma earnings disclosures on analysts’ and nonprofessional investors’ valuation judgments. The Accounting Review, 79, 667–686.

Ganesan, K. (2015). ROUGE 2.0: Updated and improved measures for evaluation of summarization tasks. Retrieved from https://arxiv.org/abs/1803.01937.

Graefe, A., Haim, M., Haarmann, B., & Brosius, H.-B. (2016). Readers’ perception of computer-generated news: Credibility, expertise, and readability. Journalism, 1–16.

Guillamon-Saorin, E., Garcia Osma, B., & Jones, M. J. (2012). Opportunistic disclosure in press release headlines. Accounting and Business Research, 42, 143–168.

Hales, J., Kuang, X., & Venkataraman, S. (2011). Who believes the hype? An experimental investigation of how language affects investor judgments. Journal of Accounting Research, 49, 223–255.

Henry, E. (2008). Are investors influenced by how earnings press releases are written? Journal of Business Communication, 45, 363–407.

Henry, E., & Leone, A. (2016). Measuring qualitative information in capital markets research: Comparison of alternative methodologies to measure disclosure tone. The Accounting Review, 91, 153–178.

Hirshleifer, D., & Teoh, S. H. (2003). Limited attention, information disclosure, and financial reporting. Journal of Accounting and Economics, 36, 337–386.

Hirshleifer, D., Lim, S. S., & Teoh, S. H. (2009). Driven to distraction: Extraneous events and underreaction to earnings news. The Journal of Finance, 64, 2289–2325.

Hogarth, R. M., & Einhorn, H. J. (1992). Order effects in belief updating: The belief-adjustment model. Cognitive Psychology, 24, 1–55.

Huang, X., Nekrasov, A., & Teoh, S. H. (2013). Headline salience and over- and underreactions to earnings. Working paper.

Huang, X., Teoh, S. H., & Zhang, Y. (2014). Tone management. The Accounting Review, 89, 1083–1113.

Iacobucci, D. (2010). Structural equations modeling: Fit indices, sample size, and advanced topics. Journal of Consumer Psychology, 20, 90–98.

Iacobucci, D., Saldanha, N., & Deng, X. (2007). A meditation on mediation: Evidence that structural equations models perform better than regression. Journal of Consumer Psychology, 17, 139–153.

Kline, R. B. (2011). Principles and practice of structural equation modeling (3rd ed.). New York: The Guilford Press.

KPMG. (2011). Disclosure overload and complexity: Hidden in plain sight. Retrieved from https://www.kpmg.com/US/en/IssuesAndInsights/ArticlesPublications/Documents/disclosure-overload-complexity.pdf.

Krische, S. D. (2018). Investment experience, financial literacy, and investment-related judgments. Contemporary Accounting Research, forthcoming.

Lin, C-Y. (2004). ROUGE: A package for automatic evaluation of summaries. Retrieved from http://www.aclweb.org/anthology/W04-1013.

Loughran, T., & McDonald, B. (2011). When is a liability not a liability? Textual analysis, dictionaries, and 10-Ks. The Journal of Finance, 66, 35–65.

Loughran, T., & McDonald, B. (2014). Measuring readability in financial disclosures. The Journal of Finance, 69, 1643–1671.

Loughran, T., & McDonald, B. (2016). Textual analysis in accounting and finance: A survey. Journal of Accounting Research, 54, 1187–1230.

Luhn, H. P. (1958). The automatic creation of literature abstracts. IBM Journal, 159–165.

McDonald, S., & Stevenson, R. J. (1998). Navigation in hyperspace: An evaluation of the effects of navigational tools and subject matter expertise on browsing and information retrieval in hypertext. Interacting with Computers, 10, 129–142.

Mihalcea, R., & Tarau, P. (2004). TextRank: Bringing order into texts. UNT Digital Library. Retrieved from http://digital.library.unt.edu/ark:/67531/metadc30962/. Accessed December 19, 2016.

Moen, H., Peltonen, L.-M., Heimonen, J., Airola, A., Pahikkala, T., Salakoski, T., & Salanterä, S. (2016). Comparison of automatic summarization methods for clinical free text notes. Artificial Intelligence in Medicine, 67, 25–37.

Nenkova, A., & McKeown, K. (2011). Automatic summarization. Foundations and Trends in Information Retrieval, 5, 103–233.

Newman, M.E.J. (2008). The mathematics of networks. The New Palgrave Dictionary of Economics, 2nd edition, edited by S.N. Durlauf and L.E. Blume, London: Palgrave Macmillan.

Nisbett, R., & Ross, L. (1980). Human inference: Strategies and shortcomings of social judgment. Englewood Cliffs: Prentice Hall.

Oyedele, A. (2016). Target says its sales will stink. Business Insider. Retrieved from http://www.businessinsider.com/target-earnings-2016-5.

Paredes, T. (2003). Blinded by the light: Information overload and its consequences for securities regulation. Washington University Law Quarterly, 81, 417–485.

Paredes, T. (2013). Remarks at the SEC speaks in 2013. Retrieved from www.sec.gov/News/Speech/Detail/Speech/1365171492408.

Pennington, N., & Hastie, R. (1986). Evidence evaluation in complex decision making. Journal of Personality and Social Psychology, 51, 242–258.

Rennekamp, K. M. (2012). Processing fluency and investors’ reactions to disclosure readability. Journal of Accounting Research, 50, 1319–1354.

Salton, G., Singhal, A., Mitra, M., & Buckley, C. (1997). Automatic text structuring and summarization. Information Processing & Management, 33, 193–207.

SEC. (2013). Report on review of disclosure requirements in Regulation S-K. Retrieved from https://www.sec.gov/news/studies/2013/reg-sk-disclosure-requirements-review.pdf.

SEC. (2016). Release no. 34–77969: Form 10-K summary. Retrieved from https://www.sec.gov/rules/interim/2016/34-77969.pdf.

Sinclair, R. C. (1988). Mood, categorization breadth, and performance appraisal: The effects of order of information acquisition and affective state on halo, accuracy, information retrieval, and evaluations. Organizational Behavior and Human Decision Processes, 42, 22–46.

Tan, H.-T., Wang, E. Y., & Zhou, B. (2014). When the use of positive language backfires: The joint effect of tone, readability, and investor sophistication on earnings judgments. Journal of Accounting Research, 52, 273–302.

Tombros, A., & Sanderson, M. (1998). Advantages of query biased summaries in information retrieval. Proceedings of the 21st annual international ACM SIGIR conference on Research and development in information retrieval, 2–10.

Umar, T. (2018). Complexity aversion when seeking alpha. Working paper.

Van der Kaa, H., & Krahmer, E. (2014). Journalist versus news consumer: The perceived credibility of machine written news. In: Proceedings of the Computation + Journalism Conference. New York.

Verma, P., & Om, H. (2016). Extraction based text summarization methods on user’s review data: A comparative study. In: Unal, A., et al. (eds.), “Smart trends in information technology and computer communications.” Communications in Computer and Information Science, 628, 346–354.

White, R. W., Jose, J. M., & Ruthven, I. (2003). A task-oriented study on the influencing effects of query-biased summarization in web searching. Information Processing and Management, 39, 707–733.

Zacks. (2016). Target (TGT) Q1 earnings beat, sales miss; Stock plunges. Retrieved from https://www.zacks.com/stock/news/217811/target-tgt-q1-earnings-beat-sales-miss-stock-plunges.

Acknowledgements

We thank Russell Lundholm (editor), an anonymous reviewer, Margaret Abernethy, Ed deHaan (RAST Conference discussant), David Veenman, Sarah Zechman (LBS Accounting Symposium discussant), and participants at the 2018 Review of Accounting Studies Conference, the London Business School Accounting Symposium, the Financial Accounting and Reporting Section (FARS) Midyear Meeting, Radboud University Nijmegen, the University of Texas at Austin, the University of Melbourne, the University of Pittsburgh, the University of Amsterdam, the University of New South Wales, Jinan University, Tilburg University, NHH Norwegian School of Economics, and Texas A&M University for helpful comments. We also thank Arnaud Nicolas for generating expert summaries of earnings releases and Ties de Kok and Shannon Garavaglia for their capable research assistance. This paper won the 2018 FARS Midyear Meeting Best Paper Award.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

ESM 1

(DOCX 411 kb)

Appendix

1.1 Experimental Materials

Panel A: Earnings Release Header

Panel B: Management Summary

Panel C: Automatic Summary

Panel D: Earnings Release Sections

Note: After viewing the earnings release header and summary (header only for no summary condition), participants clicked a button labeled “access earnings release sections,” which displayed hyperlinks to the different parts of the company’s full earnings release, as shown in Panel D.

Cite this article

Cardinaels, E., Hollander, S. & White, B.J. Automatic summarization of earnings releases: attributes and effects on investors’ judgments. Rev Account Stud 24, 860–890 (2019). https://doi.org/10.1007/s11142-019-9488-0
