Abstract

This paper discusses regression analysis of current status data, a type of failure time data where each study subject is observed only once, in the presence of dependent censoring. Furthermore, there may exist a cured subgroup, meaning that a proportion of study subjects are not susceptible to the failure event of interest. For the problem, we develop a sieve maximum likelihood estimation approach with the use of latent variables and Bernstein polynomials. For the determination of the proposed estimators, an EM algorithm is developed and the asymptotic properties of the estimators are established. Extensive simulation studies are conducted and indicate that the proposed method works well for practical situations. A motivating application from a tumorigenicity experiment is also provided.

Similar content being viewed by others

1 Introduction

This paper discusses regression analysis of current status data, a type of failure time data that arise when each study subject is observed only once (Jewell and van der Laan 1995; Sun 2006). In this case, the failure time of interest is known only to be either smaller or greater than the observation time, or is either left- or right-censored (Kalbfleisch and Prentice 2002). One field that usually yields current status data is cross-sectional studies and in these situations, the failure time of interest can be the time to the first marriage or birth, the time under unemployment, or the time to a certain disease. Other or more specific fields that often produce current status data include demographic studies, epidemiological surveys, social sciences and tumorigenicity experiments (Huang 1996; Lin et al. 1998; Sun 2006). Sometimes current status data are also referred to as case I interval-censored data.

Many procedures have been developed in the literature for regression analysis of current status data under various regression models (Chen et al. 2009; Finkelstein and Schoenfeld 1989; Huang 1996; Jewell and van der Laan 1995; Lin et al. 1998; Rossini and Tsiatis 1996, Sun 1999). For example, Huang (1996), Rossini and Tsiatis (1996), and Lin et al. (1998) considered the problem under the proportional hazards model, the proportional odds model and the additive hazards model, respectively, and Chen et al. (2009) also discussed the regression problem but for multivariate situations. A common feature of the methods mentioned above is that they apply only to independent current status data, meaning that the failure time of interest and the observation time are independent given covariates. Otherwise the data are usually referred to as dependent current status data and some methods have also been established for them too. Among others, for example, Zhang et al. (2005) and Chen et al. (2012) investigated regression analysis of dependent current status data arising from the additive hazards model and the linear transformation model, respectively, by using the latent variable approach.

For all of the statistical methods described above, a common assumption is that all study subjects will experience or are susceptible to the failure event of interest. That is, there exists a finite failure time variable associated with each subject that may or may not be observed exactly. In reality, however, there may exist some situations where the study population consists of both a susceptible subpopulation and a nonsusceptible subpopulation and only individuals in the susceptible subpopulation will experience the failure event of interest (Farewell 1982; Liu and Shen 2009; Lu and Ying 2004). A common type of examples is tumorigenicity studies or human cancer studies where the study subjects may never experience the type of tumors under the study. Such a tumorigenicity study is described in details below. For the analysis of such data, a cure model is often used and in this case, we usually say that a subject is cured if it is not susceptible to the failure event such as a disease. In practice, it is apparent that a subject’s cured status would be known if he or she is observed to experience the failure event or his or her failure time is known. On the other hand, for the subjects whose failure times have not been observed, the cured status will be unknown.

In addition to the references mentioned above, many other authors have discussed the situations with the presence of a cured subgroup and/or dependent censoring (Fang et al. 2005; Lam and Xue 2005; Li et al. 2007; Ma 2009, Othus et al. 2007). In particular, Li et al. (2007) and Othus et al. (2007) considered the fitting of right-censored failure time data to cure models in the presence of dependent censoring. Also some authors have pointed out that the omitting of the existence of a cured subgroup could yield misleading inference such as an overestimation of the survival of non-cured subjects (Rondeau et al. 2011) and the ignoring of the dependent censoring could cause serious estimation bias (Huang and Wolfe 2002). Moreover, sometimes it may be of great biomedical interest to estimate the probability of cure. Of course, in practice, it is important and may not be easy to identify the existence of a cure subgroup. One common approach is to look at the survival curve and the one with a long and stable non-zero plateau at the tail may be taken as the evidence for the existence (Rondeau et al. 2011; Chen 2013). More discussion on these will be given in the application below.

In the following, we will present a maximum likelihood estimation procedure for regression analysis of dependent current status data in the presence of a cured subgroup. Before presenting the procedure, we will first introduce some notation and models and describe the likelihood function in Sect. 2. Here we will focus on the data arising from the proportional hazards model and employ the latent variable approach to describe the dependent censoring. A sieve maximum likelihood estimation procedure will then be described in Sect. 3 and in the procedure, Bernstein polynomials will be used to approximate unknown functions. For the determination of the proposed estimators, an EM algorithm will be developed and the asymptotic properties of the estimators are established. Section 4 will present some results obtained from an extensive simulation study conducted to assess the performance of the proposed methodology and an illustrative example is provided in Sect. 5. The article will conclude with some discussion and remarks in Sect. 6.

2 Assumptions, models and likelihood function

Consider a failure time study in which there may exist a cured subgroup. Let T denote the failure time of interest and suppose that each subject is observed only once at time C and there exists a vector of covariates denoted by X. That is, T is either left- or right-censored and we only have current status data. To describe the possible cured subgroup, define the cure indicator variable \(U = 0\) if the subject is cured and nonsusceptible and \(U = 1\) otherwise, and suppose that we can write T as

where \(T^*<\infty \) denotes the failure time of a susceptible subject. It is apparent that covariates may have some effects on U also and for this, we will assume that U follows the logistic model

(Farewell 1982). Here Z denotes the vector of covariates that may have effects on U, which may be the same as, a part of or different from X, and \(\alpha \) denotes a vector of regression parameters.

In the following, by following Chen et al. (2002), we will define \(\pi (Z) = P ( U = 1 | Z )\) as a function of Z. Note that under the assumptions, the survival function of the entire population can be written as

where \(S{(t|U=1)}\) denotes the survival function of susceptible subpopulation. It follows that

In other words, the survival estimation omitting the presence of cured subpopulation would result in \(\hat{S}(t)\) that may overestimate the survival of susceptible subpopulation \(S (t|U=1)\).

In the following we will suppose that \(T^*\) and C may be related. In other words, we have dependent current status data. To describe covariate effects and the relationship between \(T^*\) and C, we will assume that there exists a latent variable b with mean zero and variance \(\sigma ^2\) and given X and b, the cumulative hazard functions of \(T^*\) and C have the forms

and

respectively. In the above, \(\Lambda _{T} (t)\) and \(\Lambda _{C} (t)\) denote unknown cumulative baseline functions, \(\beta \) and \(\gamma \) are the vectors of regression parameters. That is, both \(T^*\) and C follow the proportional hazards frailty model (Kalbfleisch and Prentice 2002).

Suppose that the study consists of n independent subjects and there exists an noninformative censoring time \(C^*\), such as the administrative stopping time, that is independent of both T and C. Define the observed censoring time \(\tilde{C}\) = min(\(C,C^*\)), \(\delta = I ( T \le \tilde{C} )\), the observed censoring indicator, and \(\rho \) = I (\(C\le C^*\)), the administrative censoring indicator. For \(i = 1 ,\ldots , n\), let \(T_i\), \(\tilde{C}_i\), \(b_i\), \(\delta _i\), \(\rho _i\), \(X_i\) and \(Z_i\) be defined as T, \(\tilde{C}\), b, \(\delta \), \(\rho \), X and Z, respectively, but associated with subject i. Assume that the observed data have the form \(\{ \, O_i =( \tilde{C}_i , \delta _i , \rho _i , X_i , Z_i) , \; i = 1 ,\ldots , n \, \}.\)

To derive the likelihood function, let \(\lambda _{C} (t)\) denote the first derivative of \(\Lambda _{C} (t)\) and \(f ( b_i ;\sigma ^2)\) the density function of the \(b_i\)’s, which is assumed to be known up to \(\sigma ^2\). Denote the survival functions of \(T^*\) and C by \(S_t(t)\) and \(S_c(t)\), respectively, and let the density function of C be \(f_c(t)\). For the derivation of the likelihood function, note the following facts. For the subjects whose have failed before the observed censoring time \(\tilde{C}_i\) (\(\delta _i=1\)), the subject has to be non-cured and thus gives the likelihood contribution \(\pi (Z_i)(1-S_t(\tilde{C}_i))\). If the failure has not been observed by the observed censoring time \(\tilde{C}_i\) (\(\delta _i=0\)), the corresponding subject could be either non-cured but has survived up to time \(\tilde{C}_i\) or cured with survival probability being 1 all the time. In this case, the likelihood contribution would be \(\pi (Z_i)S_t(\tilde{C}_i)+1-\pi (Z_i)\). It follows that the full likelihood function of \(\theta = (\alpha , \beta , \gamma , \sigma ^2 , \Lambda _T(\cdot ) , \Lambda _C (\cdot ))\) has the form \(L_f ( \theta ) = \prod _{i=1}^n L_{f} (\theta |O_i)\), where

In the next section, we will discuss estimation of the parameter \(\theta \).

3 Inference procedure

3.1 Sieve maximum likelihood estimation

To estimate the unknown parameter \(\theta \), it is apparent that it would be natural to maximize the likelihood function \(L_f ( \theta )\). On the other hand, it is well-known that this may not be straightforward due to the dimensions of \(\Lambda _{T} (t)\) and \(\Lambda _{C} (t)\). For this, by following Huang and Rossini (1997) and others, we propose to approximate \(\Lambda _{T} (t)\) and \(\Lambda _{C} (t)\) by the Bernstein polynomials over \(I = [ a , b ]\), where a and b denote the lower and upper bounds of the observation times \(\tilde{C}_i\)’s. Denote the parameter space of \(\theta \) by \(\Theta \) and define

where \({\mathcal {B}} = \left\{ (\alpha ',\beta ',\gamma ',\sigma ^2)' \in R^{2p+q+1} ,\Vert \alpha \Vert +\Vert \beta \Vert +\Vert \gamma \Vert +|\sigma ^2|\le M\right\} \),

In the above, p denotes the dimension of \(\beta \) and \(\gamma \), q the dimension of \(\alpha \), the \(\xi _i\)’s and \(\eta _i\)’s are unknown parameters to be estimated, and

with m representing the degree of the Bernstein polynomials which is usually chosen as \(m = O( n^\nu )\) for some \(0<\nu <1/2,\) \(M_n=O(n^{a}),\) \(0<a<1/2\). Then it can be shown that \(\Theta _n=\mathcal {B}\times {\mathcal {M}}^1_n\times {\mathcal {M}}_n^2\) can be used as a sieve space of \(\Theta \) (Lorentz 1953), and thus it is natural to define the estimator \(\hat{\theta } = (\hat{\alpha }, \hat{\beta }, \hat{\gamma }, \hat{\sigma }^2, \hat{\Lambda }_{1n} (\cdot ), \hat{\Lambda }_{2n} (\cdot ) )\) as the value of \(\theta \) that maximizes the log likelihood function \(l ( \theta )\) over \(\Theta _n\).

In the following, we will first establish the asymptotic properties of \(\hat{\theta }\) and then discuss its determination. To describe the asymptotic properties, for any \(\theta _j^* = (\alpha _j^* , \beta _j^* , \gamma _j^* , \sigma _j^{2 *} , \Lambda _{T 1}^* (\cdot ) , \Lambda _{C j}^* (\cdot )) \in \Theta , j = 1 , 2 ,\) define the distance

and the \(L_2(P)\) norm \(\Vert g(Y)\Vert _2 = (\int |g|^2 dP)^{1/2}\) for a function g and a random variable Y with probability measure P. Let \(\theta _0 = ( \alpha _0,\beta _0,\gamma _0,\sigma _0^2,\Lambda _{T0}, \Lambda _{C0})\) denote the true value of \(\theta \). We will first describe the consistency and convergence rate of \(\hat{\theta }\) and then the asymptotic normality of \(\hat{\alpha },\) \(\hat{\beta },\) \(\hat{\gamma }\) and \(\hat{\sigma }^2.\) All limits below are taken as \(n \rightarrow \infty \).

Theorem 1

Suppose that Conditions A1–A4 described in the Appendix hold. Then \(\hat{\alpha },\) \(\hat{\beta },\) \(\hat{\gamma }\) and \(\hat{\sigma }^2\) are strong consistent estimators of \(\alpha _{0},\) \(\beta _{0},\) \(\gamma _0\) and \(\sigma _0^2\), respectively, and furthermore, we have

almost surely.

Theorem 2

Suppose that Conditions A1-A4 described in the Appendix hold. Then we have

Theorem 3

Assume that Conditions A1 - A5 described in the Appendix hold. Then we have

in distribution and furthermore, \((\hat{\alpha }',\hat{\beta }',\hat{\gamma }',\hat{\sigma }^2)'\) is semiparametrically efficient, where \(\Sigma \) is given in the Appendix.

The proofs of the theorems above are sketched in the Appendix. In the next subsection, we will discuss the determination of \(\hat{\theta }\) and present an EM algorithm.

3.2 EM algorithm

To determine \(\hat{\theta }_n\), we will employ or develop an EM algorithm. For this, first note that if the \(b_i\)’s were observed, the pseudo-complete data likelihood function based on \(\{ (O_i , b_i ) , \; i=1,\ldots ,n\}\) would be

In the above, \(\theta _1 = ( \alpha , \beta , \Lambda _T (\cdot ) )\), \(\theta _2 = ( \gamma , \Lambda _C (\cdot ) )\)

and \(L_3 (\sigma ^2) = \prod _{i=1}^n f (b_i;\sigma ^2)\).

Let \(\hat{\theta }^{(k)}\) denote the estimator of \(\theta \) obtained after the k-th iteration. Note that for the \((k+1)\)th iteration, in the E-step, we need to computer

where \(E_b \{ \, \log L_c(\theta )| O_i 's ,\hat{\theta }^{(k)} \, \}\) denotes the conditional expectation of \(\log L_c(\theta )\) with respect to b given \( O_i 's\) and the current parameter value \(\hat{\theta }^{(k)}.\) In the M-step, we need to maximize

with respect to \(\theta _1\), \(\theta _2\) and \(\sigma ^2\), respectively. For all expectations above, it is clear that they do not have closed forms and for this, by following Chen et al. (2009) and others, we can employ the Monte Carlo method. Specifically, for any arbitrary function \(h(b_i)\) and sufficiently large L, we can approximate the conditional expectation \(E_b \{ \, h (b_i) | O_i 's , \hat{\theta }^{(k)} \, \}\) by

In the above,

and \(\{b_{il},l=1,\ldots ,L\}\) are generated from the density function \(f ( b_i ; \sigma ^{2 (k)} )\).

In the M-step, to obtain the updated estimator of \(\theta _1\) in the k-th iteration, we need the following score functions

and

for \(j = 0 , 1,\ldots , m\). In the above,

and

For the determination of the updated estimators of \(\gamma \) and \(\Lambda _C (t)\) in the M-step, we need the following score functions

and

where \(B'_j(t,m,a,b)\) denotes the first order derivative of \(B_j(t,m,a,b)\) for \(j = 0 , 1,\ldots , m\). Note that in the sieve space \(\Theta _n\), \(\lambda _C (t)\) has the form

Finally in the M-step, the updated estimator of \(\sigma ^2\) has a closed form

By combining all discussions above, the EM algorithm can be described as follows.

-

Step 0. Choose m and initial values for all parameters or \(\hat{\theta }^{(0)}\).

-

Step 1. At the \((k+1)\)th iteration, generate a random sample \(\{ \, b_{il} , \; l = 1 ,\ldots , L , i = 1 ,\ldots , n \, \}\) from \(f ( b ; (\hat{\sigma }^{(k)})^2)\).

-

Step 2. Calculate the five conditional expectations \(E_{b_i} ( \exp \{b_i\} | O_i 's , \hat{\theta }^{(k)})\), \(E_{b_i} ( b_i^2 | O_i 's , \hat{\theta }^{(k)})\), \(E_{b_i} ( \phi _{i1} ( b_i ; \theta _1 ) | O_i 's , \hat{\theta }^{(k)})\), \(E_{b_i} ( \phi _{i2} ( b_i ; \theta _1 ) | O_i 's , \hat{\theta }^{(k)})\) and \(E_{b_i} ( \phi _{i3} ( b_i ; \theta _1 ) | O_i 's , \hat{\theta }^{(k)})\) at \(\theta = \hat{\theta }^{(k)}\).

-

Step 3. Determine the updated estimators \(\hat{\alpha }^{(k+1)}\) and \(\hat{\beta }^{(k+1)}\) by solving the equations \(S_\alpha ( \theta _1) = 0\) and \(S_\beta ( \theta _1) = 0\) with \(\xi _j = \hat{\xi }_j^{(k)}\), \(j = 0 , 1 ,\ldots , m\).

-

Step 4. Determine the updated estimators \(\hat{\xi }_j^{(k+1)}\)’s by solving the equations \(\{ \, S_{\xi _j} ( \theta _1 ) = 0 \, \}\) with \(\alpha = \hat{\alpha }^{(k+1)}\) and \(\beta = \hat{\beta }^{(k+1)}\).

-

Step 5. Determine the updated estimator \(\hat{\gamma }^{(k+1)}\) by solving the equation \(S_\gamma (\theta _2) = 0\) with \(\eta _j = \hat{\eta }_j^{(k)}\), \(j = 0 , 1 ,\ldots , m\).

-

Step 6. Determine the updated estimators \(\hat{\eta }_j^{(k+1)}\)’s by solving the equations \(\{ \, S_{\eta _j} ( \theta _2 ) = 0 \, \}\) with \(\gamma = \hat{\gamma }^{(k+1)}\).

-

Step 7. Determine the updated estimator \((\hat{\sigma }^{(k+1)})^2\) by the estimator given in (5).

-

Step 8. Repeat Steps 1–7 until the convergence.

To implement the EM algorithm described above, one needs to pay attention to several issues. One is the methods to be used for solving the equations involved in Steps 3–7 and for this, various algorithms can be employed. In the numerical studies below, we applied the Newton-Raphson algorithm in Steps 3 and 5 and the Broyden–Fletcher–Goldfard–Shanno method (Fletcher 1987) in Steps 4 and 6. The selection of initial values is another important issue and for this, we employed the following procedure that temporarily treats the current status data as right-censored data and ignores the dependent censoring. More specifically, for obtaining the initial values for \(\alpha \), \(\beta \) and \(\xi _j\), \(j=0,1,\ldots ,m\), we apply the method given in the data. The initial values for \(\alpha \) and \(\beta \) are set to be the corresponding maximum likelihood estimators and the initial values for \(\xi _j\) are obtained by fitting the estimated cumulative baseline hazard function of T using Bernstein polynomials. Similarly the initial value of \(\gamma \) is taken to be the maximum partial likelihood estimator and the initial values of the \(\eta _j\)’s are obtained by fitting the Nelson–Aalen estimator for the cumulative baseline hazard function of C using Bernstein polynomials.

For the convergence, we employed two criteria. One is to check \(\Vert \hat{\alpha }^{(k+1)} - \hat{\alpha }^{(k)}\Vert \le \epsilon \), \(\Vert \hat{\beta }^{(k+1)} - \hat{\beta }^{(k)}\Vert \le \epsilon \), \(\Vert \hat{\xi _j}^{(k+1)} - \hat{\xi _j}^{(k)}\Vert \le \epsilon \), \(\Vert \hat{\gamma }^{(k+1)}-\hat{\gamma }^{(k)}\Vert \le \epsilon \), \(\Vert \hat{\eta _j}^{(k+1)} - \hat{\eta _j}^{(k)}\Vert \le \epsilon \), and \(| \hat{\sigma }^{(k+1)} - \hat{\sigma }^{(k)} |^2 \le \epsilon \) for a given positive number \(\epsilon \), \(j = 0 , 1 ,\ldots , m\). The other is to stop the EM algorithm when the standard errors of the \(\alpha ^{(k)}\)’s, \(\beta ^{(k)}\)’s, \(\xi _{j}^{(k)}\)’s, \(\gamma ^{(k)}\)’s, \(\eta _{j}^{(k)}\)’s and \(\sigma ^{(k)}\)’s from 30 consecutive iterations are all smaller than \(\epsilon _1\), also a given positive number. Note that it is well known that the Monte Carlo method adopted for the approximation of the condition expectation in (4) could cause approximation errors (Chen et al. 2012). Also we observed that the estimators may not converge completely but rather fluctuate within a small range and these motivated the use of the two criteria above together.

A third issue for the implementation of the EM algorithm above is the selection of m, the degree of the Bernstein polynomials which controls the smoothness of the approximation. It is apparent that a simple approach is to try several different values that are in the order \(O(n^\nu )\) and compare the obtained results. By following the BIC criterion for model selection (Burnham and Anderson 2002), an alternative is to choose the value of m that minimizes

For inference about \(\theta \), of course, one also needs to estimate \(\Sigma \) or the covariance matrix of \(\hat{\theta }_n\). For this, we employ the method given in Louis (1982) and estimate it by the inverse of the matrix

4 A simulation study

Now we present some results obtained from an extensive simulation study conducted to assess the performance of the estimation procedure proposed in the previous sections. In the following, we will focus on the situations where \(X \ne Z\) and b follows the normal distribution. The results for the situation with \(X = Z\) gave similar results. For the generation of the covariates, we considered several distributions including the uniform distribution and the normal distribution, and assumed that they can be either one- or two-dimensional. To generate the data, we first generated the \(X_i\)’s and the \(b_i\)’s and then the \(C_i\)’s from model (3) with \(\Lambda _{C} (t) = 0.01 \, t^2\). of course, before the generation of the failure time \(T_i\), we also need to generate the \(U_i\)’s based on model (1) and generate the \(T_i^*\)’s based on model (2) with \(\Lambda _{T} (t) = 0.05 \, t^2\). The noninformative censoring time \({C}_i^*\) was set to be a constant \(\tau \), which was chosen to give the desired percentage of the right-censored \(C_i\)’s. The results given below are based on \(n = 200\) or 400 and with 1000 replications.

Tables 1, 2 and 3 give the results on estimation of regression parameters \(\beta \), \(\alpha \) and \(\gamma \) and variance parameter \(\sigma \) for the single covariate situation with the \(Z_i\)’s following the uniform distribution over ( 0 , 2), \(X_i\)’s following N(0, 1), and \(\alpha _0 = 1\), \(( \beta _0 , \gamma _0 ) = (0,0)\), (0, 0.5), (0.5, 0), or (0.5, 0.5). Table 1 considered the situation with \(\sigma _0 = 0 \), meaning no correlation between T and C, Table 2 studied the situation with \(\sigma _0\) = 0.5, and Table 3 assumed \(\sigma _0 \,=\, 1\). They include the estimated bias (Bias) given by the average of the estimates minus the true value, the sample standard deviation (SSD) of the estimates, the average of the estimated standard errors (SEE), and the 95 % empirical coverage probability (CP). The right-censoring rate (Cr) and the cure proportion (Cure%) for each scenario are also provided. For the degree of the Bernstein polynomials, similar to Zhou et al. (2016), we took \(m = [n^{1/4}]= 3\) for \(n = 200\) and \(m = [n^{1/4}]= 4\) for \(n = 400\).

One can see from the tables that the proposed estimator seems to be unbiased and the variance estimation is reliable as it is close the sample standard deviation. In addition, the 95 % empirical coverage probabilities are close to 0.95, indicating that the normal approximation to the distribution of the proposed estimator appears to be appropriate. Furthermore, as expected, both the bias and the estimated standard error decreased and the 95 % empirical coverage probability became closer to 0.95 as the sample size increased. Also the results seem robust for different magnitude of \(\sigma _0\) and especially it is interesting to note that under the situation that the censoring time is actually independent with the failure time (\(\sigma _0=0\)), the proposed estimation procedure provides robust inference for the regression parameters \(\alpha \), \(\beta \) and \(\gamma \). However, we found that the estimate for \(\sigma \) is sensitive to its initial value and the estimate for \(\sigma \) became unreliable if its initial value are chosen to be large.

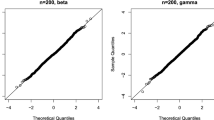

The results obtained for the two covariate situation are presented in Table 4 with \(\beta _0 = ( 1 , 1.5 ) '\), \(\alpha _0 = ( 1 , 1 ) '\), \(\gamma _0 = ( 1 , 1 ) '\) or \(( 1 , -1 )'\), and \(\sigma _0 = 0.5\). Here we considered the case where \(Z_1\) and \(Z_2\) were generated from the standard normal distribution and the uniform distribution over \(( -1 , 1 )\), respectively, and \(X_1\) and \(X_2\) were generated from the Bernoulli distribution with \(p=0.5\) and the standard normal distribution, respectively. For the degree of the Bernstein polynomials, again we chose \(m = 3\) for \(n = 200\) and \(m = 4\) for \(n = 400\). It is apparent that they gave similar conclusions to those seen in the one covariate scenarios. To see the performance of the proposed method on estimation of the cumulative hazard functions \(\Lambda _T (t)\) and \(\Lambda _C (t)\), Fig. 1 displays the averages of the proposed estimators for the situation of \(\gamma = ( 1 , 1)'\) with \(n = 400\). One can see that they indicate that the proposed estimation approach seems to perform well. We also considered other set-ups, including using different values for m, and obtained similar results.

5 An application

Now we apply the methodology proposed in the previous sections to a set of current status data arising from a 2-year tumorigenicity study conducted by the National Toxicology Program. The study consists of groups of 50 male and female F344/N rats and B6C3F1 mice and they were exposed to chloroprene at concentrations of 0, 12.8, 32, or 80 ppm by inhalation, 6 h per day, 5 days per week, for 2 years. Some animals died naturally during the study and others who survived at the end of the study were sacrificed. At the time of death or sacrifice, each animal was examined for the presence or absence of tumors in various organs. Therefore only current status data are available for the tumor occurrence times. In the following, we will focus on a liver tumor, hepatocellular carcinoma, from 200 male and female B6C3F1 mice in the control and the highest dose group. As it is well-known, as most tumors, the liver tumor is between lethal and nonlethal, or its occurrence can have some effects on the death rate. In other words, the tumor onset time (failure time) and death time (censoring time) are correlated and thus we have informative current status data.

Before applying the proposed inference procedure, to check the possible existence of a cured subgroup in the data, we first considered the model for the dependent current status data without incorporating the cure model. Figure 2 presents the estimated marginal survival functions for the time to tumor onset, and one can see that the estimated survival curves for both female and male control groups have a clear non-zero plateau at the tail, indicating the possible existence of a non-susceptible mice subgroup. In other words, it seems to be reasonable and more appropriate to incorporate the cure model for analyzing the data here.

For the analysis, define \(X_1 = 0\) for female animals and 1 otherwise and \(X_2 = 0\) for the animals in the control group and 1 otherwise. That is, we have two covariates. For the cure model, we assume that it has the form

Table 5 presents the estimated covariate effects as well as the estimated standard errors and the p-values for testing no covariate effects. Here for comparison, we considered several different values for m and the results given in the table were obtained based on \(m = 3\), 4 and 5. By using the BIC criterion defined in (6), \(m = 4\) yields the best result .

With respect to the dose effect on tumor growth, the results in Table 5 suggest that the mice in the higher dose group had a significantly higher rate or hazard of developing tumor. The male mice seem to be also more likely than the female mice to develop tumors, however, the effect is not statistically significant. The estimated non-susceptible (cure) probabilities are given in Table 6. They indicate that the animals in the higher dose group seem to be more susceptible to the tumor, but the gender did not seem to have significant effect on the cure rate. One possible reason for this could be that most health mice in normal environment do not develop liver tumor in their whole life. With respect to the dose effect on the animal death rate, the conclusion is similar to that for the dose effect on tumor onset, but the death rate did not seem to have any significant relationship with the gender of the animals. In addition, we obtained the estimate of \(\sigma ^2\) being 0.672, which seems to indicate the existence of some informative censoring. On the other hand, one should be careful about this as was shown by the simulation study, the estimate can be sensitive to the non-testable dependence assumption and initial value.

To see the dose and gender effects on tumor onset graphically, Fig. 3 presents the separate, estimated marginal survival functions for the times to tumor onset and they gave similar conclusions. Note that the left plot of Fig. 3 presents the estimated survival functions for the entire population, while the right plot presents the estimated survival functions for the susceptible subpopulation. Here \(\hat{S}(t|U=1)\) is the estimated marginal survival function for \(T^*\) based on model (2) with \(b=0\). By comparing Figs. 2 and 3, one can see that as mentioned above, omitting the cured subgroup resulted in an overestimation of the survival of the susceptible mice.

6 Concluding remarks

In this paper, we discussed regression analysis of current status data in the presence of cured subgroup and informative censoring. For the problem, we employed the mixture cure rate model and frailty model approaches. To estimate regression parameters as well as other parameters, a semiparametric sieve maximum likelihood estimation approach was developed with the use of Bernstein polynomials. Also an EM algorithm was presented for the determination of the proposed estimators. The procedure has the advantage of reducing the dimensionality of the estimation problem, thus relieving the computation burden, and can be easily implemented. In addition, the proposed estimators were shown to be consistent and asymptotically normally distributed, and simulations studies suggested that the method works well for practical situations.

There exist several directions for future research. One is that in the preceding sections, it has been assumed that the failure time of interest follows the proportional hazards model marginally. It is apparent that this may be questionable in some situations and some other models such as the additive hazards model or the linear semiparametric transformation model may be more appropriate. Thus it would be useful to develop similar inference procedures for these models. Another direction for future research is that in the preceding sections, the focus has been on the mixture cure model and sometimes one may prefer promotion time model (Liu and Shen 2009; Zeng et al. 2006), which has the advantage of allowing one to model the underlying failure time variable uniformly. It is apparent that although the idea discussed above may still apply to the latter situation, it may not be easy or straightforward to establish the estimation procedure.

It is well-known that under right censoring, the independence censoring assumption cannot be tested without strong modeling and this is true for the situation discussed here. On the other hand, under the models described above, one may ask if it is possible to test the independence censoring assumption by using the estimator of \(\sigma ^2\). As discussed above, one may be careful about this as the simulation study suggested that in the case of dependent censoring with \(\sigma _0^2 > 0\), the estimator seems to perform well. On the other hand, for the situation of independent censoring with \(\sigma _0^2 = 0\), the estimator seems to be sensitive to the initial value of \(\sigma ^2\). In other words, more work is needed for this or one may want to develop some other more direct procedures for testing the assumption.

References

Burnham KP, Anderson DR (2002) Model selection and multimodel inference: a practical information-theoretic approach. Springer, New York

Chang IS, Wen CC, Wu YJ (2007) A profile likelihood theory for the correlated gamma-frailty model with current status family data. Stat Sin 17:1023–1046

Chen M, Ibrahim J, Sinha D (2002) Bayesian inference for multivariate survival data with a cure fraction. J Multivar Anal 80:101–126

Chen X, Fan Y, Tsyrennikov V (2006) Efficient estimation of semiparametric multivariate copula models. J Am Stat Assoc 101:1228–1240

Chen M, Tong X, Sun J (2009) A frailty model approach for regression analysis of multivariate current status data. Stat Med 28:3424–3426

Chen C, Lu T, Chen M, Hsu C (2012) Semiparametric transformation models for current status data with informative censoring. Biom J 54:641–656

Chen T (2013) Statistical issues and challenges in immuno-oncology. J Immunother Cancer 1:18

Fang H, Li G, Sun J (2005) Maximum likelihood estimation in a semiparametric logistic/proportional-hazards mixture model. Scand J Stat 32:59–75

Farewell VT (1982) The use of mixture models for the analysis of survival data with longterm survivors. Biometrics 38:1041–1046

Finkelstein DM, Schoenfeld DA (1989) Analysis of multiple tumor data from a rodent carcinogenicity experiment. Biometrics 45:219–230

Fletcher R (1987) Practical methods of optimization, 2nd edn. Wiley, New York

Huang J (1996) Efficient estimation for the proportional hazard model with interval censoring. Ann Stat 24:540–568

Huang J, Rossini AJ (1997) Sieve estimation for the proportional odds failure time regression model with interval censoring. J Am Stat Assoc 92:960–967

Huang X, Wolfe R (2002) A frailty model for informative censoring. Biometics 58:510–520

Jewell NP, van der Laan MJ (1995) Generalizations of current status data with applications. Lifetime Data Anal 1:101–109

Kalbfleisch JD, Prentice RL (2002) The statistical analysis of failure time data. Wiley, Hoboken

Lam KF, Xue H (2005) A semiparametric regression cure model with current status data. Biometrika 92:573–586

Li C, Taylor JMG, Judy PS (2001) Identifiability of cure models. Stat Probab Lett 54:389–395

Li Y, Tiwari R, Guha S (2007) Mixture cure survival models with dependent censoring. J R Stat Soc Series B 69:285–306

Lin D, Oakes D, Ying Z (1998) Additive hazards regression with current status data. Biometrika 85:289–298

Liu Hao, Shen Yu (2009) A semiparametric regression cure model for interval censored data. J Am Stat Assoc 104:1168–1178

Lorentz GG (1953) Bernstein polynomials, 1st edn. University Toronto Press, Toronto

Louis TA (1982) Finding the observed information matrix when using the EM algorithm. J R Stat Soc Ser B 40:226–233

Lu W, Ying Z (2004) On semiparametric transformation cure models. Biometrika 91:331–343

Ma S (2009) Cure model with current status data. Stat Sin 19:233–249

Othus M, Li Y, Tiwari R (2007) A class of semiparametric mixture cure survival models with dependent censoring. J Am Stat Assoc 104:1241–1250

Pollard D (1984) Convergence of stochastic processes. Springer, New York

Rondeau V, Schaffner E, Corbiere F, Gonzalez J and Pelissier S (2011) Cure frailty models for survival data: application to recurrences for breast cancer and to hospital readmissions for colorectal cancer. Stat Methods Med Res 1–18

Rossini AJ, Tsiatis AA (1996) A semiparametric proportional odds regression model for the analysis of current status data. J Am Stat Assoc 91:713–721

Shen X (1997) On methods of sieves and penalization. Ann Stat 25:2555–2591

Shen X, Wong WH (1994) Convergence rate of sieve estimates. Ann Stat 22:580–615

Sun J (2006) The statistical analysis of interval-censored failure time data. Springer, New York

Van der Varrt AW, Wellner JA (1996) Weak convergence and empirical processes. Springer, New York

Zeng D, Yin G, Ibrahim JG (2006) Semiparametric transformation models for survival data with a cure fraction. J Am Stat Assoc 101:670–684

Zhang Z, Sun J, Sun L (2005) Statistical analysis of current status data with informative observation times. Stat Med 24:1399–1407

Zhou Q, Tao Hu, Sun J (2016) A sieve semiparametric maximum likelihood approach for regression analysis of bivariate interval-censored failure time data. J Am Stat Assoc. doi:10.1080/01621459.1158113

Acknowledgments

The authors wish to thank the editor and two reviewers for their many constructive and helpful comments and suggestions. This work was partly supported by the NSFC of P. R. China (11201317, 11371062,11671274) to Hu, and NSF and a NIH Grant to Sun.

Author information

Authors and Affiliations

Corresponding author

Appendix: Proofs of the asymptotic properties

Appendix: Proofs of the asymptotic properties

In this appendix, we will sketch the proofs for Theorems 1–3 and for this, we will mainly use some results about empirical processes given in van der Vaart and Wellner (1996). In the following, for a function f and a random variable X with the distribution P, define \(Pf = \int f(x)dP(x)\) and \(P_n f = n^{-1}\sum _{i=1}^n f(X_i),\) and let K represent a generic constant that may vary from place to place. For the proof, we need the following regularity conditions.

-

(A1)

(i) \((\alpha ',\beta ',\gamma ',\sigma ^2)'\in {\mathcal {B}},\) where \({\mathcal {B}}\) is a compact subset of \(R^{2p+q+1}\); (ii) The rth derivative of \(\Lambda _T(\cdot )\) (\(\Lambda _C(\cdot )\)) is bounded and continuous.

-

(A2)

(i) Assume \(\sup _{\theta \in \Theta ,O}|l(\theta ,O)|n^{-\varsigma }\) is bounded, \(0<\varsigma <1/2\); (ii) Suppose that there is a sequences of positive numbers \(\{K_n\}\) such that for any \(\theta _1,\theta _2\in \Theta _n,\) \(|l(\theta ^1,O)- l(\theta ^2,O)|\le K_n(\Vert \alpha ^1-\alpha ^2\Vert +\Vert \beta ^1-\beta ^2\Vert +\Vert \gamma ^1-\gamma ^2\Vert +|(\sigma ^1)^2-(\sigma ^2)^2|+\Vert \Lambda _1^1-\Lambda _1^2\Vert _\infty +\Vert \Lambda _2^1-\Lambda _2^2\Vert _\infty ),\) where \(K_n=O(n^b),0<b<1/2.\)

-

(A3)

Let \(l(\theta ,O) = \log L_f ( \theta |O)\) with \(L_f(\theta |O)\) given in Sect. 2. For any \(\theta ^1,\theta ^2\in \Theta ,\) if \(l(\theta ^1,O)=l(\theta ^2,O),\) then \(\theta ^1=\theta ^2.\)

-

(A4)

For every \(\theta \) in a neighborhood of \(\theta _0\), \(P(l(\theta ,O)-l(\theta _0,O))\preceq -d^2(\theta , \theta _0)\), where \(\preceq \) means “smaller than, up to a constant”.

-

(A5)

(i). \(0<E[(\dot{l}(\theta _0,O)[v])^2]<\infty \) for \(v\ne 0,\) \(v\in V,\) where \(\dot{l}(\theta _0,O)[v]\) is defined in (S6) below. (ii). For \(\theta \in \{\theta :d(\theta ,\theta _0)=O(\delta _n)\},\) \(P\{\ddot{l}(\theta ,O)[\theta -\theta _0,\theta -\theta _0]-\ddot{l}(\theta _0,O)[\theta -\theta _0,\theta -\theta _0]\}=O(\delta _n^3)\) and \(\delta _n^3=O(n^{-1}),\) where \(\ddot{l}(\theta ,O)[v,\tilde{v}]\) is defined in (S7).

Condition (A1) is commonly used in the literature of semiparametric regression with interval censored data (e.g., Huang and Rossini 1997; Lam and Xue 2005; Ma 2009 and others). Note that since the likelihood function involves integral, the relationship between likelihood function and covariates is complex. So in order to avoid assuming the bounded support for covariates and high order derivative of log-likelihood exists, Conditions (A2), (A4) and (A5) are used. Conditions (A2), (A4) and (A5) can be verified under the boundedness conditions and differentiability of the log-likelihood function. In this case, \(\sigma =0\) and \(b=0\) in Condition (A2).

It is easy to see that Condition (A3) is an identification condition and some detailed discussion on the issue can be found in Li et al. (2001) among others. Actually it can be replaced be the following sufficient condition, which may be easier to understand. To describe the sufficient condition, define \(\vartheta =(\alpha ', \beta ', \gamma ', \sigma ^2)'\) and

Then a sufficient condition for the identifiability of the parameters can be that: There exists \(t^*\) in the support of the conditional distribution of \(\tilde{C}\) given \(X=x,Z=z\) for which there are \(2p +q+3\) different values \((\delta ,\rho ,X,Z)\) such that if

then \(a_1=0,\) \(a_2=a_3=0,\) where \((\delta ,\rho )=(0,0),(1,0),(0,1)\) or (1, 1). The proof for the sufficient condition above is similar to that for Theorem 2.1 in Chang et al. (2007), and similar conditions can be found, for example, in the context of multivariate current status data under Gamma frailty model (Chang et al. 2007).

Lemma 1

(Calculation of the covering number ) Under Conditions (A1) and (A2), the covering number of the class \({\mathcal {F}}=\{n^{-\varsigma }l(\theta ,O):\theta \in \Theta _n\}\) satisfies

with \(p_n=2p+q+1+2(m+1).\)

Lemma 2

(Uniform convergence) Under Conditions (A1) and (A2), we have

almost surely.

Now we are ready for the proofs of Theorems 1–3.

1.1 Proof of Theorem 1

By Lemma 1, for any \(\varepsilon >0,\) the covering number (definition 2.1.5 in van der Vaart and Wellner 1996) of the class \({\mathcal {F}}=\{n^{-\varsigma }l(\theta ,{O}): \theta \in \Theta _n\}\) satisfies

with \(p_n=2p+q+1+2(m+1).\) Adopting similar proofs of Theorem 37 in (1984, p. 34) (see also Lemma 2), we have

Let \(M(\theta , O)=-l(\theta , O),\)

Denote \(K_\epsilon =\{\theta : d(\theta , \theta _0)\ge \epsilon , \theta \in \Theta _n\}.\)

If \(\hat{\theta }_n\in K_\epsilon ,\) we have

By identification condition (A3), we obtain that \(\inf _{K_\epsilon }PM(\theta ,O)-PM(\theta _0,O)=\delta _\epsilon >0.\)

with \(\zeta _n=\zeta _{1n}+\zeta _{2n}.\) Hence, we can get that \(\zeta _n \ge \delta _\epsilon .\) Furthermore, we have \(\{\hat{\theta }_n \in K_{\epsilon } \}\subseteq \{\zeta _n \ge \delta _{\epsilon }\}.\) By (S1) and Strong Law of Large Numbers, we have \(\zeta _{1n}=o(1),\) \(\zeta _{2n}=o(1),\) a.s. Therefore, by \(\cup _{k=1}^{\infty }\cap _{n=k}^{\infty }\{\hat{\theta }_n \in K_{\epsilon } \} \subseteq \cup _{k=1}^{\infty }\cap _{n=k}^{\infty }\{\zeta _n \ge \delta _{\epsilon }\}, \) we complete the proof.

1.2 Proof of Theorem 2

For any \(\eta >0,\) define the class \({\mathcal {F}}_{\eta }=\{l(\theta ,O)-l(\theta _{n0},O): \theta \in \Theta _n, d(\theta ,\theta _{n0})\le \eta \}\) with \(\theta _{n0}=(\alpha _0,\beta _0,\gamma _0,\sigma _0^2, \Lambda _{1n0},\Lambda _{2n0}).\) Following the calculation of (1994, p. 597), we can establish that \(\log N_{[ ]}(\varepsilon ,{\mathcal {F}}_{\eta },\Vert \cdot \Vert _{2})\le K N\log (\eta /\varepsilon )\) with \(N=2(m+1),\) where \(N_{[ ]}(\varepsilon , {\mathcal {F}}, d)\) denotes the bracketing number (see the definition 2.1.6 in van der Vaart and Wellner 1996) with respect to the metric or semi-metric d of a function class \({\mathcal {F}}.\) Moreover, Condition (A4) lead to \(\Vert l(\theta ,O)-l(\theta _{n0},O)\Vert _{2}^2\le K \eta ^2\) for any \(l(\theta ,O)-l(\theta _{n0},O)\in {\mathcal {F}}_{\eta }.\)

Therefore, by Lemma 3.4.2 of van der Vaart and Wellner (1996), we obtain

where \(J_{\eta }(\varepsilon ,{\mathcal {F}}_{\eta },\Vert \cdot \Vert _{2})=\int _0^\eta \{1+\log N_{[ ]}(\varepsilon ,{\mathcal {F}}_{\eta },\Vert \cdot \Vert _{2})\}^{1/2}d\varepsilon \le C N^{1/2}\eta .\) The right-hand side of (S4) yields \(\phi _n(\eta )=C(N^{1/2}\eta +N/n^{1/2}).\) It is easy to see that \(\phi _n(\eta )/\eta \) decreasing in \(\eta ,\) and \(r_n^2\phi _n(1/r_n)=r_nN^{1/2}+r_n^2N/n^{1/2}<2n^{1/2},\) where \(r_n=N^{-1/2}n^{1/2}=n^{(-\nu +1)/2},0<\nu <1/2.\) Hence \(n^{(1-\nu )/2}d(\hat{\theta },\theta _{n0})=O_P(1)\) by Theorem 3.2.5 of van der Vaart and Wellner (1996). This, together with \(d(\theta _{n0},\theta _0)=O_p(n^{-r/2})\) (Theorem 1.6.2 of Lorentz 1953), yields that \(d(\hat{\theta },\theta _0)=O_p(n^{-(1/2-\nu )}+n^{-r/2})\). This completes the proof.

To prove Theorem 3, we need further notations. Denote V as the linear span of \(\Theta -\theta _0\), where \(\theta _0\) denotes the true value of \(\theta =(\alpha ,\beta ,\gamma ,\sigma ^2,\varphi )\) and \(\Theta _0\) the true parameter space. Let \(l(\theta ,O)\) be the log-likelihood for a sample of size one and \(\delta _n=(n^{-(1-\nu )/2}+n^{-r\nu }).\) For any \(\theta \in \{\theta \in \Theta _0: d(\theta ,\theta _0)=O(\delta _n)\},\) define the first order directional derivative of \(l(\theta ,O)\) at the direction \(v\in V\) as

and the second order directional derivative as

Define the Fisher inner product on the space V as \(<v,\tilde{v}>=P\Big \{\dot{l}(\theta ,O)[v]\dot{l}(\theta ,O)[\tilde{v}]\Big \}\) and the Fisher norm for \(v\in V\) as \(\Vert v\Vert ^{1/2}=<v,v>.\) Let \(\bar{V}\) be the closed linear span of V under the Fisher norm. Then \((\bar{V}, \Vert \cdot \Vert )\) is a Hilbert space and the proof is complete.

1.3 Proof of Theorem 3

Define the linear functional of \(\theta \) as \(\gamma (\theta )=b_1'\alpha +b_2'\beta +b_3'\gamma +b_4\sigma ^2,\) where \(b=(b_1',b_2',b_3',b_4)'\) is any vector of \(2p+q+1\) dimension with \(\Vert b\Vert \le 1.\) Let \(\vartheta =(\alpha ',\beta ',\gamma ',\sigma ^2)'.\) Also for any \(v=(v_\vartheta ,h_1,h_2)\in V,\) define

Firstly, we will show smoothness of \(\gamma (\theta ).\) we need to establish that

Also, by the results in Shen (1997), boundedness of \(\gamma (\theta )\) is required for \(\gamma (\theta )=b_1'\alpha +b_2'\beta +b_3'\gamma +b_4\sigma ^2\) to be estimable at the \(\sqrt{n}\)-rate. For each component \(\vartheta _k,\) \(k=1,2,\cdots ,2p+q+1,\) we denote by \(\varphi ^*_k=(h_{1k}^*, h_{2k}^*)\) the solution to

where \(l_\vartheta =(l_\alpha ',l_\beta ', l_\gamma ',l_{\sigma ^2})', \) \(l_\vartheta \) is the derivatives of \(l(\theta ,O)\) with respect to \(\vartheta ,\) \(l_{h_1^*}[h_1]\) and \(l_{h_2^*}[h_2^*]\) are the direction derivatives of \(l(\theta ,O)\) with respect to \(\Lambda _T,\) \(\Lambda _{C}\) at the direction \(h_1^*\) and \(h_2^*\), respectively. \(e_k\) is a \((2p+q+1)\)-dimensional vector of zeros except the k-th element being equal to 1. Define the k-th element of \(S_\vartheta \) as \(l_\vartheta \cdot e_k-l_{h_1^*}[h_{1k}^*]-l_{h_2^*}[h_{2k}^*], k=1,\ldots ,2p+q+1.\)

Thus, we can show that

From the minimization procedure defined in (S7), using similar arguments to Sect. 3.2 in Chen et al. (2006), we obtain

It follows by the Riesz representation theorem that there exists \(v^*\in \bar{V}\) such that \(\dot{\gamma }(\theta _0)[v]=<v^*,v>\) for all \(v \in \bar{V}\) and \(\Vert v^*\Vert ^2=\Vert \dot{\gamma }(\theta _0)\Vert .\) Thus it follows from the Cram\(\acute{e}\)r-Wold device that to prove Theorem 3, it suffices to show that

in distribution since \(b'( (\hat{\alpha } \, - \, \alpha _0)', (\hat{\beta } \, - \, \beta _0)',(\hat{\gamma } \, - \, \gamma _0)',(\hat{\sigma }^2-\sigma _0^2) )=\gamma (\hat{\theta })-\gamma (\theta _0)=\dot{\gamma }(\theta _0)[\hat{\theta }-\theta _0]=<\hat{\theta }-\theta _0,v^*>.\) For this, in the following, we will prove that \(\sqrt{n}<\hat{\theta }-\theta _0,v^*>\rightarrow N(0, \Vert v^*\Vert ^2)\) in distribution.

Let \(\varepsilon _n\) be any positive sequence satisfying \(\varepsilon _n=o(n^{-1/2}).\) For any \(v^*\in \Theta _0,\) by (A1)(ii) and Theorem 1.6.2 of Lorentz (1953), there exists \(\Pi _nv^*\in \Theta _n\) such that \(\Vert \Pi _nv^*-v^*\Vert =o(1)\) and \(\delta _n\Vert \Pi _nv^*-v^*\Vert =o(n^{-1/2}).\) Also define \(r[\theta -\theta _0,O]=l(\theta ,O)-l(\theta _0,O)-\dot{l}(\theta ,O)[\theta -\theta _0].\)

Then by definition of \(\hat{\theta },\) we have

For \(I_1\), Chebyshev inequality and \(\Vert \Pi _nv^*-v^*\Vert =o(1),\) we can conclude that \(I_1=\varepsilon _n o_p(n^{-1/2}).\) For \(I_2,\) we have

where \(\tilde{\theta }\) lies between \(\hat{\theta }\) and \(\hat{\theta }\pm \varepsilon _n\Pi _n v^*.\) By Theorem 2.8.4 of van der Vaart and Wellner (1996), we know that \(\{\dot{l}(\theta ,O)[\Pi _n v^*]-\dot{l}(\theta _0,O)[\Pi _n v^*]: \Vert \theta -\theta _0\Vert =O(\delta _n)\}\) is Donsker class. Therefore, by Corollary 2.3.12 of van der Vaart and Wellner (1996), we have \(I_2=\varepsilon _n\times o_p(n^{-1/2}).\)

For \(I_3\), note that

where \(\tilde{\theta }\) lies between \(\theta _0\) and \(\theta \) and the last equation is due to Taylor expansion, conditions (A5) (ii). Therefore,

where the last equality holds because of Cauchy–Schwartz inequality and the facts that \(\delta _n\Vert \Pi _nv^*-v^*\Vert =o(n^{-1/2})\) and \(\Vert \Pi _nv^*\Vert ^2\rightarrow \Vert v^*\Vert ^2.\) Combing the above facts, together with \(P\dot{l}(\theta _0,O)[v^*]=0,\) we can establish that

Therefore, we obtain that \(\sqrt{n}<\hat{\theta }-\theta _0,v^*>=\sqrt{n}(P_n-P)\{\dot{l}(\theta _0,O)[v^*]\}+o_p(1)\rightarrow N(0,\Vert v^*\Vert ^2),\) where the asymptotic normality is guaranteed by the Central Limits Theorem and the asymptotic variance being equal to \(\Vert v^*\Vert ^2=\Vert \dot{l}(\theta _0,O)[v^*]\Vert ^2.\) This together with condition (A5) implies that \(n^{1/2}(\gamma (\hat{\theta }) -\gamma (\theta _0))=n^{1/2}<\hat{\theta }-\theta _0,v^*>+o_p(1)\rightarrow N(0,\Vert v^*\Vert ^2)\) in distribution. Hence the semiparametric efficiency can be established by applying the result of Theorem 4 in Shen (1997), which completes the proof.

Rights and permissions

About this article

Cite this article

Liu, Y., Hu, T. & Sun, J. Regression analysis of current status data in the presence of a cured subgroup and dependent censoring. Lifetime Data Anal 23, 626–650 (2017). https://doi.org/10.1007/s10985-016-9382-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10985-016-9382-z