Abstract

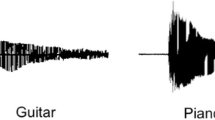

Most ecologically natural sensory inputs are not limited to a single modality. While it is possible to use real ecological materials as experimental stimuli to investigate the neural basis of multisensory experience, parametric control of such tokens is limited. By using artificial bimodal stimuli composed of approximations to ecological signals, we aim to observe the interactions between putatively relevant stimulus attributes. Here we use MEG as an electrophysiological tool and employ as a measure the steady-state response (SSR), a response typically studied with unimodal signals. In this experiment we quantify the responses to a bimodal audiovisual signal with different degrees of temporal (phase) congruity, focusing on stimulus properties critical to audiovisual speech. An amplitude-modulated auditory signal (‘pseudo-speech’) is paired with a radius-modulated ellipse (‘pseudo-mouth’), with the envelope of low-frequency modulations occurring in phase or at offset phase values across modalities. We observe (i) that it is possible to elicit an SSR to bimodal signals; (ii) that bimodal signals exhibit greater response power than unimodal signals; and (iii) that the SSR power at specific harmonics and sensors differentially reflects the congruity between signal components. Importantly, we argue that effects found at the modulation frequency and at the second harmonic reflect different aspects of the neural coding of multisensory signals. The experimental paradigm facilitates a quantitative characterization of properties of multisensory speech and other bimodal computations.
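As an illustration of the stimulus design described above, the following minimal Python sketch builds a shared low-frequency envelope, applies it to a sine carrier ('pseudo-speech') and to an ellipse radius ('pseudo-mouth'), and reads out power and relative phase at a chosen frequency with a single-bin discrete Fourier transform. All numeric values (2 Hz modulation, 100 Hz carrier, 1000 Hz sampling rate, radius range) and all function names are assumptions chosen for illustration; they are not the parameters or the analysis pipeline of the study.

```python
import cmath
import math


def envelope(mod_hz, phase_rad, duration_s, rate_hz):
    """Raised-sinusoid envelope in [0, 1] with a given starting phase."""
    n = int(round(duration_s * rate_hz))
    return [0.5 * (1.0 + math.sin(2 * math.pi * mod_hz * i / rate_hz + phase_rad))
            for i in range(n)]


def am_tone(carrier_hz, env, rate_hz):
    """Amplitude-modulated sine carrier: the hypothetical 'pseudo-speech'."""
    return [e * math.sin(2 * math.pi * carrier_hz * i / rate_hz)
            for i, e in enumerate(env)]


def ellipse_radius(base, depth, env):
    """Radius trajectory of the hypothetical 'pseudo-mouth' ellipse."""
    return [base + depth * e for e in env]


def dft_coeff(signal, freq_hz, rate_hz):
    """Single-bin DFT coefficient of the mean-removed signal, scaled by 1/N."""
    n = len(signal)
    mean = sum(signal) / n
    total = sum((x - mean) * cmath.exp(-2j * math.pi * freq_hz * i / rate_hz)
                for i, x in enumerate(signal))
    return total / n


def power_at(signal, freq_hz, rate_hz):
    """Power at one frequency, e.g. the modulation rate or its second harmonic."""
    return abs(dft_coeff(signal, freq_hz, rate_hz)) ** 2


def phase_offset(a, b, freq_hz, rate_hz):
    """Phase of b relative to a at freq_hz, in radians within (-pi, pi]."""
    return cmath.phase(dft_coeff(b, freq_hz, rate_hz) / dft_coeff(a, freq_hz, rate_hz))


if __name__ == "__main__":
    rate = 1000.0                                          # assumed sampling rate (Hz)
    aud_env = envelope(2.0, 0.0, 2.0, rate)                # auditory envelope
    vis_env = envelope(2.0, math.pi / 2, 2.0, rate)        # visual envelope, offset phase
    tone = am_tone(100.0, aud_env, rate)                   # audio samples
    radius = ellipse_radius(1.0, 0.5, vis_env)             # radius per sample
    print(power_at(aud_env, 2.0, rate), power_at(aud_env, 4.0, rate))
    print(phase_offset(aud_env, vis_env, 2.0, rate))
```

Setting the visual phase to zero gives the in-phase condition; any other value gives an offset-phase condition. The same `power_at` readout could in principle be applied to a recorded sensor time series at the modulation frequency and its second harmonic, although a real analysis would also need trial averaging and noise estimation.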

Acknowledgments

This project originated with a series of important discussions with Ken W. Grant (Auditory-Visual Speech Recognition Laboratory, Army Audiology and Speech Center, Walter Reed Army Medical Center). The authors would like to thank him for his extensive contributions to the conception of this work. The authors would like to thank Mary F. Howard and Philip J. Monahan for critical reviews of this manuscript. We would also like to thank Jeff Walker for technical assistance in data collection and Pedro Alcocer and Diogo Almeida for assistance with various R packages. This work was supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health: 2R01DC05660 to DP, JZS, and WJI and Training Grant DC-00046 support to JJIII and AER. Parts of this manuscript comprise portions of the first two authors’ doctoral theses.

Cite this article

Jenkins, J., Rhone, A.E., Idsardi, W.J. et al. The Elicitation of Audiovisual Steady-State Responses: Multi-Sensory Signal Congruity and Phase Effects. Brain Topogr 24, 134–148 (2011). https://doi.org/10.1007/s10548-011-0174-1

DOI: https://doi.org/10.1007/s10548-011-0174-1