Abstract

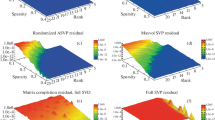

The development of randomized algorithms for numerical linear algebra, e.g. for computing approximate QR and SVD factorizations, has recently become an intense area of research. This paper studies one of the most frequently discussed algorithms in the literature for dimensionality reduction, specifically for approximating an input matrix by a matrix of low rank. We introduce a novel and rather intuitive analysis of the algorithm in [6], which allows us to derive sharp estimates and give new insights about its performance. This analysis yields theoretical guarantees about the approximation error and, at the same time, ultimate limits of performance (lower bounds) showing that our upper bounds are tight. Numerical experiments complement our study and show that our predictions closely match empirical observations.
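For concreteness, here is a minimal NumPy sketch of the basic Gaussian range-finder scheme, assuming the standard formulation described in [3]: form \(Y = A\Omega\) with an \(n \times (k+p)\) Gaussian test matrix \(\Omega\), orthonormalize \(Y\) to obtain \(Q\), and approximate \(A\) by \(QQ^*A\). The oversampling parameter \(p\), the helper names `randomized_range_approx` and `spectral_error`, and the synthetic test matrix are illustrative assumptions, not the exact experimental setup of the paper.

```python
import numpy as np


def randomized_range_approx(A, k, p=5, rng=None):
    """Return (Q, Q Q^T A) where Q spans the range of a Gaussian sketch A @ Omega.

    Illustrative sketch: Omega is n x (k + p) with i.i.d. N(0, 1) entries,
    and p is a user-chosen oversampling parameter.
    """
    rng = np.random.default_rng(rng)
    m, n = A.shape
    omega = rng.standard_normal((n, k + p))   # Gaussian test matrix
    Y = A @ omega                              # sample the range of A
    Q, _ = np.linalg.qr(Y)                     # orthonormal basis, m x (k + p)
    return Q, Q @ (Q.T @ A)                    # basis and projection of A onto it


def spectral_error(A, approx):
    """Spectral-norm error ||A - approx||."""
    return np.linalg.norm(A - approx, 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m, n, k = 200, 150, 10
    # Synthetic matrix A = U diag(sigma) V^T with geometrically decaying sigma.
    U, _ = np.linalg.qr(rng.standard_normal((m, n)))
    V, _ = np.linalg.qr(rng.standard_normal((n, n)))
    sigma = 0.5 ** np.arange(n)
    A = (U * sigma) @ V.T
    Q, A_hat = randomized_range_approx(A, k, p=5, rng=1)
    print("sigma_{k+1}     =", sigma[k])
    print("||A - Q Q^T A|| =", spectral_error(A, A_hat))
```

Since \(QQ^*A\) has rank at most \(k+p\), its error can never fall below \(\sigma_{k+p+1}(A)\); the question studied here is how close to \(\sigma_{k+1}(A)\) it typically lands.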

Notes

To accommodate this, previous works also provide bounds in terms of the singular values of \(A\) past \(\sigma _{k+1}\).

This follows from Hölder’s inequality \(\mathbb {E}|XY| \le (\mathbb {E}|X|^{3/2})^{2/3} (\mathbb {E}|Y|^3)^{1/3}\) with \(X = |g|^{2/3},\,Y = |g|^{4/3}\), which gives \(\mathbb {E} g^2 \le (\mathbb {E}|g|)^{2/3} (\mathbb {E} g^4)^{1/3}\).
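As a quick numerical sanity check of this Hölder step, assume \(g\) is a standard Gaussian variable (an assumption made here for illustration). Then \(\mathbb{E}|g| = \sqrt{2/\pi}\), \(\mathbb{E} g^2 = 1\) and \(\mathbb{E} g^4 = 3\), so the right-hand side equals \((6/\pi)^{1/3} \approx 1.24\). The sketch below evaluates the exact values and a Monte Carlo version; because Hölder's inequality also holds for the empirical distribution of a sample, the sample inequality holds exactly rather than only approximately.

```python
import math

import numpy as np

# Exact moments of a standard Gaussian g (assumed distribution).
E_abs_g = math.sqrt(2 / math.pi)   # E|g|
E_g2 = 1.0                         # E g^2
E_g4 = 3.0                         # E g^4

# Hoelder with exponents 3/2 and 3, X = |g|^{2/3}, Y = |g|^{4/3}:
#   E g^2 = E|XY| <= (E|X|^{3/2})^{2/3} (E|Y|^3)^{1/3} = (E|g|)^{2/3} (E g^4)^{1/3}
holder_rhs = E_abs_g ** (2 / 3) * E_g4 ** (1 / 3)

# The same inequality for the empirical distribution of a Gaussian sample.
rng = np.random.default_rng(0)
g = rng.standard_normal(1_000_000)
mc_lhs = np.mean(g**2)
mc_rhs = np.mean(np.abs(g)) ** (2 / 3) * np.mean(g**4) ** (1 / 3)

print(f"E g^2 = {E_g2:.4f}  <=  Hoelder bound = {holder_rhs:.4f}")
print(f"sample: {mc_lhs:.4f}  <=  {mc_rhs:.4f}")
```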

References

[1] Chen, Z., Dongarra, J.J.: Condition numbers of Gaussian random matrices. SIAM J. Matrix Anal. Appl. 27, 603–620 (2005)

[2] Geman, S.: A limit theorem for the norm of random matrices. Ann. Probab. 8, 252–261 (1980)

[3] Halko, N., Martinsson, P.-G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53, 217–288 (2011)

[4] Horn, R.A., Johnson, C.R.: Matrix analysis. Cambridge University Press, Cambridge (1985)

[5] Mardia, K.V., Kent, J.T., Bibby, J.M.: Multivariate analysis. Academic Press, New York (1979)

[6] Martinsson, P.-G., Rokhlin, V., Tygert, M.: A randomized algorithm for the decomposition of matrices. Appl. Comput. Harmon. Anal. 30, 47–68 (2011)

[7] Rokhlin, V., Szlam, A., Tygert, M.: A randomized algorithm for principal component analysis. SIAM J. Matrix Anal. Appl. 31, 1100–1124 (2009)

[8] Rudelson, M., Vershynin, R.: Non-asymptotic theory of random matrices: extreme singular values. In: Proceedings of the International Congress of Mathematicians, pp. 1576–1602 (2010)

[9] Sarlós, T.: Improved approximation algorithms for large matrices via random projections. In: Proceedings of the 47th Annual IEEE Symposium on Foundations of Computer Science, pp. 143–152 (2006)

[10] Silverstein, J.W.: The smallest eigenvalue of a large dimensional Wishart matrix. Ann. Probab. 13, 1364–1368 (1985)

[11] Woolfe, F., Liberty, E., Rokhlin, V., Tygert, M.: A fast randomized algorithm for the approximation of matrices. Appl. Comput. Harmon. Anal. 25, 335–366 (2008)

Acknowledgments

E. C. is partially supported by NSF via grant CCF-0963835 and by a gift from the Broadcom Foundation. We thank Carlos Sing-Long for useful feedback about an earlier version of the manuscript. These results were presented in July 2013 at the European Meeting of Statisticians. We would like to thank the reviewers for useful comments.

Appendix

We use well-known bounds to control the expectation of the extremal singular values of a Gaussian matrix. These bounds are recalled in [8], though they were known earlier.

Lemma 4.1

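If \(m>n\) and \(A\) is an \(m \times n\) matrix with i.i.d. \(\mathcal {N}(0,1)\) entries, then

\[\sqrt{m} - \sqrt{n} \le \mathbb {E}\,\sigma _{\min }(A) \le \mathbb {E}\,\sigma _{\max }(A) \le \sqrt{m} + \sqrt{n}.\]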
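The classical bounds for this setting (Gordon's inequality, as recalled in [8]) read \(\sqrt{m} - \sqrt{n} \le \mathbb{E}\,\sigma_{\min}(A) \le \mathbb{E}\,\sigma_{\max}(A) \le \sqrt{m} + \sqrt{n}\). The NumPy sketch below estimates both expectations by Monte Carlo so they can be compared with these bounds; the function name `mean_extreme_singular_values`, the number of trials and the matrix sizes are arbitrary illustrative choices.

```python
import numpy as np


def mean_extreme_singular_values(m, n, trials=200, seed=0):
    """Monte Carlo estimates of E sigma_min(A) and E sigma_max(A), A m x n Gaussian."""
    rng = np.random.default_rng(seed)
    smin = np.empty(trials)
    smax = np.empty(trials)
    for t in range(trials):
        # Singular values only; NumPy returns them in descending order.
        s = np.linalg.svd(rng.standard_normal((m, n)), compute_uv=False)
        smax[t], smin[t] = s[0], s[-1]
    return smin.mean(), smax.mean()


if __name__ == "__main__":
    for m, n in [(50, 20), (200, 100), (400, 300)]:
        mean_min, mean_max = mean_extreme_singular_values(m, n)
        lower, upper = np.sqrt(m) - np.sqrt(n), np.sqrt(m) + np.sqrt(n)
        print(f"m={m:3d}, n={n:3d}: {lower:6.3f} <= {mean_min:6.3f}, "
              f"{mean_max:6.3f} <= {upper:6.3f}")
```

For moderate sizes both estimates sit comfortably inside the bounds; the gaps shrink relative to \(\sqrt{m} \pm \sqrt{n}\) as the dimensions grow.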

Next, we control the expectation of the norm of the pseudo-inverse \(A^\dagger \) of a Gaussian matrix \(A\).

Lemma 4.2

In the setup of Lemma 4.1, we have

Proof

The upper bound is the same as the one used in [3] and follows from the work of [1]. For the lower bound, set \(B = (A^*A)^{-1}\), which has an inverse Wishart distribution, and observe that

\[\Vert A^\dagger \Vert ^2 = \Vert B\Vert \ge B_{11},\]

where \(B_{11}\) is the entry in the \((1,1)\) position. It is known that \(B_{11} \stackrel{d}{=} 1/Y\), where \(Y\) is distributed as a chi-square variable with \(d = m - n + 1\) degrees of freedom [5, p. 72]. Hence,

\(\square \)
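The two facts used in this proof can be checked numerically. For a Gaussian \(A\) with full column rank, \(\Vert A^\dagger\Vert = 1/\sigma_{\min}(A)\), so \(\Vert A^\dagger\Vert^2 = \Vert (A^*A)^{-1}\Vert = \Vert B\Vert\), and the largest eigenvalue of the positive definite matrix \(B\) dominates every diagonal entry, in particular \(B_{11}\). Moreover, since \(B_{11} \stackrel{d}{=} 1/Y\) with \(Y \sim \chi^2_d\), we get \(\mathbb{E} B_{11} = 1/(d-2) = 1/(m-n-1)\) whenever \(d > 2\). The sketch below assumes the real Gaussian case (so \(A^* = A^T\)) and uses arbitrary sizes \(m = 30\), \(n = 10\).

```python
import numpy as np

# Monte Carlo check, for a real m x n Gaussian A with d = m - n + 1 > 2, of
#   (i)  ||A^+||^2 = ||B|| >= B_11, where B = (A^T A)^{-1};
#   (ii) E[B_11] = E[1/Y] = 1/(d - 2) = 1/(m - n - 1), with Y ~ chi^2_d.
rng = np.random.default_rng(0)
m, n, trials = 30, 10, 10_000

b11 = np.empty(trials)
pinv_norm_sq = np.empty(trials)
for t in range(trials):
    A = rng.standard_normal((m, n))
    B = np.linalg.inv(A.T @ A)          # one inverse Wishart draw
    b11[t] = B[0, 0]
    pinv_norm_sq[t] = np.linalg.norm(np.linalg.pinv(A), 2) ** 2

# (i) holds sample by sample, up to floating-point rounding.
assert np.all(pinv_norm_sq >= b11 * (1 - 1e-8))

print("Monte Carlo E[B_11]    :", b11.mean())
print("1/(m - n - 1)          :", 1 / (m - n - 1))
print("Monte Carlo E||A^+||^2 :", pinv_norm_sq.mean())
```

Taking expectations in (i) and using (ii) gives \(\mathbb{E}\Vert A^\dagger\Vert^2 \ge 1/(m-n-1)\) in this setting.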

The limit laws below are taken from [10] and [2].

Lemma 4.3

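Let \(A_{m,n}\) be a sequence of \(m \times n\) matrices with i.i.d. \(\mathcal {N}(0,1)\) entries such that \(\lim _{n \rightarrow \infty } m/n = c \ge 1\). Then, almost surely,

\[\lim _{n \rightarrow \infty } \frac{\sigma _{\min }(A_{m,n})}{\sqrt{n}} = \sqrt{c} - 1 \quad \text{and} \quad \lim _{n \rightarrow \infty } \frac{\sigma _{\max }(A_{m,n})}{\sqrt{n}} = \sqrt{c} + 1.\]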
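For real Gaussian matrices with \(m/n \rightarrow c \ge 1\), the classical limits (Geman [2] for the largest and Silverstein [10] for the smallest singular value) are \(\sigma_{\max}(A_{m,n})/\sqrt{n} \rightarrow \sqrt{c} + 1\) and \(\sigma_{\min}(A_{m,n})/\sqrt{n} \rightarrow \sqrt{c} - 1\) almost surely. The sketch below, with a hypothetical helper `normalized_extremes` and the arbitrary choice \(c = 4\) (limits 1 and 3), shows single draws approaching these values as \(n\) grows.

```python
import numpy as np


def normalized_extremes(n, c, rng):
    """sigma_min/sqrt(n) and sigma_max/sqrt(n) for one m x n Gaussian matrix, m ~ c*n."""
    m = int(round(c * n))
    s = np.linalg.svd(rng.standard_normal((m, n)), compute_uv=False)
    return s[-1] / np.sqrt(n), s[0] / np.sqrt(n)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    c = 4.0
    for n in [25, 100, 400]:
        lo, hi = normalized_extremes(n, c, rng)
        print(f"n={n:3d}: sigma_min/sqrt(n) = {lo:.3f} (limit {np.sqrt(c) - 1:.3f}), "
              f"sigma_max/sqrt(n) = {hi:.3f} (limit {np.sqrt(c) + 1:.3f})")
```

The finite-\(n\) fluctuations around the limits shrink as \(n\) grows, which is why the Monte Carlo values at \(n = 400\) are already close to 1 and 3.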

About this article

Cite this article

Witten, R., Candès, E. Randomized Algorithms for Low-Rank Matrix Factorizations: Sharp Performance Bounds. Algorithmica 72, 264–281 (2015). https://doi.org/10.1007/s00453-014-9891-7

DOI: https://doi.org/10.1007/s00453-014-9891-7