Abstract

The central result of classical game theory states that every finite normal form game has a Nash equilibrium, provided that players are allowed to use randomized (mixed) strategies. However, in practice, humans are known to be bad at generating random-like sequences, and true random bits may be unavailable. Even if the players have access to enough random bits for a single instance of the game, their randomness might be insufficient if the game is played many times. In this work, we ask whether randomness is necessary for equilibria to exist in finitely repeated games. We show that for a large class of games containing arbitrary two-player zero-sum games, approximate Nash equilibria of the n-stage repeated version of the game exist if and only if both players have Ω(n) random bits. In contrast, we show that there exists a class of games for which no equilibrium exists in pure strategies, yet the n-stage repeated version of the game has an exact Nash equilibrium in which each player uses only a constant number of random bits. When the players are assumed to be computationally bounded, if cryptographic pseudorandom generators (or, equivalently, one-way functions) exist, then the players can base their strategies on “random-like” sequences derived from only a small number of truly random bits. We show that, in contrast, in repeated two-player zero-sum games, if pseudorandom generators do not exist, then Ω(n) random bits remain necessary for equilibria to exist.


Notes

We have adopted Colin and Rowena from Aumann and Hart [1].

A variant of Proposition 1 with respect to pure equilibria is given in Osborne and Rubinstein [18] as Proposition 155.1.

Note that if \(\mathcal {L}\) does not output a hypothesis at the current stage, then Rowena chooses her action according to the same distribution as in \(\mathcal {D}\), her minmax strategy, and her expectation is v.

References

Aumann, R.J., Hart, S.: Long cheap talk. Econometrica 71(6), 1619–1660 (2003)

Budinich, M., Fortnow, L.: Repeated matching pennies with limited randomness. In: Proceedings 12th ACM conference on electronic commerce (EC-2011), pp 111–118, San Jose (2011)

Benoît, J.-P., Krishna, V.: Finitely repeated games. Econometrica 53(4), 905–922 (1985)

Benoît, J.-P., Krishna, V.: Nash equilibria of finitely repeated games. Int. J. Game Theory 16(3), 197–204 (1987)

Blum, M., Micali, S.: How to generate cryptographically strong sequences of pseudo-random bits. SIAM J. Comput. 13(4), 850–864 (1984)

Dodis, Y., Halevi, S., Rabin, T.: A cryptographic solution to a game theoretic problem. In: Advances in cryptology - CRYPTO 2000, Proceedings 20th annual international cryptology conference, pp 112–130, Santa Barbara (2000)

Goldreich, O.: The foundations of cryptography - volume 1, basic techniques. Cambridge University Press (2001)

González-Díaz, J.: Finitely repeated games: a generalized Nash folk theorem. Games Econ. Behav. 55(1), 100–111 (2006)

Håstad, J., Impagliazzo, R., Levin, L.A., Luby, M.: A pseudorandom generator from any one-way function. SIAM J. Comput. 28(4), 1364–1396 (1999)

Halprin, R., Naor, M.: Games for extracting randomness. ACM Crossroads 17(2), 44–48 (2010)

Halpern, J.Y., Pass, R.: Algorithmic rationality: game theory with costly computation (2014)

Impagliazzo, R., Luby, M.: One-way functions are essential for complexity based cryptography (Extended Abstract). In: 30th annual symposium on foundations of computer science, pp 230–235, Research Triangle Park, North Carolina (1989)

Impagliazzo, R.: Pseudo-random generators for cryptography and for randomized algorithms. PhD thesis, University of California, Berkeley (1992)

Kalyanaraman, S., Umans, C.: Algorithms for playing games with limited randomness. In: Algorithms–ESA 2007, pp 323–334. Springer (2007)

Neyman, A., Okada, D.: Strategic entropy and complexity in repeated games. Games Econ. Behav. 29(1), 191–223 (1999)

Neyman, A., Okada, D.: Repeated games with bounded entropy. Games Econ. Behav. 30(2), 228–247 (2000)

Naor, M., Rothblum, G.N.: Learning to impersonate. In: Machine learning, proceedings of the twenty-third international conference (ICML 2006), pp 649–656, Pittsburgh (2006)

Osborne, M.J., Rubinstein, A.: A course in game theory. MIT press (1994)

Yao, A.C.-C.: Theory and applications of trapdoor functions (Extended Abstract). In: 23rd annual symposium on foundations of computer science, pp 80–91, Chicago (1982)


Additional information

Pavel Hubáček: Supported by the I-CORE Program of the Planning and Budgeting Committee and The Israel Science Foundation (grant No. 4/11).

Moni Naor: Incumbent of the Judith Kleeman Professorial Chair. Research supported in part by grants from the Israel Science Foundation, BSF and Israeli Ministry of Science and Technology and from the I-CORE Program of the Planning and Budgeting Committee and the Israel Science Foundation (grant No. 4/11).

Jonathan Ullman: Parts of this work were done while the author was at Harvard University and Columbia University.

Appendices

Appendix A: Exploiting Low Entropy in Two-Player Zero-Sum Games

In this appendix we prove Theorem 5, which establishes that if one player in a repeated two-player zero-sum game uses a constant fraction less randomness than n times the minimal entropy β of a minmax strategy, then the other player can obtain an average payoff that exceeds the value of the stage game by a constant.

We use the following lemma about performance of low-entropy strategies in two-player zero-sum games in the proof of Theorem 5.

Lemma 1

Let \(G=\langle (A_1,A_2),u\rangle\) be a two-player zero-sum strategic game of value v and let β>0 denote the minimal entropy of a minmax strategy for the column player in G. For every ε>0, there exists \(c_\varepsilon>0\) such that if σ is a strategy of the column player of entropy (1−ε)β, then the row player has a strategy that achieves utility at least \(v+c_\varepsilon\) against σ.

Proof

Let σ be an arbitrary strategy of Colin in G of entropy (1−ε)⋅β for some ε>0, and let \(\rho_\sigma\) denote the best response strategy of Rowena to σ. First, we show that Rowena’s expected utility \(\mathbf{E}[u(\rho_\sigma,\sigma)]\) is strictly larger than v. Suppose to the contrary that Rowena’s best response to σ achieves expectation at most v. Let \(\hat {\rho }\) be the minmax strategy of Rowena in G. Then the profile \((\hat {\rho },\sigma )\) is a Nash equilibrium of G: Rowena’s minmax strategy guarantees her at least the value v of the game, while by the hypothesis her best response to σ achieves at most v, so Rowena’s expectation in \((\hat {\rho },\sigma )\) is exactly v and \(\hat{\rho}\) is a best response to σ. There are no profitable deviations for Colin either, since he cannot decrease Rowena’s expectation below v while she plays her minmax strategy. The strategy σ of Colin has entropy (1−ε)⋅β<β, and the strategy profile \((\hat {\rho },\sigma )\) is a Nash equilibrium of G, contradicting the fact that β is the minimal entropy of Colin’s strategy in any Nash equilibrium of G. Hence, the best response to σ increases Rowena’s expectation by a strictly positive amount over v; the same argument applies verbatim to any strategy of Colin of entropy at most (1−ε)⋅β. The statement of the lemma follows by setting \(c_\varepsilon\) to be the infimum of the gains \(\mathbf{E}[u(\rho_\sigma,\sigma)]-v\) over all strategies σ of Colin of entropy at most (1−ε)⋅β. This infimum is positive: since Shannon entropy is continuous, the set of such strategies is a compact subset of the simplex of mixed strategies over \(A_2\), and Rowena’s best-response payoff \(\max_{a_1\in A_1}\mathbf{E}[u(a_1,\sigma)]\) is continuous in σ, so the infimum is attained by some strategy, against which the gain is strictly positive by the argument above. □
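As an illustration of Lemma 1 (not part of the original argument), consider matching pennies from Appendix B, assuming its ±1 payoffs, so that v=0 and β=1. A Colin strategy that puts probability p≥1/2 on its more likely action has entropy \(H(p)=-p\log_2 p-(1-p)\log_2(1-p)\), and Rowena’s best response earns 2p−1; since H is decreasing on [1/2,1], the smallest gain over strategies of entropy at most 1−ε is \(2H^{-1}(1-\varepsilon)-1\), with \(H^{-1}\) taken on [1/2,1]. The Python sketch below evaluates this by bisection; the function names are ours, and the closed form is specific to this 2×2 example, not to general games.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy in bits of the two-point distribution (p, 1 - p), with 0 * log2(0) = 0."""
    return -sum(x * math.log2(x) for x in (p, 1 - p) if x > 0)


def inverse_binary_entropy(h: float, iters: int = 200) -> float:
    """Return p in [1/2, 1] with binary_entropy(p) ~= h, for 0 <= h <= 1.

    binary_entropy is strictly decreasing on [1/2, 1], so bisection applies.
    The returned endpoint always satisfies binary_entropy(p) <= h.
    """
    lo, hi = 0.5, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if binary_entropy(mid) > h:
            lo = mid
        else:
            hi = mid
    return hi


def c_eps_matching_pennies(eps: float) -> float:
    """Smallest gain of Rowena's best response over Colin strategies of entropy at most 1 - eps.

    Assumes matching pennies with +1/-1 payoffs (value v = 0, minimal minmax entropy beta = 1).
    """
    p = inverse_binary_entropy(1 - eps)
    return 2 * p - 1


for eps in (0.1, 0.5, 0.9):
    print(f"eps={eps}: c_eps ~ {c_eps_matching_pennies(eps):.4f}")
```

As expected from the lemma, the gain vanishes as ε→0 and reaches 1 when Colin plays a pure action (ε=1).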

Theorem 5

Let \(G=\langle (A_1,A_2),u\rangle\) be a two-player zero-sum strategic game such that β>0 is the minimal entropy of any minmax strategy for the column player in G and let v be the value of G. For any 0<ε≤1, there exists c>0 such that if σ is a strategy of the column player of entropy (1−ε)βn in the n-stage repeated game of G, then the row player has a deterministic strategy that achieves average payoff of at least v+c against σ.

Proof

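Let σ be an arbitrary strategy of the column player (Colin) of Shannon entropy n⋅β(1−ε) for some ε∈(0,1]. Let \(\rho_\sigma\) be the strategy of the row player (Rowena) that at each non-terminal history a plays the best response in G to Colin’s strategy σ(a). Rowena’s expectation \(\mathbf {E}_{a\gets (\rho _{\sigma },\sigma )}[u^{*}(a)]\) is

\[\mathbf{E}_{a\gets(\rho_\sigma,\sigma)}[u^{*}(a)]=\frac{1}{n}\sum_{i=1}^{n}\mathbf{E}_{a\gets(\rho_\sigma,\sigma)}[u(a_i)].\]

By the definition of conditional expectation, we rewrite her expectation as a summation over all terminal histories, i.e.,

\[\mathbf{E}_{a\gets(\rho_\sigma,\sigma)}[u^{*}(a)]=\frac{1}{n}\sum_{b\in A^{n}}\Pr_{a\gets(\rho_\sigma,\sigma)}[a=b]\sum_{i=0}^{n-1}\mathbf{E}\left[u\left(\rho_\sigma(b_1,\ldots,b_i),\sigma(b_1,\ldots,b_i)\right)\right], \tag{1}\]

where \((b_1,\ldots,b_0)\) denotes the empty history ∅.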

Note that for every terminal history \(b\in A^n\) the summands correspond to the expectation of Rowena at the non-terminal subhistories of b. For any terminal history \(b\in A^n\), the total entropy used in σ at the subhistories of b is at most (1−ε)βn, which implies that there are at least \(n'= n \left (1- (1-\varepsilon )/(1-\frac {\varepsilon }{2})\right )\) subhistories of b where Colin’s strategy has entropy at most \((1 - \frac {\varepsilon }{2})\beta \). To see this, assume that there exists a terminal history b with fewer than n′ subhistories where σ uses entropy at most \((1 - \frac {\varepsilon }{2})\beta \). Then σ uses entropy strictly larger than \((1 - \frac {\varepsilon }{2})\beta \) on more than n−n′ subhistories of b, so the total entropy of σ on all subhistories of b is strictly larger than

\[(n-n')\left(1-\frac{\varepsilon}{2}\right)\beta=n\,\frac{1-\varepsilon}{1-\frac{\varepsilon}{2}}\left(1-\frac{\varepsilon}{2}\right)\beta=(1-\varepsilon)\beta n,\]

a contradiction. As shown in Lemma 1, for each subhistory of b where Colin uses a strategy of entropy at most \((1-\frac {\varepsilon }{2})\beta \), Rowena’s best response achieves at least v + c, where \(c = c_{\varepsilon/2}>0\) is a constant determined by the game G and ε. On all other subhistories of b (where Colin’s strategy has entropy larger than \((1- \frac {\varepsilon }{2})\beta \)), Rowena’s best response achieves at least v. Therefore the total utility (the sum of the expectations over all subhistories of b) is at least \(nv + c\cdot n' = n(v + c')\), where \(c'=c\left (1- (1-\varepsilon )/(1-\frac {\varepsilon }{2})\right )>0\).

Since this holds for every terminal history of \(G^n\), it follows from (1) that the strategy \(\rho_\sigma\) of Rowena achieves average expected utility at least v + c′ against σ in \(G^n\). □
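The counting step in the proof of Theorem 5 can be checked numerically. The following Python sketch (helper names are ours) computes n′, confirms the identity \((n-n')(1-\frac{\varepsilon}{2})\beta=(1-\varepsilon)\beta n\) used in the contradiction, and verifies, on adversarial and random per-stage entropy profiles whose total respects the budget (1−ε)βn, that at least n′ stages fall at or below the threshold \((1-\frac{\varepsilon}{2})\beta\). It is a sanity check under these assumed profiles, not a proof.

```python
import math
import random


def n_prime(n: int, eps: float) -> float:
    """Lower bound n' = n(1 - (1 - eps)/(1 - eps/2)) on the number of low-entropy stages."""
    return n * (1 - (1 - eps) / (1 - eps / 2))


def low_entropy_stages(entropies, beta, eps):
    """Number of subhistories where Colin's stage strategy has entropy at most (1 - eps/2) * beta."""
    return sum(1 for h in entropies if h <= (1 - eps / 2) * beta)


def adversarial_profile(n, beta, eps, slack=1e-9):
    """Spend the budget (1 - eps) * beta * n on as many stages as possible just above the threshold."""
    threshold = (1 - eps / 2) * beta + slack
    k = min(n, math.floor((1 - eps) * beta * n / threshold))
    return [threshold] * k + [0.0] * (n - k)


def random_profile(n, beta, eps, h_max, rng):
    """Random per-stage entropies in [0, h_max], rescaled so the total is at most (1 - eps) * beta * n."""
    raw = [rng.uniform(0, h_max) for _ in range(n)]
    budget = (1 - eps) * beta * n
    scale = min(1.0, budget / sum(raw)) if sum(raw) > 0 else 1.0
    return [h * scale for h in raw]


n, beta, eps, c = 100, 1.0, 0.5, 0.3
print("n' =", n_prime(n, eps), " c' =", c * n_prime(n, eps) / n)
print("adversarial count:", low_entropy_stages(adversarial_profile(n, beta, eps), beta, eps))
```

With n=100 and ε=1/2, for example, n′=100/3, so a per-stage gain c translates into an average gain c′=c/3.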

Note that the constant c by which the row player can exploit a strategy of the column player of entropy (1−ε)βn is related to the possible gain of the row player in the stage game, given that the column player plays a strategy of entropy (1−ε)β. To make the connection explicit, we use the following notation from Neyman and Okada [16]. Let \(G=\langle (A_1,A_2),u\rangle\) be the stage game and for γ≥0 define

\[U(\gamma)=\max_{\rho:\,H(\rho)\leq\gamma}\ \min_{a_2\in A_2}\mathbf{E}_{a_1\gets\rho}[u(a_1,a_2)],\]

where the maximum ranges over mixed strategies ρ of the row player.

Hence, U(γ) is the maximal expected utility the row player can guarantee with a strategy of entropy at most γ; or equivalently, −U(γ) is the minimal expected utility that the column player can achieve by a best response to any strategy of the row player of entropy at most γ. Note that U(0) is equal to the row player’s minmax level in pure strategies, and for all γ≥0, U(γ) is at most the value of the game. Using this notation the statement of Theorem 5 can be restated as:
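For stage games in which the row player has two actions, a crude numerical sketch of U(γ) is to scan a grid of mixed strategies, keep those of entropy at most γ, and take the best guaranteed payoff. The grid only approximates U(γ) from below; the helper names, the grid size, and the matching pennies example (Rowena receives ±1, as in Appendix B) are our assumptions.

```python
import math


def binary_entropy(q: float) -> float:
    """Entropy in bits of (q, 1 - q), with 0 * log2(0) = 0."""
    return -sum(x * math.log2(x) for x in (q, 1 - q) if x > 0)


def U(gamma: float, payoff, grid: int = 2000) -> float:
    """Grid approximation (from below) of U(gamma) for a row player with two actions.

    payoff[r][c] is the row player's utility; the row strategy plays row 0 with probability q.
    """
    best = -math.inf
    for k in range(grid + 1):
        q = k / grid
        if binary_entropy(q) > gamma + 1e-12:
            continue  # this mixed strategy uses too much entropy
        guarantee = min(q * payoff[0][c] + (1 - q) * payoff[1][c] for c in range(len(payoff[0])))
        best = max(best, guarantee)
    return best


MATCHING_PENNIES = [[1, -1], [-1, 1]]
for gamma in (0.0, 0.5, 1.0):
    print(gamma, U(gamma, MATCHING_PENNIES))
```

For matching pennies this recovers U(0)=−1 (the pure-strategy level) and U(1)=0 (the value), with U nondecreasing in between.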

Theorem 5 (restated)

Let \(G=\langle (A_1,A_2),u\rangle\) be a two-player zero-sum strategic game such that β>0 is the minimal entropy of any minmax strategy for the column player in G and let v be the value of G. For any 0<ε≤1, if σ is a strategy of the row player of entropy (1−ε)βn in the n-stage repeated game of G, then the column player has a deterministic strategy that achieves average payoff of at least \(-v-\left (1- \frac {(1-\varepsilon )}{(1-\frac {\varepsilon }{2})}\right )U((1-\frac {\varepsilon }{2})\beta )\) against σ.

We remark that an improved bound on the expectation can be obtained using the technique of Neyman and Okada, and the column player can in fact achieve average expected utility at least \(-v-(\mathrm{cav}\,U)((1-\varepsilon)\beta)\), where \(\mathrm{cav}\,U\) is the smallest concave function that is greater than or equal to U.
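Numerically, cav U can be approximated from values of U tabulated on a grid of γ: the smallest concave function lying above finitely many points is their upper concave hull, linearly interpolated. The following Python sketch builds that hull with Andrew’s monotone chain (a standard convex-hull technique; the function name is ours); applied to grid samples of U, it only approximates cav U on the sampled range.

```python
def concave_envelope(xs, ys):
    """Smallest concave function lying above the points (xs[i], ys[i]), evaluated at each xs[i].

    xs must be strictly increasing. The upper hull is built with Andrew's monotone chain and
    then linearly interpolated.
    """
    hull = []
    for px, py in zip(xs, ys):
        # Drop the last hull point while it lies on or below the segment from hull[-2] to (px, py).
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            if (x2 - x1) * (py - y1) - (y2 - y1) * (px - x1) >= 0:
                hull.pop()
            else:
                break
        hull.append((px, py))

    values, j = [], 0
    for x in xs:
        # Advance to the hull segment [hull[j], hull[j + 1]] that contains x.
        while j + 1 < len(hull) and hull[j + 1][0] < x:
            j += 1
        if j + 1 < len(hull):
            (x1, y1), (x2, y2) = hull[j], hull[j + 1]
            values.append(y1 + (y2 - y1) * (x - x1) / (x2 - x1))
        else:
            values.append(hull[j][1])
    return values


print(concave_envelope([0, 1, 2], [0, 0, 2]))
```

In the example, the middle point (1,0) lies below the chord from (0,0) to (2,2), so the envelope replaces it by 1.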

Theorem 6 below can be seen as a “converse” of Theorem 5. Specifically, we show that even if the players are restricted to strategies of entropy (1−ε)βn, there exists an \(\varepsilon ^{\prime }\)-Nash equilibrium of \(G^n\) for some \(\varepsilon ^{\prime }\) proportional to ε.

Theorem 6

Let G be a two-player zero-sum strategic game such that the minimal entropy of a minmax strategy is β>0 for both players. There exists c>0 such that for all 0<ε≤1 and for all n, there exists a \(\left (c\cdot \frac {\lceil n\varepsilon \rceil +1}{n}\right )\)-Nash equilibrium of the n-stage repeated game of G in which the players’ strategies are of entropy at most (1−ε)βn.

Proof

Let σ be the strategy profile in the n-stage repeated game of G in which the players play in the first ⌊n(1−ε)⌋ stages according to their minmax strategies of minimal entropy (i.e., entropy β), and in the remaining ⌈nε⌉ stages the players alternate between playing the (pure) action profiles \(a^{*}\in A_1\times A_2\) and \(a^{\ddagger}\in A_1\times A_2\), starting with \(a^{*}\), such that \(p^{*}=u(a^{*})\) is the maximal payoff of Rowena in G and \(p^{\ddagger}=u(a^{\ddagger})\) is the minimal payoff of Rowena in G. Note that by construction of σ, the players use strategies of entropy at most n⋅β(1−ε).

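Assume ⌈nε⌉ is odd (the argument for ⌈nε⌉ even is analogous). The expected utility of Rowena in σ in the n-stage repeated game of G is

\[\mathbf{E}[u^{*}_{1}(\sigma)]=\frac{1}{n}\left(\lfloor n(1-\varepsilon)\rfloor\, v+\frac{\lceil n\varepsilon\rceil+1}{2}\,p^{*}+\frac{\lceil n\varepsilon\rceil-1}{2}\,p^{\ddagger}\right),\]

where v is the value of G. Since Rowena’s minmax strategy guarantees her at least v in each of the first ⌊n(1−ε)⌋ stages and her payoff is at least \(p^{\ddagger}\) in each of the remaining stages, the expectation of every deviating strategy \(\sigma ^{\prime }_{2}\) of Colin is

\[\mathbf{E}[u^{*}_{2}(\sigma_1,\sigma'_2)]\leq-\frac{1}{n}\left(\lfloor n(1-\varepsilon)\rfloor\, v+\lceil n\varepsilon\rceil\, p^{\ddagger}\right),\]

hence Colin can increase his utility by at most \(\frac {1}{2n}(p^{*}-p^{\ddagger})(\lceil n\varepsilon \rceil +1)\). Similarly, the increase in expectation from any deviating strategy of Rowena can be upper bounded by \(\frac {1}{2n}(p^{*}-p^{\ddagger})(\lceil n\varepsilon \rceil -1)\). Therefore, σ is a \(\left (c\cdot \frac {\lceil n\varepsilon \rceil +1}{n}\right )\)-Nash equilibrium of the n-stage repeated game of G for \(c=\frac {1}{2}(p^{*}-p^{\ddagger})\), and the statement of the theorem follows since \(\frac {1}{2}(p^{*}-p^{\ddagger})\) is a constant independent of ε and n. □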
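For a concrete stage game, the deviation bounds in the proof of Theorem 6 can be computed exactly. In the Python sketch below (names are ours), the payoff matrix lists Rowena’s utilities and Colin receives the negation. Because both players follow history-independent strategies in σ and use minmax strategies in the first ⌊n(1−ε)⌋ stages, best deviations can only gain in the tail, stage by stage; the sketch computes those gains with rational arithmetic and compares them with \(c\cdot\frac{\lceil n\varepsilon\rceil+1}{n}\) for \(c=\frac{1}{2}(p^{*}-p^{\ddagger})\).

```python
import math
from fractions import Fraction


def tail_deviation_gains(payoff, n, eps):
    """Exact average gains of the best deviations in the construction of Theorem 6.

    payoff[r][c] is Rowena's utility (Colin receives the negation); eps is a Fraction so
    that ceil(n * eps) is exact. Minmax stages admit no profitable deviation, and in the
    tail the opponent's action is fixed, so best deviations are computed stage by stage.
    """
    k = math.ceil(n * eps)
    cells = [(r, c) for r in range(len(payoff)) for c in range(len(payoff[0]))]
    a_star = max(cells, key=lambda rc: payoff[rc[0]][rc[1]])  # profile with Rowena's max payoff p*
    a_dag = min(cells, key=lambda rc: payoff[rc[0]][rc[1]])   # profile with Rowena's min payoff p-dagger
    tail = [a_star if t % 2 == 0 else a_dag for t in range(k)]  # a*, a-dagger, a*, ...
    colin = sum(payoff[r][c] - min(payoff[r]) for r, c in tail)
    rowena = sum(max(row[c] for row in payoff) - payoff[r][c] for r, c in tail)
    return Fraction(colin, n), Fraction(rowena, n)


def theorem6_bound(payoff, n, eps):
    """The bound c * (ceil(n eps) + 1) / n with c = (p* - p-dagger) / 2."""
    values = [x for row in payoff for x in row]
    c = Fraction(max(values) - min(values), 2)
    return c * (math.ceil(n * eps) + 1) / n


mp = [[1, -1], [-1, 1]]
print(tail_deviation_gains(mp, 10, Fraction(1, 4)), theorem6_bound(mp, 10, Fraction(1, 4)))
```

For matching pennies with n=10 and ε=1/4, Colin’s gain is 2/5, which meets the bound exactly, while Rowena’s gain is 1/5.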

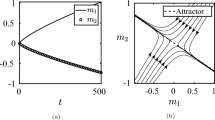

Appendix B: Matching Pennies

The game of matching pennies is a two-player zero-sum strategic game given by the payoff matrix in Fig. 3. Both players can either play Heads (H) or Tails (T). The only Nash equilibrium is the strategy profile \((\frac {1}{2}H+\frac {1}{2}T,\frac {1}{2}H+\frac {1}{2}T)\) in which both players randomize uniformly over H and T.
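Assuming the standard payoffs for Fig. 3 (Rowena receives 1 when the pennies match and −1 otherwise, consistent with her best-response payoff 2p−1 in the proof of Theorem 7), the following Python sketch checks that no pure profile is a Nash equilibrium and that the uniform mix leaves the opponent indifferent between H and T. The names are ours.

```python
from fractions import Fraction
from itertools import product

# Assumed payoffs of Rowena (rows) against Colin (columns); index 0 = H, 1 = T.
# Colin's payoff is the negation, since the game is zero-sum.
MATCHING_PENNIES = [[1, -1], [-1, 1]]


def row_payoff(row_mix, col_mix, u=MATCHING_PENNIES):
    """Rowena's expected payoff when both players use the given mixed strategies."""
    return sum(row_mix[i] * col_mix[j] * u[i][j] for i, j in product(range(2), repeat=2))


def pure_nash_equilibria(u=MATCHING_PENNIES):
    """All pure profiles in which neither Rowena (maximizer) nor Colin (minimizer) wants to deviate."""
    equilibria = []
    for i, j in product(range(2), repeat=2):
        rowena_ok = all(u[i][j] >= u[k][j] for k in range(2))
        colin_ok = all(u[i][j] <= u[i][k] for k in range(2))
        if rowena_ok and colin_ok:
            equilibria.append((i, j))
    return equilibria


uniform = (Fraction(1, 2), Fraction(1, 2))
pure = [(1, 0), (0, 1)]
print(pure_nash_equilibria())                  # empty list: no pure equilibrium
print([row_payoff(p, uniform) for p in pure])  # Rowena is indifferent against a uniform Colin
print([row_payoff(uniform, p) for p in pure])  # Colin is indifferent against a uniform Rowena
```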

By Theorem 1, in the equilibrium for the n-stage repeated game of matching pennies both players randomize uniformly between playing Heads and Tails at each stage, and the entropy of the equilibrium strategy of each player is exactly n.

We now give a generalization of Lemma 3.1 from Budinich and Fortnow [2].

Theorem 7

For any ε∈[0,1], let σ be a strategy of the column player of entropy n(1−ε) in the n-stage repeated game of matching pennies. The row player has a deterministic strategy that achieves payoff of at least ε against σ.

Proof

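Let σ be an arbitrary strategy of Colin of Shannon entropy n(1−ε) for some ε∈[0,1]. Let \(\rho_\sigma\) be the strategy of Rowena that at each non-terminal history a plays the best response to Colin’s strategy σ(a). We can express Rowena’s expectation \(\mathbf {E}_{a\gets (\rho _{\sigma },\sigma )}[u^{*}(a)]\) as

\[\mathbf{E}_{a\gets(\rho_\sigma,\sigma)}[u^{*}(a)]=\frac{1}{n}\sum_{i=1}^{n}\mathbf{E}_{a\gets(\rho_\sigma,\sigma)}[u(a_i)],\]

which can be rewritten, by the definition of conditional expectation, as a summation over terminal histories

\[\mathbf{E}_{a\gets(\rho_\sigma,\sigma)}[u^{*}(a)]=\frac{1}{n}\sum_{b\in A^{n}}\Pr_{a\gets(\rho_\sigma,\sigma)}[a=b]\sum_{i=0}^{n-1}\mathbf{E}\left[u\left(\rho_\sigma(b_1,\ldots,b_i),\sigma(b_1,\ldots,b_i)\right)\right].\]

For every terminal history \(b=(b_1,\ldots,b_n)\), the total entropy of σ over the non-terminal subhistories of b is bounded by n(1−ε), i.e.,

\[H(\sigma(\emptyset))+\sum_{i=1}^{n-1}H(\sigma(b_1,\ldots,b_i))\leq n(1-\varepsilon). \tag{3}\]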

We define \(\varepsilon_0=1-H(\sigma(\emptyset))\) and for every i∈{1,…,n−1} we define \(\varepsilon_i=1-H(\sigma(b_1,\ldots,b_i))\). Note that \(0\leq\varepsilon_i\leq 1\) for every i∈{0,…,n−1} (each of Colin’s stage strategies is a distribution over two actions and hence has entropy at most 1), and from inequality (3) we get that \(\varepsilon \leq \frac {1}{n}{\sum }_{i=0}^{n-1}\varepsilon _{i}\). In order to conclude that Rowena’s expected utility in the strategy profile \((\rho_\sigma,\sigma)\) is at least ε, it is sufficient to show that for every subhistory b′ of b the expectation \(\mathbf{E}[u(\rho_\sigma(b'),\sigma(b'))]\) is at least \(1-H(\sigma(b'))\).

For an arbitrary non-terminal history h, consider Rowena’s expectation in G given the strategy profile \((\rho_\sigma(h),\sigma(h))\). Since \(\rho_\sigma(h)\) is the best response to σ(h), Rowena’s expectation is 2p−1, where p is the probability of Colin’s most probable action at history h. Since \(H(\sigma(h))=-p\log_2(p)-(1-p)\log_2(1-p)\), we need to show that for all p∈[1/2,1]

\[2p-1\geq 1+p\log_2(p)+(1-p)\log_2(1-p).\]

For p equal to 1/2 or 1 (using the convention \(0\log_2 0=0\)), the left side and the right side of the inequality are equal. Since 2p−1 is a linear function and \(1+p\log _{2}(p)+(1-p)\log _{2}(1-p)\) is a convex function on [1/2,1], the latter lies below its chord between the endpoints p=1/2 and p=1, and this chord is exactly 2p−1. Hence the inequality holds. This concludes the proof. □
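The inequality \(2p-1\geq 1+p\log_2(p)+(1-p)\log_2(1-p)\) on [1/2,1] can also be checked numerically. The Python sketch below evaluates the difference between the right and left sides on a uniform grid (the grid size is an arbitrary choice of ours); it is a sanity check of the convexity argument, not a substitute for it.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy in bits of (p, 1 - p), with the convention 0 * log2(0) = 0."""
    return -sum(x * math.log2(x) for x in (p, 1 - p) if x > 0)


def max_violation(steps: int = 10_000) -> float:
    """Largest value of (1 - H(p)) - (2p - 1) over a uniform grid on [1/2, 1].

    The inequality from the proof of Theorem 7 says this quantity is never positive.
    """
    worst = -math.inf
    for k in range(steps + 1):
        p = 0.5 + 0.5 * k / steps
        worst = max(worst, (1 - binary_entropy(p)) - (2 * p - 1))
    return worst


print(max_violation())
```

The maximum is attained at the endpoints p=1/2 and p=1, where the two sides coincide.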

It follows from Theorem 7 that if the players can use only strategies of entropy (1−ε)n for some ε>0 (i.e., lower than n times the entropy of an equilibrium strategy of the single-shot matching pennies), then Nash equilibria in the n-stage repeated game of matching pennies do not exist.

Proposition 2

Let \(G^n\) be the n-stage repeated game of matching pennies.

1. For all 0≤ε≤1, if σ is an ε-Nash equilibrium of \(G^n\), then the players’ strategies in σ are of entropy at least n(1−ε).

2. For all 0≤ε≤1, there exists an \((\varepsilon +\frac {2}{n})\)-Nash equilibrium of \(G^n\) in which the players’ strategies are of entropy at most (1−ε)n.

Proof

First, we show that any ε-Nash equilibrium σ in the n-stage repeated game of matching pennies consists of strategies of entropy at least (1−ε)n. Assume that there is an ε-Nash equilibrium in which both players use a strategy of strictly smaller entropy than (1−ε)n, i.e., of entropy \((1-\varepsilon ^{\prime })n\) for some \(\varepsilon ^{\prime }>\varepsilon \). By Theorem 7, each player i has a strategy \(\sigma ^{\prime }_{i}\) that achieves at least \(\varepsilon ^{\prime }\) against \(\sigma_{-i}\). Since σ is an ε-Nash equilibrium, for any player i

\[\mathbf{E}[u^{*}_{i}(\sigma)]\geq\mathbf{E}[u^{*}_{i}(\sigma'_i,\sigma_{-i})]-\varepsilon\geq\varepsilon'-\varepsilon>0.\]

This implies that for both players \(\mathbf {E}[u^{*}_{i}(\sigma )] >0\); however, it cannot be the case that the expectation of both players is strictly larger than zero, since matching pennies is a zero-sum game.

Second, we show that if the players can use strategies of entropy (1−ε)n, then there exists an \((\varepsilon +\frac {2}{n})\)-Nash equilibrium of the n-stage repeated game of matching pennies. To see this, consider a strategy profile in which the players play H and T uniformly at random in the first ⌊(1−ε)n⌋ stages, and in the remaining ⌈εn⌉ stages Rowena always plays H and Colin alternates between T and H (i.e., the outcome at stage ⌊(1−ε)n⌋+1 is (H,T)). If ⌈εn⌉ is odd then Rowena’s expectation is \(-\frac {1}{n}\), and otherwise it is 0. Both Colin and Rowena can improve their expectation only in the last ⌈εn⌉ stages by matching/countering the opponent, but any such deviation can achieve utility at most

\[\frac{\lceil\varepsilon n\rceil}{n}\leq\varepsilon+\frac{1}{n}.\]

Hence, both players can improve the utility by at most \(\varepsilon + \frac {2}{n}\) by deviating from the prescribed strategy profile, and it constitutes an \((\varepsilon + \frac {2}{n})\)-Nash equilibrium. □
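The construction in the second part of Proposition 2 can be checked with exact rational arithmetic. The Python sketch below (names are ours) encodes H as 0 and T as 1, assumes Rowena receives 1 on a match and −1 otherwise, and computes Rowena’s expectation and both players’ best deviation gains; the uniform prefix contributes 0 in expectation and cannot be exploited, so only the tail is simulated.

```python
import math
from fractions import Fraction


def prop2_gains(n: int, eps: Fraction):
    """Rowena's average payoff in the Proposition 2 profile and both players' best deviation gains.

    In the first n - ceil(eps * n) stages both players are uniform, so those stages contribute 0
    in expectation; in the tail Rowena always plays H (0) and Colin alternates T (1), H (0), ...
    """
    k = math.ceil(eps * n)
    rowena_tail = [0] * k
    colin_tail = [1 if t % 2 == 0 else 0 for t in range(k)]
    rowena_value = Fraction(sum(1 if r == c else -1 for r, c in zip(rowena_tail, colin_tail)), n)
    # Against a fixed tail, a best deviation wins every tail stage (+1 per stage for the deviator).
    best_deviation = Fraction(k, n)
    rowena_gain = best_deviation - rowena_value
    colin_gain = best_deviation - (-rowena_value)
    return rowena_value, rowena_gain, colin_gain


print(prop2_gains(10, Fraction(1, 4)))
```

With n=10 and ε=1/4 there are three tail stages, Rowena’s expectation is −1/10, and her best deviation gains 2/5, which equals ε+2/n−1/n+... within the stated bound ε+2/n=9/20.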

B.1 Matching Pennies with Computationally Efficient Players

In this section we prove the statement of Theorem 4 for the special case of the game of matching pennies without relying on the framework of adaptively changing distributions of Naor and Rothblum [17], using instead the classical results on pseudorandomness discussed in Section 2.2; in particular, the fact that if one-way functions do not exist, then the players cannot efficiently generate unpredictable sequences of bits using only a few truly random bits. Hence, in the repeated game of matching pennies, any player can at some stage efficiently predict and exploit the next move of an opponent that uses a number of random bits sublinear in the number of stages.

Theorem 8

If one-way functions do not exist then for any polynomial-size circuit family \(\{C_{n}\}_{n\in \mathbb {N}}\) implementing a strategy of Colin in the repeated game of matching pennies using at most n−1 random bits, there exists a polynomial time strategy of Rowena with expected utility δ(n) against C n for some noticeable function δ.

Proof

Let \(\{X_{n}\}_{n\in \mathbb {N}}\) be a probability ensemble defined for all n as the random variable over 2n-bit strings corresponding to the terminal histories in the n-stage repeated matching pennies (where H corresponds to 0 and T to 1) when Rowena plays uniformly at random and Colin plays according to \(C_n\). Note that \(X_n\) is of length 2n and it can be generated in polynomial time given at most 2n−1 random bits, since Rowena uses n random bits and Colin’s strategy uses random strings of length at most n−1.

Since one-way functions do not exist, the ensemble \(\{X_{n}\}_{n\in \mathbb {N}}\) cannot be pseudorandom. In particular, it cannot be unpredictable in polynomial time in the following sense: there exists a polynomial-time predictor algorithm A that reads x←X_n bit by bit and succeeds in predicting the next bit with probability noticeably larger than one half. Formally, let \(\mathrm{next}_A(x)\) be a function that returns the i-th bit of x if on input \((1^{|x|},x)\) algorithm A reads only the first i−1<|x| bits of x, and returns a uniformly chosen bit in case A reads the entire string x. There exists a predictor algorithm A and some positive polynomial p, such that

\[\Pr\left[A(1^{|X_n|},X_n)=\mathrm{next}_A(X_n)\right]\geq\frac{1}{2}+\frac{1}{p(n)},\]

where the probability is taken over the choice of \(X_n\) and the randomness of A.

We show that Rowena can guarantee for herself a noticeable expected utility by emulating A on the transcript of the repeated game. Consider the strategy \(R_A\) of Rowena that at each stage i samples a uniformly random bit \(r_i\): if \(A(b_i)\) outputs a prediction \(c^{*}_{i}\) of Colin’s action, then Rowena plays \(c^{*}_{i}\) (to match Colin); otherwise she plays \(r_i\) and uses the action played by Colin at stage i as the next input to A. After the stage in which A outputs a prediction, \(R_A\) plays uniformly at random. The expectation of Rowena can be lower bounded in the following way:

Recall that the actions of Rowena are chosen uniformly at random and the predictor A has to guess a uniformly random bit if it reads the whole terminal history x←X_n. Hence, in order to gain a noticeable advantage over one half, A must output its prediction of one of the actions of Colin with at least noticeable probability, i.e., \(\Pr [A\text{ outputs }c^{*}_{i}]\) is at least \(\delta ^{\prime }(n)\) for some noticeable function \(\delta ^{\prime }\). Thus, the strategy \(R_A\) achieves expectation at least \(\delta (n)=\delta ^{\prime }(n)\cdot (n\cdot p(n))^{-1}\), which is a noticeable function of n. □
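The predict-once-then-match mechanics of \(R_A\) can be illustrated on a toy instance. The sketch below is not the proof’s construction: Colin is a hypothetical low-randomness strategy that repeats a random seed of `period` bits cyclically, and the hypothetical `lag_predictor` standing in for A reads only Colin’s past moves and guesses that the move `period` stages back repeats. Enumerating all seeds and all of Rowena’s coin flips gives her exact expected average payoff: the single correct prediction is worth 1/n, while every uniformly played stage contributes 0.

```python
from fractions import Fraction
from itertools import product


def colin_moves(seed, n):
    """Hypothetical Colin: repeats the random seed cyclically, so he uses len(seed) random bits."""
    return [seed[i % len(seed)] for i in range(n)]


def lag_predictor(colin_history, period):
    """Hypothetical stand-in for A: after `period` moves, predict that the move `period` back repeats."""
    if len(colin_history) >= period:
        return colin_history[-period]
    return None


def rowena_payoff(colin, coins, period):
    """Average payoff of the predict-once-then-match strategy (+1 to Rowena on a match; H = 0, T = 1)."""
    total, predicted = 0, False
    for i, colin_action in enumerate(colin):
        guess = None if predicted else lag_predictor(colin[:i], period)
        if guess is not None:
            action, predicted = guess, True  # match the predicted move, only once
        else:
            action = coins[i]                # otherwise play the fresh uniform bit r_i
        total += 1 if action == colin_action else -1
    return Fraction(total, len(colin))


def expected_payoff(n, period):
    """Exact expectation over all seeds of Colin and all coin sequences of Rowena."""
    payoffs = [rowena_payoff(colin_moves(seed, n), coins, period)
               for seed in product((0, 1), repeat=period)
               for coins in product((0, 1), repeat=n)]
    return sum(payoffs, Fraction(0)) / len(payoffs)


print(expected_payoff(8, 2))
```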


About this article

Cite this article

Hubáček, P., Naor, M. & Ullman, J. When Can Limited Randomness Be Used in Repeated Games?. Theory Comput Syst 59, 722–746 (2016). https://doi.org/10.1007/s00224-016-9690-4


DOI: https://doi.org/10.1007/s00224-016-9690-4