Abstract

Introduction

Attentional networks are sensitive to sleep deprivation and increased time awake. However, existing evidence is inconsistent and may be accounted for by differences in chronotype or time-of-day. Following a night of normal sleep, we examined the effects of sustained wakefulness over a normal “socially constrained” day (18 h of sustained wakefulness) on visual attention as a function of chronotype.

Methods

Twenty-six good sleepers (mean age 25.58; SD 4.26; 54 % male) completed the Attention Network Test (ANT) at two time points (baseline at 8 am; following 18-h sustained wakefulness at 2 am). The ANT provided mean reaction times (RTs), error rates, and the efficiency of three attentional networks—alerting, orienting, and executive control/conflict. The Morningness–Eveningness Questionnaire measured chronotype.

Results

Mean RTs were longer at time 2 than at time 1 for those with increasing eveningness; the opposite was true for those with increasing morningness. However, on incongruent trials at time 2, those with increasing morningness exhibited longer RTs and made more errors than those with increasing eveningness. There were no significant main effects of time or chronotype (or interactions) on attentional network scores.

Conclusion

Sustained wakefulness produced differential effects on visual attention as a function of chronotype. Whilst overall our results point to an asynchrony effect, this effect was moderated by flanker type. Participants with increasing eveningness outperformed those with increasing morningness on incongruent trials at time 2. The preservation of executive control in evening-types following sustained wakefulness is likely driven by differences in circadian phase between chronotypes across the day.


Introduction

Sufficient sleep is necessary to maintain high levels of cognitive functioning during the waking period. Consistently sleeping less than 7 h per night has been associated with cumulative impairments in vigilant attention and alertness (Belenky et al. 2003), and experimental studies consistently show general impairments in vigilant attention following sleep restriction or sleep deprivation (Basner and Dinges 2011; Basner et al. 2011; Lim and Dinges 2008). It has been proposed that decrements in vigilant attention following sleep deprivation lay the foundation for impairments in other cognitive domains (Lim and Dinges 2008). However, visual attention is a complex system of functionally and anatomically distinct brain networks, which underlie our ability to (1) maintain an “alert” state (alerting network), (2) “orient” attention to stimuli (orienting network), and (3) resolve conflict when numerous stimuli simultaneously compete for attention (executive control network) (Fernandez-Duque and Posner 2001; Petersen and Posner 2012). These networks are subserved by distinct neurobiological pathways (Fan and Posner 2004), which are likely to be differentially affected by sleep and by extended periods of sustained wakefulness, such as during sleep deprivation. Although existing studies demonstrate general impairments in vigilant attention (akin to “alerting”) following sleep restriction or deprivation (e.g. Lim and Dinges 2008), few have attempted to disentangle the effect of sleep deprivation or sustained wakefulness on individual attentional networks, and those that have report inconsistent findings (e.g. Jugovac and Cavallero 2012; Martella et al. 2011; Muto et al. 2012; Roca et al. 2012; Trujillo et al. 2009). In part, these inconsistencies may stem from uncontrolled circadian-variability, i.e. differences in the phase position of the circadian rhythm (an endogenous “biological clock” which dictates the timing of our physiological and behavioural processes) due to either inter-individual differences in chronotype or time-of-day effects.

Chronotype refers to the tendency towards morningness or eveningness in the preferred timing of daily activities and sleep (Levandovski et al. 2013). Morning-types (the so-called larks) find it easy to get up in the morning, function best at this time, and fall asleep easily in the early evening. Evening-types (the so-called owls), on the other hand, find it hard to get up early, are at their peak in the late evening, and go to bed late, often in the early hours of the morning. Morning-types and evening-types have been shown to differ in the endogenous phase of the circadian rhythm (Baehr et al. 2000; Kerkhof and Van Dongen 1996), in the homoeostatic regulation of sleep (Mongrain et al. 2005, 2006, 2011), and in their response to sleep fragmentation and deprivation (Mongrain et al. 2008; Taillard et al. 2011). These inter-individual differences shape both the timing of sleep and the daily profile of neurobehavioural functioning.

Furthermore, circadian-variability differentially affects neurobehavioural functioning, particularly attention, at different times of day (Valdez et al. 2012). This effect is likely due to the circadian mechanisms that drive alertness. Generally speaking, behavioural alertness and vigilant attention are subject to dynamic circadian variation across the normal waking day, independent of increasing sleep pressure (the homoeostatic response to accumulated time awake, which drives sleepiness). Neurobehavioural functioning is poor in the morning following awakening, improves steadily across the day to an early evening peak (~6–9 pm) during which alertness and performance are relatively stable, and then progressively declines into the night (Goel et al. 2011; Mollicone et al. 2010). The circadian rhythm of neurobehavioural functioning largely parallels that of the core body temperature rhythm (Monk et al. 1997). However, the timing of these peaks and troughs throughout the normal waking day is likely to vary as a function of chronotype, given inter-individual differences in the timing of circadian phase. Indeed, Matchock and Mordkoff (2009) demonstrated differential effects of both chronotype and time-of-day on the efficiency of the attentional networks. Whereas the efficiency of the alerting network differed between morning/neither types and evening-types in the latter half of the day, the orienting network showed no chronotype or time-of-day effects, and the executive control network was consistent across chronotypes but showed peaks at midday and mid-afternoon. However, grouping morning- and neither-types together against evening-types assumes similar phase timing in morning- and neither-types, rather than allowing for the possibility that differences between chronotypes vary incrementally across the chronotype spectrum.
Thus, to gain a more complete understanding of the influence of sustained wakefulness on attention, one must consider (1) attention as a multifaceted construct; (2) the influence of chronotype across the whole spectrum; and (3) the time-of-day of testing. Notably, Matchock and Mordkoff (2009) examined differences in attention across only a 12-h span of the day (testing at 08:00, 12:00, 16:00 and 20:00 h). If we assume that non-shift-workers who sleep in one primary nocturnal sleep bout typically remain awake for around 16–18 h (given that the average sleep duration of adults is around 6–8 h; see Bin et al. 2012, for a review), it remains to be determined whether attentional efficiency diverges by chronotype in the later evening beyond 20:00 h (compared to morning testing). Indeed, others have demonstrated that cumulative effects of sleep restriction and deprivation on behavioural alertness begin to emerge after 16 h of sustained wakefulness (Van Dongen et al. 2003). However, it remains unclear whether this pattern of response to sustained wakefulness is consistent across the full chronotype spectrum. Hypothetically, individuals with a tendency towards morningness might show enhanced attentional efficiency in the morning relative both to those with a tendency towards eveningness and to their own evening performance. Attentional efficiency, on the other hand, could be preserved in those with eveningness tendencies during the late evening relative both to those with morningness tendencies and to their own morning performance. These expectations follow a “synchrony effect”, whereby evening-types perform better on a range of cognitive tasks in the evening and morning-types in the morning (see Adan et al. 2012, for a review; Horne and Östberg 1980; Kerkhof 1985; May and Hasher 1998; May et al. 1993). The effect may occur because the timing of the task coincides with peak levels of alertness, higher core body temperature, and arousal.
Indeed, under socially constrained conditions (such as when one has to awaken early in the morning to commute to work), evening-types awaken closer to their body temperature nadir, when alertness is low (Baehr et al. 2000; Waterhouse et al. 2001), thus contributing to impaired performance relative to morning-types. On the other hand, during the evening, the onset of melatonin occurs at an earlier clock time in morning-types (Lack et al. 2009), decreasing alertness and contributing to poorer neurobehavioural performance compared to evening-types.
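The 16–18 h waking window assumed above is simple arithmetic on the 24-h day. A worked version follows; the symbols t_sleep and t_wake are introduced here for illustration only, and the 6–8 h range is the adult sleep duration cited above (Bin et al. 2012).

$$
t_{\text{wake}} = 24\ \text{h} - t_{\text{sleep}}, \qquad
6\ \text{h} \le t_{\text{sleep}} \le 8\ \text{h}
\;\Longrightarrow\;
16\ \text{h} \le t_{\text{wake}} \le 18\ \text{h}.
$$

On this reading, a waking period running from 8 am to 2 am the following morning (18 h) sits at the upper end of a typical waking day, corresponding to roughly 6 h of nocturnal sleep.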

These considerations suggest that an investigation of the potential interactive impact of circadian-variability (chronotype and time-of-day) on the functioning of the attentional networks during sustained wakefulness is not complete without considering the corresponding effects across the total time frame of a typical waking day (i.e. 18 h of sustained wakefulness). Furthermore, whilst sleep restriction and sleep deprivation studies (utilising constant routine and forced desynchrony protocols) have elegantly demonstrated the opposing forces of the circadian rhythm and homoeostatic sleep drive on neurobehavioural functioning (see Goel et al. 2013, for a review), the ecological validity of such studies is debatable. It is therefore important to consider the potential interactive impact of circadian-variability on attentional functioning under real-world conditions. The study reported below examines the influence of chronotype and time-of-day on the functional efficiency of the attentional networks over a typical “socially constrained” day (i.e. 8 am to 2 am the following morning; 18 h of sustained wakefulness) following a night of normal sleep in good sleepers.

Methods

Participants

Participants were recruited from the general population of the north-east of England through poster advertisements, emails to staff and students of Northumbria University, and social media. Thirty participants initially volunteered for the study; 26 provided complete data. All participants were self-reported good sleepers (mean Pittsburgh Sleep Quality Index [PSQI; Buysse et al. 1989] score = 3.54 [SD 1.61]); were aged between 18 and 40 years (mean age 25.58; SD 4.26; 54 % male); had no current or past sleep, medical or psychiatric disorder, or drug/alcohol abuse; were not taking medications (including recreational drugs) that could affect their sleep; were not experiencing excessive daytime sleepiness (mean Epworth Sleepiness Scale [ESS; Johns 1992] score = 3.96 [SD 2.46]); were not shift-workers; had not travelled across three time zones in the previous 3 months; had normal (or corrected-to-normal) vision; and were non-smokers. Participants were rewarded with a £40 “Love2Shop” voucher for their participation.

Measures

Chronotype

The Horne and Östberg Morningness–Eveningness Questionnaire (MEQ: Horne and Östberg 1976) was used to assess chronotype. The MEQ includes 19 self-reported items assessing preferred timing of daytime activities, sleep habits, hours of peak performance, and times of “feeling best” and maximum alertness. Responses are combined to provide a total score ranging between 16 and 86, with higher scores indicating a greater tendency towards morningness. We analysed the continuous morningness–eveningness score rather than comparing extreme groups of morning- versus evening-types, both for greater statistical power and to represent the full chronotype spectrum.
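Although the analyses treat MEQ totals as a continuous score, it can help to see where individual scores fall relative to the conventional Horne and Östberg (1976) bands. The sketch below assumes the commonly used cut-offs (16–30 definite evening, 31–41 moderate evening, 42–58 neither, 59–69 moderate morning, 70–86 definite morning); the helper `meq_category` is hypothetical and was not part of the study's analysis.

```python
# Hypothetical helper: map a Horne-Östberg MEQ total to its conventional band.
# The present study analysed the continuous score; this is for orientation only.

MEQ_MIN, MEQ_MAX = 16, 86

# (inclusive upper bound, label), checked in ascending order
MEQ_BANDS = [
    (30, "definite evening"),
    (41, "moderate evening"),
    (58, "neither"),
    (69, "moderate morning"),
    (86, "definite morning"),
]


def meq_category(total: int) -> str:
    """Return the conventional MEQ category for a total score in 16-86."""
    if not MEQ_MIN <= total <= MEQ_MAX:
        raise ValueError(f"MEQ total must lie in [{MEQ_MIN}, {MEQ_MAX}], got {total}")
    for upper, label in MEQ_BANDS:
        if total <= upper:
            return label
    raise AssertionError("unreachable: the bands cover the full score range")


if __name__ == "__main__":
    # Lower bound, rounded mean and upper bound of the sample (see Results).
    for score in (36, 51, 63):
        print(score, meq_category(score))
```

Applied to the sample's reported range (36–63) and rounded mean (51), the bands run from moderate evening through neither to moderate morning, so the sample contained no definite morning- or evening-types.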

Attention

The Attention Network Test (ANT: Fan et al. 2002) was used to examine the performance of the attentional networks (see Fig. 1). On each ANT trial, a centre, double, spatial, or no cue is presented (100 ms) between two central fixation periods. After the second fixation period (400 ms), the target arrow (pointing left or right) is presented either above or below the fixation cross, either alone (neutral condition), flanked on each side by two arrows pointing in the same direction (congruent condition), or flanked on each side by two arrows pointing in the opposite direction (incongruent condition); the target display lasts no longer than 1700 ms. Upon presentation of the target, participants indicate whether the central arrow points leftwards or rightwards by pressing designated keys on a computer keyboard. The ANT provides a raw reaction time (RT) measure for each condition (cue type: no cue, centre cue, double cue, spatial cue; flanker type: neutral, congruent, incongruent).

Additionally, the ANT provides specific measures of alerting, orienting and conflict resolution (executive control). The alerting score is calculated by subtracting the mean RT of the double-cue conditions (which alert the participant to the imminent target but do not indicate whether it will appear above or below the cross) from the mean RT of the no-cue conditions. The orienting score is calculated by subtracting the mean RT of the spatial-cue conditions (which alert participants to the imminent target and indicate its location) from the mean RT of the centre-cue conditions (which only alert participants to the imminent target at one location). The conflict (executive control) score is calculated by subtracting the mean RT of all congruent flanked conditions from that of all incongruent flanked conditions (across all cue types). Greater scores typically indicate an increase in processing difficulty: (a) maintaining alertness without a cue (alerting); (b) disengaging from the centre cue (orienting); or (c) resolving conflict (executive control) (Fan and Posner 2004). We first examined overall reaction times from the ANT as a function of cue type and flanker type, as well as chronotype and time-of-day; we then examined error rates in the same way; and finally we examined attention network scores as a function of chronotype and time-of-day.
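The three difference scores described above can be sketched in Python as follows. This is a minimal illustration, assuming trial-level records of the form (cue, flanker, RT in ms, correct); the record layout, the cue labels (`"none"`, `"centre"`, `"double"`, `"spatial"`) and the function name `network_scores` are hypothetical and do not correspond to the ANT software's own output. As in the text, only correct trials contribute, and the conflict score pools over all cue types.

```python
from statistics import mean

# One trial: (cue, flanker, rt_ms, correct). Hypothetical record layout.
Trial = tuple[str, str, float, bool]


def network_scores(trials: list[Trial]) -> dict[str, float]:
    """Alerting, orienting and conflict scores (ms) from correct trials only."""
    correct = [t for t in trials if t[3]]

    def mean_rt(cue=None, flanker=None) -> float:
        # Mean RT over correct trials matching the given cue and/or flanker.
        rts = [rt for c, f, rt, _ in correct
               if (cue is None or c == cue) and (flanker is None or f == flanker)]
        if not rts:
            raise ValueError(f"no correct trials for cue={cue}, flanker={flanker}")
        return mean(rts)

    return {
        "alerting": mean_rt(cue="none") - mean_rt(cue="double"),
        "orienting": mean_rt(cue="centre") - mean_rt(cue="spatial"),
        # Pooled over all cue types, as described in the text.
        "conflict": mean_rt(flanker="incongruent") - mean_rt(flanker="congruent"),
    }


if __name__ == "__main__":
    demo = [
        ("none", "neutral", 600.0, True),
        ("double", "neutral", 560.0, True),
        ("centre", "congruent", 580.0, True),
        ("spatial", "congruent", 530.0, True),
        ("centre", "incongruent", 650.0, True),
        ("spatial", "incongruent", 610.0, True),
        ("none", "incongruent", 900.0, False),  # error trial: ignored
    ]
    print(network_scores(demo))  # {'alerting': 40.0, 'orienting': 45.0, 'conflict': 75.0}
```

In practice each score would be computed per participant and per session (time 1 and time 2) before any group-level analysis.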

Fig. 1 ANT procedure: (a) the four cue conditions, (b) the flanker types, and (c) an example of the procedure. Reprinted with permission from Fan et al. (2002).

Procedure

The procedure for the present study comprised 3 stages.

Stage 1: Participant pre-screening

Prospective participants completed a brief screening questionnaire sent via email to confirm eligibility to participate. The screening questionnaire contained questions on general health, demographics, the Pittsburgh Sleep Quality Index (Buysse et al. 1989), the Epworth Sleepiness Scale (Johns 1992), and the Morningness–Eveningness Questionnaire (Horne and Östberg 1976). Eligible participants (good sleepers, no excessive sleepiness) completed an informed consent form and continued to stage 2 of the study.

Stage 2: Week prior to sustained wakefulness protocol (SWP)

One week prior to the SWP, participants met with the researcher at the Northumbria Centre for Sleep Research, Northumbria University, to be informed of the risks and requirements of the protocol, to be given a questionnaire booklet outlining the protocol, and to familiarise themselves with the ANT task. Throughout the protocol, participants were reminded that during the SWP, and afterwards until they had slept for at least 6 h, they must not drive, use tools or operate machinery, or perform any tasks that are demanding or may jeopardise their safety (e.g. running a bath). Participants were given a one-week sleep diary to record their sleep patterns and an actiwatch (a watch-like device that records movement and hence provides information on sleep–wake activity) to be worn during the week prior to the SWP, to confirm that no participant had a circadian rhythm disorder or was excessively sleep-deprived before and during the SWP. Participants continued to wear the actiwatch during the SWP to confirm adherence. During the week prior to the SWP, participants were required to keep to their normal routine as much as possible, e.g. to obtain a normal sleep duration (approximately 7.5–8.5 h per night) and to maintain a regular sleep schedule (e.g. bedtime at approximately 11:30 pm ± 60 min and waking at approximately 7:30 am ± 60 min). Only data from participants who obtained sufficient sleep (>6 h) and adhered to a stable sleep schedule in the week prior to the SWP were included in the analyses. All recruited participants adhered to these requirements (actigraphy data unreported; available upon request from the first author).
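The sleep-schedule criteria above can be expressed as a simple per-night check. This is a minimal sketch only: the thresholds are transcribed from the protocol text, but the function names are hypothetical, the study's actual actigraphy scoring rules are not reported, and time in bed (wake time minus bedtime) is used here as a stand-in for actual sleep duration.

```python
from datetime import datetime, timedelta

# Hypothetical criteria transcribed from the protocol text; the study's own
# actigraphy scoring rules are not reported.
MIN_SLEEP = timedelta(hours=6)      # "sufficient sleep (>6 h)"
MAX_DEVIATION_MIN = 60              # +/- 60 min around each target time
TARGET_BED = (23, 30)               # approx. 11:30 pm
TARGET_WAKE = (7, 30)               # approx. 7:30 am
MINUTES_PER_DAY = 24 * 60


def clock_deviation(t: datetime, target: tuple[int, int]) -> int:
    """Minutes between t's clock time and target, wrapping around midnight."""
    diff = abs((t.hour * 60 + t.minute) - (target[0] * 60 + target[1]))
    return min(diff, MINUTES_PER_DAY - diff)


def night_ok(bed: datetime, wake: datetime) -> bool:
    """True if one diary night meets the duration and timing criteria."""
    return (
        wake - bed > MIN_SLEEP  # time in bed as a proxy for sleep duration
        and clock_deviation(bed, TARGET_BED) <= MAX_DEVIATION_MIN
        and clock_deviation(wake, TARGET_WAKE) <= MAX_DEVIATION_MIN
    )


def week_ok(nights: list[tuple[datetime, datetime]]) -> bool:
    """Eligible only if at least one night is recorded and every night passes."""
    return bool(nights) and all(night_ok(bed, wake) for bed, wake in nights)


if __name__ == "__main__":
    nights = [
        (datetime(2024, 1, 1, 23, 40), datetime(2024, 1, 2, 7, 20)),
        (datetime(2024, 1, 3, 0, 15), datetime(2024, 1, 3, 8, 0)),
    ]
    print(week_ok(nights))  # True: both nights are within the +/- 60 min windows
```

The wrap-around in `clock_deviation` matters for bedtimes just after midnight: 00:15 is 45 min from an 11:30 pm target, not 23 h 15 min.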

Stage 3: Sustained Wakefulness Protocol (SWP)

- Day 1, Morning session (7:45 am; performed in participants’ homes): Participants were required to awaken at 7:30 am and were called by the researcher at 7:45 am to confirm that they were ready to commence the SWP and to be given instructions. Participants continued to wear the actiwatch. Participants were instructed not to have consumed breakfast, caffeine, alcohol or recreational drugs prior to this session (or alcohol/recreational drugs the night before) and not to have performed any intense physical exercise. Participants were instructed to sit at their computer (connected to the Internet) to commence the ANT at 8 am. Upon completion, participants were free to go about their day as normal. During the protocol, participants were required to refrain from consuming caffeine, alcohol or recreational drugs, not to perform any intense physical exercise, and not to take any naps (compliance confirmed by actigraphy).

- Day 1, Evening session (7:45 pm): Twelve hours after the initial wake-up time, participants were called by the researcher to confirm that they had adhered to the protocol so far, that they were prepared to remain awake at home for the following 6-h period, and that someone was at home with them to act as their emergency contact in case their safety was compromised. Every hour thereafter, the researcher called participants to confirm compliance with the protocol and that it was safe for them to continue.

- Day 2, Early morning session (2 am): Eighteen hours after the beginning of the SWP, participants were called by the researcher to confirm that they had adhered to the protocol so far and were prepared to remain awake, and to be given instructions. Participants were instructed to sit at their computer (connected to the Internet) to commence the ANT. Upon completion, participants called the researcher so that their health and safety could be checked. Participants were then permitted to retire to bed.

- Day 2, Later morning: Upon awakening later that morning, participants called the researcher to confirm that it was safe for them to go about their day. Participants were then debriefed by phone about the nature and aims of the study, and a debrief sheet was also sent by email.

Compliance with ethical standards

The present study received full ethical approval from the Northumbria University Faculty of Health and Life Sciences Ethics Committee and has therefore been performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki. Informed consent was obtained from all individual participants included in the study.

Results

Mean chronotype score was 51.54 (SD 6.99; range 36–63). Mean ANT reaction times were computed from all correct trials for all participants (n = 26). Incorrect trials accounted for 8.69 % of the total trials. Additionally, trials with RTs more than 2 SD above or below the overall mean RT were excluded from analyses (6.25 %). Table 1 shows the mean RT and SD, and Table 2 the mean error rates and SD, for each of the experimental conditions (4 cue types × 3 flanker types) at time 1 (before sustained wakefulness; 8 am) and time 2 (following 18-h sustained wakefulness; 2 am). The distribution of RT scores across participants deviated from normality for 15 of 24 conditions (Kolmogorov–Smirnov test, p < .05); thus, reciprocal transformations were applied to all variables prior to analysis, after which 3 of 24 variables still deviated from normality. For these three variables, skew and kurtosis were markedly reduced (skew range −.72 to −.90 [SE = .46]; kurtosis range −.49 to .96 [SE = .89]).
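The trial-screening steps above (dropping incorrect trials, trimming RTs beyond ±2 SD of the mean, then applying a reciprocal transform) can be sketched as follows. This is an illustration only: it assumes the mean and SD are computed over whatever set of correct trials is passed in (the text does not specify whether the “overall” mean is per participant, per session or across the whole sample), and the function names are hypothetical.

```python
from statistics import mean, stdev


def clean_rts(rts: list[float], correct: list[bool], k: float = 2.0) -> list[float]:
    """Drop incorrect trials, then trials more than k SD from the mean RT."""
    if len(rts) != len(correct):
        raise ValueError("rts and correct must have the same length")
    kept = [rt for rt, ok in zip(rts, correct) if ok]
    if len(kept) < 2:
        raise ValueError("need at least two correct trials to estimate the SD")
    m, sd = mean(kept), stdev(kept)
    return [rt for rt in kept if abs(rt - m) <= k * sd]


def reciprocal(rts: list[float]) -> list[float]:
    """Reciprocal transform 1/RT (RT in ms, so values are in 1/ms)."""
    return [1.0 / rt for rt in rts]


if __name__ == "__main__":
    rts = [500.0, 510.0, 490.0, 505.0, 495.0, 2000.0, 450.0]
    correct = [True, True, True, True, True, True, False]
    trimmed = clean_rts(rts, correct)
    print(trimmed)  # [500.0, 510.0, 490.0, 505.0, 495.0]: outlier and error trial removed
    print(reciprocal(trimmed))
```

Note that the reciprocal transform reverses the ordering of RTs (a slower response gives a smaller value), so effects on the transformed scale have the opposite sign to effects on raw RT; a common variant, −1/RT, preserves the original ordering.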

Mean reaction time

A repeated-measures ANCOVA was performed on mean (transformed) RT, with time (1 = before sustained wakefulness; 2 = following sustained wakefulness), cue type (no cue, centre, double, and spatial) and flanker type (neutral, congruent, and incongruent) as within-subject factors, and chronotype as a covariate. The assumption of sphericity was not met for the main effects of cue type or flanker type, or for the cue × flanker type interaction; in these cases, the Greenhouse–Geisser correction to degrees of freedom was applied. There were significant main effects of cue (F[1.90, 72] = 5.28, p < .01, η² = .18) and time (F[1, 24] = 10.27, p < .01, η² = .30) on mean RT. Reaction times were longer on no-cue trials than on trials with any other cue, and longer at time 2 than at time 1.
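The Greenhouse–Geisser correction mentioned above multiplies both the numerator and the denominator degrees of freedom by an estimate ε of the departure from sphericity; for a factor with k levels, ε ranges from 1/(k − 1) (maximal violation) to 1 (sphericity holds). A minimal pure-Python sketch of the standard estimate follows, computed from the double-centred covariance matrix of the k repeated measures. It is a simplification under stated assumptions: it ignores the covariate (statistical packages estimate ε from covariate-adjusted residuals in an ANCOVA), and the function names are hypothetical.

```python
from statistics import mean


def covariance_matrix(data: list[list[float]]) -> list[list[float]]:
    """Sample covariance (n - 1 denominator) between the k columns of data."""
    n, k = len(data), len(data[0])
    col_means = [mean(row[j] for row in data) for j in range(k)]
    return [
        [sum((row[i] - col_means[i]) * (row[j] - col_means[j]) for row in data) / (n - 1)
         for j in range(k)]
        for i in range(k)
    ]


def gg_epsilon(data: list[list[float]]) -> float:
    """Greenhouse-Geisser epsilon for one within-subject factor.

    data[s][j] is subject s's score at level j of the factor.
    """
    cov = covariance_matrix(data)
    k = len(cov)
    if k < 2:
        raise ValueError("need at least two levels")
    row_means = [mean(r) for r in cov]
    grand = mean(row_means)
    # Double-centre the covariance matrix (projects out the overall level mean).
    d = [[cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)]
         for i in range(k)]
    trace = sum(d[i][i] for i in range(k))
    sum_sq = sum(x * x for row in d for x in row)  # equals trace(d @ d) for symmetric d
    if sum_sq == 0:
        raise ValueError("no variance among level differences")
    return trace ** 2 / ((k - 1) * sum_sq)


def corrected_df(eps: float, k: int, n: int, n_covariates: int = 0) -> tuple[float, float]:
    """Greenhouse-Geisser-corrected (df1, df2) for a k-level within-subject effect."""
    df_error = n - 1 - n_covariates
    return eps * (k - 1), eps * (k - 1) * df_error
```

For the cue factor in this design (k = 4, n = 26, one covariate), the uncorrected degrees of freedom are (3, 72), and under the correction both are scaled by the same ε.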

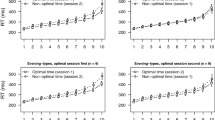

The time × chronotype interaction was significant (F[1, 24] = 10.16, p < .01, η² = .30), as were the flanker type × time (F[2, 48] = 3.43, p < .05, η² = .13) and flanker type × time × chronotype interactions (F[2, 48] = 3.64, p < .05, η² = .13). Figure 2 displays the time × chronotype interaction. Mean reaction times were longer at time 2 than at time 1 for those with an increasing tendency towards eveningness, whereas the opposite was true for those with an increasing tendency towards morningness, whose reaction times were longer at time 1. For the flanker type × time interaction, mean reaction times on both congruent and incongruent (vs. neutral) trials were longer at time 2 than at time 1.

Figure 3 displays the flanker type × time × chronotype interaction. Whereas at time 1 reaction times on all flanker types were longer with increasing morningness (relative to eveningness), at time 2 reaction times were longer with increasing morningness (relative to eveningness) on incongruent trials only. In other words, evening-types outperformed morning-types on incongruent trials at time 2.
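If the effect sizes reported above are partial eta squared, they can be cross-checked from each F ratio and its degrees of freedom, since ηp² = (F × df1) / (F × df1 + df2). The sketch below assumes that interpretation (the text reports them only as η²), and the helper name `partial_eta_squared` is hypothetical.

```python
def partial_eta_squared(f: float, df1: float, df2: float) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    if f < 0 or df1 <= 0 or df2 <= 0:
        raise ValueError("F must be non-negative and both df positive")
    return (f * df1) / (f * df1 + df2)


if __name__ == "__main__":
    # F values and df taken from the time and interaction effects reported above.
    for label, f, df1, df2 in [
        ("time", 10.27, 1, 24),
        ("time x chronotype", 10.16, 1, 24),
        ("flanker x time", 3.43, 2, 48),
        ("flanker x time x chronotype", 3.64, 2, 48),
    ]:
        print(f"{label}: {partial_eta_squared(f, df1, df2):.2f}")
```

Under that assumption, these four values round to .30, .30, .13 and .13, matching the reported η².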

Error rates

Table 2 shows the mean error rates (%) and SD for each of the experimental conditions at time 1 (before sustained wakefulness; 8 am) and time 2 (following 18-h sustained wakefulness; 2 am). To examine error rates, we used generalised estimating equations (GEE, e.g. Hardin and Hilbe 2003). Unlike ANOVA, GEE allows the specification of distribution and link functions appropriate for analysing categorical frequencies. Here, we used a binomial distribution and logit link function (cf. Jaeger 2008) to model the proportion of correct trials as a function of time (time 1, time 2), cue type (no cue, centre cue, double cue, and spatial cue) and flanker type (neutral, congruent, and incongruent) as within-subject variables, and chronotype as a covariate.
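The binomial–logit specification above models the log-odds of a correct response rather than the raw proportion, which keeps fitted proportions within (0, 1). The helpers below are a minimal sketch of the link function and its inverse, plus a hypothetical `cell_counts` helper that collapses trial-level accuracy into the (successes, trials) form a binomial model expects. They do not fit the GEE itself, which would be done in statistical software (for example, statsmodels provides a `GEE` class that accepts a `Binomial` family).

```python
import math


def logit(p: float) -> float:
    """Log-odds of a proportion strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def inv_logit(x: float) -> float:
    """Inverse link: map a linear predictor back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def cell_counts(correct_flags: list[bool]) -> tuple[int, int]:
    """(number correct, number of trials) for one participant-by-condition cell."""
    return sum(correct_flags), len(correct_flags)


if __name__ == "__main__":
    # Overall error rate of 8.69 % reported in the Results, i.e. 91.31 % correct.
    p_correct = 1 - 0.0869
    x = logit(p_correct)
    print(round(x, 2))             # about 2.35 on the log-odds scale
    print(round(inv_logit(x), 4))  # back to 0.9131
```

Effects estimated by such a model are additive on the log-odds scale, so a given coefficient produces a smaller change in proportion correct when accuracy is already near ceiling.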

All main effects were non-significant; however, three interactions were significant. For the time × cue × flanker type interaction (GS χ²(1) = 5.39, p = .02), significantly more errors were made at time 2 for centre cues on incongruent trials only. For the cue × flanker type × chronotype interaction (GS χ²(1) = 4.39, p = .04), more errors were made on centre-cue incongruent trials with an increasing tendency towards morningness. For the time × cue × flanker type × chronotype interaction (GS χ²(1) = 5.57, p = .02), more errors were made at time 2 for centre cues on incongruent trials with an increasing tendency towards morningness.

Attentional network scores

The three attentional network scores (see Table 3) were calculated from correct trials (after excluding trials with RTs more than 2 SD from the mean) as follows: alerting = (mean RT no-cue trials) − (mean RT double-cue trials); orienting = (mean RT centre-cue trials) − (mean RT spatial-cue trials); conflict = (mean RT incongruent trials) − (mean RT congruent trials). Paired-samples t tests revealed no significant differences in attentional network scores between time 1 and time 2 (all ps > .05). A series of repeated-measures ANCOVAs was additionally run to examine the influence of chronotype (as a covariate) on attentional network scores from time 1 to time 2. No main effects or interactions were significant, although the time × chronotype interaction approached significance for both orienting and conflict scores (p = .08 and .07, respectively). Evening-types exhibited lower orienting scores at time 2 than at time 1, whereas the opposite was true for morning-types. For conflict, evening-types exhibited lower scores at time 2 than at time 1, whereas scores were equivalent at both time points for morning-types.
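The paired-samples comparison of network scores between time 1 and time 2 reduces to a one-sample t test on each participant's difference score, t = mean(d) / (SD(d) / √n), with n − 1 degrees of freedom (25 here, for 26 participants). A minimal sketch follows; the function name and the example scores are hypothetical, and in practice `scipy.stats.ttest_rel` returns the same statistic together with a p-value.

```python
import math
from statistics import mean, stdev


def paired_t(time1: list[float], time2: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic and df for time2 - time1 differences."""
    if len(time1) != len(time2) or len(time1) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [b - a for a, b in zip(time1, time2)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("difference scores have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


if __name__ == "__main__":
    # Hypothetical conflict scores (ms) for five participants.
    t1 = [40.0, 45.0, 50.0, 38.0, 42.0]
    t2 = [41.0, 47.0, 53.0, 42.0, 47.0]
    t, df = paired_t(t1, t2)
    print(f"t({df}) = {t:.2f}")  # prints t(4) = 4.24
```

With differences defined as time 2 minus time 1, a positive t indicates larger (i.e. less efficient, by the convention above) network scores after sustained wakefulness.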

Discussion

Primarily, the present results demonstrate that 18 h of sustained wakefulness produced differential effects on visual attention as a function of chronotype, at least in terms of reaction time on a probe detection ANT task. Individuals with a tendency towards eveningness exhibited slower reaction times following 18 h of sustained wakefulness than at initial testing at 8 am, whereas individuals with a tendency towards morningness exhibited the opposite pattern. This finding is contrary to expectations based on the wealth of literature showing a “synchrony effect” (see Adan et al. 2012, for a review; Horne and Östberg 1980; May and Hasher 1998; May et al. 1993). Such an “asynchrony effect” (where performance is better at the non-optimal time-of-day) may relate to the concept of “social jetlag”. Social jetlag refers to the misalignment between the endogenous circadian rhythm and the time constraints imposed by social commitments (Wittmann et al. 2006). Forced awakenings at times out of sync with the biological drive for wakefulness produce physiological sensations akin to jetlag. Requiring evening-types to arise at 7:30 am in readiness for an 8 am test may impose a greater sleep debt on these individuals than on morning-types, who may have obtained more sleep the night before. Thus, at the subsequent 2 am test, evening-types may have incurred a greater sleep debt, which could account for the unexpected finding of longer RTs at time 2 than at time 1 in evening-types (although it is worth noting that all participants obtained at least 6 h of sleep prior to testing).

An alternative explanation for the observed “asynchrony effect” is that the effect of sustained wakefulness on attention is modulated by compensatory mechanisms such as motivation or effort. At times of decreased efficiency and alertness, morning-types may exert greater effort, allowing them to perform well on attentional tasks despite their decreasing arousal. Such a mechanism has been suggested to account for the absence of synchrony effects on tasks that require a wide range of cognitive resources (Natale et al. 2003). However, to the best of our knowledge, this is the first demonstration of an asynchrony effect in attentional performance following sustained wakefulness. In a study of synchrony effects on general intelligence and visual-spatial and linguistic abilities, Freudenthaler and Neubauer (1992) demonstrated that level of motivation modulated the effects of chronotype and time-of-day on performance. The present data further suggest that a motivational mechanism may account for an asynchrony effect even for bottom-up attentional processes. Others have found evidence of an asynchrony effect for tasks involving insightful problem solving, spatial aptitude, and implicit learning (Delpouve et al. 2014; Song and Stough 2000; Wieth and Zacks 2011). Interestingly, tasks requiring the production of a well-learned pre-potent response, rather than its inhibition, show no synchrony/asynchrony effects (May and Hasher 1998). Differences between studies in the evidence for synchrony/asynchrony effects are likely due to differences in the complexity of the tasks examined or in the cognitive domains they engage.

Our data further suggest that the synchrony/asynchrony effect may depend on flanker congruency: on incongruent trials, those with increasing eveningness outperformed those with increasing morningness at time 2, which is evidence of a synchrony effect. Further, the error rate data showed that those with increasing morningness made more errors on centre-cue incongruent trials at time 2 than those with increasing eveningness. Our ANT data also indicate that evening-types exhibited a trend towards lower conflict scores (indicative of an enhanced ability to resolve conflicting information) at time 2 compared to time 1, whereas the opposite was true of morning-types. Together, these results suggest that, following 18 h of sustained wakefulness, evening-types may be better than morning-types at tasks of conflict resolution (which require inhibitory attentional processes). The strongest time-of-day effects are often observed for executive control of inhibitory functions rather than for simple response tasks (Lustig et al. 2007), and synchrony effects are particularly evident on higher-order cognitive tasks requiring controlled processing of distracting stimuli (May 1999; May and Hasher 1998). In such studies, participants are worse at inhibiting distracting stimuli at their non-optimal time-of-day than at their time of optimal functioning.

It is likely that differential performance on inhibitory processing between chronotypes is due to differences in relative brain activation across the day. Schmidt and colleagues demonstrated that responses of brain regions known to be involved in monitoring and resolving cognitive conflict and in error processing, in particular the anterior cingulate cortex and insula (Botvinick et al. 2001; Fan et al. 2003; Roberts and Hall 2008), are elevated in evening-types but decreased in morning-types during the evening (Schmidt et al. 2012). Decrements in inhibitory control across the day may also be related to changes in frontal functioning (May and Hasher 1998). The lateral prefrontal cortex is, amongst other sites, involved in executive control of attention and is modulated by dopamine (Fan and Posner 2004). Frontal areas are particularly vulnerable to the effects of sleep deprivation, demonstrating overall reduced activation as well as a decreased response to cognitive tasks (Cajochen et al. 1999; Habeck et al. 2004; Jones and Harrison 2001). Interestingly, the effect of sleep deprivation on frontal areas, and particularly on executive control functions, reflects individual differences and appears to be modulated by genes implicated in chronotype (Groeger et al. 2008; Vandewalle et al. 2009). Individuals with a polymorphism in the PER3 clock gene predictive of morningness (the 5/5 tandem-repeat genotype) are more vulnerable to executive control deficiencies following sleep loss (Groeger et al. 2008). The present results are congruent with these findings, suggesting that those with a preference towards morningness show greater impairments in executive control following sustained wakefulness, and that this difference (compared to those with eveningness tendencies) may reflect functional deficiencies in frontal activation, driven at the molecular level by underlying genetic variability between chronotypes.

It is not possible, however, to determine whether this preservation of executive control in those with a tendency towards eveningness (relative to morningness) at time 2 is due to a differential build-up of homoeostatic sleep pressure (i.e. increasing sleep propensity) over the waking period or to differences in circadian phase between chronotypes. Given that frontal cortical areas are thought to be most sensitive to variations in homoeostatic sleep pressure (Cajochen et al. 1999), one could hypothesise that differential performance on inhibitory tasks between chronotypes is homoeostatically controlled. Previous research has demonstrated that evening-types exhibit a slower build-up of sleep pressure and can tolerate a higher homoeostatic load than morning-types (Mongrain and Dumont 2007; Taillard et al. 2003). This tolerance to homoeostatic sleep pressure may enable evening-types to outperform morning-types on complex tasks such as resolving conflict. Further, morning-types show a faster increase in theta–alpha activity (an indicator of sleep pressure; ~6–9 Hz, Cajochen et al. 1995) during wakefulness than evening-types (Taillard et al. 2003). Increased theta activity, particularly in frontal brain regions, is associated with decreased attention (Klimesch 1999; Mann et al. 1992), and a larger proportion of alpha power in lower frequency bands has been reported in individuals with difficulty in inhibitory control (Crawford et al. 1995; Klimesch 1999). It is, therefore, possible that the elevation in theta–alpha activity across the waking day reduces attention, particularly inhibitory control, and that this effect is exaggerated in morning-types.

Alternatively, the preservation of executive control in those with a tendency towards eveningness relative to morningness may be due to circadian phase differences between chronotypes. Circadian rhythms of core body temperature and melatonin secretion are typically 2–3 h later in evening-types than in morning-types (Lack et al. 2009). Previous studies have shown that daily rhythms of alertness often track the circadian profile of core body temperature (Akerstedt and Gillberg 1982; Baehr et al. 2000; Johnson et al. 1992; Kleitman and Jackson 1950; Monk et al. 1997), with better performance often corresponding to higher core body temperature. During the evening, alertness and performance decline considerably, coinciding with the falling limb of the core body temperature rhythm (Dijk et al. 1992), an effect that will occur later in evening-types. The preserved executive control in evening-types following 18 h of sustained wakefulness may therefore reflect changes in both homoeostatic and circadian processes. Indeed, studies using constant routine or forced desynchrony paradigms have demonstrated that both processes contribute to variation in sleepiness and performance across the waking period (e.g. Dijk et al. 1992; Mollicone et al. 2008; Zhou et al. 2011). Our further research focuses on disentangling the effects of the circadian rhythm and homoeostatic sleep drive on attentional functioning.
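To see why a single test at 2 am cannot separate the two accounts, consider a toy model in which alertness is a 24-h circadian cosine minus a saturating homoeostatic pressure. Everything here is hypothetical: the cosine shape, the amplitude, the peak times (16:00 versus 18:30, a 2.5 h delay within the 2–3 h range cited above) and the time constants were chosen for illustration and were not fitted to any data.

```python
import math


def homeostatic_pressure(hours_awake, tau_rise, s0=0.2, upper=1.0):
    # Saturating rise of sleep pressure during wake (hypothetical parameters).
    return upper - (upper - s0) * math.exp(-hours_awake / tau_rise)


def circadian_drive(clock_hour, peak_hour, amplitude=0.3):
    # 24-h cosine peaking at peak_hour, a stand-in for the temperature rhythm.
    return amplitude * math.cos(2 * math.pi * (clock_hour - peak_hour) / 24.0)


def alertness(clock_hour, wake_hour, peak_hour, tau_rise):
    # Toy index: circadian drive minus homoeostatic pressure.
    hours_awake = (clock_hour - wake_hour) % 24  # e.g. 8 am -> 2 am = 18 h
    return (circadian_drive(clock_hour, peak_hour)
            - homeostatic_pressure(hours_awake, tau_rise))


# Hypothetical profiles: the evening-leaning one peaks 2.5 h later and
# accumulates sleep pressure more slowly.
MORNING = {"peak_hour": 16.0, "tau_rise": 15.0}
EVENING = {"peak_hour": 18.5, "tau_rise": 20.0}
WAKE_HOUR = 8.0  # matches the 8 am baseline session

for label, clock in (("8 am", 8.0), ("2 am", 2.0)):
    m = alertness(clock, WAKE_HOUR, **MORNING)
    e = alertness(clock, WAKE_HOUR, **EVENING)
    print(f"{label}: morning-leaning {m:+.2f}, evening-leaning {e:+.2f}")
```

In this toy model, setting `tau_rise` equal for both profiles leaves only the circadian difference, and setting `peak_hour` equal leaves only the homoeostatic one. Either manipulation on its own still favours the evening-leaning profile at 2 am. This illustrates why behavioural data from these two sessions alone cannot separate the processes.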

In sum, our results provide novel evidence that, following 18 h of sustained wakefulness, the ability to resolve conflict is preserved in those with an increasing tendency towards eveningness. However, this effect was evident only in the reaction time and error rate data; the effect on the derived attentional network scores only approached significance. This finding differs from that of Matchock and Mordkoff (2009), who found chronotype and time-of-day effects on the alerting network and time-of-day effects on executive control. Certain limitations of the present study offer potential explanations for this discrepancy. First, our sample was not selected on the basis of extreme chronotype; rather, we examined the full chronotype spectrum. This was intentional, as it allowed us to demonstrate that differential effects on attentional processing emerge even between less extreme variations in chronotype. As such, the present effects may be conservative, and a replication including groups of extreme morning- and evening-types may yield stronger effects. Second, this study was performed in participants’ homes with little control over potential confounding factors such as ambient lighting, temperature, posture, and food intake, all of which can affect behavioural alertness and circadian rhythmicity (Duffy and Czeisler 2009; Kräuchi et al. 1997; Paz and Berry 1997; Waterhouse et al. 2005). Additionally, we did not take into account possible effects of “social jetlag” (Wittmann et al. 2006) on attentional performance between chronotypes. The 8 am and 2 am test times may not reflect the same circadian phase across individuals, even those with similar chronotypes, because of differential accumulation of sleep debt during the week before the sustained wakefulness protocol.
During the working week, evening-types may incur greater sleep debt because of a larger mismatch between their preferred sleep–wake times and those imposed by work or social obligations. This could account for the “asynchrony effect” observed in our RT data. Comparing sleep–wake times on work days and free days would provide a better estimate of true circadian phase, unconfounded by social jetlag. Third, repeated testing using the ANT may induce practice effects. Ishigami and Klein (2010) demonstrated that practice effects particularly affect executive function as assessed by the ANT, as evidenced by shorter reaction times on incongruent trials with repeated testing. However, our data show longer RTs at time 2 than at time 1 (in 10 of 12 conditions). This pattern is inconsistent with a practice-effect explanation and instead suggests that the observed time effects arise from processes associated with sustained wakefulness. Furthermore, our time effects varied as a function of chronotype, which practice alone cannot explain. That said, future studies would benefit from including a control group who perform the ANT in the reverse order (i.e. 2 am testing before 8 am testing) to confirm the absence of practice effects in the current context. Fourth, our sample may have been underpowered to detect significant interaction effects (particularly given the trends towards significance for the time × chronotype interactions on both orienting and conflict scores). These results should therefore be considered preliminary, and future studies should address these questions in larger samples.
A final consideration is that, by measuring three components of attention within one test, the ANT may produce greater variation in response times, because each trial contains alerting, orienting, and executive control components. Future research investigating the influence of chronotype and sustained wakefulness on attention would benefit from examining the sensitivity of pure measures of alerting, orienting, and executive control.
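The derived network scores referred to above are differences between condition means in the 4 cue × 3 flanker ANT design (Fan et al. 2002). Alerting is no cue minus double cue, orienting is centre cue minus spatial cue, and conflict is incongruent minus congruent flankers, each averaged over the levels of the other factor. The sketch below computes these scores and also counts how many of the 12 cells slowed from time 1 to time 2, which is the kind of tally behind the "10 of 12 conditions" argument against practice effects. Several caveats apply. All RT values are hypothetical and are not the study's data. The condition keys are naming choices made here. The binomial tail probability is a naive descriptive figure that assumes the 12 cells are independent, although they are not, because they come from the same participants. It is not an analysis reported in this study.

```python
from itertools import product
from math import comb
from statistics import mean

CUES = ("none", "center", "double", "spatial")
FLANKERS = ("congruent", "incongruent", "neutral")


def network_scores(rt):
    """Alerting, orienting and conflict scores from mean RTs keyed by (cue, flanker)."""
    def cue_mean(cue):
        return mean(rt[(cue, f)] for f in FLANKERS)

    def flanker_mean(flanker):
        return mean(rt[(c, flanker)] for c in CUES)

    return {
        "alerting": cue_mean("none") - cue_mean("double"),
        "orienting": cue_mean("center") - cue_mean("spatial"),
        "conflict": flanker_mean("incongruent") - flanker_mean("congruent"),
    }


def slowed_cells(rt_t1, rt_t2):
    """Number of cue x flanker cells whose mean RT is longer at time 2."""
    return sum(rt_t2[cell] > rt_t1[cell] for cell in product(CUES, FLANKERS))


def upper_tail_half(k, n):
    """P(X >= k) for X ~ Binomial(n, 0.5); naive, assumes independent cells."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n


# Hypothetical mean RTs (ms), NOT the study's data: additive cue/flanker offsets.
CUE_OFFSET = {"none": 40, "center": 20, "double": 10, "spatial": 0}
FLANKER_OFFSET = {"congruent": 0, "incongruent": 90, "neutral": -5}
rt_time1 = {(c, f): 500 + CUE_OFFSET[c] + FLANKER_OFFSET[f]
            for c, f in product(CUES, FLANKERS)}
# Hypothetical time-2 RTs: two cells get faster, the other ten slower.
faster = {("double", "congruent"), ("spatial", "neutral")}
rt_time2 = {cell: rt + (-5 if cell in faster else 25) for cell, rt in rt_time1.items()}

print(network_scores(rt_time1))
k = slowed_cells(rt_time1, rt_time2)
print(k, "of 12 cells slower at time 2; naive tail probability",
      round(upper_tail_half(k, 12), 4))
```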

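Social jetlag, as defined by Wittmann et al. (2006), is the absolute difference between mid-sleep on free days and mid-sleep on work days. A minimal sketch of that computation follows. The sleep times in the example are hypothetical. Wrapping the difference around the 24-h clock is a robustness choice made here and is not part of the original definition.

```python
def mid_sleep(onset_hour: float, offset_hour: float) -> float:
    """Clock time (0-24 h) halfway between sleep onset and sleep offset."""
    duration = (offset_hour - onset_hour) % 24  # handles sleep spanning midnight
    return (onset_hour + duration / 2) % 24


def social_jetlag(work_onset: float, work_offset: float,
                  free_onset: float, free_offset: float) -> float:
    """Absolute mid-sleep difference (h) between free days and work days."""
    msw = mid_sleep(work_onset, work_offset)
    msf = mid_sleep(free_onset, free_offset)
    diff = abs(msf - msw)
    return min(diff, 24 - diff)  # shortest distance around the clock


# Hypothetical sleep times in decimal clock hours (e.g. 0.5 = 00:30).
evening_leaning = social_jetlag(0.5, 7.0, 2.0, 10.5)    # later, longer free-day sleep
morning_leaning = social_jetlag(22.5, 6.5, 23.0, 7.5)   # similar schedules all week
print(f"evening-leaning: {evening_leaning:.2f} h, morning-leaning: {morning_leaning:.2f} h")
```

In this hypothetical example the evening-leaning sleeper shifts sleep later on free days than the morning-leaning sleeper does. That larger shift is the kind of misalignment that could leave the two test sessions at different circadian phases for different participants.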
Overall, our results contribute to a better understanding of the effects of sleep deprivation, chronotype, and time of day on cognitive functioning by considering these relationships across a normal “socially constrained” waking day. We conclude that attentional processing is not holistically impaired following 18 h of sustained wakefulness; rather, specific attentional domains are impaired or preserved as a function of chronotype. These effects are likely driven by differences between chronotypes in frontal functioning and dopaminergic activity, and by changes in these across the waking day. These findings may extend to other areas of cognitive processing. For example, successful inhibition is necessary for numerous cognitive processes, including speech production and language comprehension, because such processes require careful control over thought and action (Arbuckle and Gold 1993; Gernsbacher 1993; Logan and Cowan 1984). It is therefore possible that these linguistic processes are modulated by chronotype and time of day in a similar manner to attention. More broadly, our results suggest that morning-types may be particularly vulnerable to failures of executive control in the late evening, which may have direct implications for accident risk, particularly in tasks requiring inhibitory control. Our future research will investigate associations between biological indices of circadian phase, EEG signatures of increasing sleep pressure (i.e. theta–alpha activity; slow-wave activity), and attentional performance to clarify which components of attention are affected by circadian or homoeostatic mechanisms.

References

Adan A, Archer SN, Hidalgo MP, Di Milia L, Natale V, Randler C (2012) Circadian typology: a comprehensive review. Chronobiol Int 29:1153–1175

Akerstedt T, Gillberg M (1982) Displacement of the sleep period and sleep deprivation. Hum Neurobiol 1:163–171

Arbuckle TY, Gold DP (1993) Aging, inhibition, and verbosity. J Gerontol 48:P225–P232

Baehr EK, Revelle W, Eastman CI (2000) Individual differences in the phase and amplitude of the human circadian temperature rhythm: with an emphasis on morningness–eveningness. J Sleep Res 9:117–127

Basner M, Dinges DF (2011) Maximizing sensitivity of the psychomotor vigilance test (PVT) to sleep loss. Sleep 34:581–591

Basner M, Mollicone D, Dinges DF (2011) Validity and sensitivity of a brief psychomotor vigilance test (PVT-B) to total and partial sleep deprivation. Acta Astronaut 69:949–959

Belenky G et al (2003) Patterns of performance degradation and restoration during sleep restriction and subsequent recovery: a sleep dose-response study. J Sleep Res 12:1–12

Bin YS, Marshall NS, Glozier N (2012) Secular trends in adult sleep duration: a systematic review. Sleep Med Rev 16:223–230

Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD (2001) Conflict monitoring and cognitive control. Psychol Rev 108:624–652

Buysse DJ, Reynolds CF, Monk TH, Berman SR, Kupfer DJ (1989) The Pittsburgh Sleep Quality Index: a new instrument for psychiatric practice and research. Psychiatry Res 28:193–213

Cajochen C, Brunner DP, Kräuchi K, Graw P, Wirz-Justice A (1995) Power density in theta/alpha frequencies of the waking EEG progressively increases during sustained wakefulness. Sleep 18:890–894

Cajochen C, Foy R, Dijk DJ (1999) Frontal predominance of a relative increase in sleep delta and theta EEG activity after sleep loss in humans. Sleep Res Online 2:65–69

Crawford HJ, Knebel TL, Vendemia JM, Kaplan L, Ratcliff B (1995) EEG activation patterns during tracking and decision-making tasks—differences between low and high sustained attention adults. In: International symposium on aviation psychology, 8th, Columbus, OH, 1995, pp 886–890

Delpouve J, Schmitz R, Peigneux P (2014) Implicit learning is better at subjectively defined non-optimal time of day. Cortex 58:18–22

Dijk DJ, Duffy JF, Czeisler CA (1992) Circadian and sleep/wake dependent aspects of subjective alertness and cognitive performance. J Sleep Res 1:112–117

Duffy JF, Czeisler CA (2009) Effect of light on human circadian physiology. Sleep Med Clin 4:165–177

Fan J, Posner MI (2004) Human attentional networks. Psychiatr Prax 31(Suppl 2):S210–S214

Fan J, McCandliss BD, Sommer T, Raz A, Posner MI (2002) Testing the efficiency and independence of attentional networks. J Cogn Neurosci 14:340–347

Fan J, Flombaum JI, McCandliss BD, Thomas KM, Posner MI (2003) Cognitive and brain consequences of conflict. NeuroImage 18:42–57

Fernandez-Duque D, Posner MI (2001) Brain imaging of attentional networks in normal and pathological states. J Clin Exp Neuropsychol 23:74–93

Freudenthaler HH, Neubauer AC (1992) Morningness–eveningness, time of day, and intellectual performance. In: VIth European conference on personality, Groningen, 16th–19th June, 1992

Gernsbacher MA (1993) Less skilled readers have less efficient suppression mechanisms. Psychol Sci 4:294–298

Goel N, Van Dongen HPA, Dinges DF (2011) Circadian rhythms in sleepiness, alertness, and performance. In: Kryger MH, Roth T, Dement WC (eds) Principles and practice of sleep medicine, 5th edn. Elsevier, Philadelphia, pp 445–455

Goel N, Basner M, Rao H, Dinges DF (2013) Circadian rhythms, sleep deprivation, and human performance. Prog Mol Biol Transl Sci 119:155

Groeger JA, Viola AU, Lo JCY, von Schantz M, Archer SN, Dijk DJ (2008) Early morning executive functioning during sleep deprivation is compromised by a PERIOD3 polymorphism. Sleep 31:1159–1167

Habeck C, Rakitin BC, Moeller J, Scarmeas N, Zarahn E, Brown T, Stern Y (2004) An event-related fMRI study of the neurobehavioral impact of sleep deprivation on performance of a delayed-match-to-sample task. Cogn Brain Res 18:306–321

Hardin JW, Hilbe JM (2003) Generalized estimating equations. Wiley, New York

Horne JA, Östberg O (1976) A self-assessment questionnaire to determine morningness–eveningness in human circadian rhythms. Int J Chronobiol 4:97–110

Horne JA, Östberg O (1980) Circadian performance differences between morning and evening ‘types’. Ergonomics 23:29–36

Ishigami Y, Klein RM (2010) Repeated measurement of the components of attention using two versions of the Attention Network Test (ANT): stability, isolability, robustness, and reliability. J Neurosci Methods 190:117–128

Jaeger TF (2008) Categorical data analysis: away from ANOVAs (transformation or not) and towards logit mixed models. J Mem Lang 59:434–446

Johns MW (1992) Reliability and factor analysis of the Epworth Sleepiness Scale. Sleep 15:376–381

Johnson MP, Duffy JF, Dijk DJ, Ronda JM, Dyal CM, Czeisler CA (1992) Short-term memory, alertness and performance: a reappraisal of their relationship to body temperature. J Sleep Res 1:24–29

Jones K, Harrison Y (2001) Frontal lobe function, sleep loss and fragmented sleep. Sleep Med Rev 5:463–475

Jugovac D, Cavallero C (2012) Twenty-four hours of total sleep deprivation selectively impairs attentional networks. Exp Psychol 59:115–123

Kerkhof GA (1985) Inter-individual differences in the human circadian system: a review. Biol Psychol 20:83–112

Kerkhof GA, Van Dongen HPA (1996) Morning-type and evening-type individuals differ in the phase position of their endogenous circadian oscillator. Neurosci Lett 218:153–156

Kleitman N, Jackson DP (1950) Body temperature and performance under different routines. J Appl Physiol 3:309–328

Klimesch W (1999) EEG alpha and theta oscillations reflect cognitive and memory performance: a review and analysis. Brain Res Rev 29:169–195

Kräuchi K, Cajochen C, Wirz-Justice A (1997) A relationship between heat loss and sleepiness: effects of postural change and melatonin administration. J Appl Physiol 83:134–139

Lack LC, Bailey M, Lovato N, Wright H (2009) Chronotype differences in circadian rhythms of temperature, melatonin, and sleepiness as measured in a modified constant routine protocol. Nat Sci Sleep 1:1–8

Levandovski R, Sasso E, Hidalgo MP (2013) Chronotype: a review of the advances, limits and applicability of the main instruments used in the literature to assess human phenotype. Trends Psychiatry Psychother 35:3–11

Lim J, Dinges DF (2008) Sleep deprivation and vigilant attention. Molecular and biophysical mechanisms of arousal, alertness, and attention. Ann NY Acad Sci 1129:305–322

Logan GD, Cowan WB (1984) On the ability to inhibit thought and action: a theory of an act of control. Psychol Rev 91:295–327

Lustig C, Hasher L, Zacks RT (2007) Inhibitory deficit theory: recent developments in a “New View”. In: Gorfein DS, MacLeod CM (eds) Inhibition in cognition. American Psychological Association, Washington, pp 145–162

Mann CA, Lubar JF, Zimmerman AW, Miller CA, Muenchen RA (1992) Quantitative analysis of EEG in boys with attention-deficit-hyperactivity disorder: controlled study with clinical implications. Pediatr Neurol 8:30–36

Martella D, Casagrande M, Lupianez J (2011) Alerting, orienting and executive control: the effects of sleep deprivation on attentional networks. Exp Brain Res 210:81–89

Matchock RL, Mordkoff JT (2009) Chronotype and time-of-day influences on the alerting, orienting, and executive components of attention. Exp Brain Res 192:189–198

May CP (1999) Synchrony effects in cognition: the costs and a benefit. Psychon Bull Rev 6:142–147

May CP, Hasher L (1998) Synchrony effects in inhibitory control over thought and action. J Exp Psychol Hum Percept Perform 24:363–379

May CP, Hasher L, Stoltzfus ER (1993) Optimal time of day and the magnitude of age differences in memory. Psychol Sci 4:326–330

Mollicone DJ, Van Dongen HP, Rogers NL, Dinges DF (2008) Response surface mapping of neurobehavioral performance: testing the feasibility of split sleep schedules for space operations. Acta Astronaut 63:833–840

Mollicone DJ, Van Dongen H, Rogers NL, Banks S, Dinges DF (2010) Time of day effects on neurobehavioral performance during chronic sleep restriction. Aviat Space Environ Med 81:735–744

Mongrain V, Dumont M (2007) Increased homeostatic response to behavioral sleep fragmentation in morning types compared to evening types. Sleep 30:773–780

Mongrain V, Carrier J, Dumont M (2005) Chronotype and sex effects on sleep architecture and quantitative sleep EEG in healthy young adults. Sleep 28:819–827

Mongrain V, Carrier J, Dumont M (2006) Difference in sleep regulation between morning and evening circadian types as indexed by antero-posterior analyses of the sleep EEG. Eur J Neurosci 23:497–504

Mongrain V, Noujaim J, Blais H, Dumont M (2008) Daytime vigilance in chronotypes: diurnal variations and effects of behavioral sleep fragmentation. Behav Brain Res 190:105–111

Mongrain V, Carrier J, Paquet J, Belanger-Nelson E, Dumont M (2011) Morning and evening-type differences in slow waves during NREM sleep reveal both trait and state-dependent phenotypes. PLoS ONE 6:e22679

Monk TH, Buysse DJ, Reynolds CF 3rd, Berga SL, Jarrett DB, Begley AE, Kupfer DJ (1997) Circadian rhythms in human performance and mood under constant conditions. J Sleep Res 6:9–18

Muto V et al (2012) Influence of acute sleep loss on the neural correlates of alerting, orientating and executive attention components. J Sleep Res 21:648–658

Natale V, Alzani A, Cicogna P (2003) Cognitive efficiency and circadian typologies: a diurnal study. Pers Individ Dif 35:1089–1105

Paz A, Berry E (1997) Effect of meal composition on alertness and performance of hospital night-shift workers. Ann Nutr Metab 41:291–298

Petersen SE, Posner MI (2012) The attention system of the human brain: 20 years after. Annu Rev Neurosci 35:73–89

Roberts KL, Hall DA (2008) Examining a supramodal network for conflict processing: a systematic review and novel functional magnetic resonance imaging data for related visual and auditory Stroop tasks. J Cogn Neurosci 20:1063–1078

Roca J, Fuentes LJ, Marotta A, Lopez-Ramon MF, Castro C, Lupianez J, Martella D (2012) The effects of sleep deprivation on the attentional functions and vigilance. Acta Psychol 140:164–176

Schmidt C et al (2012) Circadian preference modulates the neural substrate of conflict processing across the day. PLoS ONE 7:e29658

Song J, Stough C (2000) The relationship between morningness–eveningness, time-of-day, speed of information processing, and intelligence. Pers Individ Dif 29:1179–1190

Taillard J, Philip P, Coste O, Sagaspe P, Bioulac B (2003) The circadian and homeostatic modulation of sleep pressure during wakefulness differs between morning and evening chronotypes. J Sleep Res 12:275–282

Taillard J, Philip P, Claustrat B, Capelli A, Coste O, Chaumet G, Sagaspe P (2011) Time course of neurobehavioral alertness during extended wakefulness in morning- and evening-type healthy sleepers. Chronobiol Int 28:520–527

Trujillo LT, Kornguth S, Schnyer DM (2009) An ERP examination of the different effects of sleep deprivation on exogenously cued and endogenously cued attention. Sleep 32:1285–1297

Valdez P, Ramírez C, García A (2012) Circadian rhythms in cognitive performance: implications for neuropsychological assessment. Chronophysiol Ther 2:81–92

Van Dongen HPA, Maislin G, Mullington JM, Dinges DF (2003) The cumulative cost of additional wakefulness: dose-response effects on neurobehavioral functions and sleep physiology from chronic sleep restriction and total sleep deprivation. Sleep 26:117–126

Vandewalle G et al (2009) Functional magnetic resonance imaging-assessed brain responses during an executive task depend on interaction of sleep homeostasis, circadian phase, and PER3 genotype. J Neurosci 29:7948–7956

Waterhouse J et al (2001) Temperature profiles, and the effect of sleep on them, in relation to morningness–eveningness in healthy female subjects. Chronobiol Int 18:227–247

Waterhouse J et al (2005) The circadian rhythm of core temperature: origin and some implications for exercise performance. Chronobiol Int 22:207–225

Wieth MB, Zacks RT (2011) Time of day effects on problem solving: when the non-optimal is optimal. Think Reason 17:387–401

Wittmann M, Dinich J, Merrow M, Roenneberg T (2006) Social jetlag: misalignment of biological and social time. Chronobiol Int 23:497–509

Zhou X, Ferguson SA, Matthews RW, Sargent C, Darwent D, Kennaway DJ, Roach GD (2011) Sleep, wake and phase dependent changes in neurobehavioral function under forced desynchrony. Sleep 34:931–941

Acknowledgments

This study was supported by the Department of Psychology, Northumbria University, and the Russian Academic Excellence Project “5-100”. The authors declare no conflicts of interest. We would like to thank Katarzyna Maczewska for her assistance with data collection and Professor Michael Posner for his comments on an earlier draft of the manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Barclay, N.L., Myachykov, A. Sustained wakefulness and visual attention: moderation by chronotype. Exp Brain Res 235, 57–68 (2017). https://doi.org/10.1007/s00221-016-4772-8

DOI: https://doi.org/10.1007/s00221-016-4772-8