Abstract

Digital documentation of cultural heritage requires high-quality spatial and color information. Although the accuracy of 3D data is already sufficiently high for many applications, the color representation of surfaces remains unsatisfactory. In this paper we describe issues encountered during 3D and color digitization based on a real-world case study. We focus on the documentation of the King’s Chinese Cabinet at Wilanów Palace (Warsaw, Poland). We show the scale of the undertaking and enumerate problems related to high-resolution 3D scanning and reconstruction of the surface appearance despite object gloss, uneven illumination, a limited field of view and the use of multiple light sources and detectors. Our findings demonstrate the complexity of cultural heritage digitization, justify an individual approach in each case and provide guidelines for future applications.

1 Introduction

The technology of 3D digital documentation of shape is widely adopted in the field of cultural heritage digitization [1, 2]. As these solutions mature, they become more readily available and offer better resolution and accuracy. However, these techniques put emphasis on the surface shape and either do not focus on the accuracy of the color reproduction or neglect it altogether. Independently, state-of-the-art multispectral and color digitization solutions exist and are also utilized in cultural heritage documentation [3, 4]. They are sometimes combined with 3D imaging in order to provide a full appearance model of a documented artifact [5, 6]. An important factor in capturing the color of an imaged surface is its glossiness. Specular highlights affect the appearance and distort color information. Many specularity removal methods are described by Artusi et al. [7].

The presented work focuses on combining 3D surface digitization with color calibration and highlight removal in order to create a digital documentation of the King’s Chinese Cabinet at Wilanów Palace (Warsaw, Poland).

2 Investigated Object

The King’s Chinese Cabinet is a unique example of 18th-century interior decorative art. The wooden panels were made using the European lacquer technique. The work is attributed to the famous German craftsman Martin Schnell and his workshop [8]. The original color scheme of the cabinet was very different from its state in 2009, when the very difficult decision was made to remove secondary coatings and reveal the interior’s original surface. The conservation works were finished in 2012. The restored surface is diverse, containing lacquered matte and glossy fragments of different colors and textures (Fig. 1a). These variations make the digitization process challenging and necessitate sophisticated acquisition techniques.

Two scans of the whole chamber’s surface were made with a resolution of 100 points per square millimeter. The first measurement took place in 2009, before the conservation work began. The second measurement was performed in 2015 [9]. The presented research is based on data collected during the second digital documentation session.

3 Measurement Setup

The measurement setup designed for the documentation is a dedicated device capable of simultaneous acquisition of 3D geometry and color. The main component of the setup is a structured light projection 3D scanner mounted on an industrial robot arm. Additionally, the robot is fixed on a vertical translation stage which also supports four matrix LED illuminators (Fig. 1a). In each position two series of images are captured. The first one collects data necessary for point cloud reconstruction, whereas the second series of images uses LED sources to capture texture data for color reconstruction. Because the same cameras are used for both sequences no additional texture mapping is necessary and each point in the resulting cloud features calibrated color information.

3.1 Structured Light Projection System

The structured light projection setup utilizes the well-known sine fringe projection technique and Temporal Phase Shifting with Gray codes for unwrapped phase retrieval [10]. It consists of a DLP LED projector and two 9 Mpix color CCD cameras mounted symmetrically on both sides of the projector (Fig. 1b). Both cameras capture the same area of approximately 300 \(\times \) 200 mm, which gives a scanning resolution of 100 points per mm\(^2\). The geometrical layout of the detectors is dictated by the highly reflective properties of the scanned surface: it was important to capture the surface from two different directions in order to suppress specular highlights conveniently.
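As an illustration of the Temporal Phase Shifting step, the sketch below recovers the wrapped phase from N equally shifted sine fringe images. The number of shifts, the fringe model \(I_k = A + B\cos (\varphi + 2\pi k/N)\) and the function name `wrapped_phase` are assumptions made for this example; the source does not give these details, and the Gray-code unwrapping is not shown.

```python
import numpy as np

def wrapped_phase(images):
    """Wrapped phase from N >= 3 fringe images I_k = A + B*cos(phi + 2*pi*k/N).

    images: array of shape (N, H, W); returns phase in (-pi, pi], shape (H, W).
    """
    images = np.asarray(images, dtype=float)
    n = images.shape[0]
    shifts = 2 * np.pi * np.arange(n) / n
    # For equally spaced shifts:
    #   sum_k I_k * sin(shift_k) = -(N/2) * B * sin(phi)
    #   sum_k I_k * cos(shift_k) =  (N/2) * B * cos(phi)
    s = np.tensordot(np.sin(shifts), images, axes=1)
    c = np.tensordot(np.cos(shifts), images, axes=1)
    return np.arctan2(-s, c)
```

The background term A and the modulation B cancel out in the ratio, which is why the phase can be retrieved per pixel without knowing the local surface reflectance.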

3.2 Illumination

Ideally, the illumination used for color acquisition should be diffuse, geometrically fixed and have a high Color Rendering Index (CRI). However, due to specific object properties, none of these requirements could be fully met in practice. Because the task involved documentation of the whole room, including the ceiling, it was not possible to provide fixed illumination without occlusions caused by the measurement setup itself. Moreover, the light sources had to tolerate frequent switching, because for each scanning direction the structured light projection sequence had to be performed alternately with the color acquisition. Therefore, the use of high-CRI tungsten lamps was not possible. Ultimately, a set of photographic matrix LED illuminators was used.

The remaining problem was the distribution of the light sources. Ideally, a set of point light sources distributed over a wide range of angles around the measured surface would be preferred. This way a model of the bidirectional reflectance distribution function (BRDF) could be estimated for each captured point. However, this was not feasible in practice due to scanning time constraints and the amount of required raw data.

Using a single directional light source leads to specular highlights which are difficult to compensate for. Therefore, the decision was made to use six independent lamps, switched on one by one in order to capture a series of texture images. Two sources were mounted on the measurement head, near the cameras, to provide illumination from a direction close to the observation direction. The four remaining sources were fixed to the robot mount in a rectangular grid. They provide the same illumination direction regardless of the robot’s position (Fig. 1). The aim was to capture a colorimetrically accurate texture of the scanned surface without modeling the full BRDF response. The simplified distribution of light sources was therefore a compromise between appearance accuracy and real measurement conditions.

3.3 Automation of Scanning

The task of scanning the whole room with an average resolution of 100 \(\text {points}/\text {mm}^2\) required automation of the acquisition process in order to collect all data in a reasonable time. To achieve this, the robot was programmed to move along a path which spanned a grid of 25 scanning positions, distributed on a \(5\times 5\) square. Each such sequence of measurements was performed automatically. An additional advantage of automated positioning was that the 3D scanner could use the robot coordinate frame, so that point clouds within a sequence are mutually aligned and represent a consistent fragment of the wall. These patches, each consisting of 50 clouds (25 positions for each of the two cameras), were later aligned with one another using an ICP algorithm.
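The use of the robot coordinate frame can be sketched as a rigid transformation applied to every cloud in a sequence. The 4 \(\times \) 4 homogeneous pose matrix and the function name `to_robot_frame` are hypothetical; the source does not describe how poses are represented or exported by the robot controller.

```python
import numpy as np

def to_robot_frame(points, pose):
    """Map an (n, 3) point cloud from scanner to robot coordinates.

    pose: hypothetical 4x4 homogeneous matrix (rotation and translation)
    reported for the given scanning position.
    """
    points = np.asarray(points, dtype=float)
    pose = np.asarray(pose, dtype=float)
    rotation, translation = pose[:3, :3], pose[:3, 3]
    # Row-vector convention: p' = R p + t for every point p.
    return points @ rotation.T + translation
```

Clouds transformed this way share one frame and can simply be concatenated into a patch; only the patches themselves then need ICP refinement against each other.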

4 Color Correction Method

The aim of the color correction is to obtain, for each cloud, a trichromatic (RGB) texture free of specular highlights and uneven illumination. Additionally, differences in the intensity levels of color components in areas where clouds overlap should be minimized.

The proposed correction follows a two-step approach. The first objective is to correct the texture images colorimetrically, so that differences between light sources are eliminated. An additional advantage of this procedure is that color information is obtained for each point in the device-independent color spaces CIE XYZ and CIE Lab. This allows for future color comparison and color difference calculation. The second objective is to make the illumination uniform and eliminate highlights. This procedure corrects the overall model appearance and retrieves color information in places where reflections occurred.

4.1 Colorimetric Calibration

Although the light sources used come from the same manufacturer, they exhibit slight variations in perceived color temperature. This makes it impossible to adjust the cameras’ white balance to fit all six sources, so raw texture images show different color casts. Consequently, a linear model transforming raw camera RGB values to CIE XYZ components had to be found for each camera-source pair. Such a procedure was described earlier by Hardeberg [11].

The model relies on a linear relationship between raw camera signal in three channels (red, green and blue) and the CIE XYZ coordinates, as in Eq. 1.

This is justified by the fact that the CIE XYZ coordinates are defined as a linear function of the spectral response, with color matching functions as parameters [12]. The assumption holds as long as the camera intensity response is linear, i.e. no gamma correction is applied to the input signal.

The model can be easily established if both the camera RGB and the reference CIE XYZ values are known for a set of color samples (Fig. 2). Let \(R[n \times 3]\) be the reference CIE XYZ coordinates and \(Q[n \times 3]\) the RGB response of n samples. The model can then be found by inverting the response matrix with a pseudo-inverse method.
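The equations referred to in the text are not reproduced here. Under the definitions above, and assuming that samples are stored as rows and that the \(3 \times 3\) model matrix is denoted M (a symbol introduced only for this sketch, as are the subscripted camera channels), a consistent form would be:

\[
\begin{bmatrix} X & Y & Z \end{bmatrix} = \begin{bmatrix} R_{\text{cam}} & G_{\text{cam}} & B_{\text{cam}} \end{bmatrix} M, \qquad R = Q\,M, \qquad M = Q^{+} R = \left( Q^{T} Q \right)^{-1} Q^{T} R
\]

Here \(Q^{+}\) is the Moore-Penrose pseudo-inverse of Q. The last equality holds when Q has full column rank, which requires at least three linearly independent color samples; with more samples the solution is the least-squares fit.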

Here we use an X-Rite ColorChecker chart as the source of calibration color samples. The response matrix Q is built from the average captured RGB values of each patch of the color target. The calibration procedure is performed for each camera and light source combination, yielding 12 model matrices (2 cameras \(\times \) 6 sources), which are applied to the corresponding texture images. Finally, the CIE Lab color representation is calculated for each texture image from each light source.
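The calibration procedure described above can be sketched as follows, assuming row-wise sample matrices and a D50 reference white for the CIE Lab conversion. The function names, the white point and the row-vector convention are assumptions of this example, not details given in the source.

```python
import numpy as np

def fit_color_model(rgb, xyz):
    """Least-squares 3x3 matrix M such that rgb @ M approximates xyz.

    rgb: (n, 3) averaged raw camera responses of the chart patches.
    xyz: (n, 3) reference CIE XYZ values of the same patches.
    """
    return np.linalg.pinv(np.asarray(rgb, dtype=float)) @ np.asarray(xyz, dtype=float)

def xyz_to_lab(xyz, white=(0.9642, 1.0, 0.8249)):
    """CIE XYZ -> CIE Lab; the D50 white point is an assumption of this sketch."""
    t = np.asarray(xyz, dtype=float) / np.asarray(white, dtype=float)
    delta = 6 / 29
    # Cube root above the threshold, linear segment below it (CIE definition).
    f = np.where(t > delta**3, np.cbrt(t), t / (3 * delta**2) + 4 / 29)
    lightness = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([lightness, a, b], axis=-1)
```

One matrix would be fitted per camera and light source pair. A linear raw texture of shape (H, W, 3) could then be converted with `xyz_to_lab(texture @ M)`.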

The accuracy of color calibration was evaluated in two ways. In the first approach, the absolute one, the \(\varDelta E_{00}\) was calculated for each patch of the ColorChecker chart and for each calibration model, relative to the ground truth reference CIE Lab values of the color patches. Average \(\varDelta E\) values are presented in Table 1.
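For reference, the \(\varDelta E_{00}\) measure used in this evaluation is the CIEDE2000 color difference. A minimal scalar sketch is given below, assuming unit parametric factors (\(k_L = k_C = k_H = 1\)); the function name `delta_e_00` is introduced for this example only.

```python
import math

def delta_e_00(lab1, lab2):
    """CIEDE2000 colour difference between two CIE Lab triplets (kL=kC=kH=1)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    g = 0.5 * (1 - math.sqrt(c_bar**7 / (c_bar**7 + 25**7)))
    a1p, a2p = (1 + g) * a1, (1 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360
    h2p = math.degrees(math.atan2(b2, a2p)) % 360

    d_l, d_c = L2 - L1, c2p - c1p
    d_h = 0.0
    if c1p * c2p != 0:
        d_h = h2p - h1p
        if d_h > 180:
            d_h -= 360
        elif d_h < -180:
            d_h += 360
    d_hh = 2 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_h) / 2)

    l_bar, c_barp = (L1 + L2) / 2, (c1p + c2p) / 2
    if c1p * c2p == 0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        h_bar = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        h_bar = (h1p + h2p + 360) / 2
    else:
        h_bar = (h1p + h2p - 360) / 2

    t = (1 - 0.17 * math.cos(math.radians(h_bar - 30))
         + 0.24 * math.cos(math.radians(2 * h_bar))
         + 0.32 * math.cos(math.radians(3 * h_bar + 6))
         - 0.20 * math.cos(math.radians(4 * h_bar - 63)))
    s_l = 1 + 0.015 * (l_bar - 50) ** 2 / math.sqrt(20 + (l_bar - 50) ** 2)
    s_c = 1 + 0.045 * c_barp
    s_h = 1 + 0.015 * c_barp * t
    d_theta = 30 * math.exp(-(((h_bar - 275) / 25) ** 2))
    r_c = 2 * math.sqrt(c_barp**7 / (c_barp**7 + 25**7))
    r_t = -math.sin(math.radians(2 * d_theta)) * r_c
    return math.sqrt((d_l / s_l) ** 2 + (d_c / s_c) ** 2 + (d_hh / s_h) ** 2
                     + r_t * (d_c / s_c) * (d_hh / s_h))
```

Averaging this value over all chart patches gives one number per calibration model for the absolute evaluation; the same function applied between pairs of calibrated models would give a relative comparison.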

The second, relative accuracy evaluation is considered more important because it shows differences remaining between the calibration models. Accordingly, it directly influences visible color mismatch on overlapping point clouds. In this case average \(\varDelta E_{00}\) from all color patches was calculated between all combinations of the calibration models. Its distribution is shown in Fig. 3. The upper triangle of the table shows \(\varDelta E_{00}\) calculated from the raw data, before calibration, whereas the lower triangle illustrates the outcome after calibration.

Most of the calibrated \(\varDelta E_{00}\) values stay in the range (1.00; 3.00), which indicates a small color difference, hardly noticeable to an average observer. They are also significantly lower than the initial values obtained before color calibration. Moreover, the relative differences are generally smaller than those calculated with respect to the ground-truth CIE Lab values of the ColorChecker chart. This indicates that, although the color calibration procedure has limited absolute accuracy, it is sufficient to suppress relative differences between illumination conditions, which fulfills the first color correction objective.

4.2 Illumination Non-uniformity Correction and Highlights Suppression

The goal of the second correction step is to merge data acquired with individual lights in a way that compensates for the unwanted effects of highlights, sharp shadows and uneven lighting. This issue has been widely studied, both for 2D images and for final 3D models. Methods for removing artifacts from 2D images, especially highlights, can be roughly classified into two main categories: those working on a single image, which are mainly based on analysis of the image colors, and those using a set of images [7]. Since our system acquires 6 additional images with every scan, the data redundancy required by multi-image algorithms is guaranteed. 3D-space methods are more robust but also more complex, i.e. they require geometry analysis of the measured object or additional calibration of the light positions [13, 14].

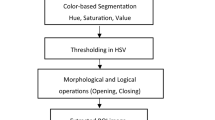

In the discussed solution, two important requirements guided the choice of method. Firstly, with more than 10 thousand separate scans to process, we were looking for the fastest possible method. With complex post-processing algorithms, the computation time could become prohibitively long. Secondly, the data must meet the expectations of art conservators. Therefore, any estimation or averaging of data from the surroundings should be avoided. Consequently, a simplified version of the multi-flash algorithm described in [15] was used. After colorimetric correction, CIE XYZ values are converted to the CIE Lab color space and split into separate channels. Lightness and the a, b color components are analyzed separately. To preserve the documentary value of the scans, all calculations are performed per pixel (Fig. 2).

For each pixel, information from the 6 input textures, each acquired with a different light, is taken into consideration. Lightness is calculated as the mean value after rejecting the maximum and minimum outliers, which correspond to specular highlights and shadows. Thanks to the color correction performed in the first step, the between-texture deviation of the a and b color components is low, and the final a and b are calculated as the median of the input values. Finally, the modified L, a and b textures are stacked together and converted to the sRGB color space for visualization purposes, and the color information is transferred from each pixel to the corresponding vertex of the point cloud. Figure 4 shows the outcome of the texture correction procedure and the final 3D model.
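The per-pixel merging described above can be sketched as follows. The source does not state how many extreme samples are rejected, so this example assumes that exactly one maximum and one minimum lightness value are dropped per pixel; the function name `merge_textures` is likewise an assumption.

```python
import numpy as np

def merge_textures(lab_stack):
    """Merge an (n, H, W, 3) stack of CIE Lab textures per pixel (n >= 3)."""
    lab_stack = np.asarray(lab_stack, dtype=float)
    # Sort lightness along the light-source axis, independently for each pixel.
    lightness = np.sort(lab_stack[..., 0], axis=0)
    # Drop the brightest (highlight) and darkest (shadow) sample, then average.
    merged_l = lightness[1:-1].mean(axis=0)
    # a and b: per-pixel median over the light sources.
    merged_ab = np.median(lab_stack[..., 1:], axis=0)
    return np.concatenate([merged_l[..., None], merged_ab], axis=-1)
```

Because every output pixel depends only on the samples of that same pixel, no information is borrowed from neighboring pixels, which matches the requirement of preserving documentary value.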

Fig. 4. Exemplary textures: (a, b) input CIE XYZ data from 2 different light sources; (c) final visualization of the L channel; (d) final visualization of CIE Lab channels; (e) final color-corrected 3D model of the room corner; (f, g) wood panel fragment close-up artificially illuminated from two different directions. (Color figure online)

As a final step, between-cloud averaging or segmentation and smoothing within specific segments can be performed. Each cloud is divided into segments based on the hue of its points. Afterward, a small neighborhood of each point is found among the overlapping clouds, and the final color value is calculated as the average of the colors of points from that neighborhood. Such a procedure decreases color noise, removes local texture non-uniformity and generally improves the final visual effect by guaranteeing smooth blending between clouds. Unfortunately, neighborhood averaging makes the data unsuitable for documentation and archiving purposes, where reliable data without any misrepresentation are expected.
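A brute-force sketch of this optional blending step is shown below. It assumes that the hue-based segment labels are already available, that averaging is restricted to neighbors sharing a point's segment, and that the neighborhood is a fixed-radius ball; none of these details is specified in the source, and the function name `blend_colors` is hypothetical.

```python
import numpy as np

def blend_colors(points, colors, segments, radius):
    """Average each point's color over same-segment neighbours within `radius`.

    points:   (n, 3) positions gathered from all overlapping clouds.
    colors:   (n, 3) color values of these points.
    segments: (n,) integer hue-segment labels (segmentation itself not shown).
    Brute force, O(n^2) memory and time; real data would need a spatial index.
    """
    points = np.asarray(points, dtype=float)
    colors = np.asarray(colors, dtype=float)
    segments = np.asarray(segments)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    mask = (dist <= radius) & (segments[:, None] == segments[None, :])
    # Every point is its own neighbour, so each row sum is at least 1.
    weights = mask / mask.sum(axis=1, keepdims=True)
    return weights @ colors
```

The output is intended for visualization only, since each color then mixes measurements from several points.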

5 Conclusion

We presented a robust color correction method for 3D documentation based on an exemplary cultural heritage object. The color calibration and highlight removal steps allow for faithful texture reconstruction despite the glossiness of the scanned surface and non-uniform illumination conditions. With per-point calculations, the results remain trustworthy and satisfactory for art conservators.

The scale of the project led to compromises regarding the distribution of the light sources. Mounting light sources both on the 3D scanner and on the robot mount may affect the illumination uniformity for different scanning directions. Additionally, the highlight removal method does not take advantage of the 3D geometry of the object because of the computational complexity for such a large amount of captured raw data. Nevertheless, the collected information is sufficient to develop more sophisticated data processing methods in future research.

References

1. Karaszewski, M., Sitnik, R., Bunsch, E.: On-line, collision-free positioning of a scanner during fully automated three-dimensional measurement of cultural heritage objects. Robot. Auton. Syst. 60(9), 1205–1219 (2012)

2. Pieraccini, M., Guidi, G., Atzeni, C.: 3D digitizing of cultural heritage. J. Cult. Herit. 2(1), 63–70 (2001)

3. Berns, R., Imai, F., Burns, P., Tzeng, D.Y.: Multi-spectral-based color reproduction research at the Munsell Color Science Laboratory. In: Proceedings of SPIE, Electronic Imaging: Processing, Printing, and Publishing in Color, Citeseer, pp. 14–25 (1998)

4. Cosentino, A.: Identification of pigments by multispectral imaging: a flowchart method. Herit. Sci. 2(1), 8 (2014)

5. Simon Chane, C., Schütze, R., Boochs, F., Marzani, F.S.: Registration of 3D and multispectral data for the study of cultural heritage surfaces. Sensors 13, 1004–1020 (2013). (Basel, Switzerland)

6. Sitnik, R., Krzesłowski, J., Mączkowski, G.: Processing paths from integrating multimodal 3D measurement of shape, color and BRDF. Int. J. Herit. Digit. Era 1, 25–44 (2012)

7. Artusi, A., Banterle, F., Chetverikov, D.: A survey of specularity removal methods. Comput. Graph. Forum 30(8), 2208–2230 (2011)

8. Kopplin, M., Kwiatkowska, A.: Dresdener Lackkunst in Schloss Wilanów, Münster (2005)

9. Bunsch, E., Sitnik, R., Hołowko, E., Karaszewski, M., Lech, K., Załuski, W.: In search for the perfect method of 3D documentation of cultural heritage. In: EVA Berlin, pp. 72–81 (2015)

10. Osten, W., Nadeborn, W., Andrae, P.: General hierarchical approach in absolute phase measurement. In: Proceedings of SPIE, Laser Interferometry VIII: Techniques and Analysis, vol. 2860, pp. 2–13 (1996)

11. Hardeberg, J.: Acquisition and reproduction of color images: colorimetric and multispectral approaches. Ph.D. thesis, École Nationale Supérieure des Télécommunications (2001)

12. Wyszecki, G., Stiles, W.S.: Color Science: Concepts and Methods, Quantitative Data and Formulae, pp. 4–30, 117–118, 249–253. Wiley, New York (2000)

13. Callieri, M., Cignoni, P., Corsini, M., Scopigno, R.: Mapping dense photographic data set on high-resolution sampled 3D models. Comput. Graph. 32, 464–473 (2008)

14. Dellepiane, M., Callieri, M., Corsini, M., Cignoni, P., Scopigno, R.: Artifacts removal for color projection on 3D models using flash light. In: Proceedings of the 10th International Conference on Virtual Reality, Archaeology and Cultural Heritage, pp. 77–84 (2009)

15. Feris, R., Raskar, R., Tan, K.H., Turk, M.: Specular reflection reduction with multi-flash imaging. In: IEEE 17th Brazilian Symposium on Computer Graphics and Image Processing, pp. 316–321 (2004)

Acknowledgments

This work has been supported by the project “Revitalization and digitalization of Wilanów, the only Baroque royal residence in Poland” co-financed by the European Union within the European Regional Development Fund under the Infrastructure and Environment 2007–2013 Operational Programme.

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Lech, K., Mączkowski, G., Bunsch, E. (2016). Color Correction in 3D Digital Documentation: Case Study. In: Mansouri, A., Nouboud, F., Chalifour, A., Mammass, D., Meunier, J., Elmoataz, A. (eds) Image and Signal Processing. ICISP 2016. Lecture Notes in Computer Science(), vol 9680. Springer, Cham. https://doi.org/10.1007/978-3-319-33618-3_39

DOI: https://doi.org/10.1007/978-3-319-33618-3_39

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-33617-6

Online ISBN: 978-3-319-33618-3

eBook Packages: Computer Science, Computer Science (R0)