Abstract

The Situated approach to Situation Awareness (SA) holds that when the immediate task environment is present, an operator will form partial internal representations of a situation and offload detailed information to the environment to access later, as needed. In the context of air traffic control (ATC), Situated SA states that operators store general features of the airspace internally, along with high priority information, and offload specific and low priority information. The following describes a method for testing these claims that combines the Situation Present Assessment Method (SPAM) with a web camera used to record eye movements toward the radar display while probe questions are presented during a simulated air traffic control task. In the present study, probe queries address information specificity and information priority. Images from queries that are answered correctly are coded for total number of glances and total glance duration. We argue that this technique is reliable for determining whether information is stored internally or offloaded by operators.

1 Introduction

Situation awareness (SA) is a major topic in the human factors community. Although many theories claim that operators store very detailed mental models of a situation internally, a new approach called Situated SA holds that operators store very limited representations, offloading much of the information to the environment and accessing it only when needed [1–3]. In what follows, we outline a method for studying Situated SA in the context of air traffic control (ATC) operations and present some data on its reliability.

The Situated approach to SA is partly motivated by perceptual research on change blindness, where simple changes made to a scene are hard to detect unless they are explicitly the focus of attention [4]. What this research shows is that people do not store internally a complete detailed model of their perceptual world. Instead they represent general scene schemata, consisting of an inventory of objects likely to be in a scene and the location of those objects [4]. People then use this information to guide their fixations when they are in need of more detailed information.

The Situated approach holds that interactions between the operators and their immediate task environment are crucial for maintaining SA [2, 3]. In particular, operators store internally some information and offload that which can be accessed with little effort from the environment. They form partial internal representations of a situation, which allows them to free up their working memory, whose resources can be devoted to other tasks. The Situated approach is consistent with recent studies on Google effects on memory that show people are less likely to be able to recall information if they believe they will be able to access it from a computer later on [5].

In the context of ATC operations, the Situated approach hypothesizes that controllers do not store internally a complete picture of the traffic patterns in the sector they are managing. Instead, they are likely to store internally information about general characteristics of their airspace, as well as high priority information – information that they will need to act on presently, like whether two aircraft are currently in conflicting paths. They are likely to offload specific information and low priority information onto their displays including, for example, aircraft call signs and whether two aircraft will be in conflict in five minutes or more.

In this paper we present a method for testing these claims of the Situated approach, one that we believe can be generalized to other operational contexts. The method uses the Situation Present Assessment Method (SPAM), an online probe technique for measuring Situated SA [6, 7]. Unlike other SA measurement techniques [1], SPAM queries participants while they are still engaged in a task, granting them access to their displays while they are responding to probe queries [6, 7]. Participants are first presented with a ready prompt, as well as an acoustic tone to announce the arrival of the new query. If workload permits, participants respond to the ready prompt and a probe query is presented. When answering queries, participants can either use information represented internally, or they can access offloaded information from their display.

SPAM uses the reaction time (RT) between the presentation of the probe query and the onset of the participant’s response (i.e., the “probe latency”) to measure SA. SPAM assumes that fast probe latencies indicate good SA, with information being stored internally. Slightly slower probe latencies still indicate good SA, but it is assumed that information is being retrieved from the environment. Thus, if the location of the information in the environment is known, latencies will be longer than if the information is represented internally. However, when the location of the information is not known, probe latencies are assumed to be the slowest and participants are said to have poor SA, as they must then engage in a serial search of the display for the information.

Our method supplements SPAM with a measure of participant eye glances, which allows us to determine whether lengthy SPAM reaction times really do reflect where operators store information. A web camera is used to capture images of participants while they respond to probe queries. This allows us to determine what information was retrieved internally and what was accessed from an external display. Web cameras were judged to be more cost-effective than dedicated eye tracking equipment while still providing ample data for a test of the hypotheses outlined by Situated SA. Specifically, for offloaded information, participants should be more likely to turn to the radar display prior to answering probe questions than for information stored internally.

A key aspect of this method is also varying the type of information queried. We varied two categories of information: query specificity (specific vs. general) and query priority (high vs. low). By combining the levels of these categories, four unique types of information can be examined (see Table 1).

2 Method

2.1 Participants

Participants for this study were 17 students enrolled in an aviation sciences program at Mount San Antonio College, studying for careers in air traffic control. Students completed a 16-week ATC radar internship in the Center for Human Factors in Advanced Aeronautics Technologies (CHAAT) at California State University, Long Beach prior to participating in the study. Over the course of the internship, participants trained on the simulation technology, gaining familiarity with the system.

2.2 Apparatus and Scenarios

Participants managed air traffic on a medium fidelity radar display simulated through the Multi Aircraft Control System (MACS) [8]. Participants’ experimental stations consisted of two computer monitors, a keyboard, and a mouse. One monitor served as an ATC radar screen for managing air traffic in simulated Indianapolis Center (ZID-91) airspace, and ran screen capture software for replaying scenarios in later analyses. The second monitor was a touchscreen probe station. It was used to present participants with SA probe queries and to capture pictures, in rapid succession, of participants responding to these queries.

The MACS simulation software provided participants with NextGen tools that enabled the issuing of commands to an aircraft equipped with NextGen communications. Available tools included Datalink communications, trial planner with conflict probe, and conflict alerting. The latter warned participants when two NextGen equipped aircraft were in conflict with one another for a potential loss of separation (LOS; two aircraft within 5 nautical miles horizontally and 1000 ft vertically of one another). The trial planner provided participants with a means of adjusting flight plans for equipped aircraft, and advised participants of potential conflicts before they implemented changes.
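
The loss-of-separation criterion given above can be illustrated with a minimal sketch. The function name, the parameter names, and the use of strict inequalities at the boundary are assumptions made for illustration; none of them is taken from MACS itself.

```python
def is_loss_of_separation(horizontal_nm, vertical_ft,
                          min_horizontal_nm=5.0, min_vertical_ft=1000.0):
    """Return True when two aircraft are inside BOTH separation minima.

    horizontal_nm: lateral distance between the aircraft, in nautical miles.
    vertical_ft:   altitude difference between the aircraft, in feet.
    Boundary handling (strict '<') is an assumption of this sketch.
    """
    return horizontal_nm < min_horizontal_nm and vertical_ft < min_vertical_ft


if __name__ == "__main__":
    # Close laterally and vertically: loss of separation.
    print(is_loss_of_separation(4.0, 500.0))
    # Close laterally but well separated vertically: no loss of separation.
    print(is_loss_of_separation(4.0, 2000.0))
```

Both conditions must hold at once, which is why an aircraft pair that is close laterally but separated by more than 1000 ft vertically does not count as a LOS.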

Participants completed a 10-min training scenario and four 40-min test scenarios of 50 % mixed aircraft equipage; half of the aircraft throughout the scenario were equipped with NextGen technology and half were unequipped. The number of aircraft steadily increased through the first 10 min of each scenario, then remained consistent for the duration of the run. Six planned conflicts were built into each trial scenario.

2.3 Probe Queries

A subject matter expert (a retired air traffic controller with 39 years of air traffic management experience) and a group of 12 ATC students (not part of this study) assisted in the development and classification of all probe queries. The questions were designed to address the different levels of each of the two information types of interest: specificity (general vs. specific) and priority (high vs. low). Question topics included past and present conflicts, aircraft position, altitude, direction of aircraft travel, handoff status, and frequency changes. General questions asked about information such as conflicts, altitudes, position, and direction for any or all aircraft in the sector. Specific questions asked about information that related to a single, specified aircraft. High priority questions were related to events or information that required swift action (within 1 min of probe question presentation) to prevent a LOS, collision, or other incident. Low priority questions were those related to events or information that did not require immediate action (within 3 min of probe question presentation), but could be put off until later without incident.

SA measures were collected using the SPAM probe technique on the touchscreen monitor. Each scenario contained 12 queries: two specific/high priority questions, two general/high priority questions, two specific/low priority questions, two general/low priority questions, and four additional questions that were not relevant to this study. Queries began three minutes into a scenario and were asked once every three minutes after that. Query positions were counterbalanced by question category, as well as for predicted number of “yes” or “no” responses for each category, with four orders of probe queries developed for each of the four scenarios, producing 16 total orders. Aircraft call signs, waypoints, and cardinal directions were modified as questions were applied to each scenario. All questions were used an equal number of times, with no identical or similar questions appearing in the same scenario for a given participant. All relevant questions were accompanied by two response buttons marked “Yes” and “No.” Participants were instructed to answer probe queries as quickly and as accurately as possible.
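
As a quick check on the arithmetic of this design, the nominal query schedule and per-scenario composition can be sketched as follows. The function names and category labels are hypothetical, and the times are nominal only, since in SPAM a query is presented once the participant accepts the ready prompt.

```python
from itertools import product


def probe_times(n_queries=12, first_min=3, interval_min=3):
    """Nominal presentation times (in minutes) of the queries in one scenario."""
    return [first_min + k * interval_min for k in range(n_queries)]


def scenario_composition(per_type=2, fillers=4):
    """Number of queries of each kind in one scenario (labels are illustrative)."""
    counts = {
        f"{specificity}/{priority} priority": per_type
        for specificity, priority in product(("specific", "general"), ("high", "low"))
    }
    counts["not relevant to this study"] = fillers
    return counts


if __name__ == "__main__":
    times = probe_times()
    print(times)                                # one query every 3 min
    print(sum(scenario_composition().values())) # total queries per scenario
```

Under these assumptions the twelfth query falls at 36 min, which fits inside a 40-min scenario, and the four information types plus the four unrelated questions add up to the 12 queries stated above.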

2.4 Image Collection

The touchscreen probe station contained a built-in web camera (see Fig. 1). The SPAM software was programmed to activate the camera in conjunction with the presentation of probe queries. Probe stations were positioned to the right of each ATC radar scope and angled precisely at a 45° clockwise rotation from the radar display (see Fig. 2). This angle allowed for the web cameras to capture participant eye glances between the probe screens and the radar scopes.

The SPAM software was programmed to instruct the camera to take pictures once every 100 ms from the moment the “Ready?” button was pressed. No flash was used, in order to minimize probe intrusiveness. The program saved image files with the time of image capture and organized them for later analyses. These time stamps allowed researchers to determine the total number and duration of eye glances at the ATC display.

2.5 Design

Independent variables included question specificity and priority. Dependent variables included the total number of glances at the ATC scope (a glance was measured as eye fixations on the ATC display for any duration), and total glance duration. They also included reaction time to answer SA probe questions correctly and number of questions answered correctly. The latter two measures are not reported in what follows. Instead, we focus on a reliability analysis for the eye glance data.

2.6 Procedure

Upon arrival, participants were briefed about the simulation and data collection tools, and then signed consent forms. Participants first engaged in a 10-min training scenario to familiarize themselves with MACS. They then completed four 40-min trial scenarios, which had been counterbalanced for scenario number and probe query order. Participants were given a break of at least 10 min after the training scenario and between trials.

2.7 Coding

Following data collection, probe query responses were coded for accuracy. Two raters used ATC radar scope videos and audio communication files to independently code probe query answers for all probe questions. Video and audio files were used to determine what information participants had access to and commands given at the time of probe query presentation. Rater responses were compared and any discrepancies were reviewed by a third rater. Overall, participants responded accurately to 80.5 % of SA queries used in this study. Only data from trials where participants answered correctly were used in the reliability analyses.

To determine whether our method of capturing eye glances by participants is reliable, two raters were used to conduct frame-by-frame analyses of SPAM image files (from runs included in a sample of 25 % of all trials from the study) to determine how many times during a single query response the ATC scope was glanced at and the total duration of eye glances at the ATC radar display. Glance location was determined by examining the participant’s sclera (the white area of the eye). When sclera was visible in relatively equal proportions on either side of the iris, particularly for the eye closest to the probe station, the image was coded as a participant viewing the probe station. When a participant was glancing to the right of the image (toward the location of the ATC display), and sclera was dominant on the left side of the iris in the participant’s right eye, with little to no sclera visible on the right side of the iris, the image was coded as a participant viewing the ATC radar scope. The total number of glances for a single probe query was tallied. Timestamps were collected for the images corresponding to each glance onset to the ATC display and glance end, and the duration of each glance was calculated. Durations of all glances for a single probe query were totaled.
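
The tallying step described above can be sketched in a few lines. This is an illustration only, not the raters' actual procedure: the labels "scope" and "probe", the function name, and the choice to end a glance at the timestamp of the first subsequent frame not coded as "scope" (or at the last frame, if the glance runs to the end of the image set) are all assumptions.

```python
def summarize_glances(frames):
    """Count glances at the ATC scope and total their duration.

    frames: list of (timestamp_ms, label) tuples sorted by time, where
            label is "scope" (looking at the radar) or "probe" (looking
            at the probe station). Labels are hypothetical.
    Returns (number_of_glances, total_duration_ms).
    """
    glances = []
    i, n = 0, len(frames)
    while i < n:
        if frames[i][1] == "scope":
            onset = frames[i][0]
            j = i
            # Advance to the end of this run of consecutive "scope" frames.
            while j < n and frames[j][1] == "scope":
                j += 1
            # Assumed glance end: first non-scope frame, else the last frame.
            end = frames[j][0] if j < n else frames[-1][0]
            glances.append((onset, end))
            i = j
        else:
            i += 1
    total_ms = sum(end - onset for onset, end in glances)
    return len(glances), total_ms


if __name__ == "__main__":
    # Made-up image set sampled every 100 ms, for illustration only.
    labels = ["probe", "scope", "scope", "probe", "probe", "scope", "probe"]
    frames = [(100 * k, label) for k, label in enumerate(labels)]
    print(summarize_glances(frames))
```

With images taken every 100 ms, a different end-of-glance convention shifts each duration by at most one frame interval, which is the same order of discrepancy that raters can produce when they disagree by a single image.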

Any images that did not meet the criteria for eye glances or that appeared to have participants speaking were examined closely to determine the circumstances for the query response. The image sets for these queries were analyzed with the voice communication files to determine if the participant was managing traffic or engaging in some other activity, despite having indicated that he or she was ready to respond to the probe query. Performing activities in conjunction with responding to probe queries would interfere with the SA measurement, thus these queries were excluded from analyses. A total of 92.4 % of the image sets for queries with accurate responses met the criteria to be usable for analyses.

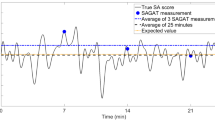

3 Results

Inter-rater reliability for the coding of total number of eye glances was 95 %. Overall, raters were consistent in their assessment of what counted as an eye glance. Total glance duration was more difficult to time precisely, as a rater could easily be off by one or two SPAM image files, and the program occasionally duplicated an image. The inter-rater reliability for the exact times was low (.14), but the average difference in total eye glance duration between the two raters was .57 s without removing any outliers. Once outliers were removed (3 outliers out of the 80 coded questions used for testing rater reliability), the average difference dropped to .29 s. Compared with the average total eye glance durations themselves, these differences are small. Average total eye glance durations for questions used for analyses ranged from 2.81 to 5.79 s, depending on information type (i.e., general/low priority, general/high priority, specific/low priority, and specific/high priority), with standard errors ranging from .32 s to .58 s. With outliers removed, the inter-rater difference in total eye glance duration (.29 s) was therefore lower than the smallest standard error for a single information type (.32 s).
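
The two kinds of agreement figures discussed here can be computed as in the following sketch. The text does not state how the 95 % figure was calculated, so simple percent agreement is an assumption, as are the function names; the ratings in the example are made-up values for illustration, not data from the study.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of items on which two raters gave identical codes."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


def mean_abs_difference(rater_a, rater_b):
    """Average absolute difference between two raters' timings (same units)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(abs(a - b) for a, b in zip(rater_a, rater_b)) / len(rater_a)


if __name__ == "__main__":
    # Hypothetical glance counts and total glance times (s) for a few queries.
    counts_a, counts_b = [1, 2, 3, 2], [1, 2, 3, 1]
    seconds_a, seconds_b = [2.0, 3.5, 4.0], [2.5, 3.5, 3.0]
    print(percent_agreement(counts_a, counts_b))
    print(mean_abs_difference(seconds_a, seconds_b))
```

Reporting the mean absolute difference alongside a reliability coefficient is useful here because small, frame-level timing disagreements can depress a coefficient computed on exact times while leaving the totals close in absolute terms.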

4 Discussion

Combining the SPAM technique with measures of eye glance frequency and duration provides a reliable, straightforward means of testing implications of a situated approach to SA. Through this method we will be able to examine whether operators store particular SA-related information internally or offload it onto a display, accessing it only as needed. We will analyze the eye glance data to determine whether operators glance at displays more often and for longer when asked about specific information and low priority information than when asked about general and high priority information.

Importantly, we will also be able to validate a key assumption of SPAM, which is that the latency to answer a probe question reflects whether operators are answering based on what they represent internally (shorter latencies) or are accessing information on a display (longer latencies). Although this claim has been made by proponents of SPAM [6, 7], it has not been validated to our knowledge. We will do so by correlating probe latencies with eye glance frequency and duration.
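
A minimal sketch of such a correlation is given below. The text does not specify which coefficient will be used, so Pearson's r is an assumption, as is the function name; the numbers in the example are invented solely to show the call and are not results.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Invented values: probe latency (s) and total glance duration (s) per query.
    probe_latency = [3.1, 4.0, 5.2, 6.8, 7.5]
    glance_duration = [0.0, 0.9, 2.1, 3.0, 4.2]
    print(round(pearson_r(probe_latency, glance_duration), 3))
```

If the SPAM assumption holds, queries answered after longer looks at the radar display should also show longer probe latencies, which would appear as a positive coefficient.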

The method discussed herein is a promising way of addressing broad questions about where operators store SA related information: in internal memory or offloaded onto a display. This can be studied by examining whether or not operators have to look at the primary operational display prior to answering probe questions. However, this eye glance method is likely to be insufficient if more precise details are required as to where on the display they are looking. For example, it would likely be unable to determine whether operators know exactly where on the display to access information or have to look around for it. The number of glances might be telling, but in such circumstances eye tracking technology would likely be most effective, and indeed some research on SA has made use of it [9, 10]. Fortunately, not all questions require that level of precision, because eye tracking technology is generally cumbersome to implement and expensive, and the data obtained are difficult to process. Whenever possible, reliable but low-tech alternatives are desirable.

References

1. Endsley, M.R.: Measurement of situation awareness in dynamic systems. Hum. Factors 37(1), 65–84 (1995)

2. Chiappe, D.L., Strybel, T.Z., Vu, K.P.L.: Mechanisms for the acquisition of situation awareness in situated agents. Theor. Issues Ergon. Sci. 13(6), 625–647 (2012)

3. Chiappe, D.L., Strybel, T.Z., Vu, K.P.L.: A situated approach to the understanding of dynamic situations. J. Cogn. Eng. Decis. Making (in press)

4. Rensink, R.A.: The dynamic representation of scenes. Vis. Cogn. 7(1–3), 17–42 (2000)

5. Sparrow, B., Liu, J., Wegner, D.: Google effects on memory: cognitive consequences of having information at our fingertips. Science 333, 776–778 (2011)

6. Durso, F.T., Dattel, A.R.: SPAM: The real-time assessment of SA. In: Banbury, S., Tremblay, S. (eds.) A cognitive approach to situation awareness: Theory, measures, and application, pp. 137–154. Ashgate, Burlington (2004)

7. Durso, F.T., Truitt, T.R., Hackworth, C.A., Crutchfield, J.M., Nikolic, D., Moertl, P.M., Ohrt, D., Manning, C.A.: Expertise and chess: A pilot study comparing situation awareness methodologies. In: Garland, D.J., Endsley, M.R. (eds.) Experimental Analysis and Measurement of Situation Awareness, pp. 295–303. Embry-Riddle Aeronautical University Press, Daytona Beach (1995)

8. Prevot, T.: Exploring the many perspectives of distributed air traffic management: the multi-aircraft control system MACS. In: Chatty, S., Hansman, J., Boy, G. (eds.) Proceedings of the HCI-Aero 2002, pp. 149–154. AAAI Press, Menlo Park (2002)

9. Hauland, G.: Measuring individual and team situation awareness during planning tasks in training of en route air traffic control. Int. J. Aviat. Psychol. 18(3), 290–304 (2008)

10. Moore, K., Gugerty, L.: Development of a novel measure of situation awareness: The case for eye movement analysis. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 54(19), 1650–1654 (2010)

Acknowledgement

This project was supported by NASA cooperative agreement NNX09AU66A, Group 5 University Research Center: Center for Human Factors in Advanced Aeronautics Technologies (Brenda Collins, Technical Monitor).

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Sturre, L., Chiappe, D., Vu, KP.L., Strybel, T.Z. (2015). Using Eye Movements to Test Assumptions of the Situation Present Assessment Method. In: Yamamoto, S. (eds) Human Interface and the Management of Information. Information and Knowledge in Context. HIMI 2015. Lecture Notes in Computer Science(), vol 9173. Springer, Cham. https://doi.org/10.1007/978-3-319-20618-9_5

DOI: https://doi.org/10.1007/978-3-319-20618-9_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20617-2

Online ISBN: 978-3-319-20618-9

eBook Packages: Computer Science (R0)