Abstract

Mean field theory is a device to analyze the collective behavior of a dynamical system comprising many interacting particles. The theory allows to reduce the behavior of the system to the properties of a handful of parameters. In neural circuits, these parameters are typically the firing rates of distinct, homogeneous subgroups of neurons. Knowledge of the firing rates under conditions of interest can reveal essential information on both the dynamics of neural circuits and the way they can subserve brain function. The goal of this chapter is to provide an elementary introduction to the mean field approach for populations of spiking neurons. We introduce the general idea in networks of binary neurons, starting from the most basic results and then generalizing to more relevant situations. This allows to derive the mean field equations in a simplified setting. We then derive the mean field equations for populations of integrate-and-fire neurons. An effort is made to derive the main equations of the theory using only elementary methods from calculus and probability theory. The chapter ends with a discussion of the assumptions of the theory and some of the consequences of violating those assumptions. This discussion includes an introduction to balanced and metastable networks and a brief catalogue of successful applications of the mean field approach to the study of neural circuits.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

- Leaky integrate-and-fire neuron

- Binary neuron

- Logistic neuron

- Neural population

- Neural circuits

- Firing rate

- Asynchronous state

- Bistability

- Multistability

- Metastable dynamics

1 Introduction

The purpose of this chapter is to give an elementary introduction to mean field theory for populations of spiking neurons. Mean field theory is a conceptually simple, but far reaching method developed in physics to explain a wide range of phenomena, most notably to understand the nature of phase transitions (Binney et al., 1992; Le Bellac et al., 2004; Parisi, 1998). At its heart, it consists of neglecting fluctuations in the interaction between the units defining the system, and it can lead to qualitatively correct insights with relatively little effort. For example, a mean field assumption on the energy potential of a non-ideal gas leads quickly to the van der Waals equation of state (Binney et al., 1992). Mean field results also have a weak dependence on the microscopic details of the system, promising to extract general principles that apply to a large class of seemingly unrelated models.

As neural circuits of the brain comprise a large number of interconnected neurons, they are ideally suited to a mean field analysis. Very often, the goal is to capture properties of neural circuits that occur during typical behavior. Typical behaviors pertain to large networks and should not depend on the specific number of neurons (as long as this number is large), or the details about the neuron model, or the precise values of the synaptic weights. For this reason, we are often interested in the properties averaged across the distribution of possible weights and in the limit of infinite network size. Some important properties, such as the existence of a sharp phase transition, are only obtainable in this limit. In neuroscience, phase transitions are related, for example, to the existence of memory phases (Amit, 1989) or to transitions between qualitatively different dynamical regimes (Sanchez-Vives et al., 2017).

In this chapter, we present the main ideas of the theory in a network of simplified neurons with probabilistic spiking. Including a probabilistic element allows to interpret the neural activities as random variables and to articulate the approach in a general language. All the main steps of the approach, together with its neural applications, are already available in this simple system and can be grasped unencumbered by the technical difficulties that arise in networks of spiking neurons. When presenting the theory for integrate-and-fire neurons, an effort is made to eschew those difficulties and rely only on standard calculus and probability theory. The assumptions of the theory, and some possible departures from its predictions, are also discussed. Three important examples, bistable, metastable, and balanced networks, are also briefly considered.

We hope that this chapter will remove a gap in the existing literature by presenting an elementary introduction to the application of mean field theory to networks of spiking neurons.

2 Networks of Binary Neurons

Consider a network of N binary neurons xi ∈{0, 1}, mutually connected by synapses Jij and receiving external input Ii,ext coming from distant neurons in, e.g., different brain areas. The input current to unit i is therefore

where the sum goes over all N neurons j except neuron i itself. We assume that time proceeds in discrete time steps. At each time step, unit xi will emit a spike (xi = 1) if the input current is larger than a threshold θ. For convenience and greater generality, it is best to assume a probabilistic process of spike emission. We assume that neuron i will emit a spike with probability

where β is a parameter that controls the level of stochasticity of the spike emission process. Note that, due to the term Ii,ext − θ in Eq. 6.2, the effect of the thresholds can be included in the external currents. Therefore, in most of the following, we set θ = 0 and keep the external currents constant. The following arguments generalize easily to the case of stochastic external currents.

Since the probability must be bounded by 1, a convenient choice for p(x) is the logistic function,

where \(\mathcal S\) is the logistic function defined by the above equation. Normalization implies p(xi = 0) = 1 − p(xi = 1), and therefore,

We can therefore write p(xi) compactly as

\(\mathcal S(I_i)\) is plotted in Fig. 6.1a for several values of β. Note that when β →∞, we retrieve the deterministic model of spike emission (see Fig. 6.1a, dotted line). On the other hand, when β → 0, p(xi = 1) = 0.5, and the network becomes a population of independent neurons. Hence, β also controls the degree of mutual influence among the neurons. Because of Eq. 6.3, we call this model the (binary) logistic neuron.

(a) Plot of the logistic function \(\mathcal S(x) = { 1 \over 1 + e^{-2 \beta x}}\) (Eq. 6.3) as a function of x = Ii for several values of β. For infinite β, the curve becomes the Heaviside function Θ(x) = 0 if x < 0 and Θ(x) = 1 if x ≥ 0 (dotted line). (b) Spiking activity of a population of 1000 binary neurons (only 25 shown; each line is a neuron, and each dot is a spike). Here, β = 2, the external currents and the synaptic weights were uniformly distributed across neurons: Ii,ext = −1.1u, Jij = 2u∕N, where u is a random variable uniformly distributed between zero and one: \(u \sim \mathcal U(0,1)\). At each time step, all neurons are updated simultaneously, based on the value of their input current

At every discrete time step, all neurons’ activities are updated at the same time, according to the probabilistic rule Eq. 6.5.

3 Characterization of Neural Activity

The activity of a population of logistic neurons is shown in Fig. 6.1b for a given choice of parameters. This is an example of “raster plot,” where each line is the spike train emitted by one neuron, and each dot is a spike time. We note that the activity of the network is stationary in the following sense: the mean input current Ii, and therefore the probability of emitting a spike given Ii, does not change with time. The activity still looks erratic, because the neurons probabilistically flip their states over time. This dynamical behavior is called the “asynchronous irregular” regime of cortical neurons (Abbott and van Vreeswijk, 1993; Amit and Brunel, 1997b; Gerstner, 2000; Renart et al., 2010; van Vreeswijk and Sompolinsky, 1996), to distinguish it from other collective behaviors such as global oscillations, regular spiking, bursting, and so on (a full account of the possible dynamical behaviors of one relevant model can be found in refs. Brunel (2000); Brunel and Hakim (1999)).

The asynchronous regime is therefore the one in which the firing rates are constant in time, but the activities of the single neurons are uncorrelated and erratic, resembling a stochastic process. This regime is often observed in cortical circuits when recordings are made in behaving animals (Amit, 1995; Compte et al., 2003; Holt et al., 1996; London et al., 2010), and it can be reproduced also in networks of neurons via a number of mechanisms. We will say more about this later on. In the asynchronous irregular regime, the average spike counts do not change, an observation that often motivates arguments of firing rate coding (Amit, 1995; London et al., 2010). Even so, firing rates do not completely characterize the dynamics of populations of neurons. The variability of the inter-spike intervals could also be of interest. Another relevant property is the temporal correlation of each neuron (Compte et al., 2003; Joelving et al., 2007) as well the pattern of pair-wise spike count correlations among different neurons (Doiron et al., 2016; Josić et al., 2009). In principle, all higher-order correlations among neurons would be of interest, although they are much harder to quantify (Gao et al., 2017; Ohiorhenuan et al., 2010; Riehle et al., 1997).

Although mean field theory is “custom-made” to succeed in the asynchronous irregular regime, it can often provide information on the other aspects of the dynamics mentioned above. In this introductory account, we shall limit ourselves to the characterization of the asynchronous regime.

3.1 Firing Rate

As pointed out in the previous section, when the general goal is to understand the aggregate, macroscopic behavior of populations of cortical neurons, a collection of firing rates is a relevant place to start. Intuitively, the firing rate is a measure of the average activity of a neuron (or the whole network) based on the spike count, however there exists more than one definition of firing rate (see e.g. chapter 1 of Dayan and Abbott (2001)). For the logistic neuron, the firing rate of neuron xi (at any time t) can be defined with respect to the probability measure Eq. 6.5 and is a function of Ii(t):

Here, the symbol 〈⋅〉 is used for the average with respect to the distribution Eq. 6.5. Since Ii(t) =∑j ≠ iJijxj(t) + Ii,ext, fi is a function of all xj(t) with j ≠ i. Since the latter are random variables, fi is also a random variable. We have averaged the spiking activity of unit i, but this average depends on the activities of the other neurons. Therefore, in some cases we further average fi(t) over the remaining xj:

where the vector  does not contain xi. We call this quantity the average firing rate to distinguish it from the firing rate fi(t). One could also be interested in higher moments of fi or, in general, in its probability distribution. We will see later that this probabilistic notion of firing rate is useful also in deterministic networks that are nevertheless capable of generating stochastic-like activity. Note that in a recurrent network, the xj will depend in turn on {fk}; in other words, Eq. 6.9 is a self-consistent equation (more on this later). We now provide a few concrete examples that are relevant for the following.

does not contain xi. We call this quantity the average firing rate to distinguish it from the firing rate fi(t). One could also be interested in higher moments of fi or, in general, in its probability distribution. We will see later that this probabilistic notion of firing rate is useful also in deterministic networks that are nevertheless capable of generating stochastic-like activity. Note that in a recurrent network, the xj will depend in turn on {fk}; in other words, Eq. 6.9 is a self-consistent equation (more on this later). We now provide a few concrete examples that are relevant for the following.

Constant Input

If the neuron is probed by a constant input current Ii, then from Eq. 6.8, we simply have

This quantity is analogous to the frequency–current (f-I) curve in neurophysiology (La Camera et al., 2008). In more general contexts, this function goes under such names as “gain function,” “transfer function,” or “response function.” Figure 6.1 shows that the response function of the logistic neuron is a sigmoidal function of the input current. Mean field theory reflects the generic properties of the response function such as its sigmoidal shape—although its detailed shape may also qualitatively change the behavior of some networks (Kadmon and Sompolinsky, 2015; Mattia and Del Giudice, 2002).

Note that different neurons may have different f-I curves \(\mathcal S_i\), in which case Eq. 6.10 is replaced by \(f_i = \mathcal S_i(I_i)\), but the arguments given below proceed in much the same way. Also note that, since f ∈ [0, 1], f values should be interpreted in units of maximal firing rate; for example, interpreting each time step as a time bin of 10 ms, f = 0.1 would correspond to a firing rate of 10 spikes/s.

Gaussian Input Current

In mean field theory, one considers the input current to be either a constant, as in Eq. 6.10, or a Gaussian random variable Ii(t) = Ii(z(t)). At every step, z(t) takes a random value according to a distribution G(z) that we consider time-independent throughout this chapter. When the spiking activity of the neuron depends only on the current value of the input, as is the case of our logistic neuron, the firing rate is given by an average over the distribution of z(t):

Here we have suppressed the dependence on time due to our assumption of stationary distribution G(z). Note that, in a recurrent network, z in turn depends on the activity of the network. We will see examples later on.

Measuring the Firing Rate

How do we measure, in practice, the firing rate of neurons? When the neural activity is stationary, i.e., the spiking probability does not change with time, the average firing rate can also be computed as the average spike count over time:

where ni(T) is the number of spikes emitted by neuron i over a sufficiently long time T. Note that 〈fi〉 gives the average firing rates of the neurons even as they will continuously flip their activity states (between spiking and non-spiking), as shown in Fig. 6.1b. Since the spike trains are erratic, local temporal fluctuations of activity around the mean firing rates are expected, but they are suppressed by the dynamics of the network if the asynchronous state is stable. When all neurons in a population have the same mean firing rate, the latter can be estimated more accurately via an ensemble average, such as that defined in Appendix A.1.1. The ensemble average also allows to measure the temporal modulations of firing rate in non-stationary situations.

4 The Mean Field Equations

The goal of mean field theory is to predict the behavior of our network and in particular how this behavior depends on its parameters. This is not an easy task, due to the interactions between the neurons.

The main idea of the mean field approximation is to replace the interaction between a neuron and its afferents with a mean field generated by the latter.

In other words, one assumes that the neurons in the network receive an input current equal to the mean input generated by their presynaptic neurons (in physics, where this approach was invented, atoms and elementary particles are under the effect of “fields,” which explains the name “mean field”). The argument is as follows. One notes that the input current is a sum of N random variables. If the individual variables are independent and N is large, the central limit theorem tells us that the sum tends to follow a Gaussian distribution. Therefore, we write

where η(t) is a temporally fluctuating Gaussian variable with stationary statistics (the extension to time-dependent processes will not be considered in this chapter). We later show how to include the effect of η in our mean field approximation. But to start, we simply assume that the fluctuations ofIican be neglected. If the weights Jij are constants, this leads to the mean field approximation:

where, to lighten the notation, by fj we mean the firing rate averaged over the activity of the whole network, 〈fj(x)〉x, see Eq. 6.9. Each neuron therefore experiences an input current that is equal to its mean. Replacing Ii with its mean value into Eq. 6.10, we get

This is our first example of mean field equations. They are a set of N coupled equations for the firing rates fi of the N neurons in our population. Comparison with Eq. 6.9 shows that we have replaced \(\langle \mathcal S(I) \rangle \) with \(\mathcal S(\langle I \rangle )\):

Since \(\mathcal S\) is a non-linear function, this relationship cannot be correct, in principle. The idea is that the input currents Ii(x), as random variables, converge to their means 〈Ii(x)〉 in the limit N →∞, in which case \(f_i \to \mathcal S(\langle I_i(x) \rangle )\). This procedure is basically an application of the law of large numbers to Ii(x). This requires some care, an issue we consider in Sect. 6.7.1.

Note the following about Eq. 6.15:

-

Spiking has disappeared, and it has been replaced by smooth variables fi.

-

The mean field equations are self-consistent equations in that the same mean firing rates appear on both the left- and right-hand sides of the equations.

The self-consistency requirement is due to the recurrent nature of the network, wherein the output of a neuron is also an input to all the other neurons. This is even more apparent if we write these equations in vectorial form, after defining the vector of firing rates f = {f1, f2, …, fN}, the vector of external inputs Iext, and the synaptic matrix J (having elements Jij with Jii = 0):

In this equation, the vector f is required to be the same on the left- and right-hand sides, and for this reason, it is called a “fixed point”:

Definition 1 (Fixed Points)

The self-consistent solutions of the mean field equations are called fixed points of the network’s activity.

Depending on the nature of the model, there may be multiple fixed points, which may be stable or unstable. Often the aim is to build a model with fixed points having desired properties. We shall see examples later.

In summary, the mean field equations are self-consistent equations for the average firing rates, obtained under the hypothesis that we can replace the input to each neuron with its mean value.

4.1 Solving the Mean Field Equations

One way to solve the mean field equations is to use a fictitious dynamics that converges to the solution. One convenient dynamics is the following:

At equilibrium, this system gives our mean field equations 6.14–6.15 for the pair (I∗, f∗), where \(f^* = \mathcal S(I^*(f^*))\) are the fixed points of this coupled system.

The full dynamics of the pair (I, f) would require closing an equation for the moments of I as a function of f (see, e.g., Bressloff (2009); Buice and Chow (2013) for examples of this kind of approach); however, the simplified dynamics 6.18 is effective at finding the fixed points of our network.

Remark 1

The model Eqs. 6.18 is an example of “rate model” of Cowan–Wilson type (Wilson and Cowan, 1972) and has been used in different contexts. For symmetric synaptic weights, it can be used as a “mean field version” of a stochastic network called the Boltzmann machine (which is closely related to our binary logistic network), see Hopfield (1984) and Ch. 7 of Dayan and Abbott (2001). For Gaussian random weights with zero mean and variance g2∕N, it has been analyzed to explore the ability of neural networks to produce chaotic dynamics in the firing rates (Sompolinsky et al., 1988). In general, rates models are ad hoc descriptions of neural dynamics that can be derived as mean field approximations of microscopic models. Note that the same rate model can be interpreted as the mean field approximation of more than one microscopic description, see e.g. Chow and Karimipanah (2020); Cowan et al. (2016) for recent reviews.

4.2 Random Weights

A highly relevant case is when the synaptic weights are random variables sampled from a given distribution. This is motivated by the fact that weight distributions in cortex are wide (Buzsáki and Mizuseki, 2014). As we are interested in the typical behavior of the network, we must average our quantities of interest over the distribution of synaptic weights. Importantly, once the weights are sampled, they are kept fixed (or “quenched”). It is said that they give rise to quenched noise, in contrast to fast noise emerging from the spiking dynamics of the neurons. Quenched noise is very important as it allows to include the effect of heterogeneities in the description of the collective behavior of neural circuits.

Also in this case, the mean field approximation assumes that we can replace the current with its mean:

where we have used the symbol 〈〈⋅〉〉 to indicate an average with respect to the distribution of the weights and with respect to the distribution of the temporal values of the activities xj(t). We shall use the symbol [⋅] for the former and 〈⋅〉 for the latter, so that

where we have assumed the weights are independent samples from a distribution with mean J and that the neural activities and the weights are uncorrelated variables. Note that the average 〈xj〉 now depends on the distribution of the weights (this will be clearer in Sect. 6.5.1); however, we simply write 〈xj〉 to simplify the notation.

Performing the mean field approximation, Eq. 6.16, we get the mean field equations:

We defer an analysis of the approximations performed so far to a later section (Sect. 6.7); first, we show how the theory can be used to make predictions on the network’s activity.

4.2.1 Heterogeneous Population

From the mean field equations 6.21, we guess (see the next subsection) that they can admit a solution with different firing rates across neurons only if the external input currents (or the response functions) are different for different neurons. An example is shown in Fig. 6.2a for a network of excitatory neurons with a uniform distribution of external currents. The firing rates are widely distributed across neurons and are well captured by the mean field equations (panel B). This network can be interpreted as a collection of neurons with different characteristics (e.g., by choosing θi = −Ii,ext and setting all external currents to zero), and therefore, it is a model of a heterogeneous network.

Heterogeneous network with mean field predictions. (a) A raster plot from the network of Fig. 6.1b (only 25 neurons shown). (b) The firing rates predicted in mean field (vertical axis) vs. the firing rates observed in the simulation of panel A via Eq. 6.12 (with T = 10, 000 time steps). The dashed line is the identity line. Note how the firing rates in the network span almost the entire range of admissible values, and the points are mostly located along the identity line, which confirms the good agreement between the mean field predictions and the actual firing rates

There is another meaning in which a network can be considered heterogeneous, i.e., in the presence of random connectivity. This will be considered in Sect. 6.5.2.

4.2.2 Homogeneous Population

If the neurons are identical and receive identical external current, then the mean field equations 6.21 have an evident symmetry: for large N, the input current will be the same for all neurons and all neurons in the network will have the same firing rate, as shown in Fig. 6.3a.

Homogeneous network with mean field prediction. (a) Raster plot from the same network of Fig. 6.1b except N = 100 and Iext = −0.6 for all neurons. All neurons have the same firing rate. The thick curve is the ensemble average (see Appendix A.1.1) with a fixed bin size of 10 time steps (average firing rate across time bins is 0.13, or 13 spikes/s in suitable units). (b) The firing rate of the neurons in A can be obtained from the graphical solution of the mean field equation 6.24 with g = 1. The dashed line represents the equation y = f, while the full line is \(y=\mathcal S(f)\). The intersection point of these two lines (circle) gives the fixed point f∗ (here f∗ = 0.13, in agreement with the firing rates observed in panel A). Since the slope of \(\mathcal S(f)\) is < 1 at the fixed point, the activity of the network is stable at this point. Inset: network’s activity (top) in response to an input perturbation (bottom) shows that the fixed point is stable

Under the hypothesis of equal firing rates across neurons, the mean input current becomes

and is the same for all neurons. Note that we have used the symbol μi for the mean input current. This is a customary notation and will be used extensively later on.

By using 6.22, the mean field equations 6.21 become N copies of the scalar equation

The graphical solution of this equation is shown in Fig. 6.3b for J = 1∕N and is f∗ = 0.13, in agreement with the firing rate observed in the simulation of Fig. 6.3a. Note how the activity of a single neuron (and hence the single-neuron response function) is sufficient in this case to describe the activity of the whole population.

Remark 2

The scaling of J with N is motivated by the fact that the input current is proportional to N; as the size of the population increases (as required by the mean field approximation), the input will saturate the activity of all the neurons. Instead, by taking J = g∕N, where g is a constant, Eq. 6.23 reads

an equation in which N has disappeared—hence valid in the infinite network. Synaptic weight scaling will be considered in more detail in Sect. 6.7.1.

Remark 3

The approach used in this section illustrates a typical reasoning of mean field theories: one lays out hypotheses that are intuitive consequences of the mean field assumptions (Gaussian current, uniform firing rates); one then derives and solves the equations; and finally one checks, a posteriori and self-consistently, that the hypotheses were correct.

Stability of the Fixed Points

The mean field equations also tell us about the stability of the fixed point, at least with respect to the dynamics Eq. 6.18. For the model of Eq. 6.24, the dynamics is the same for all neurons and reads

A fixed point f∗ of this model is stable if the slope of the transfer function at f∗ is smaller than 1,

while it is unstable if this slope is larger than one. This is a standard result of linear stability analysis and can be understood as follows. The fixed point x∗ of the system \(\dot x = -x + \Phi (x)\) is obtained for Φ(x∗) = x∗. For x > x∗ but very close to x∗, stability requires \(\dot x = \Phi (x)-x<0\) (so that x will decrease back to x∗), which is true if Φ(x) lies below x. In turn, this is true if the slope of Φ(x) at the fixed point is smaller than the slope of y = x, i.e., for Φ′(x∗) < 1. One can similarly work out the other cases. Using the fact that I∗ = gf∗ + Iext together with the chain rule of derivation, we obtain Eq. 6.26.

Although Eq. 6.25 is not the real dynamics of the network, it captures the stability of its fixed points—as long as the network is large enough. This is shown in the inset of Fig. 6.3b, where the activity returns to f∗ after the removal of a rather strong perturbation. Note that we only have N = 100 in this example.

Bistability

For a suitable choice of parameters, the single homogeneous population of Fig. 6.3 can be bistable, in the sense that it can have two stable points of activity: one at low firing rate and one at high firing rate. This is shown in Fig. 6.4. Note that, given \(\mathcal S(x)\), the mean field equations 6.24 depend only on g and Iext. As g is increased, the shape of \(\mathcal S(f)\) will change. For g = 1.2, there are three intersection points. Based on Eq. 6.26, the middle point (white circle) is unstable, while the other two are stable (black circles). This means that the network can be found in one of the two stable activity regimes: one at low firing rate and one at high firing rate.

Bistability in the network of Fig. 6.3. (a) Graphical solution of the mean field equations 6.24 for g = 1.2. There are now three intersection points, two of which stable (black circles, firing rates 0.17 and 0.83, respectively). (b) Bifurcation diagram of the network of panel A. The plot shows the fixed points as g is varied. The interval 1.16 < g < 1.3 (vertical full lines) is the bistability interval with three fixed points: one unstable (on the dashed branch) and two stable. Vertical dotted line corresponds to g = 1.2 used in panel A. (c) Raster plot and ensemble average for the network in panel A with N = 1000 (same keys as in Fig. 6.3a). The activity is initially at the lower fixed point and, after a transient stimulation (shown at the bottom), enters the higher fixed point. See the text for details

Bistability is an important property that has been used to model perception (Moreno-Bote et al., 2007), memory (Amit and Brunel, 1997b), and decision-making (Wang, 2002), and therefore, it is of interest to establish under what conditions a neural circuit can be bistable. This is done with the aid of a bifurcation diagram, which plots the fixed points as a function of the mean synaptic weight, as shown in Fig. 6.4b. From the diagram, we see that the network is bistable for 1.16 < g < 1.3, whereas outside this interval the network is monostable (there is only one fixed point). Inside the bistable region, a vertical line will intersect the diagram at three points, two stable and one unstable (located on the dashed branch). These points correspond to intersection points in the related plot of panel A. The values g = 1.16 and g = 1.3 are the critical points, since as they are crossed by g, a qualitative different behavior emerges.

Figure 6.4c illustrates the bistable network as a model of short-term memory (Amit, 1995; Funahashi et al., 1989; Miller et al., 1996; Miyashita and Chang, 1988). Let us assume that our network contains neurons that respond to a particular sensory stimulus (such as a visual image). In the absence of the stimulus, the network is in the lower fixed point. At time 300, an input current mimicking the presence of the sensory input is turned on, causing the activity to rise. After the stimulus is removed, the activity settles on the higher fixed point. Since the activity at the higher fixed point persists after the removal of the stimulus, it may be interpreted as an internal representation of the stimulus. In this example with a single population, one can only accommodate one memory; however, this restriction can be avoided by partitioning the network into subpopulations of neurons (Amit and Brunel, 1997b). This model is discussed next.

4.3 Clustered Networks

The homogeneous network is the basis for an important generalization, one in which the network is partitioned into M homogeneous subpopulations or clusters. We now consider this case. Given populations α and β with mean synaptic weights Jαβ for all i ∈ α and j ∈ β, proceeding as done in Sect. 6.4.2, we have

where Nβ is the number of neurons in cluster β (not to be confused with the parameter of the logistic function) and fβ is the population average of the neuronal firing rates in cluster β. Note that 6.27 is the same for all neurons in cluster α. The mean firing rate of any neuron in population α is therefore given by the mean field equations:

where we have assumed all neurons of the same populationreceive the same external current. Note that now determining the fixed points and their stability requires a generalization of the analysis of Sect. 6.4.2.2 (Mascaro and Amit, 1999; Mazzucato et al., 2016).

One special case of clustered network is the excitatory–inhibitory recurrent network. In this case, we have two populations, one having excitatory (E) neurons and one having inhibitory (I) neurons, and 4 types of mean synaptic weights Jαβ: JEE, JII, JEI, and JIE, where, e.g., JEI are the mean synaptic weights from inhibitory to excitatory neurons. Note that this model respects Dale’s law, stating that neurons can be excitatory or inhibitory, but not both.

Clustered networks are much studied, especially in the context of integrate-and-fire neurons. Since the fluctuations of the neural activity play an essential role in clustered networks, we first show how to incorporate these ingredients in the theory and defer a discussion of clustered networks to the end of Sect. 6.6.

5 Extensions

In this section, we consider two very important extensions of the theory, the incorporation of the variability of the input current generated by the network itself and the random connectivity of the neurons. We start from the former.

5.1 The Impact of the Input Variance on the Mean Firing Rates

In deriving our mean field approximation, we have replaced the current with its mean input. The input is the sum of many contributions and therefore, by the central limit theorem, converges to a Gaussian random variable in the thermodynamic limit N →∞. A Gaussian distribution is characterized by its mean and variance. Hence, by incorporating the impact of the variance of Ii into our model, we can go beyond mean field and provide a more accurate description of the network’s behavior.

We can deduce heuristically the effect of Gaussian fluctuations arguing as follows. We replace the input current with its Gaussian approximation valid in the large N limit:

where \(z(t) \sim \mathcal N(0,1)\) is a standard Gaussian variable and \(\mu _i, \sigma _i^2\) are the mean and variance of Ii, respectively. Figure 6.5a shows that the temporal fluctuations of the input current are indeed well described by a Gaussian distribution.

(a) Distribution of input currents across time for the network of Fig. 6.3 at the fixed point. To avoid variations in current across neurons, equal weights equal to g∕N were used (with N = 1000). The distribution is described well by a Gaussian distribution with mean and variance predicted by mean field theory Eqs. 6.39 (dashed). (b) Response function of the noise-driven logistic neuron: comparison of Eq. 6.31 (full line) and its approximation Eq. 6.32 (dashed) with simulations of the logistic neuron driven by Gaussian input current (dots). The output firing rates are functions of the input firing rates through μi(fin), σi(fin) given by Eqs. 6.39

For convenience, the constant term Ii,ext has been included into the mean μi. The firing rate, for a given value of z(t) = z, is given by Eq. 6.8

where β is constant. Mean field amounts to setting z = 0. Now we can relax this hypothesis and compute the average firing rate Eq. 6.11 by adding up all contributions μi + σiz, each weighted by the probability of z:

This is the response function of the binary logistic neuron when the fluctuations of the input current are taken into account, as shown in Fig. 6.5b. This function is closely approximated by a logistic function with a different parameter β′ (see, e.g., Maragakis et al. (2008) and Fig. 6.5b):

where

Now there are two sources of noise: β and σi, where the latter originates from the activity of the network itself.

When σi is small, this function approaches \(f_i=\mathcal S(\mu _i)\), i.e., Eq. 6.10 evaluated at the mean current, from which we recover our mean field equation Eq. 6.15. When σi is large enough, though, it endows the network with its own source of variability due to Gaussian nature of the input current. Hence, it is no longer necessary to assume an intrinsic form of noise β, and we can allow our model to be deterministic by setting β →∞. In this limit, one immediately gets

Comparison with Eq. 6.3 shows that this is our previous response function \(\mathcal S(\mu _i)\) with \(\beta \propto \sigma _i^{-1}\) (recall that in Eq. 6.3 we had set θ = 0). There are important differences, however:

-

Now the noise affecting the spike probability (hence, the firing rate) is the result of the random input current rather than intrinsic noise in the spiking mechanism (which is now deterministic).

-

Unlike β, σi is not constant but depends on the activity of the network—in particular, it depends on the firing rates of the other neurons, as we shall see shortly.

-

Equation 6.34 shows that the firing rate is a sigmoidal function of μi, with σi controlling its slope.

It turns out that this heuristic picture, including the dependence of the firing rate on μi, σi in the form (μi − θ)∕σi, is correct also for more realistic models of spiking neurons (Sect. 6.6). The reason is intuitively simple: the firing rate is determined by the distance between μi and θin units ofσi: the difference μi − θ, on its own, is not sufficient to determine the firing rate.

Remark 4

What does, in the deterministic model where β →∞, make the input current behave as a stochastic variable? This has to do with the chaotic nature of the dynamics resulting from ingredients such as quenched synaptic weights, random connectivity (discussed later), and the recurrent nature of the network. More details will be given later.

5.1.1 The Moments of the Input Current

To close the self-consistency loop of the mean field equations, we need to determine the dependence of μi, σi on the firing rates of the presynaptic neurons. For the mean, we have Eq. 6.20, which we write here in the equivalent form:

To compute the variance, we can use the formula for the variance of the product of two independent random variables applied to Jijxj (in the following, \(\mathbb E(z)\) denotes the generic expectation of z, and note that \(x_j^2=x_j\)):

where the approximation is valid for small fj, which is a relevant case in cortex. Although we know the exact result Eq. 6.36, we chose to emphasize the approximate result in 6.37, because, as we shall see later, there is a sense in which this result is exact in networks of spiking neurons. Also, low firing rates tend to decorrelate the activities of the neurons, an assumption required to apply the central limit theorem and to add up the variances coming from the N neurons of the network, which from Eq. 6.37 gives

For the homogeneous population of Fig. 6.5 where the synaptic weights were set equal to g∕N, using these formulae, we obtain

which are the same for all neurons. The Gaussian density function with these parameters predicts well the current’s temporal fluctuations, as shown in Fig. 6.5a. Note how in this case the variance will vanish in the thermodynamic limit due to our choice Jij ∼ 1∕N (but see Sect. 6.7.1).

5.1.2 Extended Mean Field Theory

When taking into account the variance of the input, the self-consistent mean field equations read, in vectorial notation, as

An important example is the clustered network of Sect. 6.4.3. In that case, all neurons in the same cluster receive current with the same input and variance, and we obtain

where [J]αβ and [J2]αβ are the mean and the second moment of the synaptic weights between neurons of populations α and β. Note that while the terms in the mean input can be positive or negative (depending on the sign of [J]αβ, which is negative if population β is inhibitory), the variance is the sum of positive terms. This is because we have assumed that the inputs coming from different neurons are independent, i.e., their covariances vanish.

Strictly speaking, the theory is valid in the thermodynamic limit, which in the clustered network requires some care. Approximately, however, we expect good predictions for a large enough number of neurons in each cluster. Also, it is possible to have situations in which \(\sigma ^2_{\alpha } \to 0\) in the limit (see, e.g., Eqs. 6.39), and if no external fluctuations are added, the network’s behavior becomes deterministic. We discuss ways to keep a finite variance for N →∞ in Sect. 6.7.1.

5.2 Random Connectivity

So far, all neurons were connected to all other neurons in the network (with the exclusion of themselves). In real cortical circuits, however, neurons are connected to different numbers and types of other neurons. Even neglecting the heterogeneity in cell types, random connectivity can have a meaningful impact on the dynamics of the network and its stationary activity regimes.

A simple, and widely used, model of random connectivity is to assume that any two neurons are connected by a synapse with probability c. Calling cij ∈{0, 1} the random variable representing whether or not a synaptic connection exists from presynaptic neuron j, its mean and variance are c and c(1 − c), respectively. To leverage our previous result Eq. 6.37, now in need of generalization, it is convenient to redefine the synaptic weight to include cij:

We further assume that cij and Jij are independent random variables (i.e., synapses of different strengths are equally likely to exist). It follows that the mean input in mean field becomes

while for the variance, we have, from Eq. 6.37 (note that \(c_{ij}^2=c_{ij}\)),

and therefore,

For a network with M clusters, denoting with cαβ the mean connectivity from neurons in clusters β to neurons in clusters α, summing up over the neurons in each cluster, we obtain the generalization of Eq. 6.41:

The fixed points (and their stability) can be found with a linearized dynamics for the coupled vectors \(\{\mu _{\alpha }, \sigma ^2_{\alpha }\}\), which generalizes the methods of Sect. 6.4.2.2, see e.g. Mascaro and Amit (1999); Mazzucato et al. (2016).

Remark 5

In some models, the input current to population α comes from Next Poisson spike trains with rate fext, connectivity cα,ext, and synapses Jα,ext, in which case the external current has both a mean and a variance,

which enter the right-hand sides of Eqs. 6.46.

In the next section, we introduce clustered networks of spiking neurons in continuous time, and we will see that the mean field equations are given, also in that case, by Eqs. 6.40 and 6.46. The only difference will be in the sigmoidal response function \(\mathcal S\).

In the example considered in this section, connections among neurons are made randomly and independently with a fixed probability, a structure sometimes called Erdös–Rényi connectivity. However, mean field theory can also be developed in networks with more complex connectivity structures (see, e.g., Nykamp et al. (2017)).

6 Networks of Integrate-and-Fire Neurons

The theory developed so far can be applied to networks of integrate-and-fire neurons. This is a more relevant case because of its greater biological significance and the possibility for the theory to be directly tested in experiment.

6.1 Leaky Integrate-and-Fire Neuron

For concreteness, we shall develop the theory for networks of leaky integrate-and-fire (LIF) neurons. LIF neurons are characterized by their membrane potential V (t) at time t according to the standard model

Here, VL is the resting potential, τ is the membrane time constant, Jij are the synaptic weights in voltage units, δ(t) is the Dirac’s delta function, and \(t_k^j\) is the time of the kth spike emitted by presynaptic neuron j. The two rightmost terms represent the input current: the synaptic and external current, respectively. Note that both terms are in units of voltage/time; to obtain these terms in units of current, one should divide them by Cm, the membrane capacitance. To simplify the formulae, here we assume Cm = 1 and keep the input current in units of voltage/time. When the inputs contain excitatory and inhibitory spike trains, this model goes also under the name of Stein’s model (Stein, 1965). Since this model lacks the non-linear conductances responsible for action potential generation, we complement it with boundary conditions on V to mimic the emission of a spike. Specifically: when V hits a threshold θ, a spike is said to be emitted and V is immediately reset to a value Vr, where it is clamped for a refractory period τr. After a time τr, the dynamics Eq. 6.48 resumes.

This behavior is illustrated in Fig. 6.6 for the case of a single excitatory input spike train and synaptic weight J. As shown in the left panel, V jumps by J upon arrival of a presynaptic spike and decays exponentially in between spikes, in keeping with the solution to Eq. 6.48:

where  is a constant term that represents the new equilibrium value of the membrane potential in the presence of a constant external current. Figure 6.6b shows the emission of spikes (followed by a reset) when V hits the threshold θ = −45 mV.

is a constant term that represents the new equilibrium value of the membrane potential in the presence of a constant external current. Figure 6.6b shows the emission of spikes (followed by a reset) when V hits the threshold θ = −45 mV.

Membrane potential of the LIF neuron, Eq. 6.48, driven by one excitatory Poisson spike train with firing rates 300 Hz for sub-threshold input (panel A) and 1200 Hz for supra-threshold input (panel B). The input spike train is shown at the bottom of each panel (vertical ticks). Neuron parameters: VL = −65 mV (dashed line in A), θ = −45 mV (dashed line in B), Vr = −60 mV, τr = 2 ms, τ = 20 ms, J = 1 mV. The external current was set to zero

6.1.1 The Moments of the Free Membrane Potential

Analogously to the situation with the binary neuron, we need to determine the response function of this model neuron, i.e., its firing rate as a function of the input current. After reabsorbing Ii,ext into \(V_{i,L}^*\), the input current is given by the synaptic input current, i.e. (see Eq. 6.48)

However, the neuron emits a spike when Vi, not Ii, exceeds the threshold. Assuming a stationary input, after a transient Vi reaches the steady state (from Eq. 6.49):

We are therefore interested in characterizing this term.

Just as before, Vi is the sum of contributions coming from many neurons. Assuming independent or, at most, weakly correlated neurons, Vi follows approximately a Gaussian distribution with mean μi and variance \(\sigma ^2_i\). Let us indicate with JE the excitatory weights and with JI the inhibitory ones. Moreover, we assume that the inputs \(\sum _k \delta (t-t_k^j)\) are independent Poisson spike trains with mean fE and fI, respectively. Then (see appendix A.1.2 for details),

Note that Eq. 6.52 is valid for the free membrane potential, i.e., in the absence of output spikes. Nevertheless, μi and σi also determine the firing rate of the neuron, as we show next.

6.1.2 The Response Function of the LIF Neuron

The response function of the LIF neuron is difficult to compute despite the simplicity of the model. Fortunately, a closed formula is known under the so-called diffusion approximation, an approximation valid when:

-

(i)

The number of presynaptic inputs is large, but each synaptic input contributes a very small perturbation to the membrane potential.

-

(ii)

The values of the input current in successive time bins are independent (this is true if, e.g., the input current is the sum of independent Poisson spike trains).

The diffusion approximation is pictorially illustrated in Fig. 6.7.

Diffusion approximation for the LIF neuron. (a) LIF neuron response to two spike trains (shown below the membrane potential trace), one excitatory (upward tickmarks) and one inhibitory (downward tickmarks). (b) Diffusion approximation to (a): same neuron driven by fluctuating Gaussian input current with the same mean and variance as the Poisson input in (a). Note that although the membrane potential and the spike times differ in the two cases, the firing rates of the output spike trains match. (c) Same as (a) for smaller J but larger input firing rates. The membrane potential looks smoother than in (a) and already rather similar to its diffusion approximation shown in (d). (d) Diffusion approximation to (c).

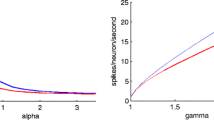

We note that condition (i) is rather realistic in cortex, where values of J are estimated to be about 1∕20 or less of the difference θ − VL (for example, J ≈ 0.5 mV with spike thresholds 10–20 mV above rest; see e.g. Shadlen and Newsome (1994)). Condition (ii), also known as the white noise approximation, should hold self-consistently in the whole network, and for finite values of the synaptic weights, it remains an approximation (Lerchner et al., 2006; Pena et al., 2018; Vellmer and Lindner, 2019) (we will say a bit more on this in Sect. 6.7.2). For feedforward input, however, the diffusion approximation gives excellent results for the firing rate of the LIF neuron, as shown in Fig. 6.8. The response function shown in the figure reads (Amit and Brunel, 1997b; Amit and Tsodyks, 1992; Johannesma, 1968)

where \( \mathop {\mathrm {erf}}(x)\) is the error function and μ, σ are given by Eqs. 6.52. As a reminder, the error function is defined as

Response function of the LIF neuron driven by synaptic input. The plots show the stationary firing rate as a function of fin, the firing rate of excitatory presynaptic inputs. Dots: firing rate from simulations of Eq. 6.48 with Jij = 1 and input firing rate reported on the horizontal axis. Line: response function under the diffusion approximation, Eq. 6.53

where \(z \sim \mathcal N(0,1)\) is a standard Gaussian random variable. Two different derivations of Eq. 6.53 can be found e.g. in Johannesma (1968) and Brunel (2000) (see also Siegert (1951)). Figure 6.8 shows that Eq. 6.53 is in excellent agreement with simulations despite a finite J (in figure, J = 1 and θ − VL = 20). It has also been determined experimentally that this response function describes quite accurately the response function of real cortical neurons (La Camera et al., 2008).

With the response function in hand, we can write the self-consistent mean field equations for this model (in vectorial notation):

where Φi is given by 6.53 and μi, σi are given by 6.52. In the more general case of M clusters with random connectivity, Eq. 6.52 generalizes to (see Sect. 6.5.2)

where [J]αβ < 0 if β is an inhibitory population, and some terms may reflect a synaptic input coming from external pools of neurons (see Eq. 6.47). For simplicity, we have assumed the same equilibrium value for all neurons in the same population ( ), a restriction that can be easily removed. Note the similarity of Eqs. 6.56 with the relations 6.46 valid for the binary neuron, and note that 6.56 holds for the membrane potential. For the input current, Eq. 6.50, expressions identical to 6.46 hold (see appendix A.1.2 for details).

), a restriction that can be easily removed. Note the similarity of Eqs. 6.56 with the relations 6.46 valid for the binary neuron, and note that 6.56 holds for the membrane potential. For the input current, Eq. 6.50, expressions identical to 6.46 hold (see appendix A.1.2 for details).

Although we have outlined the theory for networks of LIF neurons, the same theory applies to networks of other integrate-and-fire neurons, such as the quadratic and exponential integrate-and-fire neurons, and even to some conductance-based models. The main difference is in the response function to be used; see, e.g., Fourcaud-Trocmé et al. (2003); Fusi and Mattia (1999); La Camera et al. (2004); Richardson (2004).

Spiking networks with inhibitory and excitatory populations can exhibit a repertoire of different behaviors. They can produce fast global oscillations with asynchronous spiking in single neurons, globally synchronized states as well as states of asynchronous activity (e.g., Brunel (2000); Brunel and Hakim (1999); Gerstner (2000); Mattia and Del Giudice (2002); van Vreeswijk and Sompolinsky (1998)). In the presence of multiple clusters, complex network configurations are possible (Mazzucato et al., 2015), including configurations wherein an excitatory cluster is active on the backdrop of a globally spontaneous activity state. Such a model was first introduced and analyzed in Amit and Brunel (1997b) with the mean field approach outlined here and was proposed as a biologically plausible model of working memory capable of storing and retrieving multiple memories. In particular, if single neurons can code for multiple stimuli (as observed in real cortical neurons), an extensive number of stimuli can be accommodated (Curti et al., 2004). These networks are rather complex but still amenable to a mean field analysis that is a direct generalization of the approach outlined here. Networks of this kind have also been proposed as mechanistic models of decision-making in cortical circuits, see, e.g., Wang (2008). More recently, similar models have been used to explain the emergence of slow fluctuations in firing rates. We discuss them in Sect. 6.7.3.

7 Validity of the Mean Field Approximation

In the mean field procedure carried out in Sect. 6.4, we have assumed that the sum over many independent inputs causes the input current Ii to be distributed as a Gaussian-distributed variable. We have then neglected the fluctuations of Ii. By doing so, we have assumed that each neuron receives the mean input generated by the other neurons and by the external currents. In Sect. 6.5, we have included the effect of the Gaussian fluctuations into the theory, as well as the effect of random connectivity. In this picture, each neuron receives a Gaussian input current with given mean and variance that depend, self-consistently, on the activities of the other neurons.

In this section, we consider the assumptions made so far and some of the consequences of violating those assumptions.

7.1 Implications of the Thermodynamic Limit and Synaptic Scaling

We first note that the theory applies only to large networks. Only when the input is the sum over many independent terms, we can apply the central limit theorem and replace the input current with a Gaussian variable. Therefore, this assumption requires to perform the thermodynamic limit N →∞, which in turn implies that the synaptic weights must be scaled with N for the input current to remain finite in the limit.

For concreteness, we consider a single population of binary neurons with constant external current. The input is a sum of N terms of order 1; assuming independent contributions from presynaptic neurons, both the mean and the variance will grow as N (neglecting Ii,ext for now, we focus on the recurrent contributions):

where the symbol \(\mathcal O(N)\)means “order N” as N →∞, i.e., \(\mathcal O(N)/N \to constant\). Receiving a large input, all neurons will saturate to their maximal activity value, which would render the state of the network useless for computation. Therefore, we must rescale the synaptic weights so as to produce a finite activity.

Two main scaling options have been used: one is to scale the weights as Jij ∼ g∕N and the other is to scale them as \(J_{ij} \sim g/\sqrt {N}\).

-

With the first choice, Jij ∼ g∕N, we obtain

$$\displaystyle \begin{aligned} \langle I_i \rangle \sim \mathcal{O}(1), \quad Var(I_i) \sim {1 \over N}. \end{aligned} $$(6.58)The variance vanishes in the limit. In this case, the input current ceases to fluctuate, and all neurons receive exactly the same input and therefore will have the same neural activity. The mean field prediction in this case is fi = f for all i, with f given by the self-consistent Eq. 6.24,

$$\displaystyle \begin{aligned} f = {\mathcal S}(g f + I_{ext}), \end{aligned} $$(6.59)analyzed in Sect. 6.4.2.2.

-

With the second choice, \(J_{ij} \sim g/\sqrt {N}\), we have

$$\displaystyle \begin{aligned} \langle I_i \rangle \sim \mathcal{O}(\sqrt{N}), \quad Var(I_i) \sim \mathcal O(1). \end{aligned} $$(6.60)In this case, the mean input tends to increase with N, while the variance remains finite (“order 1”). The prediction is that all neurons’ activities will saturate to their maximal value, as in the absence of scaling. This can be avoided by adding inhibitory populations of neurons, as shown next.

7.1.1 Balanced Networks

The problem encountered with the \(1/\sqrt {N}\) scaling may be rescued by inhibition. Let us consider a network with one excitatory and one inhibitory population, each of size N. For simplicity, we consider constant synapses in each population. By rescaling all weights as \(J_{{\alpha }{\beta }} = \tilde J_{{\alpha }{\beta }}/\sqrt {N}\) and the external current as \(\mu _{E,ext} \sim \tilde \mu _{E,ext} \sqrt {N}\), Eq. 6.41 gives

In the same limit, the variances remain finite:

Now we require that the mean inputs remain \(\mathcal O (1)\) in the limit of large N. This requires the inhibitory and excitatory components in Eq. 6.61 to cancel out in the limit, at least within order \(1/\sqrt {N}\):

or, in matrix notation,

Networks with this property are known as “balanced” networks (van Vreeswijk and Sompolinsky, 1996, 1998) and are the object of much research because they share many properties of real cortical circuits, including erratic spike trains that are difficult to explain without the balance hypothesis (see the next section). Note that the input current retains its variability in the thermodynamic limit, justifying the introduction of σ in the extended mean field theory of Sect. 6.5.1 (an alternative justification would be the presence of an external fluctuating input for any N (Amit and Brunel, 1997a,b), in which case the synapses can be scaled as 1∕N).

Remark 6

We must observe that cortical neurons are not connected to all other neurons in their neural circuit, but they are connected to, say, K ≪ N neurons, where K can be large. Therefore, the theory outlined above can be made more realistic by assuming random connectivity with mean c = K∕N, in which case Eq. 6.46 holds. In the limit N →∞, K →∞ with K∕N → 0, and \(J_{\alpha \beta } \sim 1/\sqrt {K}\), from the above equations, we see that the mean will grow as \(\sqrt {K}\), while the variance will remain finite. All the arguments remain the same, except that we replace N with K. In this version of the theory, synapses are required to scale as the inverse of the square root of their mean number of afferents K, rather than the total number of neurons. Partial evidence for a \(1/\sqrt {K}\) scaling of cortical synapses has been reported in Barral and Reyes (2016).

Remark 7

We should notice that the argument of the previous remark holds only if one can prove that correlations vanish in the thermodynamic limit. Technically, this requires \(K < \ln N\) (Derrida et al., 1987). However, it turns out that, in a balanced network, such requirement is not necessary (Renart et al., 2010). See also Sect. 6.7.2.

7.1.2 Mean Field Theory of Balanced Networks

The mean field theory of the balanced network proceeds as follows: the balanced solution f∗ is given by the solution to Eq. 6.67, i.e.,

This is a necessary condition for the existence of the balanced state; additional conditions must be imposed to guarantee positive, non-saturating firing rates (van Vreeswijk and Sompolinsky, 1998). In a recurrent network, we have the additional requirement that the output rate of a neuron in a population must match its own input firing rate:

where Φα is the response function of the neurons in the α population. Note that \(f_E^*\) and \(f_I^*\) given by Eq. 6.68 provide a value for the variances (according to Eq. 6.63) but do not provide a value for the input means μE,I defined in Eq. 6.61 (these are of order 1 but are not necessarily zero, even in the thermodynamic limit). Therefore, one imposes the balance condition and derives μE,I self-consistently:

Note that μE,I depend on \(f_{E,I}^*\): for example, if the external currents are varied, one obtains new \(f_{E,I}^*\) values and thus new μE,I from the self-consistent equations above.

We make a few more important remarks regarding balanced networks:

-

Equation 6.67 shows that the balanced state requires an external current, and the external current must be of order \(\sqrt {K}\) (see Remark 6). Without an external current, Eq. 6.67 reads \(\tilde Jf=0\). This case is problematic in several ways. For example, when a non-zero solution exists for the firing rates, the latter could have large fluctuations in the null subspace of \(\tilde J\), and the asynchronous state could be lost. Mean field theory with a singular synaptic matrix is, in general, problematic.

-

Equation 6.68 implies that the balanced rates depend linearly on the external input current, which is at odds with the bistability studied in Sect. 6.4.2.2 resulting in macroscopic changes in firing rates. In other words, a network cannot be balanced and bistable at the same time (Renart et al., 2007). To obtain a bistable balanced network, other sources of non-linearity must be leveraged, such as short-term plasticity (Barbieri and Brunel, 2007; Mongillo et al., 2012).

-

In a balanced network, rates dynamically adjust to balance excitatory and inhibitory inputs, so that the mean and the variance of the input remain of order 1 (van Vreeswijk and Sompolinsky, 1998). When the mean input is below threshold, firing is due to input fluctuations and this regime is stable for continuous perturbations of the input. This produces erratic spike trains without the need for fine tuning.

7.2 The Role of Correlations

Correlations of neural activity come in two main flavors, spatial (called cross-correlations) and temporal (called autocorrelations). Mean field theory makes specific assumptions about them: in the most basic form, both forms of correlations are supposed to vanish in the thermodynamic limit. We briefly discuss the role of correlations in this section.

Spatial Correlations

The application of the central limit theorem invoked in Sect. 6.7.1 also requires negligible correlations between the activities of the neurons. For example, if the synaptic weights are symmetric, Jij = Jji, then the random variables Jijxj and Jjixi could be correlated. If the variables are highly correlated, global oscillations of the firing rates may emerge, and the asynchronous regime is lost. Sparse connections typically reduce the correlations between neurons and are very often invoked (see below); other mechanisms, such as the balance of excitation and inhibition discussed in the previous section, are effective at reducing correlations even in networks that are not sparse (Helias et al., 2014; Renart et al., 2010).

Definition 2 (Sparseness)

A network is sparse when its neurons receive a mean number of connections K ≪ N, such that the average connectivity \(c={K \over N} \to 0\) as N →∞.

Note that the definition above does not exclude the possibility that K →∞ in the thermodynamic limit. In fact, in the theory of balanced networks, we take both N →∞ and K →∞. In finite networks, where K, N, and J are all finite, sparseness becomes a messier concept. A more useful approach in that case might be to specify the conditions on the values of K and J resulting in negligible correlations between the spike trains coming from different neurons. Normally, in this regime, mean field theory will be quite accurate.

In the presence of correlations, some of the formulae derived earlier may not hold. For example, consider Eq. 6.20 and its generalization to random connectivity: by using the general fact that 〈yx〉 = 〈y〉〈x〉 + Cov(y, x), we get (with 〈y〉 = 〈cijJij〉 = c[J])

where c = K∕N and  . We see that if the mean covariances do not vanish in the limit, the two terms on the right-hand side have the same order of magnitude. A similar argument applies to the sum over the presynaptic neurons of quantity 6.37. In this case, we have to use the more general formula for the variance of \(\sum _j^N c_{ij} J_{ij} x_j\):

. We see that if the mean covariances do not vanish in the limit, the two terms on the right-hand side have the same order of magnitude. A similar argument applies to the sum over the presynaptic neurons of quantity 6.37. In this case, we have to use the more general formula for the variance of \(\sum _j^N c_{ij} J_{ij} x_j\):

Note that the first term of 6.75 is a sum over N terms, whereas the second is a sum over \(\mathcal O(N^2)\) terms, and therefore, the second term may not negligible compared to the first.

It must be noted that introducing scaling laws for c = K∕N and Jij in these formulae may not be sufficient to determine the impact of the covariance terms. For example, if the network is in the asynchronous regime, the covariance terms vanish by definition. In general, several ingredients in addition to connectivity contribute to the degree of cross-correlations between spike trains in a recurrent network of spiking neurons (see, e.g., Ostojic et al. (2009)), and each case may have to be analyzed separately. Two important examples in which cross-correlations vanish in large networks are balanced networks (even dense ones (Helias et al., 2014; Renart et al., 2010)) and networks with \(K < \ln N\) (Derrida et al., 1987).

Temporal Correlations

Another important assumption of the theory is the absence of temporal correlations in the activity of single neurons, as quantified by their autocovariance (AC). Whereas the firing rate is the average of the activity, e.g. 〈xi〉, the AC at lag τ is given by

Often one computes the autocorrelation instead, which is just a normalized version of the AC. The AC is a measure of the similarity between the activities of a neuron at two time points. For example, the AC of \(x(t)=\cos {}(\omega t)\) is itself a cosine function. In the asynchronous regime with stationary firing rate, the AC is a delta function,

We refer to this assumption as the “white noise” approximation. The presence of temporal correlations poses two main problems to the theory:

-

Determination of the firing rates. When the activity depends only on the current value of the input, as in our network of binary logistic neurons, the autocorrelation does not affect the determination of the firing rates. The activity of LIF neurons, however, depends on the previous history at least back to the time of their previous spike. The response function of the LIF neuron (Eq. 6.53) correctly describes the firing rate only for white noise input. It is often argued that in a large network, the sum of many input spike trains will converge to a delta-correlated input current, but in general this is not strictly correct (Câteau and Reyes, 2006; Lindner, 2006; Moreno-Bote et al., 2008). The temporal correlations present in the input spike trains may therefore survive in a large network. Examples of such correlations in spiking neurons are due to a finite refractory period, which introduces a negative AC at very short lags, the finite rise and decay time of receptor-mediated current, and firing rate adaptation. Improved response functions in the presence of synaptic filtering have been found, e.g., Fourcaud and Brunel (2002); Moreno-Bote and Parga (2004). However, the recurrent nature of the network may induce finite correlation times in a network that otherwise has no built-in temporal correlations (Fulvi Mari (2000); Lerchner et al. (2006)), which leads us to the next point.

-

Self-consistent theory of correlations. Aside from the firing rates, a satisfactory theory should also determine self-consistently the autocovariance of the activity of a recurrent network. Self-consistent descriptions of AC have been obtained with a variety of methods, some also applicable to spiking networks (Harish and Hansel, 2015; Lerchner et al., 2006; Mastrogiuseppe and Ostojic, 2017; Pena et al., 2018; Sompolinsky et al., 1988; Vellmer and Lindner, 2019). These efforts have shed light on the dynamical behaviors of neural networks, as well as the transitions among them, as one or a few key parameters are varied.

7.3 Finite Size Effects

The theory requires the thermodynamic limit N →∞. However, it typically works well also in finite networks, as confirmed by the agreement with numerical simulations. In a finite network, however, discrepancies from the mean field predictions can be observed. Fluctuations in the network’s activity can destabilize fixed points that would otherwise be stable in the infinite network (for some relevant applications, see e.g. Braun and Mattia (2010); Miller and Wang (2006)). In this section, we briefly discuss two possible consequences of having a finite number of neurons: a spatial variation of firing rates and metastability.

Spatial Variation of Firing Rates

Mean field theory assumes that all neurons of a homogeneous population have the same firing rate. Due to random connectivity, neurons will receive input from a mean number of K = cN neurons, with variance c(1 − c)N = K(1 − K∕N). The cell-to-cell fluctuations in the number of inputs scale therefore as \(\sqrt {K}\). When K is large, the fluctuations are negligible compared to the mean (the distribution converges to a δ function centered in K), so that all neurons receive the same number of inputs K. In a finite network, however, fluctuations in the number of inputs can induce variability in the firing rates across neurons. This is especially true in a balanced network, where the mean input scales as \(\sqrt {K}\), the same order of magnitude of the spatial fluctuations (van Vreeswijk and Sompolinsky, 1998). Both in these models and real cortical circuits, the spatial distributions can be quite wide but are well predicted by a mean field analysis that treats the distribution of firing rates self-consistently, see, e.g., Amit and Brunel (1997a).

Metastability

The spatially distributed firing rates mentioned in the previous paragraph tend to be stable despite the finite size of the network. A different phenomenon is metastability, where the firing rates in subpopulations of neurons are homogeneous and well predicted by mean field theory, but the activity of the network is not stationary. Metastability occurs when the stable fixed points of activity are destabilized by fluctuations due to finite N. For metastability to occur, one needs at least two stable fixed points that lose stability in the finite network. This case is illustrated in Fig. 6.9a, b for the network of Fig. 6.4 with g = 1.1, Iext = −0.6∕g, and N = 100. When this network has fewer than 1000 neurons, there are enough fluctuations to cause the network’s activity to randomly flip between the two fixed points shown in panel A. The larger N, the longer the time spent in each point.

Metastability due to finite size effects. (a) Graphical solution of the mean field equations for the binary network of Fig. 6.4 with g = 1.1, Iext = −0.6∕g. (b) Rasters and ensemble average of the network of panel A with N = 100 neurons. Finite size effects cause the activity of the network to flip among the two fixed points shown in (a). (c) Mean field analysis of a clustered network of LIF neurons with 30 excitatory clusters, analogous to the bifurcation diagram of Fig. 6.4b. In this case, there are multiple upper branches, each characterized by a different number of simultaneously active clusters (from 1 to 8). A new branch appears as soon as the relative potentiation of synaptic weights inside clusters (J+) crosses a critical point (vertical red lines). (d) Raster plot of the network of panel C for J+ = 5.2 (green vertical line) showing rich metastable dynamics. Note that this network is completely deterministic. Panels C and D adapted from Mazzucato et al. (2015)

In a network partitioned into many clusters and including recurrent inhibition, a mean field analysis shows the existence of a large variety of fixed points (Mazzucato et al., 2015), and the interplay of recurrent inhibition and finite size fluctuations brings about a rich metastable dynamics (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012; Mazzucato et al., 2015), as shown in Fig. 6.9c, d.

This occurs when the mean synaptic weights inside the excitatory clusters are strong enough, with the critical point being accurately predicted by mean field theory. In fact, there are a multitude of critical points for the mean synaptic weights, as shown by the vertical red lines in Fig. 6.9c. Above the smallest critical point, only one excitatory cluster can be active at any given time. Above a second critical point, up to 2 clusters can be active, and in general beyond the nth critical point, up to n clusters can be active. See Mazzucato et al. (2015) for details.

8 Discussion and Conclusions

In this chapter, we have presented an elementary introduction to the mean field approach for populations of spiking neurons. This is an approach borrowed from physics that allows to study the behavior of large networks by replacing the input to a neuron with a mean field generated by its afferent neurons. A key feature is self-consistency, which has two meanings: in the first, it means that the properties of input and output neurons in homogeneous populations must match; in the second, it means that the conditions assumed ab initio to develop the theory (such as Gaussian current) must indeed occur. In the most basic setting, we focus on matching self-consistently the firing rates and neglect other important properties such as the autocorrelations of the neurons. The theory, however, can be generalized to include those properties as well.

It may seem strange that a theory based on neglecting fluctuations or assuming stationary behavior can give useful predictions in the presence of both fluctuations and temporal dynamics. Yet we are familiar with the success of such theories in physics where, in studying large systems composed of interacting particles, thermal fluctuations will cause the particles to move around or to flip their spins, while macroscopic properties of the system, such as volume, pressure, or energy, may remain constant.

The theory is, strictly speaking, only valid in the thermodynamic limit and under restrictive conditions, such as stationary activity and low correlations among neurons. However, it can be extended in a number of ways, for example by including the fluctuations of the input current or determining, self-consistently, the spatial variation of firing rates across the neurons of a finite network. The theory has allowed an understanding of the behavior of networks partitioned into populations of excitatory and inhibitory neurons, each of which can be further partitioned into sub-clusters of different cell types along the lines discussed in Sects. 6.5.2 and 6.7.3.

There are many other ways in which the theory can be extended. By looking at the self-consistent autocorrelation in mean field, it was found that rate models such as Eqs. 6.18 can exhibit deterministic chaos in the dynamics of the firing rates (Aljadeff et al., 2015; Kadmon and Sompolinsky, 2015; Molgedey, 1992; Rajan et al., 2010; Sompolinsky et al., 1988). In this context, the mean field approach is more commonly referred to as “dynamical mean field theory” (Crisanti and Sompolinsky, 2018; Schuecker et al., 2016). Similar efforts are being carried out in spiking networks, where the possibility of firing rate chaos is still an open question (Harish and Hansel, 2015; Ostojic, 2014; Wieland et al., 2015). Other ways in which the theory can be extended include ways to determine self-consistently the effects of firing rate adaptation (Gigante et al., 2007; La Camera et al., 2004; Treves, 1993), neuromodulators (Brunel and Wang, 2001), temporal and spatial correlations (Ginzburg, 1994; Lerchner et al., 2006; Meyer and van Vreeswijk, 2002; Vellmer and Lindner, 2019), short-term plasticity (Barbieri and Brunel, 2007; Mongillo et al., 2012), voltage-dependent conductances (Capone et al., 2019; Kumar et al., 2008; Sanzeni et al., 2020), spatial topology (Pyle and Rosenbaum, 2017; Wilson and Cowan, 1973), and more. Within these extensions, mean field theory has been applied to increasingly more realistic models of neural activity.

Mean field theory can also be useful when its assumptions are violated, as, e.g., in metastable networks. These networks can have a large number of stable configurations for N →∞, which give rise to rich metastable dynamics in the case of a network of finite size. This type of metastable dynamics has been found in the neural activity of humans and other behaving animals (La Camera et al., 2019; Miller, 2016). Mean field theory allows to locate the metastable regime on a bifurcation diagram and accurately predict the firing rates of neural clusters (Mazzucato et al., 2015) during metastable activity. Metastable networks can also explain the emergence of slow fluctuations and the quenching of trial-to-trial variability in response to sensory stimulation (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012).

Some of the studies mentioned above were actually performed using a version of mean field theory known as the population density approach (Abbott and van Vreeswijk, 1993; Brunel, 2000; Brunel and Hakim, 1999; Fusi and Mattia, 1999; Knight, 1972, 2000; Mattia and Del Giudice, 2002; Nykamp and Tranchina, 2000; Treves, 1993; Vellmer and Lindner, 2019). In this approach, one obtains not only the stationary distribution of the firing rates, but also the distribution of the membrane potentials. By leveraging perturbative solutions of a Fokker–Planck equation, this approach can help uncover the dynamics emerging from the instability of the asynchronous regime, and it has been used to build complete phase diagrams of networks of spiking neurons (Brunel, 2000; Brunel and Hakim, 1999). In some special cases, exact results in the thermodynamic limit for the dynamics of both the firing rate and the membrane potential have been obtained (Montbrió et al., 2015). Mean field theory, dynamical field theory, and the population density approach are complementary approaches for the practitioner, who may choose one approach or the other according to the problem at hand.

In conclusion, models of neural circuits are complex dynamical systems capable of a large repertoire of behaviors. Mean field theory, in his diverse incarnations, is one of the few tools at our disposal (and arguably the most successful) at predicting the collective behavior of such models. As we learn more about the potential link between neural dynamics and brain function, these methods are being rediscovered and sharpened to deal with increasingly more sophisticated applications. We hope that this elementary introduction can be useful as a first exposure to the ideas and methods of this approach as applied to networks of spiking neurons, while referring, e.g., to Amit (1989); Hertz et al. (1991) for comprehensive treatments in the field of neural computation and to Del Giudice et al. (2003); Gerstner et al. (2014); Hertz et al. (2004); Renart et al. (2004) for applications to spiking neurons and related topics.