Abstract

Reference-based super-resolution (RefSR) super-resolves a low-resolution (LR) image given an external high-resolution (HR) reference image, where the reference image and the LR image share a similar viewpoint but exhibit a significant resolution gap (\(8{\times }\)). Existing RefSR methods work in a cascaded way, e.g., a patch matching step followed by a synthesis step, with two independently defined objective functions, which leads to inter-patch misalignment, grid artifacts, and inefficient optimization. To resolve these issues, we present CrossNet, an end-to-end, fully convolutional deep neural network using cross-scale warping. Our network contains image encoders, cross-scale warping layers, and a fusion decoder: the encoders extract multi-scale features from both the LR and the reference images; the cross-scale warping layers spatially align the reference feature maps with the LR feature maps; and the decoder aggregates feature maps from both domains to synthesize the HR output. Using cross-scale warping, our network performs pixel-level spatial alignment in an end-to-end fashion, improving on existing schemes [1, 2] in both precision (around 2 dB–4 dB) and efficiency (more than 100 times faster).

L. Fang—This work is supported in part by Natural Science Foundation of China (NSFC) under contract No. 61722209, 61331015 and 61522111, in part by the National key foundation for exploring scientific instrument of China No. 2013YQ140517.




1 Introduction

Reference-based super-resolution (RefSR) methods [2] utilize an extra high-resolution (HR) reference image to help super-resolve a low-resolution (LR) image that shares a similar viewpoint. Benefiting from the high-resolution details in the reference image, RefSR usually achieves competitive performance compared to single-image SR (SISR). While RefSR has been successfully applied to light-field reconstruction [1,2,3] and gigapixel video synthesis [4], it remains a challenging and unsolved problem due to the parallax and the huge resolution gap (\(8{\times }\)) between the HR reference image and the LR image. Essentially, the key to the success of RefSR is how to transfer the high-frequency details from the reference image to the LR image. This leads to two critical issues in RefSR: image correspondence between the two input images, and high-resolution synthesis of the LR image.

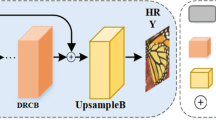

Fig. 1. Left: the ‘patch matching + synthesis’ pipeline of [2]; middle: the proposed end-to-end CrossNet; right: result comparisons.

In the initial work [1], image correspondences between the two inputs are established by patch-based matching on gradient features extracted from down-sampled patches of the HR reference, and patch averaging is used for image synthesis. However, the oversimplified, down-sampled correspondence estimation of [1] does not exploit high-frequency information for matching, and its synthesis step does not use a high-resolution image prior for better fusion. To address these two limitations, a recent work [2] replaces the gradient features of [1] with features learned by a convolutional neural network (CNN) to improve matching accuracy, and adds a second CNN that utilizes the state-of-the-art single-image super-resolution (SISR) algorithm [5] for patch synthesis. However, the ‘patch matching + patch synthesis’ scheme of [1, 2] is fundamentally limited. First, the sliding-window averaging it adopts blurs the output image and causes grid artifacts. Second, patch-based synthesis is inherently incapable of handling the non-rigid image deformation caused by viewpoint changes. To incorporate non-rigid deformation into patch-based algorithms, [3] enriches the reference images by iteratively applying non-uniform warping before patch synthesis. However, directly warping between the low- and high-resolution images is inaccurate. In addition, such an iterative combination of patch matching and warping introduces a heavy computational burden, e.g., around 30 min to synthesize one image.
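The blurring effect of patch averaging can be illustrated with a minimal 1D sketch. It is a simplified stand-in, not the synthesis code of [1, 2]: the helper `overlap_average` is hypothetical, patches are plain Python lists, and each output sample is the mean of every patch value that covers it. When two overlapping patches disagree about where an edge lies (for example, because they were matched to slightly different reference locations), averaging turns the sharp step into a ramp.

```python
def overlap_average(length, patches):
    """Average overlapping 1D patch estimates into a signal of `length` samples.

    `patches` is a list of (start, values) pairs; each output sample is the
    mean of all patch values covering it. Uncovered samples are left at 0.0.
    """
    total = [0.0] * length
    count = [0] * length
    for start, values in patches:
        for offset, v in enumerate(values):
            total[start + offset] += v
            count[start + offset] += 1
    return [t / c if c else 0.0 for t, c in zip(total, count)]


# Two overlapping patches that disagree about the position of a step edge.
patch_a = (0, [0.0, 0.0, 1.0, 1.0])  # edge between samples 1 and 2
patch_b = (2, [0.0, 1.0, 1.0, 1.0])  # edge between samples 2 and 3
signal = overlap_average(6, [patch_a, patch_b])
print(signal)  # [0.0, 0.0, 0.5, 1.0, 1.0, 1.0]
```

The intermediate value 0.5 is a blurred edge that neither patch contains. Because patches are laid out on a regular grid, such seams recur at regular intervals, which is one plausible source of the grid artifacts.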

In this paper, we propose CrossNet, an end-to-end convolutional neural network based on the idea of ‘warping + synthesis’ for reference-based image super-resolution. We discard ‘patch matching’ and replace it with ‘warping’, which enables an ‘Encoder-Warping-Decoder’ structure, as shown in Fig. 1. This structure contains two encoders that extract multi-scale features from the LR and the reference image, respectively. We adopt the warping module of the spatial transformer network (STN) [6] and integrate it into our HR reference image encoder. Compared with patch-matching-based methods, warping naturally supports non-rigid deformation, which helps overcome the parallax challenge in RefSR. Moreover, we extract multi-scale features in the encoders and then perform multi-scale spatial alignment using warping, as shown in Fig. 1. The multi-scale features capture complementary scale information from the two images, which helps alleviate the huge resolution gap in RefSR. Finally, the decoder aggregates the features to synthesize the HR output. Overall, our model is fully end-to-end trainable and does not require pretraining the flow estimator.

Extensive experiments show the superior performance of CrossNet (around 2 dB–4 dB gain) compared to state-of-the-art SISR and RefSR methods, on different datasets with large and small viewpoint disparities and at different scales. When applied to external datasets, including the Stanford light field dataset, our trained model generalizes well and retains high-frequency details. More importantly, CrossNet is efficient: it generates a \(320\times 512\) image within one second, whereas [1], [2], and [3] take 86.3 s, 105.0 s, and around 30 min, respectively, to perform the same task.

2 Related Work

2.1 Single-Image Super-Resolution

The single-image super-resolution (SISR) problem aims to super-resolve an LR image without additional references. Nevertheless, SISR is closely related to the reference-based super-resolution (RefSR) problem. Early SISR approaches were based on adaptive sampling [7, 8]; however, such approaches do not exploit the statistics of natural images. In contrast, model-based approaches design image priors that help super-resolve image-specific patterns. Such works usually rely on an edge prior [9], a total variation model [10], a hyper-Laplacian prior [11], sparsity priors [12,13,14,15], or exemplar patches [16, 17].

More recently, SISR has been cast as a supervised regression problem that learns a mapping function from LR patches to HR patches. These works rely on a variety of learning techniques, including nearest-neighbor search [18, 19], decision trees [20], random forests [21], simple functions [22, 23], Gaussian process regression [24], and deep neural networks.

With the increasing model capacity of deep neural networks, SISR performance has improved rapidly. Since the first deep-learning-based SR method [25], a large number of works have been proposed to further improve SISR performance. For example, Dong et al. [26] and Shi et al. [27] improve the efficiency of SISR by computing features in the low-resolution domain. Kim et al. [28] proposed a 20-layer deep network that predicts the residual of bicubic upsampling. Ledig et al. [5] proposed a deep residual network with adversarial training for SISR. Lai et al. [29] reconstructed sub-band residuals using a multi-stage Laplacian pyramid network. Lim et al. [30] improved on [5] by introducing a multi-scale feature extraction residual block for better performance. Because of the impressive performance of the MDSR network in [30], we employ MDSR as a sub-module for LR image feature extraction and RefSR synthesis.

2.2 Reference-Based Super-Resolution

Recent works such as [1,2,3, 31,32,33] use additional reference images from different viewpoints to help super-resolve the LR input, forming a new kind of SR method called RefSR. Specifically, Boominathan et al. [1] used a high-definition image captured by a DSLR as the reference and applied a patch-based synthesis algorithm using non-local means [19] to super-resolve low-definition light-field images. Wu et al. [33] improved this algorithm by performing patch registration before the nearest-neighbor search, followed by dictionary learning for reconstruction. Wang et al. [3] iterate the patch synthesis step of [1] to enrich the exemplar database. Zheng et al. [34] decompose images into frequency subbands and apply patch matching to reconstruct the high-frequency subband. Recently, Zheng et al. [2] proposed a deep-learning-based approach for cross-resolution patch matching and synthesis, which significantly boosts the accuracy of RefSR. However, patch-based synthesis algorithms are inherently incapable of handling the non-rigid image deformation that is often caused by irregular foreground shapes. In such cases, patch-based synthesis causes blocky artifacts and blurring. Although sliding windows [1, 2] and iterative refinement [3] mitigate these difficulties to some extent, these strategies usually introduce heavy computational cost. In contrast, our fully convolutional network achieves more than a 100-times speedup over existing RefSR approaches, making the model applicable to real-time applications.

2.3 Image/Video Synthesis Using Warping

Our task is also related to image/video synthesis tasks that use additional images from other viewpoints or frames. Such tasks include view synthesis [35, 36], video denoising [37], super-resolution [37,38,39], and frame interpolation or extrapolation [40, 41]. To solve these problems, deep neural networks based on the design of “warping and synthesis” have recently been proposed. Specifically, the additional images are backward-warped to the target image using the estimated flow, and the warped images are then used for image/frame synthesis by an additional synthesis module. We follow this “warping and synthesis” pipeline. However, our approach differs from existing works in two ways: (1) instead of the common practice of warping in the image domain at pixel scale [35, 36, 40, 41], our approach performs multi-scale warping in the feature domain, which accelerates model convergence by allowing the flow to be globally updated at higher scales; (2) after the warping operations, a novel fusion scheme is used for image synthesis, which differs from existing synthesis practices such as image-domain early fusion (concatenation) [36, 40] and linear combination of images [35, 41].

3 Approach

Our reference-based super-resolution scheme, named CrossNet, is based on a fully convolutional cross-scale alignment module that spatially aligns the reference image information to the LR image domain. Along with the cross-scale alignment module, an encoder-decoder structure is proposed to directly synthesize the RefSR output in an end-to-end, fully convolutional fashion. The entire network is shown in Fig. 2. In Sect. 3.1, we introduce the design and properties of the fully convolutional cross-scale alignment module. In Sect. 3.2, we describe the end-to-end network structure, followed by the image synthesis loss function in Sect. 3.3.

3.1 Fully Convolutional Cross-Scale Alignment Module

Since the reference image is captured from a different viewpoint than the LR image, spatial alignment is necessary. In [1,2,3], the correspondence is estimated by matching every LR patch with its surrounding reference patches. However, such sparsely sampled and non-rigidly upsampled correspondence can easily fail in regions with varying depth or disparity.

Cross-Scale Warping. We propose cross-scale warping to perform non-rigid image transformation. Unlike patch matching, we do not assume a locally constant depth plane. Our proposed cross-scale warping operation considers a pixel-wise shift vector \(V = (V_x, V_y)\):

\[\hat{I}_{REF}(x, y) = I_{REF}\big(x + V_x(x, y),\; y + V_y(x, y)\big), \tag{1}\]

which assigns a specific shift vector to each pixel location and thus avoids blocky and blurry artifacts.
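A minimal sketch of per-pixel backward warping with bilinear interpolation, in the spirit of the STN sampler [6], shows what a pixel-wise shift vector offers over a single offset per patch. It is illustrative only: the helpers `bilinear_sample` and `backward_warp` are hypothetical, images are 2D Python lists indexed as `img[y][x]`, the flow stores one `(u, v)` pair per pixel, and samples falling outside the image count as zero (an assumption; the padding mode is not specified in the text). In the network, the same operation is applied to feature maps and must be differentiable with respect to the flow; this pure-Python version only computes the forward pass.

```python
import math


def bilinear_sample(img, x, y):
    """Bilinearly sample the 2D list `img` (indexed img[y][x]) at real (x, y).

    Neighbours outside the image contribute zero (zero padding, an assumption).
    """
    h, w = len(img), len(img[0])
    x0, y0 = math.floor(x), math.floor(y)
    dx, dy = x - x0, y - y0
    value = 0.0
    for yy, wy in ((y0, 1.0 - dy), (y0 + 1, dy)):
        for xx, wx in ((x0, 1.0 - dx), (x0 + 1, dx)):
            if wx * wy > 0.0 and 0 <= yy < h and 0 <= xx < w:
                value += wx * wy * img[yy][xx]
    return value


def backward_warp(ref, flow):
    """Backward warping: out[y][x] = ref(x + u, y + v), with (u, v) = flow[y][x]."""
    h, w = len(ref), len(ref[0])
    return [
        [bilinear_sample(ref, x + flow[y][x][0], y + flow[y][x][1]) for x in range(w)]
        for y in range(h)
    ]


ref = [[0, 1, 2, 3]]
print(backward_warp(ref, [[(1.0, 0.0)] * 4]))  # [[1.0, 2.0, 3.0, 0.0]]
print(backward_warp(ref, [[(0.5, 0.0)] * 4]))  # [[0.5, 1.5, 2.5, 1.5]]
```

A uniform integer flow reduces to a plain translation, and a fractional shift blends neighbouring pixels. Because `flow` may differ at every pixel, adjacent pixels can move independently, which a single offset per patch cannot express.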

Cross-Scale Flow Estimator. As shown at the top of Fig. 2, given an upsampled LR image and its corresponding reference image, we adopt the widely used FlowNetS [42] as our flow estimator to generate cross-scale correspondences at multiple scales. To further improve FlowNetS, we replace its final \({\times }4\) bilinear upsampling layer with two \({\times }2\) upsampling modules, each of which contains a deconvolution layer followed by a skip connection. This additional upsampling allows the modified model to predict the flow field at a much finer resolution. The modified flow estimator generates the multi-scale flow fields as follows:

\[\{V^{(i)}\} = \text{FlowNetS+}\big(I_{LR\uparrow }, I_{REF}\big), \tag{2}\]

where \(I_{REF}\) denotes the reference image, and \(I_{LR\uparrow }\) denotes the LR image \(I_{LR}\) upsampled by a representative single-image SR (SISR) approach [30]:

\[I_{LR\uparrow } = \text{SISR}\big(I_{LR}\big). \tag{3}\]

The choice of flow estimator is discussed further in Sect. 4.3.

3.2 End-to-End Network Structure

Patch matching computes pixel-wise flow using a sliding-window scheme, which is computationally expensive compared with the proposed fully convolutional network for cross-scale flow field estimation.
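For contrast, exhaustive block matching can be sketched in a few lines. This is a deliberately simplified stand-in for the patch matching of [1,2,3] (raw intensities instead of learned features, both inputs already at the same resolution, no sliding-window overlap), and `block_match` is a hypothetical helper: it assigns one integer offset to each non-overlapping patch by minimising the sum of squared differences (SSD) over a \((2R+1)\times (2R+1)\) search window.

```python
def block_match(lr, ref, patch=2, radius=2):
    """Exhaustive block matching between two equally sized 2D lists.

    Returns {(py, px): (dy, dx)}: one integer offset per non-overlapping
    patch, chosen to minimise the sum of squared differences (SSD) between
    the LR patch and the displaced reference patch.
    """
    h, w = len(lr), len(lr[0])
    offsets = {}
    for py in range(0, h - patch + 1, patch):
        for px in range(0, w - patch + 1, patch):
            best = None  # (ssd, (dy, dx)) of the best candidate so far
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    # Skip candidates whose patch would fall outside the reference.
                    if not (0 <= py + dy <= h - patch and 0 <= px + dx <= w - patch):
                        continue
                    ssd = sum(
                        (lr[py + i][px + j] - ref[py + dy + i][px + dx + j]) ** 2
                        for i in range(patch)
                        for j in range(patch)
                    )
                    if best is None or ssd < best[0]:
                        best = (ssd, (dy, dx))
            offsets[(py, px)] = best[1]
    return offsets


# Toy example: the LR image is the reference shifted left by one column.
ref = [[10 * y + x for x in range(6)] for y in range(6)]
lr = [[10 * y + x + 1 for x in range(6)] for y in range(6)]
print(block_match(lr, ref)[(0, 0)])  # (0, 1)
```

For non-overlapping \(P \times P\) patches the cost is roughly \(H \cdot W \cdot (2R+1)^2\) pixel comparisons, and a dense sliding window, where every pixel starts its own patch, multiplies this by a further \(P^2\). Every pixel inside a patch also inherits the same offset. A convolutional flow estimator instead produces a pixel-wise flow field in one forward pass.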

With cross-scale warping as the key component for spatial alignment, we propose an end-to-end network for RefSR synthesis. Our network contains an LR image encoder, which extracts multi-scale feature maps from the LR image \(I_{LR}\); a reference image encoder, which extracts and aligns the reference image feature maps at multiple scales; and a decoder, which performs multi-scale feature fusion and synthesis using the U-Net [43] structure. Figure 2 summarizes the structure of the proposed CrossNet. The major modules, i.e., the encoders, the flow estimator, and the decoder, are elaborated as follows.

LR Image Encoder. Given the LR image \(I_{LR}\), we design an LR image encoder to extract feature maps at 4 scales. Specifically, we use the SISR approach in Eq. 3 to upsample the LR image. We then convolve the upsampled image with 64 filters (of size \(5 \times 5\)) with stride 1 to extract the feature map at scale 0. For \(0 < i \le 3\), we convolve the feature map at scale \(i-1\) with 64 filters (of size \(5 \times 5\)) with stride 2 to extract the feature map at scale i. These operations can be written as

\[F^{(0)}_{LR} = \sigma \big(W^{(0)}_{LR} * I_{LR\uparrow }\big), \qquad F^{(i)}_{LR} = \sigma \big(W^{(i)}_{LR} * F^{(i-1)}_{LR}\big)\Downarrow _2, \quad 0 < i \le 3, \tag{4}\]

where \(F^{(i)}_{LR}\) is the LR feature map at scale i, \(W^{(i)}_{LR}\) denotes the convolution filters at scale i, \(\sigma \) stands for the rectified linear unit (ReLU) activation [44], \(*\) denotes convolution, and \(\Downarrow _2\) denotes 2D sampling with stride 2.
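As a sanity check on the scale bookkeeping, the spatial size of each encoder feature map follows from the standard convolution output-size formula. The sketch below assumes a padding of 2 for the \(5 \times 5\) filters (not stated in the text) and uses hypothetical helper names; under that assumption, for the \(320\times 512\) crops used in Sect. 4.1, scale i has a resolution of \(320/2^i \times 512/2^i\) with 64 channels.

```python
def conv_out(size, kernel=5, stride=1, padding=2):
    """Output length of a convolution along one axis: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_shapes(height, width, num_scales=4, channels=64):
    """(channels, H, W) of the encoder feature map at each scale.

    Scale 0: 5x5 convolution with stride 1; scales 1..num_scales-1: 5x5
    convolution with stride 2. A padding of 2 is assumed throughout.
    """
    h, w = conv_out(height), conv_out(width)
    shapes = [(channels, h, w)]
    for _ in range(1, num_scales):
        h, w = conv_out(h, stride=2), conv_out(w, stride=2)
        shapes.append((channels, h, w))
    return shapes


print(encoder_shapes(320, 512))
# [(64, 320, 512), (64, 160, 256), (64, 80, 128), (64, 40, 64)]
```

The same bookkeeping applies to the reference image encoder, which shares this structure.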

Note that using an independent SISR approach (such as [30]) to encode the LR image has two advantages. First, SISR approaches validated on large-scale external datasets help the LR image encoder generalize better to unseen scenes. Second, new state-of-the-art SISR approaches can easily be integrated into our system to improve performance without changing our network structure.

Reference Image Encoder. Given the raw reference image \(I_{REF}\), a 4-scale feature extraction network with exactly the structure of Eq. 4 is used to sequentially extract the reference image features \(\{F^{(0)}_{REF},F^{(1)}_{REF},F^{(2)}_{REF},F^{(3)}_{REF}\}\) at multiple scales. We allow the reference feature extractor and the LR feature extractor to learn different weights, which helps the two sets of features complement each other.

We then perform a backward warping operation on the reference image features \(F^{(i)}_{REF}\) using the cross-scale flow \(V^{(i)}\) from Eq. 2 to generate the spatially aligned features \(\hat{F}^{(i)}_{REF}\):

\[\hat{F}^{(i)}_{REF} = warp\big(F^{(i)}_{REF}, V^{(i)}\big), \quad 0 \le i \le 3.\]

Multi-scale warping is discussed further in Sect. 4.3.

Decoder. After the LR image features and the warped reference image features are extracted at different scales, a U-Net-like decoder performs fusion and SR synthesis. Specifically, the warped features and the LR image features at scale i (for \(0 \le i \le 3\)), as well as the decoder features from scale \(i-1\) (if any), are concatenated and fed to a deconvolution layer with 64 filters (of size \(4 \times 4\)) and stride 2 to generate the decoder features at scale i,

where \(\star \) denotes the deconvolution operation.
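The decoder's bookkeeping can be checked the same way. Assuming a padding of 1 for the \(4 \times 4\), stride-2 deconvolution (the padding is not given in the text), the transposed-convolution output size \((n-1)s - 2p + k\) equals exactly \(2n\), so each deconvolution doubles the spatial resolution. Concatenating 64-channel LR, warped reference, and decoder features gives 192 input channels, or 128 where no previous decoder feature exists. The helper names are hypothetical.

```python
def deconv_out(size, kernel=4, stride=2, padding=1):
    """Output length of a transposed convolution along one axis: (n - 1)s - 2p + k."""
    return (size - 1) * stride - 2 * padding + kernel


def decoder_input_channels(lr_ch=64, ref_ch=64, dec_ch=64, has_previous=True):
    """Channels entering a decoder deconvolution after concatenating its inputs."""
    return lr_ch + ref_ch + (dec_ch if has_previous else 0)


print(deconv_out(40), decoder_input_channels())  # 80 192
```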

After the decoder features at scale 0 are generated, three additional convolution layers with filter size \(5 \times 5\) and filter numbers \(\{64, 64, 3\}\) perform post-fusion and generate the SR output.

3.3 Loss Function

Our network can be trained directly to synthesize the SR output. Given the network prediction \(I_{p}\) and the ground-truth high-resolution image \(I_{HR}\), the loss function can be written as

\[\mathcal{L} = \frac{1}{N}\sum _{i=1}^{N}\sum _{s}\rho \big(I^{(i)}_{HR}(s) - I^{(i)}_{p}(s)\big),\]

where \(\rho (x)=\sqrt{x^2+0.001^2}\) is the Charbonnier penalty function [45], N is the number of samples, and i and s iterate over training samples and spatial locations, respectively.
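A direct transcription of this loss into plain Python may help clarify its normalisation: the penalty is summed over spatial locations and averaged over the N samples. Images are flattened to lists of pixel values here, and `charbonnier` and `refsr_loss` are illustrative names, not taken from the authors' code. Near zero, \(\rho \) behaves like a quadratic, \(\rho (x) \approx 0.001 + x^2/0.002\), while for \(|x| \gg 0.001\) it approaches \(|x|\), so it acts as a smooth, differentiable surrogate for the L1 loss.

```python
import math


def charbonnier(x, eps=0.001):
    """Charbonnier penalty rho(x) = sqrt(x^2 + eps^2)."""
    return math.sqrt(x * x + eps * eps)


def refsr_loss(predictions, targets):
    """(1/N) * sum over samples i and locations s of rho(I_HR - I_p).

    `predictions` and `targets` are lists of N flattened images (lists of
    pixel values); the penalty is summed per image, then averaged over N.
    """
    n = len(predictions)
    total = 0.0
    for pred, gt in zip(predictions, targets):
        total += sum(charbonnier(g - p) for p, g in zip(pred, gt))
    return total / n
```

Even a perfect prediction incurs a loss of 0.001 per pixel, which is a constant offset and does not affect the gradients.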

4 Experiment

4.1 Dataset

We use the representative Flower dataset [46] and the Light Field Video (LFVideo) dataset [41]. The Flower dataset [46] contains 3343 light-field images of flowers and plants captured by a Lytro ILLUM camera; each light-field image has \(376 \times 541\) spatial samples and \(14 \times 14\) angular samples. Following [46], we extract the central \(8 \times 8\) grid of angular samples to avoid invalid images, and randomly divide the dataset into 3243 images for training and 100 images for testing. The LFVideo dataset [41] contains real-scene light-field images captured by a Lytro ILLUM camera. As in the Flower dataset, each light-field image has \(376 \times 541\) spatial samples and \(8 \times 8\) angular samples. In total, there are 1080 light-field samples for training and 270 light-field samples for testing.

For model training on these two datasets, the LR and reference images are randomly selected from the \(8 \times 8\) angular grid. For testing, the LR images at angular positions \((i, i), 0 < i \le 7\) and the reference image at position (0, 0) are used to evaluate RefSR algorithms. As our model requires input sizes that are multiples of 32, the images from both datasets are cropped to \(320 \times 512\) for training and validation.
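The evaluation protocol and crop size translate into a few lines of arithmetic. The sketch below lists the seven (reference, LR) angular pairs used for testing and computes a \(320 \times 512\) crop box for a \(376 \times 541\) light-field view. Where the crop is taken is not stated in the text, so the centred crop, like the helper names, is an assumption. The multiple-of-32 requirement is plausibly tied to the flow estimator, whose coarsest flow prediction is at \({\times }32\) downsampling (Sect. 4.3).

```python
def evaluation_pairs(grid=8):
    """(reference, LR) angular positions for testing: ref (0, 0), LR (i, i), 0 < i <= 7."""
    return [((0, 0), (i, i)) for i in range(1, grid)]


def center_crop_box(height, width, out_h=320, out_w=512, multiple=32):
    """(top, left, bottom, right) of a centred crop whose sides are multiples of `multiple`."""
    if out_h % multiple or out_w % multiple:
        raise ValueError("crop size must be a multiple of %d" % multiple)
    if out_h > height or out_w > width:
        raise ValueError("crop is larger than the image")
    top = (height - out_h) // 2
    left = (width - out_w) // 2
    return top, left, top + out_h, left + out_w


print(len(evaluation_pairs()), center_crop_box(376, 541))  # 7 (28, 14, 348, 526)
```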

To validate the generalization ability of CrossNet, we also test it on images from the Stanford Light Field dataset [47] and the Scene Light Field dataset [48]. We apply our trained model with a sliding-window approach, using a window size of \(512\times 512\) and a stride of 256, to produce the SR result for the entire image. More details are given in the generalization analysis in Sect. 4.2.
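The sliding-window inference over larger images can be sketched as a computation of window positions. With a 512-pixel window and a stride of 256, consecutive windows overlap by half. Two details are assumptions not given in the text: the last window is shifted back so that it ends exactly at the image border, and images smaller than the window would need padding. How the overlapping outputs are merged (for example, by averaging) is likewise not specified here. The helper names are hypothetical.

```python
def window_starts(length, window=512, stride=256):
    """Start offsets of sliding windows covering [0, length) along one axis.

    If the regular grid stops short of the border, one extra window is
    placed flush with the end (an assumption). Inputs shorter than the
    window yield a single window at 0 and would need padding.
    """
    if length <= window:
        return [0]
    starts = list(range(0, length - window + 1, stride))
    if starts[-1] + window < length:
        starts.append(length - window)
    return starts


def window_grid(height, width, window=512, stride=256):
    """All (top, left) corners of the 2D windows for an image of the given size."""
    return [
        (top, left)
        for top in window_starts(height, window, stride)
        for left in window_starts(width, window, stride)
    ]


print(window_starts(1100))  # [0, 256, 512, 588]
```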

4.2 Evaluation

We train CrossNet for 200K iterations on the Flower and LFVideo datasets for \({\times }4\) and \({\times }8\) SR. The learning rates are initially set to 1e−4 and 7e−5 for the two datasets, respectively, and decay to 1e−5 and 7e−6 after 150K iterations. We use the Adam optimizer [49] with \(\beta _1 = 0.9\) and \(\beta _2 = 0.999\). For comparison with CrossNet, we also test the latest RefSR algorithms SS-Net [2] and PatchMatch [1], as well as representative SISR approaches including SRCNN [25], VDSR [28], and MDSR [30].
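The step-decay schedules above can be written as a small piecewise-constant function. This is a sketch with hypothetical names; treating “after 150K iterations” as taking effect from iteration 150,000 onward is an assumption.

```python
def step_lr(iteration, base_lr, milestones, factors):
    """Piecewise-constant learning rate.

    From each milestone onward, the rate is base_lr times the factor paired
    with the most recent milestone reached. Factors are multipliers of
    base_lr (not cumulative), and milestones must be sorted in ascending order.
    """
    lr = base_lr
    for milestone, factor in zip(milestones, factors):
        if iteration >= milestone:
            lr = base_lr * factor
    return lr


def flower_lr(iteration):
    """Flower dataset: 1e-4, decayed to 1e-5 after 150K iterations."""
    return step_lr(iteration, 1e-4, [150_000], [0.1])


def lfvideo_lr(iteration):
    """LFVideo dataset: 7e-5, decayed to 7e-6 after 150K iterations."""
    return step_lr(iteration, 7e-5, [150_000], [0.1])
```

The same helper could express the re-training schedule of the generalization experiments, e.g. `step_lr(it, 7e-5, [50_000, 100_000, 150_000], [0.5, 0.2, 0.1])`, if those factors are read as multipliers of the initial rate; whether they are relative to the initial rate or cumulative is not stated.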

We evaluate the results using three image quality metrics: PSNR, SSIM [50], and IFC [51]. Table 1 shows quantitative comparisons for \({\times }4\) and \({\times }8\) RefSR under two parallax settings, where the reference images are sampled at position (0, 0) and the LR images at position (1, 1) or (7, 7). As Table 1 shows, the proposed CrossNet considerably outperforms previous approaches under various settings, including small and large parallax, different upsampling scales, and different datasets, achieving a gain of 2 dB–4 dB in general.
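For reference, PSNR is \(10\log _{10}(\mathrm{peak}^2/\mathrm{MSE})\), so a fixed dB gain corresponds to a fixed multiplicative reduction in mean squared error. The sketch below assumes pixel values in [0, 1] (peak 1.0); the exact data range and colour handling used for Table 1 are not stated here, and the helper names are hypothetical.

```python
import math


def psnr(pred, target, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return float("inf")
    return 10.0 * math.log10(peak ** 2 / mse)


def mse_ratio_for_gain(db_gain):
    """Factor by which the MSE must shrink to gain `db_gain` dB of PSNR."""
    return 10 ** (db_gain / 10.0)
```

By this relation, a 2 dB gain means roughly 1.58 times lower MSE, and a 4 dB gain roughly 2.51 times lower.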

For better comparison, we also visualize the PSNR performance under different parallax settings in Fig. 3. As expected, RefSR approaches such as CrossNet, PatchMatch, and SS-Net outperform SISR approaches thanks to the high-frequency details provided by the reference images. However, RefSR results deteriorate as the parallax increases, because correspondence search is more difficult under large parallax. In contrast, the performance of SISR approaches is ‘U-shaped’ across views, i.e., SISR performs slightly better at the corners of the LF image, at disparities (1, 1) and (7, 7). This is probably because the easily super-resolved invalid region becomes larger at the corners. Finally, the proposed CrossNet consistently outperforms the other approaches under different disparities, datasets, and scales.

Figure 4 presents visual comparisons of CrossNet with SISR approaches (SRCNN, VDSR, and MDSR) and RefSR approaches (PatchMatch and SS-Net) under the challenging \({\times }8\) setting. Benefiting from the reference image, RefSR approaches show competitive results compared to SISR methods, explicitly retaining high-frequency details. Among them, the proposed CrossNet provides even finer details that closely resemble the ground-truth image. More visual comparisons are shown in the supplementary material and supplementary video.

Generalization: To further evaluate the cross-dataset generalization capacity of our model, we report results on the Stanford light field dataset (Lego Gantry) [47] and the Scene Light Field dataset [48]. The former contains light-field images captured by a Canon Digital Rebel XTi mounted on a Lego gantry driven by Mindstorms motors, and the images in the latter are also captured on a motorized stage with a standard DSLR camera. With such equipment, the captured light-field images of the two datasets have much larger parallax than those captured by Lytro ILLUM cameras. This parallax discrepancy between datasets is challenging for our trained model, as it was not specifically trained on large parallax.

To handle these two datasets, we employ a parallax augmentation procedure during training, which randomly offsets the reference input by \([-15,15]\) pixels both horizontally and vertically. We initialize CrossNet with the model parameters pre-trained on the LFVideo dataset (Sect. 4.2) and re-train it on the Flower dataset for 200K iterations to achieve better generalization. The initial learning rate is 7e−5, and it is decayed by factors of 0.5, 0.2, and 0.1 at 50K, 100K, and 150K iterations.
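One way to implement the parallax augmentation is to cut the reference crop from a window displaced relative to the LR crop. The sketch below follows that reading, which is an assumption: the text does not say whether the offset is applied by shifting the crop window or by translating the image. Clamping the displaced window to stay inside the image is also an assumption, and `reference_crop_origin` is a hypothetical name. Offsets are drawn uniformly from the integers in \([-15, 15]\).

```python
import random


def reference_crop_origin(top, left, crop_h, crop_w, img_h, img_w,
                          max_shift=15, rng=random):
    """Top-left corner of the reference crop after a random parallax offset.

    The LR crop keeps (top, left); the reference crop is displaced by
    (dy, dx), each drawn uniformly from [-max_shift, max_shift], and then
    clamped so that the crop still lies inside the image (an assumption).
    """
    dy = rng.randint(-max_shift, max_shift)  # randint includes both endpoints
    dx = rng.randint(-max_shift, max_shift)
    ref_top = min(max(top + dy, 0), img_h - crop_h)
    ref_left = min(max(left + dx, 0), img_w - crop_w)
    return ref_top, ref_left


# Example: centred 320 x 512 crop of a 376 x 541 view, seeded for repeatability.
print(reference_crop_origin(28, 14, 320, 512, 376, 541, rng=random.Random(0)))
```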

Tables 2 and 3 compare the PSNR of our re-trained model with PatchMatch [1] and SS-Net [2] for \({\times }8\) RefSR on the Stanford light-field dataset and the Scene Light Field dataset, respectively. Our approach outperforms the other approaches under different parallax settings on the Stanford dataset. On average, our approach outperforms the competitive SS-Net by 1.79–2.50 dB on the Stanford light-field dataset and by 2.84 dB on the Scene Light Field dataset.

Efficiency: The proposed CrossNet generates a \(320\times 512\) image for \({\times }8\) RefSR within 1 s: 0.75 s for SISR preprocessing with the MDSR [30] model and 0.12 s to synthesize the final output. In contrast, PatchMatch [1] takes 86.3 s in Matlab 2016 with GPU parallelization, while SS-Net [2] takes 105.6 s on average on the GPU. These running times are measured on a machine with 8 Intel Xeon CPUs (3.4 GHz) and a GeForce GTX 1080 GPU; model inference for both CrossNet and SS-Net [2] is implemented in Python with the Theano deep learning package [52].
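The reported timings can be turned into speedup factors with simple arithmetic. The snippet uses only the numbers stated in this paragraph (0.75 s + 0.12 s for CrossNet, 86.3 s for PatchMatch, 105.6 s for SS-Net); the variable names are illustrative.

```python
# Timings reported in the Efficiency paragraph (seconds per 320 x 512 image, x8 RefSR).
crossnet_s = 0.75 + 0.12  # MDSR preprocessing + CrossNet synthesis
baselines_s = {"PatchMatch [1]": 86.3, "SS-Net [2]": 105.6}

speedups = {name: seconds / crossnet_s for name, seconds in baselines_s.items()}
for name, factor in speedups.items():
    print(f"{name}: {factor:.1f}x slower than CrossNet")
# PatchMatch [1]: 99.2x slower than CrossNet
# SS-Net [2]: 121.4x slower than CrossNet
```

End to end, including MDSR preprocessing, CrossNet is therefore about 99 times faster than PatchMatch and about 121 times faster than SS-Net on this machine; counting only the 0.12 s synthesis stage, both factors exceed 700.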

4.3 Discussions

One may be concerned that our loss is designed for image synthesis and does not explicitly define terms for flow estimation. However, since correctly aligned features are extremely informative for the decoder to reconstruct high-frequency details, our model actually learns to predict optical flow in an unsupervised fashion by aligning feature maps. To validate the flow learned through feature alignment, we visualize the intermediate flow fields at all scales in Fig. 5(d): the flow predictions at scales \(0,1,2,3\) (\({\times }1,{\times }2,{\times }4,{\times }8\)) are reasonably coherent, whereas noisy flow predictions are observed at scales \(4,5\) (\({\times }16,{\times }32\)), because the flows at scales 4 and 5 are not used for feature-domain warping.

Fig. 5. Flow visualization and comparison for samples #1 and #99 in the Flower \({\times }8\) test set. (a) The HR image; (b), (c), (d) flow visualizations of PatchMatch [1], SS-Net [2], and our approach, respectively. In (d), the flow is visualized at scales \({\times }1,{\times }2,{\times }4\) (row 1) and \({\times }8,{\times }16,{\times }32\) (row 2).

In addition to the multi-scale feature warping module proposed in this paper, we investigate a single-scale image warping counterpart that warps the reference image before the image encoder extracts features. This counterpart is inspired by the common practice in [35, 41] of performing image warping before synthesis. Concretely, the single-scale image warping counterpart warps the image using the flow at scale 0: \(\hat{I}_{REF} = warp(I_{REF}, V^{(0)}).\) A reference image encoder with the same structure then extracts features from the warped reference image. Since the encoder and decoder structures are unchanged, this image warping counterpart, CrossNet-iw, has the same model size as CrossNet.

We train both CrossNet-iw and CrossNet following the same procedure as in Sect. 4.2. We also adopt a pretraining strategy for CrossNet-iw: we pretrain the flow estimator of WS-SRNet on the image warping task for 100K iterations and then train jointly for another 100K iterations, resulting in the CrossNet-iw-p model. Figure 6 shows the PSNR convergence curves on the training set for \({\times }8\) RefSR on the Flower and LFVideo datasets. CrossNet converges faster than the CrossNet-iw counterparts. At the end of training, CrossNet outperforms CrossNet-iw by 0.20 dB and 0.27 dB on the training set. Table 4 shows the RefSR precision on the test sets for three representative viewpoints. CrossNet outperforms CrossNet-iw, especially under small parallax. This is reasonable because training uses randomly sampled pairs from the LF grid, most of which have small parallax.

As our method relies on the cross-scale flow estimator, it is also important to study the flow prediction capacity of different flow estimators. To this end, we train FlowNetS and our modified model (FlowNetS+) on the Flower and LFVideo datasets to warp the reference images to the ground-truth images, given the reference and LR images as input. As shown in Table 5, although FlowNetS+ contains 2% more parameters than FlowNetS, its additional upsampling layers improve the warping precision on both the Flower dataset [46] and the LFVideo dataset [41], as they help generate a finer flow field. In addition, we observe that with plain warping, FlowNetS+ achieves notably better performance than SS-Net [2], as indicated by the SS-Net (\({\times }8\)) row in Table 1.

5 Conclusion

Aiming at the challenging large-scale (\(8{\times }\)) super-resolution problem, we propose an end-to-end reference-based super-resolution network named CrossNet, whose input is a low-resolution (LR) image and a high-resolution (HR) reference image that share a similar viewpoint, and whose output is the super-resolved (\(4{\times }\) or \(8{\times }\)) LR image. The CrossNet pipeline is fully convolutional and consists of an encoder, cross-scale warping, and a decoder. Extensive experiments on several large-scale datasets demonstrate the superior performance of CrossNet (around 2 dB–4 dB gain) compared to previous methods. More importantly, CrossNet achieves a speedup of more than 100 times over existing RefSR approaches, making the model applicable to real-time applications.


References

Boominathan, V., Mitra, K., Veeraraghavan, A.: Improving resolution and depth-of-field of light field cameras using a hybrid imaging system. In: ICCP, pp. 1–10. IEEE (2014)

Zheng, H., Ji, M., Wang, H., Liu, Y., Fang, L.: Learning cross-scale correspondence and patch-based synthesis for reference-based super-resolution. In: BMVC (2017)

Wang, Y., Liu, Y., Heidrich, W., Dai, Q.: The light field attachment: turning a DSLR into a light field camera using a low budget camera ring. IEEE Trans. Vis. Comput. Graph. (2016)

Yuan, X., Fang, L., Dai, Q., Brady, D., Liu, Y.: Multiscale gigapixel video: a cross resolution image matching and warping approach. In: IEEE International Conference on Computational Photography (2017)

Ledig, C., et al.: Photo-realistic single image super-resolution using a generative adversarial network. arXiv preprint arXiv:1609.04802 (2016)

Jaderberg, M., Simonyan, K., Zisserman, A., et al.: Spatial transformer networks. In: Advances in Neural Information Processing Systems, pp. 2017–2025 (2015)

Li, X., Orchard, M.T.: New edge-directed interpolation. IEEE Trans. Image Process. 10(10), 1521–1527 (2001)

Zhang, L., Wu, X.: An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 15(8), 2226–2238 (2006)

Tai, Y.W., Liu, S., Brown, M.S., Lin, S.: Super resolution using edge prior and single image detail synthesis. In: 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2400–2407. IEEE (2010)

Babacan, S.D., Molina, R., Katsaggelos, A.K.: Total variation super resolution using a variational approach. In: 2008 15th IEEE International Conference on Image Processing, ICIP 2008, pp. 641–644. IEEE (2008)

Krishnan, D., Fergus, R.: Fast image deconvolution using hyper-Laplacian priors. In: Advances in Neural Information Processing Systems, pp. 1033–1041 (2009)

Yang, J., Wright, J., Huang, T., Ma, Y.: Image super-resolution as sparse representation of raw image patches. In: CVPR, pp. 1–8. IEEE (2008)

Yang, J., Wright, J., Huang, T.S., Ma, Y.: Image super-resolution via sparse representation. IEEE Trans. Image Process. 19(11), 2861–2873 (2010)

Kim, K.I., Kwon, Y.: Single-image super-resolution using sparse regression and natural image prior. TPAMI 32(6), 1127–1133 (2010)

Yang, J., Wang, Z., Lin, Z., Cohen, S., Huang, T.: Coupled dictionary training for image super-resolution. IEEE Trans. Image Process. 21(8), 3467–3478 (2012)

Glasner, D., Bagon, S., Irani, M.: Super-resolution from a single image. In: ICCV, pp. 349–356. IEEE (2009)

Freeman, W.T., Jones, T.R., Pasztor, E.C.: Example-based super-resolution. IEEE Comput. Graph. Appl. 22(2), 56–65 (2002)

Chang, H., Yeung, D.Y., Xiong, Y.: Super-resolution through neighbor embedding. In: CVPR, vol. 1, p. I. IEEE (2004)

Buades, A., Coll, B., Morel, J.M.: A non-local algorithm for image denoising. In: 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2005, vol. 2, pp. 60–65. IEEE (2005)

Salvador, J., Pérez-Pellitero, E.: Naive bayes super-resolution forest. In: ICCV, pp. 325–333 (2015)

Schulter, S., Leistner, C., Bischof, H.: Fast and accurate image upscaling with super-resolution forests. In: CVPR, pp. 3791–3799 (2015)

Yang, C.Y., Yang, M.H.: Fast direct super-resolution by simple functions. In: ICCV, pp. 561–568 (2013)

Yang, J., Lin, Z., Cohen, S.: Fast image super-resolution based on in-place example regression. In: CVPR, pp. 1059–1066 (2013)

He, H., Siu, W.C.: Single image super-resolution using Gaussian process regression. In: CVPR, pp. 449–456. IEEE (2011)

Dong, C., Loy, C.C., He, K., Tang, X.: Learning a deep convolutional network for image super-resolution. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8692, pp. 184–199. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10593-2_13

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 391–407. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_25

Shi, W., et al.: Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: CVPR, pp. 1874–1883 (2016)

Kim, J., Kwon Lee, J., Mu Lee, K.: Accurate image super-resolution using very deep convolutional networks. In: CVPR, pp. 1646–1654 (2016)

Lai, W.S., Huang, J.B., Ahuja, N., Yang, M.H.: Deep Laplacian pyramid networks for fast and accurate super-resolution. In: CVPR, pp. 624–632 (2017)

Lim, B., Son, S., Kim, H., Nah, S., Lee, K.M.: Enhanced deep residual networks for single image super-resolution. In: CVPRW, vol. 1, p. 3 (2017)

Wanner, S., Goldluecke, B.: Spatial and angular variational super-resolution of 4D light fields. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7576, pp. 608–621. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33715-4_44

Mitra, K., Veeraraghavan, A.: Light field denoising, light field superresolution and stereo camera based refocussing using a GMM light field patch prior. In: 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 22–28. IEEE (2012)

Wu, J., Wang, H., Wang, X., Zhang, Y.: A novel light field super-resolution framework based on hybrid imaging system. In: 2015 Visual Communications and Image Processing (VCIP), pp. 1–4. IEEE (2015)

Zheng, H., Guo, M., Wang, H., Liu, Y., Fang, L.: Combining exemplar-based approach and learning-based approach for light field super-resolution using a hybrid imaging system. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2481–2486 (2017)

Kalantari, N.K., Wang, T.C., Ramamoorthi, R.: Learning-based view synthesis for light field cameras. ACM Trans. Graph. (TOG) 35(6), 193 (2016)

Ji, D., Kwon, J., McFarland, M., Savarese, S.: Deep view morphing. Technical report (2017)

Xue, T., Chen, B., Wu, J., Wei, D., Freeman, W.T.: Video enhancement with task-oriented flow. arXiv preprint arXiv:1711.09078 (2017)

Sajjadi, M.S., Vemulapalli, R., Brown, M.: Frame-recurrent video super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6626–6634 (2018)

Tao, X., Gao, H., Liao, R., Wang, J., Jia, J.: Detail-revealing deep video super-resolution

Liu, Z., Yeh, R., Tang, X., Liu, Y., Agarwala, A.: Video frame synthesis using deep voxel flow. In: ICCV, vol. 2 (2017)

Wang, T.C., Zhu, J.Y., Kalantari, N.K., Efros, A.A., Ramamoorthi, R.: Light field video capture using a learning-based hybrid imaging system. ACM Trans. Graph. (TOG) 36(4), 133 (2017)

Fischer, P., et al.: FlowNet: learning optical flow with convolutional networks. arXiv preprint arXiv:1504.06852 (2015)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning (ICML 2010), pp. 807–814 (2010)

Bruhn, A., Weickert, J., Schnörr, C.: Lucas/Kanade meets Horn/Schunck: combining local and global optic flow methods. IJCV 61(3), 211–231 (2005)

Srinivasan, P.P., Wang, T., Sreelal, A., Ramamoorthi, R., Ng, R.: Learning to synthesize a 4D RGBD light field from a single image. In: ICCV, vol. 2, p. 6 (2017)

The (new) Stanford light field archive. http://lightfield.stanford.edu/lfs.html

Kim, C., Zimmer, H., Pritch, Y., Sorkine-Hornung, A., Gross, M.H.: Scene reconstruction from high spatio-angular resolution light fields. ACM TOG (2013)

Kingma, D., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Sheikh, H.R., Bovik, A.C., De Veciana, G.: An information fidelity criterion for image quality assessment using natural scene statistics. IEEE Trans. Image Process. 14(12), 2117–2128 (2005)

Bergstra, J., et al.: Theano: a CPU and GPU math compiler in Python

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Zheng, H., Ji, M., Wang, H., Liu, Y., Fang, L. (2018). CrossNet: An End-to-End Reference-Based Super Resolution Network Using Cross-Scale Warping. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science, vol 11210. Springer, Cham. https://doi.org/10.1007/978-3-030-01231-1_6

DOI: https://doi.org/10.1007/978-3-030-01231-1_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01230-4

Online ISBN: 978-3-030-01231-1

eBook Packages: Computer Science, Computer Science (R0)